
Euclidean Space

Will Rosenbaum

Updated: October 17, 2014

Department of Mathematics

University of California, Los Angeles

In this essay, we explore the algebraic and geometric properties of n-dimensional Euclidean space, Rn . Formally, Rn is the n-fold Cartesian product of R:

Rn = {(v1 , v2 , . . . , vn ) | vi ∈ R for i = 1, 2, . . . , n} .

We refer to elements v ∈ Rn as vectors, which will be denoted in boldface. Rn inherits

an algebraic structure from R—that of a vector space. Thus, we define the operations of

vector addition and scalar multiplication. Using these operations, we define the notions of linear

combination, span, linear independence, and basis. These concepts form the foundation of

our algebraic understanding of Euclidean space.

In the second section we examine the geometry of Rn . We give a geometric view of the

vector space operations defined in the first section. We introduce the notion of an inner

product on Rn , which allows us to define angle and length in Euclidean space. We then

describe lines, planes, and balls in Rn .

In the final section we consider sequences of vectors in Rn and define what it means for

a sequence to converge to a limit.

1 Algebraic Properties

1.1 Vector space axioms Euclidean space Rn inherits two algebraic operations from R. Let

v, w ∈ Rn with

v = (v1 , v2 , . . . , vn ),

w = (w1 , w2 , . . . , wn ),

and let c ∈ R. We define

Vector addition v + w = (v1 + w1 , v2 + w2 , . . . , vn + wn )

Scalar multiplication cv = (cv1 , cv2 , . . . , cvn ).

Using these definitions and the algebraic properties of R, it is straightforward to verify the

following properties of Rn , known as the vector space axioms.

Proposition 1 (Vector space axioms). For all u, v, w ∈ Rn and c, d ∈ R, the following

properties hold.

Associativity of addition (u + v) + w = u + (v + w)

Additive Identity there exists 0 ∈ Rn such that 0 + u = u

Additive inverses there exists −u such that u + (−u) = 0

Commutativity of addition u + v = v + u

Associativity of scalar multiplication c(du) = (cd)u

Multiplicative identity 1u = u

Distributivity over scalar addition (c + d)u = cu + du

Distributivity over vector addition c(u + v) = cu + cv

The proofs of these properties follow directly from the definitions of vector addition and scalar multiplication, and the properties of R (i.e., the field axioms). For example, we will prove distributivity over vector addition. Write u = (u1 , u2 , . . . , un ) and v = (v1 , v2 , . . . , vn ). Then

$$
\begin{aligned}
c(\mathbf{u} + \mathbf{v}) &= c(u_1 + v_1, u_2 + v_2, \ldots, u_n + v_n) && \text{def. of vector addition} \\
&= (c(u_1 + v_1), c(u_2 + v_2), \ldots, c(u_n + v_n)) && \text{def. of scalar multiplication} \\
&= (cu_1 + cv_1, cu_2 + cv_2, \ldots, cu_n + cv_n) && \text{distributivity in } \mathbb{R} \\
&= (cu_1, cu_2, \ldots, cu_n) + (cv_1, cv_2, \ldots, cv_n) && \text{def. of vector addition} \\
&= c\mathbf{u} + c\mathbf{v} && \text{def. of scalar multiplication}
\end{aligned}
$$

The proofs of the other properties are similar.
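The componentwise definitions above translate directly into code. The following is a minimal sketch, not part of the original notes: the function names `add` and `scale` are illustrative choices, and vectors are modeled as Python tuples of exact `Fraction` values so that the check of distributivity involves no rounding.

```python
from fractions import Fraction

def add(v, w):
    """Componentwise vector addition in R^n (hypothetical helper name)."""
    if len(v) != len(w):
        raise ValueError("vectors must have the same number of coordinates")
    return tuple(a + b for a, b in zip(v, w))

def scale(c, v):
    """Scalar multiplication of the vector v by the scalar c."""
    return tuple(c * a for a in v)

# Check distributivity over vector addition, c(u + v) = cu + cv,
# on one concrete example with exact rational arithmetic.
u = (Fraction(1), Fraction(-2), Fraction(3))
v = (Fraction(0), Fraction(5), Fraction(1, 2))
c = Fraction(3, 4)

lhs = scale(c, add(u, v))
rhs = add(scale(c, u), scale(c, v))
print(lhs == rhs)
```

A check on one example is of course not a proof; it only illustrates what the identity says coordinate by coordinate.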

Exercise 2. Prove the remaining properties in Proposition 1.

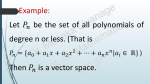

Remark 3. Any set V with vector addition and scalar multiplication (from R) satisfying the

properties in Proposition 1 is called a (real) vector space, or a vector space over R. If scalar

multiplication is defined for elements from a different field F, we call V a vector space over

F.

1.2 Linear combinations and span Given vectors v, v1 , v2 , . . . , vk ∈ Rn , we say that v is a

linear combination of v1 , . . . , vk if v can be written

$$
\mathbf{v} = c_1 \mathbf{v}_1 + c_2 \mathbf{v}_2 + \cdots + c_k \mathbf{v}_k = \sum_{i=1}^{k} c_i \mathbf{v}_i
$$

for some c1 , c2 , . . . , ck ∈ R. Given a subset S ⊆ Rn , the span of S, denoted span S, is the

set of vectors which are (finite) linear combinations of vectors in S. Specifically,

span S = {c1 v1 + c2 v2 + · · · + ck vk | k ∈ N, c1 , . . . , ck ∈ R, v1 , . . . , vk ∈ S} .

A vector subspace V ⊆ Rn is a nonempty subset which is closed under vector addition and scalar multiplication: for any v, w ∈ V and c ∈ R, v + w ∈ V and cv ∈ V . Since V inherits vector addition and scalar multiplication from Rn , it automatically satisfies the vector space axioms. Thus, we can think of V as a vector space over R embedded into Rn .

Given a set S ⊆ Rn , it is straightforward to verify that the set V = span S is a vector

subspace of Rn .

Example 4. In Rn , define the vectors e1 , e2 , . . . , en by

e1 = (1, 0, 0, . . . , 0, 0)

e2 = (0, 1, 0, . . . , 0, 0)

⋮

en = (0, 0, 0, . . . , 0, 1).

These vectors are known as the standard basis vectors in Rn . We will show that

Rn = span {e1 , e2 , . . . , en } .

To see this, take any vector v = (v1 , v2 , . . . , vn ) ∈ Rn . It is easy to see that

v = v1 e1 + v2 e2 + · · · + vn en ,

hence v ∈ span {e1 , e2 , . . . , en }.

Example 5. Consider the vectors

u = (1, 1, 1),

v = (1, 3, 1)

in R3 . We will show that

w1 = (1, 2, 1) ∈ span {u, v}

while

w2 = (1, 2, 3) ∉ span {u, v} .

Such computations require solving a system of linear equations. A vector w ∈ R3 is in

span {u, v} if and only if there exist coefficients a, b ∈ R such that w = au + bv. For

w = w1 , this equation becomes

(1, 2, 1) = a(1, 1, 1) + b(1, 3, 1) = (a + b, a + 3b, a + b).

(∗)

In order for two vectors to be equal, all of their coordinates must be equal. Thus (∗) is

equivalent to the system of equations

1=a+b

2 = a + 3b

1 = a + b.

Such equations can be solved by the method of elimination. This method amounts to solving

the first equation for one of the variables a or b, then substituting this expression into the

remaining equations, thus eliminating one variable from the remaining expressions. We

continue until we either find a solution or show that no such value exists. Solving the first

equation for b gives

b = 1 − a.

Substituting this into the second equation gives

$$
2 = a + 3(1 - a) \implies 2 = 3 - 2a \implies a = \tfrac{1}{2}.
$$

Then b = 1 − a gives b = 1/2 as well. Thus a = b = 1/2 satisfies all three equations. It is easy to verify that indeed

$$
(1, 2, 1) = \tfrac{1}{2}(1, 1, 1) + \tfrac{1}{2}(1, 3, 1).
$$

On the other hand, consider w = w2 . Setting w2 = au + bv gives rise to the system

1=a+b

2 = a + 3b

3 = a + b.

Solving the first two equations as before gives a = b = 1/2. However, this choice of a and b clearly does not satisfy the third equation, 3 = a + b. Therefore, no values of a and b satisfy all three equations simultaneously, hence w2 ≠ au + bv for any choice of a, b ∈ R. This shows that w2 ∉ span {u, v}, as desired.
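The elimination procedure of Example 5 can be mechanized. The sketch below is an illustration rather than anything from the original notes: `solve_combination` is a hypothetical helper that row-reduces the system w = c1 v1 + · · · + ck vk with exact `Fraction` arithmetic and returns one choice of coefficients, or `None` when the system is inconsistent (that is, when w is not in the span).

```python
from fractions import Fraction

def solve_combination(vectors, w):
    """Return coefficients c with sum(c[j] * vectors[j]) == w, or None if
    w is not in the span. Free coefficients, if any, are set to 0."""
    n, k = len(w), len(vectors)
    # Augmented matrix: row i encodes the equation for the i-th coordinate.
    rows = [[Fraction(vectors[j][i]) for j in range(k)] + [Fraction(w[i])]
            for i in range(n)]
    pivots = []  # pivot column belonging to each pivot row, in order
    r = 0
    for col in range(k):
        # Find a row at or below r with a nonzero entry in this column.
        p = next((i for i in range(r, n) if rows[i][col] != 0), None)
        if p is None:
            continue
        rows[r], rows[p] = rows[p], rows[r]
        piv = rows[r][col]
        rows[r] = [x / piv for x in rows[r]]
        # Eliminate this variable from every other equation.
        for i in range(n):
            if i != r and rows[i][col] != 0:
                f = rows[i][col]
                rows[i] = [x - f * y for x, y in zip(rows[i], rows[r])]
        pivots.append(col)
        r += 1
    # A leftover equation of the form 0 = nonzero means there is no solution.
    if any(row[k] != 0 for row in rows[r:]):
        return None
    coeffs = [Fraction(0)] * k
    for i, col in enumerate(pivots):
        coeffs[col] = rows[i][k]
    return coeffs

u, v = (1, 1, 1), (1, 3, 1)
print(solve_combination([u, v], (1, 2, 1)))  # coefficients a = b = 1/2
print(solve_combination([u, v], (1, 2, 3)))  # None: w2 is not in the span
```

On the vectors of Example 5 this reproduces the hand computation: the first call finds a = b = 1/2, and the second detects the contradictory third equation.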

Exercise 6. Consider the vectors

u = (1, −1, 0),

v = (1, 0, −1)

in R3 . Let V = span {u, v}. Determine which of the following vectors are in V . For those

vectors w ∈ V , express w as a linear combination of u and v.

(a) (2, −1, −1)

(b) (1, 0, 0)

(c) (0, 1, −1)

(d) (2, 5, −7)

Exercise 7. For V as in the previous exercise, show that w = (w1 , w2 , w3 ) ∈ V if and only

if w1 + w2 + w3 = 0.

Exercise 8. Let u = (1, 1) and v = (1, −1) in R2 . Show that every vector (a, b) ∈ R2 can

be written as a linear combination of u and v. Thus

R2 = span {(1, 1), (1, −1)}

Exercise 9. Prove that if S ⊆ T ⊆ Rn then

span S ⊆ span T.

1.3 Linear independence Let S = {v1 , v2 , . . . , vk } be a finite set of vectors in Rn . We say that S is linearly independent if

$$
\sum_{i=1}^{k} c_i \mathbf{v}_i = \mathbf{0} \implies c_1 = c_2 = \cdots = c_k = 0.
$$

A set which is not linearly independent is linearly dependent.

Example 10. We will show that the set S = {(1, 1), (1, −1)} is linearly independent in R2 ,

while T = {(1, 1), (1, −1), (1, 0)} is linearly dependent. Let u = (1, 1), v = (1, −1) and

w = (1, 0). To show that S is linearly independent, we must show that

au + bv = 0 =⇒ a = b = 0.

The left side of this expression is equivalent to the system of equations

a+b=0

a−b=0

We use elimination to solve this system. Solving the first equation for b gives b = −a.

Substituting this into the second equation gives a − (−a) = 0, hence 2a = 0, so a = 0.

Then b = −a = 0, so the only solution is a = b = 0. Therefore, S = {u, v} is linearly independent, as

desired.

To show that T is linearly dependent, we must find coefficients a, b, c ∈ R, not all zero,

such that

au + bv + cw = 0.

This is equivalent to the system of equations

a+b+c=0

a − b = 0.

Solving the second equation for b gives b = a. Substituting this expression into the first

gives 2a + c = 0. This equation has infinitely many solutions with a, c ≠ 0, for example, a = 1, c = −2. Thus a = b = 1, c = −2 solves the system. Thus,

1u + 1v − 2w = 0

so T is linearly dependent. Note that this computation also shows that w = (1/2)u + (1/2)v.
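Linear independence of a finite set can also be tested mechanically: k vectors are linearly independent exactly when the matrix having them as rows has rank k. The following sketch assumes this standard fact; the helper names `rank` and `is_linearly_independent` are illustrative and not from the original notes. Exact `Fraction` arithmetic avoids rounding issues.

```python
from fractions import Fraction

def rank(vectors):
    """Rank of a list of vectors in R^n, via exact Gaussian elimination."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    ncols = len(rows[0]) if rows else 0
    r = 0  # number of pivot rows found so far
    for col in range(ncols):
        p = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if p is None:
            continue
        rows[r], rows[p] = rows[p], rows[r]
        # Clear the entries below the pivot.
        for i in range(r + 1, len(rows)):
            f = rows[i][col] / rows[r][col]
            rows[i] = [x - f * y for x, y in zip(rows[i], rows[r])]
        r += 1
    return r

def is_linearly_independent(vectors):
    """True when no nontrivial linear combination of the vectors is 0."""
    return rank(vectors) == len(vectors)

u, v, w = (1, 1), (1, -1), (1, 0)
print(is_linearly_independent([u, v]))     # the set S of Example 10
print(is_linearly_independent([u, v, w]))  # the set T of Example 10
```

For the sets of Example 10, the first call reports independence and the second reports dependence, matching the relation 1u + 1v − 2w = 0 found by hand.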

Exercise 11. Suppose S ⊆ Rn is linearly independent and T ⊆ S. Show that T is also

linearly independent.

Exercise 12. Suppose S ⊆ Rn is linearly dependent and S ⊆ T . Show that T is linearly

dependent.

We give an equivalent characterization of linear independence in the following proposition.

Proposition 13. A finite subset S = {v1 , v2 , . . . , vk } ⊆ Rn is linearly independent if and

only if every vector v ∈ span S has a unique representation as a linear combination

of elements from S.

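Proof. ⇒: Suppose S is linearly independent, and v ∈ span S. Suppose we can write v as

$$
\sum_{i=1}^{k} c_i \mathbf{v}_i = \mathbf{v} = \sum_{i=1}^{k} d_i \mathbf{v}_i .
$$

Then

$$
\begin{aligned}
\sum_{i=1}^{k} c_i \mathbf{v}_i = \sum_{i=1}^{k} d_i \mathbf{v}_i
&\implies \sum_{i=1}^{k} c_i \mathbf{v}_i - \sum_{i=1}^{k} d_i \mathbf{v}_i = \mathbf{0} \\
&\implies \sum_{i=1}^{k} (c_i - d_i) \mathbf{v}_i = \mathbf{0} \\
&\implies c_i - d_i = 0 \quad \text{for } i = 1, 2, \ldots, k.
\end{aligned}
$$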

Therefore, ci = di for all i, hence the representation of v is unique.

⇐: Suppose the representation of every v ∈ span S is unique. Notice that

0 = 0v1 + 0v2 + · · · + 0vk .

Since this representation of 0 is unique, we have

$$
\sum_{i=1}^{k} c_i \mathbf{v}_i = \mathbf{0} \implies c_1 = c_2 = \cdots = c_k = 0.
$$

Therefore, S is linearly independent.

We can interpret Proposition 13 as showing that a linearly independent set is a smallest

set with a given span. Indeed, if S = {v1 , v2 , . . . , vk } is linearly independent, then we

cannot write any vi as a linear combination of the vj for j ≠ i.

1.4 Basis Let V ⊆ Rn be a vector subspace. We call a set B ⊆ V a basis for V if every

v ∈ V has a unique expression as a linear combination of elements in B. An immediate

consequence of Proposition 13 is that B is a basis for V if and only if V = span B and B is

linearly independent.

Example 14. The standard basis for Rn is the set

B = {e1 , e2 , . . . , en }

where as before, ei is the vector with 1 in its i-th coordinate while the other coordinates are

0. It is easy to see that span B = Rn and that B is linearly independent. Hence B is indeed

a basis for Rn . For any vector v = (v1 , v2 , . . . , vn ) the coefficient of ei when writing v as

a linear combination of B is precisely the i-th coordinate of v:

v = v1 e1 + v2 e2 + · · · + vn en .

The following “replacement theorem” is the main result of this section, and has many

useful consequences.

Theorem 15 (Replacement theorem). Let S = {v1 , v2 , . . . , vm } ⊆ Rn and V = span S.

Suppose T = {w1 , w2 , . . . , wℓ } ⊆ V is linearly independent. Then ℓ ≤ m, and there

exists a subset S ′ ⊆ S consisting of m − ℓ vectors such that V = span(T ∪ S ′ ).

Example 16. Let S = {e1 , e2 , e3 } ⊆ R3 . It is easy to see that span S = R3 . Consider the

set T = {(1, −1, 0), (1, 0, −1)}. We first observe that T is linearly independent:

a(1, −1, 0) + b(1, 0, −1) = (0, 0, 0) =⇒ a + b = 0, −a = 0, −b = 0.

We claim that taking S ′ = {e1 }, we have R3 = span(T ∪ S ′ ). To see this, we must show

that every v = (v1 , v2 , v3 ) is a linear combination of (1, −1, 0), (1, 0, −1), and (1, 0, 0). To

find such a linear combination, we must solve the equation

(v1 , v2 , v3 ) = c1 (1, −1, 0) + c2 (1, 0, −1) + c3 (1, 0, 0).

(∗)

This equation is equivalent to the system of equations

v1 = c1 + c2 + c3

v2 = −c1

v3 = −c2

Substituting the second two equations into the first, we find that

c1 = −v2 ,

c2 = −v3 ,

c3 = v1 + v2 + v3

satisfies (∗). Thus R3 = span(T ∪ S ′ ), as desired.

Proof of replacement theorem. We argue by induction on ℓ. The base case ℓ = 0 is trivial,

taking S ′ = S. For the inductive step, assume the theorem is true for some fixed ℓ, and

suppose T = {w1 , w2 , . . . , wℓ , wℓ+1 }. By the inductive hypothesis, there exists a set S ′′ ⊆

S of m − ℓ elements such that V = span(T ′ ∪ S ′′ ) where T ′ = {w1 , w2 , . . . , wℓ }. Since

wℓ+1 ∈ V , we can write

wℓ+1 = c1 w1 + c2 w2 + · · · + cℓ wℓ + d1 v1 + d2 v2 + · · · + dm−ℓ vm−ℓ

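for some coefficients c1 , . . . , cℓ , d1 , . . . , dm−ℓ ∈ R, where we have relabeled the vectors so that S ′′ = {v1 , . . . , vm−ℓ }. Since T is linearly independent, wℓ+1 ∉ span T ′ , so we must have ℓ < m. Further, there exists some di ≠ 0. Without loss of generality, assume dm−ℓ ≠ 0. Then we can write vm−ℓ as

$$
\mathbf{v}_{m-\ell} = -\frac{1}{d_{m-\ell}} \Bigl( c_1 \mathbf{w}_1 + c_2 \mathbf{w}_2 + \cdots + c_\ell \mathbf{w}_\ell + d_1 \mathbf{v}_1 + d_2 \mathbf{v}_2 + \cdots + d_{m-\ell-1} \mathbf{v}_{m-\ell-1} - \mathbf{w}_{\ell+1} \Bigr).
$$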

Let S ′ = {v1 , . . . , vm−ℓ−1 }. The equation above shows that vm−ℓ ∈ span(T ∪ S ′ ), hence

V = span(T ′ ∪ S ′′ ) ⊆ span(T ∪ S ′ ). By assumption span(T ∪ S ′ ) ⊆ V , so we have

V = span(T ∪ S ′ ), as desired.

Proposition 17. Let V ⊆ Rn be a vector subspace. Then

(a) every basis for V has the same number of elements, called the dimension of V , denoted

dim V

(b) dim Rn = n

(c) a set B ⊆ V is a basis for V if and only if B is linearly independent and contains exactly

dim V vectors

(d) every linearly independent set T ⊆ V can be extended to a basis B of V where T ⊆ B

Proof. (a) Suppose S, T ⊆ V are both bases for V , and assume that T contains ℓ elements

and S contains m elements. We must show that ℓ = m. Since T is linearly independent

and T ⊆ span S, the replacement theorem implies that ℓ ≤ m. Symmetrically, since S is linearly independent and S ⊆ span T , the replacement theorem gives m ≤ ℓ. Thus m = ℓ.

(b) Since B = {e1 , e2 , . . . , en } is a basis for Rn with n elements, dim Rn = n.

(c) First suppose B is a linearly independent set of dim V elements. Let B ′ be a basis for V

(which contains dim V elements by definition). Applying the replacement theorem to

B ⊂ span B ′ , we find that span B = span B ′ = V . Hence B is a basis for V . On the other hand, by part (a), every basis B for V has dim V elements and is linearly independent by Proposition 13.

(d) Choose your favorite basis S for V and apply the replacement theorem to T ⊂ V = span S. We obtain a set S ′ where span(T ∪ S ′ ) = V . Note that T ∪ S ′ contains at most dim V vectors. We must show that T ∪ S ′ is linearly independent. If T ∪ S ′ is linearly dependent, then we can find a proper subset T ′ ∪ S ′′ which is linearly independent and has the same span as T ∪ S ′ . But then we have found a basis of V with fewer than dim V elements, contradicting (a).

Exercise 18. Let

S = {(1, 2, 3), (3, 2, 1), (1, 1, 1), (1, 0, −1)} ⊆ R3 .

and take V = span S. Find a basis for V and compute dim V . Extend the basis for V to a

basis for R3 .

2 Geometry of Rn

2.1 Geometric interpretation of vector space operations We can think of vectors in Rn as

quantities with magnitude and direction: arrows. For example, the vector v = (v1 , v2 , . . . , vn )

is an arrow whose “tail” is at the origin and whose “head” is located at the point (v1 , v2 , . . . , vn ).

We think of two arrows as representing the same vector if they have the same magnitude

and direction, regardless of where we place the tail of the arrows. Thus the arrow from

(0, 0, . . . , 0) to (v1 , v2 , . . . , vn ) and the arrow from (1, 1, . . . , 1) to (v1 +1, v2 +1, . . . , vn +

1) represent the same vector v. In general, the coordinates of a vector are obtained by subtracting the coordinates of the tail from the coordinates of the head.

Using this arrow representation of vectors, we give a geometric interpretation of vector

addition and scalar multiplication. For u and v in Rn , place the arrow associated with u with

its tail at the origin, 0, and place v with its tail at the head of u. Then the arrow associated

with u + v has its tail at the origin (the tail of u) and its head at the head of v. Using

this picture, we can give a geometric interpretation of the commutativity of addition via the

“parallelogram law”: the arrows for u and v, placed head to tail in either order, form the sides of a parallelogram whose diagonal goes from 0 to u + v = v + u.

Given the arrow associated with a vector v and a scalar c ∈ R, the arrow associated

with cv is the arrow of v scaled by a factor of c. For example, if c > 0 then cv points in the

same direction as v, but its length is c times the length of v. If c < 0, then cv points in the

opposite direction of v and has length |c| times the length of v.

2.2 Inner products and orthogonality Inner products (also commonly referred to as dot products) give a means of quantifying length and angles of vectors. An inner product in Rn is a function that takes a pair of vectors u = (u1 , u2 , . . . , un ) and v = (v1 , v2 , . . . , vn ) and gives a real number, denoted ⟨u, v⟩. The standard inner product in Rn is defined by

$$
\langle \mathbf{u}, \mathbf{v} \rangle = \sum_{i=1}^{n} u_i v_i = u_1 v_1 + u_2 v_2 + \cdots + u_n v_n .
$$

This inner product satisfies the inner product axioms as described in the following proposition, whose proof is left as an exercise to the reader.

Proposition 19 (Inner product axioms). Let u, v, w ∈ Rn and c ∈ R. Then ⟨·, ·⟩ satisfies the following properties.

symmetry ⟨u, v⟩ = ⟨v, u⟩

linearity ⟨cu, v⟩ = c ⟨u, v⟩ and ⟨u + v, w⟩ = ⟨u, w⟩ + ⟨v, w⟩

positive definiteness ⟨u, u⟩ ≥ 0 with ⟨u, u⟩ = 0 if and only if u = 0.

Any (real) vector space V with an operator ⟨·, ·⟩ : V × V → R satisfying the inner

product axioms is known as an inner product space.

Definition 20. Two vectors u, v ∈ Rn are called orthogonal if ⟨u, v⟩ = 0. A subset S ⊆ Rn is an orthogonal set if every pair of distinct vectors u, v ∈ S is orthogonal. A vector v ∈ Rn is normal if ⟨v, v⟩ = 1. A set S ⊆ Rn is orthonormal if S is orthogonal, and each v ∈ S is normal:

$$
(\forall\, \mathbf{u}, \mathbf{v} \in S) \quad \langle \mathbf{u}, \mathbf{v} \rangle =
\begin{cases}
1 & \text{if } \mathbf{u} = \mathbf{v} \\
0 & \text{if } \mathbf{u} \neq \mathbf{v}.
\end{cases}
$$

Proposition 21. Let S ⊆ Rn be a finite orthogonal set not containing 0. Then S is linearly

independent.

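Proof. Suppose S = {v1 , v2 , . . . , vk } is orthogonal and that

$$
\sum_{i=1}^{k} c_i \mathbf{v}_i = \mathbf{0}.
$$

We must show that ci = 0 for all i. To this end, take some vj ∈ S and compute the inner product of vj with both sides of the equation above:

$$
\begin{aligned}
\Bigl\langle \sum_{i=1}^{k} c_i \mathbf{v}_i , \mathbf{v}_j \Bigr\rangle = \langle \mathbf{0}, \mathbf{v}_j \rangle
&\implies \sum_{i=1}^{k} c_i \langle \mathbf{v}_i , \mathbf{v}_j \rangle = 0 && \text{(linearity)} \\
&\implies c_j \langle \mathbf{v}_j , \mathbf{v}_j \rangle = 0 && (\langle \mathbf{v}_i , \mathbf{v}_j \rangle = 0 \text{ for } i \neq j) \\
&\implies c_j = 0 && \text{(positive definiteness, since } \mathbf{v}_j \neq \mathbf{0}\text{)}.
\end{aligned}
$$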

Thus, cj = 0 for all j, as desired.

Corollary 22. Orthonormal sets not containing 0 are linearly independent.

Corollary 23. An orthogonal subset S ⊆ Rn contains at most n nonzero vectors.

We refer to a basis B ⊆ Rn which is orthonormal as an orthonormal basis. We will see

that orthonormal bases are incredibly computationally and theoretically convenient.

Exercise 24. Show that the set

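$$
B = \left\{ \left( \frac{1}{\sqrt{2}}, \frac{1}{\sqrt{2}} \right), \left( \frac{1}{\sqrt{2}}, \frac{-1}{\sqrt{2}} \right) \right\}
$$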

is an orthonormal basis for R2 .

Exercise 25. Show that the standard basis B = {e1 , e2 , . . . , en } is an orthonormal basis for

Rn .

2.3 Length and angle Given a vector v = (v1 , v2 , . . . , vn ) ∈ Rn , we define the norm (or

length, or modulus) of v by

$$
|\mathbf{v}| = \sqrt{\langle \mathbf{v}, \mathbf{v} \rangle} = \sqrt{v_1^2 + v_2^2 + \cdots + v_n^2} .
$$

We call a vector v a unit vector if |v| = 1.

Suppose θ is the angle between the arrows associated with vectors u and v, when the

vectors have the same tail. Then we have

$$
\cos \theta = \frac{\langle \mathbf{u}, \mathbf{v} \rangle}{|\mathbf{u}| \, |\mathbf{v}|} . \tag{1}
$$

Equation (1) is the single most important geometric fact about inner products. Indeed, it

connects algebra (the inner product) with trigonometry (the cosine function). In particular,

(1) shows that u and v are orthogonal if and only if they form a right angle.
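The standard inner product, the norm, and Equation (1) are easy to compute numerically. The following is a minimal sketch with floating-point arithmetic; the function names `inner`, `norm`, and `angle` are illustrative and not from the original notes, and `angle` assumes both vectors are nonzero.

```python
import math

def inner(u, v):
    """Standard inner product <u, v> = u1*v1 + ... + un*vn."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same number of coordinates")
    return sum(a * b for a, b in zip(u, v))

def norm(v):
    """Euclidean norm |v| = sqrt(<v, v>)."""
    return math.sqrt(inner(v, v))

def angle(u, v):
    """Angle in radians between nonzero vectors u and v, from Equation (1)."""
    c = inner(u, v) / (norm(u) * norm(v))
    # Clamp to [-1, 1] to guard against floating-point rounding.
    return math.acos(max(-1.0, min(1.0, c)))

print(norm((3, 4)))             # 5.0
print(angle((1, 0), (0, 1)))    # a right angle, pi/2
print(angle((1, 1), (1, 0)))    # approximately pi/4
```

The clamp before `acos` is a design choice for robustness: for nearly parallel vectors, rounding can push the computed cosine slightly outside [−1, 1].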

√

Exercise 26. Let u = (1, 2, 3), v = (2, 2, 3), and w = (3/2, 3/2, 3). Show that u, v, and

w form an equilateral triangle.

Exercise 27. Show that |u|² + |v|² = |u + v|² if and only if u and v are orthogonal. This is

the Pythagorean theorem.

Exercise 28. Show that the points u = (1, 3, 0), v = (3, 2, 1), w = (4, 3, 1) form a right

triangle.

2.4 Cauchy-Schwarz and triangle inequalities The Cauchy-Schwarz inequality has been called

the single most important inequality in mathematics. We state and prove this inequality

here.

Proposition 29 (Cauchy-Schwarz inequality). Let u, v ∈ Rn . Then

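$$
|\langle \mathbf{u}, \mathbf{v} \rangle| \leq |\mathbf{u}| \, |\mathbf{v}| .
$$

Proof. We will prove the equivalent formulation

$$
\langle \mathbf{u}, \mathbf{v} \rangle^2 \leq |\mathbf{u}|^2 \, |\mathbf{v}|^2 .
$$

If v = 0, both sides are 0, so assume v ≠ 0. To this end, let λ ∈ R. Using the properties of inner products, we have

$$
\begin{aligned}
0 &\leq \langle \mathbf{u} - \lambda \mathbf{v}, \mathbf{u} - \lambda \mathbf{v} \rangle \\
&= \langle \mathbf{u}, \mathbf{u} \rangle - 2\lambda \langle \mathbf{u}, \mathbf{v} \rangle + \lambda^2 \langle \mathbf{v}, \mathbf{v} \rangle \\
&= |\mathbf{u}|^2 - 2\lambda \langle \mathbf{u}, \mathbf{v} \rangle + \lambda^2 |\mathbf{v}|^2 .
\end{aligned}
$$

Choosing λ = ⟨u, v⟩ / |v|² minimizes the final expression and gives

$$
0 \leq |\mathbf{u}|^2 - 2 \frac{\langle \mathbf{u}, \mathbf{v} \rangle^2}{|\mathbf{v}|^2} + \frac{\langle \mathbf{u}, \mathbf{v} \rangle^2}{|\mathbf{v}|^2} .
$$

Simplifying this expression and solving for ⟨u, v⟩² gives the desired result.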

A very useful consequence of the Cauchy-Schwarz inequality is the triangle inequality.

Corollary 30 (Triangle inequality). Let u, v ∈ Rn . Then |u + v| ≤ |u| + |v|.

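Proof. We compute

$$
\begin{aligned}
|\mathbf{u} + \mathbf{v}|^2 &= \langle \mathbf{u} + \mathbf{v}, \mathbf{u} + \mathbf{v} \rangle \\
&= |\mathbf{u}|^2 + 2 \langle \mathbf{u}, \mathbf{v} \rangle + |\mathbf{v}|^2 \\
&\leq |\mathbf{u}|^2 + 2 |\mathbf{u}| \, |\mathbf{v}| + |\mathbf{v}|^2 && \text{(Cauchy-Schwarz)} \\
&= (|\mathbf{u}| + |\mathbf{v}|)^2 .
\end{aligned}
$$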

The triangle inequality follows from taking square roots of the first and last expression.
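As a sanity check (not a proof), both inequalities can be tested on randomly generated vectors. The sketch below is an illustration only: the helper `check_inequalities` and its parameters are hypothetical, and a small tolerance `tol` is an assumption made to absorb floating-point rounding.

```python
import math
import random

def inner(u, v):
    """Standard inner product on R^n."""
    return sum(a * b for a, b in zip(u, v))

def norm(v):
    """Euclidean norm."""
    return math.sqrt(inner(v, v))

def check_inequalities(trials=1000, n=5, seed=0, tol=1e-9):
    """Test Cauchy-Schwarz and the triangle inequality on random vectors.
    Returns False as soon as either inequality fails beyond the tolerance."""
    rng = random.Random(seed)
    for _ in range(trials):
        u = [rng.uniform(-10, 10) for _ in range(n)]
        v = [rng.uniform(-10, 10) for _ in range(n)]
        s = [a + b for a, b in zip(u, v)]
        if abs(inner(u, v)) > norm(u) * norm(v) + tol:
            return False
        if norm(s) > norm(u) + norm(v) + tol:
            return False
    return True

print(check_inequalities())
```

Equality in Cauchy-Schwarz occurs for parallel vectors, for instance u = (1, 2) and v = (2, 4), where both sides equal 10.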

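2.5 Projection Let u, v ∈ Rn with u ≠ 0. We define the projection of v onto u by

$$
\operatorname{proj}_{\mathbf{u}} \mathbf{v} = \frac{\langle \mathbf{v}, \mathbf{u} \rangle}{|\mathbf{u}|^2} \, \mathbf{u} .
$$

Note that in the case where u is a unit vector (|u| = 1), $\operatorname{proj}_{\mathbf{u}} \mathbf{v} = \langle \mathbf{v}, \mathbf{u} \rangle \mathbf{u}$. We define the orthogonal complement of v relative to u by

$$
\operatorname{orth}_{\mathbf{u}} \mathbf{v} = \mathbf{v} - \operatorname{proj}_{\mathbf{u}} \mathbf{v} .
$$

It is clear from the definitions of $\operatorname{proj}_{\mathbf{u}} \mathbf{v}$ and $\operatorname{orth}_{\mathbf{u}} \mathbf{v}$ that

$$
\mathbf{v} = \operatorname{proj}_{\mathbf{u}} \mathbf{v} + \operatorname{orth}_{\mathbf{u}} \mathbf{v} . \tag{2}
$$

Further, $\operatorname{proj}_{\mathbf{u}} \mathbf{v}$ and $\operatorname{orth}_{\mathbf{u}} \mathbf{v}$ are orthogonal:

$$
\begin{aligned}
\langle \operatorname{proj}_{\mathbf{u}} \mathbf{v}, \operatorname{orth}_{\mathbf{u}} \mathbf{v} \rangle
&= \left\langle \frac{\langle \mathbf{v}, \mathbf{u} \rangle}{|\mathbf{u}|^2} \mathbf{u}, \; \mathbf{v} - \frac{\langle \mathbf{v}, \mathbf{u} \rangle}{|\mathbf{u}|^2} \mathbf{u} \right\rangle \\
&= \left\langle \frac{\langle \mathbf{v}, \mathbf{u} \rangle}{|\mathbf{u}|^2} \mathbf{u}, \; \mathbf{v} \right\rangle - \left\langle \frac{\langle \mathbf{v}, \mathbf{u} \rangle}{|\mathbf{u}|^2} \mathbf{u}, \; \frac{\langle \mathbf{v}, \mathbf{u} \rangle}{|\mathbf{u}|^2} \mathbf{u} \right\rangle \\
&= \frac{\langle \mathbf{v}, \mathbf{u} \rangle}{|\mathbf{u}|^2} \langle \mathbf{u}, \mathbf{v} \rangle - \left( \frac{\langle \mathbf{v}, \mathbf{u} \rangle}{|\mathbf{u}|^2} \right)^{2} \langle \mathbf{u}, \mathbf{u} \rangle \\
&= 0.
\end{aligned}
$$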

Thus (2) decomposes v into a sum of vectors, one of which is parallel to u and the other of

which is orthogonal to u.
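The decomposition (2) can be computed directly from the definitions. The following sketch is illustrative rather than part of the original notes: `proj` and `orth` are hypothetical helper names whose first argument is the vector projected onto, and `Fraction` arithmetic is used so that the orthogonality check is exact.

```python
from fractions import Fraction

def inner(u, v):
    """Standard inner product on R^n."""
    return sum(a * b for a, b in zip(u, v))

def proj(u, v):
    """Projection of v onto the nonzero vector u: (<v, u> / |u|^2) u."""
    uu = inner(u, u)
    if uu == 0:
        raise ValueError("cannot project onto the zero vector")
    c = Fraction(inner(v, u)) / Fraction(uu)
    return tuple(c * a for a in u)

def orth(u, v):
    """Component of v orthogonal to u: v - proj_u v."""
    return tuple(a - b for a, b in zip(v, proj(u, v)))

u, v = (1, 2, 2), (3, 0, 3)
p, o = proj(u, v), orth(u, v)
print(p)            # parallel part, equal to (1, 2, 2) here
print(o)            # orthogonal part, equal to (2, -2, 1) here
print(inner(p, o))  # 0, confirming orthogonality
```

In this example ⟨v, u⟩ = 9 = |u|², so the projection is u itself and the remainder (2, −2, 1) is orthogonal to u.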

2.6 Orthonormal bases The following is a useful property of orthonormal bases.

Proposition 31. Suppose V ⊆ Rn is a vector subspace and B = {u1 , u2 , . . . , uk } is an

orthonormal basis for V . Then for any v ∈ V ,

$$
\mathbf{v} = \sum_{i=1}^{k} \langle \mathbf{v}, \mathbf{u}_i \rangle \, \mathbf{u}_i .
$$

Problem 32. Prove Proposition 31.

Given that orthonormal bases make our lives computationally easy, it would be nice to

have a method for generating orthonormal bases from arbitrary linearly independent sets.

It turns out that this is possible, as the following proposition shows.

Proposition 33. Let S ⊆ Rn be a linearly independent set. Then there exists an orthonormal

set B such that span B = span S. In particular, every vector subspace V ⊆ Rn has an

orthonormal basis.

Problem 34. Prove Proposition 33. Let S = {v1 , v2 , . . . , vk }. The idea is to use induction

on k. If k = 1, then S is trivially orthogonal. To normalize S, we can form

$$
\mathbf{u}_1 = \frac{\mathbf{v}_1}{|\mathbf{v}_1|} .
$$

For the inductive step, if the theorem holds for k, we must show it holds for k + 1. By the inductive hypothesis, given S ′ = {v1 , . . . , vk } we can find an orthonormal set B ′ = {u1 , . . . , uk } with span S ′ = span B ′ . Now form

$$
\mathbf{v}'_{k+1} = \mathbf{v}_{k+1} - \sum_{i=1}^{k} \langle \mathbf{v}_{k+1}, \mathbf{u}_i \rangle \, \mathbf{u}_i .
$$

Argue that $\mathbf{v}'_{k+1}$ is nonzero (because S is linearly independent) and orthogonal to all ui ∈ B ′ . Thus taking $\mathbf{u}_{k+1} = \mathbf{v}'_{k+1} / |\mathbf{v}'_{k+1}|$ and B = B ′ ∪ {uk+1 } gives an orthonormal set with the same span as S. This method is called the Gram-Schmidt orthogonalization process for finding an orthonormal basis.
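The inductive construction above can be turned into a short loop. The sketch below follows the formula in the text (the classical variant, which subtracts the components of the original vector along the previously built vectors) using floating-point arithmetic. The function name `gram_schmidt` and the tolerance `tol` used to detect dependent input are assumptions made for illustration.

```python
import math

def inner(u, v):
    """Standard inner product on R^n."""
    return sum(a * b for a, b in zip(u, v))

def gram_schmidt(vectors, tol=1e-12):
    """Return an orthonormal list with the same span as the linearly
    independent input list, built one vector at a time."""
    basis = []
    for v in vectors:
        # Subtract the component of v along each previously built u.
        coeffs = [inner(v, u) for u in basis]
        w = list(v)
        for c, u in zip(coeffs, basis):
            w = [wi - c * ui for wi, ui in zip(w, u)]
        length = math.sqrt(inner(w, w))
        if length <= tol:
            raise ValueError("input vectors are (numerically) linearly dependent")
        # Normalize so the new vector has length 1.
        basis.append([wi / length for wi in w])
    return basis

B = gram_schmidt([(1, 1, 0), (1, 0, 1), (0, 1, 1)])
for i, ui in enumerate(B):
    for j, uj in enumerate(B):
        # Prints approximately 1 when i == j and approximately 0 otherwise.
        print(i, j, round(inner(ui, uj), 12))
```

In floating point, the classical variant can lose orthogonality for nearly dependent inputs; numerical libraries typically use a modified variant or a QR factorization instead.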

Exercise 35. Use the Gram-Schmidt orthogonalization process to convert

S = {(1, 1, 0), (1, 0, −1), (0, −1, 1)}

into an orthonormal basis for R3 .

2.7 Lines and planes A line in Rn is a set of the form

L = {x0 + tv | t ∈ R} .

We call L as above the line through x0 with direction vector v ≠ 0. Given two lines

L = {x0 + tv | t ∈ R}

and

L′ = {y0 + tw | t ∈ R}

we say that L and L′ are parallel if there exists u ∈ Rn such that

L′ = L + {u} = {x + u | x ∈ L} .

L and L′ are intersecting if L ∩ L′ ≠ ∅. If L and L′ are neither parallel nor intersecting, we say that they are skew.

Problem 36. Show that L and L′ are parallel if and only if their direction vectors v and w

are parallel. That is, there exists some c ∈ R such that w = cv.

Exercise 37. Determine which of the following lines are parallel, intersecting, and skew:

L1 = {(0, 1, 0) + t(1, 0, 1) | t ∈ R} ,

L2 = {(2, 0, 2) + t(1, −1, 1) | t ∈ R} ,

L3 = {(2, 1, 2) + t(−2, 0, −2) | t ∈ R} .

Given a line L = {x0 + tv} ⊆ Rn and a point w ∈ Rn , we define the distance from

w to L to be the minimum distance from w to any point x ∈ L:

dist(w, L) = min {|w − x| | x ∈ L} .

Problem 38. Prove that the closest point to w in L is x = x0 + projv (w − x0 ).

Therefore,

dist(w, L) = |orthv (w − x0 )| .

In particular, show that this choice of x satisfies ⟨w − x, v⟩ = 0 and apply the Pythagorean

theorem.
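The formula in Problem 38 gives a direct way to compute the distance from a point to a line. The sketch below is an illustration that takes the stated formula for granted rather than proving it; the function name `dist_point_line` and its return convention (distance together with the closest point) are assumptions.

```python
import math

def inner(u, v):
    """Standard inner product on R^n."""
    return sum(a * b for a, b in zip(u, v))

def dist_point_line(w, x0, v):
    """Distance from the point w to the line {x0 + t v}, with v nonzero.
    Returns (distance, closest_point)."""
    vv = inner(v, v)
    if vv == 0:
        raise ValueError("direction vector must be nonzero")
    d = [wi - xi for wi, xi in zip(w, x0)]   # w - x0
    t = inner(d, v) / vv                     # coefficient of proj_v(w - x0)
    closest = tuple(xi + t * vi for xi, vi in zip(x0, v))
    residual = [wi - ci for wi, ci in zip(w, closest)]  # orth_v(w - x0)
    return math.sqrt(inner(residual, residual)), closest

dist, x = dist_point_line((1, 2, 0), (1, 0, 0), (0, 1, 1))
print(dist, x)
```

For this sample line, the closest point is (1, 1, 1) and the distance is √2; the residual (0, 1, −1) is orthogonal to the direction vector (0, 1, 1), as the problem predicts.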

Exercise 39. Compute the distance from (1, 1, 1) to the line

L = {(1, 1, −1) + t(1, 0, −1)} .

A plane in Rn is a subset of the form

P = {x0 + tv + sw | s, t ∈ R}

where v and w are linearly independent. We say the planes P1 and P2 are parallel if there

exists u ∈ Rn such that

P2 = P1 + {u} = {x + u | x ∈ P1 } .

Problem 40. Show that

P1 = {x1 + tv1 + sw1 | s, t ∈ R}

and

P2 = {x2 + tv2 + sw2 | s, t ∈ R}

are parallel if and only if

span {v1 , w1 } = span {v2 , w2 } .

2.8 Cross products in R3 In three dimensional space R3 , there is a very useful “multiplication”

defined on vectors: the cross product. For v = (v1 , v2 , v3 ) and w = (w1 , w2 , w3 ), we define

v × w = (v2 w3 − v3 w2 , v3 w1 − v1 w3 , v1 w2 − v2 w1 ).

The usefulness of the cross product is elucidated by the following proposition.

Proposition 41 (Characterization of the cross product). For all v, w ∈ R3 , the following

hold:

(a) v × w is orthogonal to both v and w,

(b) |v × w| = |v| |w| sin θ where θ is the angle between v and w,

(c) the direction of v × w is given by the “right hand rule.”

It turns out that the cross product is completely determined by the three properties

above.
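The coordinate formula for the cross product can be checked numerically against parts (a) and (b) of Proposition 41. The sketch below is only an illustration on one example, not a proof. The helper names are hypothetical, and part (b) is checked in the squared form |v × w|² = |v|² |w|² − ⟨v, w⟩², which follows from (b) together with sin² θ = 1 − cos² θ and Equation (1).

```python
def inner(u, v):
    """Standard inner product on R^n."""
    return sum(a * b for a, b in zip(u, v))

def cross(v, w):
    """Cross product of two vectors in R^3, from the coordinate formula."""
    v1, v2, v3 = v
    w1, w2, w3 = w
    return (v2 * w3 - v3 * w2, v3 * w1 - v1 * w3, v1 * w2 - v2 * w1)

v, w = (1, 2, 3), (4, 5, 6)
n = cross(v, w)
print(n)                            # (-3, 6, -3)
print(inner(n, v), inner(n, w))     # 0 0: orthogonal to both factors
# Squared form of part (b): both sides equal 54 for this example.
print(inner(n, n), inner(v, v) * inner(w, w) - inner(v, w) ** 2)
```

With integer inputs every quantity here is an exact integer, so the comparisons involve no rounding.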

Problem 42. Prove parts (a) and (b) of Proposition 41. For part (b), use the fact that sin² θ = 1 − cos² θ and Equation (1).

Exercise 43. Show that for all v, w ∈ R3

v × w = −w × v.

In particular, conclude that v × v = 0.

Exercise 44. Find vectors u, v, w ∈ R3 such that

(u × v) × w ≠ u × (v × w).

Exercise 45. Let u, v, w ∈ R3 and c ∈ R. Show that

(a) (u + v) × w = (u × w) + (v × w),

(b) (cv) × w = c(v × w).

Cross products give a wonderful means of understanding planes in R3 . Let

P = {x0 + tv + sw | s, t ∈ R} .

We define the normal vector n of P to be

n = v × w.

Exercise 46. Show that for all x ∈ P , n is orthogonal to x − x0 . (Hint: use Proposition 41

and the result of Exercise 45).

Problem 47. Use the previous exercise to show that every plane P in R3 can be expressed

in the form

P = {(x, y, z) ∈ R3 | ax + by + cz = d}

for some a, b, c, d ∈ R. This is the standard form of a plane in R3 . (Hint: take a, b, c with

n = (a, b, c).)
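Following the hint, the coefficients of the standard form can be computed from a parametric description: take (a, b, c) = n = v × w and d = ⟨n, x0⟩. The sketch below illustrates that recipe on a sample plane and does not replace the argument requested in the problem; the function name `plane_standard_form` is a hypothetical choice.

```python
def inner(u, v):
    """Standard inner product on R^n."""
    return sum(a * b for a, b in zip(u, v))

def cross(v, w):
    """Cross product of two vectors in R^3."""
    v1, v2, v3 = v
    w1, w2, w3 = w
    return (v2 * w3 - v3 * w2, v3 * w1 - v1 * w3, v1 * w2 - v2 * w1)

def plane_standard_form(x0, v, w):
    """Return (a, b, c, d) with ax + by + cz = d describing the plane
    {x0 + t v + s w}, where v and w are linearly independent."""
    n = cross(v, w)
    if n == (0, 0, 0):
        raise ValueError("v and w must be linearly independent")
    return (*n, inner(n, x0))

# Plane through (1, 0, 0), (0, 1, 0) and (0, 0, 1).
a, b, c, d = plane_standard_form((1, 0, 0), (-1, 1, 0), (-1, 0, 1))
print(a, b, c, d)  # 1 1 1 1, i.e. x + y + z = 1
```

Each of the three defining points satisfies x + y + z = 1, which is a quick consistency check on the output.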

Problem 48. Let P1 and P2 be planes in R3 . Show that P1 and P2 are parallel if and only if

their normal vectors are parallel.

Exercise 49. Consider the planes P1 and P2 in R3 given by

P1 = {(2, 2, 0) + s(1, 1, 1) + t(0, 0, 1) | s, t ∈ R}

and

P2 = {(2, 0, 1) + s(1, 0, 0) + t(1, −1, 0) | s, t ∈ R} .

Show that P1 ∩ P2 is a line L and find a representation of L in the form

L = {x0 + tv | t ∈ R} .

(Hint: convince yourself that v should be orthogonal to normal vectors for both P1 and P2 .)
