* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download Robust Statistics - COST Action IC0702

Survey

Document related concepts

Linear least squares (mathematics) wikipedia , lookup

Degrees of freedom (statistics) wikipedia , lookup

Taylor's law wikipedia , lookup

Bootstrapping (statistics) wikipedia , lookup

Statistics education wikipedia , lookup

Foundations of statistics wikipedia , lookup

Transcript

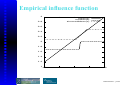

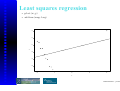

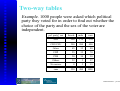

Robust Statistics Frank Klawonn [email protected], [email protected] Data Analysis and Pattern Recognition Lab Department of Computer Science University of Applied Sciences Braunschweig/Wolfenbüttel, Germany Bioinformatics & Statistics Helmholtz Centre for Infection Research Braunschweig, Germany Robust Statistics – p.1/98 Outline Motivation: Mean or median What is robust statistics? M-estimators Robust regression Median polish Summary and references Robust Statistics – p.2/98 Motivation: Mean or median Imagine a small town with 20 thousand inhabitants. In average, each inhabitant has a capital of 10 thousand $. Assume a very rich man named Bill G. owning a capital of 20 billion $ decides to move to this town. After Bill G. has settled there, the inhabitants own an average capital of roughly one million $. Robust Statistics – p.3/98 Motivation: Mean or median Imagine a small town with 20 thousand inhabitants. In average, each inhabitant has a capital of 10 thousand $. Assume a very rich man named Bill G. owning a capital of 20 billion $ decides to move to this town. After Bill G. has settled there, the inhabitants own an average capital of roughly one million $. And all but one inhabitants might own less capital than average. Robust Statistics – p.4/98 (Empirical) median denotes a sample in ascending order. Definition. The (sample or empirical) median denoted by , is given by ·½¾ ¾ ¾ · ½ if is odd if is even Robust Statistics – p.5/98 (Empirical) median m e d ia n n o d d n e v e n m e d ia n Robust Statistics – p.6/98 Motivation: Mean or median A less extreme example: Robust Statistics – p.7/98 Motivation: Mean or median A less extreme example: Robust Statistics – p.8/98 Motivation: Mean or median A less extreme example: Robust Statistics – p.9/98 What is a good estimator? Assume, we want to estimate the expected value of a normal distribution from which a sample was generated. For a symmetric distributions like the normal distribution, the expected value and median are equal. The median of a (continuous) probability distribution, representing the random variable , is the 50%-quantile, i.e. Robust Statistics – p.10/98 What is a good estimator? Classical statistics: (a) The estimator should be correct in average (unbiased), at least for large sample sizes (asymptotically unbiased). (b) The estimator should have a small variance (efficiency). (c) With increasing sample size the variance of the estimator should tend to zero. (a) and (b) together guarantee consistency: With increasing sample size, the estimator converges with probability one to the true value of the parameter to be estimated. Robust Statistics – p.11/98 What is a good estimator? Should we choose the mean or the median to estimate the expected value of our normal distribution? Both estimators are consistent. Robust Statistics – p.12/98 Mean or median 2500 2000 1500 Frequency 0 500 1000 1500 1000 500 0 Frequency 2000 2500 3000 Histogram for the estimation of the median, n= 20 3000 Histogram for the estimation of the mean, n= 20 −1 0 1 x mean 2 −1 0 1 x median Robust Statistics – p.13/98 2 Mean or median 2500 2000 1500 Frequency 0 500 1000 1500 1000 500 0 Frequency 2000 2500 3000 Histogram for the estimation of the median, n= 100 3000 Histogram for the estimation of the mean, n= 100 −1 0 1 x mean 2 −1 0 1 x median Robust Statistics – p.14/98 2 Mean or median 2500 2000 1500 Frequency 0 500 1000 1500 1000 500 0 Frequency 2000 2500 3000 Histogram for the estimation of the mean, n= 20 3000 Histogram for the estimation of the mean, n= 20 −1 0 1 x mean 2 −1 0 1 xxx mean (5% noise) Robust Statistics – p.15/98 2 Mean or median 2500 2000 1500 Frequency 0 500 1000 1500 1000 500 0 Frequency 2000 2500 3000 Histogram for the estimation of the mean, n= 100 3000 Histogram for the estimation of the mean, n= 100 −1 0 1 x mean 2 −1 0 1 x mean (5% noise) Robust Statistics – p.16/98 2 Mean or median 2500 2000 1500 Frequency 0 500 1000 1500 1000 500 0 Frequency 2000 2500 3000 Histogram for the estimation of the median, n= 20 3000 Histogram for the estimation of the median, n= 20 −1 0 1 x median 2 −1 0 1 x median (5% noise) Robust Statistics – p.17/98 2 Mean or median 2500 2000 1500 Frequency 0 500 1000 1500 1000 500 0 Frequency 2000 2500 3000 Histogram for the estimation of the median, n= 100 3000 Histogram for the estimation of the median, n= 100 −1 0 1 x median 2 −1 0 1 x median (5% noise) Robust Statistics – p.18/98 2 Mean or median Under the ideal assumption that the data were sampled from a normal distribution, the mean is a more efficient estimator than the median. If a small fraction of the data is for some reason erroneous or generated by another distribution, the mean can even become a biased estimator and lose consistency. The median is more or less not affected if a small fraction of the data is corrupted. Robust Statistics – p.19/98 Robust statistics Hampel et al. (1986): In a broad informal sense, robust statistics is a body of knowledge, partly formalized into “theories of robustness”, relating to deviations from idealized assumptions in statistics. Robust Statistics – p.20/98 Robust statistics idealized assumption: The data are sampled from the (possibly multivariate) random variable with cumulative distribution function . modified assumption: The data are sampled from a random variable with -contaminated cumulative distribution function outliers : The assumed ideal model distribution : (small) probability for outliers outliers : unknown and unspecified distribution Robust Statistics – p.21/98 Estimators (Statistics) Statistics is concerned with functionals (or better ) called statistics which are used for parameter estimation and other purposes. The mean the median or the (empirical) variance are typical examples for estimators. Robust Statistics – p.22/98 Estimators (Statistics) Two views of estimators: Applied to (finite) samples resulting in a concrete estimation (a realization of a random experiment consisting of the drawn sample). As random variables (applied to random variables). This enables us to investigate the (theoretical) properties of estimators. Samples are not needed for this purpose. Robust Statistics – p.23/98 Estimators (Statistics) Assuming an infinite sample size, the limit in probability can be considered (in case it exists). is then again a random variable. For typical estimators, is a constant random variable, i.e. the limit converges (with probability 1) to a unique value. Robust Statistics – p.24/98 Fisher consistency An estimator is called Fisher consistent for a paramater of probability distribution if i.e. for large (infinite) sample sizes, the estimator converges with probability 1 to the true value of the parameter to be estimated. Robust Statistics – p.25/98 Empirical influence function Given a sample and an estimator , what is the influence of a single observation on ? Empirical influence function: EIF Vary between and . Robust Statistics – p.26/98 Empirical influence function Consider the (ordered) sample 0.4, 1.2, 1.4, 1.5, 1.7, 2.0, 2.9, 3.8, 3.8, 4.2 med The (-)trimmed mean is the mean of the sample from which the lowest and highest % values are removed. (For the mean: , for the median: .) Robust Statistics – p.27/98 Empirical influence function 3 mean(x) median(x) trimmedMean(x) 2.8 2.6 2.4 2.2 2 1.8 1.6 1.4 1.2 1 -10 -5 0 5 10 Robust Statistics – p.28/98 Sensitivity curve The (empirical) sensitivity curve is a normalized EIF (centred around 0 and scaled according to the sample size): SC 15 mean(x) median(x) trimmedMean(x) 10 5 0 -5 -10 -10 -5 0 5 10 Robust Statistics – p.29/98 Influence function The influence function corresponds to the sensitivity curve for large (infinite) sample sizes. IF Æ Æ represents a (cumulative probability) distribution yielding the value with probability 1. In this sense, the influence function measures what happens with the estimator for an infinitesimal small contamination for large sample sizes. Note that the influence function might not be defined if the limit does not exist. Robust Statistics – p.30/98 Gross-error sensitivity The worst case (in terms of the outlier ) is called gross-error sensitivity. IF If is finite, is called a -robust estimator ( stands for bias) (at ). For the arithmetic mean, we have . For the median and the trimmed mean, the gross-error sensitivity depends on the sample distribution . Robust Statistics – p.31/98 Breakdown point The influence curve and the gross-error sensitivity characterise the influence of single (or even infinitesimal) outliers. A minimum requirement for robustness is that the influence curve is bounded. What happens when the fraction of outliers increases? Robust Statistics – p.32/98 Breakdown point The breakdown point is the smallest fraction of (extreme) outliers that need to be included in a sample in order to let the estimator break down completely, i.e. yield (almost) infinity. Let hd denote the Hamming distance between two samples and . Robust Statistics – p.33/98 Breakdown point The breakdown point of an estimator is defined as hd Normally, is independent of the specific choice of the sample . Robust Statistics – p.34/98 Breakdown point If is independent of the sample, for large (infinite) sample sizes the breakdown point is defined as Examples: Arithmetic mean: Median: -trimmed mean: Robust Statistics – p.35/98 Criteria for robust estimators Bounded influence function: Single extreme outliers cannot do too much harm to the estimator. Low gross-error sensitivity Positive breakdown point (the higher, the better): Even a number of outliers can be tolerated without leading to nonsense estimations. Fisher consistency: For very large sample sizes the estimator will yield the correct value. High efficiency: The variance of the estimator should be as low as possible. Robust Statistics – p.36/98 Criteria for robust estimators There is no way to satisfy all criteria in the best way at the same time. There is a trade-off between robustness issues like positive breakdown point and low gross-error sensitivity on the one hand and efficiency on the other hand. As an example, compare the mean (high efficiency, breakdown point 0) and the median (lower efficiency, but very good breakdown point). Robust Statistics – p.37/98 Robust measures of spread The (empirical) variance suffers from the same problems as the mean. (The estimation of the variance usually includes an estimation of the mean.) An example for a more robust estimator for spread is the interquartile range, the difference between the 75%- and the 25%-quantile. (The %-quantile is the value in the sample for which % are smaller than and % are larger than .) Robust Statistics – p.38/98 Error measures The expected value minimizes the error function Correspondingly, the arithmetic mean minimizes the error function Robust Statistics – p.39/98 Error measures The median minimizes the error function Correspondingly, the (sample) median error function minimies the Robust Statistics – p.40/98 Error measures This also explains, why the median is less sensitive to outliers: The quadratic error for the mean punishes outlier much stronger than the absolute error. Therefore, extreme outliers have a higher influence (“pull stronger”) than other points. Robust Statistics – p.41/98 Error measures How to measure errors? The error for an estimation including the sign is Minimizing does not make sense. . Even if we require , a small value for Usually does not mean that the errors are small. There might be large positive and large negative errors that balance each other. Robust Statistics – p.42/98 Error measures Therefore, we need a modified error . Which properties should the function Ê have? Ê , , , , if . Robust Statistics – p.43/98 Error measures Possible choices for : ? Advantage of : In order to minimize , we can take derivatives. This does not work for , since the function is not differentiable (at 0). Robust Statistics – p.44/98 Error measures Which other options do we have for ? The quadratic error is obviously not a good choice when we seek for robustness. Consider the more general setting of linear models of the form This covers also the special case of estimators for location: Robust Statistics – p.45/98 Linear regression linear model: computed model: objective function: Robust Statistics – p.46/98 Least squares regression Computing derivatives of (the constant factor does not change the optimisation problem) leads to The solution of this system of linear equations is straight forward and can be found in any textbook. Robust Statistics – p.47/98 Statistics tool R Open source software: http://www.r-project.org R uses a type-free command language. Assignments are written in the form > x <- y y is assigned to x. The object y must be defined (generated), before it can be assigned to x. Declaration of x is not required. Robust Statistics – p.48/98 R: Reading a file > mydata <-read.table(file.choose(),header=T) opens a file chooser. The chosen file is assigned to the object named mydata. header = T means that the chosen file will contain a header. The first line of the file contains the names of the variables. The following contain the values (tab- or space-separated). Robust Statistics – p.49/98 R: Accessing a single variable > vn <- mydata$varname assigns the column named varname of the data set contained in the object mydata to the object vn. The command > print(vn) prints the corresponding column on the screen. Robust Statistics – p.50/98 R: Printing on the screen [1] [19] [37] [55] [73] [91] [109] [127] [145] 0.2 0.3 0.2 1.5 1.5 1.2 1.8 1.8 2.5 0.2 0.3 0.1 1.3 1.2 1.4 2.5 1.8 2.3 0.2 0.2 0.2 1.6 1.3 1.2 2.0 2.1 1.9 0.2 0.4 0.2 1.0 1.4 1.0 1.9 1.6 2.0 0.2 0.2 0.3 1.3 1.4 1.3 2.1 1.9 2.3 0.4 0.5 0.3 1.4 1.7 1.2 2.0 2.0 1.8 0.3 0.2 0.2 1.0 1.5 1.3 2.4 2.2 0.2 0.2 0.6 1.5 1.0 1.3 2.3 1.5 ... ... ... ... ... ... ... ... 0.1 0.2 0.2 1.5 1.5 2.1 2.3 1.8 0.1 0.4 0.2 1.0 1.6 1.8 2.0 2.1 0.2 0.1 1.4 1.5 1.5 2.2 2.0 2.4 0.4 0.2 1.5 1.1 1.3 2.1 1.8 2.3 0.4 0.1 1.5 1.8 1.3 1.7 2.1 1.9 0.3 0.2 1.3 1.3 1.3 1.8 1.8 2.3 Robust Statistics – p.51/98 R: Empirical mean & median The mean and median can be computed using R by the functions mean() and median(), respectively. > mean(vn) [1] 1.198667 > median(vn) [1] 1.3 The mean and median can also be applied to data objects consisting of more than one (numerical) column, yielding a vector of mean/median values. Robust Statistics – p.52/98 R: Empirical variance The function var() yields the empirical variance in R. > var(vn) [1] 0.5824143 The function sd() yields the empirical standard deviation. > sd(vn) [1] 0.7631607 Robust Statistics – p.53/98 R: min and max The functions min() and max() compute the minimum and the maximum in a data set. > min(vn) [1] 0.1 > max(vn) [1] 2.5 The function IQR() yields the interquartile range. Robust Statistics – p.54/98 Least squares regression > reg.lsq <- lm(y˜x) > summary(reg.lsq) Call: lm(formula = y ˜ x) Residuals: Min 1Q -4.76528 -2.57376 Median 0.06554 3Q 2.27587 Max 4.33301 Coefficients: Estimate Std. Error t value Pr(>|t|) (Intercept) -3.3149 1.2596 -2.632 0.0273 * x 1.2085 0.9622 1.256 0.2408 --Signif. codes: 0 ’***’ 0.001 ’**’ 0.01 ’*’ 0.05 ’.’ 0.1 ’ ’ 1 Residual standard error: 3.372 on 9 degrees of freedom Multiple R-Squared: 0.1491, Adjusted R-squared: 0.05458 F-statistic: 1.577 on 1 and 9 DF, p-value: 0.2408 Robust Statistics – p.55/98 Least squares regression −6 −4 −2 y 0 2 4 > plot(x,y) > abline(reg.lsq) 0 1 2 3 4 x Robust Statistics – p.56/98 Least squares regression 0 −2 −4 y − predict.lm(reg.lsq) 2 4 plot(y-predict.lm(reg.lsq)) 2 4 6 8 10 Index Robust Statistics – p.57/98 Least squares regression 0 −2 −4 y − predict.lm(reg.lsq) 2 4 > plot(x,y-predict.lm(reg.lsq)) 0 1 2 3 4 x Robust Statistics – p.58/98 Least squares regression > reg.lsq <- lm(y˜x) > summary(reg.lsq) Call: lm(formula = y ˜ x) Residuals: Min 1Q Median 3Q -1.2437 -0.9049 -0.6414 -0.3554 Max 6.6398 Coefficients: Estimate Std. Error t value Pr(>|t|) (Intercept) 0.75680 0.33288 2.273 0.0248 * x 0.09406 0.05666 1.660 0.0995 . --Signif. codes: 0 ’***’ 0.001 ’**’ 0.01 ’*’ 0.05 ’.’ 0.1 ’ ’ 1 Residual standard error: 1.841 on 120 degrees of freedom Multiple R-Squared: 0.02245, Adjusted R-squared: 0.0143 F-statistic: 2.756 on 1 and 120 DF, p-value: 0.0995 Robust Statistics – p.59/98 Least squares regression 0 2 y 4 6 8 > plot(x,y) > abline(reg.lsq) 0 2 4 6 8 10 x Robust Statistics – p.60/98 Least squares regression 2 0 y − predict.lm(reg.lsq) 4 6 plot(y-predict.lm(reg.lsq)) 0 20 40 60 80 100 120 Index Robust Statistics – p.61/98 M-estimators Define , and . Computing derivatives of leads to The solution of this system of equations is the same as for the weighted least squares problem Robust Statistics – p.62/98 M-estimators Problem: The weights depend on the errors and the errors depend on the weights . Solution strategy: Alternating optimisation: 1. Initialise with standard least squares regression. 2. Compute the weights. 3. Apply standard least squares regression with the computed weights. 4. Repeat 2. and 3. until convergence. Robust Statistics – p.63/98 Robust regression Method least squares Huber Tukey if if ¾ ¾ if if Robust Statistics – p.64/98 M-estimators: Least squares 40 1 35 0.8 30 25 20 w rho 0.6 0.4 15 10 0.2 5 0 0 -6 -4 -2 0 e 2 4 6 -6 -4 -2 0 e 2 4 6 Robust Statistics – p.65/98 M-estimators: Huber 8 1 7 0.8 6 5 4 w rho 0.6 0.4 3 2 0.2 1 0 0 -6 -4 -2 0 e 2 4 6 -6 -4 -2 0 e 2 4 6 Robust Statistics – p.66/98 M-estimators: Tukey 3.5 1 3 0.8 2.5 0.6 w rho 2 1.5 0.4 1 0.2 0.5 0 0 -6 -4 -2 0 e 2 4 6 -6 -4 -2 0 e 2 4 6 Robust Statistics – p.67/98 Robust regression with R At least the package MASS will be required. Packages can be downloaded directly in R from the Internet. Once a package is downloaded, it can be installed by > library(packagename) Robust Statistics – p.68/98 Robust regression (Huber) > reg.rob <- rlm(y˜x) > summary(reg.rob) Call: rlm(formula = y ˜ x) Residuals: Min 1Q Median -4.76528 -2.57376 0.06554 3Q 2.27587 Max 4.33301 Coefficients: Value Std. Error t value (Intercept) -3.3149 1.2596 -2.6316 x 1.2085 0.9622 1.2559 Residual standard error: 3.678 on 9 degrees of freedom Correlation of Coefficients: (Intercept) x -0.5903 Robust Statistics – p.69/98 Robust regression (Huber) −6 −4 −2 y 0 2 4 > plot(x,y) > abline(reg.rob) 0 1 2 3 4 x Robust Statistics – p.70/98 Robust regression (Huber) 0 −2 −4 y − predict.lm(reg.rob) 2 4 > plot(y-predict.lm(reg.rob)) 2 4 6 8 10 Index Robust Statistics – p.71/98 Robust regression (Huber) 1.0 0.8 0.6 reg.rob$w 1.2 1.4 > plot(reg.rob$w) 2 4 6 8 10 Index Robust Statistics – p.72/98 Robust regression (Tukey) > reg.rob <- rlm(y˜x,method="MM") > summary(reg.rob) Call: rlm(formula = y ˜ x, method = "MM") Residuals: Min 1Q Median 3Q Max -0.7199 -0.2407 0.1070 0.3573 40.4858 Coefficients: Value Std. Error t value (Intercept) 1.2250 0.1819 6.7342 x -9.4277 0.1390 -67.8441 Residual standard error: 0.5866 on 9 degrees of freedom Correlation of Coefficients: (Intercept) x -0.5903 Robust Statistics – p.73/98 Robust regression (Tukey) −6 −4 −2 y 0 2 4 > plot(x,y) > abline(reg.rob) 0 1 2 3 4 x Robust Statistics – p.74/98 Robust regression (Tukey) 20 10 0 y − predict.lm(reg.rob) 30 40 plot(y-predict.lm(reg.rob)) 2 4 6 8 10 Index Robust Statistics – p.75/98 Robust regression (Tukey) 0.0 0.2 0.4 reg.rob$w 0.6 0.8 1.0 > plot(reg.rob$w) 2 4 6 8 10 Index Robust Statistics – p.76/98 Robust regression (Huber) > reg.rob <- rlm(y˜x) > summary(reg.rob) Call: rlm(formula = y ˜ x) Residuals: Min 1Q Median -0.65231 -0.29731 -0.02757 3Q 0.26270 Max 7.23700 Coefficients: Value Std. Error t value (Intercept) 0.1821 0.0797 2.2842 x 0.0876 0.0136 6.4581 Residual standard error: 0.4137 on 120 degrees of freedom Correlation of Coefficients: (Intercept) x -0.8657 Robust Statistics – p.77/98 Robust regression (Huber) 0 2 y 4 6 8 > plot(x,y) > abline(reg.rob) 0 2 4 6 8 10 x Robust Statistics – p.78/98 Robust regression (Huber) 4 2 0 y − predict.lm(reg.rob) 6 > plot(y-predict.lm(reg.rob)) 0 20 40 60 80 100 120 Index Robust Statistics – p.79/98 Robust regression (Huber) 0.6 0.4 0.2 reg.rob$w 0.8 1.0 > plot(reg.rob$w) 0 20 40 60 80 100 120 Index Robust Statistics – p.80/98 Robust regression (Tukey) > reg.rob <- rlm(y˜x,method="MM") > summary(reg.rob) Call: rlm(formula = y ˜ x, method = "MM") Residuals: Min 1Q Median 3Q Max -0.56126 -0.18056 0.08183 0.35255 7.33345 Coefficients: Value Std. Error t value (Intercept) 0.1066 0.0592 1.8005 x 0.0816 0.0101 8.0978 Residual standard error: 0.3781 on 120 degrees of freedom Correlation of Coefficients: (Intercept) x -0.8657 Robust Statistics – p.81/98 Robust regression (Tukey) 0 2 y 4 6 8 > plot(x,y) > abline(reg.rob) 0 2 4 6 8 10 x Robust Statistics – p.82/98 Robust regression (Tukey) 4 2 0 y − predict.lm(reg.rob) 6 plot(y-predict.lm(reg.rob)) 0 20 40 60 80 100 120 Index Robust Statistics – p.83/98 Robust regression (Tukey) 0.0 0.2 0.4 reg.rob$w 0.6 0.8 1.0 > plot(reg.rob$w) 0 20 40 60 80 100 120 Index Robust Statistics – p.84/98 Robust regression with R After plotting the weights by > plot(reg.rob$w) clicking single points can be enabled by > identify(1:length(reg.rob$w), reg.rob$w) in order to get the indices of interesting weights. Robust Statistics – p.85/98 Multivariate regression For simple linear regression , plotting the data often helps to identify problems and outliers. This no longer possible for multivariate regression Here, methods like residual plots and residual analysis are possible ways to gain more insight on outliers and other problems. In R, simply write for instance rlm(yx1+x2+x3). Robust Statistics – p.86/98 Two-way tables Example. 1000 people were asked which political party they voted for in order to find out whether the choice of the party and the sex of the voter are independent. pol. party sex female male sum SPD 200 170 370 CDU/CSU 200 200 400 Grüne 45 35 80 FDP 25 35 70 PDS 20 30 50 Others 22 5 27 No answer 8 5 13 520 480 1000 sum Robust Statistics – p.87/98 Two-way tables In such contexts, typically statistical tests like the -test (for independence, homogeneity), Fisher’s exact test (for -tables), Kruskal-Wallis test MANOVA Robust Statistics – p.88/98 Two-way tables The tests are not robust, have very restrictive assumptions (MANOVA, Fisher’s exact test) and the -test is only an asymptotic test. Alternative: Median polish Robust Statistics – p.89/98 Median polish Underlying (additive) model: : Overall typical value (general level) : Row effect (here: the political party) : Column effect (here: the sex) : Noise or random fluctuation Robust Statistics – p.90/98 Median polish Algorithm: 1. Subtract for each row its median. 2. For the updated table, subtract from each column its median. 3. Repeat 1. and 2. (with the corresponding updated tables) until convergence. Robust Statistics – p.91/98 Median polish Iterative estimation of the parameters: Rows: med " med ! ! Robust Statistics – p.92/98 Median polish Columns: # med " # med " Common value and effects: Robust Statistics – p.93/98 Median polish After convergence, the remaining entries in the table correspond to the . Median polish in R is implemented by the function medpolish(). Robust Statistics – p.94/98 Summary Robust statistics allows the deviation from the ideal model that the sample is not contaminated. Robust methods rely on the majority of the data. Few outliers can be disregarded or their influence is reduced. Robust Statistics – p.95/98 Key references F.R. Hampel, E.M. Ronchetti, P.J. Rousseeuw, W.A. Stahel: Robust Statistics. The Approach Based on Influence Functions. Wiley, New York (1986) S. Heritier, E. Cantoni, S. Copt, M.-P. Victoria-Feser: Robust Methods in Biostatistics. Wiley, New York (2009) D.C. Hoaglin, F. Mosteller, J.W. Tukey: Understanding Robust and Exploratory Data Analysis. Wiley, New York (2000) P.J. Huber: Robust Statistics. Wiley, New York (2004) Robust Statistics – p.96/98 Key references R. Maronna, D. Martin, V. Yohai: Robust Statistics: Theory and Methods. Wiley, Toronto (2006) P.J. Rousseeuw, A.M. Leroy: Robust Regression and Outlier Detection. Wiley, New York (1987) Robust Statistics – p.97/98 Software R: http://www.r-project.org Library: MASS Library: robustbase Library: rrcov Robust Statistics – p.98/98