* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download Notes on Sample Mean, Sample Proportion, and

Survey

Document related concepts

Transcript

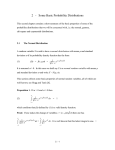

Econ 325/327

Notes on Sample Mean, Sample Proportion, Central Limit Theorem, Chi-square Distribution, Student's t Distribution

By Hiro Kasahara

Sample Mean

Suppose that $\{X_1, X_2, \ldots, X_n\}$ is a random sample from a population, where $E[X_i] = \mu$ and $\mathrm{Var}[X_i] = \sigma^2$. We do not assume normality: each $X_i$ is drawn independently from a population distribution whose exact form is not known to us, but we know that $E[X_i] = \mu$ and $\mathrm{Var}[X_i] = \sigma^2$.

The sample mean and the sample variance are defined as

$$\bar{X} = \frac{1}{n}\sum_{i=1}^{n} X_i, \qquad s^2 = \frac{1}{n-1}\sum_{i=1}^{n} (X_i - \bar{X})^2,$$

respectively. Note that both $\bar{X}$ and $s^2$ are random variables because the $X_i$'s are random variables.

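The expected value of $\bar{X}$ is

$$E(\bar{X}) = E\left(\frac{1}{n}\sum_{i=1}^{n} X_i\right) = \frac{1}{n}\sum_{i=1}^{n} E(X_i) = \frac{1}{n}\sum_{i=1}^{n} \mu = \frac{1}{n}\, n\mu = \mu. \tag{1}$$

Therefore, $\bar{X}$ is an unbiased estimator of $\mu$.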

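The variance of $\bar{X}$ is

$$\begin{aligned}
\sigma_{\bar{X}}^2 = \mathrm{Var}(\bar{X}) &= E\left[\left(\frac{1}{n}\sum_{i=1}^{n} X_i - \mu\right)^2\right] \\
&= E\left[\left(\frac{1}{n}\sum_{i=1}^{n} (X_i - \mu)\right)^2\right] \\
&= \frac{1}{n^2}\, E\left[\left(\sum_{i=1}^{n} \tilde{X}_i\right)^2\right] \qquad (\text{define } \tilde{X}_i = X_i - \mu) \\
&= \frac{1}{n^2}\, E\left[\sum_{i=1}^{n} \tilde{X}_i^2 + 2\sum_{i=1}^{n-1}\sum_{j=i+1}^{n} \tilde{X}_i \tilde{X}_j\right] \\
&= \frac{1}{n^2}\left\{\sum_{i=1}^{n} E[\tilde{X}_i^2] + 2\sum_{i=1}^{n-1}\sum_{j=i+1}^{n} E[\tilde{X}_i \tilde{X}_j]\right\} \\
&= \frac{1}{n^2}\left\{\sum_{i=1}^{n} E[\tilde{X}_i^2] + 0\right\} \qquad (\text{because } X_i \text{ and } X_j \text{ are independent}) \\
&= \frac{1}{n^2}\sum_{i=1}^{n} \sigma^2 \qquad (\text{because } E[\tilde{X}_i^2] = \mathrm{Var}(X_i) = \sigma^2) \\
&= \frac{1}{n^2}\, n\sigma^2 = \frac{\sigma^2}{n}.
\end{aligned} \tag{2}$$

Note that $E[\tilde{X}_i \tilde{X}_j] = E[(X_i - \mu)(X_j - \mu)] = \mathrm{Cov}(X_i, X_j) = 0$ because $X_i$ and $X_j$ are randomly drawn and, therefore, independent. The standard deviation of $\bar{X}$ is

$$\sigma_{\bar{X}} = \sqrt{\frac{\sigma^2}{n}} = \frac{\sigma}{\sqrt{n}}.$$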

This implies that, as the sample size $n$ increases, the variance and the standard deviation of $\bar{X}$ decrease. Consequently, the distribution of $\bar{X}$ puts more and more probability mass around its mean $\mu$ as $n$ increases. As long as $\sigma^2 < \infty$, the variance of $\bar{X}$ shrinks to zero and the distribution of $\bar{X}$ becomes degenerate at $\mu$ as $n$ goes to infinity. This result is called the law of large numbers. See the next section for details.

It is important to emphasize that we have $E[\bar{X}] = \mu$ and $\mathrm{Var}(\bar{X}) = \sigma^2/n$ even when the population distribution is not normal. However, without knowing the exact form of the population distribution from which $X_i$ is drawn, we do not know the distribution function of $\bar{X}$ beyond $E[\bar{X}] = \mu$ and $\mathrm{Var}(\bar{X}) = \sigma^2/n$; in particular, $\bar{X}$ is not normally distributed in general when $n$ is finite. For example, if $X_i$ is drawn from a Bernoulli distribution with $X_i = 1$ with probability $p$ and $X_i = 0$ with probability $1 - p$, then we still have $E[\bar{X}] = p$ and $\mathrm{Var}(\bar{X}) = \sigma^2/n = p(1-p)/n$ but, given finite $n$, we do not expect that $\bar{X} \sim N(\mu, \sigma^2/n)$.

On the other hand, if we are willing to assume that the population distribution is normal, i.e., $X_i \sim N(\mu, \sigma^2)$, then we have $\bar{X} \sim N(\mu, \sigma^2/n)$. This is because the average of independently and identically distributed normal random variables is also a normal random variable.
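As a quick check of (1) and (2) in the Bernoulli case, the following minimal sketch computes $E[\bar{X}]$ and $\mathrm{Var}(\bar{X})$ exactly from the binomial distribution of $\sum_i X_i$. The function name `sample_mean_moments` and the value $p = 3/10$ are hypothetical choices made only for illustration.

```python
from fractions import Fraction
from math import comb

def sample_mean_moments(n, p):
    """Exact mean and variance of the sample mean of n i.i.d. Bernoulli(p) draws.

    The sum S = X_1 + ... + X_n is Binomial(n, p), so the sample mean S/n
    takes the value k/n with probability C(n, k) p^k (1 - p)^(n - k).
    """
    pmf = [comb(n, k) * p**k * (1 - p)**(n - k) for k in range(n + 1)]
    mean = sum(Fraction(k, n) * w for k, w in enumerate(pmf))
    var = sum((Fraction(k, n) - mean) ** 2 * w for k, w in enumerate(pmf))
    return mean, var

p = Fraction(3, 10)  # hypothetical success probability
for n in (5, 20, 80):
    mean, var = sample_mean_moments(n, p)
    # The last column is the formula p(1 - p)/n from equation (2).
    print(n, mean, var, p * (1 - p) / n)
```

Because the arithmetic uses exact fractions, the computed variance coincides with $p(1-p)/n$ rather than merely approximating it, and it shrinks as $n$ grows.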


The Law of Large Numbers

The formal statement of the law of large numbers is as follows.

Theorem 1 (The Law of Large Numbers). Let $\bar{X}_n = (1/n)\sum_{i=1}^{n} X_i$, where each $X_i$ is drawn independently from an identical distribution with finite mean $\mu$ and finite variance $\sigma^2$. Then, for every $\epsilon > 0$,

$$\lim_{n \to \infty} P(|\bar{X}_n - \mu| < \epsilon) = 1.$$

We say that $\bar{X}_n$ converges in probability to $\mu$, which is denoted as

$$\bar{X}_n \to_p \mu.$$

That is, as the sample size $n$ increases to infinity, the probability that the distance between $\bar{X}_n$ and $\mu$ is larger than $\epsilon$ approaches zero for any $\epsilon > 0$, regardless of how small the value of $\epsilon$ is. In other words, the relative frequency with which $\bar{X}_n$ falls within distance $\epsilon$ of $\mu$ is arbitrarily close to one when the sample size $n$ is large enough.

This result is intuitive given that $\mathrm{Var}(\bar{X}_n) = \sigma^2/n$, so that the variance of $\bar{X}_n$ shrinks to zero as $n \to \infty$. The proof of the law of large numbers uses Chebyshev's inequality.

Chebyshev's inequality: Given a random variable $X$ with finite mean and finite variance, for every $\epsilon > 0$, we have

$$P(|X - E(X)| \ge \epsilon) \le \frac{\mathrm{Var}(X)}{\epsilon^2}.$$

Proof of the Law of Large Numbers: By choosing $X = \bar{X}_n$ in Chebyshev's inequality above, we have $P(|\bar{X}_n - E(\bar{X}_n)| \ge \epsilon) \le \mathrm{Var}(\bar{X}_n)/\epsilon^2$. Substituting $E(\bar{X}_n) = \mu$ and $\mathrm{Var}(\bar{X}_n) = \sigma^2/n$, we have $P(|\bar{X}_n - \mu| \ge \epsilon) \le \frac{\sigma^2}{n\epsilon^2}$ for every $n = 1, 2, \ldots$. Because $\frac{\sigma^2}{n\epsilon^2} \to 0$ as $n \to \infty$ for every $\epsilon > 0$, we have $\lim_{n\to\infty} P(|\bar{X}_n - \mu| \ge \epsilon) = 0$ for every $\epsilon > 0$. Therefore, $\lim_{n\to\infty} P(|\bar{X}_n - \mu| < \epsilon) = 1 - \lim_{n\to\infty} P(|\bar{X}_n - \mu| \ge \epsilon) = 1 - 0 = 1$.
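To see how the Chebyshev bound in the proof compares with the actual tail probability, here is a minimal sketch for Bernoulli draws, where $P(|\bar{X}_n - p| \ge \epsilon)$ can be computed exactly from the binomial distribution. The helper names and the values $p = 1/2$, $\epsilon = 1/10$ are assumptions made for illustration only.

```python
from fractions import Fraction
from math import exp, lgamma, log

def binom_pmf(k, n, p):
    """P(S = k) for S ~ Binomial(n, p), computed on the log scale."""
    log_pmf = (lgamma(n + 1) - lgamma(k + 1) - lgamma(n - k + 1)
               + k * log(p) + (n - k) * log(1 - p))
    return exp(log_pmf)

def tail_prob(n, p, eps):
    """Exact P(|sample mean - p| >= eps) when the X_i are Bernoulli(p)."""
    # Fractions keep the comparison |k/n - p| >= eps free of rounding error.
    return sum(binom_pmf(k, n, float(p)) for k in range(n + 1)
               if abs(Fraction(k, n) - p) >= eps)

def chebyshev_bound(n, p, eps):
    """Upper bound sigma^2 / (n eps^2) with sigma^2 = p(1 - p)."""
    return float(p * (1 - p) / (n * eps ** 2))

p, eps = Fraction(1, 2), Fraction(1, 10)  # hypothetical values
for n in (10, 100, 1000):
    print(n, tail_prob(n, p, eps), chebyshev_bound(n, p, eps))
```

The bound is loose (for small $n$ it can exceed one), but both the bound and the exact probability go to zero as $n$ grows, which is all the proof requires.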

The Central Limit Theorem

Consider a random variable $Z$ defined as

$$Z = \frac{\bar{X} - \mu}{\sigma/\sqrt{n}}. \tag{3}$$

This is the standardized version of $\bar{X}$ because $E(\bar{X}) = \mu$ and $\mathrm{Var}(\bar{X}) = \sigma^2/n$. Then, it is easy to prove (try to prove it yourself) that

$$E(Z) = 0 \quad \text{and} \quad \mathrm{Var}(Z) = 1.$$

Therefore, while $\bar{X}$ tends to degenerate to $\mu$ as $n \to \infty$, the standardized variable $Z$ does not degenerate even when $n \to \infty$. Given finite $n$, the exact form of the distribution of $\bar{X}$, and hence of $Z$, is not known unless we assume that $X_i$ is normally distributed. What is the distribution of $Z$ when $n \to \infty$? The Central Limit Theorem provides the answer.

Theorem 2 (The Central Limit Theorem). If $\{X_1, X_2, \ldots, X_n\}$ is a random sample with finite mean $\mu$ and finite positive variance $\sigma^2$ (i.e., $-\infty < \mu < \infty$ and $0 < \sigma^2 < \infty$), then the distribution of $Z$ defined in (3) is $N(0, 1)$ in the limit as $n \to \infty$, i.e., for any fixed number $x$,

$$\lim_{n \to \infty} P\left(\frac{\sqrt{n}(\bar{X} - \mu)}{\sigma} \le x\right) = \Phi(x),$$

where $\Phi(x) = \int_{-\infty}^{x} \frac{1}{\sqrt{2\pi}}\, e^{-z^2/2}\, dz$.

We say that $\sqrt{n}(\bar{X} - \mu)$ converges in distribution to a normal random variable with mean 0 and variance $\sigma^2$, which is denoted as

$$\sqrt{n}(\bar{X} - \mu) \to_d N(0, \sigma^2).$$

The proof is beyond the scope of this course.

• The central limit theorem is an important result because many random variables in empirical applications can be modeled as the sums or the means of independent random variables.

• In practice, the central limit theorem can be used to approximate the cdf of the mean of random variables by the normal distribution when the sample size $n$ is sufficiently large.

• The central limit theorem applies to the case where $X_i$ takes discrete values, for example, when $X_i$ is a Bernoulli random variable with support $\{0, 1\}$.

• If $Z$ is approximately distributed as $N(0, 1)$ when $n$ is large, then $\bar{X}$ should be approximately distributed as $N(\mu, \sigma^2/n)$, i.e., normal with mean $\mu$ and standard deviation $\sigma/\sqrt{n}$.
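The quality of the normal approximation can be inspected numerically. The sketch below, written for Bernoulli draws with a hypothetical $p = 0.3$, compares the exact cdf of the standardized mean $Z$ with $\Phi$ at every point where the exact cdf jumps. The function names are illustrative and not part of the notes.

```python
from math import erf, exp, lgamma, log, sqrt

def std_normal_cdf(x):
    """Phi(x), the standard normal cdf, written in terms of erf."""
    return 0.5 * (1.0 + erf(x / sqrt(2.0)))

def binom_pmf(k, n, p):
    """P(S = k) for S ~ Binomial(n, p), computed on the log scale."""
    log_pmf = (lgamma(n + 1) - lgamma(k + 1) - lgamma(n - k + 1)
               + k * log(p) + (n - k) * log(1 - p))
    return exp(log_pmf)

def max_cdf_gap(n, p):
    """Largest |P(Z <= x) - Phi(x)| over the jump points x of the cdf of Z.

    Z = (S - n p) / sqrt(n p (1 - p)) with S the sum of n Bernoulli(p) draws,
    so the cdf of Z jumps at x_k = (k - n p) / sqrt(n p (1 - p)).
    """
    mu, sd = n * p, sqrt(n * p * (1 - p))
    cdf, gap = 0.0, 0.0
    for k in range(n + 1):
        cdf += binom_pmf(k, n, p)
        gap = max(gap, abs(cdf - std_normal_cdf((k - mu) / sd)))
    return gap

for n in (10, 100, 1000):
    print(n, max_cdf_gap(n, 0.3))
```

The gap does not vanish for any finite $n$ because $Z$ is discrete, but it shrinks as $n$ increases, in line with the theorem.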

Sample Proportion

Suppose that $\{X_1, X_2, \ldots, X_n\}$ is a random sample, where $X_i$ takes a value of zero or one with probability $1 - p$ and $p$, respectively. That is,

$$X_i = \begin{cases} 0 & \text{with prob. } 1 - p \\ 1 & \text{with prob. } p \end{cases} \tag{4}$$

Then, from (1)-(2), the expected value and the variance of the sample mean $\hat{p} := \bar{X} = (1/n)\sum_{i=1}^{n} X_i$ are given by

$$E(\bar{X}) = E(X) = \sum_{x=0,1} x\, p^x (1-p)^{1-x} = (0)(1-p) + (1)(p) = p,$$

$$\mathrm{Var}(\bar{X}) = \frac{\mathrm{Var}(X)}{n} = \frac{p(1-p)}{n},$$

where the last equality uses $\mathrm{Var}(X) = \sum_{x=0,1} (x - p)^2 p^x (1-p)^{1-x} = p(1-p)$.

By the central limit theorem, the distribution of $\bar{X}$ is approximately normal for large sample sizes and the standardized variable

$$Z := \frac{\bar{X} - E(\bar{X})}{\sqrt{\mathrm{Var}(\bar{X})}} = \frac{\bar{X} - p}{\sqrt{p(1-p)/n}}$$

is approximately distributed as $N(0, 1)$.
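As a small worked illustration of this standardization, the sketch below computes $Z$ for a sample proportion and uses the standard normal cdf to approximate a probability. The numbers ($\hat{p} = 0.56$, $p = 0.5$, $n = 100$) and the function name are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def proportion_z(p_hat, p, n):
    """Standardized sample proportion (p_hat - p) / sqrt(p (1 - p) / n)."""
    return (p_hat - p) / sqrt(p * (1 - p) / n)

# Hypothetical numbers: 56 successes out of n = 100 when the true p is 0.5.
z = proportion_z(p_hat=0.56, p=0.5, n=100)

# Normal approximation to P(p_hat <= 0.56) based on the central limit theorem.
approx_prob = NormalDist().cdf(z)
print(round(z, 3), round(approx_prob, 4))
```

Here the standard deviation of $\hat{p}$ is $\sqrt{0.25/100} = 0.05$, so $\hat{p} = 0.56$ lies 1.2 standard deviations above $p$.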


Sample Variance and Chi-square distribution

Suppose that $\{X_1, X_2, \ldots, X_n\}$ is a random sample from a population, where $E[X_i] = \mu$ and $\mathrm{Var}[X_i] = \sigma^2$. The sample variance is defined as

$$s^2 = \frac{1}{n-1}\sum_{i=1}^{n} (X_i - \bar{X})^2. \tag{5}$$

Note that $s^2$ is a random variable because the $X_i$'s and $\bar{X}$ are random variables.

As shown in the Appendix of Chapter 6 in Newbold, Carlson, and Thorne, the expected value of the sample variance $s^2$ is

$$E[s^2] = \sigma^2 \tag{6}$$

so that the sample variance $s^2$ is an unbiased estimator of $\sigma^2$. The result that $E[s^2] = \sigma^2$ does not require the normality assumption, i.e., $X_i$ is not necessarily normally distributed. Also, when $X_i$ is normally distributed, it is possible to show that $\mathrm{Var}(s^2) = \frac{2\sigma^4}{n-1}$; without normality, $\mathrm{Var}(s^2)$ depends in general on the fourth moment of $X_i$.

In the definition (5), we divide the sum of $(X_i - \bar{X})^2$ by $(n - 1)$ rather than $n$. We may alternatively consider the following estimator of the population variance of $X_i$:

$$\hat{\sigma}^2 = \frac{1}{n}\sum_{i=1}^{n} (X_i - \bar{X})^2.$$

What is the expected value of $\hat{\sigma}^2$? Because

$$\hat{\sigma}^2 = \frac{1}{n}\sum_{i=1}^{n} (X_i - \bar{X})^2 = \frac{n-1}{n} \cdot \frac{1}{n-1}\sum_{i=1}^{n} (X_i - \bar{X})^2 = \frac{n-1}{n}\, s^2,$$

the expected value of $\hat{\sigma}^2$ is given by

$$E[\hat{\sigma}^2] = \frac{n-1}{n}\, E[s^2] = \frac{n-1}{n}\, \sigma^2,$$

which is not equal to but strictly smaller than $\sigma^2$. Therefore, $\hat{\sigma}^2$ is a (downward) biased estimator of $\sigma^2$. On the other hand, as $n \to \infty$, $\frac{n-1}{n} \to 1$ so that the bias of $\hat{\sigma}^2$ disappears as $n \to \infty$ and, hence, we may use $\hat{\sigma}^2$ in place of $s^2$ when $n$ is large.
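The unbiasedness of $s^2$ and the downward bias of $\hat{\sigma}^2$ can be verified exactly for a small discrete population by enumerating every possible sample. The sketch below assumes, purely for illustration, that each $X_i$ is a roll of a fair six-sided die (so $\sigma^2 = 35/12$) and that $n = 3$; the function name is hypothetical.

```python
from fractions import Fraction
from itertools import product

def expected_variance_estimators(values, n):
    """Exact E[s^2] and E[sigma_hat^2] for n i.i.d. draws, each uniform on `values`."""
    samples = list(product(values, repeat=n))
    weight = Fraction(1, len(samples))  # every sample is equally likely
    e_s2 = Fraction(0)
    e_sig2 = Fraction(0)
    for sample in samples:
        xbar = Fraction(sum(sample), n)
        ss = sum((x - xbar) ** 2 for x in sample)  # sum of squared deviations
        e_s2 += weight * ss / (n - 1)              # divides by n - 1
        e_sig2 += weight * ss / n                  # divides by n
    return e_s2, e_sig2

die = range(1, 7)  # hypothetical population: a fair six-sided die
e_s2, e_sig2 = expected_variance_estimators(die, n=3)
print(e_s2, e_sig2)
```

With $n = 3$ the ratio $E[\hat{\sigma}^2]/E[s^2]$ equals $(n-1)/n = 2/3$, and no normality assumption is involved.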

While $E[s^2] = \sigma^2$ holds without assuming that the $X_i$'s are drawn from a normal distribution, it is not possible to know the exact form of the distribution of the random variable $s^2$ in general when $n$ is finite; the formula $\mathrm{Var}(s^2) = \frac{2\sigma^4}{n-1}$ likewise relies on normality.

Consider a transformation of $s^2$ obtained by multiplying by $n - 1$ and dividing by $\sigma^2$:

$$\frac{(n-1)s^2}{\sigma^2} = \frac{\sum_{i=1}^{n} (X_i - \bar{X})^2}{\sigma^2}, \tag{7}$$

where the right-hand side is obtained by plugging $s^2 = \frac{1}{n-1}\sum_{i=1}^{n} (X_i - \bar{X})^2$ into the left-hand side. This is a random variable because the $X_i$'s and $\bar{X}$ are random. If we are willing to assume that $X_i$ is drawn from the normal distribution with mean $\mu$ and variance $\sigma^2$, then we may show that the random variable $\frac{(n-1)s^2}{\sigma^2}$ has a distribution known as the chi-square distribution with $n - 1$ degrees of freedom, which we denote by $\chi^2(n-1)$, i.e.,

$$\frac{(n-1)s^2}{\sigma^2} \sim \chi^2(n-1). \tag{8}$$

The chi-square distribution with $r$ degrees of freedom, denoted by $\chi^2(r)$, is characterized as the distribution of the sum of squares of $r$ independent standard normal random variables, $Z_1^2, Z_2^2, \ldots, Z_r^2$, where $Z_i \sim N(0, 1)$ and $Z_i$ and $Z_j$ are independent if $i \ne j$ for $i, j = 1, \ldots, r$. Namely, $W = Z_1^2 + Z_2^2 + \cdots + Z_r^2$ has a distribution that is $\chi^2(r)$. The proof is beyond the scope of this class but is available in Chapter 5.4 of Hogg, Tanis, and Zimmerman.

In view of this characterization, if we consider a version of (7) in which $\bar{X}$ is replaced with $\mu$, then

$$\frac{\sum_{i=1}^{n} (X_i - \mu)^2}{\sigma^2} = \sum_{i=1}^{n} \left(\frac{X_i - \mu}{\sigma}\right)^2 = \sum_{i=1}^{n} Z_i^2,$$

where $Z_i = (X_i - \mu)/\sigma \sim N(0, 1)$ and $Z_i$ and $Z_j$ are independent if $i \ne j$. Therefore, $\frac{\sum_{i=1}^{n} (X_i - \mu)^2}{\sigma^2}$ is the sum of squares of $n$ independent standard normal random variables, which is $\chi^2(n)$. This is slightly different from (8) because we replaced $\bar{X}$ with $\mu$ in the definition of $\frac{(n-1)s^2}{\sigma^2}$. When $\mu$ is replaced with its estimate $\bar{X}$, as in (7), one degree of freedom is lost and, as a result, $\frac{(n-1)s^2}{\sigma^2} = \frac{\sum_{i=1}^{n} (X_i - \bar{X})^2}{\sigma^2}$ is distributed as $\chi^2(n-1)$ rather than $\chi^2(n)$.

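For example, consider the case of $n = 2$. Then, with $\bar{X} = (1/2)(X_1 + X_2)$,

$$\frac{(n-1)s^2}{\sigma^2} = \frac{\sum_{i=1}^{2} (X_i - \bar{X})^2}{\sigma^2} = \left(\frac{X_1 - X_2}{\sqrt{2}\,\sigma}\right)^2,$$

where $\frac{X_1 - X_2}{\sqrt{2}\,\sigma}$ is a standard normal random variable, so that $\frac{(n-1)s^2}{\sigma^2} = \frac{\sum_{i=1}^{2} (X_i - \bar{X})^2}{\sigma^2} \sim \chi^2(1)$.¹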

Example 1 (Chi-square distribution with 1 degree of freedom and the standard normal distribution). If $Z$ is $N(0, 1)$, then $P(|Z| < 1.96) = 0.95$. Using the fact that $Z^2$ is chi-square distributed with 1 degree of freedom, i.e., $Z^2 \sim \chi^2(1)$, what is the value of $a$ in the following equation?

$$P(\chi^2(1) < a) = 0.95.$$

To answer this, note that $P(Z^2 < (1.96)^2) = P(|Z| < 1.96) = 0.95$ and that $Z^2 \sim \chi^2(1)$, so that $P(\chi^2(1) < (1.96)^2) = 0.95$. Therefore, $a = (1.96)^2 \approx 3.84$. Checking the chi-square table with 1 degree of freedom, which gives 3.841, confirms this result. Question: what is the value of $b$ such that $P(\chi^2(1) < b) = 0.9$? Try to answer this using the standard normal table and check the result with the chi-square table.
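The reasoning in Example 1 can be turned into a short computation: since $P(Z^2 < a) = P(|Z| < \sqrt{a}) = 2\Phi(\sqrt{a}) - 1$, the value $\sqrt{a}$ is a standard normal quantile. The sketch below uses `statistics.NormalDist` from the Python standard library in place of the printed tables; the function name `chi2_1_quantile` is a hypothetical label.

```python
from statistics import NormalDist

def chi2_1_quantile(prob):
    """Value a with P(chi2(1) < a) = prob, using Z^2 ~ chi2(1)."""
    # P(Z^2 < a) = P(|Z| < sqrt(a)) = 2 * Phi(sqrt(a)) - 1, so sqrt(a) is the
    # (1 + prob) / 2 quantile of the standard normal distribution.
    z = NormalDist().inv_cdf((1 + prob) / 2)
    return z ** 2

print(round(chi2_1_quantile(0.95), 3))  # compare with the chi-square table
print(round(chi2_1_quantile(0.90), 3))  # the value b asked for in the question
```

The same function handles any probability level, so it can be used to check other entries in the first row of the chi-square table.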

We may also derive the cumulative distribution function and the probability density function of a chi-square random variable with 1 degree of freedom from the standard normal probability density function $\phi(z) = \frac{1}{\sqrt{2\pi}}\, e^{-z^2/2}$ as follows. Let $Z \sim N(0, 1)$. Then, the cumulative distribution function of a chi-square random variable with 1 degree of freedom is

$$F_{\chi(1)}(a) = P(\chi^2(1) < a) = P(Z^2 < a) = P(-\sqrt{a} \le Z \le \sqrt{a}) = \int_{-\sqrt{a}}^{\sqrt{a}} \phi(z)\, dz.$$

The probability density function can be obtained by differentiating $F_{\chi(1)}(a)$ as

$$f_{\chi(1)}(a) = \frac{dF_{\chi(1)}(a)}{da} = \frac{1}{2} a^{-1/2} \phi(\sqrt{a}) + \frac{1}{2} a^{-1/2} \phi(\sqrt{a}) = a^{-1/2} \phi(\sqrt{a}) = \frac{a^{-1/2}}{\sqrt{2\pi}}\, e^{-a/2}.$$

In particular, $f_{\chi(1)}(a) \to \infty$ as $a \to 0$.
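A numerical check of this derivation: the sketch below writes the cdf as $P(|Z| \le \sqrt{a}) = \mathrm{erf}(\sqrt{a/2})$, differentiates it by a central difference, and compares the result with the closed-form density. The function names and the step size `h` are choices made for this illustration.

```python
from math import erf, exp, pi, sqrt

def chi2_1_cdf(a):
    """F(a) = P(-sqrt(a) <= Z <= sqrt(a)) = erf(sqrt(a / 2)) for a > 0."""
    return erf(sqrt(a / 2.0))

def chi2_1_pdf(a):
    """Closed-form density a^(-1/2) exp(-a/2) / sqrt(2 pi) for a > 0."""
    return a ** -0.5 * exp(-a / 2.0) / sqrt(2.0 * pi)

def numeric_derivative(f, a, h=1e-5):
    """Central-difference approximation to f'(a)."""
    return (f(a + h) - f(a - h)) / (2.0 * h)

for a in (0.5, 1.0, 3.841):
    print(a, numeric_derivative(chi2_1_cdf, a), chi2_1_pdf(a))
```

Evaluating `chi2_1_pdf` at smaller and smaller positive values of `a` also shows the density growing without bound near zero.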

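¹ The last equality follows from

$$\sum_{i=1}^{2} (X_i - \bar{X})^2 = (X_1 - \bar{X})^2 + (X_2 - \bar{X})^2 = \left(X_1 - \frac{X_1 + X_2}{2}\right)^2 + \left(X_2 - \frac{X_1 + X_2}{2}\right)^2 = \left(\frac{X_1 - X_2}{2}\right)^2 + \left(\frac{X_2 - X_1}{2}\right)^2 = 2\left(\frac{X_1 - X_2}{2}\right)^2 = \left(\frac{X_1 - X_2}{\sqrt{2}}\right)^2.$$

Finally, when both $X_1$ and $X_2$ are independently drawn from $N(\mu, \sigma^2)$, $X_1 - X_2$ is normally distributed with mean $E(X_1 - X_2) = \mu - \mu = 0$ and variance $\mathrm{Var}(X_1 - X_2) = \mathrm{Var}(X_1) + \mathrm{Var}(X_2) = 2\sigma^2$ so that, by standardizing $X_1 - X_2$, we have $\frac{X_1 - X_2}{\sqrt{2}\,\sigma} \sim N(0, 1)$.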


Example 2 (Confidence Interval for Sample Variance). Suppose that you are the manager of a plant producing electrical devices operated by a thermostatic control. According to the engineering specifications, the standard deviation of the temperature at which these controls actually operate should not exceed 2.0 degrees Fahrenheit. As a plant manager, you would like to know how large the (population) standard deviation $\sigma$ is. We assume that the temperature is normally distributed. Suppose that you randomly sampled 25 of these controls, and the sample variance of operating temperatures was $s^2 = 2.36$ degrees Fahrenheit. (i) Compute the 95 percent confidence interval for the population standard deviation $\sigma$. (ii) Test the null hypothesis $H_0: \sigma = 2$ against the alternative hypothesis $H_1: \sigma > 2$ at the significance level $\alpha = 0.05$.

The distribution of $\frac{(n-1)s^2}{\sigma^2}$ is given by the chi-square distribution with $(n-1)$ degrees of freedom. Let $\chi^2_{n-1}$ be a random variable that follows the chi-square distribution with $(n-1)$ degrees of freedom and let $\bar{\chi}^2_{n-1,\alpha}$ be the upper critical value such that $\Pr(\chi^2_{n-1} > \bar{\chi}^2_{n-1,\alpha}) = \alpha$. Then,

$$\Pr\left(\bar{\chi}^2_{n-1,1-\alpha/2} \le \frac{(n-1)s^2}{\sigma^2} \le \bar{\chi}^2_{n-1,\alpha/2}\right) = 1 - \alpha$$

and, therefore,

$$\Pr\left(\frac{(n-1)s^2}{\bar{\chi}^2_{n-1,\alpha/2}} \le \sigma^2 \le \frac{(n-1)s^2}{\bar{\chi}^2_{n-1,1-\alpha/2}}\right) = 1 - \alpha.$$

Now, when $\alpha/2 = 0.025$, the chi-square table gives $\bar{\chi}^2_{24,\alpha/2} = 39.364$ and $\bar{\chi}^2_{24,1-\alpha/2} = 12.401$ so that the lower bound of the 95 percent CI is $\frac{(n-1)s^2}{\bar{\chi}^2_{n-1,\alpha/2}} = \frac{24 \times 2.36}{39.364} = 1.439$ and the upper bound is $\frac{(n-1)s^2}{\bar{\chi}^2_{n-1,1-\alpha/2}} = \frac{24 \times 2.36}{12.401} = 4.567$. Therefore, the 95 percent CI for the population variance is $[1.439, 4.567]$ and, because of the monotonic relationship between the variance and the standard deviation, the 95 percent CI for the population standard deviation is given by $[\sqrt{1.439}, \sqrt{4.567}] = [1.200, 2.137]$.
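The arithmetic of the interval can be reproduced with a few lines of code. The sketch below takes the two critical values from the chi-square table as given constants (it does not compute them) and uses a hypothetical helper name, `variance_ci`.

```python
from math import sqrt

def variance_ci(s2, n, chi2_upper, chi2_lower):
    """CI for sigma^2: [(n-1) s^2 / chi2_upper, (n-1) s^2 / chi2_lower].

    chi2_upper and chi2_lower are the upper critical values at alpha/2 and
    1 - alpha/2 for n - 1 degrees of freedom, read from a chi-square table.
    """
    scaled = (n - 1) * s2
    return scaled / chi2_upper, scaled / chi2_lower

# Table values for 24 degrees of freedom and alpha/2 = 0.025, as quoted above.
lo, hi = variance_ci(s2=2.36, n=25, chi2_upper=39.364, chi2_lower=12.401)
print(round(lo, 3), round(hi, 3))              # CI for the variance
print(round(sqrt(lo), 3), round(sqrt(hi), 3))  # CI for the standard deviation
```

Note that the larger critical value produces the lower bound, because the critical values appear in the denominator.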

To test the null hypothesis $H_0$, (a) find the distribution of the "standardized" random variable $\frac{(n-1)s^2}{\sigma^2}$ when $H_0$ is true, i.e., $\sigma^2 = (2)^2 = 4$; (b) find the rejection region, which is the region into which the random variable $\frac{(n-1)s^2}{4}$ is unlikely (i.e., with probability less than 5 percent) to fall if $H_0$ is true; (c) look at the realized value of $\frac{(n-1)s^2}{4}$ and ask whether it is an unlikely value to happen if $H_0$ is true (by checking whether $\frac{(n-1)s^2}{4}$ falls into the rejection region).

For (a), when $H_0$ is true, $\frac{(n-1)s^2}{4}$ is distributed according to the chi-square distribution with $n - 1 = 24$ degrees of freedom. For (b), because $H_1: \sigma > 2$, we consider a one-sided test; namely, a very high value of $\frac{(n-1)s^2}{4}$ is considered to be evidence against $H_0$ but a low value of $\frac{(n-1)s^2}{4}$ is not. Under $H_0$,

$$\Pr\left(\frac{(n-1)s^2}{\sigma^2} > \bar{\chi}^2_{n-1,\alpha}\right) = \Pr\left(\frac{24 s^2}{4} > \bar{\chi}^2_{24,\alpha}\right) = \alpha$$

for $\alpha = 0.05$, where $\bar{\chi}^2_{24,0.05} = 36.415$. Therefore, the rejection region for $\frac{24 s^2}{4}$ is given by $(36.415, \infty)$, i.e., we reject $H_0$ if $\frac{24 s^2}{4} > 36.415$, or equivalently, $s^2 > 36.415/6 = 6.069$, because such a value of $s^2$ is unlikely to happen if $H_0$ is true. (c) The realized value of $s^2$ is 2.36, which is below 6.069 (equivalently, $24 \times 2.36 / 4 = 14.16 < 36.415$) and so does not fall in the rejection region (i.e., 2.36 belongs to the region that is not unlikely to happen if $H_0$ is true). Hence there is not sufficient evidence against $H_0$. We do not reject $H_0$.
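Steps (a) through (c) amount to computing one statistic and comparing it with one table value. The sketch below does exactly that, treating the critical value 36.415 as a constant read from the chi-square table; the function name `chi2_variance_test` is a hypothetical label.

```python
def chi2_variance_test(s2, n, sigma0_sq, critical_value):
    """One-sided test of H0: sigma^2 = sigma0_sq against H1: sigma^2 > sigma0_sq.

    Returns the statistic (n - 1) s^2 / sigma0_sq and whether it exceeds the
    upper critical value of the chi-square distribution with n - 1 d.o.f.
    """
    stat = (n - 1) * s2 / sigma0_sq
    return stat, stat > critical_value

# Critical value for 24 degrees of freedom at alpha = 0.05, from the table.
stat, reject = chi2_variance_test(s2=2.36, n=25, sigma0_sq=4.0,
                                  critical_value=36.415)

# Equivalent threshold expressed directly in terms of s^2.
s2_threshold = 36.415 * 4.0 / (25 - 1)
print(stat, reject, round(s2_threshold, 3))
```

Expressing the rejection rule either through the statistic or through the threshold on $s^2$ gives the same decision.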


Student’s t distribution

Suppose that $X_1, X_2, \ldots, X_n$ are randomly sampled from $N(\mu, \sigma^2)$. Then for any $n \ge 2$, the sample mean $\bar{X} = \frac{1}{n}\sum_{i=1}^{n} X_i$ is normally distributed,

$$\bar{X} \sim N(\mu, \sigma^2/n).$$

If we standardize $\bar{X}$ by subtracting its mean $\mu$ and dividing by its standard deviation $\sigma/\sqrt{n}$, we have a standard normal variable, i.e.,

$$\frac{\bar{X} - \mu}{\sigma/\sqrt{n}} \sim N(0, 1).$$

In practice, we do not know the variance of $X_i$. In such a case, we might want to use the sample variance $s^2 = \frac{1}{n-1}\sum_{i=1}^{n} (X_i - \bar{X})^2$ in place of the population variance $\sigma^2$ to standardize $\bar{X}$ as

$$t = \frac{\bar{X} - \mu}{\sqrt{s^2/n}}. \tag{9}$$

This is called the t-statistic. Note that the distribution of $\frac{\bar{X} - \mu}{\sqrt{s^2/n}}$ is different from that of $\frac{\bar{X} - \mu}{\sigma/\sqrt{n}}$ because $s^2$ is a random variable while $\sigma^2$ is a constant, so that $\frac{\bar{X} - \mu}{\sqrt{s^2/n}}$ contains an additional source of randomness from $s^2$. In fact, the variance of $\frac{\bar{X} - \mu}{\sqrt{s^2/n}}$ is larger than that of $\frac{\bar{X} - \mu}{\sigma/\sqrt{n}}$. The t-statistic defined in (9) has a known distribution called Student's t distribution with $(n - 1)$ degrees of freedom.

Let $Z \sim N(0, 1)$ and $\chi^2 \sim \chi^2(v)$ with $v$ degrees of freedom, where $Z$ and $\chi^2$ are independent. Then, a random variable from Student's t distribution with $v$ degrees of freedom can be constructed as

$$t = \frac{Z}{\sqrt{\chi^2/v}}. \tag{10}$$

To see the connection between (10) and the t-statistic defined in (9), divide both the numerator and the denominator of (9) by $\sigma/\sqrt{n}$ and rearrange the terms to obtain

$$t = \frac{\dfrac{\bar{X} - \mu}{\sigma/\sqrt{n}}}{\sqrt{\dfrac{(n-1)s^2}{\sigma^2} \Big/ (n-1)}}.$$

Note that $\frac{\bar{X} - \mu}{\sigma/\sqrt{n}} \sim N(0, 1)$ while $\frac{(n-1)s^2}{\sigma^2}$ follows the chi-square distribution with $(n-1)$ degrees of freedom; under normality, $\bar{X}$ and $s^2$ are also independent, as (10) requires. Therefore, by letting $Z = \frac{\bar{X} - \mu}{\sigma/\sqrt{n}}$, $\chi^2 = \frac{(n-1)s^2}{\sigma^2}$, and $v = n - 1$ in (10), we have that $t = \frac{\bar{X} - \mu}{\sqrt{s^2/n}}$ follows the Student's t distribution with $(n - 1)$ degrees of freedom.
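For concreteness, the t-statistic can be computed from data as in the following minimal sketch. The data values and the hypothesized mean are made up for illustration, and `statistics.variance` is used because it divides by $n - 1$, matching the definition of $s^2$.

```python
from math import sqrt
from statistics import mean, variance

def t_statistic(sample, mu):
    """t = (sample mean - mu) / sqrt(s^2 / n), with s^2 the sample variance."""
    n = len(sample)
    s2 = variance(sample)  # divides by n - 1, as in the definition of s^2
    return (mean(sample) - mu) / sqrt(s2 / n)

data = [1.0, 2.0, 3.0, 4.0, 5.0]  # hypothetical observations
print(t_statistic(data, mu=2.0))  # compare with a t table for n - 1 = 4 d.o.f.
```

In this example the sample mean is 3, $s^2 = 2.5$, and $\sqrt{s^2/n} = \sqrt{0.5}$, so the statistic equals $1/\sqrt{0.5} = \sqrt{2}$.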

A few comments:

• The important assumption needed to obtain Student's t distribution is that $X_1, X_2, \ldots, X_n$ are randomly drawn from $N(\mu, \sigma^2)$. For example, if $X_i$ is a Bernoulli random variable with $P(X_i = 1) = p$ and $P(X_i = 0) = 1 - p$, then the distribution of $\frac{\bar{X} - \mu}{\sqrt{s^2/n}}$ is not Student's t distribution because neither $\frac{\bar{X} - \mu}{\sigma/\sqrt{n}} \sim N(0, 1)$ nor $\frac{(n-1)s^2}{\sigma^2} \sim \chi^2(n - 1)$ holds. We cannot use Student's t distribution when $X_i$ is not normally distributed.

• As $n \to \infty$, we have $s^2 \to_p \sigma^2$. This suggests that the Student's t distribution converges to the standard normal distribution as $n \to \infty$.
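One way to see both the extra randomness from $s^2$ and the convergence to the standard normal is through the variance of the t distribution. The sketch below uses the standard formula $v/(v-2)$ for $v > 2$ degrees of freedom, which is not derived in these notes and is taken here as given; the function name is a hypothetical label.

```python
def t_variance(v):
    """Variance of Student's t with v > 2 degrees of freedom: v / (v - 2)."""
    if v <= 2:
        raise ValueError("the variance is finite only for v > 2")
    return v / (v - 2)

# The variance exceeds 1 (the variance of N(0, 1)) and approaches 1 as v grows.
for v in (3, 5, 24, 100, 1000):
    print(v, t_variance(v))
```

For the thermostat example with $n = 25$, the relevant value is $v = 24$, for which the variance is $24/22$, only slightly above one.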
