* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download Know how to find probabilities using theoretical and relative

Survey

Document related concepts

Transcript

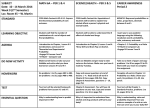

IQL Chapter 6 – Probability in Statistics
Statistical Reasoning for Everyday Life, Bennett, Briggs, Triola, 3rd Edition

6.1 The Role of Probability in Statistics: Statistical Significance

FROM SAMPLE TO POPULATION
When the difference between what is observed and what is expected seems unlikely to be explained by chance alone, we say that the difference is statistically significant.

Definition
A set of measurements or observations in a statistical study is said to be statistically significant if it is unlikely to have occurred by chance.

QUANTIFYING STATISTICAL SIGNIFICANCE
If the probability of an observed difference occurring by chance is 0.05 (or 1 in 20) or less, the difference is statistically significant at the 0.05 level.
If the probability of an observed difference occurring by chance is 0.01 (or 1 in 100) or less, the difference is statistically significant at the 0.01 level.

6.2 Basics of Probability

LEARNING GOAL
Know how to find probabilities using theoretical and relative frequency methods and understand how to construct basic probability distributions.

Definitions
Outcomes are the most basic possible results of observations or experiments.
An event is a collection of one or more outcomes that share a property of interest.

Expressing Probability
The probability of an event, expressed as P(event), is always between 0 and 1 inclusive. A probability of 0 means that the event is impossible, and a probability of 1 means that the event is certain.

THEORETICAL PROBABILITY
As long as all outcomes are equally likely, we can use the following procedure to calculate theoretical probabilities.

Theoretical Method for Equally Likely Outcomes
Step 1. Count the total number of possible outcomes.
Step 2. Among all the possible outcomes, count the number of ways the event of interest, A, can occur.
Step 3. Determine the probability, P(A), from
P(A) = (number of ways A can occur) / (total number of outcomes)

Theoretical Probabilities: Counting Outcomes
Suppose we toss two coins and want to count the total number of outcomes. The toss of the first coin has two possible outcomes: heads (H) or tails (T). The toss of the second coin also has two possible outcomes. Each of the two outcomes for the first coin can occur with either of the two outcomes for the second coin. So the total number of outcomes for two tosses is 2 × 2 = 4; they are HH, HT, TH, and TT, as shown in the tree diagram of Figure 6.4a.

Suppose process A has a possible outcomes and process B has b possible outcomes. Assuming the outcomes of the processes do not affect each other, the number of different outcomes for the two processes combined is a × b. This idea extends to any number of processes. For example, if a third process C has c possible outcomes, the number of possible outcomes for the three processes combined is a × b × c.

RELATIVE FREQUENCY PROBABILITY
The second way to determine probabilities is to approximate the probability of an event A by making many observations and counting the number of times event A occurs. This approach is called the relative frequency (or empirical) method.

Relative Frequency Method
Step 1. Repeat or observe a process many times and count the number of times the event of interest, A, occurs.
Step 2. Estimate P(A) by
P(A) = (number of times A occurred) / (total number of observations)

EXAMPLE 4: 500-Year Flood
Geological records indicate that a river has crested above a particular high flood level four times in the past 2,000 years. What is the relative frequency probability that the river will crest above the high flood level next year?
Solution: Based on the data, the probability of the river cresting above this flood level in any single year is (4 floods) / (2,000 years) = 1/500. Because a flood of this magnitude occurs on average once every 500 years, it is called a “500-year flood.” The probability of having a flood of this magnitude in any given year is 1/500, or 0.002.

SUBJECTIVE PROBABILITIES
The third method for determining probabilities is to estimate a subjective probability using experience or intuition.

Three Approaches to Finding Probability
A theoretical probability is based on assuming that all outcomes are equally likely. It is determined by dividing the number of ways an event can occur by the total number of possible outcomes.
A relative frequency probability is based on observations or experiments. It is the relative frequency of the event of interest.
A subjective probability is an estimate based on experience or intuition.

PROBABILITY OF AN EVENT NOT OCCURRING
If the probability of an event A is P(A), then the probability that event A does not occur is P(not A). Because the event must either occur or not occur, we can write
P(A) + P(not A) = 1 or P(not A) = 1 – P(A)
Note: The event not A is called the complement of the event A; the “not” is often designated by a bar, so Ā means not A.

PROBABILITY DISTRIBUTION
A probability distribution is a distribution in which the variable of interest is associated with a probability. It is a table or an equation that links each outcome of a statistical experiment with its probability of occurrence.

Probability Distribution Prerequisites
To understand probability distributions, it is important to understand variables, random variables, and some notation. A variable is a symbol (A, B, x, y, etc.) that can take on any of a specified set of values.
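Before moving on to random variables, the 500-year flood estimate and the complement rule, P(not A) = 1 – P(A), can be checked with a few lines of Python. This is a minimal sketch: the function names relative_frequency and complement are hypothetical helpers for this illustration, and exact fractions are used so that 4/2,000 reduces to 1/500 without rounding error.

```python
from fractions import Fraction


def relative_frequency(times_occurred: int, observations: int) -> Fraction:
    """Estimate P(A) as (number of times A occurred) / (total observations)."""
    if observations <= 0:
        raise ValueError("need at least one observation")
    if not 0 <= times_occurred <= observations:
        raise ValueError("occurrences must be between 0 and observations")
    return Fraction(times_occurred, observations)


def complement(p: Fraction) -> Fraction:
    """Probability that event A does not occur: P(not A) = 1 - P(A)."""
    return 1 - p


# 500-year flood: four crests above the high flood level in 2,000 years
p_flood = relative_frequency(4, 2000)
p_no_flood = complement(p_flood)

print(p_flood, float(p_flood))        # 1/500 0.002
print(p_no_flood, float(p_no_flood))  # 499/500 0.998
```

Returning a Fraction keeps the answer in the same 1/500 form the example uses; float() converts it when a decimal is wanted.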
When the value of a variable is the outcome of a statistical experiment, that variable is a random variable. Generally, statisticians use a capital letter to represent a random variable and a lowercase letter to represent one of its values. For example, X represents the random variable X, and P(X) represents the probability of X. P(X = x) refers to the probability that the random variable X is equal to a particular value, denoted by x. As an example, P(X = 1) refers to the probability that the random variable X is equal to 1.

Probability Distributions
An example will make clear the relationship between random variables and probability distributions. Suppose you flip a coin two times. This simple statistical experiment can have four possible outcomes: HH, HT, TH, and TT. Now, let the variable X represent the number of heads that result from this experiment. The variable X can take on the values 0, 1, or 2. In this example, X is a random variable because its value is determined by the outcome of a statistical experiment.

Consider the coin flip experiment described above. The table below, which associates each value of X with its probability, is an example of a probability distribution. Because the four outcomes are equally likely, X = 1 occurs for two of them (HT and TH), so P(X = 1) = 2/4 = 0.50.

| Number of heads (x) | Probability P(X = x) |
|---|---|
| 0 | 0.25 |
| 1 | 0.50 |
| 2 | 0.25 |

The above table represents the probability distribution of the random variable X. (Source: http://stattrek.com/probability-distributions/probability-distribution.aspx)

Making a Probability Distribution
A probability distribution represents the probabilities of all possible events. Do the following to make a display of a probability distribution:
Step 1. List all possible outcomes. Use a table or figure if it is helpful.
Step 2. Identify outcomes that represent the same event. Find the probability of each event.
Step 3. Make a table in which one column lists each event and another column lists each probability. The sum of all the probabilities must be 1.

6.3 Probabilities with Large Numbers

LEARNING GOAL
Understand the law of large numbers, use this law to understand and calculate expected values, and recognize how misunderstanding of the law of large numbers leads to the gambler’s fallacy.

THE LAW OF LARGE NUMBERS
The Law of Large Numbers says that in repeated, INDEPENDENT trials with the same probability p of success in each trial, the percentage of successes is increasingly likely to be close to the chance of success as the number of trials increases. More precisely, the chance that the percentage of successes differs from the probability p by more than a fixed positive amount, ε > 0, converges to zero as the number of trials n goes to infinity, for every number ε > 0. Note that, in contrast to the difference between the percentage of successes and the probability of success, the difference between the number of successes and the EXPECTED number of successes, n × p, tends to grow as n grows. (Source: http://www.stat.berkeley.edu/~stark/SticiGui/Text/gloss.htm#l)

In short, the law expresses the idea that a large number of events may show some pattern even while individual events are unpredictable.

The Law of Large Numbers
The law of large numbers (or law of averages) applies to a process for which the probability of an event A is P(A) and the results of repeated trials do not depend on the results of earlier trials (they are independent). It states: If the process is repeated through many trials, the proportion of the trials in which event A occurs will be close to the probability P(A). The larger the number of trials, the closer the proportion should be to P(A).

EXPECTED VALUE
The mean of a random variable is more commonly referred to as its expected value: the average value you would expect to obtain if you repeated, many times, an experiment whose outcomes are represented by the random variable. The expected value of a random variable X is denoted by E(X). Given that the random variable X is discrete and has a probability distribution f(x), the expected value of the random variable is given by
E(X) = Σ x × f(x)
where the sum runs over all possible values x of X. (Source: http://www.wyzant.com/Help/Math/Statistics_and_Probability/Expected_Value/)

Expected Value
The expected value of a variable is the weighted average of the values of all its possible events, with each value weighted by its probability. Because it is an average, we should expect to find the “expected value” only when there are a large number of events, so that the law of large numbers comes into play.

Calculating Expected Value
Consider two events, each with its own value and probability. The expected value is
expected value = (value of event 1) × (probability of event 1) + (value of event 2) × (probability of event 2)
This formula can be extended to any number of events by including more terms in the sum.

THE GAMBLER’S FALLACY
Definition
The gambler’s fallacy is the mistaken belief that a streak of bad luck makes a person “due” for a streak of good luck.

STREAKS
Another common misunderstanding that contributes to the gambler’s fallacy involves expectations about streaks. Suppose you toss a coin six times and see the outcome HHHHHH (all heads). Then you toss it six more times and see the outcome HTTHTH. Most people would say that the latter outcome is “natural” while the streak of all heads is surprising. But, in fact, both outcomes are equally likely.
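Both the expected value formula and the streak claim can be checked by direct computation. The minimal Python sketch below applies the weighted-average formula to the two-coin distribution of X (values 0, 1, 2 with probabilities 0.25, 0.50, 0.25), giving an expected value of 1 head, and then enumerates every six-toss sequence to confirm that HHHHHH and HTTHTH have the same probability. The helper names expected_value and sequence_probability are hypothetical, and probabilities are written as exact fractions so the sum-to-1 check is not disturbed by rounding.

```python
from fractions import Fraction
from itertools import product


def expected_value(distribution: dict) -> Fraction:
    """Weighted average: sum of (value of event) x (probability of event)."""
    if sum(distribution.values()) != 1:
        raise ValueError("probabilities must sum to 1")
    return sum(value * p for value, p in distribution.items())


# Probability distribution of X = number of heads in two fair coin tosses
two_coins = {0: Fraction(1, 4), 1: Fraction(1, 2), 2: Fraction(1, 4)}
print(expected_value(two_coins))  # 1


def sequence_probability(target: str) -> Fraction:
    """Theoretical probability of one exact H/T sequence of len(target) tosses."""
    outcomes = ["".join(seq) for seq in product("HT", repeat=len(target))]
    favorable = outcomes.count(target)
    return Fraction(favorable, len(outcomes))


print(sequence_probability("HHHHHH"))  # 1/64
print(sequence_probability("HTTHTH"))  # 1/64
```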
The total number of possible outcomes for six tosses is 2 × 2 × 2 × 2 × 2 × 2 = 64, and every individual outcome has the same probability of 1/64.

6.4 Ideas of Risk and Life Expectancy
Here we will examine how the ideas of probability can help us quantify risk, thereby allowing us to make informed decisions about trade-offs.

RISK AND TRAVEL
Travel risk is often expressed in terms of an accident rate or a death rate.

VITAL STATISTICS
Data concerning births and deaths of citizens.

LIFE EXPECTANCY
Life expectancy is often used to compare overall health at different times or in different countries.

Definition
Life expectancy is the number of years a person with a given age today can expect to live on average.

6.5 Combining Probabilities (Supplementary Section)

LEARNING GOAL
Distinguish between independent and dependent events and between overlapping and non-overlapping events, and be able to calculate “and” probabilities and “either/or” probabilities.

AND PROBABILITIES
Suppose you toss two fair dice and want to know the probability that both will come up 4. For each die, the probability of a 4 is 1/6. We find the probability that both dice show a 4 by multiplying the individual probabilities:
P(double 4’s) = P(4) × P(4) = 1/6 × 1/6 = 1/36
In general, we call the probability of event A and event B occurring an “and probability” (or joint probability).

And Probability for Independent Events
Two events are independent if the outcome of one event does not affect the probability of the other event. Consider two independent events A and B with probabilities P(A) and P(B). The probability that A and B occur together is
P(A and B) = P(A) × P(B)
This principle can be extended to any number of independent events. For example, the probability of A, B, and a third independent event C is
P(A and B and C) = P(A) × P(B) × P(C)

“And” events can be independent or dependent.

And Probability for Dependent Events
Two events are dependent if the outcome of one event affects the probability of the other event. The probability that dependent events A and B occur together is
P(A and B) = P(A) × P(B given A)
where P(B given A) means the probability of event B given the occurrence of event A. This principle can be extended to any number of individual events. For example, the probability of dependent events A, B, and C is
P(A and B and C) = P(A) × P(B given A) × P(C given A and B)

EITHER/OR PROBABILITIES

Either/Or Probability for Non-Overlapping Events
Two events are non-overlapping if they cannot occur at the same time. If A and B are non-overlapping events, the probability that either A or B occurs is
P(A or B) = P(A) + P(B)
This principle can be extended to any number of non-overlapping events. For example, the probability that either event A, event B, or event C occurs is
P(A or B or C) = P(A) + P(B) + P(C)
provided that A, B, and C are all non-overlapping events.

Either/Or Probability for Overlapping Events
Two events A and B are overlapping if they can occur together. The probability that either A or B occurs is
P(A or B) = P(A) + P(B) – P(A and B)
The term P(A and B) is subtracted because outcomes in which both A and B occur would otherwise be counted twice, once in P(A) and once in P(B).
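The combining rules can be checked against a brute-force count over the 36 equally likely outcomes of two fair dice, which is simply the theoretical method for equally likely outcomes applied to each event. The Python sketch below is illustrative only (prob and the event functions are hypothetical names): it confirms the independent “and” rule for double 4’s, the non-overlapping either/or rule for a sum of 2 or a sum of 12, and the overlapping either/or rule for at least one die showing 4.

```python
from fractions import Fraction
from itertools import product

# All 36 equally likely (first die, second die) outcomes for two fair dice
OUTCOMES = list(product(range(1, 7), repeat=2))


def prob(event) -> Fraction:
    """Theoretical probability: favorable outcomes / total outcomes."""
    favorable = sum(1 for outcome in OUTCOMES if event(outcome))
    return Fraction(favorable, len(OUTCOMES))


def first_is_4(o):
    return o[0] == 4


def second_is_4(o):
    return o[1] == 4


def sum_is_2(o):
    return sum(o) == 2


def sum_is_12(o):
    return sum(o) == 12


# And probability for independent events: P(A and B) = P(A) x P(B)
p_and = prob(lambda o: first_is_4(o) and second_is_4(o))
assert p_and == prob(first_is_4) * prob(second_is_4)

# Either/or, non-overlapping: a sum of 2 and a sum of 12 cannot occur together
p_nonoverlap = prob(lambda o: sum_is_2(o) or sum_is_12(o))
assert p_nonoverlap == prob(sum_is_2) + prob(sum_is_12)

# Either/or, overlapping: subtract P(A and B) so (4, 4) is counted only once
p_overlap = prob(lambda o: first_is_4(o) or second_is_4(o))
assert p_overlap == prob(first_is_4) + prob(second_is_4) - p_and

print(p_and, p_nonoverlap, p_overlap)  # 1/36 1/18 11/36
```

Enumeration works here only because the sample space is small and its outcomes are equally likely; the addition and multiplication rules are what carry over to larger problems.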