* Your assessment is very important for improving the workof artificial intelligence, which forms the content of this project

Download The Performance/Intensity Function - Rohan Accounts

Survey

Document related concepts

Transcript

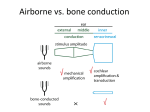

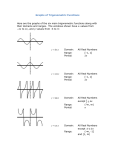

The Performance/Intensity Function: An Underused Resource Arthur Boothroyd The purpose of this tutorial is to demonstrate the potential value of the Performance versus Intensity (PI) function in both research and clinical settings. The PI function describes recognition probability as a function of average speech amplitude. In effect, it shows the cumulative distribution of useful speech information across the amplitude domain, as speech rises from inaudibility to full audibility. The basic PI function can be modeled by a cubed exponential function with three free parameters representing: (a) threshold of initial audibility, (b) amplitude range from initial to full audibility, and (c) recognition probability at full audibility. Phoneme scoring of responses to consonant-vowel-consonant words makes it possible to obtain complete PI functions in a reasonably short time with acceptable test–retest reliability. Two examples of research applications are shown here: (a) the preclinical behavioral evaluation of compression amplification schemes, and (b) assessment of the distribution of reverberation effects in the amplitude domain. Three examples of clinical application show data from adults with different degrees and configurations of sensorineural hearing loss. In all three cases, the PI function provides potentially useful information over and above that which would be obtained from measurement of Speech Reception Threshold and Maximum word recognition in Phonectically Balanced lists. Clinical application can be simplified by appropriate software and by a routine to convert phoneme recognition scores into estimates of the more familiar whole-word recognition scores. By making assumptions about context effects, phoneme recognition scores can also be used to estimate word recognition in sentences. It is hard to escape the conclusion that the PI function is an easily available, potentially valuable, but largely neglected resource for both hearing research and clinical audiology. 1968a), the form of this function is determined initially by the acoustic properties of the speech signal. Speech amplitude varies from moment to moment— over a range of 30 to 40 dB. As the speech level is raised from below audibility, the highest amplitude components are heard first and recognition probability begins to rise above zero. Further level increases add audibility of lower amplitude components. But it requires an increase of 30 or 40 dB above the threshold of initial audibility before all of the acoustic signal is audible to the listener. Thus the threshold of initial audibility is determined by the highest amplitude components of the speech signal, whereas the range from initial to full audibility is determined by the range over which sound energy is distributed in the amplitude domain. This process is illustrated in Figure 1, which shows the transformation of an acoustic waveform: first to an amplitude versus time plot; then to an amplitude distribution; and finally to a cumulative amplitude distribution. What is remarkable about the cumulative amplitude distribution of speech is how well it can predict the morphology of the normal PI function for phoneme recognition. The data from Figure 2, for example, are taken from a recent study by Haro (2007) in which 12 normally hearing young adults listened to the same consonant-vowel-consonant (CVC) words used to develop the data in Figure 1. Recordings of two female talkers were used. Ten words were presented at each of seven levels (dBleq) in a steadystate noise of 55 dB SPL. The spectrum of the noise was matched to that of the relevant talker. Differences in the forms of the two PI functions (or PSNR functions) follow closely the differences between the cumulative amplitude distributions of the two recordings.* Recognition probability, however, depends not just on the audibility of sound, but also on the amount of useful information it contains. The PI (Ear & Hearing 2008;29;479– 491) INTRODUCTION The Performance versus Intensity (PI) function is the relationship between speech recognition probability and average speech amplitude. Although influenced by noise spectra (Studebaker, et al., 1994) and pure-tone threshold configuration (Boothroyd, *The difference between these two cumulative amplitude distributions may reflect differences in the productions of the two talkers. It may also, however, reflect differences in recording technique. For talker 1, the microphone was placed at 0 degrees in both the horizontal and vertical planes. For talker 2, a clip-on microphone was used and this was at 90 degrees in the vertical plane—probably leading to attenuation of some high-frequency speech components. City University of New York, New York, New York; San Diego State University, San Diego, California; and House Ear Institute. 0196/0202/08/2904-0479/0 • Ear & Hearing • Copyright © 2008 by Lippincott Williams & Wilkins • Printed in the U.S.A. 479 480 BOOTHROYD / EAR & HEARING, VOL. 29, NO. 4, 479 –491 Fig. 1. The source of the normal PI function. The top panel shows the waveform of a sample of speech consisting of a string of monosyllabic words without intervening silences (pressure as a function of time). The second panel shows the RMS amplitude of this sample, measured over a moving 100 msec window (dB as a function of time). The third panel shows the distribution of instantaneous amplitudes, with high levels on the left and low levels on the right (number of samples at each dB level). The bottom panel shows the cumulative amplitude distribution, that is, the percentage of the total energy in the speech signal that is available to a listener as the speech level (in dB) increases from initial audibility to full audibility. function may be seen, therefore, as a behavioral measure of the cumulative distribution, across amplitude, of useful information in the speech signal. Knowledge of this distribution has potential value in both research and clinical applications. MATHEMATICAL MODELING The utility of the PI function can be enhanced by fitting empirical data to a valid underlying mathematical model. The benefits include smoothing data across several measurements, thereby improving test–retest reliability, and the reduction of these measurements to a smaller number of key parameters. Such a model is represented by the cubed exponential function of Eq. (1), the full development of which is given in Appendix A. p ⫽ m/0.99 * (1 ⫺ e(⫺(x⫺t)/r 5.7))3 * (1) where p is the phoneme recognition probability at amplitude x, m is the optimum score at full audibility, e is the base of natural logarithms (⬃2.72), x is the speech amplitude in dB, t is the threshold amplitude in dB, and r is the amplitude range from initial to full audibility in dB. Several points should be noted about Eq.: 1. The quantity (x ⫺ t)/r is the articulation index (AI), or speech intelligibility index (ANSI, 1969; French & Steinberg, 1947; Kryter, 1962a,b). 2. The AI is assumed to rise linearly from 0 to 1 as the level of speech rises from initial to full audibility. There is, therefore, an additional proviso that (x ⫺ t)/r cannot be less than zero or greater than one.† 3. The exponent of three at the end of the equation serves two purposes. First, it helps account for the effects of lexical context on phoneme recognition in CVC words (Boothroyd & Nittrouer, 1988). Second, it helps account for departures from the assumption that AI rises †This condition can also be satisfied with the proviso that 0 ⱕ p ⱕ m. In other words, p cannot be less than zero or greater than the value obtained when x ⫽ t ⫹ r. BOOTHROYD / EAR & HEARING, VOL. 29, NO. 4, 479 –491 100 80 60 Initial audibility at -21 dB 60 Range 34 dB 40 Full audibility at 13 dB 40 CumAmp for t1 CumAmp for t2 Talker 1 Talker 2 20 0 -20 Max 99 % 100 80 481 -15 -10 -5 0 5 10 15 20 20 Least-squares fit to equation 1 Data from Pegan (2007) 25 Fig. 2. Cumulative amplitude distribution as a predictor of the normal PI function. Data points show group-mean phoneme recognition scores (ⴞ1 SE), as a function of signal-to-noise ratio, for 12 young normally hearing adults listening to recordings of consonant-vowel-consonant word lists recorded by two talkers. These data are shown in relation to cumulative RMS amplitude distributions (measured over 100 msec windows) derived from the two recordings. The longterm average level of the two recordings differed by less than 1 dB. linearly with increasing audibility. Most importantly, however, it improves the fit between predicted and measured scores. 4. The divisor of 0.99 at the beginning of the equation reflects an assumption that full audibility occurs when p is within 99% of its asymptotic value, as defined by Eq. (1) (but without the proviso that the AI cannot exceed 1). 5. The multiplier of 5.7 after (x ⫺ t)/r ensures that the estimate of r represents the range from initial to full audibility—the latter being assumed to occur when p reaches 99% of its asymptotic value. Figure 3 shows the least-squares fit ‡ of Eq. (1) to group-mean PI data obtained by Pegan (2007) in a study of the distribution of reverberation effects across the intensity range of speech. The data of Figure 3 were obtained without reverberation. Twenty-nine young, normally hearing adults listened dichotically, via insert earphones, to CVC words recorded by a female talker. Each listener heard one list of 10 words at each of seven speech levels (dBleq), presented in spectrally matched steady-state noise that had a constant level of 60 dB SPL. The choice of word list was varied across signal-to-noise ratio and across listener. The parameters for a least-squares fit to the data are m ⫽ 99%; ‡Curve-fitting routines are available within Table Curve and Sigmaplot software from Systat Software, Inc. and within the CASPA program of Boothroyd (2006). 0 -25 -20 -15 -10 -5 0 5 10 15 20 25 Fig. 3. Least-squares fit of Eq. (1) to group mean PI data for 29 listeners. Data are taken from Pegan (2007). Error bars show ⴞ1 SE. t ⫽ ⫺21 dB; and r ⫽ 34 dB. In other words, mean phoneme recognition rises from zero to a maximum of 99% as the signal-to-noise ratio rises from ⫺21 dB, over a range of 34 dB, to a value of ⫹13 dB. The standard error of fit is 0.77 percentage points. THE PI FUNCTION AS A RESEARCH TOOL The PI function has potential value in the study of intentional or unintentional changes in the distribution of useful speech information in the amplitude domain. The following two examples are of amplitude compression in hearing aids and reverberation in enclosed spaces. Amplitude Compression One of the goals of fast-acting amplitude compression is to reduce the amplitude range over which useful speech information is distributed. Because the PI function for speech in noise represents an estimate of this range, it should provide a behavioral validation of compression schemes. This possibility was explored by Oruganti (2000) in a study of amplitude compression. Lists of recorded CVC words were presented to groups of eight normally hearing listeners in a background of pink noise having a constant octave-band level across frequency. The pink noise was used to simulate a relatively “flat” hearing loss of around 40 dB HL. Listeners heard the stimuli monaurally via supraaural earphones under four conditions: 1. Unprocessed. 2. High-frequency emphasis (HFE): Frequencies above 1100 Hz were amplified by 8 dB so as to compensate for high-frequency roll-off in the speech spectrum. 482 BOOTHROYD / EAR & HEARING, VOL. 29, NO. 4, 479 –491 Unprocessed HP 8dB + LP Envelope (over 5 msec window) High-frequency emphasis (HFE) only Fine structure 10dB 30dB Fig. 4. Amplitude compression scheme used by Oruganti (2000). compress amplify HFE plus 1-band compression 3. HFE ⫹ single-band compression: The top 30 dB of the amplitude distribution was compressed into a 10 dB range. Attack and release times were 2.5 msec (see Fig. 4). 4. HFE ⫹ two-band compression. The same compression was applied independently to frequencies below and above 1100 Hz. Figure 5 shows the least-squares fits of Eq. (1) to Oruganti’s group-mean phoneme recognition scores. The slope of the PI function increased with HFE— reflecting compensation for high-frequency roll-off in the speech spectrum (Cox & Moore, 1988). The addition of single-band compression increased the slope further—as one would expect. Switching from single-band to two-band compression, however, had only a small additional effect on slope. The derived 100 80 60 40 Unprocessed High-frequency emphasis (HFE) HFE + 1-band compression HFE + 2-band compression 20 0 0 5 10 15 20 25 30 35 40 Fig. 5. Phoneme recognition in pink noise by young hearing adults as a function of speech amplitude and type of processing. Data points show group-mean data (ⴞ1 SE) from Oruganti (2000). Curves are least-squares fits to Eq. (1). Apply compressed envelope ranges from initial to full audibility were 43, 34, 24, and 22 dB for the unprocessed signal, HFE alone, HFE⫹single-band compression, and HFE⫹doubleband compression, respectively. What is striking about these data is that the behaviorally measured compression ratio for singleband compression was only 1.4:1 and not the 3:1 that might be expected from the digital processing. The discrepancy is easily explained, however, by the fact that gain changes in single-band compression are dominated by amplitude at the spectral maximum but are applied to all frequencies (Dillon, 2001). It is usually assumed that this issue can be resolved by compressing independently in two or more channels. Oruganti’s data, however, did not support this assumption—the behavioral compression ratio increased to only 1.5:1 when compression was applied independently in low- and high-frequency bands. One could argue that the initial HFE made the two-band compression scheme redundant. The point here, however, is not to draw conclusions about compression algorithms but to demonstrate the potential value of the PI function as a technique for the preclinical, behavioral evaluation of hearing aid processing schemes that are designed to change the distribution of information in the amplitude domain. Reverberation Individuals listening to speech deep into the reverberant field in an enclosed space (at least threetimes the critical distance from the source) rely on multiple early reflections for audibility of the signal (Boothroyd, 2004; Bradley, et al., 2003). Unfortunately, these reflections are accompanied by multi- BOOTHROYD / EAR & HEARING, VOL. 29, NO. 4, 479 –491 ple late reflections that cause speech sounds to interfere with each other through a combination of energetic and informational masking (Bradley, 1986; Nabalek, et al., 1989). In modeling this effect, Boothroyd has treated late-arriving reverberation as an equivalent self-generated noise, the resulting signal-to-noise ratio being inferred from data on consonant recognition reported by Peutz (1997). An implied assumption of this approach is that the principal effects of late reflections are confined to the weaker components of the useful speech signal and consist of energetic masking. If, however, informational masking plays a major role one would expect the effects of late reflections to be distributed across the amplitude range of the useful speech signal. The study of Pegan mentioned earlier was designed to test these two hypotheses. If the negative effects of late reflections are confined to the weaker speech sounds, it was predicted that the PI functions for reverberated speech would not begin to separate from that of the unreverberated speech until fairly high signal-to-noise ratios were reached. If, on the other hand, the negative effects of late reflections are distributed throughout the amplitude range of speech, it was predicted that the PI functions would separate at all signal-to-noise ratios. Phoneme recognition was measured in sentencefinal CVC words. The sentences were subjected to simulated reverberation with reverberation times (RT60) of 1, 2, and 4 sec. After processing, overall levels were adjusted to equate dBleq levels across conditions. Stimuli were then mixed with steadystate random noise whose spectrum was matched to that of the talker. The condition of RT60 ⫽ 0 (i.e., no reverberation) was also included. Twelve young normally hearing adults were tested at RT60 ⫽ 0, 2, and 4 sec. An additional 17 listeners were tested at RT60 ⫽ 0, 1, and 2 sec. Each listener heard one list of 10 CVC words at each of seven signal-to-noise ratios, under three reverberation conditions. Signalto-noise ratio was controlled by varying speech level while keeping noise level constant. After confirming that there were no significant differences between the two listener groups at 0 and 2 sec, the data were pooled. Figure 6 shows Pegan’s data, together with leastsquares fits to Eq. (1). The findings were clearly in keeping with the second prediction and strongly support the conclusion that, for a listener who is relying on early reflections, the negative effects of late reflections are distributed throughout the amplitude range of the useful signal. They also strongly suggest that optimal phoneme recognition for such a listener requires a signal-to-noise ratio that is higher than the 15 dB recommended by ANSI (2002). Once again, however, the purpose here is not 483 80 60 40 20 Reverberation Time (RT60) 0 sec 1 sec 2 sec 4 sec 0 Fig. 6. Phoneme recognition by young hearing adults as a function of postreverberation signal-to-noise ratio and reverberation time. Data points show group-mean data (ⴞ1 SE) from Pegan (2007). Curves are least-squares fits to Eq. (1). to draw conclusions about reverberation itself but to illustrate the potential power of the PI function as a behavioral tool for studying the effects of distortions on the distribution of useful speech information in the amplitude domain. THE PI FUNCTION AS A CLINICAL TOOL The PI function underlies the two standard clinical speech measures—speech reception threshold (SRT) and word recognition in lists of phonetically balanced words (PBmax) (Brandy, 2002). SRT is the level giving 50% recognition probability for spondees and is expressed in dB HL relative to the value for normally hearing listeners. PBmax is the recognition probability for whole monosyllabic words in phonetically balanced lists when presented at a level believed to provide optimum performance. The SRT is used (a) to validate pure-tone thresholds, and (b) as the basis for estimating the optimum listening level. PBmax is used (a) as an estimate of auditory resolution, (b) as an indicator of communication difficulty, and (c) sometimes as a measure of hearing aid or cochlear-implant outcome. These two measures were adopted in the early days of clinical audiology in the United States, in part, because obtaining a complete PI function was considered both impractical and unnecessary (Carhart, 1965). With some modifications to test procedures, however, a complete PI function can be obtained in a reasonably short time. Moreover, the resulting information goes beyond estimates of threshold and optimum performance. One of the keys to the clinical utility of the PI function is phoneme scoring—that is, measuring performance, not as the percentage of whole words 484 BOOTHROYD / EAR & HEARING, VOL. 29, NO. 4, 479 –491 125 0 10 20 30 500 1000 2000 4000 8000 O Right Ear X Left Ear Headphone, binaural Aid, binaural PTA = 40 dB O X O O X X X O O X 40 50 60 Fig. 7. Pure-tone audiogram and binaural PI functions, via headphones and aided, for an adult with a moderate sensorineural hearing loss. Note that PRT stands for Phoneme Recognition Threshold and is the level in dB, re normal, at which the listener scores 50% of his maximum. PRT is similar to the more familiar SRT. 250 O XO X 70 80 90 100 Error bars show +/-1 se PTA = 3-frequency avg. PRT = phoneme recog. threshold MCL = most comfortable level UCL = uncomfortable level 110 Optimum score = 99% 100 80 Range of optimal listening 60 PRT = 39 dB Nor mal 40 20 MCL UCL 0 0 10 recognized, but as the percentage of the constituent vowels and consonants recognized. This relatively simple change has several benefits. 1. It increases the number of test items and, therefore, improves test–retest reliability and reduces the confidence limits of a single score (Boothroyd, 1968a; Gelfand, 1998; Thornton & Raffin, 1978). 2. It becomes possible to obtain reasonably reliable scores from very short lists and the time saved can be used for testing at several levels (Boothroyd, 1968a). 3. The phoneme score is less influenced by the listener’s vocabulary knowledge than is the whole-word score and is, therefore, a more valid measure of auditory resolution (Boothroyd, 1968b; Olsen, et al., 1996). As noted earlier, by fitting scores obtained at several levels to an underlying model, one essentially averages several data points, thereby improving overall test–retest reliability. The following three examples illustrate the potential value of a complete PI function in clinical applications. A Flat, Moderate, Sensorineural Hearing Loss Figure 7 shows pure tone and PI data for an adult with a moderate sensorineural hearing loss. Binaural phoneme recognition scores were obtained under headphones. Each score took roughly 1 min to obtain 20 30 40 50 60 70 80 90 100 110 and is based on a 10-word list (i.e., 30 phonemes). § Equation (1) was fit to the data. For this individual the estimated optimal score was 99%. The phoneme recognition threshold ¶ (PRT) was 39 dB HL, which is very close to the three-frequency-average hearing loss of 40 dB. The optimum score and the PRT provide, essentially, the same information as do PBmax and SRT. Note, however, that additional information is provided in terms of most comfortable and uncomfortable levels that are easily determined during testing. More importantly, one has information on the range of optimal listening (defined here as the dB range over which the estimated phoneme recognition probability is at least 90% of optimal). The aided PRT is some 10 dB better than that obtained under headphones. Moreover, at a conversational speech level of 60 dB, phoneme recognition improves from around 65% to over 90%. The aided data, however, would encourage one to explore the use of wide dynamic range compression to enhance audibility of low-amplitude speech. Even though this is a straightforward case, it can be seen that the preparation of complete PI functions provides useful §The error bars in Figures 6, 7, and 8 show ⫾1 SE, estimated from the normal approximation to the binomial distribution under the assumption that there are, effectively, 25 independent phonemes in a 10-word list. ¶Phoneme recognition threshold is defined here as the level, re: normal, at which the phoneme recognition score is 50% of the optimum score. For reasonably flat losses, the phoneme recognition threshold and the SRT are almost identical (Walker, 1975). BOOTHROYD / EAR & HEARING, VOL. 29, NO. 4, 479 –491 125 250 500 1000 2000 4000 8000 O Right Ear X Left Ear Headphone, binaural Aid, binaural 0 10 20 30 40 50 PTA = 72 dB O XO X XO O X X O X O O X 60 70 80 90 100 110 100 Error bars show +/-1 se PTA = 3-frequency avg. PRT = phoneme recog. threshold MCL = most comfortable level UCL = uncomfortable level Optimum score = 87% 80 Range of optimal aided listening 60 Aided PRT = 26 dB Nor mal 40 485 Range of optimal listening MCL Unaided PRT = 67 dB 20 Fig. 8. Pure-tone audiogram and binaural PI functions, via headphones and aided, for an adult with a severe sensorineural hearing loss. UCL 0 0 10 20 30 40 50 60 70 80 90 100 information over and above estimates of threshold and optimum performance—for little extra expenditure of time. A Flat Severe Sensorineural Loss Figure 8 shows threshold and PI data for a more challenging case. This is an adult with a flat severe sensorineural hearing loss. PRT is 67 dB HL, which compares favorably with the threefrequency average hearing loss of 72 dB. Optimum phoneme recognition under headphones is around 87%. Unlike with the moderate loss, however, there is a very narrow range over which this optimum score is attained. A small increase in level produces discomfort and a small decrease lowers performance. In this case, the hearing aids provide adequate gain—the aided PRT being reduced to 27 dB. More importantly, however, the range of optimal listening has been extended from a few dB to around 25 dB—thanks to a combination of high-frequency emphasis and compression limiting in the hearing aid. Once again, there is valuable information provided by the PI functions—which in this case took less than 15 min to prepare—that would not otherwise be available. A Severe High-Frequency Sensorineural Hearing Loss The final example (Fig. 9) is of an adult with a severe high-frequency sensorineural hearing loss. 110 For losses like this, the PI function typically has two sections (Boothroyd, 1968a). As speech amplitude increases, low-frequency speech information first becomes audible but phoneme recognition quickly stabilizes at a low value (note the upper speech area on the audiogram). With further increase in level, high-frequency speech information eventually becomes audible and phoneme recognition begins to rise again—in this case to an optimum level of around 86% (note the lower speech area on the audiogram). Figure 9 shows a leastsquares fit of Eq. (1) to scores obtained between 25 and 70 dB and a second fit to scores obtained between 75 and 95 dB. The SRT, using spondees, would most likely have been in the region of 25 dB HL for this subject and a rule of SRT ⫹30 or ⫹40 would have resulted in a search for PBmax at a level below the optimal listening range. In cases such as this, a full PI function may provide information that is not only richer than that provided by SRT and PBmax— but more valid.储 COMPUTER ASSISTANCE Although phoneme scoring makes it possible to obtain complete PI functions in a reasonably short time (typically 1 min for a 10-word list) it does add 储Note, however, that several researchers have recognized the shortcomings of SRT as a basis for predicting the optimal listening level and some of the proposed alternatives would work well in this case (Guthrie, 2007; Kamm, et al., 1983). 486 BOOTHROYD / EAR & HEARING, VOL. 29, NO. 4, 479 –491 125 250 500 1000 2000 4000 8000 O Right Ear X Left Ear Headphone, binaural 0 10 20 O X O X 30 40 50 X O Speech area X OO XO X OO XX O XX O 60 70 80 90 Fig. 9. Pure-tone audiogram and binaural PI function, via headphones, for an adult with a severe high-frequency sensorineural hearing loss. 100 Error bars show +/-1 se PTA = 3-frequency avg. PRT = phoneme recog. threshold MCL = most comfortable level UCL = uncomfortable level 110 100 Optimum score = 86% 80 Range of optimal listening Nor mal 60 40 MCL UCL 20 0 0 10 a little complexity to the processes of recording responses, logging data, averaging scores, estimating confidence limits, and graphing. These processes can be handled effectively, however, by custom software. An example is the CASPA program developed by the author and used to generate most of the behavioral data reported here (Boothroyd, 2006; Mackersie, et al., 2001). This program uses the AB isophonemic word lists (see Appendix B). There are 20 lists, each consisting of 10 CVC words. The 10 vowels and 20 consonants occurring most frequently in English CVC words appear once in each list. All stimuli exist as digital sound files. For aided and unaided sound-field testing, speech amplitude is controlled by the software and normally covers the range from 45 to 75 dB SPL (leq) in 5 dB steps. For testing over a wider range— either in the sound field or under headphones—the computer output is routed to an audiometer. The listener’s repetition of a test word is entered by the tester who is then shown the word in text form. The three phonemes are scored as correct or incorrect—additions, omissions, or substitutions counting as errors— before moving to the next word. After 10 words have been presented and scored, the results are automatically logged, together with the test words, the responses, and the test conditions. Data for a given listener can be recalled and specific runs selected for averaging (if more than one list was presented 20 30 40 50 60 70 80 90 100 110 at the same level under the same test condition), estimation of standard errors, graphing, and curve-fitting. RECONCILING PHONEME AND WHOLE-WORD RECOGNITION Although preparing a complete PI function in a clinical context is made possible by phoneme scoring, the resulting scores are not simple substitutes for whole-word scores. They can, however, be used to estimate whole-word scores using the following equation: w ⫽ 100 * (p/100)2.5 (2) where w is the whole-word recognition score in percentage and p is the phoneme recognition score in percentage. The exponent of 2.5 in Eq. (2) is the j-factor (Boothroyd & Nittrouer, 1988), and represents the effective number of independent phonemes per word. For meaningful CVC words, j is less than three (the actual number of phonemes in a word) because of the influence of word context on phoneme recognition. Equation (2) was used to generate the conversion chart of Table 1. This chart will be helpful to clinicians who have developed an implicit understanding of whole-word recognition scores in terms of their relationship to communication difficulties. BOOTHROYD / EAR & HEARING, VOL. 29, NO. 4, 479 –491 487 TABLE 1. Chart for converting percent phoneme recognition scores (p) into equivalent percent whole-word scores (w) and estimated word-in-sentence scores (s) p w s p w s p w s p w s p w s 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 0 0 0 0 0 0 0 0 0 0 0 0 0 1 1 1 1 1 1 2 0 0 0 0 0 0 0 1 1 1 1 2 2 2 3 3 4 5 5 6 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 2 2 2 3 3 3 3 4 4 5 5 5 6 6 7 7 8 8 9 9 7 8 9 10 11 12 13 14 16 17 18 20 21 23 24 26 28 29 31 33 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 10 11 11 12 13 14 14 15 16 17 18 19 19 20 21 22 23 25 26 27 35 37 38 40 42 44 46 48 50 52 54 56 58 60 62 64 66 68 69 71 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 28 29 30 32 33 34 35 37 38 40 41 42 44 46 47 49 50 52 54 55 73 75 76 78 80 81 83 84 85 87 88 89 90 91 92 93 94 95 95 96 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 57 59 61 63 65 67 69 71 73 75 77 79 81 83 86 88 90 93 95 98 100 97 97 98 98 98 99 99 99 99 100 100 100 100 100 100 100 100 100 100 100 100 PREDICTING WORD RECOGNITION SENTENCES IN One can also use phoneme recognition scores to estimate word-recognition probability in sentences using the k-factor (Boothroyd & Nittrouer, 1988): s ⫽ 100 * (1 ⫺ (1 ⫺ (p/100)2.5)k (3) where s is the percent word recognition in sentences, p the phoneme recognition score in percentage, and k is the effect of sentence context, expressed as a proportional increase in the effective number of independent channels of information (k is equivalent to the proficiency factor in AI theory). The value of k reflects several factors (a) semantic and syntactic redundancy in the sentence material, (b) the perceiver’s world and linguistic knowledge, and (c) the perceiver’s language processing skills. It can range from around 2 for complex, unfamiliar language to 10 or more for informal conversation. A value of 4 is used in the conversion chart of Table 1, representing relatively easy sentence material—at least from the perceiver’s perspective. The data in Table 1 are also shown graphically in Figure 10. SUMMARY The PI function represents the cumulative distribution of useful speech information in the amplitude domain. Its form is well predicted by a cubed exponential equation with three free parameters: (a) the threshold of initial audibility, (b) the amplitude range from initial to full audibility, and (c) the optimum recognition probability at full audibility. Phoneme scoring makes it possible to obtain PI functions in a reasonably short time. Moreover, phoneme recognition scores provide a more valid estimate of auditory resolution than do whole-word scores. Generation of complete PI functions can have value in research contexts by providing information on the effects of intentional or unintentional changes to the distribution of useful speech information Whole CVC words in isolation Words in relatively easy sentences 80 60 40 20 0 0 20 40 60 80 100 Fig. 10. Estimated word recognition in isolation and in sentence context, as functions of phoneme recognition in CVC words. 488 BOOTHROYD / EAR & HEARING, VOL. 29, NO. 4, 479 –491 across the amplitude domain. It can also have value in clinical contexts by providing information over and above estimates of threshold and optimum performance. The PI function is an easily available, potentially valuable, but largely neglected resource for both hearing research and clinical audiology. ACKNOWLEDGMENTS The contributions of Balaji Oruganti, Bethany Mulhearn, Nina Guerrero, Nancy Plant, Kathryn Pegan, Noemi Haro, Eddy Yeung, and Gary Chant to the work underlying this article are gratefully acknowledged. This work was supported, in part, by NIDRR RERC grant No. H1343E98 to Gallaudet University and by NIH grant No. DC06238 to the House Ear Institute. The CASPA software was developed by the author to facilitate the presentation and scoring of short isophonemic word lists for the generation of PerformanceIntensity functions. Interested clinicians and researchers may obtain a copy of this software, for a fee, by contacting the author. Based on the Raymond Carhart Memorial Lecture to the Annual meeting of the American Auditory Society, 2000. Address for correspondence: Arthur Boothroyd. 2550 Brant Street, San Diego, CA 92101. E-mail: aboothroyd@ cox.net. Received June 26, 2007; accepted January 14, 2008. Derivation of a Model of the PI Function This derivation begins with AI theory. The AI is a transform of recognition probability and is given by: (A1) where ai is the articulation index and p is the recognition probability in percentage. The motivation behind development of the AI was to bypass the tedious and expensive process of as- Fig. A1. Showing the identity of the AI-based and exponential functions and the fact that cubing either function provides a better fit to the experimental data. ai ⫽ (x ⫺ t)/r (A2) where ai is the articulation index when the speech level is x, x is the speech level in dB, t is the threshold of initial audibility in dB, and r is the range from initial to full audibility in dB with the restriction that 0 ⬍ ⫽ ai ⬍ ⫽ 1 APPENDIX A ai ⫽ ⫺log(1 ⫺ p/100) sessing early telephone systems with actual listeners (Fletcher, 1953). A principal virtue of the AI transform is that it is additive—meaning that if two independent sources of information have articulation indices of AI1 and AI2, the AI for the combination is simply the sum of the two. It was found that good prediction of behavioral outcomes was obtained if it was assumed that the useful speech information within a given band of frequencies was distributed uniformly over a limited range of amplitudes (originally 30 dB). For the moment, we will consider the band of frequencies to be very wide— covering the whole frequency range over which useful information is available. Under these assumptions, the cumulative AI rises linearly from 0 to 1 as the speech signal rises from initial audibility to full audibility. Thus Equation (A3) converts AI into recognition probability by reversing Eq. (A1) and combining with Eq. (A2): p ⫽ 100 * (1 ⫺ E((x⫺t)/r)) (A3) where p is the recognition probability in percentage, E is the residual error probability at full audibility. The broken line in Figure A1 shows a leastsquares fit of Eq. (A3) to the group-mean PI data of BOOTHROYD / EAR & HEARING, VOL. 29, NO. 4, 479 –491 Figure 3. The parameters for a least-squares fit to the data are: Residual error at full audibility ⫽ 0.9% Threshold of audibility ⫽ ⫺17 dB SNR Range from zero to full audibility ⫽ 34 dB The standard error of fit is 1.9 percentage points. The fit is improved if we cube the estimated recognition probability in Eq. (A3) before converting to percentage. Thus p ⫽ 100 * (1 ⫺ Fai)3 (A4) F ⫽ 1 ⫺ (1 ⫺ E)1/3 (A5) where The fit of Eq. (A4) to Pegan’s data is shown by the solid line in Figure A1. The parameters for best fit are now: F ⫽ 0.0027 (still giving residual error at full audibility of E ⫽ 0.9%) Threshold of audibility ⫽ ⫺21 dB SNR Range from zero to full audibility ⫽ 35 dB It will be seen from Figure A1 that the cubed AI function more closely resembles the typical PI function in that it predicts a linear portion between 20% and 60%. Moreover, the exponent of three can be justified as helping account for the influence of phonological and lexical redundancy on phoneme recognition—as in the j-factor of Boothroyd and Nittrouer (1988). It may also help deal with departures from the assumption that the AI rises linearly with increasing audibility. More importantly, however, the standard error of fit is reduced, here, from 1.9 to 0.77% pts. Unfortunately, the parameters of range and residual error in Eqs. (A4) and (A5) are not entirely independent. And if one wished to account for distortions of the signal or proficiency of the listeners, one would need to add a fourth parameter— equivalent to the k-factor of Boothroyd and Nittrouer (1988), or the proficiency factor of traditional AI theory. A small modification to Eq. (A4), however, can deal with these two problems. This modification involves replacing the variable F with e (the base of natural logarithms), adding a parameter representing recognition at full audibility, and introducing a couple of constants. The result is the following cubed exponential equation [Eq. (1) in the body of the text]: p ⫽ m/0.99 * (1 ⫺ e(⫺(x⫺t)/r 5.7))3 * (A6) where p is the phoneme recognition probability, m is the recognition probability at full audibility, x is the speech level in dB, e is the base of natural loga- 489 rithms (⬃2.72), t is the threshold of initial audibility in dB, and r is the amplitude range in dB from initial to full audibility. Note the constraint that 0 ⱖ (x ⫺ t)/r ⱕ 1. If (x ⫺ t)/r is not restricted to an upper limit of 1, the value of p will approach an asymptotic value of m/0.99. The constant (5.7) forces r to take the value at which recognition probability reaches 0.99 of this asymptote. Similarly, the constant (0.99) at the beginning of Eq. (5) forces m to take the value of the % recognition probability at full audibility. Thus the whole function is now defined by three parameters: the threshold of initial audibility in dB (t), the amplitude range between initial and full audibility in dB (r), and the percent phoneme recognition probability at full audibility (m). The least-squares fit of Eq. (A6) is shown in the Pegan data in Figure A1 but is indistinguishable from the fit to the cubed AI function. The fitting parameters are: m ⫽ 99% t ⫽ ⫺21 dB SNR r ⫽ 34 dB The standard error of fit is 0.77%pts.** Putting the limitation of 0 ⱕ ai ⱕ 1 into equation form can be accomplished with logarithmic addition. The equation is complicated but the result is simple—ai cannot fall below 0 or rise above 1: ai ⫽ 1 ⫺ log共1 ⫹ 10∧共共1 ⫺ log共1 ⫹ 10∧ ⫻ 共100 * 共x ⫺ t兲/r兲兲/100兲 * 100兲兲/100 (A7) Once the parameters of m, t, and r have been obtained, they can be used to estimate other quantities of possible interest. For example PRT ⫽ t ⫹ r * 0.275 (A8) where PRT is the amplitude at which the phoneme recognition score is 50% of the optimum value s ⫽ 234/r (A9) where s is the slope of the PI function between 20% and 60% of the maximum. o⫽t⫹r (A10) where o is the level at which the optimum recognition probability of m is first attained. **The two equations are, in fact, equivalent over most of their range. It can be shown that the amount by which AI is multiplied in Eq. (5) is 1/logF(e), which is ⫺5.9 (using the fitting data from Fig. 3). The shift from ⫺5.9 to ⫺5.7 ties the range to 0.99 of the asymptote of the exponential function and accounts for the 1 dB difference of range for the fits to Eqs. (A4) and (A6). 490 BOOTHROYD / EAR & HEARING, VOL. 29, NO. 4, 479 –491 This is a single-band model, but it can be expanded to account for frequency-dependence of speech amplitude, hearing aid gain, signal-to-noise ratio, or pure-tone threshold. APPENDIX B AB isophonemic word lists used in CASPA and to generate the behavioral data reported in this article. List 1 List 2 List 3 List 4 List 5 List 6 List 7 List 8 List 9 List 10 ship rug fan cheek haze dice both well jot move fish duck path cheese race hive bone wedge log tomb thug witch teak wrap vice jail hen shows food bomb fun will vat shape wreath hide guess comb choose job fib thatch sum heel wide rake goes shop vet June fill catch thumb heap wise rave got shown bed juice badge hutch kill thighs wave reap foam goose not shed bath hum dig five ways reach joke noose pot shell hush gas thin fake chime weave jet rob dope lose jug latch wick faith sign beep hem rod vote shoes List 11 math hip gun ride siege veil chose shoot web cough List 12 have wig buff mice teeth jays poach rule den shock List 13 kiss buzz hash thieve gate wife pole wretch dodge moon List 14 wish Dutch jam heath laze bike rove pet fog soon List 15 hug dish ban rage chief pies wet cove loose moth List 16 wage rag beach chef dime thick love zone hop suit List 17 jade cash thief set wine give rub hole chop zoom List 18 shave jazz theme fetch height win suck robe dog pool List 19 vase cab teach death nice fig rush hope lodge womb List 20 cave rash tease jell guide pin fuss home watch booth Note: Each list contains 1 instance of each of the same 30 phonemes. These are the 10 vowels and 20 consonants that occur most frequently in English CVC words. The lists are balanced only for phonemic content - not for word frequency or lexical neighborhood size. No word appears twice in the corpus of 200. REFERENCES ANSI. (1969). American national standard method for calculation of the articulation index (S3.5–1969). New York: American National Standards Institute. ANSI. (2002). Acoustical performance criteria, design requirements, and guidelines for classrooms (ANSI S12.6-2002). New York: American National Standards Institute. Boothroyd, A. (1968a). Developments in speech audiometry. Br J Audiol (Formerly Sound), 2, 3–10. Boothroyd, A. (1968b). Statistical theory of the speech discrimination score. J Acoust Soc Am, 43, 362–367. Boothroyd, A. (2004). Room acoustics and speech perception. Semin Hear, 25, 155–166. Boothroyd, A. (2006). CASPA 5.0. Software. San Diego: A Boothroyd. Boothroyd, A. & Nittrouer, S. (1988). Mathematical treatment of context effects in phoneme and word recognition. J Acoust Soc Am, 84, 101–114. Bradley, J. S. (1986). Predictors of speech intelligibility in rooms. J Acoust Soc Am, 80, 837– 845. Bradley, J. S., Sato, H., & Picard, M. (2003). On the importance of early reflections for speech in rooms. J Acoust Soc Am, 113, 3233–3244. Brandy, W. T. (2002). Speech audiometry. In J. Katz (Ed.), Handbook of clinical audiology (5 ed., pp. 96 –110). Baltimore: Lippincott Williams & Wilkins. Carhart, R. (1965). Problems in the measurement of speech discrimination. Arch Otolaryngol, 82, 253–260. Cox, R. M. & Moore, J. R. (1988). Composite speech spectrum for hearing aid gain prescriptions. J Speech Hear Res, 31, 102–107. Dillon, H. (2001). Compression systems in hearing aids. In Hearing aids (pp. 169). New York: Theime. Fletcher, H. (1953). Speech and hearing in communication. New York: Van Nostrand [edited by Jont Allen and republished in 1995 by the Acoustical Society of America. Woodbury, NY]. French, N. R. & Steinberg, J. C. (1947). Factors governing the intelligibility of speech sounds. J Acoust Soc Am, 19, 90 –119. Gelfand, S. (1998). Optimizing the reliability of speech recognition scores. J Speech Lang Hear Res, 41, 1088 –1102. Guthrie, L. A. (2007). Optimizing presentation level for speech recognition testing. Unpublished AuD research thesis. San Diego: San Diego State University. Haro, N. (2007). Development and evaluation of the Spanish computer-assisted speech perception assessment test (CASPA). Unpublished AuD research thesis. San Diego: San Diego State University. Kamm, C. A., Morgan, D. E., & Dirks, D. D. (1983). Accuracy of adaptive procedure estimates of PB-Max level. J Speech Hear Disord, 48, 202–209. Kryter, K. D. (1962a). Methods for the calculation and use of the articulation index. J Acoust Soc Am, 34, 1689 –1697. Kryter, K. D. (1962b). Validation of the articulation index. J Acoust Soc Am, 34, 1698 –1702. Mackersie, C. L., Boothroyd, A., & Minnear, D. (2001). Evaluation of the computer-assisted speech perception test (CASPA). J Am Acad Audiol, 12, 390 –396. Nabalek, A. K., Letowski, T. R., & Tucker, F. M. (1989). Reverberant overlap- and self-masking in consonant identification. J Acoust Soc Am, 86, 1259 –1265. BOOTHROYD / EAR & HEARING, VOL. 29, NO. 4, 479 –491 Olsen, W. O., Van Tasell, D. J., & Speaks, C. E. (1996). Phoneme and word recognition for words in isolation and in sentences. Ear Hear, 18, 175–188. Oruganti, B. (2000). The effects of high-frequency emphasis and amplitude compression on the short-term intensity range of speech. Unpublished Doctoral dissertation. New York: City University of New York. Pegan, K. (2007). Interactive effects of noise and reverberation on speech perception. Unpublished AuD research thesis. San Diego: San Diego State University. Peutz, V. (1997). Speech reception and information. In D. Davis & 491 C. Davis (Eds.), Sound system engineering (2 ed., pp. 639 – 644). Boston: Focal Press. Studebaker, G. A., Taylor, R., & Sherbecoe, R. L. (1994). The effect of noise spectrum on speech recognition performanceintensity functions. J Speech Hear Res, 37, 439 – 448. Thornton, A. R. & Raffin, M. J. M. (1978). Speech discrimination scores modeled as a binomial variable. J Speech Hear Res, 21, 507–518. Walker, J. (1975). A comparative study of two test procedures in speech audiometry. Unpublished Masters Thesis, MA: University of Massachusetts, Amherst.