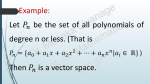

GX01 – Robotic Systems Engineering
Dan Stoyanov, George Dwyer, Krittin Patchrachai
2016

Vectors and Vector Spaces
• Introduction
• Vectors and Vector Spaces
• Affine Spaces
• Euclidean Spaces

Vectors
• The most fundamental element in linear algebra is a vector
• Vectors are special types of tuples which support scaling and addition operations
• We shall predominantly deal with coordinate vectors in this course, but vectors can be more complex, e.g. functions
• Vectors actually “live” in a vector space, but we can’t talk about this until we’ve looked a bit more at the properties of vectors

Vectors as Geometric Entities
• Vectors are geometric entities which encode relative displacement (usually from an origin)
• They do not encode an absolute position
• They are not special cases of a matrix
• They have their own algebra and rules of combination

Equivalency of Vectors
Equivalent vectors are parallel and of the same length
• Two vectors are the same iff (if and only if):
  – They have the same direction
  – They have the same length

Basic Operations on Vectors
• Vectors support addition and scaling:
  – Multiplication by a scalar
  – Addition of one vector to another

Scaling Vectors by a Positive Constant
• Length changed
• Direction unchanged

Scaling Vectors by a Negative Constant
• Length changed
• Direction reversed

Addition and Subtraction of Vectors
• Summing vectors “closes the triangle”
• This can be used to change direction and length

Other Properties of Vectors
• Commutativity
• Associativity
• Distributivity of addition over multiplication
• Distributivity of multiplication over addition

Vector Spaces
• A vector space has the following properties:
  – Addition and subtraction are defined, and the result is another vector
  – The set is closed under linear combinations: given vectors $\mathbf{x}$ and $\mathbf{y}$ in the set and scalars $a$ and $b$, the combination $a\mathbf{x} + b\mathbf{y}$ is also in the set
  – There is a zero vector $\mathbf{0}$ such that $\mathbf{x} + \mathbf{0} = \mathbf{x}$

Illustrating Closure and the Zero Vector

Examples of Vectors and Vector Spaces
Which of the following are vector spaces?
1. A tuple of n real numbers
2. The zero vector
3. The angle displayed on a compass

Answers
• All of the previous examples are vector spaces apart from the angle on a compass. The reason is that it usually “wraps around”, e.g. −180 degrees becomes +180 degrees

Yaw Angles Measured by 3 iPhones Over Time
Angle discontinuities caused by “wrap around”

Summary
• Vectors encode relative displacement, not absolute position
• Scaling a vector by a positive constant changes its length but not its direction; a negative constant also reverses the direction
• All vectors “live” in a vector space
• We can add scaled versions of vectors together
• The vector space is closed under linear combinations and possesses a zero vector
• We can analyse the structure of vector spaces by looking, oddly enough, at the problem of specifying the coordinates of a vector

Spanning Sets and Vector Spaces
• I am given a vector $\mathbf{x}$
• I am given a spanning set of vectors, $S = \{\mathbf{s}_1, \dots, \mathbf{s}_n\}$
• I form the vector $\mathbf{w}$ as a linear combination of S, $\mathbf{w} = \lambda_1 \mathbf{s}_1 + \dots + \lambda_n \mathbf{s}_n$

Coordinate Representation of a Vector
• I want to find the set of values $\lambda_1, \dots, \lambda_n$ such that $\mathbf{w} = \mathbf{x}$
• What properties must S obey if:
  – For any value of x at least one solution exists?
  – For any value of x the solution must be unique?
• It actually turns out that it’s easier to answer the second question first

Uniqueness of the Solution
• We want to pick a set S so that the values of $\lambda_1, \dots, \lambda_n$ are unique for each value of x
• Because everything is linear, it turns out that guaranteeing linear independence is sufficient

Linear Independence
• The spanning set $S = \{\mathbf{s}_1, \dots, \mathbf{s}_n\}$ is linearly independent if $\lambda_1 \mathbf{s}_1 + \dots + \lambda_n \mathbf{s}_n = \mathbf{0}$ only for the special case $\lambda_1 = \dots = \lambda_n = 0$
• In other words, we can’t write any member of the set as a linear combination of other members of the set

Dependent Spanning Set Example
• For example, suppose that S has three members, one of which is a linear combination of the other two, with real scalar constants a, b as the weights
• This set is not linearly independent and so will yield an ambiguous solution

Linearly Dependent Example
• To see this, compute w from the linear combination of the vectors of S and match it against a target vector
• Matching coefficients, we have three unknowns but just two equations

Linear Independence and Dimensionality
• Therefore, if our basis set is linearly dependent, the solution is not unique
• Conversely, the number of linearly independent vectors in a spanning set defines its dimension

Linear Independence in the Dimension of 2

Existence of a Solution
• The condition we need to satisfy is that x and w must lie in the same vector space
• Now, the members of S are vectors in V
• Because V is closed under linear combinations, it must always be the case that w lies in V

Linear Combinations and Vector Spaces

Existence of a Solution
• We can go a bit further, because we know that every linear combination of S must lie in V, so the span of S is contained in V
• We have shown that the span of S is contained in V
• Can’t we automatically say that the span of S is equal to V?
• No, because what we’ve shown so far is containment in one direction only

Arbitrary S Might Not “Fill the Space”
• Therefore, in this case the span of S is only part of V

Existence of a Solution
• Therefore, it turns out that a solution is guaranteed to exist only if the span of S is equal to V
• This means that we must have a “sufficient number” of vectors in S to point “in all the different directions” in V
• This means that the dimensions of both vector spaces have to be equal

Vector Subspaces
• Consider the set of linearly independent vectors
• Are these spanning sets dependent or independent, and what’s the dimension and basis of the subspace?

The Question…
• Recall the question: for any vector x and spanning set S, can we find a unique set of coefficients such that the linear combination of S is equal to x?

The Answer…
• For S a basis of V, we can always represent the vector uniquely and exactly
• For S a basis of a subspace of V, we can only represent some of the vectors exactly
• Expressing the “closest” approximation of the vector in the subspace is a kind of projection operation

Summary
• Vector spaces can be decomposed into a set of subspaces
• Each subspace is a vector space in its own right (closure; zero vector)
• The dimension of a subspace is the maximum number of linearly independent vectors which can be constructed within that subspace
• A spanning set is a set of vectors whose linear combinations comprise a subspace
• If the spanning set is linearly independent, it’s also known as a basis for that subspace
• The coordinate representation of a vector in a subspace is unique with respect to a basis for that subspace

Changing Basis
• In the last few slides we said we could write the coordinates of a vector uniquely given a basis set
• However, for a given subspace the choice of a basis is not unique
• For some classes of problems, we can make the problem significantly easier by changing the basis to reparameterise the problem

Example of Changing Basis
• One way to represent position on the Earth is to use Earth Centered Earth Fixed (ECEF) coordinates
• Locally at each point on the globe, however, it’s more convenient to use East-North-Up (ENU) coordinates, defined on the local tangent plane
• If we move to a new location, the ENU basis has to change in the ECEF frame

Example of an ENU Coordinate System

Changing Basis
• Suppose we would like to change our basis set from one basis to another, where both bases span the same vector space
• Since the subspaces are the same, each vector in the original basis can be written as a linear combination of the vectors from the new basis
• Clanking through the algebra, we can repeat this for all the other vectors in the original basis
• Now consider the representation of our vector in the original basis: substituting for the first coefficient, and then for all the other coefficients, gives its representation in the new basis

Summary of Changing Basis
• Sometimes changing a basis can make a problem easier
• We can carry this out if our original basis spans a subspace of our new vector space
• The mapping is unique, and corresponds to writing the old basis in terms of the new basis
• (It’s much neater to do it with matrix multiplication)

So What’s the Problem with Vector Spaces?
• We have talked about vector spaces
  – They encode displacement
  – There are rules for adding vectors together and scaling them
  – We can define subspaces, dimensions and sets of basis vectors
  – We can even change our basis
• However, vector spaces leave a lot out!
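
Before moving on, the coordinate and change-of-basis ideas from the preceding slides can be made concrete with a small sketch. It is restricted to two dimensions and plain Python, and the names `coordinates`, `combine` and `change_basis` are illustrative choices, not taken from the course material. The coefficients are found with Cramer's rule, which fails exactly when the two basis vectors are linearly dependent.

```python
from typing import Tuple

Vec2 = Tuple[float, float]
Basis2 = Tuple[Vec2, Vec2]


def coordinates(basis: Basis2, x: Vec2) -> Vec2:
    """Solve l1*s1 + l2*s2 = x for (l1, l2) using Cramer's rule."""
    (a, c), (b, d) = basis          # s1 = (a, c), s2 = (b, d)
    det = a * d - b * c             # zero when s1 and s2 are linearly dependent
    if abs(det) < 1e-12:
        raise ValueError("basis vectors are linearly dependent: no unique solution")
    l1 = (x[0] * d - b * x[1]) / det
    l2 = (a * x[1] - c * x[0]) / det
    return (l1, l2)


def combine(basis: Basis2, coeffs: Vec2) -> Vec2:
    """Form the linear combination l1*s1 + l2*s2."""
    s1, s2 = basis
    l1, l2 = coeffs
    return (l1 * s1[0] + l2 * s2[0], l1 * s1[1] + l2 * s2[1])


def change_basis(old_basis: Basis2, new_basis: Basis2, old_coeffs: Vec2) -> Vec2:
    """Re-express coordinates given in old_basis as coordinates in new_basis."""
    # Write each old basis vector as a combination of the new basis vectors.
    m1 = coordinates(new_basis, old_basis[0])
    m2 = coordinates(new_basis, old_basis[1])
    # Substitute into x = l1*s1 + l2*s2 and collect terms per new basis vector.
    l1, l2 = old_coeffs
    return (l1 * m1[0] + l2 * m2[0], l1 * m1[1] + l2 * m2[1])


if __name__ == "__main__":
    old = ((1.0, 0.0), (0.0, 1.0))     # standard basis
    new = ((1.0, 1.0), (1.0, -1.0))    # a different basis for the same plane
    print(change_basis(old, new, (3.0, 1.0)))  # (2.0, 1.0)
```

Both coordinate tuples describe the same vector: combining the new basis with `(2.0, 1.0)` gives back `(3.0, 1.0)`. In more than two dimensions the same steps are usually written as a matrix multiplication, as the summary slide notes.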

The Bits Which Are Missing
• There are no points
  – There is no way to represent actual geometric objects
• There is no formal definition of what things like angles or lengths mean
  – Therefore, we can’t consider issues like orthogonality
• We haven’t discussed the idea of an origin
  – Everything floats in “free space”
• Affine spaces start to redress this by throwing points into the mix

More Missing Bits
• We still don’t have a notion of distances
  – Just ratios on lines between points
• We still don’t have a notion of angles
• We still don’t have an absolute origin
• These are all introduced in Euclidean geometry

Euclidean Spaces
• Introduction
• Tuples
• Vectors and Vector Spaces
• Euclidean Spaces

Euclidean Spaces
• Euclidean spaces extend affine spaces by adding notions of length and angle
• The Euclidean structure is determined by the forms of the equations used to calculate these quantities
• We are going to just “give” these without proof
• However, we can motivate the problem by considering the problem of orthogonally projecting one vector onto another vector
• First, though, we need some additional vector notation

Some More Vector Notation
• Since we are going to define lengths and directions, we can now decompose a vector into
  – A scalar which specifies its length (positive)
  – A unit vector (length 1) which defines its direction

Projecting Vectors onto Vectors
• Consider two vectors that occupy the same affine space
• What’s the orthogonal projection of one onto the other?

The Answer…
Projected vector

Computing the Answer
• We need to compute both the direction and length of the projection vector
• The direction of the vector must be parallel to the vector being projected onto
• Therefore, we are going to define the orthogonal projection as a scale factor multiplied by a unit vector parallel to the “right direction”

General Case of the Scalar Product
• If we now let both of our vectors $\mathbf{a}$ and $\mathbf{b}$ be non-normalised, then $\mathbf{a} \cdot \mathbf{b} = \|\mathbf{a}\| \, \|\mathbf{b}\| \cos\theta$, where $\theta$ is the angle between them
• The scalar product is a special case of an inner product

Lengths, Angles and Distances
• Lengths and angles are (circularly) defined as $\|\mathbf{a}\| = \sqrt{\mathbf{a} \cdot \mathbf{a}}$ and $\cos\theta = \dfrac{\mathbf{a} \cdot \mathbf{b}}{\|\mathbf{a}\| \, \|\mathbf{b}\|}$
• The distance function (or metric) between two points is the length of the vector between them

Properties of Scalar Products
• Bilinearity
• Positive definiteness
• Commutativity
• Distributivity of the dot product over vector addition
• Distributivity of vector addition over the dot product

Scalar Product and Projection Mini-Quiz
• What’s the value of

Summary
• The Euclidean structure is defined by the scalar product
• The scalar product is used to compute the orthogonal projection of one vector onto another vector
• The scalar product works in any number of dimensions
• It has many useful properties including bilinearity
• However, it only defines a one dimensional quantity (length)
• Vector products generalise this
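
The scalar product, length, angle, distance and orthogonal projection described in the slides above can be sketched in a few lines of plain Python. This is a minimal illustration under the assumption that vectors are given as equal-length sequences of coordinates in an orthonormal basis; the function names are illustrative and do not come from the course material.

```python
import math
from typing import List, Sequence


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Scalar product of two coordinate vectors of the same dimension."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    return sum(ai * bi for ai, bi in zip(a, b))


def length(a: Sequence[float]) -> float:
    """Length of a vector, defined through the scalar product."""
    return math.sqrt(dot(a, a))


def angle(a: Sequence[float], b: Sequence[float]) -> float:
    """Angle in radians between two non-zero vectors."""
    cos_theta = dot(a, b) / (length(a) * length(b))
    # Clamp to [-1, 1] to guard against floating-point rounding.
    return math.acos(max(-1.0, min(1.0, cos_theta)))


def distance(p: Sequence[float], q: Sequence[float]) -> float:
    """Distance between two points: the length of the vector between them."""
    return length([pi - qi for pi, qi in zip(p, q)])


def project(a: Sequence[float], b: Sequence[float]) -> List[float]:
    """Orthogonal projection of a onto b: scale factor times unit vector along b."""
    b_len = length(b)
    if b_len == 0.0:
        raise ValueError("cannot project onto the zero vector")
    unit_b = [bi / b_len for bi in b]   # direction of the projection
    scale = dot(a, unit_b)              # signed length of the projection
    return [scale * ui for ui in unit_b]


if __name__ == "__main__":
    a, b = [3.0, 4.0], [2.0, 0.0]
    print(length(a))      # 5.0
    print(project(a, b))  # [3.0, 0.0]
```

The residual `a - project(a, b)` is orthogonal to `b`, which is what makes the projection orthogonal; the same functions work unchanged in any number of dimensions.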