Answers to Homework set #4

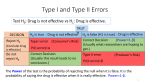

Ch. 21 #11

a) One-tailed, because I would like to show that the population proportion is too low, rather than either too low or too high.

b) The probability of rejecting a true null. The null hypothesis is that the proportion is .27 and the alternative is that it is lower (smaller) than .27, so this is the probability of deciding, based on unusual (misleading) data, that the proportion of minorities is too low (i.e. below .27) when it really is .27.

c) The probability of accepting a false null. This is the probability of deciding that .27 (or 27%) are minorities when something else is true, such as only 22% or 18% being minorities; there are many possible beta errors.

d) Power is 1 - beta, so it is doing the right thing (the opposite of a beta error). It is the probability of rejecting a false null, that is, the probability of stating that fewer than .27 (or 27%) are minorities when some smaller proportion is the truth in the population.

e) When the p-value is less than alpha we reject the null hypothesis, so if alpha goes from .01 to .05 it is easier to reject. If you reject more often you are more likely to reject a false null hypothesis, which is good, so power must increase.

f) Power is a good thing and more data gives more of it, so using only 37 rather than 87 employees should lower the power of the test.

g) With n = 37, p-hat = .19, and 2 as the multiplier for 95%: .19 ± 2*sqrt((.19*.81)/37) = .19 ± .13 = (.06, .32). To test, use a z-score of (.19 - .27)/sqrt((.27*.73)/37) = -1.10. Using the normal table on A-51 we get a p-value of .1357. Since this is larger than alpha = .05 we do not reject the null hypothesis; the data are consistent with p = .27.

Ch. 23 #9 (98% confidence)

a) The original distribution looks normal by the histogram; independence holds since n/N < .10.

b) 98.28 ± t(df=51)*(.68/sqrt(52)) = 98.28 ± 2.403*.094 = 98.28 ± .226 = (98.05, 98.51), approximately.
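The arithmetic in Ch. 21 #11 g) and Ch. 23 #9 b) can be double-checked with a short Python sketch. It only assumes the numbers quoted in those answers; the multiplier 2.403 is taken from the t table as in the answer rather than computed, and all variable names are illustrative.

```python
import math
from statistics import NormalDist

# Ch. 21 #11 g): one-proportion interval and one-tailed z-test
n, p_hat, p0 = 37, 0.19, 0.27
se_hat = math.sqrt(p_hat * (1 - p_hat) / n)    # standard error using p-hat
moe = 2 * se_hat                               # "2" is the rough 95% multiplier
ci_prop = (p_hat - moe, p_hat + moe)

se_null = math.sqrt(p0 * (1 - p0) / n)         # standard error under the null
z = (p_hat - p0) / se_null
p_value = NormalDist().cdf(z)                  # lower-tail area, since H_A: p < .27

# Ch. 23 #9 b): 98% t-interval for the mean body temperature
mean, s, m = 98.28, 0.68, 52
t_star = 2.403                                 # table value quoted in the answer
se_mean = s / math.sqrt(m)
ci_mean = (mean - t_star * se_mean, mean + t_star * se_mean)

print("proportion interval:", ci_prop)
print("z-score:", z, "p-value:", p_value)
print("mean interval:", ci_mean)
```

Small differences from the hand calculation come from rounding the standard errors before multiplying.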
c) We are 98% sure that the mean body temperature for all folks is within that interval.

d) If we picked 52 people again and again, say 100 times, we would expect about 98 of the resulting intervals to have the true mean inside them.

e) A test of "the mean is 98.6" against "it is not 98.6" produces a t-score of (98.28 - 98.6)/.094 = -3.4. Look at df = 52 - 1 = 51 (the closest row in A-53 is 50): 3.4 is larger than 2.678, the entry under two-tail .01 at the top of A-53, so the p-value is less than .01. Since p < .01 < .05 (the alpha I suggested), we reject the idea that the mean is 98.6. So this data makes us conclude that the normal temperature is not 98.6.

Ch. 23 #11

a) The less sure we need to be, the narrower we can make the interval. 90% is less sure, so I can build a narrower interval: 98.28 ± 1.676*.094 = (98.1, 98.4).

b) The more-sure interval makes it more likely that we have identified the true (all people’s) mean body temperature, but at the sacrifice of having to allow more ‘wiggle room’ about where that value really lies.

c) More data means more information, meaning we can be more sure and/or narrow our interval. A new 98% interval made from 500 people will be narrower than the last one, which was based on only 52 people.

d) Using the approximate formula I derived in class, (2s/MOE)*(2s/MOE), we get ((2*.68)/.1)*((2*.68)/.1) = 184.96, which you should round up to 185 people. De Veaux uses a more exact formula to get 252.

Ch. 24 #7

From the authors’ website (via our class website or directly at http://media.pearsoncmg.com/aw/aw_deveaux_introstats_1/data/data_index.html) I downloaded the cereal data and used DDXL.
Highlighting the 2 columns and choosing ‘confidence intervals’ and then 2-sample, with 95% confidence: the sample mean for the children’s cereals is 46.85 and for the adult cereals is 10.367. The stdev of the difference between 46.85 and 10.367 (that is, of 36.483) comes from sqrt(((7.67*7.67)/27) + ((6.6*6.6)/18)) = sqrt(2.18 + 2.42) = 2.14 (the 7.67 and 6.6 were gotten by using chart/histogram in DDXL). The df = 40 is shown by DDXL at the left and comes from the formula in the footnote on p. 454. So t with df = 40 and confidence of 95% is 2.021 (check this in A-53), and 36.483 ± 2.021*2.14 = (32.15, 40.82). Although not shown, the histogram for the adult cereals looks somewhat triangular rather than bell-shaped, which may pose a concern about the truth of the assumptions.

To test the null hypothesis that there is no difference in the mean sugar content of children’s and adult cereals against the alternative that the mean for the children’s is larger (greater, higher), we need a t-score = (36.483 - 0)/2.14 = 17.05. Looking at A-53 with df = 40 we see that the closest number is 2.704, so the p-value is less than .01. Since p < .01 < .05 = alpha, we definitely reject the idea that the mean sugar contents are the same and decide that the children’s mean content is higher than the adults’.
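The two-sample numbers above can be reproduced with a short Python sketch. It uses only the summary statistics quoted in the answer and pairs each standard deviation with the sample size it appears next to in the formula. The df line assumes that the footnote formula on p. 454 is the usual Welch-Satterthwaite approximation, which the answer does not state. The multiplier 2.021 is the table value quoted in the answer, and the variable names are illustrative.

```python
import math

# Summary statistics as quoted in the answer
mean_child, mean_adult = 46.85, 10.367
s1, n1 = 7.67, 27     # first stdev / sample size pair in the formula
s2, n2 = 6.6, 18      # second stdev / sample size pair in the formula

diff = mean_child - mean_adult
v1, v2 = s1**2 / n1, s2**2 / n2
se = math.sqrt(v1 + v2)                        # standard error of the difference

# Assumed Welch-Satterthwaite degrees of freedom
df = (v1 + v2) ** 2 / (v1**2 / (n1 - 1) + v2**2 / (n2 - 1))

t_star = 2.021                                 # table value for df = 40, 95%
ci = (diff - t_star * se, diff + t_star * se)  # 95% interval for the difference
t_score = (diff - 0) / se                      # test statistic for H0: no difference

print("difference:", diff, "standard error:", se, "df:", df)
print("95% interval:", ci)
print("t-score:", t_score)
```

Carrying the unrounded standard error gives a t-score slightly below the hand value of 17.05, which used 2.14; the conclusion is unchanged.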