“Uncertainty comes in many forms, and in real-world problems it is usually impossible to avoid. Looking towards the design of intelligent systems, one of the common problems is how to handle uncertain, imprecise, and incomplete information, so that the resulting system comes closer to the level of human intelligence.”

Handling Uncertainties - using Probability Theory

to Possibility Theory

By Ashutosh Dwivedi, D Mishra and Prem k Kalra

1. Introduction

Uncertainty comes in many forms and in the

real world problems it is usually impossible to

avoid uncertainties. At the empirical level,

uncertainties are associated with the

measurement errors and resolution limits of

measuring instruments. At the cognitive level, it

emerges from the vagueness and ambiguities

in natural language. At the social level, it is

created and maintained by people for

different purposes (e.g. privacy, secrecy etc.).

The processing of uncertain information has been an active research topic since at least the 18th century. Up to the middle of the 20th century, most theoretical

advances were devoted to the theory of

probabilities through the works of eminent

mathematicians like J. Bernoulli (1713), A. De

Moivre (1718), T. Bayes (1763), P. Laplace

(1774), K. Gauss (1823), S. Poisson (1837), E.

Borel (1909), R. Fisher (1930), A.

Kolmogorov (1933), B. De Finetti (1958), L.

Savage (1967), T. Fine (1973), E. Jaynes

(1995), to name just a few of them.

The second half of the 20th century was very prolific in the development of new, original theories dealing with uncertain and imprecise information. Three major theories are now available as alternatives to the theory of probability for automatic plausible reasoning in expert systems: the fuzzy set theory developed by L. Zadeh in the sixties (1965), Shafer's theory of evidence in the seventies (1976), and the theory of possibilities by D. Dubois and H. Prade in the eighties (1985).

2. Sources of Uncertainties and Inexact

Information

The sources of uncertainty can be given as

follows:

2.1. Incomplete Domain Knowledge

The knowledge about the domain in which

work is done may be imprecise or incomplete.

Incomplete domain knowledge compels us to use some form of rules or heuristics, which may not always give appropriate or correct results.

2.2. Noisy and Conflicting Data

Data collected for some purpose will not always be accurate and complete. Evidence from different sources may be conflicting or noisy. For example, the statement “Ram is tall” is vague and is itself a source of uncertainty. Suppose a number x represents the height of Ram in feet, with $x \in [0, 8]$. It must be decided whether this number is large enough to call the person tall. One way to answer this question is to say that if $x \ge d$ (where d is some threshold or limit), then it is large enough to say that the person is tall; otherwise he is not. A second way is to mark either agree or disagree, or to place no mark, based on the subjective knowledge of the source; this gives rise to uncertainty about whether x is large. Another way is to create a scale of measurement. If we are at one end of the scale we completely disagree, and if we are at the other extreme end we completely agree. If we are in between, we have some intermediate value associated with our measurement. Thus there are no sharp boundaries for the result.
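The difference between the threshold test and the graded scale described above can be sketched in a few lines of Python. The threshold of 6 ft and the linear ramp between 5 ft and 6.5 ft are illustrative assumptions, not values given in the text.

```python
def is_tall_crisp(height_ft: float, threshold_ft: float = 6.0) -> bool:
    """Crisp rule: tall exactly when x >= d (d = threshold_ft, assumed)."""
    return height_ft >= threshold_ft


def tall_degree(height_ft: float, low_ft: float = 5.0, high_ft: float = 6.5) -> float:
    """Graded scale: 0 = completely disagree, 1 = completely agree.

    The linear ramp between low_ft and high_ft is an assumed shape.
    """
    if height_ft <= low_ft:
        return 0.0
    if height_ft >= high_ft:
        return 1.0
    return (height_ft - low_ft) / (high_ft - low_ft)


# A height just under the threshold is "not tall" under the crisp rule,
# yet receives a high degree of agreement on the graded scale.
crisp = is_tall_crisp(5.9)
graded = tall_degree(5.9)
```

The crisp rule switches abruptly at d, while the graded scale has no sharp boundary, which is the point made in the paragraph above.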

2.3 Incomplete Information

It occurs when a factor in a decision or model

is not known. Sometimes it can be resolved

(through research, enquiry etc.) but not always.

Some factors are necessarily uncertain because

they are indeterminate, e.g. all future

developments. Sometimes they may be

determinate but not practically measurable.

Factors or parameters also change with time, which produces a kind of incomplete information that further leads to uncertainty.

2.4 Selection among the alternatives

When several alternatives are available, there may be disagreement in choosing among them. This also leads to uncertainty.

2.5 Linguistic Imprecision

In the real world people share their thoughts by speaking, and they often use imprecise terms to express their own subjective knowledge. When such imprecise terms are interpreted by others who are not familiar with their meaning, or when the context changes, uncertainty may result. There are mainly two types of uncertainty, as proposed by Regan (2002). The first is called Epistemic Uncertainty (indeterminate facts) and the other is Linguistic Uncertainty (indeterminacy in language).

(A) Epistemic Uncertainty

The following factors can be considered the causes of such uncertainty.

(i) Systematic error

It is the result of bias or miscalibration in an experiment.

(ii) Measurement error

It is imprecision or inaccuracy in the measurement procedure itself.

(iii) Natural Variation

This is due to the unpredictable changes that occur in the surroundings.

(iv) Inherent Randomness

This is due to the factors contributing to (i), (ii) or (iii), or to a combination of them.

(v) Imperfect Data

The data or information used in the decision-making procedure may be corrupt and imperfect, which further leads to wrong decisions.

(vi) Subjective Judgment

It arises from the interpretation of data by a person.

(B) Linguistic Uncertainty

(i) Vagueness

(ii) Ambiguity

(iii) Context Dependency

(iv) Nonspecificity

Human reasoning often involves inexact information. The sources and the nature of inexact information may differ between problem domains. For example, the possible logical sources of inexact information in the medical domain are:

• Lack of adequate data

• Inconsistency of data

• Inherent human fuzzy concept

• Matching of similar situations

• Different opinions

• Ignorance

• Lack of available theory to describe a particular situation

For example, at the start of a consultation session, a doctor asks the patient and the person accompanying him/her about the condition of the patient. When the patient describes his/her situation, the information is inexact in respects such as the source of the disease, the period of suffering or the symptoms of the disease, and the doctor has to deal with this kind of incomplete, inexact information. Thus the proper management of inexact information is necessary for decision making.

3. Methods to Handle Uncertainties

Different methods for handling uncertainties

are as follows:

1. Probability Theory

2. Certainty Factor

3. Dempster-Shafer Theory of Evidence

4. Possibility Theory

3.1 Probability Theory

Probability has been discussed for a long time.

Indeed, the arguments go back over two or

three centuries. There are three schools of

thought, each leading to approximately similar

mathematical methods.

Three views of probability:

• Frequentist

• Mathematical

• Bayesian (knowledge-based)

One school of thought treats the word

"Probability" as a synonym for "frequency" or

"fraction" of events. If we are trying to

determine the fraction of defects in a lot (an

enumerative problem), we imagine that the lot before us has been drawn at random from a "super lot", that is, a lot of lots. Then we

sample it and based on the sample, attempt to

say something useful about the entire lot and,

by inference, the characteristics of the "super

lot".

Other people like the "long run frequency"

interpretation. The idea is that if we were to

repeat what we are doing, over and over again,

if only in our head, we can imagine that the

fraction of defects (or whatever we are finding)

is in some way, the "probability".

There are the "equal a-priori" thinkers, who,

like Laplace, considered that every problem

could be considered broken into cases, which

had "equal a-priori probability". Tossing of

dice or dealing of cards are some examples.

Then there are the "subjectivists" who are

Bayesians, but they start from a different basis.

They say that the probability measures strength

of belief. To them a probability assignment

represents a logical combination of what we

believe and what the data tell us to believe. As a result, they end up using Bayes' equation; except for the way they get started on a problem, the math is similar. Again, however, there are important differences in the way they would formulate the problem and in how they take into account facts, what is known and what is unknown. This debate continues within the Bayesian community.

Probability represents a unique encoding of

incomplete information. The essential task of

probability theory is to provide methods for

translating incomplete information into this

code. The code is unique because it provides a

method, which satisfies the following set of

properties for any system:

(a) If a problem can be solved in more than one way, all ways must lead to the same answer.

(b) The question posed and the way the answer is found must be totally transparent. There must be no "leaps of faith" required to understand how the answer followed from the given information.

(c) The methods of solution must not be "ad hoc". They must be general and usable for any problem, not just a limited class of problems. Moreover, the applications must be totally honest. For example, it is not proper for someone to present incomplete information, develop an answer, and then prove that the answer is incorrect because in the light of additional information a different answer is obtained.

(d) The process should not introduce information that is not present in the original statement of the problem.

3.1.1. Basic terminologies used in Probability Theory and Uncertainty handling using probability theory (through example):

(a) The Axioms of Probability

The language system of probability theory, like most other systems, contains a set of axioms (statements accepted as true without proof) that are used to constrain the probabilities assigned to events. The three axioms of probability are as follows:

1. All values of probabilities are between zero and one, i.e. $\forall A\,[0 \le P(A) \le 1]$.

2. Events that are necessarily true have a probability of one, and those that are necessarily false have a probability of zero, i.e. $P(\text{True}) = 1$ and $P(\text{False}) = 0$.

3. The probability of a disjunction is given by: $\forall A, B\,[P(A \lor B) = P(A) + P(B) - P(A \land B)]$.

An important result of these axioms is the calculation of the probability of the negation of an event. If we know that $P(A) = 0.55$, then it follows from these axioms that $P(\neg A) = 1 - P(A) = 0.45$.
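As a small illustration of how the axioms are used in calculation, the following Python sketch computes the negation from the text and a disjunction. The function names are hypothetical, and the values passed to `disjunction` are made up for illustration; exact fractions are used to avoid rounding noise.

```python
from fractions import Fraction


def complement(p_a):
    """P(not A) = 1 - P(A), a consequence of the three axioms."""
    return 1 - p_a


def disjunction(p_a, p_b, p_a_and_b):
    """Axiom 3: P(A or B) = P(A) + P(B) - P(A and B)."""
    return p_a + p_b - p_a_and_b


# The value from the text: P(A) = 0.55 gives P(not A) = 0.45.
p_not_a = complement(Fraction(55, 100))

# Illustrative (assumed) values: P(A) = 1/2, P(B) = 1/2, P(A and B) = 1/4.
p_a_or_b = disjunction(Fraction(1, 2), Fraction(1, 2), Fraction(1, 4))
```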

(b) The Joint Probability Distribution

The joint probability distribution is a function that specifies the probability of a certain state of the domain given the states of each variable in the domain. Suppose that our domain consists of three random variables or atomic events $\{x_1, x_2, x_3\}$. Then an example of the joint probability distribution of this domain, where $x_1 = 1$, $x_2 = 0$ and $x_3 = 1$, would be $P(x_1, x_2, x_3)$ = some value depending upon the values of the different probabilities of $x_1$, $x_2$ and $x_3$. The joint probability distribution will be one of the many distributions that can be calculated using a probabilistic reasoning system.

(c) Conditional Probability and Bayes'

Rule

Suppose a rational agent begins to perceive data from its world and stores this data as evidence. This evidence is used to calculate a posterior or conditional probability, which will be more accurate than the probability of an atomic event without this evidence (known as a prior or unconditional probability).

Example:

-The probability that a car can start is 0.96 .

-The probability that a car can move is 0.94.

-The probability that a car both starts and

moves is 0.91.

Now suppose that the agent perceives

information that the car starts. The agent can

now calculate a more accurate probability that

the car moves given that the car started.

What is the probability that the car moves given that the car starts?

In order to calculate the example above, we

need a mathematical formula to relate the

conditional probability to the probability of

the given atomic event, and the probability of

the conjunction of the two events occurring.

Let us define:

$P(A \mid B)$ = probability of A happening, given that B has already happened.

$P(A \cap B)$ = probability of A and B happening at the same time.

$P(B)$ = probability of B happening alone.

So the relation is

$$P(A \mid B) = \frac{P(A \cap B)}{P(B)} \qquad (1)$$

Now, using this equation for conditional probability to calculate our example:

$$P(\text{moves} \mid \text{starts}) = \frac{P(\text{moves} \cap \text{starts})}{P(\text{starts})} = \frac{0.91}{0.96} \approx 0.95$$

The agent would be able to update the prior probability of the car moving from 0.94 to 0.95 after perceiving the evidence that the car started. Algebraic manipulation of the equation for conditional probability yields the product rule:

$$P(A \cap B) = P(A \mid B)\,P(B)$$

and, likewise,

$$P(B \cap A) = P(B \mid A)\,P(A)$$

Using the equations for the product rule above, and since $P(A \cap B) = P(B \cap A)$, we can now derive Bayes' Rule as:

$$P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)} \qquad (2)$$

Bayes' Rule is of great importance in practice. Using the example above again, let us add the knowledge that the probability that the car moves given that the car starts is 0.95. We can now find the probability that the car starts given that the car moves:

$$P(\text{starts} \mid \text{moves}) = \frac{P(\text{moves} \mid \text{starts})\,P(\text{starts})}{P(\text{moves})} = \frac{0.95 \times 0.96}{0.94} \approx 0.97$$
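The car example can be checked numerically. The sketch below, with hypothetical function names, applies the conditional-probability relation and Bayes' Rule to the three probabilities given in the example.

```python
def conditional(p_a_and_b: float, p_b: float) -> float:
    """P(A | B) = P(A and B) / P(B); undefined when P(B) = 0."""
    if p_b == 0:
        raise ValueError("P(B) must be non-zero")
    return p_a_and_b / p_b


def bayes(p_b_given_a: float, p_a: float, p_b: float) -> float:
    """Bayes' Rule: P(A | B) = P(B | A) * P(A) / P(B)."""
    if p_b == 0:
        raise ValueError("P(B) must be non-zero")
    return p_b_given_a * p_a / p_b


# Values from the example in the text.
p_starts, p_moves, p_both = 0.96, 0.94, 0.91

p_moves_given_starts = conditional(p_both, p_starts)
p_starts_given_moves = bayes(p_moves_given_starts, p_starts, p_moves)

print(round(p_moves_given_starts, 2), round(p_starts_given_moves, 2))
```

Note that Bayes' Rule here gives the same value as computing P(starts and moves) / P(moves) directly, as the derivation from the product rule implies.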

(d) Conditional Independence

When the result of one atomic event, A, does not affect the result of another atomic event, B, the two atomic events are said to be independent of each other, and this helps to resolve the uncertainty. This relationship has the following mathematical property:

$$P(A, B) = P(A)\,P(B)$$

Another mathematical implication is that

$$P(A \mid B) = P(A)$$

Independence can be extended to explain irrelevant data in conditional relationships. In the previous example, assume that we want to know the probability of the car starting. We are given information that the car's spark plugs fire and that the battery is charged, so we want $P(\text{starts} \mid \text{sparkplugs} \cap \text{battery})$. Given that the spark plugs fire, the information that the battery is charged is therefore irrelevant to finding the probability that the car starts:

$$P(\text{starts} \mid \text{sparkplugs} \cap \text{battery}) = P(\text{starts} \mid \text{sparkplugs})$$

Advantages and Problems with Probabilistic Method:

Advantages

(1) Formal foundation

(2) Reflection of reality (a posteriori)

(3)Well-defined semantics for decision

making

Problems

(1) This may be inappropriate.

• The future is not always similar to the past.

(2) Complex data handling

• Requires large amounts of probability data.

• Non-sufficient sample sizes.

(3) Inexact or incorrect

•Especially for subjective probabilities.

•Subjective evidence may not be reliable.

(4) Ignorance

•Probabilities must be assigned even if no

information is available.

•Assigns an equal amount of probability to all

such items.

•Independence of evidences assumption often

not valid.

(5) Non-local reasoning

•Requires the consideration of all available

evidence, not only from the rules currently

under consideration.

•High computational overhead.

(6) No compositionality

•Complex statements with conditional

dependencies cannot be decomposed into

independent parts.

•Relationship between hypothesis and

evidence is reduced to a number.

(7) Always requires prior probabilities, which are very hard to find out a priori.

3.2. Certainty Factor

A certainty factor is used to express how accurate, truthful or reliable you judge a predicate to be. The issue is how to combine various judgments. It is neither a probability nor a truth value but a number that reflects the overall belief in a hypothesis given the available information. It may apply both to facts and to rules, or rather to the conclusion(s) of rules. Logic and rules alone provide all-or-nothing answers.

Decisions are based on evidence, and the interpretation of evidence is subjective. The idea here is to minimize the amount of computation required to update the probability as evidence is accumulated incrementally. The approach is to model a human expert's belief about events rather than the probabilities of events. Probability describes the frequency of occurrence of reproducible events, whereas a CF represents the amount of support for a particular fact based on some other facts. Probability does not account for positive and negative examples, but CF does.

CF (certainty factor) denotes the belief in a hypothesis H given that some pieces of evidence E are observed. Making no statement about the belief means that there is no evidence, i.e. a separation of belief, disbelief and ignorance is possible. It ranges from -1 to +1 (a single common scale), where

CF = -1: no belief at all in the hypothesis (H)

CF = +1: full belief in the hypothesis (H)

CF = 0: nothing is known about the hypothesis (H)

A certainty factor combines belief and disbelief into a single number based on some evidence. The strength of the belief or disbelief in H depends on the kind of observed evidence E:

$$CF(H, E) = \frac{MB(H, E) - MD(H, E)}{1 - \min[MB(H, E),\, MD(H, E)]}$$

MB is a measure of belief and MD is a measure of disbelief. Suppose $MB(E \to H) = 0.7$. In probability theory it would follow that $MB(E \to H') = 0.3$, but this is not so for certainty factors.

• A positive CF implies that the evidence supports the hypothesis, since MB > MD.

• A CF of 1 means that the evidence definitely supports the hypothesis.

• A CF of 0 means that either there is no evidence or the belief is cancelled out by the disbelief.

• A negative CF implies that the evidence favors the negation of the hypothesis, since MB < MD.

Interpretation of CF value:

| Uncertain term | CF |
|---|---|
| Definitely Not | -1 |
| Almost Not | -0.8 |
| Probably Not | -0.6 |
| May be not | -0.4 |
| Unknown | -0.2 or 0.0 |
| May be | 0.4 |
| Probably | 0.6 |
| Almost Certain | 0.8 |
| Definitely | 1 |

Certainty Factor Algebra:

There are rules to combine the CFs of several facts:

$$CF(x_1 \text{ AND } x_2) = \min(CF(x_1), CF(x_2))$$

$$CF(x_1 \text{ OR } x_2) = \max(CF(x_1), CF(x_2))$$

A rule may also have a certainty factor CF(rule):

$$CF(\text{action}) = CF(\text{condition}) \times CF(\text{rule})$$

Consequent of multiple rules: Suppose the following:

IF A is X THEN C is Z

IF B is Y THEN C is Z

What certainty should be attached to C having Z if both rules are fired?

$$CF(C) = \begin{cases} CF_{R1}(C) + CF_{R2}(C) - CF_{R1}(C)\,CF_{R2}(C) & \text{when } CF_{R1}(C) \text{ and } CF_{R2}(C) \text{ are both positive} \\ CF_{R1}(C) + CF_{R2}(C) + CF_{R1}(C)\,CF_{R2}(C) & \text{when } CF_{R1}(C) \text{ and } CF_{R2}(C) \text{ are both negative} \\ \dfrac{CF_{R1}(C) + CF_{R2}(C)}{1 - \min[|CF_{R1}(C)|,\, |CF_{R2}(C)|]} & \text{when } CF_{R1}(C) \text{ and } CF_{R2}(C) \text{ are of opposite sign} \end{cases}$$

where $CF_{R1}(C)$ is the confidence in the hypothesis established by Rule 1 and $CF_{R2}(C)$ is the confidence in the hypothesis established by Rule 2.
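The three-case rule for combining the certainty factors of two rules that reach the same conclusion can be sketched as follows. The function names are hypothetical, and treating a CF of exactly 0 under the same-sign branches is an assumption made here for completeness.

```python
def combine_cf(cf1: float, cf2: float) -> float:
    """Combine the CFs that two rules assign to the same conclusion C."""
    if cf1 >= 0 and cf2 >= 0:
        # Both positive: belief accumulates but never exceeds 1.
        return cf1 + cf2 - cf1 * cf2
    if cf1 <= 0 and cf2 <= 0:
        # Both negative: disbelief accumulates but never drops below -1.
        return cf1 + cf2 + cf1 * cf2
    # Opposite signs: the sum is rescaled by the weaker of the two.
    denominator = 1 - min(abs(cf1), abs(cf2))
    if denominator == 0:
        raise ValueError("CF = +1 combined with CF = -1 is undefined")
    return (cf1 + cf2) / denominator


def propagate(cf_condition: float, cf_rule: float) -> float:
    """CF(action) = CF(condition) * CF(rule)."""
    return cf_condition * cf_rule


both_positive = combine_cf(0.3, 0.4)
both_negative = combine_cf(-0.3, -0.4)
mixed = combine_cf(0.8, -0.4)
```

With two positive inputs the result lies above either input, with two negative inputs it lies below either input, and with mixed signs the result takes the sign of the stronger input.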

Difficulties with Certainty Factors

• Results may depend on the order in which evidence is considered in some cases. For example:

Suppose CF(A) = 0.4, CF(B1) = 0.3 and CF(B2) = 0.6.

If the rule is written as "if (A ∩ (B1 ∪ B2)) then C", it is evaluated as

CF(C) = min(0.4, max(0.3, 0.6)) = min(0.4, 0.6) = 0.4

If it is instead evaluated as two rules, "if (A ∩ B1) then C", which gives min(0.4, 0.3) = 0.3, and "if (A ∩ B2) then C", which gives min(0.4, 0.6) = 0.4, then the combined result is

CF(C) = 0.3 + 0.4 - 0.3 × 0.4 = 0.58

Thus, the results vary with the way in which the evidence is considered.

• Combination of non-independent evidence is unsatisfactory.

• New knowledge may require changes in the certainty factors of existing knowledge.

• Not suitable for long inference chains.
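The order-dependence example in the first difficulty above can be reproduced directly. This is a minimal sketch that uses min for conjunction, max for disjunction and the both-positive combination formula; the variable names are illustrative.

```python
cf_a, cf_b1, cf_b2 = 0.4, 0.3, 0.6

# One rule: if (A and (B1 or B2)) then C.
single_rule = min(cf_a, max(cf_b1, cf_b2))

# Two rules: if (A and B1) then C; if (A and B2) then C.
rule_1 = min(cf_a, cf_b1)
rule_2 = min(cf_a, cf_b2)
# Both results are positive, so they combine as x + y - x * y.
two_rules = rule_1 + rule_2 - rule_1 * rule_2

print(single_rule, round(two_rules, 2))
```

The two formulations are logically equivalent, yet they yield different certainty factors for C.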

Example

CF(Sheep is a dog) = 0.7;

CF(Sheep has wings) = -0.5

CF(Sheep is a dog and has wings) = min(0.7, -0.5) = -0.5

Suppose there is a rule: if x has wings then x is a bird. Let the CF of this rule be 0.8. Then

CF(Sheep is a bird) = -0.5 × 0.8 = -0.4

Comparison of Certainty Factors with Probability Theory

• Probability theory is used in areas where statistical data is available, whereas certainty factors are used in areas where experts' judgments are used, e.g., diagnosis of disease.

• Assumptions of conditional independence cannot be made.

• In probability theory, measures of belief satisfy MB(Evidence → Hypothesis) + MB(Evidence → Not hypothesis) = 1. For certainty factors this is not true.

• Sometimes when two events A and B are given then CF(A B) = CF(A B).

• Used in cases where probabilities are not known or are too difficult or expensive to obtain.

3.3. Dempster-Shafer Theory of Evidence

Dempster-Shafer Theory (DST) is a mathematical theory of evidence. The seminal work on the subject was done by Shafer [Shafer, 1976], as an expansion of previous work done by Dempster [Dempster, 1967]. In a finite

discrete space, Dempster-Shafer theory can be

interpreted as a generalization of probability

theory where probabilities are assigned to sets as

opposed to mutually exclusive singletons. In

traditional probability theory, evidence is

associated with only one possible event. In

DST, evidence can be associated with multiple

possible events, e.g., sets of events. DST allows

the direct representation of uncertainty. There are three important functions in Dempster-Shafer theory:

• the basic probability assignment function

(bpa or m),

• the Belief function (Bel), and the

• Plausibility function (Pl).

Basic probability assignment does not refer to probability in the classical sense. The bpa, represented by m, defines a mapping of the power set to the interval between 0 and 1:

$$m: P(X) \to [0, 1] \qquad (1)$$

The bpa of the null set is 0, i.e.

$$m(\emptyset) = 0 \qquad (2)$$

where $\emptyset$ is the null set.

The value of the bpa for a given set A (represented as m(A), where A is a set in the power set, $A \in P(X)$) expresses the proportion of all relevant and available evidence that supports the claim that a particular element of X (the universal set) belongs to the set A but to no particular subset of A.

$$\sum_{A \in P(X)} m(A) = 1 \qquad (3)$$

The value of m(A) pertains only to the set A and makes no additional claims about any subsets of A. Any further evidence on the subsets of A would be represented by another bpa, i.e. for $B \subset A$, m(B) would be the bpa for the subset B. The summation of the bpa's of all the subsets of the power set is 1. As such, the bpa cannot be equated with a classical probability in general.

From the basic probability assignment, the upper and lower bounds of an interval can be defined. This interval contains the precise probability of a set of interest (in the classical sense) and is bounded by two nonadditive continuous measures called Belief and Plausibility. The lower bound, Belief, for a set A is defined as the sum of all the basic probability assignments of the subsets (B) of the set of interest (A) ($B \subseteq A$).

$$Bel(A) = \sum_{B \subseteq A} m(B) \qquad (4)$$

The upper bound, Plausibility, is the sum of all the basic probability assignments of the sets (B) that intersect the set of interest (A) ($B \cap A \neq \emptyset$).

$$Pl(A) = \sum_{B \cap A \neq \emptyset} m(B) \qquad (5)$$

The two measures, Belief and Plausibility, are nonadditive. It is possible to obtain the basic probability assignment from the Belief measure with the following inverse function:

$$m(A) = \sum_{B \subseteq A} (-1)^{|A - B|}\, Bel(B) \qquad (6)$$

where $|A - B|$ is the difference of the cardinality of the two sets.

In addition to deriving these measures from the basic probability assignment (m), these two measures can be derived from each other. For example, Plausibility can be derived from Belief in the following way:

$$Pl(A) = 1 - Bel(\bar{A}) \quad \text{and} \quad Bel(A) = 1 - Pl(\bar{A}) \qquad (7)$$

where $\bar{A}$ is the classical complement of A. This definition of Plausibility in terms of Belief comes from the fact that all basic assignments must sum to 1. Because of this, given any one of these measures (m(A), Bel(A), Pl(A)) it is possible to derive the values of the other two measures. The precise probability of an event (in the classical sense) lies within the lower and upper bounds of Belief and Plausibility, respectively.

$$Bel(A) \le P(A) \le Pl(A) \qquad (10)$$

The probability is uniquely determined if Bel(A) = Pl(A). In this case, which corresponds to classical probability, all the probabilities P(A) are uniquely determined for all subsets A of the universal set X. Otherwise, Bel(A) and Pl(A) may be viewed as lower and upper bounds on probabilities, respectively, where the actual probability is contained in the interval described by the bounds.
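Belief and Plausibility can be computed from a bpa in a few lines. The sketch below represents focal sets as `frozenset` keys of a dictionary; the universe {a, b, c} and the masses are made-up illustrative values, and the function names are hypothetical.

```python
from fractions import Fraction


def belief(m, a):
    """Bel(A): sum of m(B) over all focal sets B that are subsets of A."""
    return sum(mass for b, mass in m.items() if b <= a)


def plausibility(m, a):
    """Pl(A): sum of m(B) over all focal sets B that intersect A."""
    return sum(mass for b, mass in m.items() if b & a)


# Assumed example bpa on X = {a, b, c}; the masses sum to 1.
X = frozenset("abc")
m = {
    frozenset("a"): Fraction(1, 2),
    frozenset("ab"): Fraction(3, 10),
    X: Fraction(1, 5),  # mass on the whole universe expresses ignorance
}

A = frozenset("ab")
bel_a = belief(m, A)        # 1/2 + 3/10 = 4/5
pl_a = plausibility(m, A)   # every focal set intersects {a, b}, so 1
```

For this bpa the interval [Bel(A), Pl(A)] is [4/5, 1], and the duality Pl(A) = 1 - Bel(complement of A) can be checked with `belief(m, X - A)`.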

Dempster’s rule, we find that m(brain tumor) =

Bel(brain tumor) = 1. Clearly, this rule of

combination yields a result that implies

complete support for a diagnosis that both

physicians considered to be very unlikely.

RULES FOR COMBINING EVIDENCES

Example

Combination rules are the special types of

aggregation methods for data obtained from

multiple sources. These multiple sources

provide different assessments for the same

frame of discernment and Dempster-Shafer

theory is based on the assumption that these

sources are independent.

Dempster’s rule combines multiple belief

functions through their basic probability

assignments (m). The combination (called the

joint m12) is calculated from the aggregation of

two bpa’s m1 and m2 in the following manner:

M12(A)= m1(B) m2(C) /( 1- K )

(11)

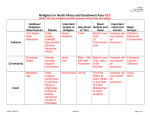

Suppose two experts are consulted regarding a

system failure. The failure could be caused by

Component A, Component B or Component

C. The distributions can be represented by the

following:

Expert 1:

m1(A) = 0.99 (failure due to Component A)

m1(B) = 0.01 (failure due to Component B)

Expert 2:

m2(B) = 0.01 (failure due to Component B)

m2(C) = 0.99 (failure due to Component C)

B ÇC = A

when A ¹ Æ

and m12(Æ ) = 0

Expert 1

; when A=

where K = m1(B) m2 (C)

Æ

(12)

A

B

C

Failure

cause

(13)

0.99

0.01

0.0

m1

Æ

K represents basic probability mass associated

with conflict. This is determined by the

summing of the products of the bpa’s of all

sets where the intersection is null. The

denominator in Dempster’s rule, 1-K, is a

normalization factor. This has the effect of

completely ignoring conflict and attributing any

probability mass associated with conflict to the

null set. The problem with conflicting evidence

and Dempster’s rule was originally pointed out

by Lotfi Zadeh in his review of Shafer’s book, A

Mathematical Theory of Evidence.

Zadeh had given a compelling example of

erroneous result found by using Dempster

Shafer theory. Suppose a patient is seen by two

physicians regarding the patient’s neurological

symptoms. The first doctor believes that the

patient has either meningitis with a probability

of 0.99 or a brain tumor, with a probability of

0.01. The second physician believes the patient

actually suffers from a concussion with a

probability of 0.99 but admits the possibility of

a brain tumor with a probability of 0.01. Using

the values to calculate the m(brain tumor) with

8

Failure

E cause m2

X

P

A

Æ

Æ

E

m1(A)m2(A) m1(B)m2(A) m1(C)m2(A)

R

A

0.0 0.99 x 0.0 0.01 x 0.0 0.0 x 0.0

T

= 0.0

= 0.0

= 0.0

2

Æ

B

Æ

m1(A)m2(B) m1(B)m2(B) m1(C)m2(B)

B

0.01 0.99 x 0.01 0.01 x 0.01 0.0 x 0.01

= 0.0

= 0.0099 = 0.0001

C

Æ

Æ

m1(A)m2(C) m1(B)m2(C) m1(C)m2(C)

C

0.9 9 0.99 x 0.99 0.01 x 0.99 0.0 x 0.99

= 0.0

= 0.0099

=0.9801

Table 1 : Dempster Combination of Expert 1 and Expert 2

Steps:

Using Equations 11-13:

1. To calculate the combined basic probability assignment for a particular cell, simply multiply the masses from the associated column and row.

2. Where the intersection is nonempty, the masses for a particular set from each source are multiplied, e.g., for B, m1(B) m2(B) = (0.01)(0.01) = 0.0001.

3. Where the intersection is empty, this represents conflicting evidence. For the empty intersection of the two sets A and C associated with Expert 1 and Expert 2, respectively, there is a mass associated with it: m1(A) m2(C) = (0.99)(0.99) = 0.9801.

4. Then sum the masses for all sets and the conflict.

5. The only nonzero value is for the combination of B, m1(B) m2(B) = 0.0001.

6. For K, there are three cells that contribute to conflict, represented by empty intersections. Using Equation 13, K = (0.99)(0.01) + (0.99)(0.01) + (0.99)(0.99) = 0.9999.

7. Using Equation 11, calculate the joint: m12(B) = m1(B) m2(B) / (1 - K) = (0.01)(0.01) / [1 - 0.9999] = 1.

Though there is highly conflicting evidence,

the basic probability assignment for the failure

of Component B is 1, which corresponds to a

Bel (B) = 1. This is the result of normalizing

the masses to exclude those associated with

conflict. This points to the inconsistency when

Dempster’s rule is used in the circumstances of

significant relevant conflict that was pointed

out by Zadeh.
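The two-expert calculation can be reproduced with a short sketch of Dempster's rule. The function name and the dictionary-of-frozensets representation are illustrative choices; exact fractions are used because 1 - K is very small here and floating-point rounding would obscure the result.

```python
from fractions import Fraction
from itertools import product


def dempster_combine(m1, m2):
    """Combine two bpa's; returns (joint bpa m12, conflict mass K)."""
    joint = {}
    conflict = Fraction(0)
    for (b, mass_b), (c, mass_c) in product(m1.items(), m2.items()):
        intersection = b & c
        if intersection:
            # Non-empty intersection: the product supports that set.
            joint[intersection] = joint.get(intersection, 0) + mass_b * mass_c
        else:
            # Empty intersection: the product counts as conflict.
            conflict += mass_b * mass_c
    if conflict == 1:
        raise ValueError("total conflict: Dempster's rule is undefined")
    # Normalize by 1 - K so that the remaining masses sum to 1.
    return {s: v / (1 - conflict) for s, v in joint.items()}, conflict


A, B, C = frozenset("A"), frozenset("B"), frozenset("C")
m1 = {A: Fraction(99, 100), B: Fraction(1, 100)}   # Expert 1
m2 = {B: Fraction(1, 100), C: Fraction(99, 100)}   # Expert 2

m12, K = dempster_combine(m1, m2)
print(K, m12[B])
```

The conflict mass comes out as 9999/10000 and the whole normalized mass falls on Component B, which matches the counterintuitive outcome described above.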

[Figure 1 is a diagram; its labels are listed below.]

EVIDENCE: type of evidence, amount of evidence, accuracy of evidence

SOURCES: type of sources, number of sources, reliability of sources, dependence between sources, conflict between sources

COMBINATION OPERATION: type of operation, algebraic properties, handling of conflict, advantages, disadvantages

CONTEXT RELEVANCE

CONFLICT

Figure 1: Important Issues in the Combination of Evidence

Much of the research in the combination rules

in Dempster-Shafer theory is devoted to

advancing a more accurate mathematical

representation of conflict. In Figure 1, all of

the contextual considerations like the type,

amount, and accuracy of evidence as well as the

type and reliability of sources and their

interdependencies can be interpreted as

features of conflict assessment. Once values

are established for degree of conflict, the most

important consideration is the relevance of the

existing conflict. Though conflict may be present, it

may not always be contextually relevant.

Advantages and Problems of Dempster-Shafer Theory of Evidence:

Advantages

• Clear, rigorous foundation

• Ability to express confidence through intervals

• Proper treatment of ignorance

Problems

• Non-intuitive determination of mass probability

• Very high computational overhead

• May produce counterintuitive results due to normalization

3.4. Possibility Theory

Possibility theory was introduced to allow reasoning to be carried out on imprecise or vague knowledge, making it possible to deal with uncertainties in this knowledge. Possibility is normally associated with some fuzziness, either in the background knowledge on which possibility is based, or in the set for which possibility is asserted. This constitutes a method of formalizing non-probabilistic uncertainties on events, i.e. a means of assessing to what extent the occurrence of an event is possible and to what extent we are certain of its occurrence, without knowing the evaluation of the probability of its occurrence. For example, imprecise information such as “Ram’s height is above 170 cm” implies that any height h above 170 is possible and any height equal to or below 170 is impossible for him. This can be represented by a “possibility” measure defined on the height domain whose value is 0 if $h \le 170$ and 1 if $h > 170$ (0 = impossible and 1 = possible). When the predicate is vague, as in “Ram is tall”, the possibility can accommodate degrees: the larger the degree, the larger the possibility.

Possibility theory was initiated by Zadeh as a complement to probability theory for dealing with uncertainty. Since Zadeh, there has been much research on possibility theory. There are two approaches to possibility theory: one, proposed by Zadeh, was to introduce possibility theory as an extension of fuzzy set theory; the other, taken by Klir and Folger and others, was to introduce possibility theory in the framework of the Dempster-Shafer theory of evidence. The first approach is intuitively plausible and closely related to the original motivation for introducing possibility theory: representing the meaning of information. The second approach puts possibility theory on an axiomatic basis.

1. The Intuitive Approach to Possibility (as proposed by Zadeh in light of fuzzy set theory):

Let F be a fuzzy set in a universe of discourse U, characterized by its membership function $\mu_F(u)$, which is interpreted as the compatibility of $u \in U$ with the concept labeled F. Let X be a variable taking values in U, and let F act as a fuzzy restriction, R(X), associated with X. Then the proposition "X is F", which translates into R(X) = F, associates a possibility distribution, $\pi_X$, with X, which is postulated to be equal to R(X). The possibility distribution function, $\pi_X(u)$, characterizing the possibility distribution $\pi_X$ is defined to be numerically equal to the membership function $\mu_F(u)$ of F, that is,

$$\pi_X := \mu_F$$

where the symbol := stands for "denotes" or "is defined to be".

Example:

Let U be the universe of integers and F be the fuzzy set of small integers defined by

F = {(1, 1), (2, 1), (3, 0.8), (4, 0.6), (5, 0.4), (6, 0.2)}

Then the proposition "X is a small integer" associates with X the possibility distribution $\pi_X = F$, in which a term such as (3, 0.8) signifies that the possibility that X is 3, given that X is a small integer, is 0.8.
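The small-integers example can be written down directly as a lookup. The sketch below is illustrative only; the names `small_integers` and `possibility_distribution` are chosen here, and it assumes that integers not listed in F have membership 0.

```python
# Fuzzy set F of "small integers" from the example, as {element: membership grade}.
small_integers = {1: 1.0, 2: 1.0, 3: 0.8, 4: 0.6, 5: 0.4, 6: 0.2}


def possibility_distribution(fuzzy_set):
    """Return pi_X, defined to be numerically equal to the membership function of F."""
    def pi(u):
        # Assumption: elements not listed in F have membership (and possibility) 0.
        return fuzzy_set.get(u, 0.0)
    return pi


pi_x = possibility_distribution(small_integers)
print(pi_x(3))   # 0.8
print(pi_x(10))  # 0.0
```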

2. The Axiomatic Approach to Possibility:

For a consonant body of evidence, the belief measure becomes a necessity measure and the plausibility measure becomes a possibility measure.

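Hence

$$\mathrm{Bel}(A \cap B) = \min[\mathrm{Bel}(A), \mathrm{Bel}(B)]$$

becomes, with $\eta$ denoting the necessity measure,

$$\eta(A \cap B) = \min[\eta(A), \eta(B)]$$

and

$$\mathrm{Pl}(A \cup B) = \max[\mathrm{Pl}(A), \mathrm{Pl}(B)]$$

becomes, with $\pi$ denoting the possibility measure,

$$\pi(A \cup B) = \max[\pi(A), \pi(B)]$$

and also

$$\eta(A) = 1 - \pi(\bar{A}); \quad \pi(A) = 1 - \eta(\bar{A})$$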

Now if the possibility distribution function is defined as

$$r : U \to [0, 1]$$

then this mapping is related to the possibility measure as

$$\pi(A) = \max_{x \in A} r(x)$$
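A minimal Python sketch of these relations follows. It assumes a finite universe {1, ..., 6} carrying the "small integers" grades as r, and it uses the standard duality Nec(A) = 1 - Poss(complement of A). The function names are chosen here for illustration.

```python
def possibility(r, subset):
    """Poss(A) = max of r(x) over x in A (taken as 0 for the empty set)."""
    return max((r[x] for x in subset), default=0.0)


def necessity(r, subset):
    """Nec(A) = 1 - Poss(complement of A), complement taken within r's domain."""
    complement = set(r) - set(subset)
    return 1.0 - possibility(r, complement)


# Assumed finite universe {1, ..., 6} with the "small integers" grades used as r.
r = {1: 1.0, 2: 1.0, 3: 0.8, 4: 0.6, 5: 0.4, 6: 0.2}
A, B = {3, 4}, {5, 6}

print(possibility(r, A))        # 0.8
print(possibility(r, A | B))    # 0.8, equal to max(Poss(A), Poss(B))
print(necessity(r, {1, 2, 3}))  # 1 - Poss({4, 5, 6})
```

The second line of output illustrates the max rule for unions; no addition of the individual values is involved.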

Now the basic probability assignment is defined for a consonant body of evidence as

$$m = (\mu_1, \mu_2, \mu_3, \ldots, \mu_n), \quad \text{where} \quad \sum_{i=1}^{n} \mu_i = 1$$

and the possibility distribution function is defined as

$$r = (\rho_1, \rho_2, \rho_3, \ldots, \rho_n), \quad \text{where} \quad \rho_i = \sum_{k=i}^{n} \mu_k = \sum_{k=i}^{n} m(A_k)$$
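The conversion from a basic probability assignment to a possibility distribution is a running sum taken from the right. The sketch below assumes the masses are listed in the order of the nested focal elements; the function name and the three illustrative masses are chosen here, and results are rounded only to suppress floating-point noise.

```python
from itertools import accumulate


def possibility_from_masses(masses):
    """Return (rho_1, ..., rho_n) with rho_i = mu_i + mu_{i+1} + ... + mu_n."""
    if abs(sum(masses) - 1.0) > 1e-9:
        raise ValueError("basic probability assignment must sum to 1")
    # Accumulate from the right, then restore the original order.
    suffix_sums = list(accumulate(reversed(masses)))[::-1]
    return [round(rho, 10) for rho in suffix_sums]


# Illustrative masses on three nested focal elements A_1, A_2, A_3.
print(possibility_from_masses([0.2, 0.3, 0.5]))  # [1.0, 0.8, 0.5]
```

The first component is always 1, because it sums all the masses.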

Example

Let three sets represent different geometrical shapes of a figure:

A = {Circles}; B = {Octagons}; C = {Pentagons}

There are two expert opinions, $m_1$ and $m_2$, regarding the shape of the figure. The focal elements are given in the table below.

Focal elements       A   B   C   $m_1$   $m_2$
Null                 0   0   0   0       0
$C$                  0   0   1   0       0.1
$B$                  0   1   0   0.2     0.1
$B \cup C$           0   1   1   0.3     0
$A$                  1   0   0   0       0.1
$A \cup C$           1   0   1   0       0
$A \cup B$           1   1   0   0       0.3
$A \cup B \cup C$    1   1   1   0.5     0.4

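For Expert 1's opinion, the focal elements are nested:

$$B \subset (B \cup C) \subset (A \cup B \cup C)$$

Thus the possibility distribution, obtained by applying $\rho_i = \sum_{k=i}^{n} \mu_k$ to the seven non-empty rows of the table in order, is

$$r = (1, 1, 0.8, 0.5, 0.5, 0.5, 0.5)$$

For Expert 2, however, the evidence is not nested, and hence the plausibility measures lead to conflict.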
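Whether a body of evidence is consonant can be checked mechanically by testing every pair of focal elements for inclusion. The following sketch does so for the two experts. The helper name `is_consonant` is chosen here, and the focal elements are taken to be the subsets with non-zero mass in the table above.

```python
from itertools import combinations


def is_consonant(focal_elements):
    """True if every pair of focal elements is ordered by set inclusion."""
    return all(a <= b or b <= a for a, b in combinations(focal_elements, 2))


# Focal elements (subsets with non-zero mass) read from the table.
expert_1 = [frozenset("B"), frozenset("BC"), frozenset("ABC")]
expert_2 = [frozenset("C"), frozenset("B"), frozenset("A"),
            frozenset("AB"), frozenset("ABC")]

print(is_consonant(expert_1))  # True
print(is_consonant(expert_2))  # False
```

Expert 2 fails the test because, for instance, the singletons B and C are both focal elements and neither contains the other.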

Comparison Between Possibility Theory and Probability Theory

1. Probability theory is based on a measure of one type: the probability measure.
Possibility theory is based on measures of two types: the possibility and necessity measures.

2. Probability theory: the body of evidence consists of singletons.
Possibility theory: the body of evidence consists of a family of nested subsets.

3. Probability theory: additivity, i.e.

$$P(A \cup B) = P(A) + P(B) - P(A \cap B)$$

Possibility theory: max/min rules, i.e.

$$\mathrm{Poss}(A \cup B) = \max[\mathrm{Poss}(A), \mathrm{Poss}(B)]$$

4. Probability theory: $P(A) + P(\bar{A}) = 1$
Possibility theory:

$$\mathrm{Poss}(A) + \mathrm{Poss}(\bar{A}) \ge 1; \quad \mathrm{Nec}(A) + \mathrm{Nec}(\bar{A}) \le 1$$

$$\max[\mathrm{Poss}(A), \mathrm{Poss}(\bar{A})] = 1$$

$$\min[\mathrm{Nec}(A), \mathrm{Nec}(\bar{A})] = 0$$

5. Probability theory:

$$P(A) = \sum_{x \in A} p(x)$$

Possibility theory: for $r : X \to [0, 1]$,

$$\mathrm{Poss}(A) = \max_{x \in A} r(x)$$

Difference between Probability Measures and Possibility Measures

1. The notion of noninteractiveness in possibility theory is analogous to the notion of independence in probability theory. If two random variables x and y are independent, their joint probability distribution is the product of their individual distributions. Similarly, if two linguistic variables are noninteractive, their joint possibility distribution is formed by combining their individual possibility distributions through a fuzzy conjunction operator.

2. Even though possibility and probability are different, they are related in a way. If it is impossible for a variable x to take a value $x_i$, it is also improbable for x to have the value $x_i$, i.e.

$$\pi_X(x_i) = 0 \Rightarrow P_X(x_i) = 0$$

In general, possibility can serve as an upper bound on probability.

Similarity between Probability Measures and Possibility Measures

1. Each model is based on a measure that maps the subsets of the frame of discernment $\Omega$ to the [0, 1] interval and is monotone with respect to inclusion. The main property of the probability measure is

$$P : 2^{\Omega} \to [0, 1] \quad \text{and} \quad P(A \cup B) = P(A) + P(B) - P(A \cap B), \quad \forall A, B \subseteq \Omega$$

Similarly, the possibility measure can be given as

$$\pi : 2^{\Omega} \to [0, 1] \quad \text{and} \quad \pi(A \cup B) = \max[\pi(A), \pi(B)], \quad \forall A, B \subseteq \Omega$$

2. Possibility measures the degree of ease with which a variable can take a value, whereas probability measures the likelihood that a variable takes a value.

3. Being a measure of occurrence, the probability distribution of a variable must add up to exactly 1, whereas there is no such restriction on a possibility distribution, since the variable can have multiple possible values.

4. Possibility measures replace the additivity axiom of probability with the weaker subadditivity condition.

Probability Vs Possibility through an Example

Consider the number of eggs X that Hans is going to order tomorrow morning. Let $\pi(u)$ be the degree of ease with which Hans can eat u eggs, and let p(u) be the probability that Hans will eat u eggs at breakfast tomorrow. Given our knowledge, assume the values of $\pi(u)$ and p(u) shown in the table below:

u          1     2     3     4     5     6     7     8
$\pi(u)$   1     1     1     1     0.8   0.6   0.4   0.2
p(u)       0.1   0.8   0.1   0     0     0     0     0

We observe that whereas the possibility that Hans may eat 3 eggs for breakfast is 1, the probability that he may do so might be quite small, e.g. 0.1. Thus, a high degree of possibility does not imply a high degree of probability, nor does a low degree of probability imply a low degree of possibility. But if an event is impossible, it is bound to be improbable.
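The observations about Hans's breakfast can be checked numerically. The sketch below uses the values from the table for u = 1, ..., 8. The variable names are chosen here, and rounding is applied only to hide floating-point noise in the sums.

```python
# Values of pi(u) and p(u) for u = 1..8 eggs, as in the table.
eggs = range(1, 9)
poss = dict(zip(eggs, [1, 1, 1, 1, 0.8, 0.6, 0.4, 0.2]))
prob = dict(zip(eggs, [0.1, 0.8, 0.1, 0, 0, 0, 0, 0]))

# A probability distribution sums to 1; a possibility distribution need not.
print(round(sum(prob.values()), 10))  # 1.0
print(round(sum(poss.values()), 10))  # 6.0

# Possibility bounds probability from above for every value of u.
print(all(prob[u] <= poss[u] for u in eggs))  # True

# High possibility does not imply high probability.
print(poss[3], prob[3])  # 1 0.1
```

Eating 4 eggs is fully possible yet has probability 0, while no value of u has a probability exceeding its possibility.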

Concluding Remark

The various forms of uncertainty may be encountered simultaneously, and it is necessary that we be able to integrate them. For example, in common-sense reasoning, two or three forms of uncertainty are often encountered in the same statement. In integrating the various methods of dealing with uncertainty, care must be taken to define the domain of each method.

Understanding the meaning of statements and translating them into appropriate models is delicate. For example, how do we translate “usually bald men are old”? Which of P(bald|old) or P(old|bald) is large? For “When x shaves himself, usually x does not die”, which conditioning is appropriate: Pl(dead|shaving) or Pl(shaving|dead)? Is it a problem of plausibility or of possibility? These examples merely illustrate the kind of problems that must be addressed.

Every method has some limitations in comparison to the others, and no single method gives the full power to tackle all the kinds of uncertainty that we face in real life. Almost no work has been done on integrating all the methods.

About the author: Ashutosh Dwivedi is pursuing his Ph. D. in the Department of Electrical

Engineering in Indian Institute of Technology Kanpur, India. He obtained Masters of

Technology in Control System from MBM Engineering College, Jodhpur. His major areas of

study include Control System, Neural Networks and Fuzzy Logic.

About the author: Deepak Mishra is pursuing his Ph. D. in the Department of Electrical

Engineering in Indian Institute of Technology Kanpur, India. He obtained Masters of

Technology in Instrumentation from Devi Ahilya University, Indore in 2003. His major field

of study is Neural Networks and Computational Neuroscience. (Home page:

http://home.iitk.ac.in/~dkmishra)

About the author: Professor Prem Kumar Kalra obtained his PhD in Electrical

Engineering from University of Manitoba, Canada. He is currently Professor and Head of

Electrical Engineering Department at IIT Kanpur, India. His research interests are Power

systems, Expert Systems applications, HVDC transmission, Fuzzy logic and Neural

Networks application and KARMAA (Knowledge Acquisition, Retention, Management,

Assimilation & Application).
