* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download Confidence Interval

Inductive probability wikipedia , lookup

Degrees of freedom (statistics) wikipedia , lookup

Foundations of statistics wikipedia , lookup

Bootstrapping (statistics) wikipedia , lookup

History of statistics wikipedia , lookup

Taylor's law wikipedia , lookup

Resampling (statistics) wikipedia , lookup

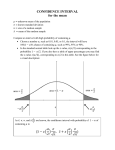

Learning Objectives for Chapter 8 After careful study of this chapter, you should be able to do the following: 1. 2. 3. 4. 5. 6. Construct confidence intervals on the mean of a normal distribution, using normal distribution or t distribution method. Construct confidence intervals on variance and standard deviation of normal distribution. Construct confidence intervals on a population proportion. Constructing an approximate confidence interval on a parameter. Prediction intervals for a future observation. Tolerance interval for a normal population. Chapter 8 Learning Objectives 2 Introduction Statistical Intervals For A Single Sample Confidence Interval. We have learned in the previous chapter how a parameter can be estimated from sample data It is important to understand how good is the estimate obtained. An interval estimate for population parameter is called a Confidence Interval. •The confidence interval is a random interval • The appropriate interpretation of a confidence interval (for example on ) is: The observed interval [l, u] brackets the true value of , with confidence 100(1-). Tolerance interval is another important type of interval estimate. Confidence interval • A range of values, derived from sample statistics, that is likely to contain the value of an unknown population parameter. • Because of their random nature, it is unlikely that two samples from a given population will yield identical confidence intervals. • But if you repeated your sample many times, a certain percentage of the resulting confidence intervals would contain the unknown population parameter. • The percentage of these confidence intervals that contain the parameter is the confidence level of the interval. • For example, suppose you want to know the average amount of time it takes for an automobile assembly line to complete a vehicle. You take a sample of completed cars, record the time they spent on the assembly line, and use the 1-sample t procedure to obtain a 95% confidence interval for the mean amount of time all cars spend on the assembly line. Because 95% of the confidence intervals constructed from all possible samples will contain the population parameter, you conclude that the mean amount of time all cars spend on the assembly line falls between your interval's endpoints, which are called confidence limits. 8-1.1 Confidence Interval and its Properties A confidence interval estimate for is an interval of the form l ≤ ≤ u, where the end-points l and u are computed from the sample data. There is a probability of 1 α of selecting a sample for which the CI will contain the true value of . The endpoints or bounds l and u are called lower- and upperconfidence limits ,and 1 α is called the confidence coefficient. Sec 8-1 Confidence Interval on the Mean of a Normal, σ2 Known 5 Confidence interval • Creating confidence intervals is analogous to throwing nets over a target with an unknown, yet fixed, location. Consider the graphic below, which depicts confidence intervals generated from 20 samples from the same population. The black line represents the fixed value of the unknown population parameter; the blue confidence intervals contain the value of the population parameter; the red confidence interval does not. • A 95% confidence interval indicates that 19 out of 20 samples (95%) from the same population will produce confidence intervals that contain the population parameter. • Confidence Interval on the Mean of a Normal Distribution, Variance Known Figure 8-1 Repeated construction of a confidence interval for . Guidelines for Constructing Confidence Intervals Prediction Interval: Provides prediction on future observations. Hw 2 groups • Probability Tables • Description, Objective and uses …5 ++ slides Confidence Interval on the Mean of a Normal Distribution, Variance Known 8-2.1 Development of the Confidence Interval and its Basic Properties • The endpoints or bounds l and u are called lower- and upper-confidence limits, respectively. • Since Z follows a standard normal distribution, we can write: Confidence Interval on the Mean of a Normal Distribution, Variance Known One sided confidence Interval Confidence Interval on the Mean of a Normal Distribution, Variance Known Example 8-1 Confidence interval • A range of values, derived from sample statistics, that is likely to contain the value of an unknown population parameter. • Because of their random nature, it is unlikely that two samples from a given population will yield identical confidence intervals. • But if you repeated your sample many times, a certain percentage of the resulting confidence intervals would contain the unknown population parameter. • The percentage of these confidence intervals that contain the parameter is the confidence level of the interval. • For example, suppose you want to know the average amount of time it takes for an automobile assembly line to complete a vehicle. You take a sample of completed cars, record the time they spent on the assembly line, and use the 1-sample t procedure to obtain a 95% confidence interval for the mean amount of time all cars spend on the assembly line. Because 95% of the confidence intervals constructed from all possible samples will contain the population parameter, you conclude that the mean amount of time all cars spend on the assembly line falls between your interval's endpoints, which are called confidence limits. Confidence Interval on the Mean of a Normal Distribution, Variance Known Figure 8-1 Repeated construction of a confidence interval for . Confidence Interval on the Mean of a Normal Distribution, Variance Known Confidence Level and Precision of Error The length of a confidence interval is a measure of the precision of estimation. Figure 8-2 Error in estimating with . x Sample Confidence Interval on the Mean of a Normal Distribution, Variance Known 8-2.2 Choice of Sample Size Confidence Interval on the Mean of a Normal Distribution, Variance Known EXAMPLE 8-2 Metallic Material Transition Example 8-2 8-1.3 One-Sided Confidence Bounds A 100(1 − α)% upper-confidence bound for is x z / n (8-3) and a 100(1 − α)% lower-confidence bound for is x z / n l Sec 8-1 Confidence Interval on the Mean of a Normal, σ2 Known (8-4) 26 Example 8-3 One-Sided Confidence Bound The same data for impact testing from Example 8-1 are used to construct a lower, one-sided 95% confidence interval for the mean impact energy. Recall that zα = 1.64, n = 10, = l, and x 64.46 . A 100(1 − α)% lower-confidence bound for is x z n 10 Sec 8-1 Confidence Interval on the Mean of a Normal, σ2 Known 27 Confidence Interval on the Mean of a Normal Distribution, Variance Known 8-2.5 A Large-Sample Confidence Interval for See example 8-3 Confidence Interval on the Mean of a Normal Distribution, Variance Known 8-2.5 A Large-Sample Confidence Interval for Definition Confidence Interval on the Mean of a Normal Distribution, Variance Known, Example 8-5 Mercury Contamination Example 8-3 Example 8-5 Mercury Contamination (continued) The summary statistics for the data are as follows: Variable Concentration N 53 Mean Median StDev Minimum Maximum Q1 Q3 0.5250 0.4900 0.3486 0.0400 1.3300 0.2300 0.7900 Because n > 40, the assumption of normality is not necessary to use in Equation 8-5. The required values are n 53, x 0.5250, s 0.3486 , and z0.025 = 1.96. The approximate 95 CI on is s s μ x z0.025 n n 0.3486 0.3486 0.5250 1.96 μ 0.5250 1.96 53 53 0.4311 μ 0.6189 x z0.025 Interpretation: This interval is fairly wide because there is variability in the mercury concentration measurements. A larger sample size would have produced a shorter interval. Sec 8-1 Confidence Interval on the Mean of a Normal, σ2 Known 31 • • • • • Text and Material Exam 1 Probability Tables Home Work Policy Attendance policy t-distribution • • • In probability and statistics, Student's t-distribution (or simply the t-distribution) is any member of a family of continuousprobability distributions that arises when estimating the mean of a normally distributed population in situations where thesample size is small and population standard deviation is unknown. he t-distribution plays a role in a number of widely used statistical analyses, including Student's t-test for assessing thestatistical significance of the difference between two sample means, the construction of confidence intervals for the difference between two population means, and in linear regression analysis. The Student's t-distribution also arises in theBayesian analysis of data from a normal family. The t-distribution is symmetric and bell-shaped, like the normal distribution, but has heavier tails, meaning that it is more prone to producing values that fall far from its mean. This makes it useful for understanding the statistical behavior of certain types of ratios of random quantities, in which variation in the denominator is amplified and may produce outlying values when the denominator of the ratio falls close to zero. The Student's t-distribution is a special case of the generalised hyperbolic distribution Cumulative distribution function Probability density function Confidence Interval on the mean of a normal distribution, Variance Unknown 8-2.2 The Confidence Interval on Mean, Variance Unknown Table III If and s are the mean and standard deviation of a random sample from x a normal distribution with unknown variance 2, a 100(1 ) confidence interval on is given by x t /2, n 1s/ n x t /2, n 1s/ n (8-7) where t2,n1 the upper 1002 percentage point of the t distribution with n 1 degrees of freedom. One-sided confidence bounds on the mean are found by replacing t/2,n-1 in Equation 8-7 with t ,n-1. Sec 8-2 Confidence Interval on the Mean of a Normal, σ2 Unknown 36 Example Example 8-6 Alloy Adhesion Construct a 95% CI on to the following data. 19.8 15.4 11.4 19.5 The sample mean is 10.1 18.5 14.1 8.8 14.9 7.9 17.6 13.6 7.5 12.7 16.7 11.9 15.4 11.9 15.8 11.4 15.4 11.4 x 13.71 and sample standard deviation is s = 3.55. Since n = 22, we have n 1 =21 degrees of freedom for t, so t0.025,21 = 2.080. The resulting CI is x t /2, n 1s / n x t /2, n 1s/ n 13.71 2.080(3.55) / 22 13.71 2.0803.55 / 22 13.71 1.57 13.71 1.57 12.14 15.28 Interpretation: The CI is fairly wide because there is a lot of variability in the measurements. A larger sample size would have led to a shorter interval. Sec 8-2 Confidence Interval on the Mean of a Normal, σ2 Unknown 39 Sec 8-7 to write 40 Sec 8-7 to write 41 • • • • Exam : First week on March No cell phones Signature Cheat Sheet First three Chapters + Sat 1 (Introduction) (Chi-square or χ²-distribution) • • The Chi Square test is the most important and most used method in statistical tests. The purpose of Chi Square test A-Test how well a sample fits a theoretical distribution B- The difference between an observed frequency and expected frequency. (differences between the two or more observed data). C- Its value can be calculated by using the given observed frequency and expected frequency. D- Test the independence between categorical variables. For example, a manufacturer wants to know if the occurrence of four types of defects (missing pin, broken clamp, loose fastener, and leaky seal) is related to shift (day, evening, overnight). • • • • The chi-squared distribution (also It is a special case of the gamma distribution and is one of the most widely used probability distributions in inferential statistics, For example, you can use a goodness-of-fit test of an observed distribution to a theoretical one and classification of qualitative data, and in confidence interval estimation for a population standard deviation of a normal distribution from a sample standard deviation. also to determine whether your sample data fit a Poisson distribution. The shape of the chi-square distribution depends on the number of degrees of freedom. The distribution is positively skewed, but skewness decreases with more degrees of freedom. When the degrees of freedom are 30 or more, the distribution can be approximated by a normal distribution. Goodness of Fit Test (observed and expected test) Failure times,Bulbs Poisson Distribution (no of defects in finite space A/C Mech sys..lamda represent mean and variance binomial and standard no upper bound of calls Chi Square Formula • The Chi Square is denoted by X2and the formula is given as: Here, • O = Observed frequency E = Expected frequency ∑ = Summation X2 = Chi Square value Confidence Interval on the (Variance and Standard Deviation of a Normal Distribution) Figure 8-8 Probability density functions of several 2 distributions. Distribution 2 Distribution 2 Let X1, X2, , Xn be a random sample from a normal distribution with mean and variance 2, and let S2 be the sample variance. Then the random variable X 2 n 1 S 2 2 (8-8) has a chi-square (2) distribution with n 1 degrees of freedom. Sec 8-3 Confidence Interval on σ2 & σ of a Normal Distribution 54 Confidence Interval on the Variance and Standard Deviation If s2 is the sample variance from a random sample of n observations from a normal distribution with unknown variance 2, then a 100(1 – )% confidence interval on 2 is (n 1) s 2 n 1 n 1 (n 1) s 2 n 1 (8-9) where and n 1 are the upper and lower 100/2 percentage points of the chi-square distribution with n – 1 degrees of freedom, respectively. A confidence interval for has lower and upper limits that are the square roots of the corresponding limits in Equation 8–9. Sec 8-3 Confidence Interval on σ2 & σ of a Normal Distribution 55 Sec 8-7 to write 56 Confidence Interval on the Variance and Standard Deviation of a Normal Distribution One-Sided Confidence Bounds Example 8-7 Detergent Filling An automatic filling machine is used to fill bottles with liquid detergent. A random sample of 20 bottles results in a sample variance of fill volume of s2 = 0.01532. Assume that the fill volume is approximately normal. Compute a 95% upper confidence bound. A 95% upper confidence bound is found from Equation 8-10 as follows: n 1 s 2 20 1 0.0153 2 2 10.117 2 0.0287 A confidence interval on the standard deviation can be obtained by taking the square root on both sides, resulting in 0.17 Sec 8-3 Confidence Interval on σ2 & σ of a Normal Distribution 58 Sec 8-7 to write 59 8-6 Tolerance and Prediction Intervals 8-6.1 Prediction Interval for Future Observation A 100 (1 )% prediction interval (PI) on a single future observation from a normal distribution is given by x t n 1 s 1 1 1 X n 1 x t n 1 s 1 n n (8-15) The prediction interval for Xn+1 will always be longer than the confidence interval for . Sec 8-6 Tolerance & Prediction Intervals 60 Repeated for comparison Example 8-11 Alloy Adhesion The load at failure for n = 22 specimens was observed, and found that x 13.71 and s 3.55. The 95% confidence interval on was 12.14 15.28. Plan to test a 23rd specimen. A 95% prediction interval on the load at failure for this specimen is x t n1s 1 1 1 X n1 x t n1s 1 n n 13.71 2.0803.55 1 1 1 X 23 13.71 2.0803.55 1 22 22 6.16 X 23 21.26 Interpretation: The prediction interval is considerably longer than the CI. This is because the CI is an estimate of a parameter, but the PI is an interval estimate of a single future observation. See next slide Sec 8-6 Tolerance & Prediction Intervals 61 Example 8-6 Alloy Adhesion Construct a 95% CI on to the following data. 19.8 15.4 11.4 19.5 The sample mean is 10.1 18.5 14.1 8.8 14.9 7.9 17.6 13.6 7.5 12.7 16.7 11.9 15.4 11.9 15.8 11.4 15.4 11.4 x 13.71 and sample standard deviation is s = 3.55. Since n = 22, we have n 1 =21 degrees of freedom for t, so t0.025,21 = 2.080. The resulting CI is x t /2, n 1s / n x t /2, n 1s/ n 13.71 2.080(3.55) / 22 13.71 2.0803.55 / 22 13.71 1.57 13.71 1.57 12.14 15.28 Interpretation: The CI is fairly wide because there is a lot of variability in the measurements. A larger sample size would have led to a shorter interval. Sec 8-2 Confidence Interval on the Mean of a Normal, σ2 Unknown 62 8-6.2 Tolerance Interval for a Normal Distribution A tolerance interval for capturing at least % of the values in a normal distribution with confidence level 100(1 – )% is x ks, x ks where k is a tolerance interval factor found in Appendix Table XII. Values are given for = 90%, 95%, and 99% and for 90%, 95%, and 99% confidence. Sec 8-6 Tolerance & Prediction Intervals 63 Example 8-12 Alloy Adhesion The load at failure for n = 22 specimens was observed, and found that x 13.71 and s = 3.55. Find a tolerance interval for the load at failure that includes 90% of the values in the population with 95% confidence. From Appendix Table XII, the tolerance factor k for n = 22, = 0.90, and 95% confidence is k = 2.264. The desired tolerance interval is x ks [13.71 2.264 3.55 , x ks , 13.71 2.264 3.55] (5.67 , 21.74) Interpretation: We can be 95% confident that at least 90% of the values of load at failure for this particular alloy lie between 5.67 and 21.74. Sec 8-6 Tolerance & Prediction Intervals 65 Important Terms & Concepts of Chapter 8 Chi-squared distribution Confidence coefficient Confidence interval Confidence interval for a: – Population proportion – Mean of a normal distribution – Variance of a normal distribution Confidence level Error in estimation Large sample confidence interval 1-sided confidence bounds Precision of parameter estimation Prediction interval Tolerance interval 2-sided confidence interval t distribution Chapter 8 Summary 67 Guidelines for Constructing Confidence Intervals 8-5 Guidelines for Constructing Confidence Intervals Table 8-1 provides a simple road map for appropriate calculation of a confidence interval. Sec 8-5 Guidelines for Constructing Confidence Intervals 69 8-4 A Large-Sample Confidence Interval For a Population Proportion Normal Approximation for Binomial Proportion If n is large, the distribution of Z X np np (1 p) Pˆ p p (1 p) n is approximately standard normal. The quantity p(1 p) /isncalled the standard error of the point estimator Sec 8-4 Large-Sample Confidence Interval for a Population Proportion . P̂ 70 Approximate Confidence Interval on a Binomial Proportion If p̂ is the proportion of observations in a random sample of size n, an approximate 100(1 )% confidence interval on the proportion p of the population is pˆ z pˆ (1 pˆ ) pˆ (1 pˆ ) p pˆ z n n (8-11) where z/2 is the upper /2 percentage point of the standard normal distribution. Sec 8-4 Large-Sample Confidence Interval for a Population Proportion 71 Example 8-8 Crankshaft Bearings In a random sample of 85 automobile engine crankshaft bearings, 10 have a surface finish that is rougher than the specifications allow. Construct a 95% two-sided confidence interval for p. A point estimate of the proportion of bearings in the population that exceeds the roughness specification is pˆ x / n 10 / 85 0..12 A 95% two-sided confidence interval for p is computed from Equation 8-11 as pˆ z0.025 0.12 1.96 pˆ 1 pˆ p pˆ z0.025 n pˆ 1 pˆ n 0.12 0.88 0.12 0.88 p 0.12 1.96 85 85 0.0509 p 0.2243 Interpretation: This is a wide CI. Although the sample size does not appear to be small (n = 85), the value of is fairly small, which leads to a large standard error for contributing to the wide CI. Sec 8-4 Large-Sample Confidence Interval for a Population Proportion 72 Choice of Sample Size Sample size for a specified error on a binomial proportion : If we set E z p 1 p / n and solve for n, the appropriate sample size is 2 z n p1 p E (8-12) The sample size from Equation 8-12 will always be a maximum for p = 0.5 [that is, p(1 − p) ≤ 0.25 with equality for p = 0.5], and can be used to obtain an upper bound on n. z n E 2 0.25 Sec 8-4 Large-Sample Confidence Interval for a Population Proportion (8-13) 73 Example 8-9 Crankshaft Bearings Consider the situation in Example 8-8. How large a sample is required if we want to be 95% confident that the error in using p̂ to estimate p is less than 0.05? ˆ 0.12 as an initial estimate of p, we find from Equation 8-12 that the Using p required sample size is 2 2 z 1.96 n 0.025 pˆ 1 pˆ 0.120.88 163 E 0 . 05 If we wanted to be at least 95% confident that our estimate p̂ of the true proportion p was within 0.05 regardless of the value of p, we would use Equation 8-13 to find the sample size 2 2 z 0.025 1.96 n 0.25 0.25 385 0.05 E Interpretation: If we have information concerning the value of p, either from a preliminary sample or from past experience, we could use a smaller sample while maintaining both the desired precision of estimation and the level of confidence. Sec 8-4 Large-Sample Confidence Interval for a Population Proportion 74 Approximate One-Sided Confidence Bounds on a Binomial Proportion The approximate 100(1 )% lower and upper confidence bounds are pˆ z pˆ 1 pˆ p n and p pˆ z pˆ 1 pˆ n (8-14) respectively. Sec 8-4 Large-Sample Confidence Interval for a Population Proportion 75 Example 8-10 The Agresti-Coull CI on a Proportion Reconsider the crankshaft bearing data introduced in Example 8-8. In that example we reported that pˆ 0.12 and n 85. The 95% CI was 0.0509 p 0.2243 . Construct the new Agresti-Coull CI. pˆ 1 pˆ z2 2 pˆ z 2 2 2 n n 4n UCL 2 1 z 2 n z2 2 0.12 0.88 1.962 1.962 0.12 1.96 2(85) 85 4(85) 2 1 (1.962 / 85) 0.2024 pˆ 1 pˆ z2 2 pˆ z 2 2 2 n n 4n LCL 2 1 z 2 n z2 2 0.12 0.88 1.962 1.962 0.12 1.96 2(85) 85 4(85) 2 1 (1.962 / 85) 0.0654 Interpretation: The two CIs would agree more closely if the sample size were larger. Sec 8-4 Large-Sample Confidence Interval for a Population Proportion 76 Probabilities Normal Distributions • • • • Normal Distributions Determining Normal Probabilities Finding Values That Correspond to Normal Probabilities Assessing Departures from Normality 7: Normal Probability Distributions 77 §7.1: Normal Distributions • This pdf is the most popular distribution for continuous random variables • First described de Moivre in 1733 • Elaborated in 1812 by Laplace • Describes some natural phenomena • More importantly, describes sampling characteristics of totals and means 7: Normal Probability Distributions 78 Normal Probability Density Function • Recall: continuous random variables are described with probability density function (pdfs) curves • Normal pdfs are recognized by their typical bell-shape Figure: Age distribution of a pediatric population with overlying Normal pdf 7: Normal Probability Distributions 79 Area Under the Curve • pdfs should be viewed almost like a histogram • Top Figure: The darker bars of the histogram correspond to ages ≤ 9 (~40% of distribution) • Bottom Figure: shaded area under the curve (AUC) corresponds to ages ≤ 9 (~40% of area) 7: Normal Probability Distributions x 12 1 f ( x) e 2 80 2 Parameters μ and σ • Normal pdfs have two parameters μ - expected value (mean “mu”) σ - standard deviation (sigma) μ controls location σ controls spread 7: Normal Probability Distributions 81 Mean and Standard Deviation of Normal Density σ μ 7: Normal Probability Distributions 82 Standard Deviation σ • Points of inflections one σ below and above μ • Practice sketching Normal curves • Feel inflection points (where slopes change) • Label horizontal axis with σ landmarks 7: Normal Probability Distributions 83 Two types of means and standard deviations • The mean and standard deviation from the pdf (denoted μ and σ) are parameters • The mean and standard deviation from a sample (“xbar” and s) are statistics • Statistics and parameters are related, but are not the same thing! 7: Normal Probability Distributions 84 68-95-99.7 Rule for Normal Distributions • 68% of the AUC within ±1σ of μ • 95% of the AUC within ±2σ of μ • 99.7% of the AUC within ±3σ of μ 7: Normal Probability Distributions 85 Example: 68-95-99.7 Rule Wechsler adult intelligence scores: Normally distributed with μ = 100 and σ = 15; X ~ N(100, 15) • 68% of scores within μ±σ = 100 ± 15 = 85 to 115 • 95% of scores within μ ± 2σ = 100 ± (2)(15) = 70 to 130 • 99.7% of scores in μ ± 3σ = 100 ± (3)(15) = 55 to 145 7: Normal Probability Distributions 86 Symmetry in the Tails Because the Normal curve is symmetrical and the total AUC is exactly 1… 95% … we can easily determine the AUC in tails 7: Normal Probability Distributions 87 Example: Male Height • Male height: Normal with μ = 70.0˝ and σ = 2.8˝ • 68% within μ ± σ = 70.0 2.8 = 67.2 to 72.8 • 32% in tails (below 67.2˝ and above 72.8˝) • 16% below 67.2˝ and 16% above 72.8˝ (symmetry) 7: Normal Probability Distributions 88 Reexpression of Non-Normal Random Variables • Many variables are not Normal but can be reexpressed with a mathematical transformation to be Normal • Example of mathematical transforms used for this purpose: – logarithmic – exponential – square roots • Review logarithmic transformations… 7: Normal Probability Distributions 89 §7.2: Determining Normal Probabilities When value do not fall directly on σ landmarks: 1. State the problem 2. Standardize the value(s) (z score) 3. Sketch, label, and shade the curve 4. Use Table B 7: Normal Probability Distributions 90 §7.2: Determining Normal Probabilities When value do not fall directly on σ landmarks: 1. State the problem 2. Standardize the value(s) (z score) 3. Sketch, label, and shade the curve 4. Use Table B 7: Normal Probability Distributions 91 Step 1: State the Problem • What percentage of gestations are less than 40 weeks? • Let X ≡ gestational length • We know from prior research: X ~ N(39, 2) weeks • Pr(X ≤ 40) = ? 7: Normal Probability Distributions 92 Step 2: Standardize • Standard Normal variable ≡ “Z” ≡ a Normal random variable with μ = 0 and σ = 1, • Z ~ N(0,1) • Use Table B to look up cumulative probabilities for Z 7: Normal Probability Distributions 93 Example: A Z variable of 1.96 has cumulative probability 0.9750. 7: Normal Probability Distributions 94 Step 2 (cont.) Turn value into z score: z x z-score = no. of σ-units above (positive z) or below (negative z) distribution mean μ For example, the value 40 from X ~ N (39,2) has 40 39 z 0.5 2 7: Normal Probability Distributions 95 Steps 3 & 4: Sketch & Table B 3. Sketch 4. Use Table B to lookup Pr(Z ≤ 0.5) = 0.6915 7: Normal Probability Distributions 96 Probabilities Between Points a represents a lower boundary b represents an upper boundary Pr(a ≤ Z ≤ b) = Pr(Z ≤ b) 7: Normal Probability Distributions − Pr(Z ≤ a) 97 Between Two Points Pr(-2 ≤ Z ≤ 0.5) = .6687 = .6687 -2 0.5 Pr(Z ≤ 0.5) − .6915 − .6915 0.5 Pr(Z ≤ -2) .0228 .0228 -2 See p. 144 in text 7: Normal Probability Distributions 98 §7.3 Values Corresponding to Normal Probabilities 1. State the problem 2. Find Z-score corresponding to percentile (Table B) 3. Sketch 4. Unstandardize: x z p 7: Normal Probability Distributions 99 z percentiles zp ≡ the Normal z variable with cumulative probability p Use Table B to look up the value of zp Look inside the table for the closest cumulative probability entry Trace the z score to row and column 7: Normal Probability Distributions 100 e.g., What is the 97.5th percentile on the Standard Normal curve? z.975 = 1.96 Notation: Let zp represents the z score with cumulative probability p, e.g., z.975 = 1.96 7: Normal Probability Distributions 101 Step 1: State Problem Question: What gestational length is smaller than 97.5% of gestations? • Let X represent gestations length • We know from prior research that X ~ N(39, 2) • A value that is smaller than .975 of gestations has a cumulative probability of.025 7: Normal Probability Distributions 102 Step 2 (z percentile) Less than 97.5% (right tail) = greater than 2.5% (left tail) z lookup: z.025 = −1.96 z .00 –1.9 .0287 .01 .02 .03 .04 .05 .06 .07 .08 .09 .0281 .0274 .0268 .0262 .0256 .0250 .0244 .0239 .0233 7: Normal Probability Distributions 103 Unstandardize and sketch x z p 39 (1.96)( 2) 35 The 2.5th percentile is 35 weeks 7: Normal Probability Distributions 104 Example: A Z variable of 1.96 has cumulative probability of …… z lookup: z.025 = ----What is the 97.5th percentile on the Standard Normal curve? z.975 = Between Two Points Q1: z lookup: z.025 = ----Q 2:A Z variable of 1.96 has cumulative probability of …… Q3: Find P(Z less than 1.96) .6915 .6687 -2 q2 What is the 97.5th percentile on the Standard Normal curve? z.975 = Find P(Z greater than 1.96) 0.5 0.5 7: Normal Probability Distributions .0228 -2 106