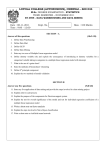

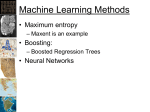

A COMPARISON OF PREDICTIVE MODELING TECHNIQUES: ILLUSTRATED WITH EXAMPLES UTILIZING DATA MINING AND OTHER TRADITIONAL TECHNIQUES

Phyllis A. Schumacher, Bryant University, (401) 232-6328, [email protected]

Alan D. Olinsky, Bryant University, (401) 232-6266, [email protected]

ABSTRACT

Traditional methods of predictive modeling include ANOVA and linear, non-linear and logistic regression. In considering these techniques, it is important to emphasize the advantages and disadvantages of one technique over another. Data mining techniques, including neural nets and decision trees, are not often taught in applied statistics courses. It would be nice if students were made aware of some alternatives to regression, particularly since many students, both in business and in the social sciences, go on to use statistical analysis on research projects. This paper will compare and contrast different statistical techniques as alternatives for predictive modeling.

Key Words: Regression, ANOVA, Data Mining, Neural Nets, Decision Trees

INTRODUCTION

The traditional method of predicting a quantitative dependent variable in terms of one or more quantitative and/or qualitative independent variables is linear or non-linear regression. In the case of multiple regression, step-wise regression is a popular technique. If the dependent variable is qualitative, logistic regression is most commonly used. In most applied undergraduate and graduate statistics courses, analysis of variance (ANOVA) and regression analysis are covered, and some two-semester courses may also cover logistic regression and step-wise regression. In considering these techniques, it is important to emphasize the advantages and disadvantages of using one technique over another. Data mining techniques, which are alternative methods for prediction, are not usually discussed in most applied statistics courses, whether liberal arts or business statistics courses.
Although social scientists lean heavily toward ANOVA, many undergraduate business and MBA statistics classes devote much of the course to different regression models. Since a single one- or two-semester undergraduate or graduate statistics course may be the only statistics class that many students take, it would be nice if students were made aware of some alternatives to regression, particularly since many of these students, both in business and in the social sciences, go on to use statistical analysis on research projects.

This paper will compare and contrast different statistical techniques as alternatives for predictive modeling. In particular, we will discuss and compare the use of ANOVA and regression for prediction of a quantitative dependent variable with one or more qualitative independent variables. Next, we will look at the problems associated with numerous independent variables and discuss the use of factor analysis to reduce the dimensionality. Finally, we will consider the use of the data mining techniques of neural nets and decision trees as alternatives to regression analysis for prediction with a qualitative dependent variable. In each case, we will present examples and compare the analysis of an appropriate data set.

ANOVA VERSUS REGRESSION WITH DUMMY VARIABLES

Although it is possible to run a regression when the dependent variable is quantitative and the independent variable is qualitative, this requires the introduction of dummy variables, which can quickly lead to a cumbersome equation, particularly when there is more than one independent variable, some of the variables have several levels, and interactions are considered. This often happens when regression is used for model building. When several qualitative independent variables and their interactions are to be considered, ANOVA is probably the method to use because the results are much easier to interpret, especially for a non-statistician.
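
To make the "cumbersome equation" concrete, the following minimal sketch builds the regression columns for two qualitative factors and their interaction. It assumes reference-level (0/1) dummy coding; the function names `dummy_code` and `design_row` and the level labels are illustrative and are not taken from any SPSS output.

```python
from itertools import product

def dummy_code(value, levels):
    """Return k-1 indicator values for a factor with k levels.

    The first level is the reference category and maps to all zeros.
    """
    if value not in levels:
        raise ValueError(f"unknown level: {value!r}")
    return [1 if value == level else 0 for level in levels[1:]]

def design_row(a_value, b_value, a_levels, b_levels):
    """Main-effect dummies for both factors plus all interaction products."""
    a = dummy_code(a_value, a_levels)
    b = dummy_code(b_value, b_levels)
    interaction = [x * y for x, y in product(a, b)]
    return a + b + interaction

# Illustrative labels (assumed): a two-level and a five-level factor.
SEX = ["male", "female"]
MARITAL = ["married", "widowed", "divorced", "separated", "never married"]

row = design_row("female", "divorced", SEX, MARITAL)
print(len(row), row)
```

With a two-level and a five-level factor, the regression needs 1 + 4 + 4 = 9 predictor columns beyond the intercept, which is why the equation becomes hard to read as factors and levels are added.
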
As an example for this first comparison, we make use of data from the General Social Survey. This data set, published by the National Opinion Research Center at the University of Chicago, is often used in the social sciences and has many variables which are easily analyzed with an ANOVA design. [1] We have chosen income as the dependent variable and gender and marital status as the independent variables. One can see from the SPSS output provided in Table 1 below that there are significant differences in income by gender and by marital status, but that there is also significant interaction present. This is a large data set with over 4000 observations, of which 2913 were used in this model, so the significance is not surprising. However, the interaction plot provided in Figure 1 illustrates the importance of the interaction that is present. It is most interesting to note that in most cases males earn significantly more than females, but that the difference is much smaller among those who were either divorced or never married. Similar results could be obtained by running a multiple regression; however, since there are 5 levels of marital status, we would need to create 4 dummy variables to obtain a result equivalent to the ANOVA.

Tests of Between-Subjects Effects

Dependent Variable: rincome

| Source | Type III Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Corrected Model | 1307.389 (a) | 9 | 145.265 | 18.844 | .000 |
| Intercept | 90701.925 | 1 | 90701.925 | 11766.148 | .000 |
| sex | 156.538 | 1 | 156.538 | 20.307 | .000 |
| marital | 587.855 | 4 | 146.964 | 19.065 | .000 |
| sex * marital | 163.714 | 4 | 40.929 | 5.309 | .000 |
| Error | 22378.410 | 2903 | 7.709 | | |
| Total | 346621.000 | 2913 | | | |
| Corrected Total | 23685.799 | 2912 | | | |

a. R Squared = .055 (Adjusted R Squared = .052)

Table 1: SPSS Output of ANOVA Table of Income by Gender by Marital Status

FACTOR ANALYSIS VERSUS STEP-WISE REGRESSION

When running a regression with many independent variables, step-wise regression or best subsets regression is often used to reduce the dimensionality of the problem and take care of any correlation issues. When there are many variables, multicollinearity is often a concern, but even if there is no serious multicollinearity present, the more independent variables in the problem, the more complicated the model becomes.

We have been told by some of our colleagues who teach econometrics that they do not like step-wise regression because the procedure involves a mathematical "black box": it leaves out variables by an unseen mathematical procedure which compares correlations between variables and omits variables that may be highly correlated with variables that are kept in the model. This procedure may therefore drop variables that the economist considers the most important. Indeed, it is typically the coefficient of a variable and its significance in the model that is of interest to the economist, who may therefore not be interested in the most parsimonious model.

However, since the interpretation of the coefficients may become meaningless in the presence of multicollinearity, it is important to omit some highly correlated variables from a regression model. Also, if the number of independent variables in a model is large, one would need large data sets for future predictions using the model. Therefore, it is often useful to reduce the number of independent variables used when obtaining a regression model.
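
One common way to quantify how strongly two predictors overlap is the variance inflation factor (VIF), a standard diagnostic that is not reported in this paper. The sketch below is a minimal illustration for the two-predictor case, where the VIF reduces to 1 / (1 - r²); the age and mileage figures are made up for the example.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def vif_two_predictors(x1, x2):
    """VIF shared by both predictors in a two-predictor model: 1 / (1 - r^2)."""
    r = pearson_r(x1, x2)
    return 1.0 / (1.0 - r * r)

# Hypothetical data: car age in months and odometer reading in thousands of km.
age = [10, 20, 30, 40, 50]
km = [12, 25, 31, 38, 54]

r = pearson_r(age, km)
vif = vif_two_predictors(age, km)
print(f"r = {r:.3f}, VIF = {vif:.1f}")
```

A VIF far above 1 means the variance of each estimated coefficient is inflated by the overlap between the predictors, which is the situation in which the coefficients become hard to interpret.
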
Although step-wise regression reduces the number of independent variables, factor analysis, either exploratory or confirmatory, can be applied to the set of independent variables instead in order to avoid the mathematical elimination of key variables. This reduces the number of variables and thus removes the multicollinearity prior to running the multiple regression. One of the major advantages of this approach is that the researcher can choose which variables to keep.

[Figure 1: SPSS Output of Interaction Plot of Income by Gender by Marital Status. Estimated marginal means of respondents' income (vertical axis) by marital status (horizontal axis), with separate lines for males and females.]

For illustration purposes here, we use a data set consisting of sales information for various models of Toyota Corollas sold in the Netherlands. This data set contains the selling price, which will be used as the dependent variable, and many possible independent variables from which to select, including both quantitative and qualitative variables. We have chosen to use 19 independent variables: Age, KM (odometer reading), HP (horsepower), Automatic Transmission (yes, no), CC (engine size), Doors (3, 4, 5), Gears (5 or 6), Weight, Mfr_Guarantee (yes, no), ABS (anti-lock brakes; yes, no), Airbag (yes, no), Air-conditioning (yes, no), CD Player (yes, no), Central Locking System (yes, no), Powered_Windows (yes, no), Power-Steering (yes, no), Radio (yes, no), Sport-Model (yes, no), and Metallic-Rims (yes, no).

We first ran a step-wise regression using SPSS and obtained the results presented in Table 2. It should be noted that of the 19 candidate variables, 11 are in the final model. Thus, the step-wise regression does reduce the number of variables, but it does not necessarily include the variables that might be most important to the researcher. We have followed up this analysis with a factor analysis of the same data, again using SPSS.
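
The idea of condensing correlated predictors into a component can be sketched in a few lines. The example below is only an illustration under stated assumptions: it extracts the first principal component of a correlation matrix by power iteration, it omits the varimax rotation and the remaining factors, and the three columns of car data are made up. It is not the SPSS procedure used for the analysis.

```python
from math import sqrt

def correlation_matrix(columns):
    """Pearson correlation matrix for a list of equal-length numeric columns."""
    n = len(columns[0])
    scaled = []
    for col in columns:
        mean = sum(col) / n
        dev = [v - mean for v in col]
        norm = sqrt(sum(d * d for d in dev))
        scaled.append([d / norm for d in dev])
    return [[sum(a * b for a, b in zip(ci, cj)) for cj in scaled] for ci in scaled]

def first_component(matrix, iterations=500):
    """Leading eigenvalue and unit eigenvector of a symmetric matrix (power iteration)."""
    k = len(matrix)
    # Uneven start vector, so it is unlikely to be orthogonal to the leading eigenvector.
    vec = [1.0 / (i + 1) for i in range(k)]
    for _ in range(iterations):
        nxt = [sum(matrix[i][j] * vec[j] for j in range(k)) for i in range(k)]
        length = sqrt(sum(v * v for v in nxt))
        vec = [v / length for v in nxt]
    eigenvalue = sum(vec[i] * matrix[i][j] * vec[j] for i in range(k) for j in range(k))
    return eigenvalue, vec

# Hypothetical values for three predictors (age, mileage, horsepower).
age = [10, 20, 30, 40, 50]
km = [12, 25, 31, 38, 54]
hp = [110, 90, 100, 95, 105]

corr = correlation_matrix([age, km, hp])
eigenvalue, loadings = first_component(corr)
share = eigenvalue / len(corr)
print(f"first component explains {share:.0%} of the standardized variance")
```

Because age and mileage are highly correlated in this toy data, a single component carries most of their joint information, which is the sense in which factor analysis reduces dimensionality before a regression is run.
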
Principal component factor analysis with varimax rotation converged in 8 iterations and resulted in 7 factors, which are presented in Table 3. The factors seem to have some relevance to certain features in which a car buyer might be interested. One could follow up this factor analysis with a predictive regression model that has 7 independent variables, using either a representative variable from each group or a factor score for each of the factors.

| Model | Unstandardized B | Unstandardized Std. Error | Standardized Beta | t | Sig. |
|---|---|---|---|---|---|
| (Constant) | -2567.092 | 948.829 | | -2.706 | .007 |
| Age_08_04 | -120.735 | 3.005 | -.619 | -40.172 | .000 |
| Weight | 17.252 | .793 | .250 | 21.742 | .000 |
| KM | -.019 | .001 | -.194 | -15.823 | .000 |
| HP | 25.208 | 2.551 | .104 | 9.881 | .000 |
| Powered_Windows | 428.583 | 77.185 | .059 | 5.553 | .000 |
| Sport_Model | 470.737 | 77.027 | .060 | 6.111 | .000 |
| ABS | -418.445 | 99.545 | -.045 | -4.204 | .000 |
| Mfr_Guarantee | 266.720 | 72.297 | .036 | 3.689 | .000 |
| Metallic_Rim | 260.385 | 90.644 | .029 | 2.873 | .004 |
| CD_Player | 244.738 | 96.980 | .028 | 2.524 | .012 |
| Automatic | 341.568 | 152.095 | .022 | 2.246 | .025 |

Dependent Variable: Price

Table 2: SPSS Output: Final Table – Step-wise Regression

| Factor | Description | Variables |
|---|---|---|
| Factor 1 | Age-Related Features | Age, KM (mileage), Mfr_Guarantee |
| Factor 2 | Luxuries | Air-conditioning, Central_Lock, Powered_Windows, Metallic_Rims, CD_Player |
| Factor 3 | Power-Related Features | CC, HP, Weight |
| Factor 4 | Sport Enhanced Features | Doors, Gears, Metallic_RI |
| Factor 5 | Safety Features | Airbag, Powered_Steering, ABS (anti-lock braking system) |
| Factor 6 | Radio | Radio |
| Factor 7 | Automatic Transmission | Automatic Transmission |

Table 3: Factor Analysis – Toyota Car Data

NEURAL NETS AND DECISION TREES VERSUS REGRESSION

With large data sets, data mining techniques such as neural nets and decision trees may be used as an alternative to regression for prediction of a single variable in terms of one or more other variables. Although the dependent variable in these techniques could be continuous, quantitative variables are typically coded into categorical variables.
Since this involves giving up some of the precision of the data, traditional multiple regression is probably the best choice when the dependent variable is purely continuous. Data mining models are a good alternative to logistic regression, which is the traditional method for prediction with a categorical dependent variable.

The use of data mining techniques is appropriate for large data sets. With large data sets, one does not worry about p-values because most variables will be statistically significant. Instead, one focuses on classification accuracy. Therefore, these techniques are good alternatives when one is concerned with prediction and not with the relationship between the dependent variable and the various independent variables.

These techniques also deal better with missing values. When running a regression, one might impute missing values by using the mean for a quantitative variable and the mode for a qualitative variable. The data mining techniques, especially the trees, were designed to deal with missing values, and they often obtain much better classification results than are obtained with a traditional logistic regression. We found this to be true in a previous paper [2], which compared the results of traditional logistic regression and data mining techniques on a large data set dealing with the prediction of success of college students.

The simplest neural net is equivalent to running a linear regression. As the network becomes more complex with hidden nodes, allowing for a better fit, it may actually be equivalent to a non-linear regression. One drawback of the neural net is that one cannot interpret the coefficients, which would be a problem for the economist, who is interested in how the independent variables influence the dependent variable. This model is, however, much better for classification accuracy. In fact, when running a neural net, one typically withholds a part of the sample, which is later used for validation.
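
The mean and mode imputation mentioned above for regression can be sketched as follows. This is a minimal illustration, not the procedure of any particular package; the helper name `impute`, the use of `None` to mark a missing value, and the sample values are all assumptions made for the example.

```python
from statistics import mean, mode

def impute(values, kind):
    """Fill None entries with the mean (quantitative) or mode (qualitative)."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("no observed values to impute from")
    if kind == "quantitative":
        fill = mean(observed)
    elif kind == "qualitative":
        fill = mode(observed)
    else:
        raise ValueError(f"unknown kind: {kind!r}")
    return [fill if v is None else v for v in values]

# Hypothetical customer records with missing entries marked as None.
ages = [34, None, 51, 45, None, 30]
regions = ["north", "south", None, "south", "east", "south"]

ages_filled = impute(ages, "quantitative")
regions_filled = impute(regions, "qualitative")
print(ages_filled)
print(regions_filled)
```

Every missing age receives the same value, so imputation of this kind shrinks the variability of the variable; this is one reason methods that handle missing values directly can classify better.
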
Decision trees are very popular. The results are presented as trees, which are visually attractive and easy to interpret and explain. CART (classification and regression trees) covers both cases: classification trees for a categorical dependent variable and regression trees for a quantitative one. The output of the decision tree is a diagram of a tree with nodes and branches, together with rules for splitting the data at each of the nodes. There are different methods for splitting the data, and the splitting can be done automatically or interactively, with the analyst deciding where each split should occur. Since the trees can grow very large, they are typically pruned back by the use of the withheld validation sample.

Here we use a data set from SAS with missing values to predict a qualitative variable with logistic regression, a neural net and a decision tree. We compare the results based on ease of interpretation and accuracy of classification. This example relates to a supermarket that is trying to determine which of its loyalty program customers are likely to purchase organic products. The data set contains over 22,000 observations and several variables. The dependent variable is a binary variable indicating whether or not the customer purchases organic products. The independent variables include customer ID, age, gender, affluence grade, type of neighborhood, geographic region, loyalty status, total amount spent, and time as a loyalty card member. The output from the decision tree is presented in Figure 2.

Figure 2: Classification Tree: Organic Product Purchasers

The tree has been pruned in order to obtain a parsimonious model. It is approximately 80% accurate and predicts organic purchasing based on two predictor variables, age and affluence. One can see that the younger and more affluent shoppers are more likely to purchase organic products.

A comparison of the classification accuracies for the three models is presented in Figure 3.
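
The rule at a single node of a classification tree can be illustrated with a one-level tree. The sketch below assumes the Gini impurity as the splitting criterion, which is only one of the available methods and is not stated to be the one used for Figure 2; the ages and purchase indicators are made up.

```python
def gini(labels):
    """Gini impurity of a list of 0/1 labels."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2.0 * p * (1.0 - p)

def best_split(values, labels):
    """Return (threshold, weighted impurity) for the best rule 'value < threshold'."""
    pairs = sorted(zip(values, labels))
    n = len(pairs)
    best = (None, gini(labels))  # no split unless it lowers the impurity
    for i in range(1, n):
        if pairs[i][0] == pairs[i - 1][0]:
            continue  # cannot split between identical values
        threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [lab for _, lab in pairs[:i]]
        right = [lab for _, lab in pairs[i:]]
        score = (len(left) * gini(left) + len(right) * gini(right)) / n
        if score < best[1]:
            best = (threshold, score)
    return best

# Hypothetical customers: age and whether they bought organic products (1 = yes).
ages = [22, 25, 31, 38, 44, 52, 60, 67]
buys = [1, 1, 1, 1, 0, 1, 0, 0]

threshold, impurity = best_split(ages, buys)
print(f"split at age < {threshold}, weighted Gini = {impurity:.4f}")
```

A full tree repeats this search within each resulting group and over every candidate variable, and pruning then removes the splits that do not hold up on the withheld validation sample.
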
The tree has a slightly higher misclassification rate of 20.6%, as compared to the regression and neural net procedures, which have misclassification rates of 18.6% and 18.7% respectively. However, this is the result of the extensive pruning of the tree. Before pruning, the tree was as accurate as the other models, and even with severe pruning it is still remarkably accurate and very interpretable. It therefore reduces the problem to the consideration of the most important independent variables.

Figure 3: Comparison of Model Classification Accuracies

CONCLUSION

Alternative methods for predictive modeling provide students with different approaches to data analysis. Traditional methods such as simple and multiple regression for quantitative dependent variables and logistic regression for qualitative dependent variables may be adequate for many situations. However, with an abundance of independent variables and with very large data sets, non-traditional methods may be useful. Principal component factor analysis is useful for reducing the dimensionality prior to performing a regression analysis. Data mining approaches such as neural nets and decision trees provide accuracy in prediction with large data sets. The decision tree provides an easily readable and attractive output and is also adept at handling missing values. We recommend that these alternative methods be considered as part of a tool set for applied statistical analysis.

REFERENCES

[1] Davis, J. A. and Smith, T. W. General Social Surveys, 1972-2004. Chicago: National Opinion Research Center.

[2] Olinsky, A., Schumacher, P., Smith, R. and Quinn, R. "A Comparison of Logistic Regression, Neural Networks, and Classification Trees in a Study to Predict Success of Actuarial Students." Proceedings of the Northeast Decision Sciences Institute, Baltimore, Maryland, March 2007.