* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download Experimental framework

Computer network wikipedia , lookup

TCP congestion control wikipedia , lookup

Airborne Networking wikipedia , lookup

Multiprotocol Label Switching wikipedia , lookup

Asynchronous Transfer Mode wikipedia , lookup

Network tap wikipedia , lookup

Distributed firewall wikipedia , lookup

Cracking of wireless networks wikipedia , lookup

Wake-on-LAN wikipedia , lookup

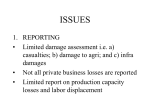

A Simulative Study Of Distributed Speech Recognition Over Internet Protocol Networks Daniele Quercia [email protected] MS Thesis Defense December 6, 2001 University of Illinois at Chicago Politecnico di Torino Outline Distributed Speech Recognition: Our Focus Experimental framework Experimental results Conclusions: the impact of packet losses 2 Introduction Internet has proliferated rapidly Strong interest in transporting voice over IP networks Novel Internet applications can benefit from Automatic Speech Recognition (ASR) 3 Introduction (cont’d) Desire for speech input for handheld devices (mobile phones, PDA’s, etc.) Speech recognition requires high computation, RAM, and disk resources If hand-held devices connected to a network, the speech recognition can take place remotely (e.g. ETSI Aurora Project) 4 Distributed Speech Recognition Architecture Speech recognition task distributed between two end systems: client side (light-weight) server side Client Side IP Networ k Server Side 5 Distributed Speech Recognition Architecture (cont’d) Front-end Client Device Packing and Framing IP Network Recognizer Unpacking Remote site 6 Technical challenges IP networks are not designed for transmitting real-time traffic Lack of guarantees in terms of packet losses, network delay, and delay jitter 7 Technical challenges (cont’d) Speech packets must be received: without significant losses with low delay with small delay variation (jitter) The design of Distributed Speech Recognition systems must consider the effect of packet losses 8 Our Focus Performance evaluation of a Distributed Speech Recognition (DSR) system operating over simulated IP networks 9 Our Research Contributions Evaluation of a standard front-end that achieves state-of-the-art performance Simulative study of DSR under increasingly more realist scenarios: Random losses Gilbert-model losses Network simulations 10 Experimental framework Front-end Recognizer Speech Database Network scenarios 11 Experimental framework Results Summary & Conclusions Front-end Front-end extracts from the speech signal significant information for recognition Spectral Envelope 12 Front-end (cont’d) Experimental framework Results Summary & Conclusions ETSI Aurora Standard Front-end produces 14 coefficients Front-end based on mel coefficients Mel coefficients represent the short-time spectral envelope Frequency axis is warped closer to perceptual axis 13 Recognizer Experimental framework Results Summary & Conclusions HMM-based speech recognizer HMM model consists of: -states qi 16 states per word -transition probabilities aij -initial state distribution pi -emission distribution bi(o) 3 Gaussian mixtures per state 14 Experimental framework Results Summary & Conclusions Recognizer (cont’d) TRAINING PHASE Word 1 Word 2 M 1 M Word 3 2 M 3 Training examples Estimated Models RECOGNITION PHASE Unknown observation sequence O P(O|M 1) P(O|M 3) P(O|M 2) Max Probability chosen Evaluated probabilities 15 Speech Database Experimental framework Results Summary & Conclusions ETSI Aurora TIdigits Database 2.0 For training, 8440 utterances selected For test, 4004 utterances selected without noise added 16 Network scenarios Experimental framework Results Summary & Conclusions 3 network scenarios was considered: Random losses Gilbert-model losses Network simulations 1 frame per packet 17 Random losses Experimental framework Results Summary & Conclusions Each packet has the same loss probability Packet loss ratios: 10% - 40% 18 Experimental framework Results Summary & Conclusions Random losses (cont’d) Generally, packet losses appear in burst When the net is congested ... packet loss t High Probability(packet loss) !! t+d time Random losses does not model the temporal dependencies of loss 19 Experimental framework Results Summary & Conclusions Gilbert-model losses 2-state Markov model: p = P(next packet lost | previous packet arrived) q = P(next packet arrived | previous packet lost) p 1-p STATE 1 no loss STATE 2 loss 1-q q 20 Gilbert-model losses (cont’d) Experimental framework Results Summary & Conclusions 2-state Markov model is less accurate than a nth order Markov model, but (accuracy vs. complexity) is better. Documented in the literature: “Gilbert model is a suitable loss model” Simulated Packet loss ratios: 10% - 40% 21 Network simulations Experimental framework Results Summary & Conclusions Previous models are mathematically simple Network simulations represent more realistic IP scenarios 22 Network simulations (cont’d) Experimental framework Results Summary & Conclusions VINT Simulation Environment was used Components: NS-2 (network simulator version 2) NAM (network animator) NS package allows extension by user 23 Network simulations (cont’d) Experimental framework Results Summary & Conclusions NS simulator receives a scenario as input produces trace files Network Simulator 24 Network simulations (cont’d) Experimental framework Results Summary & Conclusions Our analysis: Scenario in which the users are speaking, while interfering FTP traffic is going on Speech sources 1 ms 3 ms Speech receivers 64 kb/s FTP sources FTP receivers 25 Network simulations (cont’d) Experimental framework Results Summary & Conclusions Playout Buffer required to deal with delay variations Sender Time IP net Receiver Buffer Network delay Time Buffer size Time 26 Network simulations (cont’d) Experimental framework Results Summary & Conclusions Characteristics of the scenario: Speech traffic uses RTP protocol with header compression (8-bytes long packet) Round-trip time: 10 ms Playout buffer size: 100 ms Competing traffic: on/off TCP sources Simulation: 350 s Simulated Packet loss ratios: 5% - 20% 27 Performance measures Experimental framework Results Summary & Conclusions Word Accuracy is a good measure of performance Word Accuracy of the baseline system (no errors): 99% 28 Performance measures (cont’d) Experimental framework Results Summary & Conclusions Three kinds of errors: Reference: I want to go to Venezia Recognized: - want to go to the Verona D=Deletion I=Insertion Word Accuracy=100 ( 1_ S=Substitution S+D+I #spoken words )% 29 Packet loss concealment Experimental framework Results Summary & Conclusions Error concealment technique for packet losses When packet losses occur, the missing packets replaced by interpolation 30 Results for random losses Experimental framework Results Summary & Conclusions For Packet Loss Ratio =10% and 20%, predominantly single packet losses occur Overall, 94% of burst lengths < 5 packet.s 31 Experimental framework Results Summary & Conclusions Results for random losses As Packet Loss Ratio increases performance deteriorates Word Accuracy (%) Recognition performance with random losses 100 95 90 85 80 75 without error concealment 10 20 with error concealment 30 Recovery from 83% to 99% 40 Packet loss ratio 32 Results for Gilbert-model losses Experimental framework Results Summary & Conclusions For Packet Loss Ratio=10% and 20%, predominantly single packet losses occur For Packet Loss Ratio=30% and 40%, burst lengths < 6 packets 33 Results for Gilbert-model losses Experimental framework Results Summary & Conclusions With Packet Loss Ratio=40%, Average loss burst length: 4 packets Recognition performance for Gilbertmodel losses Word Accuracy (%) without Error Concealment with Error Concealment 100 95 90 Recovery from 80% to 98% 85 80 75 10 20 30 Packet loss ratio 40 34 Results for network simulations Experimental framework Results Summary & Conclusions Average burst length: 45 packets. Why? TCP packets are much larger than speech ones: when speech packets get delayed in the queues, they may reach the receiver too late 35 Results for network simulations Experimental framework Results Summary & Conclusions Loss burst lengths are very large Recognition performance for network simulations Word Accuracy(%) without Error Concealment With long loss bursts, nothing can be done 100 90 80 70 60 50 10 20 30 Packet loss ratio 40 36 Summary and Conclusions Experimental framework Results Summary & Conclusions We have analyzed the impact of packet losses on a DSR system over IP networks using the ETSI Aurora database Packet losses were modeled by: random losses Gilbert-model losses network simulations 37 Summary and Conclusions (cont’d) Experimental framework Results Summary & Conclusions Expected recognition performance from length of burst losses Small burst length losses: good recognition results Large burst length losses: degraded recognition results 38 Summary and Conclusions (cont’d) Experimental framework Results Summary & Conclusions Single packet losses and short bursts can be tolerated Bursty packet losses lead to large performance degradation Error concealment technique provides good results if the error bursts are short (4-5 packets) 39 Submission Submitted to the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), 2002 40