Download csci5211: Computer Networks and Data Communications

A Quick Primer on

CDN & Multimedia Networking

• Web Caching and CDN

• Multimedia vs. (conventional) Data Applications

– analog “continuous” media: encoding, decoding & playback

– service requirements

• Classifying multimedia applications

– Streaming (stored) multimedia

– Live multimedia broadcasting

– Interactive multimedia applications

• Making the best of best-effort service: streaming stored multimedia over the “best-effort” Internet (optional)

– client buffering, rate adaptation, etc.

• Large-scale video delivery over the Internet: YouTube

and Netflix case studies (optional)

Required Readings:

csci4221 Textbook, sections 2.2.5, 2.6, 9.1-9.2; optional 9.4-9.6

1

Web Performance:

impact of bottleneck link

assumptions:

avg object size: 100K bits

avg request rate from browsers to

origin servers:15/sec

avg data rate to browsers: 1.50 Mbps

RTT from gateway router to any

origin server: 2 sec

access link rate: 1.54 Mbps

consequences:

LAN utilization: 1.5% (100 Mbps LAN) or 0.15% (1 Gbps LAN)

access link utilization = 97% (problem!)

total delay = Internet delay + access

delay + LAN delay

= 2 sec + minutes + usecs

[Figure: origin servers on the public Internet, reached from the home or institutional network (100 Mbps or 1 Gbps LAN) over a 1.54 Mbps access link]
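The utilization figures on this slide follow from the stated assumptions: offered load is request rate times object size, and utilization is offered load divided by link rate. A minimal sketch of that arithmetic (the function and constant names are illustrative, not from the slides):

```python
def utilization(requests_per_s: float, object_bits: float, link_bps: float) -> float:
    """Fraction of a link's capacity consumed by the offered traffic."""
    return requests_per_s * object_bits / link_bps

OBJECT_BITS = 100_000       # avg object size: 100K bits
REQUESTS_PER_S = 15         # avg request rate from browsers
ACCESS_LINK_BPS = 1.54e6    # 1.54 Mbps access link
LAN_BPS = 100e6             # 100 Mbps LAN (the slower of the two LAN options)

access_util = utilization(REQUESTS_PER_S, OBJECT_BITS, ACCESS_LINK_BPS)
lan_util = utilization(REQUESTS_PER_S, OBJECT_BITS, LAN_BPS)

print(f"access link utilization: {access_util:.1%}")
print(f"LAN utilization:         {lan_util:.1%}")
```

With the access link this close to saturation, queueing delay on that link dominates, which is why the access delay term is on the order of minutes while the LAN term stays negligible.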

2

Web performance: fatter access link

assumptions:

avg object size: 100K bits

avg request rate from browsers to origin

servers:15/sec

avg data rate to browsers: 1.50 Mbps

RTT from institutional router to any

origin server: 2 sec

access link rate: 154 Mbps (upgraded from 1.54 Mbps)

consequences:

LAN utilization: 1.5% (100 Mbps LAN) or 0.15% (1 Gbps LAN)

access link utilization = about 1% (down from 97%)

total delay = Internet delay + access delay + LAN delay

= 2 sec + msecs + usecs (access delay drops from minutes to msecs)

[Figure: origin servers on the public Internet, reached from the home or institutional network (100 Mbps or 1 Gbps LAN) over the access link, upgraded from 1.54 Mbps to 154 Mbps]

Cost: increased access link speed (not cheap!)

3

Improving Web Performance

via (local) browser cache

assumptions:

avg object size: 100K bits

avg request rate from browsers to origin

servers:15/sec

avg data rate to browsers: 1.50 Mbps

RTT from gateway router to any origin

server: 2 sec

access link rate: 1.54 Mbps

consequences:

LAN utilization: 1.5% (100 Mbps LAN) or 0.15% (1 Gbps LAN)

access link utilization = 97% (problem!)

total delay = Internet delay + access delay + LAN delay

= 2 sec + minutes + usecs

[Figure: origin servers on the public Internet, reached from the home or institutional network (100 Mbps or 1 Gbps LAN) over a 1.54 Mbps access link]

(local) browser cache: when and how much can it help?

4

Conditional GET

• Goal: don’t send object if cache has up-to-date cached version

– no object transmission delay

– lower link utilization

• cache: specify date of cached copy in HTTP request

If-modified-since: <date>

• server: response contains no object if cached copy is up-to-date:

HTTP/1.0 304 Not Modified

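[Figure: two client-server exchanges]

Case 1, object not modified since <date>:

client to server: HTTP request msg, If-modified-since: <date>

server to client: HTTP response, HTTP/1.0 304 Not Modified (no object)

Case 2, object modified after <date>:

client to server: HTTP request msg, If-modified-since: <date>

server to client: HTTP response, HTTP/1.0 200 OK, <data>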
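The conditional GET exchange can be sketched without any network access: one function builds the request a cache would send, and another mimics the server's decision. Both function names and the sample path are hypothetical, and the "server" here is only a stand-in for illustration.

```python
from datetime import datetime, timezone
from email.utils import format_datetime, parsedate_to_datetime

def build_conditional_get(path: str, cached_at: datetime) -> str:
    """Request sent by the cache, carrying the date of its cached copy."""
    stamp = format_datetime(cached_at, usegmt=True)  # HTTP-style date string
    return f"GET {path} HTTP/1.0\r\nIf-Modified-Since: {stamp}\r\n\r\n"

def respond(request: str, last_modified: datetime, body: str) -> str:
    """Toy server: omit the object if the cached copy is still up to date."""
    for line in request.split("\r\n"):
        name, _, value = line.partition(":")
        if name.lower() == "if-modified-since":
            if last_modified <= parsedate_to_datetime(value.strip()):
                return "HTTP/1.0 304 Not Modified\r\n\r\n"
    return "HTTP/1.0 200 OK\r\n\r\n" + body

cached_at = datetime(2024, 1, 10, tzinfo=timezone.utc)
request = build_conditional_get("/index.html", cached_at)
unchanged = respond(request, datetime(2024, 1, 5, tzinfo=timezone.utc), "<data>")
changed = respond(request, datetime(2024, 1, 12, tzinfo=timezone.utc), "<data>")
print(unchanged.splitlines()[0])
print(changed.splitlines()[0])
```

The 304 response carries no body, which is where the savings in transmission delay and link utilization come from.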

5

Web caches (proxy server)

goal: satisfy client request without involving origin server

• user sets browser: Web accesses via cache

• browser sends all HTTP requests to cache

– object in cache: cache returns object

– else cache requests object from origin server, then returns object to client

[Figure: two clients send requests to a proxy server, which contacts two origin servers as needed]
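The proxy behaviour described on this slide (return the object on a hit, otherwise fetch from the origin, store, and return) fits in a few lines. This is a minimal in-memory sketch; the `WebCache` class and the `fetch_from_origin` callback are hypothetical names, and a real cache would also handle expiry and validation.

```python
from typing import Callable, Dict

class WebCache:
    """Toy proxy cache: acts as a server to clients and a client to origins."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes]) -> None:
        self._fetch = fetch_from_origin
        self._store: Dict[str, bytes] = {}
        self.hits = 0
        self.misses = 0

    def get(self, url: str) -> bytes:
        if url in self._store:          # object in cache: return it directly
            self.hits += 1
            return self._store[url]
        self.misses += 1                # else ask the origin, then keep a copy
        obj = self._fetch(url)
        self._store[url] = obj
        return obj

origin_requests = []

def fake_origin(url: str) -> bytes:
    origin_requests.append(url)
    return b"object for " + url.encode()

cache = WebCache(fake_origin)
first = cache.get("http://example.org/a")
second = cache.get("http://example.org/a")
print(cache.hits, cache.misses, len(origin_requests))
```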

6

More about Web caching

• cache acts as both

client and server

– server for original

requesting client

– client to origin server

• typically cache is

installed by ISP

(university, company,

residential ISP)

why Web caching?

• reduce response time for

client request

• reduce traffic on an

institution’s access link

• Internet dense with caches:

enables “poor” content

providers to effectively

deliver content (so too does

P2P file sharing)

7

Caching example: install local cache

assumptions:

avg object size: 100K bits

avg request rate from browsers to origin

servers:15/sec

avg data rate to browsers: 1.50 Mbps

RTT from institutional router to any

origin server: 2 sec

access link rate: 1.54 Mbps

consequences:

LAN utilization: 0.15% (1 Gbps LAN)

access link utilization = ?

total delay = ?

How to compute link utilization, delay?

Cost: web cache (cheap!)

[Figure: institutional network (1 Gbps LAN) with a local web cache, connected to origin servers on the public Internet over the 1.54 Mbps access link]

8

Caching example: install local cache

Calculating access link utilization, delay with cache:

• suppose cache hit rate is 0.4

– 40% requests satisfied at cache, 60% requests satisfied at origin

access link utilization:

– 60% of requests use access link

– data rate to browsers over access link = 0.6 * 1.50 Mbps = 0.9 Mbps

– utilization = 0.9/1.54 = 0.58

total delay

= 0.6 * (delay from origin servers) + 0.4 * (delay when satisfied at cache)

= 0.6 (2.01) + 0.4 (~msecs) = ~1.2 secs

less than with 154 Mbps link (and cheaper too!)

[Figure: institutional network (1 Gbps LAN) with a local web cache, connected to origin servers on the public Internet over the 1.54 Mbps access link]

All good! Potential issues?
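The hit-rate arithmetic on this slide can be reproduced directly. The sketch below takes the 2.01 s origin delay from the slide and assumes 10 ms for a cache hit, since the slide only says "~msecs"; the function name is illustrative.

```python
def with_cache(hit_rate, offered_bps, link_bps, origin_delay_s, cache_delay_s):
    """Access link utilization and average delay once a local cache is installed."""
    miss_rate = 1.0 - hit_rate
    link_utilization = miss_rate * offered_bps / link_bps   # only misses cross the link
    avg_delay_s = miss_rate * origin_delay_s + hit_rate * cache_delay_s
    return link_utilization, avg_delay_s

util, delay = with_cache(
    hit_rate=0.4,
    offered_bps=1.50e6,     # avg data rate to browsers
    link_bps=1.54e6,        # access link rate
    origin_delay_s=2.01,    # delay when served by an origin server
    cache_delay_s=0.010,    # assumed value for "~msecs"
)
print(f"utilization = {util:.2f}, total delay = {delay:.2f} s")
```

Raising `hit_rate` shows how quickly both numbers improve, which is the argument for the cache being cheaper than a faster access link.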

CSci4211:

Application Layer

9

Dealing with (Internet) Scale : CDNs

Challenges: one single “mega-server” can’t possibly handle all requests for popular service

• not enough bandwidth: Netflix video streaming at 2 Mbps per connection

– only 5000 connections over fastest possible (10 Gbps) connection to Internet at one server

– 30 million Netflix customers

• too far from some users: halfway around the globe to someone

• reliability: single point of failure

A single server doesn’t “scale”
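The "only 5000 connections" figure is a one-line division; writing it out makes the gap to the customer base explicit. The constants are the slide's numbers, and the variable names are illustrative.

```python
LINK_BPS = 10e9           # fastest possible (10 Gbps) connection at one server
STREAM_BPS = 2e6          # 2 Mbps per Netflix connection
CUSTOMERS = 30_000_000    # 30 million customers

max_streams = int(LINK_BPS // STREAM_BPS)
share_served = max_streams / CUSTOMERS

print(max_streams)
print(f"{share_served:.4%} of customers could stream at once")
```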

10

Content distribution networks

• challenge: how to stream content (selected

from millions of videos) to hundreds of

thousands of simultaneous users?

• option 1: single, large “mega-server”

– single point of failure

– point of network congestion

– long path to distant clients

– multiple copies of video sent over outgoing link

…quite simply: this solution doesn’t scale

11

Content distribution networks

• challenge: how to stream content (selected from

millions of videos) to hundreds of thousands of

simultaneous users?

• option 2: store/serve multiple copies of videos at

multiple geographically distributed sites (CDN)

– enter deep: push CDN servers deep into many access

networks

• close to users

• used by Akamai, 1700 locations

– bring home: smaller number (10’s) of larger clusters in

POPs near (but not within) access networks

• used by Limelight

12

CDN: “simple” content access scenario

Bob (client) requests video http://netcinema.com/6Y7B23V

video stored in CDN at http://KingCDN.com/NetC6y&B23V

1. Bob gets URL for video http://netcinema.com/6Y7B23V from netcinema.com web page

2. resolve http://netcinema.com/6Y7B23V via Bob’s local DNS

3. netcinema’s authoritative DNS returns URL http://KingCDN.com/NetC6y&B23V

4 & 5. resolve http://KingCDN.com/NetC6y&B23V via KingCDN’s authoritative DNS, which returns IP address of KingCDN server with video

6. request video from KingCDN server, streamed via HTTP

[Figure: Bob, his local DNS, netcinema.com with netcinema’s authoritative DNS, and KingCDN.com with the KingCDN authoritative DNS; arrows numbered 1 to 6 match the steps above]

13

CDN cluster selection strategy

• challenge: how does CDN DNS select

“good” CDN node to stream to client

– pick CDN node geographically closest to client

– pick CDN node with shortest delay (or min # hops) to

client (CDN nodes periodically ping access ISPs,

reporting results to CDN DNS)

• alternative: let client decide - give client a

list of several CDN servers

– client pings servers, picks “best”
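Both selection strategies reduce to taking a minimum over candidate nodes, either by geographic distance or by measured delay. The sketch below assumes the CDN already knows node coordinates and has ping results on hand; the node names, coordinates, and delay values are made up for illustration.

```python
import math
from typing import Dict, Tuple

LatLon = Tuple[float, float]

def haversine_km(a: LatLon, b: LatLon) -> float:
    """Great-circle distance between two (latitude, longitude) points, in km."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))

def closest_node(client: LatLon, nodes: Dict[str, LatLon]) -> str:
    """Strategy 1: pick the geographically closest CDN node."""
    return min(nodes, key=lambda name: haversine_km(client, nodes[name]))

def fastest_node(delay_ms: Dict[str, float]) -> str:
    """Strategy 2: pick the node with the shortest measured delay."""
    return min(delay_ms, key=delay_ms.get)

nodes = {"chicago": (41.88, -87.63), "san_jose": (37.34, -121.89)}
client = (44.98, -93.27)   # hypothetical client location
print(closest_node(client, nodes))
print(fastest_node({"chicago": 48.0, "san_jose": 35.0}))
```

The two strategies can disagree, as in the sample data: the nearest node is not always the one with the lowest delay, which is one reason delay measurements are collected at all.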

3-14

Akamai CDN: quickie

• pioneered creation of CDNs circa 2000

• now: 61,000 servers in 1,000 networks in

70 countries

• delivers est 15-20% of all Internet traffic

• runs its own DNS service (alternative to

public root, TLD, hierarchy)

• hundreds of billions of Internet

interactions daily

• more shortly….

3-15

Multimedia and Quality of Service

Multimedia applications:

network audio and video

(“continuous media”)

QoS

network provides

application with level of

performance needed for

application to function.

16

Digital Audio

• Sampling the analog signal

– Sample at some fixed rate

– Each sample is an arbitrary real number

• Quantizing each sample

– Round each sample to one of a finite number of values

– Represent each sample in a fixed number of bits

4 bit representation

(values 0-15)

17

Audio Examples

• Speech

– Sampling rate: 8000 samples/second

– Sample size: 8 bits per sample

– Rate: 64 kbps

• Compact Disc (CD)

– Sampling rate: 44,100 samples/second

– Sample size: 16 bits per sample

– Rate: 705.6 kbps for mono,

1.411 Mbps for stereo
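Each rate above is sampling rate times bits per sample times number of channels. A small check of the slide's numbers (the function name is illustrative):

```python
def pcm_bit_rate(samples_per_s: int, bits_per_sample: int, channels: int = 1) -> int:
    """Uncompressed (PCM) audio bit rate in bits per second."""
    return samples_per_s * bits_per_sample * channels

speech = pcm_bit_rate(8_000, 8)
cd_mono = pcm_bit_rate(44_100, 16)
cd_stereo = pcm_bit_rate(44_100, 16, channels=2)

print(speech / 1e3, "kbps")      # speech
print(cd_mono / 1e3, "kbps")     # CD, mono
print(cd_stereo / 1e6, "Mbps")   # CD, stereo
```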

18

Why Audio Compression

• Audio data requires too much bandwidth

– Speech: 64 kbps is too high for a dial-up modem user

– Stereo music: 1.411 Mbps exceeds most access rates

• Compression to reduce the size

– Remove redundancy

– Remove details that humans tend not to perceive

• Example audio formats

– Speech: GSM (13 kbps), G.729 (8 kbps), and G.723.1 (6.3 and 5.3 kbps)

– Stereo music: MPEG 1 layer 3 (MP3) at 96 kbps, 128 kbps, and 160 kbps

19

A few words about audio compression

• Analog signal sampled at

constant rate

– telephone: 8,000

samples/sec

– CD music: 44,100

samples/sec

• Each sample quantized, i.e.,

rounded

– e.g., 2^8 = 256 possible quantized values

• Each quantized value

represented by bits

– 8 bits for 256 values

• Example: 8,000

samples/sec, 256 quantized

values --> 64,000 bps

• Receiver converts it back

to analog signal:

– some quality reduction

Example rates

• CD: 1.411 Mbps

• MP3: 96, 128, 160 kbps

• Internet telephony: 5.3-13 kbps

20

Digital Video

• Sampling the analog signal

– Sample at some fixed rate (e.g., 24 or 30 times per sec)

– Each sample is an image

• Quantizing each sample

– Representing an image as an array of picture elements

– Each pixel is a mixture of colors (red, green, and blue)

– E.g., 24 bits, with 8 bits per color
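The same multiplication used for audio gives the raw bit rate of uncompressed video: pixels per image times bits per pixel times images per second. The 320 x 240 resolution and 30 images per second below are assumed values for illustration; only the 24 bits per pixel comes from the slide.

```python
def raw_video_bps(width: int, height: int, bits_per_pixel: int, frames_per_s: int) -> int:
    """Bit rate of uncompressed video, in bits per second."""
    return width * height * bits_per_pixel * frames_per_s

rate = raw_video_bps(320, 240, 24, 30)
print(f"{rate / 1e6:.1f} Mbps uncompressed")
```

Even at this small resolution the uncompressed rate is far above typical access rates, which motivates the compression techniques that follow.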

21

[Figure: the same image of a hand shown at 2272 x 1704 and at 320 x 240 resolution]

22

A few words about video compression

• Video is sequence of images displayed at constant rate

– e.g. 24 images/sec

• Digital image is array of pixels

• Each pixel represented by bits

• Redundancy

– spatial

– temporal

Examples:

• MPEG 1 (CD-ROM) 1.5 Mbps

• MPEG2 (DVD) 3-6 Mbps

• MPEG4 (often used in Internet, < 1 Mbps)

Research:

• Layered (scalable) video

– adapt layers to available bandwidth

23

Video Compression: Within an Image

• Image compression

– Exploit spatial redundancy (e.g., regions of same color)

– Exploit aspects humans tend not to notice

• Common image compression formats

– Joint Photographic Experts Group (JPEG)

– Graphics Interchange Format (GIF)

Uncompressed: 167 KB

Good quality: 46 KB

Poor quality: 9 KB

24

Video Compression: Across Images

• Compression across images

– Exploit temporal redundancy across images

• Common video compression formats

– MPEG 1: CD-ROM quality video (1.5 Mbps)

– MPEG 2: high-quality DVD video (3-6 Mbps)

– Proprietary protocols like QuickTime and RealNetworks

25

MM Networking Applications

Classes of MM applications:

1) Streaming stored audio and video

2) Streaming live audio and video

3) Real-time interactive audio and video

Fundamental characteristics:

• Typically delay sensitive

– end-to-end delay

– delay jitter

• But loss tolerant: infrequent losses cause minor glitches

• Antithesis of data, which are loss intolerant but delay tolerant.

Jitter is the variability of packet delays within the same packet stream

26

Application Classes

• Streaming

– Clients request audio/video files from servers

and pipeline reception over the network and

display

– Interactive: user can control operation (similar

to VCR: pause, resume, fast forward, rewind,

etc.)

– Delay: from client request until display start

can be 1 to 10 seconds

27

Application Classes (more)

• Unidirectional Real-Time:

– E.g., real-time video broadcasting of a sport event

– similar to existing TV and radio stations, but delivery on

the network

– Non-interactive, just listen/view

• Interactive Real-Time :

– Phone conversation or video conference

– E.g., Skype, Google Hangouts, VoIP & SIP, …

– More stringent delay requirement than Streaming and

Unidirectional because of real-time nature

– Video: < 150 msec acceptable

– Audio: < 150 msec good, <400 msec acceptable

28

Multimedia Over Today’s Internet

TCP/UDP/IP: “best-effort service”

• no guarantees on delay, loss

But you said multimedia apps require QoS and level of performance to be effective!

Today’s Internet multimedia applications use application-level techniques to mitigate (as best possible) effects of delay, loss

29

Challenges

• TCP/UDP/IP suite provides best-effort service, with no guarantees on the expectation or variance of packet delay

• Streaming applications: a delay of 5 to 10 seconds is typical and has been acceptable, but performance deteriorates if links are congested (e.g., transoceanic links)

• Real-Time Interactive: requirements on delay and its jitter have been satisfied by over-provisioning (providing plenty of bandwidth); what will happen when the load increases?

30

Internet (Stored) Multimedia:

Simplest Approach

• audio or video stored in file

• files transferred as HTTP object

– received in entirety at client

– then passed to player

audio, video not streamed:

• no “pipelining”: long delays until playout!

31

Streaming Stored Multimedia

Streaming:

• media stored at source

• transmitted to client

• streaming: client playout begins

before all data has arrived

• timing constraint for still-to-be

transmitted data: in time for playout

32

Streaming Stored Multimedia:

What is it?

[Figure: timeline (x-axis: time) showing the three stages below, with network delay between sending and reception]

1. video pre-recorded

2. video sent

3. video received, played out at client

streaming: at this time, client playing out early part of video, while server still sending later part of video

33

Internet multimedia: Streaming Approach

• browser GETs metafile

• browser launches player, passing metafile

• player contacts server

• server streams audio/video to player

34

Streaming from a streaming server

• This architecture allows for non-HTTP protocol

between server and media player

• Can also use UDP instead of TCP.

35

Streaming Stored Multimedia

Application-level streaming techniques for making the best out of best effort service:

– client side buffering

– use of UDP versus TCP

– multiple encodings of multimedia

Media Player

• jitter removal

• decompression

• error concealment

• graphical user interface w/ controls for interactivity

36

Streaming Multimedia: UDP or TCP?

UDP

• server sends at rate appropriate for client (oblivious to

network congestion !)

– often send rate = encoding rate = constant rate

– then, fill rate = constant rate - packet loss

• short playout delay (2-5 seconds) to compensate for network

delay jitter

• error recovery: time permitting

TCP

• send at maximum possible rate under TCP

• fill rate fluctuates due to TCP congestion control

• larger playout delay: smooth TCP delivery rate

• HTTP/TCP passes more easily through firewalls

37

Streaming Multimedia: Client Buffering

[Figure: curves over time for video sent at certain bit rates, client video reception after variable network delay, and constant bit rate video playout at client after the client playout delay; the gap between reception and playout is the buffered video]

• Client-side buffering, playout delay compensate for network-added delay, delay jitter

38

Streaming Multimedia: Client Buffering

[Figure: client buffer holding buffered video, filled at variable fill rate x(t) and drained at “constant” drain rate d]

• Client-side buffering, playout delay compensate for network-added delay, delay jitter
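The effect of the playout delay can be seen in a small discrete-time simulation: the buffer fills at a variable rate x(t) and, once playout starts, drains at the constant rate d. This is a simplified sketch with one-second time steps and made-up arrival numbers; the function name is illustrative.

```python
from typing import Sequence

def count_stalls(arrivals_bits: Sequence[float], drain_bps: float, playout_delay_s: int) -> int:
    """Number of seconds in which the player had too little buffered data.

    arrivals_bits[t] is the amount received during second t, i.e. x(t).
    Playout starts after playout_delay_s seconds of pre-buffering.
    """
    buffered = 0.0
    stalls = 0
    for t, arrived in enumerate(arrivals_bits):
        buffered += arrived
        if t < playout_delay_s:
            continue                 # still pre-buffering, nothing is drained
        if buffered >= drain_bps:
            buffered -= drain_bps    # one second of video played out
        else:
            stalls += 1              # buffer underrun: playback freezes
    return stalls

x = [1500, 500, 0, 2000, 1000, 1000]   # bursty fill rate, same average as the drain rate
no_delay = count_stalls(x, drain_bps=1000, playout_delay_s=0)
with_delay = count_stalls(x, drain_bps=1000, playout_delay_s=2)
print(no_delay, with_delay)
```

The second of silence at t = 2 stalls the player that started immediately, while two seconds of pre-buffering absorb it.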

39

Streaming Stored Multimedia:

Interactivity

• VCR-like functionality: client can

pause, rewind, FF, push slider bar

– 10 sec initial delay OK

– 1-2 sec until command effect OK

• timing constraint for still-to-be transmitted data:

in time for playout

40

Streaming Live Multimedia

Examples:

• Internet radio talk show

• Live sporting event

Streaming

• playback buffer

• playback can lag tens of seconds after

transmission

• still have timing constraint

Interactivity

• fast forward impossible

• rewind, pause possible!

41

Interactive, Real-Time Multimedia

• applications: IP telephony,

video conference, distributed

interactive worlds

• end-end delay requirements:

– audio: < 150 msec good, < 400 msec OK

• includes application-level (packetization) and network

delays

• higher delays noticeable, impair interactivity

• session initialization

– how does callee advertise its IP address, port number,

encoding algorithms?

42

Large-scale Internet Video Delivery:

YouTube & Netflix Case Studies

Based on two active measurement studies we have

conducted

•Reverse-engineering YouTube Delivery Cloud

– Google’s New YouTube Architectural Design

•Unreeling Netflix Video Streaming Service

– Cloud-sourcing: Amazon Cloud Services & CDNs

43

YouTube Video Delivery Basics

[Figure: user, front-end web servers, and video servers (front end), connected over the Internet]

1. HTTP GET request for video URL (user to front-end web servers)

2. HTTP reply containing html to construct the web page and a link to stream the FLV file

3. HTTP GET request for FLV stream (user to video servers)

4. HTTP reply: FLV stream

44

www.youtube.com

45

Embedded Flash Video

46

Google’s New YouTube

Video Delivery Architecture

Three components

• Videos and video id space

• Physical cache hierarchy

– three tiers: primary, secondary, & tertiary

– primary caches: “Google locations” vs. “ISP locations”

• Layered organization of namespaces representing “logical” video servers

– five “anycast” namespaces

– two “unicast” namespaces

Implications:

a) YouTube videos are not replicated at all locations!

b) only replicated at (5) tertiary cache locations

c) Google likely utilizes some form of location-aware load-balancing (among primary cache locations)

47

YouTube Video Id Space

• Each YouTube video is assigned a unique id

e.g., http://www.youtube.com/watch?v=tObjCw_WgKs

• Each video id is 11 char string

• first 10 chars can be any alpha-numeric values [0-9, a-z,

A-Z] plus “-” and “_”

• last char can be one of the 16 chars {0, 4, 8, ..., A, E, ...}

Video id space size: 64^11

Video id’s are randomly

distributed in the id space
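The size of the id space can be checked from the character rules on this slide. The sketch below builds the 64-character alphabet and compares two counts: the 64^11 figure quoted above, and the count obtained when the last character is restricted to 16 values as the slide describes. Treating 64^11 as an upper bound is an interpretation, not something the slide states.

```python
import string

ALPHABET = string.digits + string.ascii_lowercase + string.ascii_uppercase + "-_"

upper_bound = len(ALPHABET) ** 11           # all 11 characters unrestricted
restricted = len(ALPHABET) ** 10 * 16       # last character limited to 16 values

print(len(ALPHABET))
print(f"{upper_bound:.3e}")
print(restricted == 2 ** 64)
```

Under the restricted rule the ids map exactly onto 64-bit values, which is consistent with ids being spread randomly over the space.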


48

Physical Cache Hierarchy & Locations

~ 50 cache locations

• ~40 primary locations

– including ~10 non-Google ISP locations

• 8 secondary locations

• 5 tertiary locations

Geo-locations using

• city codes in unicast hostnames, e.g., r1.sjc01g01.c.youtube.com

• low latency from PlanetLab nodes (< 3 ms)

• clustering of IP addresses using latency matrix

[Figure: map of cache locations. P: primary, S: secondary, T: tertiary]


49

Layered Namespace Organization

Two types of namespaces

– Five “anycast” namespaces

• lscache: “visible” primary ns

• each ns representing fixed #

of “logical” servers

• logical servers mapped to

physical servers via DNS

– 2 “unicast” namespaces

• rhost: google locations

• rhostisp: ISP locations

• mapped to a single server

Examples:

50

YouTube Video Delivery Dynamics:

Summary

• Locality-aware DNS resolution

• Handling load balancing & hotspots

– DNS change

– Dynamic HTTP redirection

– local vs. higher cache tier

• Handling cache misses

– Background fetch

– Dynamic HTTP redirection

51

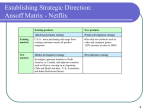

What Makes Netflix Interesting?

• Commercial, feature-length movies and TV shows

and not free; subscription-based

• Nonetheless, Netflix is huge!

25 million subscribers and ~20,000 titles (and growing)

consumes 30% of peak-time downstream bandwidth in North

America

• A prime example of cloud-sourced architecture

Maintains only a small “in-house” facility for key functions

e.g., subscriber management (account creation, payment, …)

user authentication, video search, video storage, …

Majority of functions are sourced to Amazon cloud (EC2/S3)

DNS service is sourced to UltraDNS

Leverage multiple CDNs (content-distribution networks) for video delivery: Akamai, Level 3 and Limelight

Netflix Architecture

• Netflix has its own “data center” for certain crucial operations

(e.g., user registration, billing, …)

• Most web-based user-video interaction, computation/storage

operations are cloud-sourced to Amazon AWS

• Video delivery used to be out-/cloud-sourced to 3 CDNs; it is now increasingly delivered via Netflix’s “own” OpenConnect boxes placed at participating ISPs

• Users need to use MS

Silverlight or other

video player for video

streaming

Netflix Videos and Video Chunks

• Netflix uses a numeric ID to identify each movie

– IDs are variable length (6-8 digits): 213530, 1001192,

70221086

– video IDs do not seem to be evenly distributed in the

ID space

– these video IDs are not used in playback operations

• Each movie is encoded in multiple quality levels, each is

identified by a numeric ID (9 digits)

– various numeric IDs associated with the same movie appear to

have no obvious relations

54

Netflix Videos and Video Chunks

• Videos are divided into “chunks” (of roughly 4 secs), specified using (byte) “range/xxx-xxx?” in the URL path:

Limelight:

http://netflix-094.vo.llnwd.net/s/stor3/384/534975384.ismv/range/0-57689?p=58&e=1311456547&h=2caca6fb4cc2c522e657006cf69d4ace

Akamai:

http://netflix094.as.nflximg.com.edgesuite.net/sa53/384/534975384.ismv/range/0-57689?token=1311456547_411862e41a33dc93ee71e2e3b3fd8534

Level3:

http://nflx.i.ad483241.x.lcdn.nflximg.com/384/534975384.ismv/range/0-57689?etime=20110723212907&movieHash=094&encoded=06847414df0656e697cbd

• Netflix uses a version of (MPEG-)DASH for video streaming
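Because the byte range sits in the URL path rather than in an HTTP header, a client or measurement script can recover it with ordinary URL parsing. A minimal sketch using the Akamai URL from the slide; the function name is hypothetical, and the pattern assumes the path always ends in `/range/<first>-<last>`.

```python
import re
from urllib.parse import urlsplit

def chunk_byte_range(url: str) -> tuple:
    """Return (first_byte, last_byte) encoded in a chunk URL's path."""
    path = urlsplit(url).path            # drops the query string (token, etc.)
    match = re.search(r"/range/(\d+)-(\d+)$", path)
    if match is None:
        raise ValueError("no byte range found in URL path")
    return int(match.group(1)), int(match.group(2))

AKAMAI_URL = (
    "http://netflix094.as.nflximg.com.edgesuite.net/sa53/384/534975384.ismv"
    "/range/0-57689?token=1311456547_411862e41a33dc93ee71e2e3b3fd8534"
)
first, last = chunk_byte_range(AKAMAI_URL)
print(first, last, last - first + 1)
```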

55

DASH: dynamic adaptive streaming over HTTP

• Not really a protocol; it provides formats to enable efficient

and high-quality delivery of streaming services over the

Internet

– Enable HTTP-CDNs; reuse of existing technology (codec, DRM,…)

– Move “intelligence” to client: device capability, bandwidth

adaptation, …

• In particular, it specifies Media Presentation Description (MPD)

56

Ack & ©: Thomas Stockhammer

DASH Data Model and Manifest Files

• DASH MPD:

[Figure: hierarchical data model, listed here level by level from the figure labels]

Media Presentation

– Period, start=0s

– Period, start=100s

– Period, start=295s

– …

Period (start=100, baseURL=http://www.e.com/)

– Representation 1: 100kbit/s

– Representation 2: 500kbit/s

– …

Representation (bandwidth=500kbit/s, width 640, height 480)

– Segment Info: duration=10s, Template: ./ahs-5-$Index$.3gs

Segment Info

– Initialization Segment: http://www.e.com/ahs-5.3gp

– Media Segment 1: start=0s, http://www.e.com/ahs-5-1.3gs

– Media Segment 2: start=10s, http://www.e.com/ahs-5-2.3gs

– Media Segment 3: start=20s, http://www.e.com/ahs-5-3.3gs

– …

– Media Segment 20: start=190s, http://www.e.com/ahs-5-20.3gs

• Segment Indexing: MPD only; MPD+segment; segment only

Segment Index in MPD only, one file per segment (seg1.mp4, seg2.mp4, ...):

<MPD>
...
<URL sourceURL="seg1.mp4"/>
<URL sourceURL="seg2.mp4"/>
</MPD>

Segment Index in MPD only, byte ranges within a single file (seg.mp4):

<MPD>
...
<URL sourceURL="seg.mp4" range="0-499"/>
<URL sourceURL="seg.mp4" range="500-999"/>
</MPD>
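Two client-side steps follow from this data model: choosing a representation that fits the measured bandwidth, and expanding the segment template into concrete URLs. The sketch below uses the figure's values (base URL, template, 10 s segments, 100 and 500 kbit/s representations); the function names and the selection rule (highest bitrate not exceeding the measurement) are assumptions for illustration.

```python
from typing import List, Sequence, Tuple
from urllib.parse import urljoin

def pick_representation(bandwidths_bps: Sequence[int], measured_bps: float) -> int:
    """Highest advertised bitrate that fits; fall back to the lowest one."""
    affordable = [b for b in bandwidths_bps if b <= measured_bps]
    return max(affordable) if affordable else min(bandwidths_bps)

def expand_template(base_url: str, template: str, count: int,
                    duration_s: int) -> List[Tuple[int, str]]:
    """(start time, URL) for media segments 1..count of one representation."""
    segments = []
    for index in range(1, count + 1):
        relative = template.replace("$Index$", str(index))
        segments.append(((index - 1) * duration_s, urljoin(base_url, relative)))
    return segments

chosen = pick_representation([100_000, 500_000], measured_bps=300_000)
segments = expand_template("http://www.e.com/", "./ahs-5-$Index$.3gs", 20, 10)
print(chosen)
print(segments[0], segments[-1])
```

Because the selection logic runs in the client and each segment is a plain HTTP object, any HTTP cache or CDN can serve the stream, which is the point of moving the "intelligence" to the client.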

57

Ack & ©: Thomas Stockhammer

Netflix Manifest Files

• A manifest file contains metadata

• Netflix manifest files contain a lot of information

o Available bitrates for audio, video and trickplay

o MPD and URLs pointing to CDNs

o CDNs and their "rankings"

<nccp:cdn>

<nccp:name>level3</nccp:name>

<nccp:cdnid>6</nccp:cdnid>

<nccp:rank>1</nccp:rank>

<nccp:weight>140</nccp:weight>

</nccp:cdn>

<nccp:cdn>

<nccp:name>limelight</nccp:name>

<nccp:cdnid>4</nccp:cdnid>

<nccp:rank>2</nccp:rank>

<nccp:weight>120</nccp:weight>

</nccp:cdn>

<nccp:cdn>

<nccp:name>akamai</nccp:name>

<nccp:cdnid>9</nccp:cdnid>

<nccp:rank>3</nccp:rank>

<nccp:weight>100</nccp:weight>

</nccp:cdn>
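A client could turn this fragment into an ordered CDN preference list with a standard XML parser. The fragment on the slide has no enclosing element or namespace declaration, so the sketch wraps it in a hypothetical `nccp:cdns` root bound to a placeholder namespace URI; neither is taken from a real Netflix manifest.

```python
import xml.etree.ElementTree as ET

NS = {"nccp": "urn:example:nccp"}   # placeholder namespace, not the real one

MANIFEST_FRAGMENT = """
<nccp:cdns xmlns:nccp="urn:example:nccp">
  <nccp:cdn><nccp:name>level3</nccp:name><nccp:cdnid>6</nccp:cdnid>
    <nccp:rank>1</nccp:rank><nccp:weight>140</nccp:weight></nccp:cdn>
  <nccp:cdn><nccp:name>limelight</nccp:name><nccp:cdnid>4</nccp:cdnid>
    <nccp:rank>2</nccp:rank><nccp:weight>120</nccp:weight></nccp:cdn>
  <nccp:cdn><nccp:name>akamai</nccp:name><nccp:cdnid>9</nccp:cdnid>
    <nccp:rank>3</nccp:rank><nccp:weight>100</nccp:weight></nccp:cdn>
</nccp:cdns>
"""

def cdns_by_rank(xml_text: str) -> list:
    """CDN names ordered from most preferred (rank 1) to least preferred."""
    root = ET.fromstring(xml_text)
    ranked = []
    for cdn in root.findall("nccp:cdn", NS):
        rank = int(cdn.findtext("nccp:rank", namespaces=NS))
        ranked.append((rank, cdn.findtext("nccp:name", namespaces=NS)))
    return [name for _, name in sorted(ranked)]

preference = cdns_by_rank(MANIFEST_FRAGMENT)
print(preference)
```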

58

Netflix Manifest Files …

A section of the manifest containing the base URLs, pointing to

CDNs

<nccp:downloadurls>
  <nccp:downloadurl>
    <nccp:expiration>1311456547</nccp:expiration>
    <nccp:cdnid>9</nccp:cdnid>
    <nccp:url>http://netflix094.as.nflximg.com.edgesuite.net/sa73/531/943233531.ismv?token=1311456547_e329d4271a7ff72019a550dec8ce3840</nccp:url>
  </nccp:downloadurl>
  <nccp:downloadurl>
    <nccp:expiration>1311456547</nccp:expiration>
    <nccp:cdnid>4</nccp:cdnid>
    <nccp:url>http://netflix094.vo.llnwd.net/s/stor3/531/943233531.ismv?p=58&e=1311456547&h=8adaa52cd06db9219790bbdb323fc6b8</nccp:url>
  </nccp:downloadurl>
  <nccp:downloadurl>
    <nccp:expiration>1311456547</nccp:expiration>
    <nccp:cdnid>6</nccp:cdnid>
    <nccp:url>http://nflx.i.ad483241.x.lcdn.nflximg.com/531/943233531.ismv?etime=20110723212907&movieHash=094&encoded=0473c433ff6dc2f7f2f4a</nccp:url>
  </nccp:downloadurl>
</nccp:downloadurls>

59

Netflix: Adapting to Bandwidth Changes

• Two possible approaches

Increase/decrease quality level using DASH

Switch CDNs

• Experiments

Play a movie and systematically throttle available

bandwidth

Observe server addresses and video quality

• Bandwidth throttling using the “dummynet” tool

Throttling done on the client side by limiting how fast it

can download from any given CDN server

First throttle the most preferred CDN server, keep

throttling other servers as they get selected

60

Adapting to Bandwidth Changes

• Lower quality levels in response to lower bandwidth

• Switch CDN only when minimum quality level cannot be

supported

• Netflix seems to use multiple CDNs only for failover

purposes!
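The observed behaviour can be summarized as a two-level decision: stay on the current CDN and lower the quality level while some level still fits, and move to the next-ranked CDN only when even the lowest level does not. The sketch below encodes that reading of the measurements; it is not Netflix's actual player logic, and the bitrate ladder is invented for illustration.

```python
from typing import Sequence, Tuple

def next_step(bitrates_bps: Sequence[int], cdns_by_rank: Sequence[str],
              current_cdn: str, measured_bps: float) -> Tuple[str, int]:
    """Return (CDN to use, bitrate to request) for the next chunk."""
    affordable = [b for b in bitrates_bps if b <= measured_bps]
    if affordable:
        return current_cdn, max(affordable)        # adapt quality, keep the CDN
    position = cdns_by_rank.index(current_cdn)
    if position + 1 < len(cdns_by_rank):
        # even the minimum quality is unsupported: fail over to the next CDN
        return cdns_by_rank[position + 1], min(bitrates_bps)
    return current_cdn, min(bitrates_bps)          # no CDN left to try

LADDER = [235_000, 560_000, 1_050_000]             # hypothetical quality levels
CDNS = ["level3", "limelight", "akamai"]

print(next_step(LADDER, CDNS, "level3", 700_000))
print(next_step(LADDER, CDNS, "level3", 100_000))
```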

61

CDN Bandwidth Measurement

• Use both local residential hosts and PlanetLab nodes

13 residential hosts and hundreds of PlanetLab nodes are used

Each host downloads small chunks of Netflix videos from all

three CDN servers by replaying URLs from the manifest files

• Experiments are done for several hours every day for about

3 weeks

total experiment duration was divided into 16 second intervals

the clients downloaded chunks from CDN 1, 2 and 3 at the

beginning of seconds 0, 4 and 8.

at the beginning of the 12th second, the clients tried to

download the chunks from all three CDNs simultaneously

• Measure bandwidth to 3 CDNs separately as well as the

combined bandwidth

• Perform analysis at three time-scales

average over the entire period

daily averages

instantaneous bandwidth
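The probing schedule described above (one CDN at a time at seconds 0, 4 and 8 of each 16-second interval, then all three together at second 12) can be generated programmatically. A minimal sketch; the function name and CDN labels are illustrative, and the actual download and timing code is omitted.

```python
from typing import List, Sequence, Tuple

def probe_schedule(cdns: Sequence[str], num_intervals: int,
                   slot_s: int = 4) -> List[Tuple[int, List[str]]]:
    """(start second, CDNs probed) events: each CDN alone, then all at once."""
    interval_s = slot_s * (len(cdns) + 1)          # 16 s for three CDNs
    events = []
    for k in range(num_intervals):
        start = k * interval_s
        for i, cdn in enumerate(cdns):
            events.append((start + i * slot_s, [cdn]))
        events.append((start + len(cdns) * slot_s, list(cdns)))
    return events

schedule = probe_schedule(["cdn1", "cdn2", "cdn3"], num_intervals=2)
for when, targets in schedule:
    print(when, targets)
```

Separating the individual probes from the simultaneous one is what allows per-CDN bandwidth and combined bandwidth to be compared within the same interval.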


62

There is no Single Best CDN

63

Video on Demand: OTT

Over-the-top: VoD provider uses public Internet to

deliver content (augmented by CDNs)

use Internet best effort service (no

guarantees)

ISPs (AT&T, Comcast, Verizon) relegated to

role of “bit pipes” - carrying traffic but not

offering “services”

unicast HTTP (e.g., DASH)

minimal infrastructure costs

user subscription fee or advertising revenue

64

Video on Demand: in-network

in-network: access network owner (Comcast, Verizon)

provides VoD service to its customers

high-quality user experience (QoE)

because ISP manages network

servers in same edge network as viewers

efficient network use: multicast possible

(one packet to many receivers)

ISP pays infrastructure cost

OTT versus in-network: who will win, and why?

65