* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download An example of CRS is presented below

Survey

Document related concepts

Transcript

ELEG 652 Principles of Parallel Computer Architectures

Handout # 2 Problem Set # 2

Issued: Wednesday, September 21, 2005

Due: Wednesday, October 5, 2005

Please begin your answer to every problem on a new sheet of paper. Be as concise and clear as you can.

Make an effort to be legible. To avoid misplacement of the various components of your assignment, make sure

that all the sheets are stapled together. You may discuss problems with your classmates, but all solutions must be

written up independently.

1.

One important application of high performance computing systems is solving large engineering

problems efficiently. Some physical/engineering problems can be formulated as mathematical problems

involving large number of algebraic equations, a million equatio ns with million unknowns is not a rarity.

The core computation in solving such systems of equations is matrix operations, either Matrix -Matrix

Multiply or Matrix Vector Multiply depending on the problem and solution methods under study.

Therefore, a challenge to computer architectures is how to handle these two matrix operations efficiently.

In this problem, you will study the implementation of the matrix vector multipl y (or MVM for short).

There are lots of challenging issues associated with the implementation of MVM. First of all, matrices in

many engineering problems are sparse matrices – where the number of non-zeroes entries in a matrix is

much less than the total size of the matrix. Such sparse matrices pose an interesting problem in terms of

efficient storage and computation. The use of different compressed formats for such matrices have been

proposed and use in practice.

There are several ways of compressing sparse matrices to reduce the storage space. However, identifying

the non-zero elements with the right indices is not a trivial task. The following questions deals with three

types of the sparse matrix storage.

Compressed Row Storage (CRS) is a method that deals with the rows of a sparse matrix. It consists of 3

vectors. The first vector is the “element value vector”: a vector which contains all the nonzero elements of

the array (collected by rows). The second vector has the column indices of the non-zero values. This

vector is called “column index vector”. The third vector consists of the row pointers to each of the

elements in the first vector. That is, elements of this “row pointer vector” consists of the element number

(for example. xth element start from row k) with which a new row starts.

With the above format in mind try to compress the following sparse matrix and represent it in a CRS

format:

A =

0

1

0

0

0

3

0

0

0

0

0

4

0

0

0

0

0

0

0

0

0

0

0

1

0

0

0

1

0

7

0

0

6

0

8

0

0

0

2

0

0

0

0

0

0

3

0

0

0

0

0

0

0

0

0

5

2

0

0

0

0

1

0

0

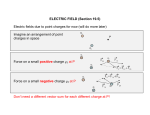

An example of CRS is presented below

0

0

0

0

6

0

0

1

0

0

0

0

0

0

0

0

8

0

12

0

0

5

0

0

0

0

0

10

0

0

0

0

5

0

2

0

B =

Vector 1:

Non Zero

elements

6

1

8

12

5

10

5

2

Vector 2:

Column

indices

4

1

4

0

3

3

2

4

Vector 3:

Row

pointers

0

1

2

3

5

6

9

The last element is defined as number

of number non-zero elements plus

one

This is the result if the initial index is zero. If the initial index is one then every entry in vector 2 and 3 will

be plus one, except for the last entry of Vector 3, which will be 9 in both cases. Both results (initial index 0

or 1) are acceptable, but please be consistent.

2.

In this problem, you are going to experiment with an implementation of Matrix-Vector Multiply (MVM)

using different storage formats. Matrix-Vector Multiply has the following pseudo-code:

for I: 1 to N

for J: 1 to N

C[I] += A[I][J] * B[J];

where C and B are vectors of size N and A is a sparse matrix of size NxN 1. In this specific code, the order

is Sparse Matrix times Vector. Create a C code that:

a. Create a random dense vector of N size (B).

b. Reads a sparse matrix of size NxN (A) and transform it to CRS format

c. Calculate the MVM (A*B) of the vector and the sparse matrix in CRS format. Make sure that your

code has some type of timing function (i.e. getrusage, clock, gettimeofday, _ftime, etc) and time

the MVM operation using the CRS matrix.

d. Repeat the operation with a Compress Column Storage 2 format matrix and compare performance

numbers. Report your findings, as which method has better performance, and run it for at least

five matrix/vector sizes. You can use the same methods that were used in Homework 1 to

1

If you want to get more information about the MVM, please take a look at the Fortran Implem entation of MVM at

http://www.netlib.org/slatec/lin/dsmv.f

2

Compress Column Storage is the same as CRS, but it uses columns pointers instead of row pointers; the non -zero elements

are gathered by columns and not rows; and Vector 2 contains the row indices instead of the column indices

calculate performance. Please report your machine configuration as you did in Homework 1.

3.

Have a look at the following storage representation, known as Jagged Diagonal Storage (JDS).

C =

1

0

0

2

1

0

2

0

0

0

3

0

3

0

0

0

0

0

4

0

0

1

0

0

5

C

1

3

1

2

4

2

3

5

1

jC

1

1

2

2

4

4

3

5

5

Off C

1

6

9

10

1

3

5

2

Perm

4

i. Explain how it is derived (Hint: Think in the sequence of E.S.S. which stands for Extract, Shift and

Sort)

ii. Why is this format claimed to be useful for the implementation of iterative methods on parallel and

vector processors?

iii. Can you imagine a situation in which this format will perform worse?

iv. Modify your program to create (read) matrices in a JDS and repeat your experi ments as in (2). Report

your results for this storage method as you did for CRS and CCS.

4.

It has been a privilege for a computer architect to work directly with real world problems and associates

matrices. Here is an opportunity for you. The following Fortran code generates a matrix representing a

real world engineering problem.

From ftp://www.capsl.udel.edu/pub/courses/eleg652/2004/codes/, download the Fortran code

(generate.f)

Read the code and identify the storage format of the matrix generated;

Create a C version of the matrix generator

Match this format with one of the formats used for the MVM code in problem 2. (Hint: The matrix

is too big to be handed converted!)

Run your MVM code on this matrix

Record your observations, issues and your results working with real matrices

5.

You have learned from class that the performance of a vector architecture can often be described by the

timing of a single arithmetic operation of a vector of length n. This will fit closely to the following generic

formula for the time of the operation, t, as a function of the vector length n:

t = r -1∞ (n + n1/2)

The two parameters r ∞ and n1/2 describe the performance of an idealized computer under the

architecture model/technology and give a first order description of a real computer. They are

defined as:

o

o

The maximum asymptotic performance r ∞ - the maximum rate of computation in floating

point operation performed per second (in MFLOPS). For the generic comput er, this

occurs asymptotically for vectors of infinite length.

The half-performance length n 1/2 – the vector length required to achieve half the

maximum possible performance.

The benchmark used to measure (r ∞, n1/2) is shown below:

T1 =

T2 =

T0 =

FOR

{

call Timing_function;

call Timing_function;

T2-T1;

N = 1, NMAX

T1 = call Timing_function;

FOR I = 1, N

{

A[I] = B[I] * C[I];

}

T2 = call Timing_function;

T = T2 – T1 – T0;

}

Please replace the call Timing_function with a C code timing function (clock, getrusage, etc) .

Please identify and explain your choices.

Assume vector machine X is measured (by the benchmark above) such that its r ∞ = 300

MFLOPS and n 1/2 = 10 and vector machine Y is measured (by the benchmark above) such

that its r∞ = 300 MFLOPS and n 1/2 = 100. What these numbers mean? How can you judge

the performance difference between X and Y? Explain.

Describe how would you use this benchmark (or its variation) to derive (r ∞, n1/2)

Rewrite the code to C, port them onto a Sun Workstation and run it. Tell us which

machine you used. Choose a sensible NMAX (Suggestion: NMAX = 256).

Report your results and make plots of:

o Runtime V.S. Vector Length

o Performance (MFLOPS) V.S. Vector Length

o What are the values of the two parameters you obtained?

State on which machine model and type you performed your experiments.

Problem 3.1

Match each of the following computer systems: KSR-1, Dash, CM-5, Monsoon, Tera MTA, IBM SP-2, Beowulf,

SUN UltraSparc-450 with one of the best descriptions listed below. The mapping is a one -to-one

correspondence in this case.

1.

2.

3.

4.

5.

6.

7.

8.

A massively parallel system built with multiple-context processor and a 3-D torus architecture. (Tera

MTA)

Linux-based PCs with fast Ethernet. (Beowulf).

A ring-connected multiprocessor using cache-only memory architecture. (KSR-1)

An experimental multiprocessor built with a dataflow architecture.(Monsoon)

A research scalable multiprocessor built with distributed shared memory coherent caches . (DASH)

An MIMD distributed-memory computer built with a large multistage switching network.(CM -5)

A small scale shared memory multiprocessor with uniform address space.( SUN UltraSparc -450 )

A cache-coherent non-uniform memory access multiprocessor built with Fat Hypercube network.

You are encouraged to search conference proceedings, related journals, and the Internet extensively for answers.

For each computer system, write one paragraph about the system characteristics such as the maximum node

numbers.

Hint: You need to learn how to quickly find references from Proceedings of International Symposium of

Computer Architecture (ISCA), IEEE Trans. of Computer, IEEE Micro, the Journal of Supercomputing, IEEE

Parallel & Distributed Processing.

Problem 3.2

Please try to fit the machine: “Cray Red Storm” into one of the classifications above. If none of them fits, please

provide a short architecture description.