* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download Agents - Hiram College

Agent-based model wikipedia , lookup

History of artificial intelligence wikipedia , lookup

Embodied language processing wikipedia , lookup

Agent-based model in biology wikipedia , lookup

Adaptive collaborative control wikipedia , lookup

Soar (cognitive architecture) wikipedia , lookup

Agent (The Matrix) wikipedia , lookup

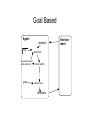

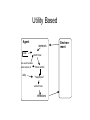

Agents
CPSC 386 Artificial Intelligence
Ellen Walker, Hiram College

Agents
• An agent perceives its environment through sensors and acts upon it through actuators.
• The agent’s percepts are its impressions of the sensor input.
• The agent doesn’t necessarily know everything about its environment.
• Agents may have knowledge and/or memory.

A Simple Vacuum Cleaner Agent
• 2 locations, A and B
• Dirt sensor (current location only)
• Agent knows where it is
• Actions: left, right, suck
• “Knowledge” represented by percept-action pairs (e.g. [A, dirty] -> suck)

Agent Function vs. Agent Program
• Agent function:
– Mathematical abstraction f(percepts) = action
– Externally observable (behavior)
• Agent program:
– Concrete implementation of an algorithm that decides what the agent will do
– Runs within a “physical system”
– Not externally observable (thought)

Rational Agents
• Rational agents “do the right thing” based on:
– The performance measure that defines the criterion of success
– The agent’s prior knowledge of the environment
– The actions that the agent can perform
– The agent’s percept sequence to date
• Rationality is not omniscience; it maximizes expected performance based on (necessarily) incomplete information.

Program for an Agent
• Repeat forever:
1. Record the latest percept from the sensors into memory
2. Choose the best action based on memory
3. Record the action in memory
4. Perform the action (observe the results)
• Almost all of AI elaborates this!

A Reasonable Vacuum Program
• [A, dirty] -> suck
• [B, dirty] -> suck
• [A, clean] -> right
• [B, clean] -> left
• What goals will this program satisfy?
• What are the pitfalls, if any?
• Does a longer history of percepts help?
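The vacuum agent, its percept-action table, and the four-step agent loop above can be sketched as a small Python simulation. This is a minimal illustration under stated assumptions, not the course's own code: the names `RULES` and `run_agent`, the rule that dirt never reappears, the fixed step count standing in for “repeat forever”, and the scoring rule (one point per clean square after each step) are all hypothetical choices.

```python
from typing import Dict, List, Tuple

# Percept = (location, status); a hypothetical encoding of pairs like [A, dirty].
Percept = Tuple[str, str]

# The "reasonable vacuum program" written as percept -> action pairs.
RULES: Dict[Percept, str] = {
    ("A", "dirty"): "suck",
    ("B", "dirty"): "suck",
    ("A", "clean"): "right",
    ("B", "clean"): "left",
}


def run_agent(dirt: Dict[str, bool], start: str, steps: int) -> Tuple[List[str], int]:
    """Run the four-step agent loop for a fixed number of steps.

    Assumptions (hypothetical): dirt never reappears, and the score is the
    number of clean squares counted after every step.
    """
    world = dict(dirt)           # True means the square is dirty
    location = start
    memory: List[object] = []    # percepts and actions, in the order they occur
    actions: List[str] = []
    score = 0
    for _ in range(steps):       # "repeat forever", bounded for the demo
        # 1. Record the latest percept (the dirt sensor sees only the current square).
        percept = (location, "dirty" if world[location] else "clean")
        memory.append(percept)
        # 2. Choose an action; this table looks only at the latest percept.
        action = RULES[percept]
        # 3. Record the action in memory.
        memory.append(action)
        actions.append(action)
        # 4. Perform the action and let the environment respond.
        if action == "suck":
            world[location] = False
        elif action == "right":
            location = "B"
        elif action == "left":
            location = "A"
        score += sum(1 for is_dirty in world.values() if not is_dirty)
    return actions, score
```

Starting in A with both squares dirty, `run_agent({"A": True, "B": True}, "A", 6)` produces `suck, right, suck, left, right, left` and a score of 10 under the assumed measure. Once both squares are clean, the table keeps the agent shuttling between A and B; a performance measure that charged for each move would penalize this, which is one way to approach the pitfalls question.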
Aspects of Agent Behavior
• Information gathering: actions that modify future percepts
• Learning: modifying the program based on actions and perceived results
• Autonomy: the agent’s behavior depends on its own percepts rather than on the designer’s programming (a priori knowledge)

Specifying the Task Environment
• Performance measure
• Environment (real world or “artificial”)
• Actuators
• Sensors
• Examples:
– Pilot
– Rat in a maze
– Surgeon
– Search engine

Properties of Environments
• Fully vs. partially observable (e.g. map?)
• Single-agent vs. multi-agent
– Adversaries (competitive)
– Teammates (cooperative)
• Deterministic vs. stochastic
– May appear stochastic if only partially observable (e.g. card game)
– Strategic: deterministic except for the actions of other agents
• (Uncertain = not fully observable, or nondeterministic)

Properties (cont.)
• Episodic vs. sequential
– Do we need to know the history?
• Static vs. dynamic
– Does the environment change while the agent is thinking?
• Discrete vs. continuous
– Time, space, actions
• Known vs. unknown
– Does the agent know the “rules” or “laws of physics”?

Examples
• Solitaire
• Driving
• Conversation
• Chess
• Internet search
• Lawn mowing

Agent Types
• Reflex
• Model-based reflex
• Goal based
• Utility based

Reflex Agent (diagram)
• Environment -> sensors -> what the world is like now -> rules -> what action to do now -> effectors -> environment

Model-Based Reflex Agent (diagram)
• Sensors, combined with internal state, how the world evolves, and what actions do -> what the world is like now -> rules -> what action to do now -> effectors

Goal Based Agent (diagram)
• Sensors, state, how the world evolves, and what actions do -> what the world is like now -> what the future world will be like after an action -> goals -> what action to do now -> effectors

Utility Based Agent (diagram)
• Sensors, state, how the world evolves, and what actions do -> what the world is like now -> what the future world will be like after an action -> utility (“happiness”) -> what action to do now -> effectors

Learning Agent (diagram)
• Components: critic, learning element, problem generator, performance element
• Sensors -> performance element -> effectors (acting on the environment)
• Critic (using the sensors) -> feedback -> learning element
• Learning element -> changes -> performance element; the two share knowledge
• Learning element -> learning goals -> problem generator

Classes of Representations
• Atomic
– State is indivisible
• Factored
– State consists of attributes and values
• Structured
– State consists of objects (which have attributes and relate to other objects)
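A model-based reflex agent for the same two-square vacuum world can be sketched as follows. It is an illustration only: the class `ModelBasedVacuumAgent`, the helper `simulate`, the extra `noop` action (not one of the slides' actions left, right, suck), and the assumption that dirt never reappears are all hypothetical. The agent's internal state is a factored representation: one attribute per square recording what the agent believes about that square.

```python
from typing import Dict, List, Optional, Tuple

Percept = Tuple[str, str]  # (location, "dirty" or "clean")


class ModelBasedVacuumAgent:
    """Model-based reflex agent for the two-square vacuum world (sketch).

    Internal state: believed status of each square (None = not yet observed).
    'noop' is a hypothetical extra action for "nothing left to do".
    """

    def __init__(self) -> None:
        self.believed: Dict[str, Optional[str]] = {"A": None, "B": None}

    def act(self, percept: Percept) -> str:
        location, status = percept
        # Update the internal state from the new percept.
        self.believed[location] = status
        if status == "dirty":
            # "What actions do": sucking leaves this square clean
            # (assumes dirt never reappears, i.e. how the world evolves).
            self.believed[location] = "clean"
            return "suck"
        # Rules applied to the internal state, not just the latest percept.
        if all(s == "clean" for s in self.believed.values()):
            return "noop"
        return "right" if location == "A" else "left"


def simulate(agent: ModelBasedVacuumAgent, dirt: Dict[str, bool],
             start: str, steps: int) -> List[str]:
    """Hypothetical environment loop: feed percepts to the agent, apply actions."""
    world = dict(dirt)  # True means the square is dirty
    location = start
    actions: List[str] = []
    for _ in range(steps):
        action = agent.act((location, "dirty" if world[location] else "clean"))
        actions.append(action)
        if action == "suck":
            world[location] = False
        elif action == "right":
            location = "B"
        elif action == "left":
            location = "A"
    return actions
```

With both squares dirty and a start in A, six steps yield `suck, right, suck, noop, noop, noop`: once its internal state records both squares as clean, the agent stops moving, unlike the percept-only table in “A Reasonable Vacuum Program”.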