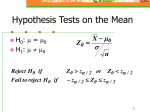

9 Tests and Confidence Intervals

9.1 Testing Hypotheses

9.1.1 Introduction

A statistical hypothesis is an assertion about some population or probability distribution. We do not know whether or not the assertion is valid but we may find relevant evidence in the data. Here are some examples of hypotheses:

• The population mean number of vehicles using a short ferry crossing on summer Saturdays is 500.
• The population mean number of vehicles using a short ferry crossing on summer Saturdays is more than 500.
• A modification to the design of hulls has no effect on fuel consumption.
• A modification to the design of hulls does have an effect on fuel consumption.

The usual way to test hypotheses is by means of significance tests. In a significance test we test a hypothesis called the null hypothesis by assessing the evidence in the data against the null hypothesis and in favour of an alternative hypothesis.

9.1.2 Testing a mean: the normal distribution

Many naturally occurring continuous variables have, at least approximately, normal distributions. This is, in fact, the origin of the name “normal” distribution.

Suppose, for example, we do an experiment to measure some physical quantity. Suppose that the true value of the physical quantity is $M$ but this value is, of course, unknown to us. Our measuring instrument gives measurement errors in such a way that any measurement $X$ has a $N(M, 16)$ distribution. That is, the standard deviation of the measurement errors is 4. Suppose also that we can repeat the experiment and the measurement errors in the replications are independent. (This example is a little artificial because, at this stage, we need to assume that the variance is known whereas, in reality, it is usually unknown. We will deal with the case of unknown variance in Lecture 10.)

Suppose that we will make $n = 25$ measurements. It can be shown that the sample mean $\bar{X}$ has a normal distribution with mean $M$ and variance

$$\frac{\sigma^2}{n} = \frac{16}{25} = 0.64.$$

So $\bar{X}$ is an unbiased estimator of $M$ and its standard error is

$$\sqrt{\frac{\sigma^2}{n}} = \sqrt{\frac{16}{25}} = 0.8.$$

Now, suppose that we have some theoretical value $M_0$ for $M$ and we want to test the hypothesis that $M = M_0$. Then our null hypothesis is

$$H_0 : M = M_0.$$

There are various different alternative hypotheses which we could have. For example we could have

$$H_A : M < M_0.$$

That is, we are testing against the alternative that $M$ is less than $M_0$.

We need a test statistic. We use

$$Z = \frac{\bar{X} - M_0}{\sqrt{\sigma^2/n}}.$$

When the null hypothesis is true, $Z \sim N(0, 1)$. The $N(0, 1)$ distribution is called the standard normal distribution.

We need a critical region (or rejection region). This is the set of values of $Z$ which will lead us to reject the null hypothesis in favour of the alternative. In this example the critical region will have the form $Z < k$ (since small values of $Z$ would be expected under the alternative hypothesis).

The value of $k$ depends on the significance level (or size) of the test. This is the probability that we would reject the null hypothesis if it were true. The usual values used for significance levels are 0.05 (5%), 0.01 (1%) and 0.001 (0.1%). Thus the null hypothesis is rejected if a value of the test statistic occurs which would be unusual under the null hypothesis but more likely under the alternative.

It can be shown that, if $Z \sim N(0, 1)$, then

$$\Pr(Z < -1.6449) = 0.05, \qquad \Pr(Z < -2.3263) = 0.01, \qquad \Pr(Z < -3.0902) = 0.001.$$

So:

• if $Z > -1.6449$ we do not reject $H_0$,
• if $Z < -1.6449$ we reject $H_0$ at the 5% level,
• if $Z < -2.3263$ we reject $H_0$ at the 1% level and
• if $Z < -3.0902$ we reject $H_0$ at the 0.1% level.

If we reject $H_0$ then we conclude that the evidence suggests that $M < M_0$.

Note that, if we reject $H_0$ at the 5% level we say that the result is “significant at the 5% level” and so on.
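The one-sided test just described can be sketched in Python. This is only an illustration under the assumptions of this section (known variance $\sigma^2 = 16$, $n = 25$). The function names, the value $M_0 = 100$ and the observed mean 98.4 are hypothetical and do not come from the notes. The critical values are taken from the standard library's `statistics.NormalDist` rather than from tables.

```python
from math import sqrt
from statistics import NormalDist


def z_statistic(xbar, m0, sigma2, n):
    """Test statistic Z = (xbar - M0) / sqrt(sigma^2 / n)."""
    return (xbar - m0) / sqrt(sigma2 / n)


def lower_tail_level(z, levels=(0.001, 0.01, 0.05)):
    """Return the smallest listed significance level at which H0 is rejected
    against HA: M < M0, or None if H0 is not rejected at any of them."""
    std_normal = NormalDist()  # the N(0, 1) distribution
    for alpha in sorted(levels):
        k = std_normal.inv_cdf(alpha)  # critical value k with Pr(Z < k) = alpha
        if z < k:
            return alpha
    return None


# Hypothetical numbers for illustration only: M0 = 100, observed mean 98.4.
z = z_statistic(xbar=98.4, m0=100.0, sigma2=16.0, n=25)
level = lower_tail_level(z)
print(f"Z = {z:.2f}, rejected at level: {level}")
```

With these made-up numbers $Z = -2$, which lies between $-2.3263$ and $-1.6449$, so the result would be significant at the 5% level but not at the 1% level.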
Sometimes “significant at the 5% level” is called “significant”, “significant at the 1% level” is called “highly significant” and “significant at the 0.1% level” is called “very highly significant.” Figure 1 shows the rejection region at the 5% level.

9.1.3 Two-sided tests

The alternative hypothesis above, $M < M_0$, is called one-sided. The test is one-sided since we reject $H_0$ only when $Z < k$. We often test against two-sided alternatives, e.g. $M \neq M_0$, and reject if $Z < k_1$ or $Z > k_2$. For example, in a 5% test we reject if $Z < -1.96$ or $Z > 1.96$. This is because, under $H_0$,

$$\Pr(Z < -1.96) = \Pr(Z > 1.96) = 0.025.$$

So the total probability of rejection is 0.05, as required. The rejection region is shown in Figure 2.

9.1.4 Testing a proportion: Example

It is suggested that most components of a certain type will work for 2000 hours without failing. Let $p$ be the probability that a component of this type will fail before completing 2000 hours of work. Suppose we intend to test 100 components in order to test the null hypothesis that $p = 0.5$ against the alternative that $p > 0.5$.

Null hypothesis $H_0 : p = 0.5$
Alternative hypothesis $H_A : p > 0.5$

We need a test statistic. Let us use the number of components which fail before 2000 hours. Call this $T$.

We need a critical region (or rejection region). This is the set of values of $T$ which will lead us to reject the null hypothesis in favour of the alternative. In this example the critical region will have the form $T > k$ (since large values of $T$ would be expected under the alternative hypothesis).

The value of $k$ depends on the significance level (or size) of the test. This is the probability that we would reject the null hypothesis if it were true. The usual values used for significance levels are 0.05 (5%), 0.01 (1%) and 0.001 (0.1%). Thus the null hypothesis is rejected if a value of the test statistic occurs which would be unusual under the null hypothesis but more likely under the alternative.
Figure 1: Rejection region, one-sided, 5%. (Standard normal density plotted against $Z$.)

Figure 2: Rejection region, two-sided, 5%. (Standard normal density plotted against $Z$.)

In this example, under $H_0$, the distribution of the test statistic is $T \sim \mathrm{Bin}(100, 0.5)$. When we have a binomial distribution with large $n$ and with both $np$ and $n(1 - p)$ not too close to zero then we can use the normal distribution to calculate the binomial probabilities approximately. We use a normal distribution with the same mean and variance as the required binomial distribution. So we use $\mu = np$ and $\sigma^2 = np(1 - p)$. In this case the distribution of $T$ may be approximated as $N(50, 25)$.

We can show that:

• if $T \le 58$ we do not reject $H_0$,
• if $T > 58$ we reject $H_0$ at the 5% level,
• if $T > 61$ we reject $H_0$ at the 1% level and
• if $T > 65$ we reject $H_0$ at the 0.1% level.

If we reject $H_0$ then we conclude that the evidence suggests that $p > 0.5$.

Note that, if we reject $H_0$ at the 5% level we say that the result is “significant at the 5% level” and so on. Sometimes “significant at the 5% level” is called “significant”, “significant at the 1% level” is called “highly significant” and “significant at the 0.1% level” is called “very highly significant.”

9.1.5 Power

The probability that $H_0$ is rejected when it is true is the significance level. The probability that $H_0$ is rejected when $H_A$ is true is the power of the test. This usually depends on the value of a parameter.

9.1.6 Example continued

Consider the test at the 5% level. The distribution of the test statistic is $T \sim \mathrm{Bin}(100, p)$ or, approximately, $N(100p, 100p[1 - p])$. We reject $H_0$ if $T > 58$. We can calculate the power of this test for any given value of $p$.

We can increase the power by increasing the sample size, $n$. If $n$ is small then fairly large values of $p$ are likely to fail to lead to rejection of $H_0$. If $n$ is very large then $H_0$ might well be rejected as a result of a trivial difference between $p$ and 0.5.
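As a rough sketch of the power calculation for the rule "reject $H_0$ if $T > 58$" with $n = 100$, the Python code below sums the binomial probabilities exactly instead of using the normal approximation. That is a choice made for this illustration, not necessarily how the notes compute it. The function names and the grid of $p$ values are hypothetical.

```python
from math import comb


def binomial_upper_tail(n, p, k):
    """Pr(T > k) for T ~ Bin(n, p), summed exactly over t = k+1, ..., n."""
    return sum(comb(n, t) * p**t * (1 - p) ** (n - t) for t in range(k + 1, n + 1))


def power(p, n=100, k=58):
    """Probability of rejecting H0 (that is, T > k) when the failure probability is p."""
    return binomial_upper_tail(n, p, k)


# Illustrative grid of alternatives; p = 0.5 gives the actual size of the test.
for p in (0.5, 0.55, 0.6, 0.65, 0.7):
    print(f"p = {p:.2f}: Pr(reject H0) = {power(p):.4f}")
```

At $p = 0.5$ the function returns the actual size of the test, which should come out a little below the nominal 0.05 because $T$ is discrete. Changing `n` and `k` together shows how the power at a fixed $p$ responds to the sample size.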
In designing the experiment we must choose the value of $n$ bearing in mind the cost of testing components and the size of deviation from 0.5 which we want to be reasonably sure we will detect. See Figure 3.

Figure 3: Power of Test

9.1.7 Two-sided tests

The alternative hypothesis above, $p > 0.5$, is called one-sided. The test is one-sided since we reject $H_0$ only when $T > k$. We often test against two-sided alternatives, e.g. $p \neq 0.5$, and reject if $T > k_2$ or $T < k_1$. For example, in a 5% test as above with $n = 100$ and $H_0 : p = 0.5$ and $H_A : p \neq 0.5$, we reject $H_0$ if $T < 41$ or if $T > 59$. Note that, when $H_0$ is true, using the normal approximation, $\Pr(T < 41) \approx \Pr(T > 59) \approx 0.025$, giving a total of approximately 0.05.

9.2 Interval Estimation (Confidence Intervals)

9.2.1 Introduction

A point estimate of an unknown parameter gives us a single value calculated in such a way that it is “likely” to be “close” to the true value. We can use a hypothesis test to test the hypothesis that a parameter takes a particular value. It is also useful to be able to give a range of values, around our point estimate, to indicate just how close the estimate is “likely” to be to the true value and just how “confident” we can be in this.

I have put quotation marks around some of the words here because I used them rather loosely. When dealing with confidence intervals it is necessary to choose words carefully. If we were willing to take the approach of adjusting our beliefs, expressed as a probability distribution, about an unknown quantity when we observe data, then we could simply give the probability that the true value of the quantity was inside a given range. However, many people are apparently unwilling to do this, so the approach of confidence intervals has been developed.

Suppose we want to estimate some quantity, say $\mu$, the mean mark of students on a standard test, and we are going to do this by collecting a sample of data.
Suppose we define two statistics $L$ and $U$ which we will calculate from these data (in the same way that the sample mean, $\bar{X}$, is a statistic calculated from data). Suppose we know that, if we repeated this experiment many times, then 95% of the time $\mu$ would be between $L$ and $U$, i.e. $L < \mu < U$. That is, before we collect the data, we can say that

$$\Pr(L < \mu < U) = 0.95.$$

Then, once we have observed the data and calculated $L = l$ and $U = u$, we can say that we are 95% confident that

$$l < \mu < u$$

and this interval is a 95% confidence interval.

In the same sort of way we can have, for example, 90% or 99% confidence intervals. A 99% confidence interval from the same experiment would be wider than a 95% confidence interval but a 90% confidence interval would be narrower.

9.2.2 Example 1

There are several ways to find confidence intervals but we can illustrate one of them by using the example in 9.1.4. Suppose we are going to observe 100 components and observe $T$, the number failing before 2000 hours. Suppose the true value of $p$ is $p^*$. We know that the probability of rejecting the null hypothesis $H_0 : p = p^*$ in a test at the 5% level is 0.05. The probability of not rejecting $H_0$ must therefore be 0.95.

Now suppose we test all possible values of $p$ in this way. That is, for every value $x$ we test the null hypothesis that $p = x$. Suppose we then collect together all of the values which were not rejected. Since there is a 95% chance that $p^*$ will not be rejected, there must be a 95% chance that $p^*$ will be in the interval formed in this way. Therefore it is a 95% confidence interval. (Actually, for technical reasons, it will only be approximately a 95% confidence interval.)

9.2.3 Example 2: Estimating the mean of a normal distribution when the variance is known

When our data are measurements, such as fuel consumptions or times to complete a voyage, we often use the normal distribution as a model. Sometimes we transform the data first so that the normal distribution is a better description.
For example, with the voyage times we might use the logarithms of the times.

The normal distribution with mean $\mu$ and variance $\sigma^2$ is written $N(\mu, \sigma^2)$. Usually we would not know the value of either $\mu$ or $\sigma^2$ but the analysis is slightly simpler if we know the value of $\sigma^2$ so, just for now, we assume that we do.

Let $X_1, \ldots, X_n$ be i.i.d. observations from a $N(\mu, \sigma^2)$ distribution where $\sigma^2$ is known. Then, when the data have been observed, if the observed value of the sample mean $\bar{X}$ is $\bar{x}$, we say that we are 95% confident that

$$-1.96 < \frac{\bar{x} - \mu}{\sigma/\sqrt{n}} < 1.96.$$

That is

$$\bar{x} - 1.96\,\sigma/\sqrt{n} < \mu < \bar{x} + 1.96\,\sigma/\sqrt{n}.$$

This is a 95% confidence interval for $\mu$.

We can have other confidence levels, e.g. 99%, by replacing 1.96 with appropriate constants found by inspection of tables of the standard normal distribution function.

We can also have one-sided confidence intervals. For example, for $N(\mu, \sigma^2)$ with $\sigma^2$ known,

$$\mu > \bar{x} - 1.6449\,\sigma/\sqrt{n}$$

is a one-sided 95% confidence interval for $\mu$.

9.2.4 Sample size determination for point estimation: Example

Suppose we plan to take $n$ i.i.d. observations $X_1, \ldots, X_n$ on the $N(\mu, 4)$ distribution. What is the probability that $\bar{X}$ is within $\pm 1$ of $\mu$? Since $\bar{X} \sim N(\mu, 4/n)$, this probability is $2\Phi(\sqrt{n}/2) - 1$, where $\Phi$ is the standard normal distribution function. For different values of $n$ it is as follows.

| $n$ | $\Pr(\mu - 1 < \bar{X} < \mu + 1)$ | $n$ | $\Pr(\mu - 1 < \bar{X} < \mu + 1)$ |
|-----|--------|-----|--------|
| 1 | 0.3829 | 9 | 0.8664 |
| 2 | 0.5205 | 10 | 0.8862 |
| 3 | 0.6135 | 11 | 0.9027 |
| 4 | 0.6827 | 12 | 0.9167 |
| 5 | 0.7364 | 13 | 0.9286 |
| 6 | 0.7793 | 14 | 0.9386 |
| 7 | 0.8141 | 15 | 0.9472 |
| 8 | 0.8427 | 16 | 0.9545 |

What is the smallest sample size which gives $\Pr(\mu - 1 < \bar{X} < \mu + 1) \ge 0.95$ when $X \sim N(\mu, 4)$? From the table, it is $n = 16$.

9.3 Problems

1. The minimum diameters, in mm, of 50 roller bearings are measured. The measurements are given below. Assume that, because the sample size is fairly large, we may use a normal distribution with standard deviation given by the sample standard deviation as a reasonable approximation.
Give a symmetric 99% confidence interval for the population mean minimum diameter. Do the data suggest that the population mean is not 30mm?

30.05 30.06 29.99 30.05 30.09 30.06 30.14 30.06 29.98 30.07
30.04 30.05 30.05 30.00 30.00 30.06 30.08 30.10 30.09 30.16
30.02 30.10 30.10 30.13 30.02 30.05 30.05 30.00 30.08 30.02
30.09 30.06 30.07 30.11 30.01 30.00 30.01 30.01 30.09 30.15
30.11 30.06 30.01 30.01 30.07 30.09 30.08 30.07 30.04 30.05

2. A factory makes outboard motors. In a sample of 50 motors, 22 are found to need carburettor adjustments. Suppose that the population proportion requiring adjustment is $\theta$. Test the null hypothesis that $\theta = 0.4$ against the alternative that $\theta > 0.4$ and give a symmetric 95% confidence interval for $\theta$.

Hint: To find the confidence interval, if we ignore the continuity correction, solve the equations

$$\frac{x - n\theta}{\sqrt{n\theta(1 - \theta)}} = 1.96 \qquad \text{and} \qquad \frac{x - n\theta}{\sqrt{n\theta(1 - \theta)}} = -1.96$$

for $\theta$ when $x = 22$ and $n = 50$. We can make a slight modification to allow for the continuity correction.
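One way to carry out the algebra in the hint is sketched below in Python. Squaring either equation gives $(x - n\theta)^2 = z^2 n\theta(1 - \theta)$, a quadratic in $\theta$ whose two roots are the end points of the interval. This construction is usually called the Wilson (score) interval. The function name `score_interval` is hypothetical, and the sketch ignores the continuity correction, as the hint does.

```python
from math import sqrt


def score_interval(x, n, z=1.96):
    """Solve (x - n*theta)^2 = z^2 * n * theta * (1 - theta) for theta.

    Expanding gives the quadratic
        (n^2 + z^2 n) theta^2 - (2 n x + z^2 n) theta + x^2 = 0,
    whose two roots are the lower and upper end points
    (no continuity correction is applied).
    """
    a = n * n + z * z * n
    b = -(2 * n * x + z * z * n)
    c = x * x
    root = sqrt(b * b - 4 * a * c)
    return (-b - root) / (2 * a), (-b + root) / (2 * a)


lower, upper = score_interval(x=22, n=50)
print(f"{lower:.3f} < theta < {upper:.3f}")
```

The lower root corresponds to the equation with $+1.96$ and the upper root to the equation with $-1.96$. Substituting each root back into the hint's equations is a quick check on the arithmetic.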