
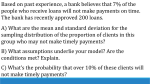

Topic 6 A

Estimation

In quantitative research, we are often interested in knowing certain characteristics of a population. In education, this could be the average student achievement in mathematics, the percentage of students reaching a particular level, comparisons between girls and boys, and so on. Typically, we do not have access to population data, so a sample is drawn to estimate the population parameters of interest. It is straightforward to compute means, percentages, and other statistics from the sample. The issue is: how accurate are these estimates compared with the population parameters? Given that we do not have population data, how can we assess the accuracy of our estimates?

If we do not report a measure of accuracy with our estimates (technically, a confidence interval), incorrect conclusions may be drawn. For example, we may conclude that 75% of students reach the minimal proficiency standard when, in the population, the actual figure is 80%. Whether this difference between the estimated and the actual parameter values matters depends on the purpose for which the numbers are used. We may erroneously conclude that a policy measure failed (or succeeded) because our estimates are not accurate enough. Whenever estimation is carried out, it is essential to report confidence intervals, so that the accuracy of the estimates can be taken into account when conclusions are drawn.

One way to determine the confidence interval is to draw samples repeatedly. The sample estimate of a parameter is computed for each sample, and examining how the estimates vary across samples gives us an idea of how far apart they can be. For example, if we want to estimate the average height of people in a population, we draw samples of a certain size and compute their averages. For 10 samples, we obtained 175, 175, 175, 176, 174, 175, 176, 176, 175, 175 (in cm) as the sample averages.
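To put a number on "not much variation", the two sets of sample averages quoted in this section can be summarized by their standard deviation and range. The following is a minimal sketch using only Python's standard `statistics` module; the names `consistent`, `scattered` and `summarize` are illustrative choices, not part of the worksheet.

```python
import statistics

# The two sets of 10 sample averages (cm) quoted in the text.
consistent = [175, 175, 175, 176, 174, 175, 176, 176, 175, 175]
scattered = [178, 164, 185, 190, 163, 174, 172, 166, 187, 184]


def summarize(averages):
    """Return the mean, standard deviation and range of a list of sample averages."""
    return (
        statistics.mean(averages),
        statistics.stdev(averages),  # sample standard deviation (n - 1 divisor)
        max(averages) - min(averages),
    )


for label, averages in [("consistent", consistent), ("scattered", scattered)]:
    mean, sd, spread = summarize(averages)
    print(f"{label}: mean={mean:.1f}, SD={sd:.2f}, range={spread}")
# consistent: mean=175.2, SD=0.63, range=2
# scattered: mean=176.3, SD=9.99, range=27
```

The first set varies by less than 1 cm around its mean, the second by about 10 cm, which is the difference the text describes as a narrow versus a wide confidence interval.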
We may then conclude that our sample means seem quite accurate, because there is not much variation across samples. On the other hand, if we obtained 178, 164, 185, 190, 163, 174, 172, 166, 187, 184 as the sample averages, we would conclude that there is a great deal of variation from sample to sample, so our confidence in the accuracy of ONE sample mean is not very high. In the latter case, the confidence interval will be wide, reflecting the uncertainty of the estimate. In the former case, the confidence interval will be narrow, showing that the sample estimate is quite accurate.

The dilemma is that, typically, we only have the resources to draw one sample, so we have to assess the accuracy of our estimate from this one sample alone. This is where statistical theory can help.

To understand the statistical process of computing confidence intervals, a number of simulations are designed below. The advantage of simulation is that we begin with known population parameters, simulate the sampling process, and examine the degree of variation across samples. We can then compare the sample estimates with the population values and assess how different they are. Simulation will help us understand the notion (and the computation) of confidence intervals in estimation. In real life, we typically cannot carry out such a simulation, because we do not know the true population values; instead, we apply formulas to compute confidence intervals. The simulation exercises below are intended to help us understand these formulas, so that they are not just black boxes into which we plug a few numbers.

Simulation 1

Description

We are interested in estimating the proportion of heads in tossing a coin, to assess whether the coin is fair or biased. To do this, we can toss the coin 10 times and record the number of heads. The proportion of heads in the 10 tosses is our estimate of the population proportion.
We know that, even if the coin is fair, the proportion of heads in 10 tosses may not be exactly 50%. We could get 7 out of 10 (7/10), 8 out of 10 (8/10), 2 out of 10 (2/10), and so on. If we base our estimate on just 10 tosses and treat it as the population value (say, the proportion in 1 million tosses), we will often draw incorrect conclusions. However, if we take the confidence interval into account, we can say, for example, that our estimates mostly vary between 3 and 7 heads, so the coin could still be fair even if we do not get exactly 50% in the 10 tosses. To determine the likely number of heads in 10 tosses of a fair coin, do the following simulation.

Step 1

Do the simulation in EXCEL, following the animated software demo 10CoinTosses_demo.swf. Copy the values in the column “No. of heads in 10 tosses” from EXCEL to SPSS, and produce a histogram. (Your picture will look a little different from mine, as your random numbers are unlikely to be the same as mine. Paste your results over mine.)

This distribution is called the sampling distribution of the random variable “number of heads in 10 tosses of a fair coin”. From this distribution, we get an idea of the variability of our results. For example, the histogram shows that, with a fair coin, we can easily get 3 to 8 heads in 10 tosses. (Your conclusion may be slightly different from mine. If we simulate this 1000 times instead of 100 times, our results are likely to be more similar.) If we have a coin and want to assess whether it is fair, we are not likely to conclude that the coin is biased if we get 3 to 8 heads, since we know that these results are quite likely from a fair coin. (An additional exercise is to change the probability of getting a head to, say, 0.7 instead of 0.5, and then find the sampling distribution.
That is, instead of the formula “=IF(RAND()>0.5,1,0)”, use the formula “=IF(RAND()<0.7,1,0)”. RAND() returns a uniform random number between 0 and 1, so it falls below 0.7 with probability 0.7.)

Step 2

Repeat the process for 50 tosses instead of 10 tosses. (Replace my graph with yours. Make sure the scale goes from 0 to 50. You can do this by double-clicking on the graph in SPSS and then double-clicking on the x-axis; change the maximum and minimum.)

This is the sampling distribution when the proportion of heads is estimated from 50 tosses. We can see that, relative to the number of tosses, the sampling distribution is tighter than the one from 10 tosses. That is, we are more confident about the proportion of heads estimated from one sample of 50 tosses than from one sample of 10 tosses. We might say that, in 50 tosses, if we get between 20 and 30 heads, we will conclude that the coin is fair.

Step 3

Repeat the process for 100 tosses instead of 50 tosses.

[Insert your sampling distribution below.]

What range of values would be your acceptance range to conclude that the coin is fair?

Level of Confidence

In deciding on the confidence interval associated with an estimate, we first need to decide on the level of confidence at which the interval is stated. For example, we may say that, at the 95% confidence level, our estimate will range between 18 and 32. This means that, if we repeatedly draw samples, we expect our sample estimate to fall between 18 and 32 in 95% of the samples, and outside this range in the other 5%. Statistically, we say that the 95% confidence interval is 18 to 32.

We can choose a different level of confidence, for example 90%. The range we quote will then cover 90% of our sample estimates if we repeatedly draw samples, and in 10% of the samples the estimate will fall outside the 90% confidence interval. It follows that the higher the confidence level (e.g., 99% instead of 95%), the wider the confidence interval will be.
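The coin-tossing steps and the idea of a confidence level can be sketched in Python as a rough stand-in for the EXCEL and SPSS workflow. This is a minimal sketch under a few assumptions: each toss is a head when a uniform random number falls below `p_head` (the same idea as the cell formula with `RAND()`), Step 1 uses 100 repetitions as the text suggests, and the confidence-level ranges use 10,000 repetitions so that they are more stable. The function names `simulate_heads`, `print_histogram` and `central_range` are hypothetical.

```python
import random
from collections import Counter


def simulate_heads(n_tosses, n_repeats=100, p_head=0.5, seed=None):
    """Number of heads in each of n_repeats experiments of n_tosses tosses.

    A toss counts as a head when a uniform random number in [0, 1) is below
    p_head, like the EXCEL cell formula =IF(RAND()<0.7,1,0) for p_head = 0.7.
    """
    rng = random.Random(seed)
    return [sum(rng.random() < p_head for _ in range(n_tosses)) for _ in range(n_repeats)]


def print_histogram(results, n_tosses):
    """Text histogram with one row per possible number of heads, 0..n_tosses."""
    tally = Counter(results)
    for k in range(n_tosses + 1):
        print(f"{k:3d} | {'#' * tally[k]}")


def central_range(results, level):
    """Range covering the middle `level` share of results, cutting (1 - level)/2 off each tail."""
    ordered = sorted(results)
    tail = (1 - level) / 2
    low_index = round(tail * len(ordered))
    high_index = max(low_index, round((1 - tail) * len(ordered)) - 1)
    return ordered[low_index], ordered[high_index]


# Step 1: sampling distribution of heads in 10 tosses of a fair coin (100 repetitions).
print_histogram(simulate_heads(10, seed=1), 10)

# Steps 2-3 and the confidence levels: wider levels give wider ranges.
for n_tosses in (10, 50, 100):
    results = simulate_heads(n_tosses, n_repeats=10_000, seed=n_tosses)
    for level in (0.90, 0.95, 0.99):
        low, high = central_range(results, level)
        print(f"{n_tosses} tosses, {level:.0%} range: {low} to {high} heads")
```

For the biased-coin exercise, `simulate_heads(10, p_head=0.7)` plays the role of the modified EXCEL formula. With 50 tosses of a fair coin, the simulated 95% range should typically come out close to the 18 to 32 interval used as an example above, though exact values depend on the random draws.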
Theoretical formula for a random variable with a binomial distribution

The coin-tossing exercise is an example of the binomial distribution: the number of heads in n tosses, X, follows a binomial distribution. The number of tosses, n, is the “number of trials”, and the probability of success, p (tossing a head), is 0.5 in our case. For a binomial random variable, the mean is np and the variance is npq, where q = 1 − p, so the standard deviation is the square root of npq. When n is reasonably large, the binomial distribution is approximately normal, so we can compute the confidence interval with an approximate formula. Remember that, for a normal distribution, 95% of the observations lie within mean ± 1.96 × standard deviation. So the 95% confidence interval for a binomial random variable is approximately np ± 1.96 × sqrt(npq). Check this against your simulated results.

Simulation 2

Description

This time, we will simulate a height distribution and sample from it.

Step 1

Assume that, in some population, the average height of people is 175 cm, the standard deviation is 20 cm, and heights are normally distributed. We will randomly sample 10 people from this population and record their heights. We then compute the average of the 10 heights and use it as our estimate of the population average. Do the simulation in EXCEL, following the animated software demo 10HeightSample_demo.swf. Copy the values in the column “Average” from EXCEL to SPSS, and produce a histogram. (Replace my graph with yours.)

How confident are we in using the average height of 10 randomly selected people to represent the population average? If our estimate from a sample of 10 is 185 cm, can we say that this IS the population average? The sampling distribution shown above tells us that, if the true mean is 175 cm, we can easily get a mean of 185 cm in a sample of 10 people. Should we say that, while our estimate is 185 cm, given the inaccuracies caused by sampling, the true mean is likely to lie somewhere between x and y?
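Both calculations in this part can be sketched in a few lines of Python: the binomial approximation np ± 1.96 × sqrt(npq) from the coin exercise, and the Step 1 height simulation (100 samples of 10 people from a normal population with mean 175 cm and standard deviation 20 cm, as assumed in the text). The function names `binomial_interval_95` and `height_sample_means` are hypothetical, and Python's `random.gauss` stands in for whatever generator the EXCEL demo uses.

```python
import math
import random
import statistics


def binomial_interval_95(n, p):
    """Approximate 95% range for a binomial count: np +/- 1.96 * sqrt(npq)."""
    q = 1 - p
    half_width = 1.96 * math.sqrt(n * p * q)
    return n * p - half_width, n * p + half_width


def height_sample_means(sample_size, n_samples=100, mu=175.0, sigma=20.0, seed=None):
    """Means of n_samples samples, each of sample_size heights drawn from Normal(mu, sigma), in cm."""
    rng = random.Random(seed)
    return [
        statistics.fmean(rng.gauss(mu, sigma) for _ in range(sample_size))
        for _ in range(n_samples)
    ]


# Coin example: compare these with the ranges found by simulation.
for n in (10, 50, 100):
    low, high = binomial_interval_95(n, 0.5)
    print(f"{n} tosses: about {low:.2f} to {high:.2f} heads")
# 10 tosses: about 1.90 to 8.10 heads
# 50 tosses: about 18.07 to 31.93 heads
# 100 tosses: about 40.20 to 59.80 heads

# Height example, Step 1: 100 samples of 10 people.
means = sorted(height_sample_means(10, seed=1))
print(f"smallest mean {means[0]:.1f} cm, largest mean {means[-1]:.1f} cm")
print(f"roughly the middle 95%: {means[2]:.1f} to {means[97]:.1f} cm")
```

The last line gives one empirical answer to the "between x and y" question for samples of 10; rerunning `height_sample_means` with 50 or 100 people shows how that range narrows.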
If we increase our sample size from 10 to 100, would our values of x and y be much closer to each other?

Step 2

Repeat the process for a sample of 50 people instead of 10 people.

[Insert your sampling distribution below.]

Step 3

Repeat the process for a sample of 100 people.

[Insert your sampling distribution below.]

Step 4

In SPSS, compute the average and standard deviation of the 100 sample means when 10 people were selected. Do the same for the 100 samples when 50 people were selected, and for the 100 samples when 100 people were selected. As the sample size increases from 10 to 100 people, are the sample means getting closer to 175 cm? As the sample size increases from 10 to 100 people, do the sample means vary less?

In SPSS, we have recorded the means of 100 samples. A histogram of these values is the sampling distribution of the sample means, and it tells us how accurate our estimated means are.

Standard errors

The standard deviation of the sampling distribution of the sample means is called the standard error of the mean. From statistical theory, the standard error of the mean is given by

$$\text{SE} = \frac{\sigma_{\text{height}}}{\sqrt{n}}$$

where σ_height is the standard deviation of the population distribution (20 cm in our example) and n is the sample size (e.g., 10, 50, 100). We can use this formula to estimate the variation of the sample means without resorting to simulation. Fill in the table below.

| Sample size | Observed mean | Observed standard deviation of the means | Expected mean | Expected standard deviation of the means (from the formula above) |
|---|---|---|---|---|
| 10 | | | | |
| 50 | | | | |
| 100 | | | | |

However, computing the standard error with this formula requires knowledge of the population standard deviation, which is usually unknown to us. In practice, we use the observed (sample) standard deviation as an estimate. This is the topic of the next part.

A Practical Example

A data set (StudentLiteracyScores.sav, not simulated) of students’ reading scores is provided. The data set contains student scores on a reading test for a population. Compute the population mean and standard deviation of the reading scores. Compute the population means for girls and boys separately. Use the SPSS sampling procedure (Data -> Select Cases -> Random sample) to subsample just 10 students. Compute the sample mean for all 10 students, and compute the sample means for girls and boys separately. Are you happy that these are close enough to the population means? Increase your sample size. At what sample size are you happy that the sample means are good estimates of the population means?

Summary

By the end of this class, you should understand the need to specify the degree of accuracy of an estimate. Typically, reports give an estimate together with its confidence interval, for example a mean score of 418 with a 95% confidence interval of ± 14.3. This means that, with 95% certainty, the mean score lies within 418 ± 14.3. That is, you are stating that the population mean lies between 403.7 and 432.3, and there is a 5% chance that this statement is incorrect.
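The standard-error formula and the interval arithmetic in the Summary can be sketched in Python. This is a minimal sketch with hypothetical function names; it assumes the normal-approximation multiplier 1.96 and, following the remark that the observed standard deviation is used in practice, plugs the sample standard deviation into the standard-error formula. `random.Random.sample` draws a simple random sample without replacement, standing in for the SPSS Select Cases step; loading StudentLiteracyScores.sav itself is not shown.

```python
import math
import random
import statistics

Z_95 = 1.96  # normal-approximation multiplier for a 95% interval


def standard_error(sd, n):
    """Standard error of the mean: sd / sqrt(n)."""
    return sd / math.sqrt(n)


def ci95(mean, half_width):
    """Return (lower, upper) for mean +/- half_width."""
    return mean - half_width, mean + half_width


def sample_mean_ci(scores, sample_size, seed=None):
    """Draw a simple random sample without replacement; return (mean, lower, upper).

    Uses the sample standard deviation in place of the unknown population SD and
    the 1.96 normal multiplier (a t multiplier is more exact for small samples).
    """
    rng = random.Random(seed)
    sample = rng.sample(scores, sample_size)
    mean = statistics.fmean(sample)
    se = standard_error(statistics.stdev(sample), sample_size)
    lower, upper = ci95(mean, Z_95 * se)
    return mean, lower, upper


# Expected standard deviation of the means in Simulation 2 (population SD 20 cm).
for n in (10, 50, 100):
    print(f"n={n}: expected SE = {standard_error(20, n):.2f} cm")
# n=10: expected SE = 6.32 cm
# n=50: expected SE = 2.83 cm
# n=100: expected SE = 2.00 cm

# The interval quoted in the Summary.
lower, upper = ci95(418, 14.3)
print(f"{lower:.1f} to {upper:.1f}")  # 403.7 to 432.3

# For the practical example, pass the reading scores as a list, e.g.
# sample_mean_ci(all_scores, 10), where all_scores is loaded from the data set.
```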