
Lecture 7, 8

Summarizing Data, Hypothesis Testing and Expression of Results

Purpose of Statistics

• To describe & summarize information, thereby reducing it to smaller, more meaningful sets of data
• To make predictions or to generalize about occurrences based on observations
• To identify associations, relationships or differences between sets of observations

Main Types of Statistics

• Descriptive Statistics
• Inferential Statistics

Descriptive Statistics

• Descriptive statistics involves organizing, summarizing & displaying data to make them more understandable.
• The most common statistics used are frequencies, percents, measures of central tendency, summary tables, charts & figures.

Inferential Statistics

• Inferential statistics: a set of statistical techniques that provide predictions about population characteristics based on information from a sample drawn from that population.
• Inferential statistics report the degree of confidence with which a sample statistic predicts the value of the population parameter.

Describing data


Measures of Central Tendency

• When assessing the central tendency of your measurements, you are attempting to identify the “average” measurement:
– Mean: the best known and most widely used average, describing the center of a frequency distribution
– Median: the middle value of a set of ordered numbers, below which 50% of the distribution falls
– Mode: the most frequent value or category in a distribution
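As a minimal sketch, Python's standard `statistics` module computes all three measures directly. The scores below are hypothetical values chosen only for illustration.

```python
import statistics

# Hypothetical set of scores, used only to illustrate the three measures
scores = [2, 3, 3, 5, 7, 8, 9]

mean = statistics.mean(scores)      # arithmetic average: 37 / 7
median = statistics.median(scores)  # middle value of the ordered list
mode = statistics.mode(scores)      # most frequent value

print(f"mean={mean:.2f}, median={median}, mode={mode}")
```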

Thinking Challenge

Salaries: $400,000; $70,000; $50,000; $30,000; $20,000

... Employees cite low pay: most workers earn only $20,000.

... The President claims average pay is $70,000!

Comparison of Central Tendency Measures

• Use the mean when the distribution is reasonably symmetrical, has few extreme scores and has one mode.
• Use the median with nonsymmetrical distributions, because it is much less sensitive to skewness and extreme scores.
• Use the mode when dealing with frequency distributions for nominal data.
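A short sketch of why the median is preferred for skewed data: adding a single extreme value moves the mean a long way but barely moves the median. The incomes below are hypothetical (in $1,000s), not figures from the slide.

```python
import statistics

# Hypothetical incomes in $1,000s: a roughly symmetric group...
symmetric = [20, 25, 30, 35, 40]
# ...and the same group with one extreme value added
skewed = symmetric + [400]

for label, data in [("symmetric", symmetric), ("skewed", skewed)]:
    print(label,
          "mean =", round(statistics.mean(data), 1),
          "median =", statistics.median(data))
```

With the extreme value included, the mean jumps from 30 to about 91.7, while the median only moves from 30 to 32.5.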

Variability

• A quantitative measure of the degree to which scores in a distribution are spread out or clustered together.
• Types of variability include:
– Standard deviation: a measure of the dispersion of scores around the mean
– Range: the highest value minus the lowest value
– Interquartile range: the range of values extending from the 25th percentile to the 75th percentile
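The three measures of variability can be sketched with the standard library as below, assuming a small hypothetical data set. Note that software packages use slightly different rules for percentiles; `statistics.quantiles` uses its default "exclusive" method here, so the interquartile range may differ a little from other programs.

```python
import statistics

# Hypothetical scores, for illustration only
scores = [4, 8, 15, 16, 23, 42]

sd = statistics.stdev(scores)                   # sample standard deviation (n - 1 denominator)
value_range = max(scores) - min(scores)         # highest value minus lowest value
q1, q2, q3 = statistics.quantiles(scores, n=4)  # 25th, 50th and 75th percentiles
iqr = q3 - q1                                   # interquartile range

print(f"SD={sd:.2f}, range={value_range}, IQR={iqr:.2f}")
```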

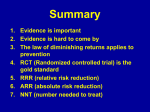

Key Principles of Statistical Inference

Explaining precision or certainty of effect

• Studies can only ever hazard a guess at the effect of exposures or interventions in the target population.

Explaining precision or certainty of effect

• Imagine you tested a tablet on 100 adults to see whether it reduced arthritic pain. You find that it reduces pain in 63 of 100 people; that is, 63% of participants get pain relief. Now say you randomly sampled 100 different adults and repeated exactly the same experiment. Do you think 63 of 100 would have pain relief? Probably not. By chance, this time you might find that only 58 of the 100 people had pain relief, so this second study would report a pain-relief rate of 58%. If you repeated the experiment enough times, you might, by chance, get a few really unusual results, like 30% or 88%, which are a long way from the rest.
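The study-to-study variation described above can be simulated. This is a sketch under a stated assumption: the "true" pain-relief rate in the population is taken to be 0.63 (a hypothetical value), and each simulated study samples 100 adults at random.

```python
import random

TRUE_RELIEF_RATE = 0.63  # hypothetical "true" population rate, for illustration
N_PER_STUDY = 100        # adults per study, as in the example

def run_study(rng, p, n):
    """Number of n randomly sampled adults who report pain relief."""
    return sum(rng.random() < p for _ in range(n))

rng = random.Random(1)  # fixed seed so the run is reproducible
results = [run_study(rng, TRUE_RELIEF_RATE, N_PER_STUDY) for _ in range(20)]

print("relief counts in 20 repeated studies:", results)
print("lowest:", min(results), "highest:", max(results))
```

Even though every simulated study draws from the same population, the counts scatter around 63 rather than repeating it exactly.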

Explaining precision or certainty of effect

• Luckily, there is a law of statistics (the law of large numbers) which begins to get around this difficulty.
• It says that if you were to conduct more and more studies on random samples from the target population and keep averaging their results, you would get closer and closer to the ‘Truth.’
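A minimal sketch of this averaging idea, again assuming a hypothetical true rate of 0.63: the running average of many simulated study results settles ever closer to that value.

```python
import random

TRUE_RATE = 0.63   # hypothetical true proportion with pain relief
N_PER_STUDY = 100  # adults per simulated study

def study_result(rng, p, n):
    """Proportion of n sampled adults with pain relief in one simulated study."""
    return sum(rng.random() < p for _ in range(n)) / n

rng = random.Random(42)
total = 0.0
running_means = {}
for k in range(1, 2001):
    total += study_result(rng, TRUE_RATE, N_PER_STUDY)
    if k in (1, 10, 100, 2000):
        running_means[k] = total / k  # average of the first k studies

for k, m in running_means.items():
    print(f"after {k:>4} studies: average = {m:.4f}")
```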

Explaining precision or

certainty of effect

•

This is all well and good if you can average out

results from lots of studies (this is what metaanalyses do – providing they are carried out

properly).

•

But what if there are only one or two studies?

Fortunately, the second part of the law says

that the results from different studies are

distributed in a predictable way (in a normal or

Gaussian distribution) around the ‘True’ value.

So, even from a single study, we can predict

what the results of other, similar studies might

be, were we to conduct them.

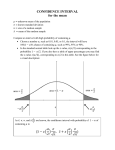

35

Explaining precision or certainty of effect

• Statisticians can, therefore, use this result to calculate confidence intervals around a single study result. The confidence interval may then be interpreted in the following way:
• If studies like this one were repeated many times, a specified percentage (usually 95%) of the intervals calculated in this way would contain the true value.
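A simulation makes the repeated-study reading of a confidence interval concrete. This sketch assumes a hypothetical population with a true pain-relief rate of 0.63, draws many studies of 100 adults, builds a simple normal-approximation (Wald) 95% CI from each, and counts how often the interval contains the true rate. The share should come out close to 95%; it is not exact because the normal approximation is only approximate.

```python
import math
import random

TRUE_RATE = 0.63  # assumed true proportion (hypothetical)
N = 100           # participants per study
Z = 1.96          # multiplier for a 95% interval

def wald_ci(successes, n, z=Z):
    """Normal-approximation (Wald) CI for a proportion from a single study."""
    p_hat = successes / n
    se = math.sqrt(p_hat * (1 - p_hat) / n)
    return p_hat - z * se, p_hat + z * se

rng = random.Random(7)
n_studies = 10_000
covered = 0
for _ in range(n_studies):
    successes = sum(rng.random() < TRUE_RATE for _ in range(N))
    lo, hi = wald_ci(successes, N)
    covered += lo <= TRUE_RATE <= hi  # True counts as 1

coverage = covered / n_studies
print("single-study CI for 63/100:", wald_ci(63, N))
print(f"share of intervals containing the true rate: {coverage:.3f}")
```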

CENTRAL LIMIT THEOREM

• A. For a randomly selected sample of size n (n should be at least 25, but the larger n is, the better the approximation) from a population with mean $\mu$ and standard deviation $\sigma$:
– 1. The distribution of sample means $\bar{x}$ is approximately normal, regardless of whether the population distribution is normal.
• From statistical theory come these two additional results:
– 2. The mean of the distribution of sample means is equal to the mean of the population distribution, that is, $\mu_{\bar{x}} = \mu$.
– 3. The standard deviation of the distribution of sample means, calculated by the usual methods, is very close to the standard error of the population mean, calculated as the standard deviation of the population divided by the square root of the sample size:

$$\sigma_{\bar{x}} = SE_{\bar{x}} = \frac{\sigma}{\sqrt{n}}$$
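The three statements can be checked by simulation. This sketch assumes a deliberately non-normal (right-skewed) population, an exponential distribution with mean 10 and standard deviation 10, and draws 20,000 samples of size n = 25. The mean of the sample means should sit near $\mu = 10$ and their standard deviation near $\sigma/\sqrt{n} = 2$.

```python
import math
import random
import statistics

MU = SIGMA = 10.0  # an exponential population with mean 10 also has SD 10
n = 25             # sample size, matching the slide's rule of thumb

rng = random.Random(3)
sample_means = [
    statistics.fmean(rng.expovariate(1 / MU) for _ in range(n))
    for _ in range(20_000)
]

print("mean of sample means:", round(statistics.fmean(sample_means), 3))
print("SD of sample means:  ", round(statistics.stdev(sample_means), 3))
print("sigma / sqrt(n):     ", SIGMA / math.sqrt(n))
```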

STANDARD ERROR OF THE MEAN

The measure of variation of the distribution of sample means, $\sigma_{\bar{x}}$, referred to as the standard error of the mean, is denoted $SE_{\bar{x}}$, that is:

$$SE_{\bar{x}} = \sigma_{\bar{x}} = \frac{\sigma}{\sqrt{n}}$$

POINT ESTIMATES AND CONFIDENCE INTERVALS

$$95\%\ \text{CI for } \mu = \bar{x} \pm 1.96\,\frac{\sigma}{\sqrt{n}}$$

Important note

• We can be confident that 95% of sample means lie within 1.96 standard errors of the population mean, and therefore 95% of all samples will provide a CI that captures the actual population mean.

And

• Likewise, 95% of sample mean differences lie within 1.96 standard errors of the population mean difference, and therefore 95% of all samples will provide a CI that captures the actual population mean difference.

Population estimates and confidence interval

• If we have a sample of 25 people whose average blood glucose is 161.84 ($\sigma$ = 58.15), what is the 95% CI of the mean?
• $SE = 58.15/\sqrt{25} = 11.63$
• 95% CI = sample mean ± 1.96 SE = $161.84 \pm 1.96 \times 11.63 = 161.84 \pm 22.79$, i.e. 139.05 to 184.63 (lower and upper confidence limits)
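The blood glucose calculation can be reproduced in a few lines. This is a sketch that uses the slide's values and the 1.96 multiplier. Because the standard deviation here would normally be estimated from the sample of only 25 people, some analysts would instead use the t multiplier for 24 degrees of freedom (about 2.064), which gives a slightly wider interval.

```python
import math

n = 25
sample_mean = 161.84
sigma = 58.15  # standard deviation given on the slide

se = sigma / math.sqrt(n)  # 58.15 / 5 = 11.63
margin = 1.96 * se         # about 22.79
lower, upper = sample_mean - margin, sample_mean + margin

print(f"SE = {se:.2f}, 95% CI = {lower:.2f} to {upper:.2f}")
```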

Interpretation of the confidence interval

• The upper and lower limits of the confidence interval can be used to assess whether the difference between the two mean values is clinically important.
• For example, if the lower limit is close to zero, this indicates that the true difference may be very small and clinically meaningless, even if the test is statistically significant.

Significance Testing

Null Hypothesis (H₀)

• H₀ proposes that no difference or relationship exists between the variables of interest
• It is the foundation of the statistical test
• When you statistically test a hypothesis, you assume that H₀ correctly describes the state of affairs between the variables of interest

Example (hypothesis testing using p value)

• Drug A = 95 ± 12.4 (mean ± SD, n = 10)
• Drug B = 105 ± 11.8 (mean ± SD, n = 10)

1. Null hypothesis: mean difference = 0
2. alpha = 0.05
3. Mean difference = 10
4. SE for the difference = 5.4
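The steps of this example can be sketched from the summary statistics alone, assuming equal variances (pooled SD), as in the "equal variances assumed" row of the SPSS output for this example. To stay within the standard library, the two-tailed p-value is obtained by numerically integrating Student's t density with Simpson's rule; this is an illustrative choice, not how SPSS computes it. If SciPy is available, `scipy.stats.ttest_ind_from_stats` should give the same t and p.

```python
import math

# Summary statistics (SDs to 3 decimals, as in the SPSS output)
mean_a, sd_a, n_a = 95.0, 12.428, 10
mean_b, sd_b, n_b = 105.0, 11.776, 10

# Pooled variance (equal variances assumed), then the SE of the difference
df = n_a + n_b - 2
pooled_var = ((n_a - 1) * sd_a**2 + (n_b - 1) * sd_b**2) / df
se_diff = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
t_stat = (mean_a - mean_b) / se_diff

def t_pdf(x, nu):
    """Density of Student's t distribution with nu degrees of freedom."""
    c = math.gamma((nu + 1) / 2) / (math.sqrt(nu * math.pi) * math.gamma(nu / 2))
    return c * (1 + x * x / nu) ** (-(nu + 1) / 2)

def two_tailed_p(t, nu, steps=2000):
    """P(|T| >= |t|) = 1 - 2 * (integral of the density from 0 to |t|), via Simpson's rule."""
    a, b = 0.0, abs(t)
    h = (b - a) / steps
    total = t_pdf(a, nu) + t_pdf(b, nu)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(a + i * h, nu)
    return 1 - 2 * (total * h / 3)

p_value = two_tailed_p(t_stat, df)
print(f"SE diff = {se_diff:.3f}, t = {t_stat:.3f}, df = {df}, p = {p_value:.3f}")
```

Since p is about 0.08, which is greater than alpha = 0.05, the null hypothesis is not rejected.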

SPSS Output for Two Sample Independent t-test Example

Group Statistics (Pulse rate)

| Group | N | Mean | Std. Deviation | Std. Error Mean |
|---|---|---|---|---|
| 1 | 10 | 95.00 | 12.428 | 3.930 |
| 2 | 10 | 105.00 | 11.776 | 3.724 |

Independent Samples Test (Pulse rate)

| | Levene's Test F | Levene's Test Sig. | t | df | Sig. (2-tailed) | Mean Difference | Std. Error Difference | 95% CI of the Difference: Lower | 95% CI of the Difference: Upper |
|---|---|---|---|---|---|---|---|---|---|
| Equal variances assumed | .134 | .719 | -1.847 | 18 | .081 | -10.000 | 5.414 | -21.374 | 1.374 |
| Equal variances not assumed | | | -1.847 | 17.948 | .081 | -10.000 | 5.414 | -21.377 | 1.377 |

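What does this mean?

• 1. We cannot rule out that the difference is due to chance.
• 2. The data are compatible with the samples having come from a population with a true mean difference equal to 0.
• 3. If the true mean difference were 0, a difference of 10 or more (in either direction) would be expected about 8% of the time (p = 0.081).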

P-values

• The p-value measures how likely a difference between groups at least as large as the one observed would be to arise by chance alone, if there were truly no difference.
• A p-value of 0.1 means that, if in ‘Truth’ there were no effect, results at least as extreme as those of our study would occur about 10% of the time.
• A p-value of 0.05 means that, if in ‘Truth’ there were no effect, such results would occur about 5% of the time.
• Studies usually define p-values of < 0.05 as being statistically significant. There is nothing magic about this cut-off. What the authors are saying when they choose it is that they are happy to report an effect, provided that results as extreme as theirs would occur less than 5% of the time if in ‘Truth’ there were no effect.
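The meaning of a p-value can be checked by simulation: make the null hypothesis true by construction, run many two-group studies, and count how often the t statistic is at least as extreme as the one observed. This sketch reuses the sample sizes and the |t| of 1.847 from the drug A vs drug B example. The population mean and SD it draws from are arbitrary assumptions, and the share should land near the reported p of 0.081.

```python
import random

N_PER_GROUP = 10    # patients per group, as in the drug A vs drug B example
OBSERVED_T = 1.847  # |t| reported in the SPSS output for that example

def mean_and_var(x):
    """Sample mean and sample variance (n - 1 denominator)."""
    m = sum(x) / len(x)
    return m, sum((v - m) ** 2 for v in x) / (len(x) - 1)

def pooled_t(x, y):
    """Two-sample t statistic assuming equal variances."""
    mx, vx = mean_and_var(x)
    my, vy = mean_and_var(y)
    nx, ny = len(x), len(y)
    sp2 = ((nx - 1) * vx + (ny - 1) * vy) / (nx + ny - 2)
    return (mx - my) / (sp2 * (1 / nx + 1 / ny)) ** 0.5

rng = random.Random(11)
n_sims = 20_000
extreme = 0
for _ in range(n_sims):
    # H0 is true by construction: both groups come from the same normal population.
    # The mean (100) and SD (12) are arbitrary; the t statistic does not depend on them.
    a = [rng.gauss(100, 12) for _ in range(N_PER_GROUP)]
    b = [rng.gauss(100, 12) for _ in range(N_PER_GROUP)]
    if abs(pooled_t(a, b)) >= OBSERVED_T:
        extreme += 1

simulated_p = extreme / n_sims
print(f"share of H0 studies with |t| >= {OBSERVED_T}: {simulated_p:.3f}")
```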

Hypothesis testing using CI

• Mean difference = 10
• SE = 5.4
• Half-width of the 95% CI ≈ 2 × 5.4 = 10.8
• CI = 10 ± 10.8 = -0.8 to 20.8
• The CI includes 0, so we fail to reject (“accept”) the null hypothesis
• P > 0.05
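A small sketch of the same reasoning, using the more precise SE of 5.414 from the SPSS output. It compares the slide's rounded multiplier of 2 with 2.101, the two-sided 95% critical value of Student's t with 18 degrees of freedom taken from standard t tables (an assumed constant, not computed here).

```python
MEAN_DIFF = 10.0  # drug B minus drug A
SE_DIFF = 5.414   # standard error of the difference, from the SPSS output

def confidence_interval(diff, se, multiplier):
    """Return (lower, upper) limits: diff plus or minus multiplier * se."""
    return diff - multiplier * se, diff + multiplier * se

for label, mult in [("multiplier 2", 2.0), ("t(18) = 2.101", 2.101)]:
    lower, upper = confidence_interval(MEAN_DIFF, SE_DIFF, mult)
    print(f"{label}: {lower:.2f} to {upper:.2f}; includes 0: {lower <= 0 <= upper}")
```

The t-based interval, about -1.37 to 21.37, matches the SPSS limits of -21.374 to 1.374 with the sign flipped, because SPSS reports group 1 minus group 2. Either way the interval includes 0.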

Confidence Interval

• The range within which the actual difference between the two drugs is likely to lie, for example:
– Mean difference in HbA1c reduction 1 (0.1–1.9)
– Absolute risk reduction 30% (3–70%)
– NNT 3 (1–10000)
– RRR 40% (20–120)



• Confidence intervals show a statistically significant result if they “do not cross the line of no effect.” What does this mean? Basically, you can tell whether a result is statistically significant by seeing whether the confidence interval includes zero (for comparative absolute measures of risk, such as absolute risk reduction) or one (for relative measures of risk, including relative risks and odds ratios).
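The "line of no effect" rule is simple enough to state as a function. In this sketch the first two intervals come from the slides; the two relative-risk intervals are hypothetical examples.

```python
def is_significant(lower, upper, relative):
    """True if the CI excludes the line of no effect.

    relative=True  -> ratio measures (relative risk, odds ratio): no effect = 1
    relative=False -> absolute differences (ARR, mean difference): no effect = 0
    """
    no_effect = 1.0 if relative else 0.0
    return not (lower <= no_effect <= upper)

print(is_significant(-0.8, 20.8, relative=False))  # mean difference CI, drug A vs drug B
print(is_significant(0.03, 0.70, relative=False))  # ARR 30% (3-70%)
print(is_significant(0.45, 0.80, relative=True))   # hypothetical RR CI
print(is_significant(0.80, 1.30, relative=True))   # hypothetical RR CI
```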

Statistical Significance VS Meaningful Significance

• A common mistake is to confuse statistical significance with substantive meaningfulness
• A statistically significant result simply means that, if H₀ were true, the observed results would be very unusual
• With large samples (e.g. N > 100), even tiny relationships/differences can be statistically significant
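The effect of sample size can be sketched with a two-sample z-test, assuming a hypothetical and clinically trivial difference of 0.5 units when the SD is 12, and treating the SD as known for simplicity. The same tiny difference goes from clearly non-significant to highly significant as the groups grow.

```python
import math

def two_sample_z_p(diff, sd, n_per_group):
    """Two-tailed p-value for a difference in means, treating the SD as known (z-test)."""
    se = sd * math.sqrt(2 / n_per_group)
    z = diff / se
    return math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))

# Hypothetical tiny difference: 0.5 units when the SD is 12
for n in (20, 200, 20_000):
    print(f"n per group = {n:>6}: p = {two_sample_z_p(0.5, 12, n):.4g}")
```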

Statistical Significance VS Meaningful Significance

• Statistically significant results say nothing about the clinical importance or meaningful significance of results
• The researcher must always determine whether statistically significant results are substantively meaningful.
• Refrain from statistical “sanctification” of data

• Confidence interval (0.3 – 4)
• The lower limit must be > 2 for the result to be clinically significant

HOW THE RESULTS ARE EXPRESSED

Understanding Results

Mean Difference

• Drug A reduced HbA1c by 1%
• Drug B reduced HbA1c by 2%
• Mean difference = 2% − 1% = 1%

• The benefits or harms of a treatment can be shown in various ways:
• Drug X produced an absolute reduction in deaths of 7 per cent ("absolute risk reduction")
• Drug X reduced the death rate by 28 per cent ("relative risk reduction")
• Drug X increased the patients' survival rate from 75 to 82 per cent
• 14 people would need to be treated with drug X to prevent one death ("number needed to treat")
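All four statements describe the same result. As a minimal sketch, assuming (as the survival figures imply) that the death rate fell from 25% in the control group to 18% with drug X, the other three measures follow directly:

```python
def effect_measures(cer, eer):
    """ARR, RRR and NNT for an undesirable outcome (death).

    cer: control event rate; eer: experimental event rate.
    """
    arr = cer - eer  # absolute risk reduction
    rrr = arr / cer  # relative risk reduction
    nnt = 1 / arr    # number needed to treat
    return arr, rrr, nnt

# Survival rising from 75% to 82% means deaths fell from 25% to 18%
arr, rrr, nnt = effect_measures(cer=0.25, eer=0.18)
print(f"ARR = {arr:.0%}, RRR = {rrr:.0%}, NNT = {nnt:.1f}")
```

The unrounded NNT is about 14.3, which the slide reports as 14.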

| 8-week treatment | Death | No death | Total |
|---|---|---|---|
| New drug | a = 1 | b = 999,999,999 | 1,000,000,000 |
| Old drug | c = 2 | d = 999,999,998 | 1,000,000,000 |

GERD Treatment: 2 x 2 Table

| 8-week treatment | Esophageal bleeding | No bleeding | Total |
|---|---|---|---|
| Proton pump inhibitor | a = 45 | b = 55 | 100 |
| H2 antagonist | c = 75 | d = 25 | 100 |
| Total | 120 | 80 | 200 |

Relative Risk (RR)

• The relative risk of an outcome is the chance of that outcome occurring in the treatment group compared with the chance of it occurring in the control group.
• If the chances are the same in both groups, the relative risk is 1.
• The relative risk reduction (RRR) is the amount by which the risk (of death) is reduced by drug X, expressed as a percentage of the risk in the control group. It is calculated as the difference between the control and experimental event rates divided by the control event rate.

• Risk: the incidence of an event.
– EER: experimental event rate.
– CER: control event rate.
• Relative risk: $RR = \frac{EER}{CER}$
• Used in: randomized trials, cohort studies
• Not used in: case-control studies

Relative Risk (Risk Ratio)

GERD Treatment: Green et al. Br. J. Clin. Res. 6: 63-76, 1995.

$$RR = \frac{a/(a+b)}{c/(c+d)} = \frac{45/99}{73/97} = 0.60$$

Relative Rate Reduction (RRR)

• Used to measure treatment effects

$$RRR = \frac{CER - EER}{CER} \times 100$$

Relative Rate Reduction

GERD Treatment: Green et al. Br. J. Clin. Res. 6: 63-76, 1995.

EER = 45/99 = 0.45
CER = 73/97 = 0.75

$$RRR = \frac{CER - EER}{CER} \times 100 = \frac{0.75 - 0.45}{0.75} \times 100 = 40\%$$

Absolute Rate Reduction (ARR)

• ARR is the reduction in the rate of an event (sometimes “undesirable”) that comes about from applying a treatment.
• Absolute rate reduction (re: undesirable events): ARR = CER − EER
• Absolute rate reduction (re: desirable events): ARR = EER − CER

Absolute Rate Reduction

GERD Treatment: Green et al. Br. J. Clin. Res. 6: 63-76, 1995.

ARR = CER − EER
ARR = 73/97 − 45/99
ARR = 0.75 − 0.45
ARR = 0.30, i.e. 30 per 100

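Number Needed to Treat (NNT)

• Interpret NNT as the number of patients who must receive the treatment for one additional patient to have a successful outcome.
• More effective = lower NNT

$$NNT = \frac{1}{ARR}$$

where ARR = CER − EER for an undesirable event (EER − CER for a desirable one).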

Number Needed to Treat

GERD Treatment: Green et al. Br. J. Clin. Res. 6: 63-76, 1995.

$$NNT = \frac{1}{ARR} = \frac{1}{0.30} = 3.33$$
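The formulas in this section can be collected into one helper and applied to the GERD counts used in the worked examples (45/99 bleeds on the proton pump inhibitor, 73/97 on the H2 antagonist) and to the new vs old drug table (1 vs 2 deaths per 1,000,000,000 patients). This is a sketch; the function name and dictionary keys are illustrative choices. Working without rounding gives ARR ≈ 0.298 and NNT ≈ 3.36, slightly different from the slides, which round EER and CER to two decimals first. The second example shows why relative measures alone can mislead: halving a very rare risk gives an RRR of 50% but an NNT of one billion.

```python
def risk_measures(a, n_exp, c, n_ctrl):
    """Effect measures for an undesirable event.

    a / n_exp: events and total in the experimental group;
    c / n_ctrl: events and total in the control group.
    """
    eer = a / n_exp   # experimental event rate
    cer = c / n_ctrl  # control event rate
    return {
        "EER": eer,
        "CER": cer,
        "RR": eer / cer,            # relative risk
        "RRR": (cer - eer) / cer,   # relative risk (rate) reduction
        "ARR": cer - eer,           # absolute risk (rate) reduction
        "NNT": 1 / (cer - eer),     # number needed to treat
    }

gerd = risk_measures(45, 99, 73, 97)
rare = risk_measures(1, 1_000_000_000, 2, 1_000_000_000)

for name, m in [("GERD", gerd), ("new vs old drug", rare)]:
    print(name + ": " + ", ".join(f"{k}={v:.3g}" for k, v in m.items()))
```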
