

For the historical survey in this chapter we used the MacTutor History of Mathematics Archive from the University of St. Andrews in Scotland (2003) and the outline of the history of game theory by Walker. Furthermore, the paper by Breitner (2002) was used for a reconstruction of the early days of dynamic game theory.

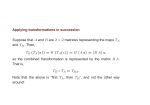

This chapter reviews some basic linear algebra, including material which in some instances goes beyond the introductory level. A more detailed treatment of the basics of linear algebra can, for example, be found in … (2003), whereas Lancaster and Tismenetsky (1985) provide an excellent treatment for those who are interested in more details at an advanced level. We will outline the most important basic concepts in linear algebra together with some theorems we need later on for the development of the theory. A detailed treatment of these subjects and the proofs of most of these theorems are omitted, since they can be found in almost any textbook that provides an introduction to linear algebra. The second part of this chapter deals with some subjects which are usually not dealt with in an introduction to linear algebra. This part provides more proofs, because either they cannot easily be found in the standard linear algebra textbooks or they give an insight into the understanding of problems which will be encountered later on in this book.

Let $\mathbb{R}$ denote the set of real numbers and $\mathbb{C}$ the set of complex numbers. For those who are not familiar with the set of complex numbers, a short introduction to this set is given in Section 2.3. Let $\mathbb{R}^n$ be the set of vectors with $n$ entries, where each entry is an element of $\mathbb{R}$. Now let $x_1, \ldots, x_k \in \mathbb{R}^n$. Then an element of the form $\alpha_1 x_1 + \cdots + \alpha_k x_k$ with $\alpha_i \in \mathbb{R}$ is a linear combination of $x_1, \ldots, x_k$. The set of all linear combinations of $x_1, x_2, \ldots, x_k \in \mathbb{R}^n$, called the span of $x_1, x_2, \ldots, x_k$, constitutes a linear subspace of $\mathbb{R}^n$. That is, for any two elements of this set, their sum and any scalar multiple of an element also belong to this set. We denote this set by $\mathrm{Span}\{x_1, x_2, \ldots, x_k\}$.

A set of vectors $x_1, x_2, \ldots, x_k \in \mathbb{R}^n$ is called linearly dependent if there exist $\alpha_1, \ldots, \alpha_k \in \mathbb{R}$, not all zero, such that $\alpha_1 x_1 + \cdots + \alpha_k x_k = 0$. Otherwise the vectors are said to be linearly independent.
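The definition of linear (in)dependence can be checked mechanically. Vectors $x_1, \ldots, x_k$ are independent exactly when the matrix with these vectors as rows has $k$ pivots under Gaussian elimination, that is, rank $k$ (rank is introduced formally later in this section). The sketch below is one way to do this. It uses exact rational arithmetic from Python's `fractions` module, and the helper names `rank` and `linearly_independent` are hypothetical, not taken from the text.

```python
from fractions import Fraction


def rank(rows):
    """Number of pivots found by Gaussian elimination (exact arithmetic)."""
    m = [[Fraction(v) for v in row] for row in rows]
    n_rows = len(m)
    n_cols = len(m[0]) if m else 0
    r = 0  # index of the next pivot row
    for c in range(n_cols):
        if r == n_rows:
            break
        # Find a row at or below r with a nonzero entry in column c.
        pivot = next((i for i in range(r, n_rows) if m[i][c] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        # Eliminate column c from all other rows.
        for i in range(n_rows):
            if i != r and m[i][c] != 0:
                f = m[i][c] / m[r][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        r += 1
    return r


def linearly_independent(vectors):
    """x_1, ..., x_k are independent iff the k rows x_i^T have rank k,
    i.e. iff only alpha = 0 gives alpha_1 x_1 + ... + alpha_k x_k = 0."""
    return rank(vectors) == len(vectors)


print(linearly_independent([[1, 0, 1], [0, 1, 1]]))             # True
print(linearly_independent([[1, 0, 1], [0, 1, 1], [1, 1, 2]]))  # False: x_3 = x_1 + x_2
```

Exact fractions avoid the rounding issues that a floating-point pivot test would introduce for small examples like these.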

Optimization and Differential Games. J. Engwerda. © 2005 John Wiley & Sons, Ltd

Let $S$ be a subspace of $\mathbb{R}^n$. A set of vectors $\{b_1, b_2, \ldots, b_k\}$ is called a basis for $S$ if this set of vectors is linearly independent and $S = \mathrm{Span}\{b_1, b_2, \ldots, b_k\}$.

Example 2.1 Consider the vectors $e_1 := \begin{bmatrix}1\\0\end{bmatrix}$ and $e_2 := \begin{bmatrix}0\\1\end{bmatrix}$ in $\mathbb{R}^2$. Then both $\{e_1, e_2\}$ and $\{[\ldots], [\ldots]\}$ are a basis for $\mathbb{R}^2$. The set $\{e_1, e_2\}$ is called the standard basis for $\mathbb{R}^2$. □

So, a basis for a subspace $S$ is not unique. However, all bases for $S$ have the same number of elements. This number is called the dimension of $S$ and is denoted by $\dim(S)$. In the above example the dimension of $\mathbb{R}^2$ is 2.

Next we consider the question under which conditions two vectors are perpendicular. First the length of a vector $x$ is introduced, which will be denoted by $\|x\|_2$. If $x = \begin{bmatrix}a_1\\a_2\end{bmatrix}$ then, using the theorem of Pythagoras, the length of $x$ is $\|x\|_2 = \sqrt{a_1^2 + a_2^2}$. Using induction it is easy to verify that the length of a vector $x = [a_1\ \cdots\ a_n]^T \in \mathbb{R}^n$ is $\|x\|_2 = \sqrt{a_1^2 + \cdots + a_n^2}$. Introducing the superscript $T$ for transposition of a vector, i.e. $x^T = [a_1\ \cdots\ a_n]$, we can rewrite this result in shorthand as $\|x\|_2 = \sqrt{x^T x}$. Now, two vectors $x$ and $y$ are perpendicular if they enclose an angle of $90^\circ$. Using Pythagoras' theorem again, we conclude that two vectors $x$ and $y$ are perpendicular if and only if the length of the hypotenuse satisfies $\|x - y\|_2^2 = \|x\|_2^2 + \|y\|_2^2$. Or, rephrased in our previous terminology: $(x - y)^T(x - y) = x^T x + y^T y$. Using the elementary vector calculation rules and the fact that the transpose of a scalar is the same scalar (i.e. $x^T y = y^T x$), a straightforward calculation shows that the following theorem holds.

Theorem 2.1 Two vectors $x, y \in \mathbb{R}^n$ are perpendicular if and only if $x^T y = 0$. □

Based on this result we next introduce the concept of orthogonality. A set of vectors $\{x_1, \ldots, x_n\}$ is mutually orthogonal if $x_i^T x_j = 0$ for all $i \neq j$, and orthonormal if $x_i^T x_j = \delta_{ij}$. Here $\delta_{ij}$ is the Kronecker delta function, with $\delta_{ij} = 1$ for $i = j$ and $\delta_{ij} = 0$ for $i \neq j$. More generally, a collection of subspaces $S_1, \ldots, S_k$ is mutually orthogonal if $x^T y = 0$ whenever $x \in S_i$ and $y \in S_j$, for $i \neq j$.

The orthogonal complement of a subspace $S$ is defined by
$$S^\perp := \{y \in \mathbb{R}^n \mid y^T x = 0 \text{ for all } x \in S\}.$$

A set of vectors $\{u_1, u_2, \ldots, u_k\}$ is called an orthonormal basis for a subspace $S \subset \mathbb{R}^n$ if they form a basis of $S$ and are orthonormal. Using the orthogonalization procedure of Gram-Schmidt¹, it is always possible to extend such a basis to a full orthonormal basis $\{u_1, u_2, \ldots, u_n\}$ for $\mathbb{R}^n$. This procedure is given in the following theorem.

Theorem 2.2 Given a basis $\{b_1, b_2, \ldots, b_m\}$ for a subspace $S$ of $\mathbb{R}^n$, define
$$v_1 := b_1,$$
$$v_2 := b_2 - \frac{b_2^T v_1}{v_1^T v_1}\,v_1,$$
$$v_3 := b_3 - \frac{b_3^T v_1}{v_1^T v_1}\,v_1 - \frac{b_3^T v_2}{v_2^T v_2}\,v_2,$$
$$\vdots$$
$$v_m := b_m - \frac{b_m^T v_1}{v_1^T v_1}\,v_1 - \frac{b_m^T v_2}{v_2^T v_2}\,v_2 - \cdots - \frac{b_m^T v_{m-1}}{v_{m-1}^T v_{m-1}}\,v_{m-1}.$$
Then $\left\{\frac{v_1}{\|v_1\|_2}, \ldots, \frac{v_m}{\|v_m\|_2}\right\}$ is an orthonormal basis for $S$. In addition,
$$\mathrm{Span}\{v_1, v_2, \ldots, v_k\} = \mathrm{Span}\{b_1, b_2, \ldots, b_k\}, \quad 1 \le k \le m.$$ □

In the above sketched case, let $\{u_1, \ldots, u_k\}$, with $u_i := v_i/\|v_i\|_2$, be an orthonormal basis for $S$ that has been extended to an orthonormal basis $\{u_1, \ldots, u_n\}$ for $\mathbb{R}^n$. Then $S^\perp = \mathrm{Span}\{u_{k+1}, \ldots, u_n\}$. Since $\{u_1, \ldots, u_n\}$ form a basis for $\mathbb{R}^n$, $\{u_{k+1}, \ldots, u_n\}$ is called an orthonormal completion of $\{u_1, u_2, \ldots, u_k\}$.

An ordered array of $mn$ elements $a_{ij} \in \mathbb{R}$, $i = 1, \ldots, n$; $j = 1, \ldots, m$, written in the form
$$A = \begin{bmatrix} a_{11} & a_{12} & \cdots & a_{1m}\\ a_{21} & a_{22} & \cdots & a_{2m}\\ \vdots & \vdots & & \vdots\\ a_{n1} & a_{n2} & \cdots & a_{nm}\end{bmatrix}$$
is said to be an $n \times m$ matrix with entries in $\mathbb{R}$. The set of all $n \times m$ matrices will be denoted by $\mathbb{R}^{n\times m}$. If $m = n$, $A$ is called a square matrix. With a matrix $A \in \mathbb{R}^{n\times m}$ one can associate the linear map $x \mapsto Ax$ from $\mathbb{R}^m$ to $\mathbb{R}^n$. The kernel or null space of $A$ is defined by
$$\ker A = N(A) := \{x \in \mathbb{R}^m \mid Ax = 0\},$$
and the image or range of $A$ is
$$\mathrm{Im}\,A = R(A) := \{y \in \mathbb{R}^n \mid y = Ax,\ x \in \mathbb{R}^m\}.$$

¹Gram was a Danish mathematician who lived from 1850-1916 and worked in the insurance business. Schmidt was a German mathematician who lived from 1876-1959. Schmidt reproved the orthogonalization procedure from Gram in a more general context in 1906. However, Gram was not the first to use this procedure. The procedure seems to be a result of Laplace and it was essentially used by Cauchy in 1836.
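The Gram-Schmidt recursion of Theorem 2.2 translates almost line by line into code. The sketch below is a minimal illustration and makes three assumptions of its own: vectors are plain Python lists, the orthogonal vectors $v_k$ are kept exact with `fractions.Fraction` so that orthogonality can be tested with `== 0`, and the basis used is a hypothetical one chosen only for illustration. Only the final normalization $u_k := v_k/\|v_k\|_2$ is done in floating point.

```python
from fractions import Fraction
from math import sqrt


def dot(x, y):
    """Inner product x^T y."""
    return sum(a * b for a, b in zip(x, y))


def gram_schmidt(basis):
    """Orthogonal vectors v_1, ..., v_m from a basis b_1, ..., b_m, using
    v_k := b_k - sum_{j<k} (b_k^T v_j / v_j^T v_j) v_j  (exact arithmetic)."""
    vs = []
    for b in basis:
        v = [Fraction(c) for c in b]
        for w in vs:
            coef = Fraction(dot(b, w)) / dot(w, w)
            v = [vi - coef * wi for vi, wi in zip(v, w)]
        vs.append(v)
    return vs


def normalize(v):
    """u := v / ||v||_2 (floating point, since ||v||_2 is in general irrational)."""
    length = sqrt(float(dot(v, v)))
    return [float(c) / length for c in v]


# Hypothetical basis of R^3, chosen only for illustration.
basis = [[1, 1, 0], [1, 0, 1], [0, 1, 1]]
vs = gram_schmidt(basis)
us = [normalize(v) for v in vs]
print(vs[2])  # [Fraction(-2, 3), Fraction(2, 3), Fraction(2, 3)]
```

This is the classical form of the procedure, matching the formulas in the theorem. In floating-point work the "modified" variant, which projects the running vector $v$ instead of $b_k$, is usually preferred for numerical stability.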

The following theorem is a fundamental result.

Theorem 2.3 Let $A \in \mathbb{R}^{n\times m}$. Then
1. $\ker A$ is a linear subspace of $\mathbb{R}^m$ and $\mathrm{Im}\,A$ is a linear subspace of $\mathbb{R}^n$;
2. $\dim(\mathrm{Im}\,A) = \dim((\ker A)^\perp)$;
3. $\dim(\ker A) + \dim(\mathrm{Im}\,A) = m$. □

Let $a_i$, $i = 1, \ldots, m$, denote the columns of a matrix $A \in \mathbb{R}^{n\times m}$. Then $\mathrm{Im}\,A = \mathrm{Span}\{a_1, \ldots, a_m\}$.

The rank of a matrix $A$ is defined by $\mathrm{rank}(A) = \dim(\mathrm{Im}\,A)$. Thus the rank of a matrix is just the number of independent columns in $A$. One can show that $\mathrm{rank}(A) = \mathrm{rank}(A^T)$. Consequently, the rank of a matrix also coincides with the number of independent rows in $A$. A matrix $A \in \mathbb{R}^{n\times m}$ is said to have full row rank if $n \le m$ and $\mathrm{rank}(A) = n$. Likewise, it is said to have full column rank if $m \le n$ and $\mathrm{rank}(A) = m$. A full rank square matrix is called a nonsingular or invertible matrix; otherwise it is called singular. The following result is well-known.

Theorem 2.4 Let $A \in \mathbb{R}^{n\times m}$ and $b \in \mathbb{R}^n$. Then $Ax = b$ has
1. at most one solution $x$ if $A$ has full column rank;
2. at least one solution $x$ if $A$ has full row rank;
3. a solution if and only if $\mathrm{rank}([A \mid b]) = \mathrm{rank}(A)$;
4. a unique solution if $A$ is invertible. □

If a matrix $A$ is invertible, one can show that the matrix equation $AX = I$ has a unique solution $X \in \mathbb{R}^{n\times n}$. Here $I$ is the $n \times n$ identity matrix, with entries $e_{ij} := \delta_{ij}$, $i, j = 1, \ldots, n$, where $\delta_{ij}$ is the Kronecker delta. Moreover, this matrix $X$ also satisfies the matrix equation $XA = I$. Matrix $X$ is called the inverse of matrix $A$, and the notation $A^{-1}$ is used to denote this inverse.

A notion that is useful to see whether or not a square $n \times n$ matrix $A$ is singular is the determinant of $A$, denoted by $\det(A)$. The next theorem lists some properties of determinants.

Theorem 2.5 Let $A, B \in \mathbb{R}^{n\times n}$; $C \in \mathbb{R}^{m\times n}$; $D \in \mathbb{R}^{m\times m}$; and let $0 \in \mathbb{R}^{n\times m}$ be the matrix with all entries zero. Then,
1. …;
2. $A$ is invertible if and only if $\det(A) \neq 0$;
3. …;
4. …;
5. $\det\left(\begin{bmatrix} A & 0\\ C & D\end{bmatrix}\right) = \det(A)\det(D)$. □

Next we present Cramer's rule² to calculate the inverse of a matrix. This way of calculating the inverse is sometimes helpful in theoretical calculations, as we will see later on.

For any $n \times n$ matrix $A$ and any $b \in \mathbb{R}^n$, let $A_i(b)$ be the matrix obtained from $A$ by replacing column $i$ by the vector $b$.

Theorem 2.6 (Cramer's rule) Let $A$ be an invertible $n \times n$ matrix. For any $b \in \mathbb{R}^n$, the unique solution $x$ of $Ax = b$ has entries given by
$$x_i = \frac{\det A_i(b)}{\det A}, \quad i = 1, 2, \ldots, n.$$ □

²Cramer was a well-known Swiss mathematician who lived from 1704-1752. He showed this rule in an appendix of his book (Cramer, 1750). However, he was not the first one to give this rule. The Japanese mathematician Takakazu and the German mathematician Leibniz had already considered this idea in 1683 and 1693, respectively, long before a separate theory of matrices was developed.
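Cramer's rule can be sketched directly from its statement: compute $\det A$ once, then $\det A_i(b)$ for every column $i$. The sketch below assumes square matrices given as lists of rows and computes determinants by cofactor expansion along the first row. The function names (`det`, `replace_column`, `cramer`) and the $2 \times 2$ system are hypothetical illustrations.

```python
from fractions import Fraction


def det(a):
    """Determinant by cofactor expansion along the first row (adequate for small n)."""
    if len(a) == 1:
        return a[0][0]
    total = 0
    for j in range(len(a)):
        minor = [row[:j] + row[j + 1:] for row in a[1:]]
        total += (-1) ** j * a[0][j] * det(minor)
    return total


def replace_column(a, i, b):
    """A_i(b): the matrix A with its column i replaced by the vector b."""
    return [row[:i] + [b_k] + row[i + 1:] for row, b_k in zip(a, b)]


def cramer(a, b):
    """Solve Ax = b via x_i = det A_i(b) / det A (requires det A != 0)."""
    d = det(a)
    if d == 0:
        raise ValueError("A is singular, so Cramer's rule does not apply")
    return [Fraction(det(replace_column(a, i, b)), d) for i in range(len(a))]


# Hypothetical 2 x 2 system: 2 x_1 + x_2 = 3, x_1 + 3 x_2 = 5.
print(cramer([[2, 1], [1, 3]], [3, 5]))  # [Fraction(4, 5), Fraction(7, 5)]
```

Cofactor expansion takes on the order of $n!$ operations. That is acceptable for the small matrices used in this chapter, and in line with the text's remark that the rule is mainly useful for theoretical calculations.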

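Cramer's rule leads easily to a general formula for the inverse of an $n \times n$ matrix $A$. To see this, notice that the $j$th column of $A^{-1}$ is a vector $x$ that satisfies $Ax = e_j$, where $e_j$ is the $j$th column of the identity matrix. By Cramer's rule,
$$x_{ij} := [(i,j)\text{-entry of } A^{-1}] = \frac{\det A_i(e_j)}{\det A}. \qquad (2.1.1)$$
Let $A_{ji}$ denote the submatrix of $A$ formed by deleting row $j$ and column $i$ from matrix $A$. An expansion of the determinant down column $i$ of $A_i(e_j)$ shows that
$$\det A_i(e_j) = (-1)^{i+j}\det A_{ji} =: C_{ji}.$$
Thus
$$A^{-1} = \frac{1}{\det A}\begin{bmatrix} C_{11} & C_{21} & \cdots & C_{n1}\\ C_{12} & C_{22} & \cdots & C_{n2}\\ \vdots & \vdots & & \vdots\\ C_{1n} & C_{2n} & \cdots & C_{nn}\end{bmatrix}. \qquad (2.1.2)$$
The entries $C_{ji}$ are the so-called cofactors of matrix $A$. Notice that the subscripts on $C_{ji}$ are the reverse of the entry number $(i,j)$ in the matrix. The matrix of cofactors on the right-hand side of (2.1.2) is called the adjoint of $A$, denoted by $\mathrm{adj}\,A$. The next theorem simply restates (2.1.2).

Theorem 2.7 Let $A$ be an invertible $n \times n$ matrix. Then
$$A^{-1} = \frac{1}{\det A}\,\mathrm{adj}(A). \qquad (2.1.3)$$ □

Example 2.2 If
$$A = \begin{bmatrix} 2-\lambda & 0 & 1\\ 1 & 2-\lambda & -1\\ -1 & 0 & 3-\lambda\end{bmatrix},$$
then
$$\mathrm{adj}(A) = \begin{bmatrix}
\det\begin{bmatrix}2-\lambda & -1\\ 0 & 3-\lambda\end{bmatrix} & -\det\begin{bmatrix}0 & 1\\ 0 & 3-\lambda\end{bmatrix} & \det\begin{bmatrix}0 & 1\\ 2-\lambda & -1\end{bmatrix}\\
-\det\begin{bmatrix}1 & -1\\ -1 & 3-\lambda\end{bmatrix} & \det\begin{bmatrix}2-\lambda & 1\\ -1 & 3-\lambda\end{bmatrix} & -\det\begin{bmatrix}2-\lambda & 1\\ 1 & -1\end{bmatrix}\\
\det\begin{bmatrix}1 & 2-\lambda\\ -1 & 0\end{bmatrix} & -\det\begin{bmatrix}2-\lambda & 0\\ -1 & 0\end{bmatrix} & \det\begin{bmatrix}2-\lambda & 0\\ 1 & 2-\lambda\end{bmatrix}
\end{bmatrix}
= \begin{bmatrix}\lambda^2 - 5\lambda + 6 & 0 & \lambda - 2\\ \lambda - 2 & \lambda^2 - 5\lambda + 7 & -\lambda + 3\\ -\lambda + 2 & 0 & \lambda^2 - 4\lambda + 4\end{bmatrix}.$$
Notice that all entries of the adjoint matrix are polynomials whose degree does not exceed 2. Furthermore, $\det(A) = -\lambda^3 + 7\lambda^2 - 17\lambda + 14$, which is a polynomial of degree 3. □

If the entries of $A \in \mathbb{R}^{n\times n}$ are $a_{ij}$, $i, j = 1, \ldots, n$, the trace of $A$ is defined as the sum of its diagonal entries:
$$\mathrm{trace}(A) := a_{11} + a_{22} + \cdots + a_{nn}.$$
The next properties of the trace are well-known.

Theorem 2.8
1. $\mathrm{trace}(A + B) = \mathrm{trace}(A) + \mathrm{trace}(B)$, $\forall A, B \in \mathbb{R}^{n\times n}$;
2. $\mathrm{trace}(\alpha A) = \alpha\,\mathrm{trace}(A)$, $\forall A \in \mathbb{R}^{n\times n}$ and $\alpha \in \mathbb{R}$;
3. $\mathrm{trace}(AB) = \mathrm{trace}(BA)$, $\forall A \in \mathbb{R}^{n\times m}$, $B \in \mathbb{R}^{m\times n}$. □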
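Example 2.2 and the identity behind (2.1.3) can be checked numerically. The sketch below builds the adjoint entrywise from the cofactors $C_{ji} = (-1)^{i+j}\det A_{ji}$. It then evaluates the matrix of Example 2.2 at several integer values of $\lambda$, which is an illustrative choice of ours, and compares the result with the closed-form polynomial entries. It also checks $A\,\mathrm{adj}(A) = \det(A)\,I$, and finally tests $\mathrm{trace}(AB) = \mathrm{trace}(BA)$ on a hypothetical non-square pair. All helper names are hypothetical.

```python
def det(a):
    """Determinant by cofactor expansion along the first row."""
    if len(a) == 1:
        return a[0][0]
    return sum((-1) ** j * a[0][j] * det([r[:j] + r[j + 1:] for r in a[1:]])
               for j in range(len(a)))


def adj(a):
    """Adjoint of A: its (i, j) entry is the cofactor C_ji = (-1)^(i+j) det A_ji,
    where A_ji is A with row j and column i deleted."""
    n = len(a)

    def sub(r, c):
        return [row[:c] + row[c + 1:] for k, row in enumerate(a) if k != r]

    return [[(-1) ** (i + j) * det(sub(j, i)) for j in range(n)] for i in range(n)]


def matmul(x, y):
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def trace(a):
    return sum(a[i][i] for i in range(len(a)))


def example_2_2(lam):
    """The matrix of Example 2.2 for a given numerical value of lambda."""
    return [[2 - lam, 0, 1], [1, 2 - lam, -1], [-1, 0, 3 - lam]]


def adj_closed_form(lam):
    """The polynomial entries of adj(A) derived in Example 2.2."""
    return [[lam**2 - 5 * lam + 6, 0, lam - 2],
            [lam - 2, lam**2 - 5 * lam + 7, -lam + 3],
            [-lam + 2, 0, lam**2 - 4 * lam + 4]]


for lam in range(-3, 4):
    a = example_2_2(lam)
    d = det(a)
    assert adj(a) == adj_closed_form(lam)
    assert d == -lam**3 + 7 * lam**2 - 17 * lam + 14
    # A adj(A) = det(A) I; for det(A) != 0 this is (2.1.3).
    assert matmul(a, adj(a)) == [[d if i == j else 0 for j in range(3)] for i in range(3)]

# trace(AB) = trace(BA) for a 2 x 3 matrix A and a 3 x 2 matrix B:
A = [[1, 2, 3], [4, 5, 6]]
B = [[1, 0], [0, 1], [1, 1]]
print(trace(matmul(A, B)), trace(matmul(B, A)))  # 15 15
```

Agreement of two polynomials at seven points is a sanity check rather than a proof. Since every entry here has degree at most 3, however, agreement at more than three points does force the polynomial identities.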

Let $A \in \mathbb{R}^{n\times n}$. Then $\lambda \in \mathbb{R}$ is called an eigenvalue of $A$ if there exists a vector $x \in \mathbb{R}^n$, different from zero, such that $Ax = \lambda x$. If such a scalar $\lambda$ and corresponding vector $x$ exist, the vector $x$ is called an eigenvector. If $A$ has an eigenvalue $\lambda$, it follows that there exists a nonzero vector $x$ such that $(A - \lambda I)x = 0$. Stated differently, matrix $A - \lambda I$ is singular. So, according to Theorem 2.5, $\lambda$ is an eigenvalue of matrix $A$ if and only if $\det(A - \lambda I) = 0$. All nonzero vectors in the null space of $A - \lambda I$ are then the eigenvectors corresponding to $\lambda$. As a consequence, the set of eigenvectors corresponding to an eigenvalue $\lambda$, together with the zero vector, forms a subspace. This subspace is called the eigenspace of $\lambda$, and we denote it by $E_\lambda$. So, to find the eigenvalues of a matrix $A$ we have to find those values $\lambda$ for which $\det(A - \lambda I) = 0$. Since $p(\lambda) := \det(A - \lambda I)$ is a polynomial of degree $n$, $p(\lambda)$ is called the characteristic polynomial of $A$. The set of roots of this polynomial is called the spectrum of $A$ and is denoted by $\sigma(A)$.

An important property of eigenvectors corresponding to different eigenvalues is that they are always independent.

Theorem 2.9 Let $A \in \mathbb{R}^{n\times n}$ and let $\lambda_1, \lambda_2$ be two different eigenvalues of $A$ with corresponding eigenvectors $x_1$ and $x_2$, respectively. Then $\{x_1, x_2\}$ are linearly independent.

Proof: Assume $x_2 = \mu x_1$ for some nonzero scalar $\mu \in \mathbb{R}$. Then
$$0 = A(x_2 - \mu x_1) = Ax_2 - \mu Ax_1 = \lambda_2 x_2 - \mu\lambda_1 x_1 = \lambda_2\mu x_1 - \mu\lambda_1 x_1 = \mu(\lambda_2 - \lambda_1)x_1 \neq 0,$$
due to the stated assumptions. This is a contradiction, and therefore our assumption that $x_2$ is a multiple of $x_1$ must be incorrect. □
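The definitions above can be checked numerically. A pair $(\lambda, x)$ is an eigenpair if $x \neq 0$ and $Ax = \lambda x$, and then $\lambda$ is a root of $p(\lambda) = \det(A - \lambda I)$. Theorem 2.9 implies that the matrix $[x_1\ x_2]$ formed from eigenvectors of two different eigenvalues is nonsingular. The sketch below checks all three statements. The symmetric matrix and its eigenpairs are a hypothetical example computed by hand, not taken from the text.

```python
def matvec(a, x):
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]


def det2(m):
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def is_eigenpair(a, lam, x):
    """True if x != 0 and Ax = lam x."""
    return any(v != 0 for v in x) and matvec(a, x) == [lam * v for v in x]


# Hypothetical example A = [[2, 1], [1, 2]] with eigenvalues 1 and 3.
A = [[2, 1], [1, 2]]
pairs = [(1, [1, -1]), (3, [1, 1])]
for lam, x in pairs:
    assert is_eigenpair(A, lam, x)
    # lam is a root of the characteristic polynomial p(lam) = det(A - lam I).
    assert det2([[A[0][0] - lam, A[0][1]], [A[1][0], A[1][1] - lam]]) == 0

# Theorem 2.9: eigenvectors of different eigenvalues are independent,
# so the matrix [x_1 x_2] is nonsingular.
x1, x2 = pairs[0][1], pairs[1][1]
print(det2([[x1[0], x2[0]], [x1[1], x2[1]]]))  # 2
```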

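Example 2.3
1. Consider matrix $A_1 = [\ldots]$. The characteristic polynomial of $A_1$ is $p(\lambda) = \det(A_1 - \lambda I) = (\lambda - 1)(\lambda - 2)$. So $\sigma(A_1) = \{1, 2\}$.
2. Consider matrix $A_2 = [\ldots]$. The characteristic polynomial of $A_2$ is $(\lambda + 2)^2$. So $\sigma(A_2) = \{-2\}$, and the corresponding eigenspace $E_{-2}$ is one-dimensional.
3. Consider matrix $A_3 = \begin{bmatrix}3 & 0\\ 0 & 3\end{bmatrix}$. The characteristic polynomial of $A_3$ is $(\lambda - 3)^2$. So $\sigma(A_3) = \{3\}$. Furthermore, $E_3 = \mathbb{R}^2$.
4. Consider matrix $A_4 = [\ldots]$. The characteristic polynomial of $A_4$ is $\lambda^2 - 4\lambda + 5$. This polynomial has no real roots. So, matrix $A_4$ has no real eigenvalues. □

The above example illustrates a number of properties that hold in the general case (Lancaster and Tismenetsky, 1985). It turns out that the characteristic polynomial can always be factorized as follows.

Theorem 2.10 Let $A \in \mathbb{R}^{n\times n}$. Then the characteristic polynomial $p(\lambda)$ of $A$ can be factorized as the product of different linear and quadratic terms, i.e.
$$p(\lambda) = c(\lambda - \lambda_1)^{n_1}\cdots(\lambda - \lambda_k)^{n_k}(\lambda^2 + b_{k+1}\lambda + c_{k+1})^{n_{k+1}}\cdots(\lambda^2 + b_r\lambda + c_r)^{n_r},$$
for some scalars $c$, $\lambda_i$, $b_i$ and $c_i$, with $\lambda_i \neq \lambda_j$ and $\begin{bmatrix}b_i\\ c_i\end{bmatrix} \neq \begin{bmatrix}b_j\\ c_j\end{bmatrix}$ for $i \neq j$, and where the quadratic terms do not have real roots. Furthermore, $\sum_{i=1}^{k} n_i + 2\sum_{i=k+1}^{r} n_i = n$. □

The power index $n_i$ of the factor $\lambda - \lambda_i$ in this factorization is called the algebraic multiplicity of the eigenvalue $\lambda_i$. Closely related to this number is the geometric multiplicity of the eigenvalue $\lambda_i$, which is the dimension of the corresponding eigenspace $E_{\lambda_i}$. In Example 2.3 we see that, for every eigenvalue, the geometric multiplicity is smaller than or equal to its algebraic multiplicity. For instance, for $A_1$ both multiplicities are 1 for both eigenvalues, whereas for $A_2$ the geometric multiplicity of the eigenvalue $-2$ is 1 and its algebraic multiplicity is 2. This property holds in general.

Theorem 2.11 Let $\lambda_i$ be an eigenvalue of $A$. Then its geometric multiplicity is always smaller than (or equal to) its algebraic multiplicity.

Proof: Assume $\lambda_1$ is an eigenvalue of $A$ and $\{b_1, \ldots, b_k\}$ is a set of independent eigenvectors that span the corresponding eigenspace. Extend this basis of the eigenspace to a basis $\{b_1, \ldots, b_n\}$ for $\mathbb{R}^n$, and let $S$ be the matrix with columns $b_1, \ldots, b_n$. Then, since $Ab_i = \lambda_1 b_i$, $i = 1, \ldots, k$, we have
$$A = S\begin{bmatrix}\lambda_1 I_k & E\\ 0 & D\end{bmatrix}S^{-1},$$
where $I_k$ denotes the $k \times k$ identity matrix, $E$ is some $k \times (n-k)$ matrix and $D$ is a square $(n-k)\times(n-k)$ matrix. Therefore
$$\det(A - \lambda I) = \det\left(S\begin{bmatrix}(\lambda_1 - \lambda)I_k & E\\ 0 & D - \lambda I_{n-k}\end{bmatrix}S^{-1}\right) = (\lambda_1 - \lambda)^k\det(D - \lambda I_{n-k}).$$
So, the algebraic multiplicity of $\lambda_1$ is at least $k$. □

Whenever there is a strict inequality between the geometric and algebraic multiplicity of an eigenvalue $\lambda_1$, there is a natural way to extend the eigenspace towards a larger subspace whose dimension equals the corresponding algebraic multiplicity. This larger subspace is called the generalized eigenspace of the eigenvalue $\lambda_1$ and is given by $\ker(A - \lambda_1 I)^{n_1}$. In fact, there exists a minimal index $p \le n$ for which $\ker(A - \lambda_1 I)^p = \ker(A - \lambda_1 I)^{p+1}$. This follows from the property that $\ker(A - \lambda_1 I)^i \subset \ker(A - \lambda_1 I)^{i+1}$, and the fact that whenever $\ker(A - \lambda_1 I)^i = \ker(A - \lambda_1 I)^{i+1}$, also $\ker(A - \lambda_1 I)^{i+1} = \ker(A - \lambda_1 I)^{i+2}$ (see Exercises).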
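For $2 \times 2$ matrices the notions above can be made concrete. Here $\det(A - \lambda I) = \lambda^2 - \mathrm{trace}(A)\lambda + \det(A)$, so the eigenvalues follow from the quadratic formula. A negative discriminant gives a conjugate pair, i.e. an irreducible quadratic factor as in Theorem 2.10. The geometric multiplicity equals $2 - \mathrm{rank}(A - \lambda I)$. The sketch below uses Python's `cmath.sqrt` so that both cases are handled. The example matrices are hypothetical illustrations, not the matrices of Example 2.3, whose entries are not reproduced here.

```python
import cmath


def eigenvalues_2x2(a):
    """Roots of p(lam) = det(A - lam I) = lam^2 - trace(A) lam + det(A)."""
    tr = a[0][0] + a[1][1]
    d = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    root = cmath.sqrt(tr * tr - 4 * d)  # imaginary when p has no real roots
    return (tr + root) / 2, (tr - root) / 2


def geometric_multiplicity_2x2(a, lam):
    """dim E_lam = dim ker(A - lam I) = 2 - rank(A - lam I)."""
    m = [[a[0][0] - lam, a[0][1]], [a[1][0], a[1][1] - lam]]
    if all(v == 0 for row in m for v in row):
        return 2
    if m[0][0] * m[1][1] - m[0][1] * m[1][0] != 0:
        return 0  # lam is not an eigenvalue
    return 1


# Hypothetical examples:
print(eigenvalues_2x2([[1, -2], [1, 3]]))  # ((2+1j), (2-1j)): p = lam^2 - 4 lam + 5
jordan_like = [[-2, 1], [0, -2]]           # double root -2 of p(lam) = (lam + 2)^2
print(eigenvalues_2x2(jordan_like))
print(geometric_multiplicity_2x2(jordan_like, -2))  # 1, smaller than the algebraic multiplicity 2
```

The second matrix shows the strict inequality of Theorem 2.11. A diagonal matrix such as $3I$ attains equality, with geometric multiplicity 2.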

In the previous subsection we saw that the characteristic polynomial of an $n \times n$ matrix is a polynomial of degree $n$ that can be factorized as the product of different linear and quadratic terms (see Theorem 2.10). Furthermore, none of these quadratic terms can be factorized as the product of two linear terms. Without loss of generality such a quadratic term can be written as
$$p(\lambda) = \lambda^2 - 2a\lambda + a^2 + b^2, \quad b \neq 0. \qquad (2.3.1)$$
Next we introduce the symbol $i$ to denote the square root of $-1$. So, by definition, $i := \sqrt{-1}$. Using this notation, the equation $p(\lambda) = 0$ has two roots
$$\lambda_j = \frac{2a \pm \sqrt{4a^2 - 4(a^2 + b^2)}}{2} = a \pm \sqrt{-b^2} = a \pm bi, \quad j = 1, 2.$$
Or, stated differently, with this notation $p(\lambda)$ in (2.3.1) has the two roots
$$\lambda_1 = a + bi \quad\text{and}\quad \lambda_2 = a - bi. \qquad (2.3.2)$$

An expression $z$ of the form
$$z = x + yi,$$
where $x$ and $y$ are real numbers and $i$ is the formal symbol satisfying the relation $i^2 = -1$, is called a complex number. $x$ is called the real part of $z$ and $y$ the imaginary part of $z$. Since any complex number $x + iy$ is uniquely determined by the numbers $x$ and $y$, one can visualize the set of all complex numbers as the set of all points $(x, y)$ in the plane $\mathbb{R}^2$, as in Figure 2.1. The horizontal axis is called the real axis because the points $(x, 0)$ on it correspond to the real numbers. The vertical axis is the imaginary axis because the points $(0, y)$ on it correspond to the pure imaginary numbers of the form $0 + yi$, or simply $yi$.

[Figure 2.1: The complex plane $\mathbb{C}$ (axes: real axis, imaginary axis; the point $z = x + iy$)]

Given this representation of the set of complex numbers, it seems reasonable to introduce the addition of two complex numbers just like the addition of two vectors in $\mathbb{R}^2$, i.e.
$$(x_1 + y_1 i) + (x_2 + y_2 i) := (x_1 + x_2) + (y_1 + y_2)i.$$
Note that this rule reduces to ordinary addition of real numbers when $y_1$ and $y_2$ are zero. Furthermore, by our definition $i^2 = -1$, we have implicitly also introduced the operation of multiplication of two complex numbers. This operation is defined by
$$(x_1 + y_1 i)(x_2 + y_2 i) := (x_1 x_2 - y_1 y_2) + (x_1 y_2 + x_2 y_1)i.$$
Note that this operation also induces a multiplication rule for two vectors in $\mathbb{R}^2$:
$$\begin{bmatrix}x_1\\ y_1\end{bmatrix} * \begin{bmatrix}x_2\\ y_2\end{bmatrix} := \begin{bmatrix}x_1 x_2 - y_1 y_2\\ x_1 y_2 + x_2 y_1\end{bmatrix}.$$
We will not, however, elaborate this point.

From equation (2.3.2) it is clear that the complex number $x - yi$ is closely related to the complex number $z = x + yi$. The complex number $x - yi$ is called the conjugate of $z$ and is denoted by $\bar z$ (read as 'z bar'). So, the conjugate of a complex number $z$ is obtained by reversing the sign of the imaginary part.

Example 2.4 The conjugate of $z = -2 - 4i$ is $\bar z = -2 + 4i$. Geometrically, $\bar z$ is the mirror image of $z$ in the real axis (see Figure 2.2). □

[Figure 2.2: $z = x + iy$ and its conjugate $\bar z = x - iy$ (axes: real axis, imaginary axis)]

The absolute value or modulus of a complex number $z = x + yi$ is the length of the associated vector $\begin{bmatrix}x\\ y\end{bmatrix}$ in $\mathbb{R}^2$. That is, the absolute value of $z$ is the real number $|z|$ defined by
$$|z| = \sqrt{x^2 + y^2}.$$
This number $|z|$ coincides with the square root of the product of $z$ with its conjugate $\bar z$, i.e. $|z| = \sqrt{z\bar z}$.

We now turn to the division of complex numbers. The objective is to define division as the inverse of multiplication. Thus, if $z \neq 0$, then $\frac{1}{z}$ is defined as the complex number $w$ that satisfies
$$wz = 1. \qquad (2.3.3)$$
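Python's built-in `complex` type implements exactly the addition, multiplication, conjugation and modulus defined above. The sketch below compares the induced multiplication rule on $\mathbb{R}^2$, written out by hand in a hypothetical helper `mul_pairs`, with complex multiplication. It also reproduces the conjugate of Example 2.4 and checks $|z| = \sqrt{z\bar z}$. The numbers $1 + 2i$, $3 + 4i$ are illustrative choices.

```python
import math


def mul_pairs(p, q):
    """Multiplication rule on R^2 induced by (x1 + y1 i)(x2 + y2 i)."""
    (x1, y1), (x2, y2) = p, q
    return (x1 * x2 - y1 * y2, x1 * y2 + x2 * y1)


z1, z2 = complex(1, 2), complex(3, 4)
product = z1 * z2
print(mul_pairs((1, 2), (3, 4)), product)  # (-5, 10) (-5+10j)

z = complex(-2, -4)
print(z.conjugate())  # (-2+4j): the mirror image of z in the real axis

w = complex(3, 4)
print(abs(w), math.sqrt((w * w.conjugate()).real))  # 5.0 5.0
```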

In contrast with the real numbers, it is not a priori clear that for every complex number $z \neq 0$ there exists a complex number $w$ satisfying this relationship. The next theorem states that this number $w$ exists and gives a representation of it.

Theorem 2.12 If $z \neq 0$, then (2.3.3) has a unique solution, which is
$$w = \frac{\bar z}{|z|^2}.$$
Proof: Let $z = a + bi$ and $w = x + yi$. Then equation (2.3.3) can be written as
$$(x + yi)(a + bi) = 1,$$
or, stated differently, as $ax - by - 1 + (bx + ay)i = 0$. Therefore, equation (2.3.3) has a unique solution if and only if the next set of two equations has a unique solution $x, y$:
$$ax - by = 1, \qquad bx + ay = 0.$$
Or, stated differently, the equation $\begin{bmatrix}a & -b\\ b & a\end{bmatrix}\begin{bmatrix}x\\ y\end{bmatrix} = \begin{bmatrix}1\\ 0\end{bmatrix}$ has a unique solution. Note that $\det\left(\begin{bmatrix}a & -b\\ b & a\end{bmatrix}\right) = a^2 + b^2 \neq 0$. So, the solution $\begin{bmatrix}x\\ y\end{bmatrix}$ is unique. It is easily verified that $x = \frac{a}{a^2 + b^2}$ and $y = \frac{-b}{a^2 + b^2}$ satisfy the equation, from which the stated result follows straightforwardly. □

Example 2.5 Let $z = 3 + 4i$. Then $\frac{1}{z} = \frac{1}{25}(3 - 4i)$. The complex number $z = \frac{2 - i}{3 + 4i}$ can be written in the standard form
$$z = \tfrac{1}{25}(2 - i)(3 - 4i) = \tfrac{1}{25}(2 - 11i) = \tfrac{2}{25} - \tfrac{11}{25}i.$$ □

Theorem 2.13 lists some useful properties of the complex conjugate. The proofs are elementary and left as an exercise to the reader.

Theorem 2.13 For any complex numbers $z_1$ and $z_2$:
1. $\overline{z_1 + z_2} = \bar z_1 + \bar z_2$;
2. $\overline{z_1 z_2} = \bar z_1\bar z_2$ (and consequently …);
3. … □

Just as vectors in $\mathbb{R}^n$ and matrices in $\mathbb{R}^{n\times m}$ are defined, one can define vectors in $\mathbb{C}^n$ and matrices in $\mathbb{C}^{n\times m}$ as vectors and matrices whose entries are now complex numbers. The operations of addition and multiplication are defined in the same way. Furthermore, for a matrix $Z$ with elements $z_{ij}$ from $\mathbb{C}$, the complex conjugate $\bar Z$ of $Z$ is defined as the matrix obtained from $Z$ by changing all its entries to their complex conjugates. In other words, the entries of $\bar Z$ are $\bar z_{ij}$.

Example 2.6 Let $Z_1 = \begin{bmatrix}1 + i & i\\ 2 - 3i & 2\end{bmatrix}$ and $Z_2 = \begin{bmatrix}4 + 2i & 1 - i\\ -2 - i & 1\end{bmatrix}$. Then
$$Z_1 Z_2 = \begin{bmatrix}(1+i)(4+2i) + i(-2-i) & (1+i)(1-i) + i\\ (2-3i)(4+2i) + 2(-2-i) & (2-3i)(1-i) + 2\end{bmatrix} = \begin{bmatrix}3 + 4i & 2 + i\\ 10 - 10i & 1 - 5i\end{bmatrix}.$$ □

Notice that any vector $z \in \mathbb{C}^n$ can be written as $z = x + yi$, where $x, y \in \mathbb{R}^n$. Similarly, any matrix $Z \in \mathbb{C}^{n\times m}$ can be written as $Z = A + Bi$, where $A, B \in \mathbb{R}^{n\times m}$.

The eigenvalue-eigenvector theory already developed for matrices in $\mathbb{R}^{n\times n}$ applies equally well to matrices with complex entries. That is, a complex scalar $\lambda$ is called a complex eigenvalue of a complex matrix $Z \in \mathbb{C}^{n\times n}$ if there is a nonzero complex vector $z$ such that $Zz = \lambda z$. Before we elaborate this point, we first extend the notion of determinant to complex matrices. The definition coincides with the definition of the determinant of a real matrix. That is, the determinant of $Z = [z_{ij}]$ is
$$\det Z = z_{11}\det Z_{11} - z_{12}\det Z_{12} + \cdots + (-1)^{1+n}z_{1n}\det Z_{1n},$$
where $Z_{ij}$ denotes the submatrix of $Z$ formed by deleting row $i$ and column $j$, and the determinant of a complex number $z$ is $z$. One can now copy the theory used in the real case to derive the results of Theorem 2.5, and show that these properties also hold in the complex case. In particular, one can also show that in the complex case a square matrix $Z$ is nonsingular if and only if its determinant differs from zero. This result will be used to analyze the eigenvalue problem in more detail. To keep the exposition self-contained, this result is now shown. Its proof uses the basic facts that, like in the real case, adding a multiple of one row to another row of matrix $Z$, or adding a multiple of one column to another column of $Z$, does not change the determinant of matrix $Z$.
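Theorem 2.12 gives an explicit formula, $\frac{1}{a + bi} = \frac{a}{a^2 + b^2} - \frac{b}{a^2 + b^2}i$, which can be evaluated exactly with rational arithmetic. The sketch below represents a complex number as a hypothetical pair of `Fraction`s in order to reproduce the numbers of the division example exactly. It then uses Python's built-in `complex` type to recompute the matrix product $Z_1 Z_2$ from the example above.

```python
from fractions import Fraction


def reciprocal(a, b):
    """1/(a + bi) = conj(z)/|z|^2 = a/(a^2+b^2) - b/(a^2+b^2) i, as an exact pair."""
    n = Fraction(a * a + b * b)
    if n == 0:
        raise ZeroDivisionError("z = 0 has no inverse")
    return (a / n, -b / n)


def mul(p, q):
    """(x1 + y1 i)(x2 + y2 i) on pairs (x, y)."""
    (x1, y1), (x2, y2) = p, q
    return (x1 * x2 - y1 * y2, x1 * y2 + x2 * y1)


print(reciprocal(3, 4))                # (Fraction(3, 25), Fraction(-4, 25))
print(mul((2, -1), reciprocal(3, 4)))  # (Fraction(2, 25), Fraction(-11, 25))

# The product Z1 Z2 from the example above, with Python's complex type.
Z1 = [[1 + 1j, 1j], [2 - 3j, 2]]
Z2 = [[4 + 2j, 1 - 1j], [-2 - 1j, 1]]
Z1Z2 = [[sum(Z1[i][k] * Z2[k][j] for k in range(2)) for j in range(2)] for i in range(2)]
print(Z1Z2)  # [[(3+4j), (2+1j)], [(10-10j), (1-5j)]]
```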

Taking these facts for granted, together with the fact that $\det\left(\begin{bmatrix}A & 0\\ C & D\end{bmatrix}\right) = \det(A)\det(D)$ also holds in the complex case, we obtain the next fundamental property on the existence of solutions for the set of linear equations $Zz = 0$.

Theorem Let $Z \in \mathbb{C}^{n\times n}$. Then the set of linear equations
$$Zz = 0$$
has a complex solution $z \neq 0$ if and only if $\det Z = 0$.

Proof: Let $Z = A + iB$ and $z = x + iy$, with $A, B \in \mathbb{R}^{n\times n}$ and $x, y \in \mathbb{R}^n$. Then
$$Zz = Ax - By + i(Bx + Ay).$$
Therefore $Zz = 0$ has a solution $z \neq 0$ if and only if the next set of equations has a solution $(x, y) \neq (0, 0)$, with $x, y \in \mathbb{R}^n$:
$$Ax - By = 0 \quad\text{and}\quad Bx + Ay = 0.$$
Since this is a set of equations with only real entries, Theorems 2.4 and 2.5 can be used to conclude that this set of equations has a solution different from zero if and only if
$$\det\left(\begin{bmatrix}A & -B\\ B & A\end{bmatrix}\right) = 0.$$
Adding multiples of one row to another row, and adding multiples of one column to another column, does not change the determinant of a matrix. Therefore
$$\det\left(\begin{bmatrix}A & -B\\ B & A\end{bmatrix}\right) = \det\left(\begin{bmatrix}I & 0\\ iI & I\end{bmatrix}\begin{bmatrix}A & -B\\ B & A\end{bmatrix}\begin{bmatrix}I & 0\\ -iI & I\end{bmatrix}\right).$$
(Note that the above-mentioned addition operations can indeed be represented in this way.) Spelling out the right-hand side of this equation yields
$$\det\left(\begin{bmatrix}A & -B\\ B & A\end{bmatrix}\right) = \det\left(\begin{bmatrix}A + iB & -B\\ 0 & A - iB\end{bmatrix}\right) = \det(A + iB)\det(A - iB).$$
Therefore, $Zz = 0$ has a solution different from zero if and only if $\det(A + iB)\det(A - iB) = 0$. Since $w := \det(A + iB)$ is a complex number and $\det(A - iB) = \bar w$ (see Exercises), it follows that $\det(A + iB)\det(A - iB) = 0$ if and only if $w\bar w = |w|^2 = 0$, i.e. $w = 0$, which proves the claim. □

Example 2.7 (see also Example 2.3 part 4) Let $A = [\ldots]$. Its characteristic polynomial is $\lambda^2 - 4\lambda + 5$. The roots of this polynomial are $\lambda_1 = 2 + i$ and $\lambda_2 = 2 - i$. The eigenvectors corresponding to $\lambda_1$ are the nonzero elements of $E_{\lambda_1} = \ker(A - (2 + i)I) = \{\alpha[\ldots],\ \alpha \in \mathbb{C}\}$. The eigenvectors corresponding to $\lambda_2$ are the nonzero elements of $E_{\lambda_2} = \ker(A - (2 - i)I) = \{\alpha[\ldots],\ \alpha \in \mathbb{C}\}$. □

From Example 2.7 we see that, with $\lambda_1 = 2 + i$ being an eigenvalue of $A$, its conjugate $\bar\lambda_1 = 2 - i$ is also an eigenvalue of $A$. This property is, of course, to be expected, given that the characteristic polynomial of a matrix with real entries can be factorized as a product of linear and quadratic terms, and given equation (2.3.2).

Theorem 2.16 Let $A \in \mathbb{R}^{n\times n}$. If $\lambda \in \mathbb{C}$ is an eigenvalue of $A$ and $z$ a corresponding eigenvector, then $\bar\lambda$ is also an eigenvalue of $A$ and $\bar z$ a corresponding eigenvector.

Proof: By definition, $z$ and $\lambda$ satisfy $Az = \lambda z$. Taking the conjugate on both sides of this equation gives
$$\overline{Az} = \overline{\lambda z}.$$
Using Theorem 2.13 and the fact that $A$ is a real matrix, so that its conjugate is matrix $A$ again, yields $A\bar z = \bar\lambda\bar z$. So, by definition, $\bar z$ is an eigenvector corresponding to the eigenvalue $\bar\lambda$. □
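The two results above can be checked numerically on small hypothetical matrices. Part 1 confirms the identity $\det\left(\begin{bmatrix}A & -B\\ B & A\end{bmatrix}\right) = \det(A + iB)\det(A - iB) = |\det(A + iB)|^2$ used in the proof that $Zz = 0$ has a nonzero solution iff $\det Z = 0$. Part 2 takes a real matrix with characteristic polynomial $\lambda^2 - 4\lambda + 5$ and a hand-computed eigenvector of $2 + i$. It checks Theorem 2.16 and the real relation $A[x\ y] = [x\ y]\begin{bmatrix}a & b\\ -b & a\end{bmatrix}$ for $x = \mathrm{Re}\,z$, $y = \mathrm{Im}\,z$, which is stated as Theorem 2.17. All matrices and helper names are illustrative assumptions.

```python
def det(a):
    """Cofactor expansion; works for real and complex entries alike."""
    if len(a) == 1:
        return a[0][0]
    return sum((-1) ** j * a[0][j] * det([r[:j] + r[j + 1:] for r in a[1:]])
               for j in range(len(a)))


def matmul(x, y):
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


# Part 1: det([[A, -B], [B, A]]) = det(A + iB) det(A - iB) = |det(A + iB)|^2.
A = [[1, 2], [0, 1]]  # hypothetical real matrices
B = [[0, 1], [1, 0]]
Z = [[A[i][j] + 1j * B[i][j] for j in range(2)] for i in range(2)]
big = [A[0] + [-v for v in B[0]], A[1] + [-v for v in B[1]], B[0] + A[0], B[1] + A[1]]
w = det(Z)
print(w, det(big), (w * w.conjugate()).real)  # (2-2j) 8 8.0

# Part 2: hypothetical real M with eigenvalues 2 +/- i.
M = [[1, -2], [1, 3]]
lam = 2 + 1j
z = [2, -1 - 1j]  # an eigenvector for lam, found by hand
assert [sum(M[i][k] * z[k] for k in range(2)) for i in range(2)] == [lam * v for v in z]
# Theorem 2.16: conj(z) is an eigenvector for conj(lam).
zc = [complex(v).conjugate() for v in z]
assert [sum(M[i][k] * zc[k] for k in range(2)) for i in range(2)] == [lam.conjugate() * v for v in zc]
# With x = Re z, y = Im z and S = [x y]:  M S = S [[a, b], [-b, a]].
a, b = lam.real, lam.imag
S = [list(col) for col in zip([complex(v).real for v in z], [complex(v).imag for v in z])]
print(matmul(M, S) == matmul(S, [[a, b], [-b, a]]))  # True
```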

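Theorem 2.17 below shows that whenever $A \in \mathbb{R}^{n\times n}$ has a complex eigenvalue, $A$ has a so-called two-dimensional invariant subspace (see Section 2.5 for a formal introduction to this notion). This property will be used in the next section.

Theorem 2.17 Let $A \in \mathbb{R}^{n\times n}$. If $\lambda = a + bi$ ($a, b \in \mathbb{R}$, $b \neq 0$) is a complex eigenvalue of $A$ and $z = x + iy$, with $x, y \in \mathbb{R}^n$, a corresponding eigenvector, then $A$ has a two-dimensional invariant subspace $\mathrm{Im}\,S$, where $S := [x\ \ y]$. In particular:
$$AS = S\begin{bmatrix}a & b\\ -b & a\end{bmatrix}.$$
Proof: Theorem 2.16 shows that both $Az = \lambda z$ and $A\bar z = \bar\lambda\bar z$. Spelling out both equations gives $Ax + iAy = ax - by + i(bx + ay)$ and $Ax - iAy = ax - by - i(bx + ay)$. Adding and subtracting both equations yields, respectively, the next two equations:
$$Ax = ax - by, \qquad (2.3.4)$$
$$Ay = bx + ay. \qquad (2.3.5)$$
Hence $A[x\ \ y] = [x\ \ y]\begin{bmatrix}a & b\\ -b & a\end{bmatrix}$, so $\mathrm{Im}\,S$ is invariant. What is left to be shown is that $\{x, y\}$ are linearly independent. First note that both $x$ and $y$ differ from zero. If $y = 0$, then (2.3.5) gives $bx = 0$, so $x = 0$ since $b \neq 0$, contradicting $z \neq 0$. Similarly, by (2.3.4), $x = 0$ implies $y = 0$. Next assume that $y = \mu x$ for some real $\mu \neq 0$. Then from equations (2.3.4) and (2.3.5) we get
$$Ax = (a - b\mu)x, \qquad (2.3.6)$$
$$Ax = \tfrac{1}{\mu}(b + a\mu)x. \qquad (2.3.7)$$
So, according to equation (2.3.6), $x$ is a real eigenvector corresponding to the real eigenvalue $a - b\mu$, whereas according to equation (2.3.7), $x$ is a real eigenvector corresponding to the real eigenvalue $\frac{1}{\mu}(b + a\mu)$. However, according to Theorem 2.9, eigenvectors corresponding to different eigenvalues are always linearly independent. So, the eigenvalues $a - b\mu$ and $\frac{1}{\mu}(b + a\mu)$ must coincide. But this implies $\mu^2 = -1$, which is not possible. □

Example 2.8
1. (see also Example 2.7) Let $A = [\ldots]$. The characteristic polynomial of $A$ is $(\lambda^2 - 4\lambda + 5)(\lambda - 1)$. So $A$ has one real root, $\lambda_1 = 1$, and two complex roots, $\lambda_2 = 2 + i$ and $\lambda_3 = 2 - i$. The eigenvectors corresponding to $\lambda_2$ are the nonzero elements of $E_{\lambda_2} = \ker(A - (2 + i)I) = \{\alpha[\ldots],\ \alpha \in \mathbb{C}\}$. The real part of this eigenvector is $x := [\ldots]$ and its imaginary part is $y := [\ldots]$. With $2 + i =: a + bi$ we obtain (see Theorem 2.17) that $A$ has a two-dimensional invariant subspace $\mathrm{Im}\,S$, $S := [x\ \ y]$, with $AS = S\begin{bmatrix}2 & 1\\ -1 & 2\end{bmatrix}$.
2. Let $A = [\ldots]$. The characteristic polynomial of $A$ has one real root $\lambda_1 = \ldots$ and two complex roots, $\lambda_2 = 2 + 3i$ and $\lambda_3 = 2 - 3i$. Verification shows that, with $S := [x\ \ y]$ built from the real and imaginary parts of an eigenvector corresponding to $\lambda_2$, $A$ has a two-dimensional invariant subspace $\mathrm{Im}\,S$. Indeed, with $a = 2$ and $b = 3$, $AS = S\begin{bmatrix}a & b\\ -b & a\end{bmatrix}$. □

A theorem that is often used in linear algebra is the Cayley-Hamilton³ theorem. In the next section this theorem will be used to derive the Jordan canonical form. To introduce and prove this theorem, let $p(\lambda)$ be the characteristic polynomial of $A$, that is
$$p(\lambda) = \det(A - \lambda I) = p_0 + p_1\lambda + \cdots + p_n\lambda^n. \qquad (2.4.1)$$
Let $p(A)$ be defined as the matrix formed by replacing each power of $\lambda$ in $p(\lambda)$ by the corresponding power of $A$ (with $\lambda^0$ replaced by $I$), i.e.
$$p(A) := p_0 I + p_1 A + \cdots + p_n A^n. \qquad (2.4.2)$$

Theorem 2.18 (Cayley-Hamilton) Let $A \in \mathbb{R}^{n\times n}$ and let $p(\lambda)$ be the characteristic polynomial of $A$. Then
$$p(A) = 0.$$
Proof: By (2.1.3), for every $\lambda$ that is not an eigenvalue of $A$,
$$(A - \lambda I)\,\mathrm{adj}(A - \lambda I) = p(\lambda)I. \qquad (2.4.3)$$
Furthermore, for every $k \ge 1$,
$$A^k - \lambda^k I = (A - \lambda I)(A^{k-1} + \lambda A^{k-2} + \cdots + \lambda^{k-1}I). \qquad (2.4.4)$$
Comparing the right-hand sides of equations (2.4.1) and (2.4.2) and using (2.4.4), it follows that
$$p(A) - p(\lambda)I = \sum_{k=1}^{n} p_k(A^k - \lambda^k I) = (A - \lambda I)Q(\lambda), \qquad (2.4.5)$$
where we used the shorthand notation
$$Q(\lambda) := \sum_{k=1}^{n} p_k(A^{k-1} + \lambda A^{k-2} + \cdots + \lambda^{k-1}I).$$
Using equation (2.4.3), we rewrite equation (2.4.5) as
$$(A - \lambda I)\{\mathrm{adj}(A - \lambda I) + Q(\lambda)\} = p(A). \qquad (2.4.6)$$
Since $(A - \lambda I)^{-1} = \frac{\mathrm{adj}(A - \lambda I)}{p(\lambda)}$ whenever $\lambda$ is not an eigenvalue of $A$, (2.4.6) can be rewritten as
$$\mathrm{adj}(A - \lambda I) + Q(\lambda) = \frac{\mathrm{adj}(A - \lambda I)}{p(\lambda)}\,p(A). \qquad (2.4.7)$$
Using the definition of the adjoint matrix, it is easily verified that every entry of the adjoint of $A - \lambda I$ is a polynomial whose degree does not exceed $n - 1$ (see also Example 2.2). Therefore, the left-hand side of equation (2.4.7) is a polynomial matrix function. Furthermore, since the degree of the characteristic polynomial $p(\lambda)$ of $A$ is $n$, every entry of $\frac{\mathrm{adj}(A - \lambda I)}{p(\lambda)}$ is a strictly proper rational function of $\lambda$, so every entry of the right-hand side of (2.4.7) tends to zero as $|\lambda| \to \infty$. A polynomial with this property is identically zero. Therefore, the left-hand side and right-hand side of equation (2.4.7) can coincide only if both sides are zero. That is,
$$\frac{\mathrm{adj}(A - \lambda I)}{p(\lambda)}\,p(A) = 0. \qquad (2.4.8)$$
In particular, this equality holds for $\lambda = \hat\lambda$, where $\hat\lambda$ is an arbitrary number that is not an eigenvalue of matrix $A$. But then $\mathrm{adj}(A - \hat\lambda I) = p(\hat\lambda)(A - \hat\lambda I)^{-1}$ is invertible. So, from equation (2.4.8) it follows that $p(A) = 0$. This proves the Cayley-Hamilton theorem. □

Example 2.9 Reconsider the matrices $A_i$, $i = 1, \ldots, 4$, from Example 2.3. Straightforward calculations show that $(A_1 - I)(A_1 - 2I) = 0$, $(A_2 + 2I)^2 = 0$, $(A_3 - 3I)^2 = 0$ and $A_4^2 - 4A_4 + 5I = 0$, respectively. □

Next, assume that the characteristic polynomial $p(\lambda)$ of $A$ is factorized as in Theorem 2.10. That is,
$$p(\lambda) = c\,p_1(\lambda)\cdots p_r(\lambda),$$
with $p_i(\lambda) = (\lambda - \lambda_i)^{n_i}$, $i = 1, \ldots, k$, and $p_i(\lambda) = (\lambda^2 + b_i\lambda + c_i)^{n_i}$, $i = k+1, \ldots, r$. The next lemma shows that the null spaces of the matrices $p_i(A)$ have no points in common except for the zero vector. Its proof is provided in the Appendix to this chapter.

Lemma 2.19 Let $p_i(\lambda)$ be as described above. Then
$$\ker p_i(A) \cap \ker p_j(A) = \{0\}, \quad \text{if } i \neq j.$$ □

The next lemma is rather elementary but, nevertheless, gives a useful result.

Lemma 2.20 Let $A, B \in \mathbb{R}^{n\times n}$. If $AB = 0$, then
$$\dim(\ker A) + \dim(\ker B) \ge n.$$
Proof: Note that $\mathrm{Im}\,B \subset \ker A$. Consequently, $\dim(\ker A) \ge \dim(\mathrm{Im}\,B)$. Therefore, using Theorem 2.3,
$$\dim(\ker B) + \dim(\ker A) \ge \dim(\ker B) + \dim(\mathrm{Im}\,B) = n.$$ □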
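The Cayley-Hamilton theorem can be checked on the matrix whose shifted version $A - \lambda I$ appears in Example 2.2. By that example, its characteristic polynomial is $-\lambda^3 + 7\lambda^2 - 17\lambda + 14$. The sketch below first re-checks these coefficients against $\det(A - \lambda I)$ at a few points. It then evaluates $p(A)$ with Horner's scheme, replacing $\lambda^0$ by $I$ as in the definition of $p(A)$. The function `poly_of_matrix` is a hypothetical helper, not notation from the text.

```python
def matmul(x, y):
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def det(a):
    """Determinant by cofactor expansion along the first row."""
    if len(a) == 1:
        return a[0][0]
    return sum((-1) ** j * a[0][j] * det([r[:j] + r[j + 1:] for r in a[1:]])
               for j in range(len(a)))


def poly_of_matrix(coeffs, a):
    """Evaluate p(A) for p given by coefficients from highest to lowest degree,
    using Horner's scheme with lambda^0 replaced by the identity matrix."""
    n = len(a)
    eye = [[int(i == j) for j in range(n)] for i in range(n)]
    result = [[0] * n for _ in range(n)]
    for c in coeffs:
        result = matmul(result, a)
        result = [[result[i][j] + c * eye[i][j] for j in range(n)] for i in range(n)]
    return result


# A - lambda I is the matrix of Example 2.2, so
# det(A - lambda I) = -lambda^3 + 7 lambda^2 - 17 lambda + 14.
A = [[2, 0, 1], [1, 2, -1], [-1, 0, 3]]
coeffs = [-1, 7, -17, 14]
for lam in range(4):  # sanity check of the coefficients at a few points
    shifted = [[A[i][j] - lam * (i == j) for j in range(3)] for i in range(3)]
    assert det(shifted) == sum(c * lam ** (3 - k) for k, c in enumerate(coeffs))
print(poly_of_matrix(coeffs, A))  # [[0, 0, 0], [0, 0, 0], [0, 0, 0]]
```

Integer entries keep the computation exact, so the zero matrix is obtained exactly rather than up to rounding.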

We are now able to prove the next theorem which is essential to constmct the Jordan

Canonical form of a square matrix A in the next section.

Let the characteristic polynomial of matrix A be as described in equation

that the set {bjl , ... , bjmJ forms a basis for kerpj(A), with pj(A) as before, j

Then,

the set of vectors {b u , ... , b Irlll , ... , brl , ... , bnn,.} forms a basis for

3Cayley was an English lawyer/mathematician who lived from 1821-1895. His lecturer was the

famous Irish mathematician Hamilton who lived from 1805-1865 (see also Chapter 1).

Assume

=

1 ... , r.

[f:R1l;

2. lni=ni, i= l, ... ,k, and lni=2ni, i=k+l, ... ,r. That is, the algebraic multiplicities of the real eigenvalues coincide with the dimension of the cOlTesponding

34

and the

twice their

'-"PlAJlU''--

E

that any

result in linear

UHUVU,HVH

~IlXIl

a

HH.UUl-/uvA.L'''''.

First construct for the

of each

a basis {bjl , ... , bjmj }. From

Lemma 2.19 it is clear that all the vectors in the collection {b lI ,···, bIml"'" b rl , · · · ,

b./Ill,. } are

Since all vectors are an element of ~Il this

that

the number of vectors that

to this set is smaller than or

to the dimension

of ~Il, i.e. n.

On the other hand it follows "llu~f"ro;Lht·.j..L·~,·nF, ...rlh, by induction from Lelmna 2.20 that,

= 0, the sum of the

since

theorem PI

combining both

dimensions of the nullspaces

should be at least n.

we conclude that this sum should be exactly 11.

Since the dimension of the nullspace of PI (A) is m I, the dimension of the nullspace of

is at most mi. So A has at most In I 1l1(1el:lendelllt

with the

AI. A reasoning similar to Theorem 2.11 then shows that the

characteristic polynomial p(A) of A can be factorized as (A - Adlllh(A), where h(A)

Il1h

of degree n-ml' Since by

p(A) = (A-Ad 2(A),

17 2(A) does not contain the factor A AI, it follows that

mi :S ni, i = I, ... , k. In a similar way one can show that mi:S

1=

k + 1, ... ,r. Since according to Theorem 2.10

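To grasp the idea of the Jordan canonical form, first consider the case that $A$ has $n$ different eigenvalues $\lambda_i$, $i = 1, \ldots, n$. Let $x_i$, $i = 1, \ldots, n$, be the corresponding eigenvectors. From Theorem 2.9 it then follows that $\{x_1, x_2, \ldots, x_n\}$ are linearly independent and in fact constitute a basis for $\mathbb{R}^n$ (for $\mathbb{C}^n$ if some eigenvalues are complex). Now let matrix $S := [x_1\ x_2\ \cdots\ x_n]$. Since this matrix has full rank, its inverse exists. Then $AS = [\lambda_1x_1\ \lambda_2x_2\ \cdots\ \lambda_nx_n] = S\Lambda$, where $\Lambda = \mathrm{diag}\{\lambda_1, \ldots, \lambda_n\}$. So if matrix $A$ has $n$ different eigenvalues, it can be factorized as $A = S\Lambda S^{-1}$. Notice that this same procedure can be used to factorize matrix $A$ as long as matrix $A$ has $n$ linearly independent eigenvectors. The fact that the eigenvalues all differed is not crucial for this construction.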
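The factorization $A = S\Lambda S^{-1}$ can be checked numerically. A minimal sketch, assuming NumPy is available: `numpy.linalg.eig` returns the eigenvalues and, as the columns of `S`, corresponding eigenvectors. The matrix and the helper name `diagonalize` are illustrative choices, not taken from the text.

```python
import numpy as np

def diagonalize(A):
    """Return (S, Lam) with A = S @ Lam @ inv(S); requires n independent eigenvectors."""
    eigvals, S = np.linalg.eig(A)            # columns of S are eigenvectors x_i
    if np.linalg.matrix_rank(S) < A.shape[0]:
        raise ValueError("A does not have n linearly independent eigenvectors")
    return S, np.diag(eigvals)

# Illustrative upper triangular matrix with eigenvalues 1, 2, 3.
A = np.array([[1.0, 2.0, 0.0],
              [0.0, 2.0, 1.0],
              [0.0, 0.0, 3.0]])
S, Lam = diagonalize(A)
print(np.allclose(A @ S, S @ Lam))                    # A S = S Lambda
print(np.allclose(A, S @ Lam @ np.linalg.inv(S)))     # A = S Lambda S^{-1}
```

For a defective matrix such as a Jordan block, the eigenvector matrix returned by `eig` is typically (numerically) rank deficient, which is precisely the situation the Jordan form is designed to handle.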

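Next consider the case that $A$ has an eigenvector $x_1$ corresponding to the eigenvalue $\lambda_1$, and that the generalized eigenvectors $x_2$ and $x_3$ are obtained through the equations
$$(A - \lambda_1 I)x_1 = 0, \qquad (A - \lambda_1 I)x_2 = x_1, \qquad (A - \lambda_1 I)x_3 = x_2.$$
From the second equation we see that $Ax_2 = x_1 + \lambda_1x_2$, and from the third equation that $Ax_3 = x_2 + \lambda_1x_3$. With $S := [x_1\ x_2\ x_3]$ we therefore have $AS = SJ$, where
$$J = \begin{bmatrix} \lambda_1 & 1 & 0 \\ 0 & \lambda_1 & 1 \\ 0 & 0 & \lambda_1 \end{bmatrix}.$$
Since $\{x_1, x_2, x_3\}$ are linearly independent, we conclude that $S$ is invertible and therefore $A$ can be factorized as $A = SJS^{-1}$.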
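The chain relations can be verified numerically. The sketch below (NumPy assumed) builds a defective matrix $A = S_{\mathrm{true}}JS_{\mathrm{true}}^{-1}$ with a single $3 \times 3$ Jordan block for $\lambda_1 = 2$; the basis `S_true` is a hypothetical choice. It then regenerates a chain from the eigenvector $x_1$ by solving $(A - \lambda_1 I)x_{k+1} = x_k$ with least squares and checks $AS = SJ$ for the recovered $S = [x_1\ x_2\ x_3]$.

```python
import numpy as np

lam = 2.0
J = np.array([[lam, 1.0, 0.0],
              [0.0, lam, 1.0],
              [0.0, 0.0, lam]])               # 3x3 Jordan block
S_true = np.array([[1.0, 1.0, 0.0],
                   [0.0, 1.0, 1.0],
                   [1.0, 0.0, 1.0]])          # hypothetical invertible basis
A = S_true @ J @ np.linalg.inv(S_true)

def jordan_chain(A, lam, x1, length):
    """Generate x_1, ..., x_length from (A - lam I) x_{k+1} = x_k."""
    N = A - lam * np.eye(A.shape[0])
    chain = [x1]
    for _ in range(length - 1):
        # N is singular; least squares (small singular values cut off) gives a particular
        # solution, and any particular solution is a valid next chain vector
        x_next, *_ = np.linalg.lstsq(N, chain[-1], rcond=1e-10)
        chain.append(x_next)
    return np.column_stack(chain)

S = jordan_chain(A, lam, S_true[:, 0], 3)
print(np.allclose((A - lam * np.eye(3)) @ S[:, 0], 0))   # x_1 is an eigenvector
print(np.allclose(A @ S, S @ J))                          # A S = S J
print(np.linalg.matrix_rank(S))                           # full rank, so S is invertible
```

The recovered chain differs from the columns of `S_true` (multiples of earlier chain vectors may be added), which illustrates that a Jordan chain is not unique while the block $J$ is.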

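For the general case, assume that matrix $A$ has an eigenvector $x_1$ corresponding with the eigenvalue $\lambda_1$ and that the generalized eigenvectors $x_2, \ldots, x_k$ are obtained through the following equations:
$$(A - \lambda_1 I)x_1 = 0, \quad (A - \lambda_1 I)x_2 = x_1, \quad \ldots, \quad (A - \lambda_1 I)x_k = x_{k-1}.$$
Then
$$A[x_1\ x_2\ \cdots\ x_k] = [\lambda_1x_1\ \ x_1 + \lambda_1x_2\ \ \cdots\ \ x_{k-1} + \lambda_1x_k] = [x_1\ x_2\ \cdots\ x_k]J,$$
with
$$J = \begin{bmatrix} \lambda_1 & 1 & & \\ & \lambda_1 & \ddots & \\ & & \ddots & 1 \\ & & & \lambda_1 \end{bmatrix}.$$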

Let $\lambda$ be an eigenvalue of $A \in \mathbb{R}^{n\times n}$, and $x$ be a corresponding eigenvector. Then $Ax = \lambda x$ and $A(\alpha x) = \lambda(\alpha x)$ for any $\alpha \in \mathbb{R}$. Clearly, the eigenvector $x$ defines a one-dimensional subspace that is invariant with respect to pre-multiplication by $A$, since $A^kx = \lambda^kx$, $\forall k$. In general, a subspace $S \subset \mathbb{R}^n$ is called $A$-invariant if $Ax \in S$ for every $x \in S$. In other words, $S$ is $A$-invariant means that the image of $S$ under $A$ is contained in $S$, i.e. $AS \subset S$. Examples of $A$-invariant subspaces are the trivial subspace $\{0\}$, $\mathbb{R}^n$, Ker $A$ and Im $A$.

$A$-invariant subspaces play an important role in calculating solutions of the so-called algebraic Riccati equations. These solutions constitute the basis for determining various equilibria, as we will see later on. $A$-invariant subspaces are intimately related to the generalized eigenspaces of matrix $A$. A complete picture of all $A$-invariant subspaces is in fact provided by considering the so-called Jordan canonical form of matrix $A$.

Now let $S$ be an $A$-invariant subspace that contains $x_2$. Then $x_1$ should also be in $S$. This is because, from the second equality above, $Ax_2 = \lambda_1x_2 + x_1$, and, as both $Ax_2$ and $\lambda_1x_2$ belong to $S$, $x_1$ has to belong to this subspace too. Similarly, it follows that if $x_3$ belongs to some $A$-invariant subspace $S$, then $x_2$ and, consequently, $x_1$ also have to belong to this subspace $S$. So, in general, we observe that if some $x_i$ belongs to an $A$-invariant subspace $S$, all its 'predecessors' have to be in it too.
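A practical numerical test for $A$-invariance: if the columns of $V$ span $S$, then $S$ is $A$-invariant exactly when appending the columns of $AV$ does not increase the rank. The sketch below (NumPy assumed; the helper name `is_invariant` and the tolerance are our own choices) applies this to a Jordan block, whose unit vectors $e_1, e_2, e_3$ form a Jordan chain. It confirms that $\mathrm{Span}\{x_1\}$ and $\mathrm{Span}\{x_1, x_2\}$ are invariant while $\mathrm{Span}\{x_2\}$, which lacks its predecessor $x_1$, is not.

```python
import numpy as np

def is_invariant(A, V, tol=1e-10):
    """True if Im V is A-invariant, i.e. rank([V, A V]) == rank(V)."""
    r = np.linalg.matrix_rank(V, tol=tol)
    return np.linalg.matrix_rank(np.hstack([V, A @ V]), tol=tol) == r

lam = 3.0
A = np.array([[lam, 1.0, 0.0],
              [0.0, lam, 1.0],
              [0.0, 0.0, lam]])     # Jordan block: e_1, e_2, e_3 form a Jordan chain
x1, x2, x3 = np.eye(3)               # rows of the identity are e_1, e_2, e_3

print(is_invariant(A, x1[:, None]))                  # True
print(is_invariant(A, np.column_stack([x1, x2])))    # True
print(is_invariant(A, x2[:, None]))                  # False: A x2 = x1 + lam x2
```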


= O. That

Furthermore note that

1)

An $A$-invariant subspace $S$ is called a stable invariant subspace if all eigenvalues of $A$ constrained to $S$ have negative real parts.

Consider a set of vectors $\{x_1, \ldots, x_p\}$ which are obtained as follows:
$$(A - \lambda I)x_1 = 0; \qquad (A - \lambda I)x_{i+1} = x_i, \quad i = 1, \ldots, p-1. \qquad (2.5.2)$$

Consider the

{b l , ••• ,bd constitute a basis for the COlTe~;oo'nC11l12: elg~ensp(lce

the Jordan chain generated

the basis vector bi.

basis for

with S :=

), ... ,

and

constitutes a

holds.

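Then, with $S := [x_1\ \cdots\ x_p]$, $AS = S\Lambda$, where $\Lambda \in \mathbb{R}^{p\times p}$ is the matrix
$$\Lambda = \begin{bmatrix} \lambda & 1 & & \\ & \lambda & \ddots & \\ & & \ddots & 1 \\ & & & \lambda \end{bmatrix}, \qquad (2.5.3)$$
and $S$ has full column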

From Lemma 2.22 it is obvious that for each $b_i$, $J(b_i)$ is a set of linearly independent vectors. To show that, for example, the set of vectors $\{J(b_1), J(b_2)\}$ is independent too, assume that some vector $y_k$ in the Jordan chain $J(b_2)$ is in the span of $J(b_1)$. That is, if $J(b_1) = \{b_1, x_2, \ldots, x_r\}$ and $J(b_2) = \{b_2, y_2, \ldots, y_s\}$, then there exist $\mu_i$, not all zero, such that

rank.

From the reasoning above, the first part of the lemma is obvious. All that is left to be shown is that the set of vectors $\{x_1, \ldots, x_p\}$ is linearly independent. To prove this we consider the case $p = 3$; the general case can be treated similarly. So, assume that $\mu_1x_1 + \mu_2x_2 + \mu_3x_3 = 0$.

(2.5.4 )

From the definition of the Jordan chain we conclude that if $k > r$, all vectors on the right-hand side of this equation become zero. So $b_2 = 0$, which contradicts our assumption that $b_2$ is a basis vector. On the other hand, if $k \le r$, the equation reduces to

(2.5.5)

From this we see,

again, that


Then also

both sides of this


on the left

0=

consequently,

0=

(2.5.6)

Since $x_1 \neq 0$, it is clear from equations (2.5.4) and (2.5.5) that necessarily $\mu_i = 0$, $i = 1, 2, 3$. That is, $\{x_1, x_2, x_3\}$ are linearly independent.

0

Since in $\mathbb{R}^n$ one can find at most $n$ linearly independent vectors, it follows from Lemma 2.22 that the set of vectors defined in equation (2.5.2) has at most $n$ elements. A sequence

+ ... +

= Pk+l (A

= Pk+lbI + ... + PrXr-k·

But according to Lemma 2.22 the vectors in the Jordan chain $J(b_1)$ are linearly independent, so $\mu_{k+1} = \cdots = \mu_r = 0$; but this implies, according to equation (2.5.7), that $b_2 = \mu_kb_1$, which violates our assumption that $\{b_1, b_2\}$ are linearly independent. This shows that in general $\{J(b_1), \ldots, J(b_k)\}$ is a set of linearly independent vectors.

Furthermore, we have from equation (2.5.1) and its preceding discussion that all vectors in $J(b_i)$ belong to $E_1$. So, what is left to be shown is that the number of these independent

the dimension of

that purpose we show that every vector

chosen Jordan chain for some vector Xl E

Let X E

=0

n 1 is the

of

or,

vectors


Below we

=0.

to denote the

matrices

for square, but


= 0, where

= Xl·

= Xl ..

introduce X2 :=

see that {Xl, .. ' ,XIl1 -1,X}


0

For any square matrix $A \in \mathbb{R}^{n\times n}$ there exists a nonsingular matrix $T$ such that

this process we

where I =

extended

i=1, ... ,nl·

From this it is

to a Jordan chain for some Xl E

which completes the

... ,

and $J_i$ either has one of the following real (R) or complex (C) forms

a matrix A has the

real

verified

o

i)


[~

A=

=Xi-l,

....

matrix

-IX. Then,

Let Xl :=

X

use the notation

ii)

form

A=

iii)

Then it is easy to

iv)

that

= Span{x1};

S3 = Span{x3};

= Span{x4};

Sl

Here { Ai, i

o

are all A-invariant subspaces.

i

= k + ,... , r, and is the 2 x 2


+

=

A1(a1 x l

hi

ai

= , ... , r} are the distinct real

Assume that matrix $A$ has an eigenvalue $\lambda_1$ and that its geometric multiplicity is 2. Then $A$ has infinitely many invariant subspaces. For, in the case of $\lambda_1 \in \mathbb{R}$, let $\{x_1, x_2\}$ be a basis for the nullspace of $A - \lambda_1 I$. Then any linear combination of these basis vectors spans a one-dimensional $A$-invariant subspace, because

+

ai

-hi

of

c, =

[

matrix.

corresDl)n(11l12: to the

definition

AI, which has an


i

?:. O.

+

Similarly, if $\lambda = a + bi$ (with $b \neq 0$) is a complex eigenvalue with geometric multiplicity 2, there exist four linearly independent vectors $x_i, y_i$, $i = 1, 2$, such that

=0.

4 Jordan was a famous French engineer/mathematician who lived from 1838-1922.

From which we conclude that

Let A =

c

Consequently, introducing

some matrix

such that

=SI

for

So, assuming the characteristic polynomial of $A$ is factorized as in Theorem 2.10, we conclude that there exist matrices $S_i$, $i = 1, \ldots, r$, such that

. . .Sr] = [SI ... Sr]diag{ )q/ +

This proves the first part of the theorem. What is left to be shown is that for each generalized eigenspace we can find a basis such that, with respect to this basis, $AS_i = S_iJ_i$, where $J_i$ has one of the four representations (i)-(iv). For real eigenvalues this was basically shown in Proposition 2.23. In the case where the eigenvalue $\lambda_1$ is real and the geometric multiplicity of $\lambda_1$ coincides with its algebraic multiplicity, $J_i = \lambda_1 I$. In the case where its geometric multiplicity is one and its algebraic multiplicity is larger than one,
$$J_i = \begin{bmatrix} \lambda_1 & 1 & & \\ & \lambda_1 & \ddots & \\ & & \ddots & 1 \\ & & & \lambda_1 \end{bmatrix}.$$
If the geometric multiplicity of $\lambda_1$ is larger than one but differs from its algebraic multiplicity, a mixture of both forms occurs. The exact form of this mixture depends on the length of the Jordan chains of the basis vectors chosen in $N(A - \lambda_1 I)$. The corresponding results $J_i = \mathrm{diag}\{C_i, \ldots, C_i\}$ and
$$J_i = \begin{bmatrix} C_i & I & & \\ & C_i & \ddots & \\ & & \ddots & I \\ & & & C_i \end{bmatrix}$$
in the case where $\lambda_1 = a_i + b_ii$, $b_i \neq 0$, is a complex root, follow similarly using the result of Theorem 2.17. □

In the above theorem the numbers $a_i$ and $b_i$ in the boxes $C_i = \begin{bmatrix} a_i & b_i \\ -b_i & a_i \end{bmatrix}$ come from the complex roots $a_i + b_ii$, $i = k+1, \ldots, r$, of matrix $A$. Note that the characteristic polynomial of $C_i$ is $\lambda^2 - 2a_i\lambda + a_i^2 + b_i^2$.

An immediate consequence of this theorem is the following corollary.

If $A \in \mathbb{R}^{n\times n}$ has $n$ distinct (possibly complex) eigenvalues, then its Jordan form is
$$J = \mathrm{diag}\left\{\lambda_1, \ldots, \lambda_k, \begin{bmatrix} a_{k+1} & b_{k+1} \\ -b_{k+1} & a_{k+1} \end{bmatrix}, \ldots, \begin{bmatrix} a_r & b_r \\ -b_r & a_r \end{bmatrix}\right\},$$
where the real eigenvalues $\lambda_i$ and the complex pairs $a_i \pm b_ii$ are all distinct. If $A$ has only real roots, the numbers $a_i$, $b_i$ disappear and $k = n$. □

Note that, generically, a polynomial of degree $n$ will have $n$ distinct (possibly complex) roots. Therefore, if one considers an arbitrary matrix $A \in \mathbb{R}^{n\times n}$, its Jordan form is most of the time a combination of the first and third forms of Theorem 2.24.

Let $A \in \mathbb{R}^{n\times n}$.
1. All $A$-invariant subspaces can be constructed from the Jordan canonical form.
2. Matrix $A$ has a finite number of invariant subspaces if and only if all geometric multiplicities of the (possibly complex) eigenvalues are one.

1. Let $\mathrm{Im}\,S$, with $S \in \mathbb{R}^{n\times k}$ of full column rank, be an arbitrarily chosen $k$-dimensional $A$-invariant subspace. Then there exists a matrix $\Lambda$ such that $AS = S\Lambda$. Let $\Lambda = TJT^{-1}$, where $J$ is the Jordan canonical form corresponding to $\Lambda$. Then $AST = S\Lambda T = STJ$ and, since $T$ is invertible, $\mathrm{Im}\,ST = \mathrm{Im}\,S$. This shows that the columns of matrix $ST$ are (real and imaginary parts of) either eigenvectors or generalized eigenvectors of $A$, so that $\mathrm{Im}\,S$ can be constructed from the Jordan canonical form of $A$.

2. From part 1 it follows that all $A$-invariant subspaces can be determined from the Jordan canonical form of $A$. In Example 2.11 we already showed that if there is an eigenvalue which has a geometric multiplicity larger than one, there exist infinitely many invariant subspaces. So what remains to be shown is that if all real eigenvalues have exactly one corresponding eigenvector, and with each pair of conjugate eigenvalues there corresponds exactly one two-dimensional invariant subspace, then there will only exist a finite number of $A$-invariant subspaces. However, under these assumptions it is obvious that the invariant subspaces corresponding with the eigenvalues are uniquely determined. So, there are only a finite number of such invariant subspaces. This implies that there are also only a finite number of combinations possible for these subspaces, all yielding additional $A$-invariant subspaces. Therefore, all together there will be only a finite number of invariant subspaces. □

From the above corollary it is clear that with each $A$-invariant subspace $V$ one can associate a part of the spectrum of matrix $A$. We will denote this part of the spectrum by $\sigma(A|_V)$.
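The real $2 \times 2$ boxes $C_i$ can be checked numerically. If $v = p + qi$ is an eigenvector for the eigenvalue $a + bi$, then comparing real and imaginary parts of $Av = (a + bi)v$ gives $A[p\ q] = [p\ q]C$ with $C = \begin{bmatrix} a & b \\ -b & a \end{bmatrix}$. The NumPy sketch below verifies this on an illustrative matrix with eigenvalues $2 \pm i$ (our own choice), and also confirms that $C$ has characteristic polynomial $\lambda^2 - 2a\lambda + a^2 + b^2$.

```python
import numpy as np

A = np.array([[1.0, -2.0],
              [1.0,  3.0]])          # illustrative: eigenvalues 2 +/- i
eigvals, vecs = np.linalg.eig(A)
k = np.argmax(eigvals.imag)          # pick the eigenvalue a + bi with b > 0
a, b = eigvals[k].real, eigvals[k].imag
p, q = vecs[:, k].real, vecs[:, k].imag

P = np.column_stack([p, q])
C = np.array([[a, b],
              [-b, a]])
print(np.allclose(A @ P, P @ C))                              # A [p q] = [p q] C
print(np.allclose(np.poly(C), [1.0, -2 * a, a**2 + b**2]))    # characteristic polynomial of C
```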


-4A+

2+i

J =

l~1 ~ :l

Therefore it has one real

and two

the Jordan canonical form of A is

2 - i.

o

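We start this section with the formal introduction of the transposition operator. The transpose of a matrix $A \in \mathbb{R}^{n\times m}$, denoted $A^T$, is obtained by interchanging the rows and columns of matrix $A$. In more detail, if
$$A = \begin{bmatrix} a_{11} & a_{12} & \cdots & a_{1m} \\ a_{21} & a_{22} & \cdots & a_{2m} \\ \vdots & & & \vdots \\ a_{n1} & a_{n2} & \cdots & a_{nm} \end{bmatrix}, \quad \text{then} \quad A^T = \begin{bmatrix} a_{11} & a_{21} & \cdots & a_{n1} \\ a_{12} & a_{22} & \cdots & a_{n2} \\ \vdots & & & \vdots \\ a_{1m} & a_{2m} & \cdots & a_{nm} \end{bmatrix}.$$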

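If a matrix equals its transpose, i.e. $A = A^T$, the matrix is called symmetric. One nice property of symmetric matrices is that a symmetric matrix has no complex eigenvalues, and that eigenvectors corresponding to different eigenvalues are perpendicular.

Let $A \in \mathbb{R}^{n\times n}$ be symmetric. If $\lambda$ is an eigenvalue of $A$, then $\lambda$ is a real number. Moreover, if $(\lambda_i, x_i)$, $i = 1, 2$, are two eigenpairs of $A$ with different eigenvalues, then $x_1^Tx_2 = 0$.

Let $\lambda$ be an eigenvalue of $A$ and $x$ a corresponding eigenvector. According to Theorem 2.16, $\bar{\lambda}$ is then an eigenvalue of $A$ too, and $\bar{x}$ is a corresponding eigenvector. Therefore, on the one hand, $\bar{x}^TAx = \lambda\bar{x}^Tx$. On the other hand, since $A$ is symmetric, $\bar{x}^TAx = (A\bar{x})^Tx = \bar{\lambda}\bar{x}^Tx$. As $\bar{x}^Tx \in \mathbb{R}$ is different from zero, we conclude that $\lambda = \bar{\lambda}$. So $\lambda \in \mathbb{R}$.
Next, consider $p := x_1^TAx_2$. On the one hand, $p = \lambda_2x_1^Tx_2$; on the other hand, $p = (Ax_1)^Tx_2 = \lambda_1x_1^Tx_2$. Comparing both results, we conclude that $(\lambda_1 - \lambda_2)x_1^Tx_2 = 0$. Since $\lambda_1 \neq \lambda_2$, it follows that $x_1^Tx_2 = 0$. □

If $A$ is symmetric, then there exists an orthonormal matrix $U$ and a real diagonal matrix $D = \mathrm{diag}\{\lambda_1, \ldots, \lambda_n\}$ such that $A = UDU^T$. The $i$th column of $U$ is an eigenvector corresponding to the eigenvalue $\lambda_i$.

Let $A \in \mathbb{R}^{n\times n}$ be symmetric. Then $A > 0$ ($A \ge 0$) if and only if all eigenvalues of $A$ satisfy $\lambda_i > 0$ ($\lambda_i \ge 0$).

Consider the factorization $A = UDU^T$ from Theorem 2.27. Choose $x = u_i$, where $u_i$ is the $i$th column of $U$. Then $x^TAx = \lambda_i$ and the result is obvious. The converse statement is left as an exercise for the reader. □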
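The factorization $A = UDU^T$ and the eigenvalue test for definiteness can be illustrated with `numpy.linalg.eigh`, which is intended for symmetric (Hermitian) matrices and returns real eigenvalues in ascending order together with orthonormal eigenvectors. A minimal sketch; the matrix and the helper `is_positive_definite` are illustrative assumptions.

```python
import numpy as np

def is_positive_definite(A, tol=0.0):
    """Eigenvalue test for a symmetric matrix A: A > 0 iff all eigenvalues > tol (tol = 0)."""
    assert np.allclose(A, A.T), "A must be symmetric"
    return bool(np.all(np.linalg.eigvalsh(A) > tol))

A = np.array([[2.0, -1.0, 0.0],
              [-1.0, 2.0, -1.0],
              [0.0, -1.0, 2.0]])
lam, U = np.linalg.eigh(A)                       # real eigenvalues, orthonormal columns in U
print(np.allclose(U.T @ U, np.eye(3)))           # U^T U = I
print(np.allclose(A, U @ np.diag(lam) @ U.T))    # A = U D U^T
print(is_positive_definite(A))                   # eigenvalues 2 - sqrt(2), 2, 2 + sqrt(2)
```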

In this subsection we consider the so-called algebraic Riccati equation, or ARE for short.

AREs have an impressive range of applications, such as linear quadratic optimal control,

5 Count Jacopo Francesco Riccati (1676-1754) studied the differential equation $\dot{x}(t) + t^{-n}x^2(t) - nt^{m+n-1} = 0$, where $m$ and $n$ are constants (Riccati, 1724). Since then, this kind of equation has been extensively studied in the literature. See Bittanti (1991) and Bittanti, Laub and Willems (1991) for a historical overview of the main issues revolving around the Riccati equation.

and stochastic

of linear

networks and robust

control.

In this book we will see that AREs also play a central role in the determination of equilibria in the setting of linear quadratic differential games.

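Let $A$, $Q$ and $R$ be real $n \times n$ matrices with $Q$ and $R$ symmetric. Then an algebraic Riccati equation in the $n \times n$ matrix $X$ is the following quadratic matrix equation:
$$A^TX + XA + XRX + Q = 0. \qquad (2.7.1)$$
The above equation can be rewritten as
$$\begin{bmatrix} X & -I \end{bmatrix} \begin{bmatrix} A & R \\ -Q & -A^T \end{bmatrix} \begin{bmatrix} I \\ X \end{bmatrix} = 0.$$
From this we infer that the image of matrix $\begin{bmatrix} A & R \\ -Q & -A^T \end{bmatrix}\begin{bmatrix} I \\ X \end{bmatrix}$ is orthogonal to the image of $\begin{bmatrix} X^T \\ -I \end{bmatrix}$ or, stated differently, that it belongs to the orthogonal complement of the image of $\begin{bmatrix} X^T \\ -I \end{bmatrix}$. It is easily verified that this orthogonal complement is given by the image of $\begin{bmatrix} I \\ X \end{bmatrix}$. Consequently, $X$ solves the ARE if and only if there exists a matrix $\Lambda \in \mathbb{R}^{n\times n}$ such that
$$\begin{bmatrix} A & R \\ -Q & -A^T \end{bmatrix} \begin{bmatrix} I \\ X \end{bmatrix} = \begin{bmatrix} I \\ X \end{bmatrix}\Lambda.$$
Or, stated differently, the solutions $X$ of the ARE can be obtained by considering the invariant subspaces of matrix
$$H := \begin{bmatrix} A & R \\ -Q & -A^T \end{bmatrix}. \qquad (2.7.2)$$
Theorem 2.29 gives a precise formulation of this observation.

Let $V \subset \mathbb{R}^{2n}$ be an $n$-dimensional invariant subspace of $H$, and let $X_1, X_2 \in \mathbb{R}^{n\times n}$ be two real matrices such that $V = \mathrm{Im}\begin{bmatrix} X_1 \\ X_2 \end{bmatrix}$. If $X_1$ is invertible, then $X := X_2X_1^{-1}$ is a solution to the Riccati equation (2.7.1) and $\sigma(A + RX) = \sigma(H|_V)$. Furthermore, the solution $X$ is independent of the specific choice of the basis of $V$.

Since $V$ is an $H$-invariant subspace, there is a matrix $\Lambda \in \mathbb{R}^{n\times n}$ such that
$$\begin{bmatrix} A & R \\ -Q & -A^T \end{bmatrix} \begin{bmatrix} X_1 \\ X_2 \end{bmatrix} = \begin{bmatrix} X_1 \\ X_2 \end{bmatrix}\Lambda.$$
Post-multiplying the above equation by $X_1^{-1}$ we get
$$\begin{bmatrix} A & R \\ -Q & -A^T \end{bmatrix} \begin{bmatrix} I \\ X \end{bmatrix} = \begin{bmatrix} I \\ X \end{bmatrix}X_1\Lambda X_1^{-1}. \qquad (2.7.3)$$
Now pre-multiply equation (2.7.3) by $[-X\ \ I]$ to get
$$\begin{bmatrix} -X & I \end{bmatrix} \begin{bmatrix} A & R \\ -Q & -A^T \end{bmatrix} \begin{bmatrix} I \\ X \end{bmatrix} = \begin{bmatrix} -X & I \end{bmatrix}\begin{bmatrix} I \\ X \end{bmatrix}X_1\Lambda X_1^{-1} = 0.$$
Rewriting the left-hand side of this equality gives
$$-XA - XRX - Q - A^TX = 0,$$
which shows that $X$ is indeed a solution of equation (2.7.1). Some rewriting of equation (2.7.3) also gives $A + RX = X_1\Lambda X_1^{-1}$; therefore $\sigma(A + RX) = \sigma(\Lambda)$. However, by definition, $\Lambda$ is a matrix representation of the map $H|_V$, so $\sigma(A + RX) = \sigma(H|_V)$. Next notice that any other basis spanning $V$ can be represented as $\begin{bmatrix} X_1 \\ X_2 \end{bmatrix}P$ for some nonsingular $P$. The final conclusion then follows from the fact that $(X_2P)(X_1P)^{-1} = X_2X_1^{-1}$. □

The converse of Theorem 2.29 also holds.

If $X \in \mathbb{R}^{n\times n}$ is a solution to the Riccati equation (2.7.1), then there exist matrices $X_1, X_2 \in \mathbb{R}^{n\times n}$, with $X_1$ invertible, such that $X = X_2X_1^{-1}$ and the columns of $\begin{bmatrix} X_1 \\ X_2 \end{bmatrix}$ form a basis of an $n$-dimensional invariant subspace of $H$.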
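Theorems 2.29 and 2.30 suggest a direct way to compute a solution: choose $n$ eigenvectors of $H$, stack them as $[X_1; X_2]$ and form $X = X_2X_1^{-1}$. The NumPy sketch below does this for the eigenvalues of $H$ in the open left half-plane. It assumes that $H$ is diagonalizable, has exactly $n$ such eigenvalues, and that $X_1$ is invertible; the test data (a double integrator with $R = \mathrm{diag}(0, 1)$ and $Q = -I$) and the helper name are our own illustration, not taken from the text. Plain eigenvectors can be numerically fragile, and Schur-based variants are generally preferred in practice (see the remarks on Laub (1991) in the notes).

```python
import numpy as np

def are_solution_from_subspace(A, R, Q):
    """X = X2 X1^{-1} from the stable eigenvectors of H = [[A, R], [-Q, -A^T]].

    Sketch only: assumes H is diagonalizable, has exactly n eigenvalues with
    negative real part, and that the resulting X1 is invertible.
    """
    n = A.shape[0]
    H = np.block([[A, R], [-Q, -A.T]])
    eigvals, V = np.linalg.eig(H)
    stable = eigvals.real < 0
    if stable.sum() != n:
        raise ValueError("H does not have exactly n eigenvalues in the open left half-plane")
    X1, X2 = V[:n, stable], V[n:, stable]
    X = np.linalg.solve(X1.T, X2.T).T        # X = X2 @ inv(X1) without forming the inverse
    if np.max(np.abs(X.imag)) > 1e-8:
        raise ValueError("expected a real solution")
    return X.real

A = np.array([[0.0, 1.0], [0.0, 0.0]])
R = np.array([[0.0, 0.0], [0.0, 1.0]])
Q = -np.eye(2)
X = are_solution_from_subspace(A, R, Q)
residual = A.T @ X + X @ A + X @ R @ X + Q            # left-hand side of (2.7.1)
print(np.allclose(residual, 0))
print(np.linalg.eigvals(A + R @ X).real.max() < 0)    # sigma(A + RX) in the left half-plane
print(np.round(X, 4))                                  # approximately -[[sqrt(3), 1], [1, sqrt(3)]]
```

Selecting a different set of $n$ eigenvectors (whenever the corresponding $X_1$ is invertible) yields the other solutions described by Theorem 2.29.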

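Define $\Lambda := A + RX$. Pre-multiplying this relation by $X$ and using equation (2.7.1) gives $X\Lambda = XA + XRX = -Q - A^TX$. Write these two relations as
$$\begin{bmatrix} A & R \\ -Q & -A^T \end{bmatrix} \begin{bmatrix} I \\ X \end{bmatrix} = \begin{bmatrix} I \\ X \end{bmatrix}\Lambda.$$
Hence, the columns of $\begin{bmatrix} I \\ X \end{bmatrix}$ span an $n$-dimensional invariant subspace of $H$, and defining $X_1 := I$ and $X_2 := X$ completes the proof. □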

l

1. Consider Span{ VI, V2} =:

yields

X:=

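Matrix $H$ is called a Hamiltonian matrix. It has a number of nice properties. One of them is that whenever $\lambda \in \sigma(H)$, then also $-\lambda \in \sigma(H)$. That is, the spectrum of a Hamiltonian matrix is symmetric with respect to the imaginary axis. This fact is easily established by noting that, with $J := \begin{bmatrix} 0 & -I \\ I & 0 \end{bmatrix}$, $JHJ^{-1} = -H^T$, so that
$$p(\lambda) = \det(H - \lambda I) = \det(JHJ^{-1} - \lambda I) = \det(-H^T - \lambda I) = \det(-H - \lambda I) = p(-\lambda).$$
From Theorems 2.29 and 2.30 it will be clear why the Jordan canonical form is so important in this context. As we saw in the previous section, the Jordan canonical form of a matrix $H$ can be used to construct all invariant subspaces of $H$. So, all solutions of equation (2.7.1) can be obtained by considering all $n$-dimensional invariant subspaces $V = \mathrm{Im}\begin{bmatrix} X_1 \\ X_2 \end{bmatrix}$ of $H$ in equation (2.7.2), with $X_1 \in \mathbb{R}^{n\times n}$, that have the additional property that $X_1$ is invertible. A subspace $V$ that satisfies this property is called a graph subspace (since it can be 'visualized' as the graph of the map $x \mapsto X_2X_1^{-1}x$).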
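The identity $JHJ^{-1} = -H^T$ and the resulting symmetry of the spectrum can be checked numerically for randomly generated data. A minimal sketch, assuming NumPy; the seed, the dimension and the tolerance are arbitrary choices, and $Q$, $R$ are symmetrized as the definition of the ARE requires.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 3
A = rng.standard_normal((n, n))
R = rng.standard_normal((n, n)); R = R + R.T      # symmetric R
Q = rng.standard_normal((n, n)); Q = Q + Q.T      # symmetric Q
H = np.block([[A, R], [-Q, -A.T]])                 # Hamiltonian matrix (2.7.2)

I, Z = np.eye(n), np.zeros((n, n))
J = np.block([[Z, -I], [I, Z]])
print(np.allclose(J @ H @ np.linalg.inv(J), -H.T))    # J H J^{-1} = -H^T

ev = np.linalg.eigvals(H)
# every eigenvalue lambda has a partner -lambda in the spectrum
print(all(np.min(np.abs(ev + lam)) < 1e-6 for lam in ev))
```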

X:=

=

= det

D

E

=

2. Consider Span{vI, v-d =:

which

ptA) =

=

1

V = 1m [~~] of equation (2.7.2), with

that

=

X:=

4. Let Span{ V2,

yields

V-I}

=:

= [ 1 -3]

4 '

2

$R = \begin{bmatrix} 2 & 0 \\ 0 & 1 \end{bmatrix}$, and $Q = \begin{bmatrix} 0 & 0 \\ 0 & 0 \end{bmatrix}$.

X'-

2

H=

[

o

o

-3

4

o

o

2

0

1

3

6. Let Span{ V-I, V-2} =:

yields

X:=

l

l

=

Then

-1

[~ ~ ] , which

=

[~ ~]

[~~].

and

Then

Then

~I [~

[~:l Then

1 [ 66

- 67 -114

and

+

= {1,

-33]

=

[~2

72]

and

+

[ ~I

4

108

Then

~2 [i

= { ,2}.

+

Then

~I [~ ~]

-I [48

51 72

=

5. Let Span{ V2, V-2} =:

yields

(see also Example 2.3) Let

l

3. Let Span{vI, V-2} =:

yields

X:=

A

Then

D

X completes the proof.

T

combinations of these vectors as

are obtained

:] and

=

~]

[~I

5

1}.

[~

' and

24]

36 ' which

{1, -2}.

13] '

+

and

n

[~

=

which

={2,-1}.

-33]

5

'

and a-(A +

and

=

[~

24]

. h

36 ' WhIC

[~

24]

.

36 ' whIch

= {2, -2}.

[-2 -33]

5

-114]

-270 and a-(A +

' and

, -2}.

D


A natural question that arises is whether one can make any statements on the number of solutions of the algebraic Riccati equation (2.7.1). As we already noted, there is a one-to-one relationship between the number of solutions and the number of graph subspaces of matrix $H$. So, this number can be estimated by the number of invariant subspaces of matrix $H$. From the Jordan canonical form, Theorem 2.24, and in particular the corollary on the finiteness of the number of invariant subspaces, we see that if all eigenvalues of matrix $H$ have a geometric multiplicity of one, then $H$ has only a finite number of invariant subspaces. So, in those cases the algebraic Riccati equation (2.7.1) will have either no solutions or at most a finite number of them. That there indeed exist cases where the equation has an infinite number of solutions is illustrated in the next example.

Let $A = R = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}$ and $Q = \begin{bmatrix} 0 & 0 \\ 0 & 0 \end{bmatrix}$.

3.

[

-2

o

0]

-2

+

= {-1,-

D
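For $A = R = I$ and $Q = 0$ the ARE reduces to $X^2 + 2X = 0$. A short calculation (ours, not in the text) shows that every $X = -2uv^T/(v^Tu)$ with $v^Tu \neq 0$, i.e. $-2$ times a rank-one projection, is a solution with $\sigma(A + RX) = \{-1, 1\}$, which makes the infinite number of solutions explicit. The NumPy sketch checks a few hand-picked vectors; the helper names are hypothetical.

```python
import numpy as np

A = np.eye(2)
R = np.eye(2)
Q = np.zeros((2, 2))

def riccati_residual(X):
    """Left-hand side of (2.7.1): A^T X + X A + X R X + Q."""
    return A.T @ X + X @ A + X @ R @ X + Q

def rank_one_solution(u, v):
    """Hypothetical family X = -2 u v^T / (v^T u); requires v^T u != 0."""
    return -2.0 * np.outer(u, v) / (v @ u)

pairs = [([1.0, 0.0], [1.0, 0.0]),      # gives X = diag(-2, 0)
         ([1.0, 2.0], [3.0, -1.0]),
         ([1.0, 1.0], [0.5, 2.0])]
for u, v in pairs:
    X = rank_one_solution(np.array(u), np.array(v))
    print(np.allclose(riccati_residual(X), 0),
          np.sort(np.linalg.eigvals(A + R @ X).real))
```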

to this

we have said nothing about the structure of the solutions

Theorems 2.29 and 2.30. In Chapters 5 and 6 we will see that

solutions that are

C C- (the so-called

will

and for which a(A +

interest us. From the above example we see that there is only one stabilizing solution and

that this solution is symmetric. This is not a coincidence as the next theorem shows. In

fact the propeliy that there will be at most one stabilizing solution is already indicated

if matrix H has n different eigenvalues in C-, then

our Note following Theorem 2.30.

it also has n different eigenvalues in C+. So, there can exist at most one appropriate

context,

invariant subspace of H in that case. To prove this observation in a more

we use another well-known lemma.

Consider the Sylvester equation
$$AX + XB = C \qquad (2.7.4)$$

1

o

-1

o

The eigenvalues of $H$ are $\{1, 1, -1, -1\}$ and the corresponding eigenvectors are

The set of all one-dimensional H-invariant subspaces, from which all other H-invariant

subspaces can be determined, is given by

where $A \in \mathbb{R}^{n\times n}$, $B \in \mathbb{R}^{m\times m}$ and $C \in \mathbb{R}^{n\times m}$ are given matrices. Let $\{\lambda_i, i = 1, \ldots, n\}$ be the eigenvalues (possibly complex) of $A$ and $\{\mu_j, j = 1, \ldots, m\}$ the eigenvalues (possibly complex) of $B$. There exists a unique solution $X \in \mathbb{R}^{n\times m}$ if and only if $\lambda_i(A) + \mu_j(B) \neq 0$, $\forall i = 1, \ldots, n$ and $j = 1, \ldots, m$.

First note that equation (2.7.4) is a linear matrix equation. Therefore, by rewriting it as a set of linear equations, one can use the theory of linear equations to obtain the conclusion. For readers familiar with the Kronecker product and its corresponding notation we will provide a complete proof. Readers not familiar with this material are referred to the literature (see, for example, Zhou, Doyle and Glover (1996)). Using the Kronecker product, equation (2.7.4) can be rewritten as
$$(B^T \oplus A)\,\mathrm{vec}(X) = \mathrm{vec}(C),$$
where $B^T \oplus A := B^T \otimes I_n + I_m \otimes A$ denotes the Kronecker sum.

All solutions of the Riccati equation are obtained by combinations of these vectors. This

yields the next solutions X of equation (2.7.1)

1.

2.

[~ ~]

An immediate consequence of this lemma is the following corollary.

yielding $\sigma(A + RX) = \{1, 1\}$.

[~2 ~l[ ~2 ~l[~ ~2l[~ ~J

$\sigma(A + RX) = \{-1, 1\}$.

This is a linear equation and therefore it has a unique solution if and only if matrix $B^T \oplus A$ is nonsingular; or, put another way, matrix $B^T \oplus A$ has no zero eigenvalues. Since the eigenvalues of $B^T \oplus A$ are $\lambda_i(A) + \mu_j(B^T) = \lambda_i(A) + \mu_j(B)$, the conclusion follows. □
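The Kronecker-sum argument translates directly into code. A minimal sketch, assuming NumPy and column-stacking $\mathrm{vec}$ (NumPy's `order="F"`); the helper name and the test matrices are our own. The eigenvalue check mirrors the condition $\lambda_i(A) + \mu_j(B) \neq 0$ of Lemma 2.31, and the tolerance is an arbitrary choice.

```python
import numpy as np

def solve_sylvester_kron(A, B, C):
    """Solve A X + X B = C via (B^T (+) A) vec(X) = vec(C), with column-stacking vec.

    Raises if lambda_i(A) + mu_j(B) = 0 for some i, j (no unique solution).
    """
    n, m = A.shape[0], B.shape[0]
    K = np.kron(B.T, np.eye(n)) + np.kron(np.eye(m), A)    # Kronecker sum B^T (+) A
    sums = np.add.outer(np.linalg.eigvals(A), np.linalg.eigvals(B))
    if np.min(np.abs(sums)) < 1e-12:
        raise np.linalg.LinAlgError("lambda_i(A) + mu_j(B) = 0: no unique solution")
    x = np.linalg.solve(K, C.flatten(order="F"))           # vec(C) stacks columns
    return x.reshape((n, m), order="F")

A = np.array([[1.0, 2.0], [0.0, 3.0]])                            # eigenvalues 1, 3
B = np.array([[4.0, 0.0, 0.0], [1.0, 5.0, 0.0], [0.0, 1.0, 6.0]])  # eigenvalues 4, 5, 6
C = np.arange(6.0).reshape(2, 3)
X = solve_sylvester_kron(A, B, C)
print(np.allclose(A @ X + X @ B, C))
```

If SciPy is available, `scipy.linalg.solve_sylvester(A, B, C)` solves the same equation with a Schur-based method, which scales far better than forming the $nm \times nm$ Kronecker matrix.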

and

OIl]

[ =~ 1J

yielding

6 English mathematician/actuary/lawyer/poet who lived from 1814-1897. He did important work on matrix theory and was a friend of Cayley.

First, we prove the uniqueness property. To that end

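Consider the so-called Lyapunov equation
$$A^TX + XA = C, \qquad (2.7.5)$$
where $A, C \in \mathbb{R}^{n\times n}$ are given matrices. Let $\{\lambda_i, i = 1, \ldots, n\}$ be the eigenvalues (possibly complex) of $A$. Then (2.7.5) has a unique solution $X \in \mathbb{R}^{n\times n}$ if and only if $\lambda_i(A) + \lambda_j(A) \neq 0$, $\forall i, j = 1, \ldots, n$.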

that

+ Q = 0, i =

,2.

llxll

After SUl)tf(lctmg the

+

the


Consider the matrix equation

of matrix

+

+XA=

we find


+

=0.

this equation can be rewritten as

{(X1 -

+

+

=0.

The above equation is a Sylvester

Since

both A +

A+

have all their eigenvalues in ([:-, A +

and

+

have no eigenvalues

in common.

it follows from Lemma 2.31 that the above equation (2.7.6) has a

= satisfies the

So

unique solution

Obviously,

which proves the

result.

then this solution

Next, we show that if equation (2.7.1) has a stabilizing solution

with the definition of 1 and H as

will be symmetric. To that end we first note

the matrix lH defined

p,

where $\sigma(A) \subset \mathbb{C}^-$. This matrix equation has a unique solution for every choice of matrix $C$. This is because the sum of any two eigenvalues of matrix $A$ will be in $\mathbb{C}^-$ and thus differ from zero.
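Since $A^TX + XA = C$ is a Sylvester equation with coefficient matrices $A^T$ and $A$, it can be solved through the same Kronecker form. The NumPy sketch below (helper name and data are our own) uses a stable $A$, so the solution is unique for every $C$; with $C = -I \le 0$ the computed solution also turns out to be symmetric and positive semi-definite, in line with the lemma on Lyapunov equations later in this section.

```python
import numpy as np

def solve_lyapunov_kron(A, C):
    """Unique solution of A^T X + X A = C, assuming lambda_i(A) + lambda_j(A) != 0."""
    n = A.shape[0]
    I = np.eye(n)
    # vec(A^T X) = (I kron A^T) vec(X),  vec(X A) = (A^T kron I) vec(X)
    K = np.kron(I, A.T) + np.kron(A.T, I)
    x = np.linalg.solve(K, C.flatten(order="F"))
    return x.reshape((n, n), order="F")

A = np.array([[-1.0, 2.0], [0.0, -3.0]])     # stable: eigenvalues -1, -3
C = -np.eye(2)                               # C <= 0
X = solve_lyapunov_kron(A, C)
print(np.allclose(A.T @ X + X @ A, C))
print(np.allclose(X, X.T), np.linalg.eigvalsh(X).min() >= 0)   # symmetric and X >= 0
```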

°

Consider the matrix equation

+

with

[-;1

as in

~3] , and

C,

2.3, and C an arbitrarily chosen 2 x 2

[=:

~].

that is:

According to Example 2.3 the eigenvalues of

are

is a SYlnnletnc matrix.

let X solve the Riccati

where

""r",,,+""'"

$\{1, 2\}$ and
has only one eigenvalue, $-2$. So, according to Lemma 2.31, there exist matrices $C$ for which the above equation has no solution and also matrices $C$ for which the equation has an infinite number of solutions. There exists no matrix $C$ for which the equation has exactly one solution.

with

This

lH

and

invertible.

]=

Since the left-hand side of this equation is symmetric, we conclude that the right-hand side of this equation has to

stated

7 Russian mathematician who lived from 1857-1918. School friend of Markov and student of Chebyshev. He did important work on differential equations, potential theory, stability of systems and probability theory.

c ([:-

Theorem

that

The next theorem states the important result that if the algebraic Riccati equation (2.7.1) has a stabilizing solution, then it is unique and, moreover, symmetric.

The algebraic Riccati equation (2.7.1) has at most one stabilizing solution. This solution is symmetric.

+

=0.


This is a

obvious from

2.32 that this

o satisfies the


has a

of

solution

. But, then

c

C - it is

Riccati

of equation (2.7.1), then X ::;

has a


Since both

and X


"tUU.LUlLoHlj;:.

and

solution

is another

(2.7.1)

+

XA+

D

of the algebraic Riccati

it

Apart from the fact that the stabilizing solution, if it exists, is characterized by its uniqueness, there is another characteristic property: it is the maximal solution of equation (2.7.1). That is, every other solution $\tilde{X}$ of equation (2.7.1) satisfies $\tilde{X} \le X$. Notice that maximal (and minimal) solutions are unique if they exist.

To prove this property we first show a lemma whose proof uses the concept of the exponential of a matrix. The reader not familiar with this can think of $e^{At}$ like the scalar exponential function $e^{at}$. A formal treatment of this notion is given in section 3.1.

If $Q \le 0$ and $A$ is stable, the Lyapunov equation
$$A^TX + XA = Q$$

Since $A$ is stable, $e^{At}$ converges to zero if $t$ becomes arbitrarily large (see section 3.1 again). Consequently, since $e^{A\cdot 0} = I$, and the operations of integration and differentiation 'cancel out', we obtain on the one hand that
$$\int_0^\infty V(t)\,dt = 0 - X = -X \qquad (2.7.9)$$

Let $X$ be the stabilizing solution of equation (2.7.1).

Combining equations (2.7.9) and (2.7.10) then yields that $X \ge 0$.

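Simple manipulations, using equation (2.7.1) and the symmetry of the stabilizing solution $X$, yield
$$\begin{bmatrix} I & 0 \\ -X & I \end{bmatrix} H \begin{bmatrix} I & 0 \\ X & I \end{bmatrix} = \begin{bmatrix} A + RX & R \\ 0 & -(A + RX)^T \end{bmatrix}.$$
As $\begin{bmatrix} I & 0 \\ -X & I \end{bmatrix}^{-1} = \begin{bmatrix} I & 0 \\ X & I \end{bmatrix}$, we conclude from the above similarity that $\sigma(H) = \sigma(A + RX) \cup \sigma(-(A + RX))$. Since $X$ is a stabilizing solution, $\sigma(A + RX)$ is contained in $\mathbb{C}^-$ and $\sigma(-(A + RX))$ in $\mathbb{C}^+$. So $H$ does not have eigenvalues on the imaginary axis. □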

and on the other hand, using equation (2.7.8), that
$$\int_0^\infty V(t)\,dt = \int_0^\infty e^{A^Tt}Qe^{At}\,dt \le 0.$$

D

This maximality property has also led to iterative procedures to compute the stabilizing solution (see, for example, Zhou, Doyle and Glover (1996)).
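One iterative procedure of this kind is the Newton-Kleinman iteration. Linearizing (2.7.1) around an iterate $X_k$ gives the Lyapunov equation $(A + RX_k)^TX_{k+1} + X_{k+1}(A + RX_k) = X_kRX_k - Q$. The sketch below is a minimal NumPy version under stated assumptions: an initial $X_0$ with $A + RX_0$ stable is supplied, each Lyapunov equation is solved through its Kronecker form, and the double-integrator data ($R = \mathrm{diag}(0, 1)$, $Q = -I$) is our own illustration rather than the book's algorithm or example.

```python
import numpy as np

def lyap(M, C):
    """Solve M^T Y + Y M = C via the Kronecker form (assumes a unique solution)."""
    n = M.shape[0]
    I = np.eye(n)
    K = np.kron(I, M.T) + np.kron(M.T, I)
    return np.linalg.solve(K, C.flatten(order="F")).reshape((n, n), order="F")

def newton_kleinman(A, R, Q, X0, iters=50, tol=1e-12):
    """Newton iteration for A^T X + X A + X R X + Q = 0, started from a stabilizing X0."""
    X = X0
    for _ in range(iters):
        M = A + R @ X                        # closed-loop matrix A + R X_k
        X_next = lyap(M, X @ R @ X - Q)      # (A + R X_k)^T X + X (A + R X_k) = X_k R X_k - Q
        if np.max(np.abs(X_next - X)) < tol:
            return X_next
        X = X_next
    return X

A = np.array([[0.0, 1.0], [0.0, 0.0]])
R = np.array([[0.0, 0.0], [0.0, 1.0]])
Q = -np.eye(2)
X0 = -np.array([[1.0, 1.0], [1.0, 2.0]])     # A + R X0 = [[0, 1], [-1, -2]] is stable
X = newton_kleinman(A, R, Q, X0)
print(np.allclose(A.T @ X + X @ A + X @ R @ X + Q, 0))
print(np.linalg.eigvals(A + R @ X).real.max() < 0)
```

The design choice is the usual one for Newton's method: each step only requires a linear (Lyapunov) solve, and convergence is fast once the iterate is close to the stabilizing solution, but a stabilizing starting point is needed.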

Next we provide a necessary condition on the spectrum of $H$ for the algebraic Riccati equation to have a stabilizing solution. Lancaster and

Rodman (1995) have shown that this condition together with a condition on the

associated so-called matrix sign function are both necessary and sufficient to conclude

that equation (2.7.1) has a real, symmetric, stabilizing solution.

(2.7.8)

+

$X - \tilde{X} \ge 0$.

The algebraic Riccati equation (2.7.1) has a stabilizing solution only if H has no

eigenvalues on the imaginary axis.

Since $A$ is stable, we immediately infer from Corollary 2.32 that equation (2.7.7) has a unique solution $X$. To show that $X \ge 0$, consider $V(t) := \frac{d}{dt}\left(e^{A^Tt}Xe^{At}\right)$. Using the product rule of differentiation and the fact that $\frac{d}{dt}e^{At} = Ae^{At} = e^{At}A$ (see section 3.1), we have

V(t)dt

Since, by assumption, $A + RX$ is stable, Lemma 2.34 gives that

(2.7.7)

has a unique semi-positive definite solution X.

$$V(t) = e^{A^Tt}A^TXe^{At} + e^{A^Tt}XAe^{At} = e^{A^Tt}(A^TX + XA)e^{At} = e^{A^Tt}Qe^{At}.$$

(2.7.10)

D

+

in C+.

D

The next example illustrates that the above mentioned condition on the spectrum of

matrix H in general is not enough to conclude that the algebraic Riccati equation will

have a solution.


solution of the

Riccati

This

rise to an iterative

been formalized

Kleinman

"l£lUU'"'-'U"'b

Let A

= [

01

~ ] , R = [~ ~]

and Q =

[~ ~ ] . Then it is readily verified that the

is based on

to calculate this solution


eigenvalues of $H$ are $\{-1, 1\}$. Bases for the corresponding eigenspaces are

[~]

,b4

=

[~],

1. Consider

respectively.

Consequently, $H$ has no graph subspace associated with the eigenvalues $\{-1, -1\}$.

0

We conclude this section by providing a sufficient condition under which the algebraic Riccati equation (2.7.1) has a stabilizing solution. The next theorem shows that the converse of Theorem 2.36 also holds, under some additional assumptions on matrix $R$. One of these assumptions is that the matrix pair $(A, R)$ should be stabilizable. A more detailed treatment of this notion is given in section 3.5. For the moment it is enough to bear in mind that the pair $(A, R)$ is called stabilizable if it is possible to steer any initial state $x_0$ of the system
$$\dot{x}(t) = Ax(t) + Ru(t), \qquad x(0) = x_0,$$

(a) Show that $\{v_1, v_2\}$ are linearly independent.
(b) Show that $\{v_1, v_2, v_3\}$ are linearly dependent.
(c) Does a set of vectors $v_i$ exist such that they constitute a basis for $\mathbb{R}^3$?
(d) Determine $\mathrm{Span}\{v_2, v_3, v_4\}$. What is the dimension of the subspace spanned by these vectors?
(e) Determine the length of vector $v_3$.
(f) Determine all vectors in $\mathbb{R}^3$ that are perpendicular to $v_4$.

2. Consider

towards zero using an appropriate control function $u(\cdot)$. The proof of this theorem can be found in the Appendix to this chapter.

Assume that $(A, R)$ is stabilizable and $R$ is positive semi-definite. Then the algebraic Riccati equation (2.7.1) has a stabilizing solution if and only if $H$ has no eigenvalues on the imaginary axis.

Good references with more details on linear algebra (and in particular on section 2.5) are Lancaster and Tismenetsky (1985) and Horn and Johnson (1985).

For section 2.7 the book by Zhou, Doyle and Glover (1996), in particular chapters 2 and 13, has been consulted. For a general treatment (that is, without the positive definiteness assumption) of solutions of algebraic Riccati equations an appropriate reference is the book by Lancaster and Rodman (1995). Matrix $H$ in equation (2.7.2) has a special structure which is known in the literature as a Hamiltonian structure. Due to this structure, in particular the eigenvalues and eigenvectors of this matrix have some nice properties. Details on this can be found, for example, in chapter 7 of Lancaster and Rodman (1995). A geometric classification of all solutions can also be found in Kucera
The eigenvector solution method for finding the solutions of the algebraic Riccati equation was popularized in the optimal control literature by MacFarlane (1963) and Potter (1966). Methods and references on how to overcome numerical difficulties with this approach can be found, for example, in Laub (1991). Another numerical approach to calculate the

(a) Use the orthogonalization procedure of Gram-Schmidt to find an orthonormal

basis for S.

(b) Find a basis for $S^\perp$.

3. Consider

A

=

[1 ~l

n

(a) Determine Ker $A$ and Im $A$.
(b) Determine rank($A$), dim(Ker $A$) and dim(Im $A$).
(c) Determine all $b \in \mathbb{R}^4$ for which $Ax = b$ has a solution $x$.
(d) If the equation $Ax = b$ in item (c) has a solution $x$, what can you say about the number of solutions?

4. Let $A \in \mathbb{R}^{n\times m}$ and $S$ be a linear subspace. Show that the following sets are linear subspaces too.
(a) $S^\perp$;
(b) Ker $A$;
(c) Im $A$.

Show that for any cOJmvlex numbers ZI and

5. Consider the system
$\dot{x}(t) = Ax(t)$, $x(0) = x_0$,
$y(t) = Cx(t)$,

(a) ZI

(b) ZIZ2

where $x(\cdot) \in \mathbb{R}^n$ and $y(\cdot) \in \mathbb{R}^m$. Denote by $y(t, x_0)$ the value of $y$ at time $t$ induced by the initial state $x_0$. Consider for a fixed $t_1$ the set
$$V(t_1) := \{x_0 \mid y(t, x_0) = 0,\ t \in [0, t_1]\}.$$

Z2 = 2:1 + 2:2,

= 2:12:2 and Z]

Z2

= 2:1

z\ = ZI·

Show that for any complex vector

(c)

Z

E

en Izi =

Show that for any complex matrix Z

Show that $V(t_1)$ is a linear subspace (see also Theorem 3.2).

i

=

[Z:I] , where Zi

= 1, ... , n + 1,

=

[Zil, ... ,Zin],

Zn

6. Use Cramer's rule to determine the inverse of matrix

Z]

det

+z~Zn+] ]

..

[

.

[Z/;:] ]

= detZ + Adet

Zn

...

.

Zn

7. Consider $v_1^T = [1, 2, 3, 0, -1, 4]$ and $v_2^T = [1, -1, 1, 3, 4, 1]$. Let $A := v_1v_2^T$.
(a) Determine trace($13A$).
(b) Determine trace($2A + 3I$).
(c) Determine trace($AA^T$).

8. Determine for each of the following matrices

A

=

[~

n [7 ~];

B

=

C

=

[i il

D

=

[~

:

n

(a) the characteristic polynomial,

(b) the eigenvalues,

(c) the eigenspaces,

(d) for every eigenvalue both its geometric and algebraic multiplicity,

(e) for every eigenvalue its generalized eigenspace.

9. Assume that $A = SBS^{-1}$. Show that matrices $A$ and $B$ have the same eigenvalues.

10. Let $A \in \mathbb{R}^{n\times n}$.

Zl

I Zi II,

and

16. Starting from the assumption that the results shown in Exercise 14 also hold when we consider an arbitrary row of matrix $Z$, show that, for square matrices $A$ and $D$,
$$\det\left(\begin{bmatrix} A & B \\ 0 & D \end{bmatrix}\right) = \det(A)\det(D).$$

17. Determine for each of the following matrices

-1

1

(a) Show that for any $p \ge 1$, $N(A^p) \subset N(A^{p+1})$.
(b) Show that whenever $N(A^k) = N(A^{k+1})$ for some $k \ge 1$, also $N(A^p) = N(A^{p+1})$ for every $p \ge k$.
(c) Show that $N(A^p) = N(A^n)$ for every $p \ge n$.

11. Determine for the following complex numbers

plane.

(b) Consider in (a) a matrix $Z$ for which $Z_1 = Z_2$. Show that $\det Z = 0$.
(c) Show, using (a) and (b), that if matrix $\tilde{Z}$ is obtained from matrix $Z$ by adding some multiple of the second row of matrix $Z$ to its first row, then $\det\tilde{Z} = \det Z$.
(d) Show, using (a), that if the first row of matrix $Z$ is a zero row, $\det Z = 0$.

15. Let $w := \det(A + iB)$, where $A, B \in \mathbb{R}^{n\times n}$. Show that $\det(A - iB) = \bar{w}$. (Hint: consider $\overline{A + iB}$; the result then follows directly by a simple induction proof using the definition of a determinant and Theorem 2.13.)

= 1 + 2i;

Z2

=2-

i; and Z3

= -1 -

1

= N(Ap+1),

2i,

±, respectively. Plot all these numbers on one graph in the complex

'-t

(a) the characteristic polynomial,

(b) the (complex) eigenvalues,

(c) the (complex) eigenspaces,

(d) for every eigenvalue both its geometric and algebraic multiplicity,

(e) for every eigenvalue its generalized eigenspace.

18. Show that Ker $A$ and Im $A$ are $A$-invariant subspaces.

Consider

26.

nn,,,1"n.lp

definite and ,.. . """1"n'o

Sl~ml-Q(~nnlHe.

27. Determine all the solutions of the following algebraic Riccati equations. Moreover,

+

determine for every solution

Show that the characteristic polynomial of $A$ is $p(\lambda) = -\lambda^3 + 3\lambda^2 - 4\lambda + 2$.
Show that $-A^3 + 3A^2 - 4A + 2I = 0$.
Show that $p(\lambda) = (1 - \lambda)(\lambda^2 - 2\lambda + 2)$.

and

Determine

Show that $N_1 \cap N_2 = \{0\}$.

(f) Show that any set of basis vectors for N] and

basis for

20. Consider the matrices

(b)

(c)

(d)

(e)

A

=

T]

[~ ~

and B

=

~ i] +X[~ ~ ]X+ [~ ~] = o.

;rX+X[~1 ;]+x[~ ~]x=o

ir X+

- 4A + 2.

2A+

X[

28. Verify whether the following matrix equations have a

respectively, form together a

[l ~

n

(a)

(b)

(c)

solution.

[~ ~3]X+X[~ ;] = [; 2]3 .

1

[~ ;]x +x[ 2 ~] = [;

[\ 1]1 X +X [-4-2 ;] = [;

n

n

29. Determine all solutions of the matrix equation

(a) Determine the (generalized) eigenvectors of matrix A and B.

(b) Determine the Jordan canonical form of matrix A and B.

21. Determine the Jordan canonical form of the following matrices

[~ ~]X+X[ 0 ~]

1

where C = [;

; ] and C =

[~ ~ ], respectively. Can you find a matrix $C$ for which the above equation has a unique solution?

22. Determine the Jordan canonical form of the matrices

-I

j

[

-I

[~I

[~I n

0

A=

2

0

~

; B=

~1

0

1

0

~J c= [

2

0

1

1

0

1

~Il

-2

D=

2

2

23. Determine for each matrix in Exercise 22 all its invariant subspaces.

24. Factorize the matrices below as

diagonal matrix.

A =

[~ ~3];

B=

where U is an orthonormal matrix and D a

[~-1 0~ ~ll

0

25. Let $A$ be a symmetric matrix. Show that $A > 0$ ($\ge 0$) if and only if all its eigenvalues satisfy $\lambda_i > 0$ ($\ge 0$).

c=

[;

1

We distinguish three cases:

$i, j \in \{1, \ldots, k\}$;
$i \in \{1, \ldots, k\}$ and $j \in \{k+1, \ldots, r\}$;

and