Microsoft SQL Server on EMC Symmetrix Storage Systems

Version 3.0

• Layout, Configuration and Performance

• Replication, Backup and Recovery

• Disaster Restart and Recovery

Txomin Barturen

Copyright © 2007, 2010, 2011 EMC Corporation. All rights reserved.

EMC believes the information in this publication is accurate as of its publication date. The information is subject to change without notice.

THE INFORMATION IN THIS PUBLICATION IS PROVIDED “AS IS.” EMC CORPORATION MAKES NO REPRESENTATIONS OR WARRANTIES OF ANY KIND WITH RESPECT TO THE INFORMATION IN THIS PUBLICATION, AND SPECIFICALLY DISCLAIMS IMPLIED WARRANTIES OF MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE.

Use, copying, and distribution of any EMC software described in this publication requires an applicable software license.

For the most up-to-date regulatory document for your product line, go to the Technical Documentation and Advisories section on EMC Powerlink.

For the most up-to-date listing of EMC product names, see EMC Corporation Trademarks on EMC.com.

All other trademarks used herein are the property of their respective owners.

Microsoft SQL Server on EMC Symmetrix Storage Systems

3.0

P/N h2203.2

Contents

Preface

Chapter 1

Microsoft SQL Server

Microsoft SQL Server overview

Microsoft SQL Server instances and databases

Microsoft SQL Server logical components

A SQL Server database

Data access

Microsoft SQL Server physical components

Data files

Transaction log

Microsoft SQL Server system databases

Microsoft SQL Server instances

Microsoft Windows Clustering installations

Backup and recovery interfaces — VDI and VSS

Microsoft SQL Server and EMC integration

Advanced storage system functionality

Additional Microsoft SQL Server tools

Chapter 2

EMC Foundation Products

Introduction

Symmetrix hardware and EMC Enginuity features

Symmetrix VMAX platform

EMC Enginuity operating environment

EMC Solutions Enabler base management

EMC Change Tracker

EMC Symmetrix Remote Data Facility

SRDF benefits

SRDF modes of operation

SRDF device groups and composite groups

SRDF consistency groups

SRDF terminology

SRDF control operations

Failover and failback operations

EMC SRDF/Cluster Enabler solutions

EMC TimeFinder

TimeFinder/Mirror establish operations

TimeFinder split operations

TimeFinder restore operations

TimeFinder consistent split

Enginuity Consistency Assist

TimeFinder/Mirror reverse split

TimeFinder/Clone operations

TimeFinder/Snap operations

EMC Storage Resource Management

EMC Storage Viewer

EMC PowerPath

PowerPath/VE

EMC Replication Manager

EMC Open Replicator

EMC Virtual Provisioning

Thin device

Data device

Symmetrix VMAX specific features

EMC Virtual LUN migration

EMC Fully Automated Storage Tiering for Disk Pools

EMC Fully Automated Storage Tiering for Virtual Pools

Chapter 3

Storage Provisioning

Storage provisioning

SAN storage provisioning

LUN mapping

LUN masking

Auto-provisioning with Symmetrix VMAX

Host LUN discovery

Challenges of traditional storage provisioning

How much storage to provide

How to add storage to growing applications

How to balance usage of the LUNs

How to configure for performance

Virtual Provisioning

Terminology

Thin devices

Thin pool

Data devices

I/O activity to a thin device

Virtual Provisioning requirements

Windows NTFS considerations

SQL Server components on thin devices

Approaches for replication

Performance considerations

Thin pool management

Thin pool monitoring

Exhaustion of oversubscribed pools

Recommendations

Fully Automated Storage Tiering

Evolution of Storage Tiering

FAST implementation

Deploying FAST DP with SQL Server databases

Deploying FAST VP with SQL Server databases

Chapter 4

Creating Microsoft SQL Server Database Clones

Overview

Recoverable versus restartable copies of databases

Recoverable database copies

Restartable database copies

Copying the database with Microsoft SQL Server shutdown

Using TimeFinder/Mirror

Using TimeFinder/Clone

Using TimeFinder/Snap

Copying the database using EMC Consistency technology

Using TimeFinder/Mirror

Using TimeFinder/Clone

Using TimeFinder/Snap

Copying the database using SQL Server VDI and VSS

Using TimeFinder/Mirror

Using TimeFinder/Clone

Using TimeFinder/Snap

Copying the database using Replication Manager

Transitioning disk copies to SQL Server database clones

Instantiating clones from consistent split or shutdown images

Using SQL Server VDI to process the database image

Reinitializing the cloned environment

Choosing a database cloning methodology

Chapter 5

Backing up Microsoft SQL Server Databases

EMC Consistency technology and backup

SQL Server backup functionality

Microsoft SQL Server recovery models

Types of SQL Server backups

SQL Server log markers

EMC products for SQL Server backup

Integrating TimeFinder and Microsoft SQL Server

EMC Storage Resource Management

TF/SIM VDI and VSS backup

Using TimeFinder/Mirror

Using TimeFinder/Clone

Using TimeFinder/Snap

SYMIOCTL VDI backup

Using TimeFinder/Mirror

Replication Manager VDI backup

Saving the VDI or VSS backup to long-term media

Chapter 6

Restoring and Recovering Microsoft SQL Server Databases

SQL Server restore functionality

SQL Server – RESTORE WITH RECOVERY

SQL Server – RESTORE WITH NORECOVERY

SQL Server – RESTORE WITH STANDBY

EMC Products for SQL Server recovery

EMC Consistency technology and restore

TF/SIM VDI and VSS restore

Using TimeFinder/Mirror

Using TimeFinder/Clone

Using TimeFinder/Snap

SYMIOCTL VDI restore

Executing TimeFinder restore

SYMIOCTL with NORECOVERY

SYMIOCTL with STANDBY

SYMIOCTL with RECOVERY

Replication Manager VDI restore

Applying logs up to timestamps or marked transactions

Chapter 7

Microsoft SQL Server Disaster Restart and Disaster Recovery

Definitions

Dependent-write consistency

Database restart

Database recovery

Roll forward recovery

Considerations for disaster restart and disaster recovery

Recovery Point Objective (RPO)

Recovery Time Objective (RTO)

Operational complexity

Source server activity

Production impact

Target server activity

Number of copies

Distance for solution

Bandwidth requirements

Federated consistency

Testing the solution

Cost

Tape-based solutions

Tape-based disaster recovery

Tape-based disaster restart

Local high-availability solutions

Multisite high-availability solutions

Remote replication challenges

Propagation delay

Bandwidth requirements

Network infrastructure

Method of instantiation

Method of re-instantiation

Change rate at the source site

Locality of reference

Expected data loss

Failback operations

Array-based remote replication

Planning for array-based replication

SQL Server specific issues

SRDF/S: Single Symmetrix to single Symmetrix

How to restart in the event of production site loss

SRDF/S and consistency groups

Rolling disaster

Protecting against a rolling disaster

SRDF/S with multiple source Symmetrix arrays

SRDF/A

SRDF/A using single source Symmetrix

SRDF/A using multiple source Symmetrix

Restart processing

SRDF/AR single hop

Restart processing

SRDF/AR multi hop

Restart processing

Database log-shipping solutions

Overview of log shipping

Log shipping considerations

Log shipping and the remote database

Shipping logs with SRDF

SQL Server Database Mirroring

Running database solutions

SQL Server transactional replication

Other transactional systems

Chapter 8

Microsoft SQL Server Database Layouts on EMC Symmetrix

The performance stack

Optimizing I/O

Storage system layout considerations

SQL Server layout recommendations

File system partition alignment

General principles for layout

SQL Server layout and replication considerations

Symmetrix performance guidelines

Front-end connectivity

Symmetrix cache

Back-end considerations

Additional layout considerations

Configuration recommendations

RAID considerations

Types of RAID

RAID recommendations

Symmetrix metavolumes

Host versus array-based striping

Host-based striping

Symmetrix based striping (metavolumes)

Striping recommendation

Data placement considerations

Disk performance considerations

Hypervolume contention

Maximizing data spread across back-end devices

Minimizing disk head movement

Other layout considerations

Database layout considerations with SRDF/S

Database cloning, TimeFinder, and sharing spindles

Database clones using TimeFinder/Snap

Database-specific settings for SQL Server

Remote replication performance considerations

TEMPDB storage and replication

Appendix A

Related Documents

Related documents

Appendix B

References

Sample SYMCLI group creation commands

Glossary

Index

Figures

1. Microsoft SQL Server architecture overview

2. SQL Server database architecture

3. SQL Server internal data file logical structure

4. Multiple SQL Server instance installation directories

5. SQL Server Enterprise Manager with multiple instances

6. Connecting to default and named instances

7. SRDF/CE for MSCS resource group for a SQL Server instance

8. Symmetrix VMAX logical diagram

9. Basic synchronous SRDF configuration

10. SRDF consistency group

11. SRDF establish and restore control operations

12. SRDF failover and failback control operations

13. Geographically distributed four-node EMC SRDF/CE clusters

14. EMC Symmetrix configured with standard volumes and BCVs

15. ECA consistent split across multiple database-associated hosts

16. ECA consistent split on a local Symmetrix system

17. Creating a copy session using the symclone command

18. TimeFinder/Snap copy of a standard device to a VDEV

19. SRM commands

20. EMC Storage Viewer

21. PowerPath/VE vStorage API for multipathing plug-in

22. Output of the rpowermt display command on a Symmetrix VMAX device

23. Device ownership in vCenter Server

24. Virtual Provisioning components

25. Virtual LUN Eligibility Tables

26. Simple storage area network configuration

27. LUN masking relationship

28. Auto-provisioning with Symmetrix VMAX

29. Virtual Provisioning component relationships

30. Windows NTFS volume format with Quick Format option

31. Thin pool display after NTFS format operations

32. Creation of a SQL Server database

33. Thin pool display after database creation

34. SQL Server Management Studio view of the database

35. Windows event log entry created by SYMAPI event daemon

36. SQL Server I/O request informational message

37. FAST relationships

38. Overview of relationships of filegroups, files and physical drives

39. Defining Storage Types within Symmetrix Management Console

40. Tier definition within Symmetrix Management Console

41. Allocating a Storage Group to a policy in Symmetrix Management Console

42. Symmetrix Optimizer collect and swap/move windows

43. SQL Server Performance Warehouse workload view

44. SQL Server Performance Warehouse virtual file statistics

45. Read I/O rates for data file volumes

46. Read latency for data file volumes

47. FAST generated performance movement plan

48. FAST policy compliance view

49. FAST generated compliance movement plan

50. User approval of a suggested FAST plan

51. FAST migration in progress

52. Read latencies for data volumes - post migration

53. Read I/O rates for data volumes - post migration

54. Performance Warehouse virtual file statistics - post migration

55. Comparison of improvement for major metrics

56. Overview of relationships of filegroups, files and thin devices

57. Relationship of thin LUNs to data devices and physical drives

58. Detail of FC_SQL thin pool allocations for bound thin devices

59. Defining FAST VP storage tiers within Symmetrix Management Console

60. FAST VP policy definition within Symmetrix Management Console

61. Allocating a storage group to a policy in Symmetrix Management Console

62. Symmetrix FAST Configuration Wizard performance time window

63. Symmetrix FAST Configuration Wizard movement time window

64. Windows performance counters for read and write IOPS

65. Windows performance counters for read and write latencies

66. Read and write workload before and during migrations

67. Read and write latencies before and during migrations

68. Output from demand association report

69. Summary of thin pool allocations over time

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

Storage allocations for a LUN used by a Broker file ............................... 187

Storage allocations for the SATA tier ........................................................ 188

Read I/O rates for data volumes - Post-FAST VP activation................. 189

Comparison of improvement for major metrics ...................................... 190

Copying a shutdown SQL Server database with TimeFinder/Mirror. 199

Copying a shutdown SQL Server database with TimeFinder/Clone .. 202

Copying a shutdown SQL Server database with TimeFinder/Snap .... 203

Copying a running SQL Server database with TimeFinder/Mirror..... 206

Copying a running SQL Server database with TimeFinder/Clone ...... 209

Copying a running SQL Server database with TimeFinder/Snap........ 210

Creating a TimeFinder/Mirror backup of a SQL Server database........ 214

Sample TimeFinder/SIM backup with TimeFinder/Mirror ................. 215

Sample SYMIOCTL backup with TimeFinder/Mirror ........................... 218

Creating a backup of a SQL Server database with TimeFinder/

Clone.................................................................................................................219

Sample TF/SIM VSS backup using TimeFinder/Clone ......................... 220

Sample SYMIOCTL backup using TF/Clone........................................... 223

Creating a VDI or VSS backup of a SQL Server database with

TimeFinder/Snap ...........................................................................................224

Sample TF/SIM backup with TF/Snap..................................................... 226

Sample SYMIOCTL backup with TF/SNAP ............................................ 228

Using RM to make a backup of a SQL Server database.......................... 229

Attaching a consistent split image to SQL Server.................................... 233

TF/SIM and SQL Server VDI to create a clone ........................................ 234

Using SYMIOCTL and SQL Server VDI to create a clone ...................... 235

Using TF/SIM and SQL Server VDI to create a standby database ....... 236

Using SYMIOCTL and SQL Server VDI to create a standby database. 237

Attaching a cloned database with relocated data and log locations..... 238

SQL Query Analyzer executing sp_helpdb .............................................. 239

Mapping database logical components to new file locations ................ 239

Using TF/SIM and VDI to restore a database to a new location ........... 240

SQL Server Management Studio backup interface.................................. 248

DATABASE BACKUP Transact-SQL execution ...................................... 249

Recovery model options for a SQL Server database ............................... 252

Setting recovery model via Transact-SQL ................................................ 253

SYMRDB listing SQL database file locations............................................ 261

SYMCLONE query of a device group ....................................................... 262

Creating a TimeFinder/Mirror VDI or VSS backup of a SQL Server database ........ 265

Creating a VDI or VSS backup of a SQL Server database with TimeFinder/Clone ........ 266

TF/SIM VDI backup using TimeFinder/Clone ....................................... 268

TF/SIM remote VSS backup using TimeFinder/Clone.......................... 269

109 Creating a VDI backup of a SQL Server database with TimeFinder/Snap ..... 271

110 TF/SIM backup using TimeFinder/Snap ..... 272

111 Sample SYMIOCTL backup using TF/Mirror ..... 276

112 Using RM to make a TimeFinder replica of a SQL Server database ..... 277

113 SQL Enterprise Manager restore interface ..... 283

114 Additional Enterprise Manager restore options ..... 284

115 DATABASE RESTORE Transact-SQL execution ..... 286

116 SQL log from attaching a consistent split image to SQL Server ..... 291

117 TF/SIM restore process using TimeFinder/Mirror ..... 292

118 TF/SIM restore database with TimeFinder/Mirror and NORECOVERY ..... 295

119 SQL Management Studio view of a RESTORING database ..... 297

120 Restore of incremental transaction log with NORECOVERY ..... 298

121 TF/SIM restore with TimeFinder/Mirror and STANDBY ..... 300

122 SQL Management Studio view of a STANDBY (read-only) database ..... 301

123 Restore of incremental transaction log with STANDBY ..... 302

124 Execution of TimeFinder/Clone restore ..... 305

125 TF/SIM restore database with TimeFinder/Clone and NORECOVERY ..... 306

126 SQL Management Studio view of a RESTORING database ..... 307

127 Restore of incremental transaction log with NORECOVERY ..... 308

128 TF/SIM restore with TimeFinder/Mirror and STANDBY ..... 310

129 SQL Enterprise Manager view of a STANDBY (read-only) database ..... 311

130 Restore of incremental transaction log with STANDBY ..... 312

131 TF/SIM restore using TimeFinder/Snap ..... 315

132 TimeFinder/Snap listing restore session ..... 316

133 TF/SIM restore with TimeFinder/SNAP and NORECOVERY ..... 318

134 SQL Management Studio view of a RESTORING database ..... 319

135 Restore of incremental transaction log with NORECOVERY ..... 320

136 TF/SIM restore with TimeFinder/SNAP and STANDBY ..... 322

137 SQL Management Studio view of a STANDBY (read-only) database ..... 323

138 Restore of incremental transaction log with STANDBY ..... 324

139 Using SYMIOCTL for TimeFinder/Mirror restore of production database ..... 328

140 SYMIOCTL restore and NORECOVERY ..... 331

141 SQL Management Studio view of a RESTORING database ..... 332

142 Restore of incremental transaction log with NORECOVERY ..... 333

143 SYMIOCTL restore and STANDBY ..... 334

144 SQL Management Studio view of a STANDBY (read-only) database ..... 335

145 Restore of incremental transaction log with STANDBY ..... 336

146 SYMIOCTL restore and recovery mode ..... 337

147 Replication Manager/Local restore overview ..... 338

148 SRDF/S replication process ..... 365

149 Rolling disaster with multiple production Symmetrix arrays ..... 370

150 SRDF consistency group protection against rolling disaster ..... 371

151 SRDF/S with multiple source Symmetrix and ConGroup protection ..... 373

152 SRDF/Asynchronous replication internals ..... 375

153 SRDF/AR single-hop replication internals ..... 382

154 SRDF/AR multi-hop replication internals ..... 386

155 Log shipping configuration - destination dialog ..... 390

156 Log shipping configuration - restoration mode ..... 391

157 Log shipping implementation overview ..... 395

158 Restore of incremental transaction log with NORECOVERY ..... 396

159 Restore of incremental transaction log with STANDBY ..... 397

160 SQL Server Database Mirroring with SRDF/S ..... 399

161 SQL Server Database Mirroring flow overview – SYNC mode ..... 401

162 The performance stack ..... 412

163 Relationship between I/O size, operations per second, and throughput ..... 421

164 Performance Manager graph of write pending for single hypervolume ..... 427

165 Performance Manager graph of write pending for four member striped metavolume ..... 428

166 Comparison of write workload single hyper and striped metavolume ..... 429

167 RAID 5 (3+1) layout detail ..... 436

168 Anatomy of a RAID 5 write ..... 437

169 Disk performance factors ..... 447

Tables

1 Microsoft SQL Server system databases ..... 35

2 SYMCLI base commands ..... 57

3 TimeFinder device type summary ..... 87

4 Data object SRM commands ..... 91

5 Data object mapping commands ..... 91

6 File system SRM commands to examine file system mapping ..... 92

7 File system SRM command to examine logical volume mapping ..... 93

8 SRM statistics command ..... 93

9 Virtual Provisioning terminology ..... 129

10 Storage Class definition ..... 148

11 A comparison of database cloning technologies ..... 242

12 Database cloning requirements and solutions ..... 243

13 SQL Server data and log response guidelines ..... 414


Preface

As part of an effort to improve and enhance the performance and capabilities

of its product lines, EMC periodically releases revisions of its hardware and

software. Therefore, some functions described in this document may not be

supported by all versions of the software or hardware currently in use. For

the most up-to-date information on product features, refer to your product

release notes.

If a product does not function properly or does not function as described in

this document, please contact your EMC representative.

Note: This document was accurate as of the time of publication.

However, as information is added, new versions of this document may be

released to the EMC Powerlink website. Check the Powerlink website to

ensure that you are using the latest version of this document.

Purpose

This document describes how the EMC Symmetrix storage system

operates and interfaces with Microsoft SQL Server. The information

in this document is based on Microsoft SQL Server 2005, Microsoft

SQL Server 2008, and Microsoft SQL Server 2008 R2 on Symmetrix

storage systems running Solutions Enabler Version 7.x, and current

releases of Symmetrix Enginuity microcode.

This document provides an overview of Microsoft SQL Server 2005,

Microsoft SQL Server 2008, and Microsoft SQL Server 2008 R2 along

with a general description of EMC products and utilities that can be

used for SQL Server administration. EMC Symmetrix storage systems

and EMC software products can be used to manage Microsoft SQL

Server environments and to enhance database and storage

management, backup and recovery, and restart procedures. Using EMC

products and utilities to manage Microsoft SQL Server environments


can help reduce the database and storage administration workload,

reduce system CPU resource consumption, and reduce the time

required to clone, back up, recover, or restart Microsoft SQL Server

databases.

In this document the product names Microsoft SQL Server 2005,

Microsoft SQL Server 2008 and Microsoft SQL Server 2008 R2 may be

referred to as SQL Server. The acronym SQL refers to the Structured

Query Language, and should not be confused with the product

Microsoft SQL Server. The Structured Query Language (SQL) is used

within many relational database management systems (RDBMS) to

store, retrieve, and manipulate data. Microsoft provides a specific

implementation of the SQL language called Transact-SQL (T-SQL).

T-SQL is used throughout this document in the various examples.

Microsoft provides extensive documentation on SQL Server through

its website and through the SQL Server Books Online

documentation set. This should be the primary source of information

on SQL Server-specific commands and T-SQL syntax. Books Online

documentation can be installed during the SQL Server

installation process, or independently of the SQL

Server database engine. Updated versions of the Books Online

documentation are available for free download from the Microsoft

SQL Server website at http://www.microsoft.com/sql.

Audience

The intended audience is SQL Server systems administrators,

database administrators, and storage management personnel

responsible for managing SQL Server systems.

Conventions used in this document

EMC uses the following conventions for special notices.

Note: A note presents information that is important, but not hazard-related.

A caution contains information essential to avoid data loss or

damage to the system or equipment.

IMPORTANT

An important notice contains information essential to operation of

the software or hardware.


Typographical conventions

EMC uses the following type style conventions in this document:

Normal

Used in running (nonprocedural) text for:

• Names of interface elements (such as names of windows, dialog boxes, buttons,

fields, and menus)

• Names of resources, attributes, pools, Boolean expressions, buttons, DQL

statements, keywords, clauses, environment variables, functions, utilities

• URLs, pathnames, filenames, directory names, computer names, links,

groups, service keys, file systems, notifications

Bold

Used in running (nonprocedural) text for:

• Names of commands, daemons, options, programs, processes, services,

applications, utilities, kernels, notifications, system calls, man pages

Used in procedures for:

• Names of interface elements (such as names of windows, dialog boxes, buttons,

fields, and menus)

• What user specifically selects, clicks, presses, or types

Italic

Used in all text (including procedures) for:

• Full titles of publications referenced in text

• Emphasis (for example a new term)

• Variables

Courier

Used for:

• System output, such as an error message or script

• URLs, complete paths, filenames, prompts, and syntax when shown outside of

running text

Courier bold

Used for:

• Specific user input (such as commands)

Courier italic

Used in procedures for:

• Variables on command line

• User input variables

<>

Angle brackets enclose parameter or variable values supplied by the user

[]

Square brackets enclose optional values

|

Vertical bar indicates alternate selections - the bar means “or”

{}

Braces indicate content that you must specify (that is, x or y or z)

...

Ellipses indicate nonessential information omitted from the example

Your feedback on our TechBooks is important to us! We want our

books to be as helpful and relevant as possible, so please feel free to

send us your comments, opinions and thoughts on this or any other

TechBook:

[email protected]


1

Microsoft SQL Server

This chapter presents these topics:

◆ Microsoft SQL Server overview ..... 24

◆ Microsoft SQL Server instances and databases ..... 26

◆ Microsoft SQL Server logical components ..... 27

◆ Data access ..... 29

◆ Microsoft SQL Server physical components ..... 31

◆ Microsoft SQL Server system databases ..... 35

◆ Microsoft SQL Server instances ..... 36

◆ Microsoft Windows Clustering installations ..... 40

◆ Backup and recovery interfaces — VDI and VSS ..... 42

◆ Microsoft SQL Server and EMC integration ..... 43

◆ Advanced storage system functionality ..... 45

◆ Additional Microsoft SQL Server tools ..... 47

Microsoft SQL Server overview

Microsoft SQL Server is Microsoft Corporation’s premier relational

database management system (RDBMS). Developed over several

years, Microsoft SQL Server has grown to become one of the most

scalable and high-performing database systems currently available,

as shown by several industry-leading Transaction Processing Performance

Council (TPC) benchmark results.

The product's origins trace back to initial co-development work

between Microsoft and Sybase, which focused on developing a

database management system for the then-evolving OS/2

environment. Later, a variation of this initial release would become

available on Microsoft’s LAN Manager platform. In 1995, Microsoft

developed and released Microsoft SQL Server 6.0 on the Windows

platform. In the intervening years a number of subsequent releases

providing increased functionality, performance, and scalability have

been widely adopted.

As of February 2011, Microsoft SQL Server 2008 R2 is the latest in a

series of SQL Server product releases that specifically cater to the

Microsoft Windows platform. Currently, Microsoft SQL Server does

not support any platform other than Windows Server.

In 2003, Microsoft introduced support for the Itanium 64-bit version of

Windows Server. Coinciding with this Windows Server support,

Microsoft released an Itanium 64-bit (IA-64) version of

Microsoft SQL Server 2000. In April 2010, Microsoft announced

that Windows Server 2008 R2 would be the last version of Windows

Server to support Itanium-based systems. As a result of this

position, no future versions of Microsoft SQL Server are expected to

be developed for Itanium-based implementations. Ongoing support

for existing deployments will be maintained in accordance with

Microsoft's product lifecycle guidelines.

Microsoft SQL Server introduced support for native 64-bit

EM64T/AMD64 environments with Microsoft

SQL Server 2005. The EM64T/AMD64 environment is generically

referred to as x64, and has become the main platform for

Windows Server 2008. Indeed, as of Windows Server 2008 R2,

32-bit architectures are no longer supported as target platforms.

Consequently, future SQL Server releases are expected to be available

only on the x64 platform.


Further information and support may be found at the appropriate

Microsoft product and support website locations.


Microsoft SQL Server instances and databases

A Microsoft SQL Server instance is defined as a discrete set of files

and executables that operate autonomously to provide database services.

SQL Server supports multiple instances operating independently on

a single operating system installation. Within any given SQL Server instance there

are typically a number of independent user databases. It is important

to understand this relationship of database-to-SQL Server instance,

and the capability to support multiple SQL Server instances on the

server.

A single database cannot physically exist across multiple SQL Server

instances, but can be logically represented across multiple instances

and/or servers. This logical extension of a single database across

multiple SQL Server instances is referred to as a Federated Database

environment. This style of architecture utilizes distributed

partitioned views to create a single logical database entity. However,

while the database appears to be a single entity, each member server

in the federated environment maintains a discrete set of data files and

transaction logs for its database instance.
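A distributed partitioned view relies on each member table carrying a CHECK constraint on a key range, so that a request for a given key can be resolved against the one member that owns it. The Python sketch below models only that routing idea, under stated assumptions: the server names, the key ranges, and the `route` helper are hypothetical, and in a real federation SQL Server's query processor performs this resolution, not application code.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class Member:
    """One member server of a hypothetical federation; it owns keys in [low, high)."""
    server: str
    low: int
    high: int


# Hypothetical federation: each member holds one key range, analogous to the
# CHECK constraints on the member tables behind a distributed partitioned view.
MEMBERS = [
    Member("SQLNODE1", 0, 1_000_000),
    Member("SQLNODE2", 1_000_000, 2_000_000),
    Member("SQLNODE3", 2_000_000, 3_000_000),
]
_LOWS = [m.low for m in MEMBERS]  # sorted lower bounds used for binary search


def route(key: int) -> str:
    """Return the member server whose key range contains `key`."""
    i = bisect_right(_LOWS, key) - 1
    if i < 0 or key >= MEMBERS[i].high:
        raise KeyError(f"key {key} is outside every member range")
    return MEMBERS[i].server


print(route(42))         # SQLNODE1
print(route(1_500_000))  # SQLNODE2
```

Each member still keeps its own data files and transaction log; the view only makes the separate member tables look like one logical table.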


Microsoft SQL Server logical components

Microsoft SQL Server may be divided into two main logical

components and a number of subsidiary subsystems:

◆

Relational engine — Responsible for verifying SQL statements,

and selecting the most efficient means of retrieving the data

requested

◆

Storage engine — Responsible for executing physical I/O

requests, which return the rows requested by the relational

engine

Together, these components create a complete relational database

environment providing continuous availability and data integrity.

Figure 1 on page 27 provides an overview of the Microsoft SQL

Server architecture.

[Diagram: Network Libraries and User Mode Scheduler; Relational Engine (T-SQL Parser, T-SQL Compiler, Optimizer, other subsystems); other interfaces and OLE DB Interface; Storage Engine (Transaction Manager, Logging and Recovery, File and Device Manager, Lock Manager, Buffer and Log Manager, Backup/Restore, VDI, other subsystems); I/O Manager and Windows Subsystem]

Figure 1

Microsoft SQL Server architecture overview


A SQL Server database

A SQL Server database exists as a collection of physical objects (data

files and transaction log) that contain the data in the form of tables.

As previously mentioned, it is possible to create many databases

within a SQL Server instance. Typically, within any SQL Server

instance, there are by default four system databases and one or more

user databases. Each database has a defined owner who may grant or

revoke access permissions to other users.

Typical SQL Server logical database objects are:

◆

Tables

◆

Indexes

◆

Views

The database owner is associated with a user within a database

instance. All permissions and ownership of objects in the database

are controlled by the owner’s user account. Typically, the owner of a

SQL Server database is referred to as user dbo. This means, for

example, that user xyz, the owner of database myDB_1, and user abc,

the owner of database myDB_2, are each referred to as the dbo user

within their respective databases.

Microsoft SQL Server 2005 and 2008 include the ability to use

Integrated Windows Security with the Windows Active Directory.

This allows access and ownership to be defined to Windows login

accounts stored within the Active Directory, rather than the previous

SQL Server internal user accounts. SQL Server can use SQL

Server authentication, integrated Windows authentication, or a

combination of both (mixed mode).

Note: It is the responsibility of the various programs and utilities to utilize

the appropriate authentication methods. Applications may fail to function

correctly if they do not support the installed authentication method.

Implemented authentication methods can be changed through the SQL

Server properties page.


Data access

Microsoft SQL Server uses the page as the basic unit of physical

data storage. A SQL Server data page is 8 KB (8192 bytes).

Data pages are logically aggregated to form tables. Each table is a data

structure defined with a table name and a set of columns and rows,

with data occupying each cell formed by a row/column intersection.

A row is a collection of column information corresponding to a single

record.

In SQL Server, an index is an optional structure associated with a

table which may increase data retrieval performance. Indexes are

created on one or more columns of a table. Indexes are useful when

an application often needs to make queries to a table for a range of

rows or a specific row. SQL Server provides two forms of index:

clustered and nonclustered. There can be only one

clustered index for a given table, because the clustered index defines the

order in which the data is stored in the table. Nonclustered indexes

are logically and physically independent of the table data and can

therefore be created or dropped at any time. If no clustered index is

defined for a table, the table is referred to as a heap.
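The difference between the two index forms can be illustrated with a deliberately simplified model. In this Python sketch, which uses sorted lists rather than the B-tree structures SQL Server actually maintains, rows are stored in clustered-key order (so only one such ordering can exist), while each nonclustered index is a separate sorted list of (value, clustered key) pairs. The `Table` class, its methods, and the sample columns are all hypothetical.

```python
from bisect import bisect_left, insort


class Table:
    """Toy model of clustered vs. nonclustered indexes (hypothetical, list-based).

    Rows are stored in clustered-key order, so a table has only one clustered
    ordering. Each nonclustered index is a separate sorted list of
    (indexed value, clustered key) pairs that can be created or dropped
    without moving any rows. A heap (no clustered index) is not modeled here.
    """

    def __init__(self, clustered_key: str):
        self.clustered_key = clustered_key
        self.keys = []          # clustered key values, kept sorted
        self.rows = []          # row dicts, stored in the same order as self.keys
        self.nonclustered = {}  # index name -> (column, sorted [(value, clustered key)])

    def insert(self, row: dict) -> None:
        ck = row[self.clustered_key]
        i = bisect_left(self.keys, ck)
        if i < len(self.keys) and self.keys[i] == ck:
            raise ValueError(f"duplicate clustered key {ck!r}")
        self.keys.insert(i, ck)
        self.rows.insert(i, row)
        for column, entries in self.nonclustered.values():
            insort(entries, (row[column], ck))  # keep every nonclustered index current

    def create_index(self, name: str, column: str) -> None:
        # Built from the existing rows; the stored row order does not change.
        entries = sorted((row[column], ck) for ck, row in zip(self.keys, self.rows))
        self.nonclustered[name] = (column, entries)

    def drop_index(self, name: str) -> None:
        del self.nonclustered[name]

    def seek(self, ck):
        """Clustered-index seek: binary search on the stored row order."""
        i = bisect_left(self.keys, ck)
        return self.rows[i] if i < len(self.keys) and self.keys[i] == ck else None

    def seek_nonclustered(self, name: str, value) -> list:
        """Find matching index entries, then look each row up by clustered key."""
        column, entries = self.nonclustered[name]
        i = bisect_left(entries, (value,))
        found = []
        while i < len(entries) and entries[i][0] == value:
            found.append(self.seek(entries[i][1]))
            i += 1
        return found


t = Table("order_id")
for row in ({"order_id": 3, "customer": "B"},
            {"order_id": 1, "customer": "A"},
            {"order_id": 2, "customer": "B"}):
    t.insert(row)
t.create_index("ix_customer", "customer")
print(t.keys)  # [1, 2, 3]
print([r["order_id"] for r in t.seek_nonclustered("ix_customer", "B")])  # [2, 3]
```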

A view is best considered a virtual table. The view's rows do not

persist as an object within the database; only the Transact-SQL

statement that defines the view is stored, and the view's result is

produced each time the view is referenced. This can be useful for

presenting a large virtual table that joins data from

different tables.
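The difference between storing a view's definition and storing its rows can be sketched in Python. In this illustration, hypothetical in-memory lists stand in for tables, and the function body plays the role of the stored view definition: it is re-evaluated on every reference, so changes to the base tables appear without any refresh step.

```python
# Hypothetical base "tables" held as lists of dicts.
customers = [{"id": 1, "name": "Contoso"}, {"id": 2, "name": "Fabrikam"}]
orders = [{"id": 10, "customer_id": 1, "total": 250}]


def customer_orders():
    """Stand-in for a stored view definition: a join evaluated on each reference."""
    names = {c["id"]: c["name"] for c in customers}
    return [{"customer": names[o["customer_id"]], "total": o["total"]} for o in orders]


print(customer_orders())  # [{'customer': 'Contoso', 'total': 250}]
orders.append({"id": 11, "customer_id": 2, "total": 75})
print(len(customer_orders()))  # 2, the new base-table row appears immediately
```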

A filegroup is a named storage pool that aggregates the physical

database data files. As shown in Figure 2 on page 30, a filegroup

holds one or more table and index structures.

The data is stored logically in filegroups and physically in data files

that are associated with the corresponding filegroups.


[Diagram: a database instance containing the MASTER database (PRIMARY filegroup with Table A and Table B, data file master.mdf, log file mastlog.ldf) and a user database (FileGroup1 and FileGroup2 holding tables owned by Owner1 and Owner2, data files UserData1.mdf, UserData2.ndf, and UserData3.ndf, log file Userlog.ldf)]

Figure 2

SQL Server database architecture

Note: Every SQL Server instance contains four system databases named

master, tempdb, msdb, and model, which are created automatically

when the SQL Server instance is installed. The master database contains the data

dictionary tables and views for the entire SQL Server instance in addition to

security information relating to the user databases defined within the

instance.


Microsoft SQL Server physical components

Data files

SQL Server maintains its data and index information in data files.

Figure 3 on page 32 represents the physical layout of a single data file

object, and shows the relationship of pages and extents. A page is a

logical storage structure that is the smallest unit of storage and I/O

used by the SQL Server database. The data page size for both SQL

Server 2005 and 2008 is 8 KB (8192 bytes).

When data is added to a given table (or index), space must be

allocated for the new data. SQL Server allocates additional space

within the relevant data file in a unit size of eight contiguous data

pages. SQL Server refers to this eight 8 KB page allocation as an

extent. Therefore the extent size for SQL Server is 64 KB (65536 bytes).

Extents are either mixed or uniform. Mixed extents are shared, and

may contain pages that belong to multiple tables or indexes within

the database; uniform extents contain only data or index pages for a

single table or index. A new table or index is initially allocated pages

from mixed extents; once it has grown to eight pages, subsequent

allocations use uniform extents. Typically, therefore, as data is added

to a table within a database, only uniform extents are used to store

the relevant index or data pages.
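The page and extent sizes above reduce to simple arithmetic. This Python sketch assumes, for illustration only, that the full 8 KB of every page holds data (it ignores the page header, row overhead, and mixed extents), so its results are a lower bound rather than a sizing estimate. The function name is hypothetical.

```python
PAGE_BYTES = 8 * 1024                         # 8 KB data page (8192 bytes)
PAGES_PER_EXTENT = 8                          # eight contiguous pages per extent
EXTENT_BYTES = PAGE_BYTES * PAGES_PER_EXTENT  # 65536 bytes (64 KB)


def pages_and_extents(data_bytes: int) -> tuple[int, int]:
    """Return (pages, uniform extents) needed to hold `data_bytes`.

    Simplification: treats every byte of each page as usable and ignores
    page headers, row overhead, and mixed-extent allocation.
    """
    pages = -(-data_bytes // PAGE_BYTES)        # ceiling division
    extents = -(-pages // PAGES_PER_EXTENT)     # round up to whole extents
    return pages, extents


print(EXTENT_BYTES)                    # 65536
print(pages_and_extents(1024 * 1024))  # (128, 16)
print(pages_and_extents(100_000))      # (13, 2)
```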

As shown in Figure 2 on page 30, several data files may be

aggregated into filegroups. This makes it possible to place specific

data tables and/or indexes in a particular

filegroup. There is always at least one filegroup, the PRIMARY

filegroup. As data or index information is added to a table or index,

extents are allocated from the data files which represent the filegroup

wherein the table or index exists.

The first file created in the first (PRIMARY) filegroup for the database

is unique in that it contains additional information (metadata)

regarding the structure of the database itself. This file is referred to as

the primary file, and typically is assigned the .mdf extension to

signify this functionality. Subsequent data files are assigned the .ndf

extension and log files are assigned the .ldf extension. File

extensions are not significant to SQL Server itself; they are

conventions that make the function of each file more

obvious to the user or administrator.


[Diagram: a data file (DATAFILE) made up of 8 KB pages, including the file header, Page Free Space, Global Allocation Map (GAM), Shared GAM, and IAM pages, with eight pages grouped into one 64 KB extent]

Figure 3

SQL Server internal data file logical structure

It is possible to allocate specific data or index information to a named

(other than the default) filegroup, such that the named filegroup will

only contain the given data or index information. This can be used to

isolate data or index information that has distinct requirements,

such as a different I/O profile that calls for a specific

storage location. However, this is not generally required in the

majority of SQL Server deployments, and Microsoft fully supports

mixing both data and index information within the filegroups—this

is the default behavior.

When allocating new data or index storage space, SQL Server will use

extents allocated from the filegroup in a proportional fill fashion,

such that data and index information are evenly distributed amongst

the files within the filegroup as data is added. This functionality

ensures that there is a more even distribution of data and index

information and therefore I/O load. Proportional fill ensures that

over time, all the data files have storage allocated based on the

available free space in the data file. As such, if one data file within a

filegroup has twice as much free space as other data files within the

same filegroup, twice as many extent allocations will be made from

the larger data file. It is therefore optimal to equally size all data files

within a filegroup, as this results in a more even round-robin

allocation of space across all the data files.
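The proportional fill behavior can be illustrated with a small simulation. This Python sketch is a simplified model, not SQL Server's internal algorithm: it assumes each file's weight is fixed at its free space when allocation starts, and it uses smooth weighted round-robin to choose the file for each new extent. The function name and parameters are hypothetical.

```python
def proportional_fill(free_extents: list[int], allocations: int) -> list[int]:
    """Return how many of `allocations` new extents each data file receives.

    Simplified model: each file's weight is its free space at the start, and
    files are chosen by smooth weighted round-robin, so a file with twice the
    free space receives twice as many extents.
    """
    total = sum(free_extents)
    if allocations > total:
        raise ValueError("not enough free extents in the filegroup")
    current = [0] * len(free_extents)    # running credit per file
    allocated = [0] * len(free_extents)
    for _ in range(allocations):
        for i, weight in enumerate(free_extents):
            current[i] += weight         # each file earns credit equal to its free space
        chosen = max(range(len(current)), key=current.__getitem__)  # first file with most credit
        current[chosen] -= total         # charge the chosen file one full round
        allocated[chosen] += 1
    return allocated


print(proportional_fill([200, 100, 100], 40))  # [20, 10, 10]
print(proportional_fill([100, 100, 100], 6))   # [2, 2, 2]
```

With equally sized files, the weights are equal and the model reduces to plain round-robin, which is why equal sizing gives the most even distribution of extents and I/O.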


Transaction log

In addition to the data and index storage areas, which are the data

files, Microsoft SQL Server also creates and maintains a transaction

log for each database. The transaction log maintains records relating

to changes within the data files. It is possible to define multiple

transaction log files for a given SQL Server database. However, only one

transaction log file is actively receiving writes at any given time. SQL

Server takes a serial approach to logging within the transaction log,

such that the first log file is fully written to before switching to

another transaction log file.

The transaction log does not use the extent-based allocation used by

the data files. Each physical transaction log file is

logically divided into virtual log files (VLFs) based on internal SQL Server

algorithms and the initial size of the transaction log. Once

transactional activity begins, transactional information is recorded

into a virtual log within the physical log file. A logical sequence

number (LSN) is assigned to each transaction log record. Additional

information is also recorded in the transaction log record, including

the transaction ID of the transaction to which this record belongs, as

well as a pointer to any preceding log record for the transaction.
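The record layout described above can be sketched as a small data structure. In this hypothetical Python model (integer LSNs, not SQL Server's on-disk log format), each record carries its LSN, its transaction ID, and a pointer to the previous record of the same transaction, which lets all of one transaction's records be found by walking backward from its latest record.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class LogRecord:
    lsn: int                  # logical sequence number, increasing in log order
    txn_id: int               # transaction that wrote this record
    prev_lsn: Optional[int]   # previous record of the same transaction, or None
    operation: str


class TransactionLog:
    """Toy append-only log: assigns LSNs and chains records per transaction."""

    def __init__(self):
        self.records = {}             # lsn -> LogRecord
        self._next_lsn = 1
        self._last_lsn_by_txn = {}    # txn_id -> LSN of its most recent record

    def append(self, txn_id: int, operation: str) -> LogRecord:
        rec = LogRecord(self._next_lsn, txn_id,
                        self._last_lsn_by_txn.get(txn_id), operation)
        self.records[rec.lsn] = rec
        self._last_lsn_by_txn[txn_id] = rec.lsn
        self._next_lsn += 1
        return rec

    def chain(self, txn_id: int) -> list[int]:
        """Walk a transaction's records newest-first via the prev_lsn pointers."""
        out = []
        lsn = self._last_lsn_by_txn.get(txn_id)
        while lsn is not None:
            out.append(lsn)
            lsn = self.records[lsn].prev_lsn
        return out


log = TransactionLog()
log.append(1, "BEGIN")
log.append(2, "BEGIN")
log.append(1, "UPDATE page 7")
log.append(2, "UPDATE page 9")
log.append(1, "UPDATE page 8")
print(log.chain(1))  # [5, 3, 1]
```

Records from different transactions interleave in LSN order, while the backward pointers keep each transaction's own history easy to follow, which is what a rollback needs.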

The transaction log is also considered to have an active log

component. This active log area is the sequence of log records (which

may span multiple virtual logs) relating to transactions in process.

This is required to facilitate any recovery operations on pending

transactions at the database level. Virtual logs that contain any

part of the active log cannot be marked for reuse until they no

longer hold active log records.
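The reuse rule for virtual logs can be sketched as follows. In this hypothetical Python model, each virtual log is a range of integer LSNs, and the active log is assumed to start at the first record of the oldest open transaction. SQL Server also considers other conditions, such as checkpoints, that this sketch deliberately ignores.

```python
def reusable_vlfs(vlf_ranges: list[tuple[int, int]],
                  open_txn_first_lsn: dict[int, int],
                  next_lsn: int) -> list[int]:
    """Return the indexes of virtual logs whose records all precede the active log.

    vlf_ranges: (first_lsn, last_lsn) for each virtual log, in log order.
    open_txn_first_lsn: transaction ID -> LSN of that transaction's first record.
    next_lsn: LSN the next log record would receive.
    """
    # Simplification: the active log starts at the oldest open transaction,
    # or at the end of the log when no transaction is open.
    active_start = min(open_txn_first_lsn.values(), default=next_lsn)
    return [i for i, (_first, last) in enumerate(vlf_ranges) if last < active_start]


# Four hypothetical virtual logs covering LSNs 1-400; transaction 7 began at LSN 250.
vlfs = [(1, 100), (101, 200), (201, 300), (301, 400)]
print(reusable_vlfs(vlfs, {7: 250}, next_lsn=401))  # [0, 1]
print(reusable_vlfs(vlfs, {}, next_lsn=401))        # [0, 1, 2, 3]
```

A single long-running transaction (for example, one that began at LSN 50) keeps every later virtual log in the active portion, which is why such transactions can prevent log space from being reused.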

The information recorded within the transaction log records is used

by Microsoft SQL Server to resolve inconsistencies within the

database either in an operational state, or subsequent to an

unexpected server failure. In general these are:

◆

rollback operations (when data must be returned to the state it was

in before a transaction executed).

◆

roll forward changes into the data files (when a transaction has

completed successfully and the data files have not yet been

updated).
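The two recovery operations above can be shown with a toy two-pass model. In this Python sketch (a hypothetical record format, not SQL Server's log format or its actual recovery code), every logged update is first reapplied in log order, and then updates belonging to transactions with no COMMIT record are undone in reverse order using their before-images.

```python
def recover(log_records, pages):
    """Toy two-pass recovery.

    log_records: (txn_id, kind, page, before, after) tuples in log order,
                 where kind is "UPDATE" or "COMMIT" (COMMIT rows carry None).
    pages: page -> value as found in the data files after the crash.
    """
    committed = {txn for txn, kind, *_ in log_records if kind == "COMMIT"}
    # Roll forward: reapply every logged update in log order.
    for txn, kind, page, before, after in log_records:
        if kind == "UPDATE":
            pages[page] = after
    # Roll back: undo updates of uncommitted transactions, newest first.
    for txn, kind, page, before, after in reversed(log_records):
        if kind == "UPDATE" and txn not in committed:
            pages[page] = before
    return pages


log_records = [
    (1, "UPDATE", "P1", "a0", "a1"),
    (2, "UPDATE", "P2", "b0", "b1"),
    (1, "COMMIT", None, None, None),
    # transaction 2 never committed before the crash
]
# P1's committed change never reached disk; P2's uncommitted change did.
recovered = recover(log_records, {"P1": "a0", "P2": "b1"})
print(recovered)  # {'P1': 'a1', 'P2': 'b0'}
```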

Microsoft SQL Server maintains relational integrity by using in-memory structures together with the data recorded in the transaction log and the data in the data files. The transaction log always contains the changes made to data pages by committed transactions. It may also contain changes that belong to transactions that have not yet been committed.

In the event of a server crash, SQL Server uses the information recorded in the transaction log to return the database to a transactionally consistent point in time. To ensure that the log always reflects the current state of the data, the transaction log is written synchronously, bypassing all file system buffering. Once updates are recorded in the transaction log, the corresponding updates to the data files may occur asynchronously. As a result, the data files and the transaction log are not synchronized at any given moment, but the log is always ahead of the data files.
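The ordering described here is the write-ahead logging rule: a changed data page may only be written to the data files after the log records describing that change are on disk. The toy Python model below illustrates the rule. The class and its members are hypothetical, log records are reduced to their LSNs, and details such as the synchronous, unbuffered log I/O are not modeled.

```python
from collections import deque


class WalBufferManager:
    """Toy model of the write-ahead logging rule (hypothetical class)."""

    def __init__(self):
        self.log_tail = deque()   # LSNs of log records still in memory, oldest first
        self.flushed_lsn = 0      # highest LSN already hardened to disk
        self.next_lsn = 1
        self.page_lsn = {}        # page -> LSN of the latest change to it
        self.buffer = {}          # page -> current value in memory
        self.data_file = {}       # page -> value written to the data file
        self.events = []          # physical writes, in the order they happen

    def modify(self, page, value):
        # Every change is described by a new log record.
        lsn = self.next_lsn
        self.next_lsn += 1
        self.log_tail.append(lsn)
        self.buffer[page] = value
        self.page_lsn[page] = lsn

    def flush_log(self, up_to_lsn):
        # Harden log records, in order, up to and including up_to_lsn.
        while self.log_tail and self.log_tail[0] <= up_to_lsn:
            self.flushed_lsn = self.log_tail.popleft()
            self.events.append(f"log {self.flushed_lsn}")

    def write_page(self, page):
        # WAL rule: the log must be on disk up to this page's latest change
        # before the page itself may be written to the data file.
        if self.page_lsn[page] > self.flushed_lsn:
            self.flush_log(self.page_lsn[page])
        self.data_file[page] = self.buffer[page]
        self.events.append(f"page {page}")


mgr = WalBufferManager()
mgr.modify("P1", "v1")
mgr.modify("P2", "v2")
mgr.write_page("P2")
print(mgr.events)  # ['log 1', 'log 2', 'page P2']
```

After this sequence the change to P1 is in the log but not yet in the data file, which is the "log ahead of the data files" state described above.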

SQL Server creates a point of consistency when a checkpoint occurs. A checkpoint forces updated (or dirty) pages out of the in-memory data buffers and onto disk. When a checkpoint completes, the transaction log and the data files are consistent with each other. The log is still required to identify the state of data pages belonging to transactions that have not yet committed, and that could therefore be rolled back if they abort.
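The effect of a checkpoint, and the reason the log is still needed afterwards, can be shown with a small Python model. It is a sketch under simplifying assumptions: the class is hypothetical, the log flush that must precede page writes is omitted, and each page is tracked only by the last transaction that changed it.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class BufferPool:
    """Hypothetical toy buffer pool used to illustrate a checkpoint."""
    data_file: Dict[str, str]                                  # page -> value on disk
    memory: Dict[str, str] = field(default_factory=dict)       # page -> value in memory
    dirty: Set[str] = field(default_factory=set)               # changed, not yet written
    last_writer: Dict[str, int] = field(default_factory=dict)  # page -> transaction ID

    def update(self, xact_id: int, page: str, value: str) -> None:
        self.memory[page] = value
        self.dirty.add(page)
        self.last_writer[page] = xact_id

    def checkpoint(self) -> None:
        # Write every dirty page to disk, whether or not its change is committed.
        for page in sorted(self.dirty):
            self.data_file[page] = self.memory[page]
        self.dirty.clear()

    def uncommitted_on_disk(self, committed: Set[int]) -> List[str]:
        # Pages whose on-disk value came from an uncommitted transaction;
        # only the log can roll these back if that transaction aborts.
        return sorted(p for p, x in self.last_writer.items()
                      if x not in committed and p not in self.dirty)


pool = BufferPool(data_file={"P1": "a", "P2": "x"})
pool.update(xact_id=10, page="P1", value="b")  # transaction 10 has committed
pool.update(xact_id=20, page="P2", value="y")  # transaction 20 is still open
pool.checkpoint()
print(pool.data_file, pool.dirty)  # {'P1': 'b', 'P2': 'y'} set()
print(pool.uncommitted_on_disk(committed={10}))  # ['P2']
```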


Microsoft SQL Server system databases

Microsoft SQL Server maintains a number of system databases that are used internally by various components and functions. Table 1 on page 35 lists these databases and describes the function of each.

Table 1    Microsoft SQL Server system databases

Database Name    Function
MASTER           Records system information on all user databases, login accounts, security information, etc.
MODEL            Default blank template for new databases.
TEMPDB           Used to maintain temporary sort areas, temporary stored procedures, etc. This database is re-created each time the SQL Server instance is started.
MSDB             Used for recording backup/restore events, and for scheduling and alerts.

Each system database comprises a data file and a transaction log. In most environments there is minimal activity against the system databases, so their placement is not performance critical. However, operational requirements may dictate that these databases be placed on SAN devices. For example, Microsoft Failover Clustering requires that the system databases be located on shared SAN storage to facilitate restart operations.

Of all the system databases, TEMPDB has specific functionality that differentiates it from the others. The TEMPDB data file(s) and transaction log(s) may need to be carefully placed, as they can receive a significant I/O load. By default, these databases are located on the %SYSTEMDRIVE% drive. Placement and requirements for these databases are discussed in Chapter 8, “Microsoft SQL Server Database Layouts on EMC Symmetrix.”


Microsoft SQL Server instances

Multiple separate instances of the SQL Server software can be installed on a single Windows Server. SQL Server can be installed either as a default instance or as a named instance. Each instance can be considered a completely autonomous SQL Server environment: instances can be started and shut down independently, although an event such as a Windows Server shutdown affects all instances.

Each instance is installed with its own set of system databases, as previously described, and its own set of executables for the server components. It is therefore possible to implement completely separate security mechanisms for each instance, and even to run each instance at a different SQL Server version or Service Pack level.

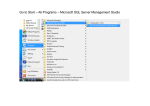

In Figure 4 on page 37, two instances have been installed on a single Windows Server, resulting in two sets of installed executables. The default instance is located in the MSSQL10.MSSQLSERVER directory; the second, named instance was called DEV, and is therefore located in MSSQL10.DEV.


Figure 4    Multiple SQL Server instance installation directories

Because multiple instances can execute on a given Windows Server, Microsoft extended its client connectivity so that a connection can specify the required SQL Server instance. The default instance can be referenced simply by using the Windows Server hostname as the server name. For a named instance, the server name must also include the instance name, in the form hostname\instancename.
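The naming rule can be expressed as a small Python helper that splits a server name into its host and optional instance parts. The helper and its return type are hypothetical, written only to illustrate the hostname and hostname\instancename forms.

```python
from typing import NamedTuple, Optional


class ServerTarget(NamedTuple):
    host: str
    instance: Optional[str]  # None means the default instance


def parse_server_name(server: str) -> ServerTarget:
    """Split a 'host' or 'host\\instance' server name into its parts."""
    host, sep, instance = server.partition("\\")
    if not host:
        raise ValueError(f"missing host name in {server!r}")
    if sep and not instance:
        raise ValueError(f"missing instance name in {server!r}")
    return ServerTarget(host, instance if sep else None)


print(parse_server_name("LICOC211"))       # ServerTarget(host='LICOC211', instance=None)
print(parse_server_name("LICOC211\\DEV"))  # ServerTarget(host='LICOC211', instance='DEV')
```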

All Microsoft management tools support and display multiple instances installed on a server. In Figure 5 on page 38, Enterprise Manager displays the two SQL Server instances created on the LICOC211 server.


Figure 5    SQL Server Enterprise Manager with multiple instances

For example, on the sample Windows Server shown in Figure 6 on page 39, the two instances can be reached from the osql command line interface as follows, with each connection specifying the relevant instance. The server is named LICOC211. The first connection is made to the default instance by providing only the server name; in the second, the named instance DEV is appended to the server name. In both cases, a statement is executed to return the name of the server instance.


Figure 6    Connecting to default and named instances
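The two connections can be sketched as osql argument lists built in Python. The exact commands shown in Figure 6 are not reproduced here. The use of -E (a trusted, Windows-authenticated connection), -S (the server name) and -Q (run a query and exit) is an assumption about how such a connection might be made, and SELECT @@SERVERNAME is assumed as the statement that returns the instance name. The helper function is hypothetical.

```python
from typing import List, Optional


def osql_command(host: str, instance: Optional[str] = None,
                 query: str = "SELECT @@SERVERNAME") -> List[str]:
    """Build an osql argument list for a default or named instance.

    Assumed flags: -E trusted connection, -S server name,
    -Q run the query and then exit.
    """
    server = host if instance is None else f"{host}\\{instance}"
    return ["osql", "-E", "-S", server, "-Q", query]


for argv in (osql_command("LICOC211"), osql_command("LICOC211", "DEV")):
    print(" ".join(argv[:4]))
# osql -E -S LICOC211
# osql -E -S LICOC211\DEV
```

On a Windows host with osql installed, such a list could be passed to subprocess.run; the example itself only builds and prints the commands.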

All EMC products that interact with Microsoft SQL Server support multiple SQL Server instances on a given Windows Server.


Microsoft Windows Clustering installations

SQL Server supports installation in Microsoft Windows Cluster deployments, including both Windows Server 2003 Cluster Service (MSCS) and Windows Server 2008 Failover Cluster environments. When SQL Server is installed into a cluster configuration, the installation locations differ from those of a standard local installation.

Note: As of Windows Server 2008, the clustering functionality was renamed Windows Failover Clustering. For the purposes of this document, MSCS and Failover Clustering are considered synonymous, except where indicated.

All data and transaction log files, including those for the system databases, must be located on disk devices that the cluster views as shared disks. This ensures that every node within the cluster can access all of the databases within the instance. By default, the SQL Server instance implements a resource dependency on the shared disk resources installed within its resource group. This dependency must be maintained: any modification to the database structure that introduces an additional disk resource, such as adding a data file on a new shared LUN, must be reflected in the resource group by adding the new disk as a resource within the group and then adding it as a dependency of SQL Server.
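A simple consistency check for this rule is to confirm that every disk holding a data or log file is a dependency of the SQL Server resource. The Python sketch below assumes a hypothetical inventory: drive letters mapped to cluster disk resource names, plus the current dependency list of the SQL Server resource. It does not query the cluster, and volumes mounted without drive letters are not handled.

```python
from pathlib import PureWindowsPath
from typing import Dict, Iterable, Set


def missing_disk_dependencies(db_files: Iterable[str],
                              disk_for_drive: Dict[str, str],
                              sql_dependencies: Set[str]) -> Set[str]:
    """Return disk resources that hold database files but are not
    dependencies of the SQL Server resource (hypothetical helper)."""
    needed = set()
    for path in db_files:
        drive = PureWindowsPath(path).drive.upper()  # e.g. "S:"
        if drive not in disk_for_drive:
            # The file is not on a shared disk resource in the group at all.
            raise ValueError(f"{path} is not on a clustered disk")
        needed.add(disk_for_drive[drive])
    return needed - sql_dependencies


# Hypothetical layout: a new data file was added on a new shared LUN (U:).
files = [r"S:\SQLData\sales.mdf", r"T:\SQLLogs\sales_log.ldf",
         r"U:\SQLData\sales2.ndf"]
disks = {"S:": "Disk S:", "T:": "Disk T:", "U:": "Disk U:"}
sql_deps = {"Disk S:", "Disk T:"}
print(missing_disk_dependencies(files, disks, sql_deps))  # {'Disk U:'}
```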

Solutions built using EMC’s geographically dispersed cluster product, SRDF®/CE for MSCS, implement identical functionality and restrictions. Figure 7 on page 41 shows an SRDF/CE for MSCS implementation. The dependencies described above must still be maintained, but they now additionally include a dependency of the disks on the SRDF/CE for MSCS resource. In this manner, the state of the RDF devices is managed appropriately before upper-level services attempt to start. In many ways, SRDF/CE for MSCS is transparent to a standard MSCS installation. Figure 7 on page 41 details an MSCS resource group; in this case it is actually an SRDF/CE for MSCS resource group, and the SRDF/CE for MSCS resource can be seen as a group member.


Figure 7    SRDF/CE for MSCS resource group for a SQL Server instance
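The resulting chain of dependencies determines the order in which resources come online: the SRDF/CE for MSCS resource first, then the disks, then SQL Server. The Python sketch below derives that order with a topological sort from the standard library graphlib module (Python 3.9 or later). The resource names are hypothetical, and the cluster service computes the real order itself; the sketch only illustrates the dependency relationships.

```python
from graphlib import TopologicalSorter

# Hypothetical resource names; each resource maps to the set of
# resources it depends on (which must be online before it starts).
dependencies = {
    "SQL Server": {"Disk S:", "Disk T:"},
    "Disk S:": {"SRDF/CE for MSCS"},
    "Disk T:": {"SRDF/CE for MSCS"},
    "SRDF/CE for MSCS": set(),
}

# static_order() yields every resource after all of its dependencies.
start_order = list(TopologicalSorter(dependencies).static_order())
# Taking resources offline follows the reverse order.
stop_order = start_order[::-1]

print(start_order[0], "->", start_order[-1])  # SRDF/CE for MSCS -> SQL Server
```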

Unlike the data files and logs for all the databases within the SQL Server instance, which reside on shared disks, the SQL Server executables are installed locally on each node within the cluster, in a layout similar to that described in the