Chapter 8, 10 - School of Mathematics and Statistics

Survey

Document related concepts

Transcript

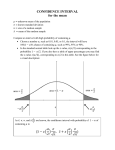

CHAPTER 8, 10

Introduction

Statistical inference is concerned with making decisions or predictions about parameters, the numerical descriptive measures that characterize a population.

Types of Inference
• Estimation:
  – Estimating or predicting the value of the parameter
  – “What are the most likely values of µ or p?”
• Hypothesis Testing:
  – Deciding about the value of a parameter based on some preconceived ideas
  – “Did the sample come from a population with µ = 5 or p = 0.22?”

An estimator is a rule, usually a formula, that tells you how to calculate the estimate based on the sample.
• Point Estimation: A single number is calculated to estimate the parameter.
• Interval Estimation: Two numbers are calculated to create an interval within which the parameter is expected to lie.

Properties of Point Estimators
• Since an estimator is calculated from sample values, it varies from sample to sample according to its sampling distribution.
• An estimator is unbiased if the mean of its sampling distribution equals the parameter of interest. It does not systematically overestimate or underestimate the target parameter.
• Of all the unbiased estimators, we prefer the estimator whose sampling distribution has the smallest spread or variability.

Measuring the Goodness of an Estimator
The distance between an estimate and the true value of the parameter is the error of estimation. For a large sample, our unbiased estimators will have an approximately normal sampling distribution.

The Margin of Error
For unbiased estimators with a normal sampling distribution, 95% of all point estimates will lie within 1.96 standard deviations (standard errors) of the parameter of interest.
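To see unbiasedness and the 1.96-standard-error rule in action, here is a minimal Python simulation sketch. It assumes, purely for illustration, a normal population with µ = 10 and σ = 2 and samples of size n = 50; these values and the random seed are arbitrary choices, not taken from the notes. It draws many samples, computes x̄ for each, and checks that the x̄ values average out near µ, spread out by about σ/√n, and fall within 1.96 standard errors of µ about 95% of the time.

```python
import math
import random
import statistics

# Illustrative population (assumed, not from the notes): normal, mean 10, sd 2.
MU, SIGMA = 10.0, 2.0
N = 50          # sample size
TRIALS = 4000   # number of repeated samples

rng = random.Random(1)       # fixed seed so the run is reproducible
se = SIGMA / math.sqrt(N)    # true standard error of x-bar

means = []
within = 0
for _ in range(TRIALS):
    sample = [rng.gauss(MU, SIGMA) for _ in range(N)]
    xbar = statistics.fmean(sample)
    means.append(xbar)
    # Count point estimates within 1.96 standard errors of the true mean.
    if abs(xbar - MU) <= 1.96 * se:
        within += 1

avg_estimate = statistics.fmean(means)  # near MU, since x-bar is unbiased
spread = statistics.stdev(means)        # near se, the sd of the sampling distribution
coverage = within / TRIALS              # near 0.95
print(f"average of x-bar values: {avg_estimate:.3f} (mu = {MU})")
print(f"sd of x-bar values: {spread:.4f} (se = {se:.4f})")
print(f"fraction within 1.96 SE of mu: {coverage:.3f}")
```

Changing the seed changes the exact numbers slightly, but the average stays close to µ and the coverage stays close to 0.95.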
Margin of Error: the maximum error of estimation, calculated as 1.96 × (standard error of the estimator).

• To estimate the population mean µ for a quantitative population, the point estimator $\bar{x}$ is unbiased, with standard error estimated as
$$SE = \frac{s}{\sqrt{n}}.$$
The margin of error when n > 30 is estimated as
$$\pm 1.96\,\frac{s}{\sqrt{n}}.$$
• To estimate the population proportion p for a binomial population, the point estimator $\hat{p} = x/n$ is unbiased, with standard error estimated as
$$SE = \sqrt{\frac{\hat{p}\hat{q}}{n}}.$$
The margin of error is estimated as
$$\pm 1.96\sqrt{\frac{\hat{p}\hat{q}}{n}}.$$
Assumption: $n\hat{p} > 5$ and $n\hat{q} > 5$.

———————————————————————————
Examples:
• A random sample of n = 50 observations from a quantitative population produced $\bar{x} = 56.4$ and $s^2 = 2.6$. Give the best point estimate for the population mean µ, and calculate the margin of error.
• If there were 40 men in a random sample of 250 elementary school teachers, estimate the proportion of male elementary school teachers in the entire population. Give the margin of error for your estimate.

Interval Estimation
The probability that a confidence interval will contain the estimated parameter is called the confidence coefficient.
• Create an interval (a, b) so that you are fairly sure that the parameter lies between these two values.
• “Fairly sure” means “with high probability”, measured using the confidence coefficient, 1 − α. Usually, 1 − α = 0.90, 0.95, or 0.99.
• Suppose 1 − α is the confidence coefficient and the estimator has a normal distribution.

A (1 − α)100% Large-Sample Confidence Interval
$$(\text{Point estimator}) \pm z_{\alpha/2} \times (\text{Standard error of the estimator})$$
where $z_{\alpha/2}$ is the z-value with an area α/2 in the right tail of the standard normal distribution. This formula generates two values: the lower confidence limit (LCL) and the upper confidence limit (UCL).
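The margin-of-error formulas above can be checked with a short Python sketch that uses only the standard library. The helper names `z_crit`, `margin_mean`, and `margin_prop` are hypothetical. `z_crit` computes $z_{\alpha/2}$ from `statistics.NormalDist` instead of reading a table, so it returns 1.95996 rather than the rounded 1.96. The last lines apply the helpers to the two examples.

```python
import math
from statistics import NormalDist

def z_crit(conf):
    """z_{alpha/2}: the z-value with area alpha/2 in the upper tail."""
    alpha = 1 - conf
    return NormalDist().inv_cdf(1 - alpha / 2)

def margin_mean(s, n, conf=0.95):
    """Margin of error for x-bar (large n): z_{alpha/2} * s / sqrt(n)."""
    return z_crit(conf) * s / math.sqrt(n)

def margin_prop(x, n, conf=0.95):
    """Return p-hat = x/n and its margin z_{alpha/2} * sqrt(p-hat * q-hat / n)."""
    p_hat = x / n
    q_hat = 1 - p_hat
    # The normal approximation needs n*p-hat > 5 and n*q-hat > 5.
    if n * p_hat <= 5 or n * q_hat <= 5:
        raise ValueError("n*p_hat and n*q_hat must both exceed 5")
    return p_hat, z_crit(conf) * math.sqrt(p_hat * q_hat / n)

# Example 1: n = 50, x-bar = 56.4, s^2 = 2.6, so s = sqrt(2.6).
me_mean = margin_mean(math.sqrt(2.6), 50)
# Example 2: 40 men in a sample of 250 teachers.
p_hat, me_prop = margin_prop(40, 250)
print(f"estimate of mu: 56.4 +/- {me_mean:.3f}")
print(f"estimate of p: {p_hat:.2f} +/- {me_prop:.3f}")
```

With these inputs the margin for µ comes out to about 0.447, and the estimate of p is 0.16 with a margin of about 0.045. Calling `z_crit(0.90)`, `z_crit(0.95)`, and `z_crit(0.99)` reproduces the familiar table values 1.645, 1.96, and 2.576.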
A (1 − α)100% Large-Sample Confidence Interval for a Population Mean µ
$$\bar{x} \pm z_{\alpha/2}\,\frac{\sigma}{\sqrt{n}}$$
where $z_{\alpha/2}$ is the z-value corresponding to an area α/2 in the upper tail of the standard normal z distribution, and
n = sample size
σ = standard deviation of the sampled population
If σ is unknown, it can be approximated by the sample standard deviation s when the sample size is large (n > 30), and the approximate confidence interval is
$$\bar{x} \pm z_{\alpha/2}\,\frac{s}{\sqrt{n}}.$$

Inference from Small Samples
Unfortunately, when the sample size n is small, the statistic $\frac{\bar{x}-\mu}{s/\sqrt{n}}$ does not have a normal distribution. So, you cannot say that $\bar{x}$ will lie within 1.96 standard errors of µ 95% of the time. The statistic
$$t = \frac{\bar{x}-\mu}{s/\sqrt{n}}$$
for random samples of size n from a normal population is known as Student’s t. It has the following characteristics:
• It is mound-shaped and symmetric about t = 0.
• It is more variable than z.
• The shape of the t distribution depends on the sample size n. As n increases, the variability of t decreases. Eventually, when n is infinitely large, the t and z distributions are identical.
The divisor (n − 1) in the formula for the sample variance $s^2$ is called the number of degrees of freedom (df) associated with $s^2$.

Assumptions behind the t distribution
• The sample must be randomly selected.
• The population from which you are sampling must be normally distributed.

Small-Sample (1 − α)100% Confidence Interval for µ
$$\bar{x} \pm t_{\alpha/2}\,\frac{s}{\sqrt{n}}$$
where $t_{\alpha/2}$ is based on df = n − 1 degrees of freedom.

A (1 − α)100% Large-Sample Confidence Interval for a Population Proportion p
$$\hat{p} \pm z_{\alpha/2}\sqrt{\frac{pq}{n}}$$
where $z_{\alpha/2}$ is the z-value corresponding to an area α/2 in the right tail of the standard normal z distribution. Since p and q are unknown, they are estimated using the best point estimators $\hat{p}$ and $\hat{q}$. The sample size is considered large when the normal approximation to the binomial distribution is adequate, that is, when $n\hat{p} > 5$ and $n\hat{q} > 5$.

—————————————————————————————————–
Examples:
1. In an electrolysis experiment, a class measured the amount of copper precipitated from a saturated solution of copper sulfate over a 30-minute period. The n = 30 students calculated a sample mean and standard deviation equal to 0.145 and 0.0051, respectively. Find a 90% confidence interval for the mean amount of copper precipitated from the solution over a 30-minute period.
2. A random sample of n = 300 observations from a binomial population produced x = 263 successes. Find a 99% confidence interval for p and interpret the interval.
3. The test scores on a 100-point test were recorded for 20 students:
71 93 91 86 75 73 86 82 76 57 84 89 67 62 72 77 68 65 75 84
If these students can be considered a random sample from the population of all students, find a 95% confidence interval for the average test score in the population.
—————————————————————————————————–

Estimating the Difference Between Two Population Means
Suppose independent random samples of $n_1$ and $n_2$ observations have been selected from populations with means $\mu_1$ and $\mu_2$ and variances $\sigma_1^2$ and $\sigma_2^2$, respectively. The best point estimator of the difference $(\mu_1 - \mu_2)$ between the population means is $(\bar{x}_1 - \bar{x}_2)$.

Properties of the Sampling Distribution of $(\bar{x}_1 - \bar{x}_2)$
The sampling distribution of the difference $(\bar{x}_1 - \bar{x}_2)$ has the following properties:
1. The mean of $(\bar{x}_1 - \bar{x}_2)$ is $\mu_1 - \mu_2$ and the standard error is
$$SE = \sqrt{\frac{\sigma_1^2}{n_1} + \frac{\sigma_2^2}{n_2}},$$
which can be estimated as
$$SE = \sqrt{\frac{s_1^2}{n_1} + \frac{s_2^2}{n_2}}$$
when the sample sizes are large.
2. If the sampled populations are normally distributed, then the sampling distribution of $(\bar{x}_1 - \bar{x}_2)$ is exactly normally distributed, regardless of the sample size.
3. If the sampled populations are not normally distributed, then the sampling distribution of $(\bar{x}_1 - \bar{x}_2)$ is approximately normally distributed when $n_1$ and $n_2$ are both 30 or more, due to the Central Limit Theorem (CLT).
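The one-sample interval Examples 1 to 3 earlier in this section can be worked with the Python sketch below. It is an illustration under stated assumptions: the helper names are hypothetical, z-values come from `statistics.NormalDist`, and because the standard library has no t quantile function, the single t-value needed ($t_{0.025} = 2.093$ with df = 19) is hard-coded from a t table. Example 1 is treated as a large-sample z interval with n = 30, which sits right at the boundary of the n > 30 rule; that choice is an assumption.

```python
import math
import statistics
from statistics import NormalDist

def z_crit(conf):
    """z_{alpha/2} for confidence level conf."""
    return NormalDist().inv_cdf(1 - (1 - conf) / 2)

# Upper-tail t-values t_{alpha/2}, keyed by (confidence, df), copied from a
# standard t table (assumed; the standard library cannot compute them).
T_TABLE = {(0.95, 19): 2.093}

def z_interval_mean(xbar, s, n, conf):
    """Large-sample CI: x-bar +/- z_{alpha/2} * s / sqrt(n)."""
    me = z_crit(conf) * s / math.sqrt(n)
    return xbar - me, xbar + me

def z_interval_prop(x, n, conf):
    """Large-sample CI: p-hat +/- z_{alpha/2} * sqrt(p-hat * q-hat / n)."""
    p = x / n
    me = z_crit(conf) * math.sqrt(p * (1 - p) / n)
    return p - me, p + me

def t_interval_mean(data, conf):
    """Small-sample CI: x-bar +/- t_{alpha/2} * s / sqrt(n), df = n - 1."""
    n = len(data)
    xbar = statistics.mean(data)
    s = statistics.stdev(data)  # uses the n - 1 divisor
    t = T_TABLE[(conf, n - 1)]
    me = t * s / math.sqrt(n)
    return xbar - me, xbar + me

copper = z_interval_mean(0.145, 0.0051, 30, 0.90)   # Example 1
prop = z_interval_prop(263, 300, 0.99)              # Example 2
scores = [71, 93, 91, 86, 75, 73, 86, 82, 76, 57,
          84, 89, 67, 62, 72, 77, 68, 65, 75, 84]
test_ci = t_interval_mean(scores, 0.95)             # Example 3
for name, (lo, hi) in [("copper", copper), ("p", prop), ("scores", test_ci)]:
    print(f"{name}: ({lo:.4f}, {hi:.4f})")
```

Under these assumptions the intervals come out to roughly (0.1435, 0.1465) for the copper mean, (0.828, 0.926) for p, and (71.95, 81.35) for the mean test score, where $\bar{x} = 76.65$.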
Large-Sample Point Estimation of $(\mu_1 - \mu_2)$
Point estimator: $(\bar{x}_1 - \bar{x}_2)$
95% Margin of error: $\pm 1.96\sqrt{\frac{s_1^2}{n_1} + \frac{s_2^2}{n_2}}$

A (1 − α)100% Large-Sample Confidence Interval for $(\mu_1 - \mu_2)$
$$(\bar{x}_1 - \bar{x}_2) \pm z_{\alpha/2}\sqrt{\frac{s_1^2}{n_1} + \frac{s_2^2}{n_2}}$$

Unfortunately, when the sample sizes are small, the statistic
$$\frac{(\bar{x}_1 - \bar{x}_2) - (\mu_1 - \mu_2)}{\sqrt{\frac{s_1^2}{n_1} + \frac{s_2^2}{n_2}}}$$
does not have an approximately normal distribution, nor does it have a t distribution. You need one more assumption to use the t distribution. Suppose that the variability of the measurements in the two normal populations is the same and can be measured by a common variance $\sigma^2$. That is, both populations have exactly the same shape, and $\sigma_1^2 = \sigma_2^2 = \sigma^2$. Then the standard error of the difference in the two sample means is
$$\sqrt{\frac{\sigma_1^2}{n_1} + \frac{\sigma_2^2}{n_2}} = \sqrt{\sigma^2\left(\frac{1}{n_1} + \frac{1}{n_2}\right)},$$
where $\sigma^2$ is estimated by
$$s^2 = \frac{(n_1 - 1)s_1^2 + (n_2 - 1)s_2^2}{n_1 + n_2 - 2},$$
which is called the pooled variance.

Small-Sample (1 − α)100% Confidence Interval for $(\mu_1 - \mu_2)$ Based on Independent Random Samples
$$(\bar{x}_1 - \bar{x}_2) \pm t_{\alpha/2}\sqrt{s^2\left(\frac{1}{n_1} + \frac{1}{n_2}\right)}$$
where $s^2$ is the pooled estimate of $\sigma^2$ and $t_{\alpha/2}$ is based on df = $n_1 + n_2 - 2$ degrees of freedom.
——————————————————————————————–
Examples:
1. Independent random samples were selected from populations 1 and 2. The sample sizes, means, and variances are as follows:
Population 1: sample size = 64, sample mean = 2.9, sample variance = 0.83
Population 2: sample size = 64, sample mean = 5.1, sample variance = 1.67
a. Find a 90% confidence interval for the difference in the population means. What does the phrase “90% confident” mean?
b. Find a 99% confidence interval for the difference in the population means. Can you conclude that there is a difference in the two population means?
2. Two independent random samples of sizes $n_1 = 4$ and $n_2 = 5$ are selected from each of two normal populations:
Population 1: 12 3 8 5
Population 2: 14 7 7 9 6
a. Calculate $s^2$, the pooled estimator of $\sigma^2$.
b. Find a 90% confidence interval for $(\mu_1 - \mu_2)$.
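Here is a Python sketch of the two-sample intervals, applied to Examples 1 and 2 above. The helper names are hypothetical. `statistics.variance` uses the n − 1 divisor, matching $s^2$ in the notes, and the value $t_{0.05} = 1.895$ for df = 4 + 5 − 2 = 7 is taken from a t table rather than computed, since the standard library has no t quantile function.

```python
import math
import statistics
from statistics import NormalDist

def z_crit(conf):
    """z_{alpha/2} for confidence level conf."""
    return NormalDist().inv_cdf(1 - (1 - conf) / 2)

def large_sample_diff(x1, v1, n1, x2, v2, n2, conf):
    """(x1 - x2) +/- z_{alpha/2} * sqrt(v1/n1 + v2/n2); v1, v2 are sample variances."""
    se = math.sqrt(v1 / n1 + v2 / n2)
    d = x1 - x2
    me = z_crit(conf) * se
    return d - me, d + me

def pooled_variance(a, b):
    """s^2 = [(n1 - 1) s1^2 + (n2 - 1) s2^2] / (n1 + n2 - 2)."""
    n1, n2 = len(a), len(b)
    return ((n1 - 1) * statistics.variance(a)
            + (n2 - 1) * statistics.variance(b)) / (n1 + n2 - 2)

def pooled_t_interval(a, b, t_crit):
    """(x1 - x2) +/- t_{alpha/2} * sqrt(s^2 (1/n1 + 1/n2)), df = n1 + n2 - 2."""
    s2 = pooled_variance(a, b)
    se = math.sqrt(s2 * (1 / len(a) + 1 / len(b)))
    d = statistics.mean(a) - statistics.mean(b)
    return d - t_crit * se, d + t_crit * se

# Example 1: large samples, n1 = n2 = 64.
ci90 = large_sample_diff(2.9, 0.83, 64, 5.1, 1.67, 64, 0.90)
ci99 = large_sample_diff(2.9, 0.83, 64, 5.1, 1.67, 64, 0.99)

# Example 2: small samples from normal populations with a common variance.
pop1 = [12, 3, 8, 5]
pop2 = [14, 7, 7, 9, 6]
s2 = pooled_variance(pop1, pop2)
ci_small = pooled_t_interval(pop1, pop2, 1.895)  # t_{0.05}, df = 7, from a t table

print(f"90% CI: ({ci90[0]:.3f}, {ci90[1]:.3f})")
print(f"99% CI: ({ci99[0]:.3f}, {ci99[1]:.3f})")
print(f"pooled s^2 = {s2:.3f}, 90% CI: ({ci_small[0]:.3f}, {ci_small[1]:.3f})")
```

With these inputs, the 90% and 99% intervals in Example 1 are about (−2.525, −1.875) and (−2.709, −1.691). Neither contains 0. In Example 2, $s^2 = 87.2/7 \approx 12.457$ and the 90% interval is about (−6.087, 2.887).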
———————————————————————————–
Estimating the Difference Between Two Binomial Proportions
Assume that independent random samples of $n_1$ and $n_2$ observations have been selected from binomial populations with parameters $p_1$ and $p_2$, respectively. The unbiased estimator of the difference $(p_1 - p_2)$ is the sample difference $(\hat{p}_1 - \hat{p}_2)$.

Properties of the Sampling Distribution of the Difference $(\hat{p}_1 - \hat{p}_2)$
The sampling distribution of the difference between sample proportions
$$(\hat{p}_1 - \hat{p}_2) = \frac{x_1}{n_1} - \frac{x_2}{n_2}$$
has these properties:
1. The mean of $(\hat{p}_1 - \hat{p}_2)$ is $p_1 - p_2$ and the standard error is
$$SE = \sqrt{\frac{p_1 q_1}{n_1} + \frac{p_2 q_2}{n_2}},$$
which is estimated as
$$SE = \sqrt{\frac{\hat{p}_1 \hat{q}_1}{n_1} + \frac{\hat{p}_2 \hat{q}_2}{n_2}}.$$
2. The sampling distribution of $(\hat{p}_1 - \hat{p}_2)$ can be approximated by a normal distribution when $n_1$ and $n_2$ are large, due to the CLT.

Large-Sample Point Estimation of $(p_1 - p_2)$
Point estimator: $(\hat{p}_1 - \hat{p}_2)$
95% Margin of error: $\pm 1.96\,SE = \pm 1.96\sqrt{\frac{\hat{p}_1 \hat{q}_1}{n_1} + \frac{\hat{p}_2 \hat{q}_2}{n_2}}$

A (1 − α)100% Large-Sample Confidence Interval for $(p_1 - p_2)$
$$(\hat{p}_1 - \hat{p}_2) \pm z_{\alpha/2}\sqrt{\frac{\hat{p}_1 \hat{q}_1}{n_1} + \frac{\hat{p}_2 \hat{q}_2}{n_2}}$$
Assumptions: $n_1$ and $n_2$ must be large enough that the sampling distribution of $(\hat{p}_1 - \hat{p}_2)$ can be approximated by a normal distribution, that is, $n_1\hat{p}_1 > 5$, $n_1\hat{q}_1 > 5$, $n_2\hat{p}_2 > 5$, and $n_2\hat{q}_2 > 5$.

Choosing the Sample Size
To estimate µ to within a bound B, we need
$$z_{\alpha/2}\left(\frac{\sigma}{\sqrt{n}}\right) < B,$$
which gives
$$n > \frac{z_{\alpha/2}^2\,\sigma^2}{B^2}.$$
Similarly, for a binomial population,
$$n > \frac{z_{\alpha/2}^2\,pq}{B^2},$$
and, when you have no idea of the values of p and q, use pq = 0.25 (its largest possible value, reached at p = 0.5):
$$n > \frac{z_{\alpha/2}^2\,(0.25)}{B^2}.$$
You can find the sample sizes for two populations in a similar way.
————————————————————————————————
Examples:
1. Independent random samples of $n_1 = 1265$ and $n_2 = 1688$ observations were selected from binomial populations 1 and 2, and $x_1 = 849$ and $x_2 = 910$ successes were observed.
a. Find a 99% confidence interval for the difference $(p_1 - p_2)$ in the two population proportions.
b. Based on the confidence interval in part a, can you conclude that there is a difference in the two binomial proportions? Explain.
2. Suppose you wish to estimate a population mean based on a random sample of n observations, and prior experience suggests that σ = 12.7. If you wish to estimate µ to within 1.6, with probability equal to 0.95, how many observations should you include in your sample?
————————————————————————————————
Suggested Exercises: 8.10, 8.12, 8.14, 8.18, 8.20, 8.32, 8.34, 8.36, 8.38, 8.42, 8.46, 8.48, 8.52, 8.56, 8.58, 8.70, 8.72, 8.82, 8.88, 8.98
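The two-proportion interval and the sample-size rules from this chapter can be sketched the same way, using only the standard library and hypothetical helper names. `sample_size_mean` returns the smallest integer n strictly greater than $z_{\alpha/2}^2\sigma^2/B^2$, following the strict inequality in the notes, and `sample_size_prop` defaults to p = 0.5 so that pq = 0.25.

```python
import math
from statistics import NormalDist

def z_crit(conf):
    """z_{alpha/2} for confidence level conf."""
    return NormalDist().inv_cdf(1 - (1 - conf) / 2)

def two_prop_interval(x1, n1, x2, n2, conf):
    """(p1-hat - p2-hat) +/- z_{alpha/2} * sqrt(p1 q1/n1 + p2 q2/n2), with estimates."""
    p1, p2 = x1 / n1, x2 / n2
    # Normal approximation check: n*p-hat > 5 and n*q-hat > 5 in both samples.
    for n, p in ((n1, p1), (n2, p2)):
        if n * p <= 5 or n * (1 - p) <= 5:
            raise ValueError("samples too small for the normal approximation")
    se = math.sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    d = p1 - p2
    me = z_crit(conf) * se
    return d - me, d + me

def sample_size_mean(sigma, bound, conf):
    """Smallest integer n with n > z^2 * sigma^2 / B^2."""
    return math.floor((z_crit(conf) * sigma / bound) ** 2) + 1

def sample_size_prop(bound, conf, p=0.5):
    """Smallest integer n with n > z^2 * p * q / B^2 (p = 0.5 gives pq = 0.25)."""
    return math.floor(z_crit(conf) ** 2 * p * (1 - p) / bound ** 2) + 1

ci = two_prop_interval(849, 1265, 910, 1688, 0.99)   # Example 1
n_needed = sample_size_mean(12.7, 1.6, 0.95)         # Example 2
print(f"99% CI for p1 - p2: ({ci[0]:.4f}, {ci[1]:.4f})")
print(f"observations needed: {n_needed}")
```

For Example 1 the 99% interval is roughly (0.086, 0.178), which excludes 0; for Example 2 the rule gives n = 243.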