* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download The Conjugate Gradient Method

Computational phylogenetics wikipedia , lookup

Mathematical optimization wikipedia , lookup

Strähle construction wikipedia , lookup

Expectation–maximization algorithm wikipedia , lookup

Computational fluid dynamics wikipedia , lookup

Horner's method wikipedia , lookup

Newton's method wikipedia , lookup

Computational electromagnetics wikipedia , lookup

The Conjugate Gradient Method

Tom Lyche

University of Oslo

Norway

The Conjugate Gradient Method – p. 1/2

Plan for the day

The method

Algorithm

Implementation of test problems

Complexity

Derivation of the method

Convergence

The Conjugate Gradient Method – p. 2/2

The Conjugate gradient method

Restricted to positive definite systems: Ax = b,

A ∈ Rn,n positive definite.

Generate {xk } by xk+1 = xk + αk pk ,

pk is a vector, the search direction,

αk is a scalar determining the step length.

In general we find the exact solution in at most n

iterations.

For many problems the error becomes small after a few

iterations.

Both a direct method and an iterative method.

Rate of convergence depends on the square root of the

condition number

The Conjugate Gradient Method – p. 3/2

The name of the game

Conjugate means orthogonal; orthogonal gradients.

But why gradients?

Consider minimizing the quadratic function Q : Rn → R

given by Q(x) := 12 xT Ax − xT b.

The minimum is obtained by setting the gradient equal

to zero.

∇Q(x) = Ax − b = 0 linear system Ax = b

Find the solution by solving r = b − Ax = 0.

The sequence {xk } is such that {r k } := {b − Axk } is

orthogonal with respect to the usual inner product in Rn .

The search directions are also orthogonal, but with

respect to a different inner product.

The Conjugate Gradient Method – p. 4/2

The algorithm

Start with some x0 . Set p0 = r 0 = b − Ax0 .

For k = 0, 1, 2, . . .

xk+1 = xk + αk pk ,

αk =

r Tk r k

pTk Apk

r k+1 = b − Axk+1 = r k − αk Apk

pk+1 = r k+1 + βk pk ,

βk =

r Tk+1 r k+1

r Tk r k

The Conjugate Gradient Method – p. 5/2

Example

2 −1

−1 2

[ xx12 ] = [ 10 ]

Start with x0 = 0.

p0 = r0 = b = [1, 0]T

h

r T0 r 0

pT0 Ap0

i

= 12 , x1 = x0 + α0 p0 = [ 00 ] + 12 [ 10 ] = 1/2

0

h i

0

2

r 1 = r 0 − α0 Ap0 = [ 10 ] − 12 −1

= 1/2

, r T1 r 0 = 0

h i

h

i

T

1/4

0

β0 = rr 1T rr 10 = 14 , p1 = r 1 + β0 p0 = 1/2

+ 41 [ 10 ] = 1/2 ,

α0 =

0

α1 =

r T1 r 1

pT1 Ap1

= 23 ,

x2 = x1 + α1 p1 =

h

1/2

0

i

+

2

3

h

1/4

1/2

i

=

h

2/3

1/3

i

r 2 = 0, exact solution.

The Conjugate Gradient Method – p. 6/2

Exact method and iterative method

Orthogonality of the residuals implies that xm is equal to the solution

x of Ax = b for some m ≤ n.

For if xk 6= x for all k = 0, 1, . . . , n − 1 then rk 6= 0 for

k = 0, 1, . . . , n − 1 is an orthogonal basis for Rn . But then rn ∈ Rn is

orthogonal to all vectors in Rn so rn = 0 and hence xn = x.

So the conjugate gradient method finds the exact solution in at most

n iterations.

The convergence analysis shows that kx − xk kA typically becomes

small quite rapidly and we can stop the iteration with k much smaller

that n.

It is this rapid convergence which makes the method interesting and

in practice an iterative method.

The Conjugate Gradient Method – p. 7/2

Conjugate Gradient Algorithm

[Conjugate Gradient Iteration] The positive definite linear system Ax = b is

solved by the conjugate gradient method. x is a starting vector for the iteration. The

iteration is stopped when ||r k ||2 /||r 0 ||2 ≤ tol or k > itmax. itm is the number of

iterations used.

function [ x , i t m ] = cg ( A , b , x , t o l , itm a x ) r =b−A∗ x ; p= r ; rho=r ’ ∗ r ;

rho0=rho ; f o r k =0: itm a x

i f s qrt ( rho / rho0)<= t o l ˆ 2

i t m =k ; re turn

end

t =A∗p ; a=rho / ( p ’ ∗ t ) ;

x=x+a∗p ; r =r−a∗ t ;

rhos=rho ; rho=r ’ ∗ r ;

p= r +( rho / rhos ) ∗ p ;

end i t m = itm a x +1;

The Conjugate Gradient Method – p. 8/2

A family of test problems

We can test the methods on the Kronecker sum matrix

C1

cI

C1

bI

.

+

..

A = C 1 ⊗I+I⊗C 2 =

C1

C1

bI

cI

..

.

bI

..

.

..

bI

cI

.

bI

,

bI

cI

where C 1 = tridiagm (a, c, a) and C 2 = tridiagm (b, c, b).

Positive definite if c > 0 and c ≥ |a| + |b|.

The Conjugate Gradient Method – p. 9/2

m = 3, n = 9

A=

2c a 0 b 0 0 0 0 0

a 2c a 0 b 0 0 0 0

0 a 2c 0 0 b 0 0 0

b 0 0 2c a 0 b 0 0

0 b 0 a 2c a 0 b 0

0 0 b 0 a 2c 0 0 b

0 0 0 b 0 0 2c a 0

0 0 0 0 b 0 a 2c a

0 0 0 0 0 b 0 a 2c

b = a = −1, c = 2: Poisson matrix

b = a = 1/9, c = 5/18: Averaging matrix

The Conjugate Gradient Method – p. 10/2

Averaging problem

λjk = 2c + 2a cos (jπh) + 2b cos (kπh), j, k = 1, 2, . . . , m.

a = b = 1/9, c = 5/18

λmax =

5

9

+ 49 cos (πh), λmin =

cond2 (A) =

λmax

λmin

=

5+4 cos(πh)

5−4 cos(πh)

5

9

− 49 cos (πh)

≤ 9.

The Conjugate Gradient Method – p. 11/2

2D formulation for test problems

V = vec(x). R = vec(r), P = vec(p)

Ax = b ⇐⇒ DV + V E = h2 F ,

D = tridiag(a, c, a) ∈ Rm,m , E = tridiag(b, c, b) ∈ Rm,m

vec(Ap) = DP + P E

The Conjugate Gradient Method – p. 12/2

Testing

[Testing Conjugate Gradient ] A = trid(a, c, a, m) ⊗ I m + I m ⊗

2

2

trid(b, c, b, m) ∈ Rm ,m

function [ V , i t ] = c g t e s t (m, a , b , c , t o l , itm a x )

h = 1 / (m+ 1 ) ; R=h∗h∗ ones (m) ;

D=sparse ( t r i d i a g o n a l ( a , c , a ,m) ) ; E=sparse ( t r i d i a g o n a l ( b , c , b ,m) ) ;

V=zeros (m,m) ; P=R; rho=sum(sum(R. ∗ R ) ) ; rho0=rho ;

f o r k =1: itm a x

i f s qrt ( rho / rho0)<= t o l

i t =k ; re turn

end

T=D∗P+P∗E ; a=rho /sum(sum(P. ∗ T ) ) ; V=V+a∗P ; R=R−a∗T ;

rhos=rho ; rho=sum(sum(R. ∗ R ) ) ; P=R+( rho / rhos ) ∗ P ;

end ;

i t = itm a x +1;

The Conjugate Gradient Method – p. 13/2

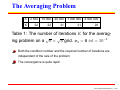

The Averaging Problem

n

2 500

10 000

40 000

1 000 000

4 000 000

K

22

22

21

21

20

Table 1: The number of iterations K for the averag√

√

ing problem on a n × n grid. x0 = 0 tol = 10−8

Both the condition number and the required number of iterations are

independent of the size of the problem

The convergence is quite rapid.

The Conjugate Gradient Method – p. 14/2

Poisson Problem

λjk = 2c + 2a cos (jπh) + 2b cos (kπh), j, k = 1, 2, . . . , m.

a = b = −1, c = 2

λmax = 4 + 4 cos (πh), λmin = 4 − 4 cos (πh)

cond2 (A) =

λmax

λmin

=

1+cos(πh)

1−cos(πh)

= cond( T )2 .

cond2 (A) = O(n).

The Conjugate Gradient Method – p. 15/2

The Poisson problem

n

2 500

10 000

40 000

160 000

K

140

294

587

1168

√

K/ n

1.86

1.87

1.86

1.85

Using CG in the form of Algorithm 8 with ǫ = 10−8 and x0 = 0 we list

√

K, the required number of iterations and K/ n.

The results show that K is much smaller than n and appears to be

√

proportional to n

This is the same speed as for SOR and we don’t have to estimate

any acceleration parameter!

√

n is essentially the square root of the condition number of A.

The Conjugate Gradient Method – p. 16/2

Complexity

The work involved in each iteration is

1. one matrix times vector (t = Ap),

2. two inner products (pT t and r T r ),

3. three vector-plus-scalar-times-vector (x = x + ap,

r = r − at and p = r + (rho/rhos)p),

The dominating part of the computation is statement 1.

Note that for our test problems A only has O(5n) nonzero

elements. Therefore, taking advantage of the sparseness of

A we can compute t in O(n) flops. With such an

implementation the total number of flops in one iteration is

O(n).

The Conjugate Gradient Method – p. 17/2

More Complexity

How many flops do we need to solve the test problems

by the conjugate gradient method to within a given

tolerance?

Average problem. O(n) flops. Optimal for a problem

with n unknowns.

Same as SOR and better than the fast method based

on FFT.

Discrete Poisson problem: O(n3/2) flops.

same as SOR and fast method.

Cholesky Algorithm: O(n2 ) flops both for averaging and

Poisson.

The Conjugate Gradient Method – p. 18/2

Analysis and Derivation of the Method

Theorem 3 (Orthogonal Projection). Let S be a subspace of a finite

dimensional real or complex inner product space (V, F, h·, ·, )i. To each

x ∈ V there is a unique vector p ∈ S such that

hx − p, si = 0,

for all s ∈ S.

(1)

x

x-p

S

p=P x

S

The Conjugate Gradient Method – p. 19/2

Best Approximation

Theorem 4 (Best Approximation). Let S be a subspace of a finite

dimensional real or complex inner product space (V, F, h·, ·, )i. Let

x ∈ V , and p ∈ S . The following statements are equivalent

1. hx − p, si = 0,

for all s ∈ S .

2. kx − sk > kx − pk for all s ∈ S with s 6= p.

If (v 1 , . . . , v k ) is an orthogonal basis for S then

k

X

hx, v i i

vi.

p=

hv i , v i i

(2)

i=1

The Conjugate Gradient Method – p. 20/2

Derivation of CG

Ax = b, A ∈ Rn,n is pos. def., x, b ∈ Rn

(x, y) := xT y ,

x, y ∈ Rn

hx, yi := xT Ay = (x, Ay) = (Ax, y)

√

kxkA = xT Ax

W0 = {0}, W1 = span{b}, W2 = span{b, Ab},

Wk = span{b, Ab, A2 b, . . . , Ak−1 b}

W0 ⊂ W1 ⊂ W2 ⊂ Wk ⊂ · · ·

dim(Wk ) ≤ k,

w ∈ Wk ⇒ Aw ∈ Wk+1

xk ∈ Wk , hxk − x, wi = 0 for all w ∈ Wk

Pj−1 hr j ,pi i

p0 = r0 := b, pj = r j − i=0 hp ,p i pi , j = 1, . . . , k.

i

i

The Conjugate Gradient Method – p. 21/2

Convergence

Theorem 5. Suppose we apply the conjugate gradient method to a

positive definite system Ax = b. Then the A-norms of the errors satisfy

||x − xk ||A

||x − x0 ||A

√

k

κ−1

≤2 √

,

κ+1

for k ≥ 0,

where κ = cond2 (A) = λmax /λmin is the 2-norm condition number of

A.

This theorem explains what we observed in the previous

section.

Namely that the number of iterations is linked to

√

κ, the square root of the condition number of A. Indeed,

the following corollary gives

an upper bound for the number

√

of iterations in terms of κ.

The Conjugate Gradient Method – p. 22/2

Corollary 6. If for some ǫ > 0 we have k ≥

1

2

2 √

ln( ǫ ) κ then

||x − xk ||A /||x − x0 ||A ≤ ǫ.

The Conjugate Gradient Method – p. 23/2