Utile Distinction Hidden Markov Models

... POMDP problems. However, it fails to make distictions based on utility — it cannot discriminate between different parts of a world that look the same but are different in the assignment of rewards. He posed the Utile Distinction Conjecture claiming that state distinctions based on utility would not ...

... POMDP problems. However, it fails to make distictions based on utility — it cannot discriminate between different parts of a world that look the same but are different in the assignment of rewards. He posed the Utile Distinction Conjecture claiming that state distinctions based on utility would not ...

1994-Learning to Coordinate without Sharing Information

... icy ?r in state s, and y is a discount rate (0 < y < 1). Various reinforcement learning strategies have been proposed using which agents can can develop a policy to maximize rewards accumulated over time. For our experiments, we use the Q-learning (Watkins 1989) algorithm, which is designed to find ...

... icy ?r in state s, and y is a discount rate (0 < y < 1). Various reinforcement learning strategies have been proposed using which agents can can develop a policy to maximize rewards accumulated over time. For our experiments, we use the Q-learning (Watkins 1989) algorithm, which is designed to find ...

Reinforcement Learning as a Context for Integrating AI Research

... brain processes. This complexity has been previously expressed by those arguing for the need to solve the symbol grounding problem in order to create intelligent language behavior. One particularly difficult aspect of language is its role as a shortcut for brains to learn parts of their simulation m ...

... brain processes. This complexity has been previously expressed by those arguing for the need to solve the symbol grounding problem in order to create intelligent language behavior. One particularly difficult aspect of language is its role as a shortcut for brains to learn parts of their simulation m ...

On the Sample Complexity of Reinforcement Learning with a Generative Model

... new lower bound for RL. Finally, we conclude the paper and propose some directions for the future work in Section 5. ...

... new lower bound for RL. Finally, we conclude the paper and propose some directions for the future work in Section 5. ...

WSD: bootstrapping methods

... – Or derive the co-occurrence terms automatically from machine readable dictionary entries – Or select seeds automatically using co-occurrence statistics (see Ch 6 of J&M) ...

... – Or derive the co-occurrence terms automatically from machine readable dictionary entries – Or select seeds automatically using co-occurrence statistics (see Ch 6 of J&M) ...

Research Summary - McGill University

... model. The learning algorithms developed for PSRs so far are based on a suitable choice of core tests. However, knowing the model dimensions (i.e. the core tests) is a big assumption and not always possible. Finding the core tests incrementally from interactions with an unknown system seems to be co ...

... model. The learning algorithms developed for PSRs so far are based on a suitable choice of core tests. However, knowing the model dimensions (i.e. the core tests) is a big assumption and not always possible. Finding the core tests incrementally from interactions with an unknown system seems to be co ...

Feature Markov Decision Processes

... • Replace Φ by Φ0 for sure if Cost gets smaller or with some small probability if Cost gets larger. Repeat. ...

... • Replace Φ by Φ0 for sure if Cost gets smaller or with some small probability if Cost gets larger. Repeat. ...

Rollout Sampling Policy Iteration for Decentralized POMDPs

... of possible joint policies is O((|Ai |(|Ωi | −1)/(|Ωi |−1) )|I| ). Instead of searching over the entire policy space, dynamic programming (DP) constructs policies from the last step up to the first one and eliminates dominated policies at the early stages [11]. However, the exhaustive backup in the ...

... of possible joint policies is O((|Ai |(|Ωi | −1)/(|Ωi |−1) )|I| ). Instead of searching over the entire policy space, dynamic programming (DP) constructs policies from the last step up to the first one and eliminates dominated policies at the early stages [11]. However, the exhaustive backup in the ...

Machine Learning --- Intro

... classifies new examples accurately. An algorithm that takes as input specific instances and produces a model that generalizes beyond these instances. Classifier - A mapping from unlabeled instances to (discrete) classes. Classifiers have a form (e.g., decision tree) plus an interpretation procedure ...

... classifies new examples accurately. An algorithm that takes as input specific instances and produces a model that generalizes beyond these instances. Classifier - A mapping from unlabeled instances to (discrete) classes. Classifiers have a form (e.g., decision tree) plus an interpretation procedure ...

記錄 編號 6668 狀態 NC094FJU00392004 助教 查核 索書 號 學校

... pp. 154-156. [14] J. Y. Kuo, “A document-driven agent-based approach for business”, processes management. Information and Software Technology, 2004, Vol. 46, pp. 373-382. [15] J. Y. Kuo, S.J. Lee and C.L. Wu, N.L. Hsueh, J. Lee. Evolutionary Agents for Intelligent Transport Systems, International Jo ...

... pp. 154-156. [14] J. Y. Kuo, “A document-driven agent-based approach for business”, processes management. Information and Software Technology, 2004, Vol. 46, pp. 373-382. [15] J. Y. Kuo, S.J. Lee and C.L. Wu, N.L. Hsueh, J. Lee. Evolutionary Agents for Intelligent Transport Systems, International Jo ...

artificial intelligence techniques for advanced smart home

... Hidden Markov Model (HMM) for action prediction [10]. Hidden Markov models have been extensively used in various environments which include the location of the devices, device identifiers and speech recognition cluster. Traditional machine learning techniques such as memory-based learners, decision ...

... Hidden Markov Model (HMM) for action prediction [10]. Hidden Markov models have been extensively used in various environments which include the location of the devices, device identifiers and speech recognition cluster. Traditional machine learning techniques such as memory-based learners, decision ...

Improving Reinforcement Learning by using Case Based

... 2 Reinforcement Learning and the Q–Learning algorithm Reinforcement Learning (RL) algorithms have been applied successfully to the on-line learning of optimal control policies in Markov Decision Processes (MDPs). In RL, this policy is learned through trial-and-error interactions of the agent with it ...

... 2 Reinforcement Learning and the Q–Learning algorithm Reinforcement Learning (RL) algorithms have been applied successfully to the on-line learning of optimal control policies in Markov Decision Processes (MDPs). In RL, this policy is learned through trial-and-error interactions of the agent with it ...

記錄編號 6668 狀態 NC094FJU00392004 助教查核 索書號 學校名稱

... Marukawa, “Interactive Multiagent Reinforcement Learning with Motivation Rules”, Proceeding on 4th International Conference on Computational Intelligence and Multimedia Applications, 2001, pp.128-132. [22] J. Y. Kuo, M. L. Tsai, and N. L. Hsueh. 2006. “Goal Evolution based on Adaptive Q-learning for ...

... Marukawa, “Interactive Multiagent Reinforcement Learning with Motivation Rules”, Proceeding on 4th International Conference on Computational Intelligence and Multimedia Applications, 2001, pp.128-132. [22] J. Y. Kuo, M. L. Tsai, and N. L. Hsueh. 2006. “Goal Evolution based on Adaptive Q-learning for ...

Course outline - Computing Science

... Students investigate non-deterministic computer algorithms that are used in wide application areas but cannot be written in pseudo programming languages. Non-deterministic algorithms have been known as topics of machine learning or artificial intelligence. The topics covered in this course include m ...

... Students investigate non-deterministic computer algorithms that are used in wide application areas but cannot be written in pseudo programming languages. Non-deterministic algorithms have been known as topics of machine learning or artificial intelligence. The topics covered in this course include m ...

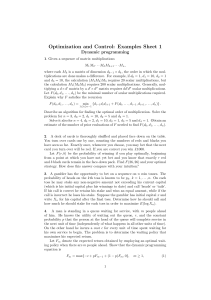

Optimization and Control: Examples Sheet 1

... befall him: he will escape with probability pi , he will be killed with probability qi , and with probability ri he will find the passage to be a dead end and be forced to return to the room. The fates associated with different passages are independent. Establish the order in which Theseus should at ...

... befall him: he will escape with probability pi , he will be killed with probability qi , and with probability ri he will find the passage to be a dead end and be forced to return to the room. The fates associated with different passages are independent. Establish the order in which Theseus should at ...

Metaheuristic Methods and Their Applications

... trapped in confined areas of the search space. • The basic concepts of metaheuristics permit an abstract level description. • Metaheuristics are not problem-specific. • Metaheuristics may make use of domain-specific knowledge in the form of heuristics that are controlled by the upper level strategy. ...

... trapped in confined areas of the search space. • The basic concepts of metaheuristics permit an abstract level description. • Metaheuristics are not problem-specific. • Metaheuristics may make use of domain-specific knowledge in the form of heuristics that are controlled by the upper level strategy. ...

COMP 3710

... application areas but cannot be written in pseudo programming languages. Nondeterministic algorithms have been known as topics of machine learning or artificial intelligence. Students are introduced to the use of classical artificial intelligence techniques and soft computing techniques. Classical a ...

... application areas but cannot be written in pseudo programming languages. Nondeterministic algorithms have been known as topics of machine learning or artificial intelligence. Students are introduced to the use of classical artificial intelligence techniques and soft computing techniques. Classical a ...

AI Safety and Beneficence, Some Current Research Paths

... actions that are otherwise against its utility/cost/reward functions ...

... actions that are otherwise against its utility/cost/reward functions ...

P - Research Group of Vision and Image Processing

... “Amazon.com might be the world's largest laboratory to study human behavior and decision making.” ...

... “Amazon.com might be the world's largest laboratory to study human behavior and decision making.” ...

CS2351 Artificial Intelligence Ms.R.JAYABHADURI

... Objective: To introduce the most basic concepts, representations and algorithms for planning, to explain the method of achieving goals from a sequence of actions (planning) and how better heuristic estimates can be achieved by a special data structure called planning graph. To understand the design ...

... Objective: To introduce the most basic concepts, representations and algorithms for planning, to explain the method of achieving goals from a sequence of actions (planning) and how better heuristic estimates can be achieved by a special data structure called planning graph. To understand the design ...

DOC/LP/01/28

... acting in the real world Objective: To introduce the most basic concepts, representations and algorithms for planning, to explain the method of achieving goals from a sequence of actions (planning) and how better heuristic estimates can be achieved by a special data structure called planning graph. ...

... acting in the real world Objective: To introduce the most basic concepts, representations and algorithms for planning, to explain the method of achieving goals from a sequence of actions (planning) and how better heuristic estimates can be achieved by a special data structure called planning graph. ...