Substitution method

... log(f(n)) is smaller than 0.5·f(n) for f(n) > 4 (f(n) is a monotonically increasing function). Thus, we may choose 0.085. Setting f(n) = log n = 4 gives n = 16, hence we set n0 = 16. T(n) = Θ(n^(log₂ 3)) ...
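The bound used above can be spot-checked numerically. This is a minimal sketch that assumes base-2 logarithms, which is what makes log n = 4 correspond to n0 = 16. It only samples points and does not prove the inequality for every x > 4. The helper name `log_below_half` is hypothetical.

```python
import math

def log_below_half(x: float) -> bool:
    """True when log2(x) < 0.5 * x, the bound assumed for f(n) > 4."""
    return math.log2(x) < 0.5 * x

# At x = 4 both sides equal 2, so the inequality is strict only for x > 4.
assert math.log2(4) == 0.5 * 4 and not log_below_half(4)

# Sample integers and fractional points just above 4.
assert all(log_below_half(x) for x in range(5, 10_000))
assert all(log_below_half(4 + k / 100) for k in range(1, 1_000))

# With f(n) = log2(n), the threshold f(n) = 4 is reached at n = 2**4.
n0 = 2 ** 4
print(n0, math.log2(n0))  # 16 4.0
```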

Activation Function for Minimization: Abstract

... Hopfield nets are used extensively for optimization, constraint satisfaction, and approximation of NP-hard problems. Nevertheless, finding a global minimum of the energy function is not guaranteed, and even reaching a local minimum may take an exponential number of steps. We propose an improvement to the s ...
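The energy minimization a Hopfield net performs can be illustrated with the classical discrete model. This is a minimal sketch of that standard model, not the improved activation function the abstract proposes. It assumes ±1 states, a symmetric weight matrix with zero diagonal, biases `b`, and the energy E(s) = -½ Σ w_ij s_i s_j - Σ b_i s_i. Under these assumptions each asynchronous update can only lower or keep the energy, so the net settles into a local minimum that need not be global. The function names and the random weights are hypothetical.

```python
import random

def energy(w, b, s):
    """E(s) = -1/2 * sum_ij w[i][j] s_i s_j - sum_i b[i] s_i."""
    n = len(s)
    quad = sum(w[i][j] * s[i] * s[j] for i in range(n) for j in range(n))
    return -0.5 * quad - sum(b[i] * s[i] for i in range(n))

def local_field(w, b, s, i):
    """Net input to neuron i."""
    return sum(w[i][j] * s[j] for j in range(len(s))) + b[i]

def run_hopfield(w, b, s, rng, max_sweeps=1000):
    """Asynchronous updates in random order until a full sweep changes nothing."""
    s = list(s)
    trace = [energy(w, b, s)]
    for _ in range(max_sweeps):
        changed = False
        order = list(range(len(s)))
        rng.shuffle(order)
        for i in order:
            h = local_field(w, b, s, i)
            new = 1 if h > 0 else -1 if h < 0 else s[i]  # keep the state on a tie
            if new != s[i]:
                s[i] = new
                changed = True
                trace.append(energy(w, b, s))
        if not changed:
            break  # no neuron wants to flip: a local minimum of E
    return s, trace

def random_symmetric_weights(n, rng):
    """Hypothetical test weights: symmetric, zero diagonal."""
    w = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            w[i][j] = w[j][i] = rng.uniform(-1.0, 1.0)
    return w

rng = random.Random(0)
n = 8
w = random_symmetric_weights(n, rng)
b = [rng.uniform(-0.5, 0.5) for _ in range(n)]
s0 = [rng.choice([-1, 1]) for _ in range(n)]
final, trace = run_hopfield(w, b, s0, rng)
print(final, [round(e, 3) for e in trace])
```

The printed energy trace never increases. Restarting from a different `s0` can end in a different local minimum, which is the limitation the abstract describes.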

Lecture2_ProblemSolving

... SOLVING PROBLEM C – STEP 4: Execute the program. * Check for any semantic/logic errors ...

probability

... probability of an event can be calculated by counting the number of possible outcomes that correspond to the event and dividing that number by the total number of equally likely outcomes. Counting the favorable outcomes means listing the ways the event can happen. Let's consider the problem of rolling two dice. There are 6 possible ...
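The counting rule translates directly into code. In this sketch the event "the two dice sum to 7" is an illustrative choice, not one taken from the source. The function assumes all listed outcomes are equally likely.

```python
from fractions import Fraction
from itertools import product

def probability(event, outcomes):
    """Favorable outcomes divided by total outcomes (assumes equally likely outcomes)."""
    outcomes = list(outcomes)
    favorable = sum(1 for o in outcomes if event(o))
    return Fraction(favorable, len(outcomes))

two_dice = list(product(range(1, 7), repeat=2))  # 6 * 6 = 36 ordered pairs
p_sum_7 = probability(lambda o: o[0] + o[1] == 7, two_dice)
print(len(two_dice), p_sum_7)  # 36 1/6
```

Using ordered pairs such as (1, 6) and (6, 1) keeps every outcome equally likely. Counting unordered pairs would break that assumption.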

Correlated Random Variables in Probabilistic Simulation

... where ΔE is the difference of the norm E before and after a random change. This probability distribution expresses the idea that a system in thermal equilibrium at temperature T has its energy distributed probabilistically among all the different energy states ΔE. The Boltzmann constant k_B relates temperature and ...
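The way temperature spreads probability across energy states can be sketched with a Boltzmann distribution. This sketch assumes the standard form p_i ∝ exp(-E_i / (k_B T)) and uses units in which k_B = 1. The three energy levels are hypothetical. It is not a reconstruction of the formula cut off from the snippet.

```python
import math

def boltzmann_probabilities(energies, temperature, k_b=1.0):
    """Probability of each energy state at the given temperature (k_b = 1 by assumption)."""
    e_min = min(energies)  # shifting by e_min avoids overflow; it cancels when normalizing
    weights = [math.exp(-(e - e_min) / (k_b * temperature)) for e in energies]
    z = sum(weights)  # partition function (up to the constant factor from the shift)
    return [wt / z for wt in weights]

levels = [0.0, 1.0, 2.0]
for t in (0.1, 1.0, 10.0):
    print(t, [round(p, 3) for p in boltzmann_probabilities(levels, t)])
```

At low temperature almost all probability sits on the lowest level. As T grows, the distribution flattens toward uniform.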

pptx - Neural Network and Machine Learning Laboratory

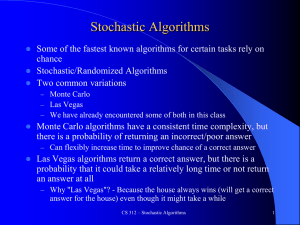

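... There is less than a 50% probability that a composite number c (other than a Carmichael number) passes the Fermat primality test for a randomly chosen base. So try the test k times with independent random bases: the probability that c passes all k trials is then less than 2^(-k). This is called Amplification of Stochastic Advantage ...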
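Amplification can be sketched by repeating the Fermat test with independent random bases. In this sketch the function name `fermat_test` and the choice of bases drawn uniformly from [2, n - 2] are assumptions. A `False` result is always correct. A `True` result only means "probably prime".

```python
import random

def fermat_test(n, k, rng=random):
    """False: n is certainly composite. True: n passed k rounds (probably prime)."""
    if n < 2:
        return False
    if n in (2, 3):
        return True
    for _ in range(k):
        a = rng.randint(2, n - 2)  # random base (assumed range)
        if pow(a, n - 1, n) != 1:
            return False  # a is a Fermat witness, so n is composite
    return True  # every round passed

rng = random.Random(1)
print(fermat_test(97, 20, rng), fermat_test(91, 20, rng))
```

Carmichael numbers such as 561 satisfy a^(n-1) ≡ 1 (mod n) for every base coprime to n. That is why the 50% bound above excludes them.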

Chapter 8 Notes

... Constructs the transitive closure T as the last matrix in the sequence of n-by-n matrices R(0), … , R(k), … , R(n), where R(k)[i,j] = 1 iff there is a nontrivial path from i to j with only the first k vertices allowed as intermediates. Note that R(0) = A (adjacency matrix) and R(n) = T (transitive closure) ...
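The sequence R(0), …, R(n) is Warshall's algorithm, with R(k)[i,j] = R(k-1)[i,j] or (R(k-1)[i,k] and R(k-1)[k,j]). This minimal sketch updates a single matrix in place. That is safe because row k and column k do not change during pass k. The four-vertex graph in the usage lines is a hypothetical example.

```python
def warshall(adjacency):
    """Transitive closure of a directed graph given as a 0/1 adjacency matrix."""
    n = len(adjacency)
    r = [row[:] for row in adjacency]  # R(0) = A; the input is not modified
    for k in range(n):  # now allow vertex k as an intermediate
        for i in range(n):
            if r[i][k]:  # a path i -> k exists using earlier intermediates
                for j in range(n):
                    if r[k][j]:  # and a path k -> j
                        r[i][j] = 1
    return r  # R(n) = T

# Hypothetical graph: 0 -> 1, 1 -> 3, 3 -> 0, 3 -> 2
A = [[0, 1, 0, 0],
     [0, 0, 0, 1],
     [0, 0, 0, 0],
     [1, 0, 1, 0]]
for row in warshall(A):
    print(row)
```

The algorithm runs in Θ(n³) time. Vertex 0 gets T[0][0] = 1 because it lies on the cycle 0 → 1 → 3 → 0, which is a nontrivial path.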

pdf

... Nonetheless, if we consider stronger consistency, such as domain consistency, the improvement is twofold: it provides better accuracy, since the variable biases rely only on supports that are domain consistent (rather than arc consistent, as in EMBP-a), and it is easily implementable for any constraint fo ...

Simulated annealing

Simulated annealing (SA) is a generic probabilistic metaheuristic for the global optimization problem of locating a good approximation to the global optimum of a given function in a large search space. It is often used when the search space is discrete (e.g., all tours that visit a given set of cities). For certain problems, simulated annealing may be more efficient than exhaustive enumeration, provided that the goal is merely to find an acceptably good solution in a fixed amount of time rather than the best possible solution.

The name and inspiration come from annealing in metallurgy, a technique involving heating and controlled cooling of a material to increase the size of its crystals and reduce their defects. Both are attributes of the material that depend on its thermodynamic free energy. Heating and cooling the material affects both the temperature and the thermodynamic free energy. While the same amount of cooling brings the same decrease in temperature, it brings a bigger or smaller decrease in the thermodynamic free energy depending on the cooling rate, with a slower rate producing a bigger decrease.

This notion of slow cooling is implemented in the simulated annealing algorithm as a slow decrease in the probability of accepting worse solutions as it explores the solution space. Accepting worse solutions is a fundamental property of metaheuristics because it allows for a more extensive search for the optimal solution.

The method was independently described by Scott Kirkpatrick, C. Daniel Gelatt and Mario P. Vecchi in 1983, and by Vlado Černý in 1985. It is an adaptation of the Metropolis–Hastings algorithm, a Monte Carlo method to generate sample states of a thermodynamic system, invented by M.N. Rosenbluth and published in a paper by N. Metropolis et al. in 1953.
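The acceptance rule and slow cooling described above can be sketched on a small travelling-salesman instance. Everything here is an illustrative assumption rather than a canonical implementation: the geometric cooling schedule (`t0`, `alpha`, `t_min`), the segment-reversal neighbour move, and the ten cities placed on a circle. Worse tours are accepted with the Metropolis probability exp(-Δ/t), so the chance of accepting them shrinks as t falls.

```python
import math
import random

def simulated_annealing(cost, neighbour, x0, rng, t0=1.0, alpha=0.995, t_min=1e-6, steps=20_000):
    """Minimize cost() from x0 using an assumed geometric cooling schedule."""
    x, fx = x0, cost(x0)
    best, fbest = x, fx
    t = t0
    for _ in range(steps):
        y = neighbour(x, rng)
        fy = cost(y)
        delta = fy - fx
        # Metropolis rule: always accept improvements, accept worse moves with probability exp(-delta / t).
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            x, fx = y, fy
            if fx < fbest:
                best, fbest = x, fx
        t = max(t * alpha, t_min)  # slow cooling, floored so t never reaches zero
    return best, fbest

def tour_length(tour, pts):
    """Length of the closed tour visiting pts in the given order."""
    return sum(math.dist(pts[tour[i]], pts[tour[(i + 1) % len(tour)]]) for i in range(len(tour)))

def reverse_segment(tour, rng):
    """Neighbour move: reverse a random segment of the tour (a 2-opt style move)."""
    i, j = sorted(rng.sample(range(len(tour)), 2))
    return tour[:i] + tour[i:j + 1][::-1] + tour[j + 1:]

rng = random.Random(42)
pts = [(math.cos(2 * math.pi * k / 10), math.sin(2 * math.pi * k / 10)) for k in range(10)]
start = list(range(10))
rng.shuffle(start)
best, length = simulated_annealing(lambda tour: tour_length(tour, pts), reverse_segment, start, rng)
print(best, round(length, 3))
```

For these points the optimal tour follows the circle, with length 20·sin(π/10) ≈ 6.18. That gives a reference point for judging the printed result. A slower schedule (`alpha` closer to 1) explores more but costs more steps.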