comparative analysis of parallel k means and parallel fuzzy c means

... degrade when the dataset grows larger in terms of number of objects and dimension. To obtain acceptable computational speed on huge datasets, most researchers turn to parallelizing scheme. A. K-MEANS CLUSTERING K-Means is a commonly used clustering algorithm used for data mining. Clustering is a mea ...

... degrade when the dataset grows larger in terms of number of objects and dimension. To obtain acceptable computational speed on huge datasets, most researchers turn to parallelizing scheme. A. K-MEANS CLUSTERING K-Means is a commonly used clustering algorithm used for data mining. Clustering is a mea ...

Time-focused density-based clustering of trajectories of moving

... from all the visited core objects. If none can be found, go to step 1 Output: reach-d() of all visited points ...

... from all the visited core objects. If none can be found, go to step 1 Output: reach-d() of all visited points ...

Algorithms of the Intelligent Web J

... networks overview 201 • A neural network fraud detector at work 203 The anatomy of the fraud detector neural network 208 • A base class for building general neural networks 214 ...

... networks overview 201 • A neural network fraud detector at work 203 The anatomy of the fraud detector neural network 208 • A base class for building general neural networks 214 ...

Spatio-temporal clustering methods

... we can use to compare our own results against. If the activity recognition system is not detecting movement (the person is standing still) and our collected GPS coordinates are consistently far apart, we may be facing a problem with GPS accuracy. In such cases, additional data sources should be cons ...

... we can use to compare our own results against. If the activity recognition system is not detecting movement (the person is standing still) and our collected GPS coordinates are consistently far apart, we may be facing a problem with GPS accuracy. In such cases, additional data sources should be cons ...

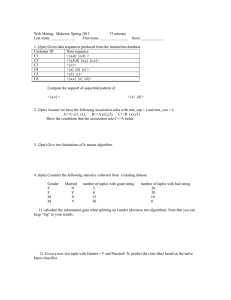

Homework 4

... 2. (2pts) Assume we have the following association rules with min_sup = s and min_con = c: A=>C (s1, c1), B=>A (s2,c2), C=>B (s3,c3) Show the conditions that the association rule C=>A holds. ...

... 2. (2pts) Assume we have the following association rules with min_sup = s and min_con = c: A=>C (s1, c1), B=>A (s2,c2), C=>B (s3,c3) Show the conditions that the association rule C=>A holds. ...

Spatial clustering paper

... We compared the performances of the two different clustering algorithms. Figure 2 shows the storm events detected during the time period from 1:00 pm to 1:15 pm. Figures 2a and 2c shows the k-means clustering results with k=2 and 3, respectively. Figures 2b and 2d shows the clustering results using ...

... We compared the performances of the two different clustering algorithms. Figure 2 shows the storm events detected during the time period from 1:00 pm to 1:15 pm. Figures 2a and 2c shows the k-means clustering results with k=2 and 3, respectively. Figures 2b and 2d shows the clustering results using ...

collaborative clustering: an algorithm for semi

... corresponding result sets obtained. These result sets are then refined using a systematic refinement approach to obtain result sets with similar structure and the same number of clusters. Then, a voting algorithm is used to unify the clusters into one single result set. Finally, a cluster labelling ...

... corresponding result sets obtained. These result sets are then refined using a systematic refinement approach to obtain result sets with similar structure and the same number of clusters. Then, a voting algorithm is used to unify the clusters into one single result set. Finally, a cluster labelling ...

Soft computing data mining - Indian Statistical Institute

... method to discover classification rules. In this approach, they develop two genetic algorithms specifically designed for discovering rules covering examples belonging to small disjuncts (i.e., a rule covering a small number of examples), whereas a conventional decision tree algorithm is used to produc ...

... method to discover classification rules. In this approach, they develop two genetic algorithms specifically designed for discovering rules covering examples belonging to small disjuncts (i.e., a rule covering a small number of examples), whereas a conventional decision tree algorithm is used to produc ...

CIS 498 Exercise for Salary Census Data (U.S. Only) Part I: Prepare

... Then create a mining structure with the following mining models: Naïve Bayes, Decision Tree, and Clustering. Assume that all attributes (other than the key) are used for input, except for the Salary attribute, which should be Predict Only in all models. Note: some models will ignore some of the inpu ...

... Then create a mining structure with the following mining models: Naïve Bayes, Decision Tree, and Clustering. Assume that all attributes (other than the key) are used for input, except for the Salary attribute, which should be Predict Only in all models. Note: some models will ignore some of the inpu ...

Selection of Initial Seed Values for K-Means Algorithm

... challenging role because of the curse of dimensionality [6]. The aim of clustering is to find structure in data and is therefore exploratory in nature. Clustering has a long and rich history in a variety of scientific fields. One of the most popular and simple clustering algorithms is K-Means. KMean ...

... challenging role because of the curse of dimensionality [6]. The aim of clustering is to find structure in data and is therefore exploratory in nature. Clustering has a long and rich history in a variety of scientific fields. One of the most popular and simple clustering algorithms is K-Means. KMean ...

Assignment 4b

... conditionally independent of each other so that fewer generalization errors would be made. The constraint of partitioning the data is impractical for most data sets. Other algorithms which try to overcome this requirement instead require time consuming cross validation techniques and if the original ...

... conditionally independent of each other so that fewer generalization errors would be made. The constraint of partitioning the data is impractical for most data sets. Other algorithms which try to overcome this requirement instead require time consuming cross validation techniques and if the original ...

Density Based Clustering - DBSCAN [Modo de Compatibilidade]

... DBSCAN can only result in a good clustering as good as its distance measure is in the function getNeighbors(P,epsilon). The most common distance metric used is the euclidean distance measure. Especially for high-dimensional data, this distance metric can be rendered almost useless due to the so call ...

... DBSCAN can only result in a good clustering as good as its distance measure is in the function getNeighbors(P,epsilon). The most common distance metric used is the euclidean distance measure. Especially for high-dimensional data, this distance metric can be rendered almost useless due to the so call ...

GF-DBSCAN: A New Efficient and Effective Data Clustering

... compared with other data in neighboring cells to generate a new cluster. Those clusters have different areas. The search points of Cluster 3 are seen only in the neighboring cells. In Fig.7(b), the new cluster embraces the edges of other clusters, which have core points in other clusters. Accordingl ...

... compared with other data in neighboring cells to generate a new cluster. Those clusters have different areas. The search points of Cluster 3 are seen only in the neighboring cells. In Fig.7(b), the new cluster embraces the edges of other clusters, which have core points in other clusters. Accordingl ...

Clustering Hierarchical Clustering

... CURE: the Ideas Each cluster has c representatives Choose c well scattered ppoints in the cluster Shrink them towards the mean of the cluster by a fraction of The representatives capture the physical shape and geometry of the cluster ...

... CURE: the Ideas Each cluster has c representatives Choose c well scattered ppoints in the cluster Shrink them towards the mean of the cluster by a fraction of The representatives capture the physical shape and geometry of the cluster ...

DSS Chapter 1 - Hossam Faris

... Clustering allows a user to make groups of data to determine patterns from the data. Advantage: when the data set is defined and a general pattern needs to be determined from the data. You can create a specific number of groups, depending on your business needs. One defining benefit of clustering ov ...

... Clustering allows a user to make groups of data to determine patterns from the data. Advantage: when the data set is defined and a general pattern needs to be determined from the data. You can create a specific number of groups, depending on your business needs. One defining benefit of clustering ov ...

![Density Based Clustering - DBSCAN [Modo de Compatibilidade]](http://s1.studyres.com/store/data/002454047_1-be20c7200358e2e97b22d406311261a6-300x300.png)