Generalized Cluster Aggregation

... Bayesian method [Wang et al., 2009]. Most of the traditional approaches treat each input clustering equally. Recently, some researchers proposed to weigh different clusterings differently when performing cluster aggregation to further improve the diversity and reduce the redundancy in combining the ...

Analysis of Mass Based and Density Based Clustering

... major data mining techniques are regression, classification and clustering. In this research paper we work only with clustering because it is the most important process when we have a very large database. We use Weka tools for clustering. Clustering is the task of assigning a set of ob ...

The Use of Heuristics in Decision Tree Learning Optimization

... show that the traditional algorithm has performed well in almost all the cases compared to the genetic algorithm, and training the classifier using the genetic algorithm takes longer than with the traditional algorithm. However, GAs are capable of solving a large variety of problems (e.g. noisy data) where tr ...

Attribute weighting in K-nearest neighbor classification Muhammad

... by analyzing and observing the data and results given to it and constantly updates this pattern whenever new data are presented to it. It is similar to the learning concept in human beings who make decisions based on their observations, that’s why it is called artificial intelligence. Machine learni ...

Chapter 9 - cse.sc.edu

... Adapt to the characteristics of the data set to find the natural clusters Use a dynamic model to measure the similarity between clusters – Main property is the relative closeness and relative interconnectivity of the cluster – Two clusters are combined if the resulting cluster shares certain propert ...

Clustering Categorical Data Streams

... stream, f is an integer representing its estimated frequency, and ∆ is the maximum possible error in f. Initially, D is empty. Whenever a new element e arrives, we first look up D to see whether an entry for e already exists. If the lookup succeeds, the entry is updated by incrementing its fr ...

Clustering Algorithms: Study and Performance

... collection of data items into clusters, such that items within a cluster are more similar to each other than they are to items in other clusters. They used k-means & k-medoid clustering algorithms and compared the performance of both on the IRIS data on the basis of time and space complexity. In this inv ...

Speeding up k-means Clustering by Bootstrap Averaging

... we show how bootstrap averaging with k-means can produce results comparable to clustering all of the data but in much less time. The approach of bootstrap (sampling with replacement) averaging consists of running k-means clustering to convergence on small bootstrap samples of the training data and a ...

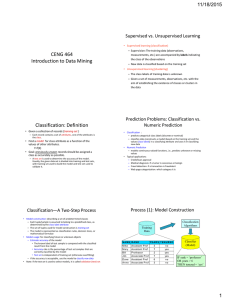

Data Mining - Computer Science Intranet

... repeat until no examples, or DL of ruleset >minDL(rulesets)+64, or error >50% GROW: add conditions until rule is 100% by IG PRUNE: prune last to first while worth metric W increases for each rule R, for each class C: split E into Grow/Prune remove all instances from Prune covered by other rules GROW ...

Review Paper on Clustering Techniques

... Abstract - The purpose of the data mining technique is to mine information from a bulky data set and transform it into a reasonable form for further use. Clustering is a significant task in data analysis and data mining applications. It is the task of arranging a set of objects so that o ...

Decision Tree Induction

... Dependencies among these cannot be modeled by Naïve Bayes Classifier How to deal with these dependencies? Bayesian Belief Networks ...

K-nearest neighbors algorithm

In pattern recognition, the k-Nearest Neighbors algorithm (or k-NN for short) is a non-parametric method used for classification and regression. In both cases, the input consists of the k closest training examples in the feature space. The output depends on whether k-NN is used for classification or regression:

In k-NN classification, the output is a class membership. An object is classified by a majority vote of its neighbors, with the object being assigned to the class most common among its k nearest neighbors (k is a positive integer, typically small). If k = 1, then the object is simply assigned to the class of that single nearest neighbor.

In k-NN regression, the output is the property value for the object. This value is the average of the values of its k nearest neighbors.

k-NN is a type of instance-based learning, or lazy learning, where the function is only approximated locally and all computation is deferred until classification. The k-NN algorithm is among the simplest of all machine learning algorithms.

Both for classification and regression, it can be useful to assign weight to the contributions of the neighbors, so that the nearer neighbors contribute more to the average than the more distant ones. For example, a common weighting scheme consists in giving each neighbor a weight of 1/d, where d is the distance to the neighbor.

The neighbors are taken from a set of objects for which the class (for k-NN classification) or the object property value (for k-NN regression) is known. This can be thought of as the training set for the algorithm, though no explicit training step is required.

A shortcoming of the k-NN algorithm is that it is sensitive to the local structure of the data. The algorithm has nothing to do with and is not to be confused with k-means, another popular machine learning technique.
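
The description above can be illustrated with a minimal sketch in plain Python. It is an illustration under stated assumptions, not a reference implementation: the function names (`k_nearest`, `knn_classify`, `knn_regress`), the choice of Euclidean distance, and the small constant `EPS` that guards against division by zero in the 1/d weighting are all assumptions introduced here.

```python
import math
from collections import defaultdict

EPS = 1e-12  # assumed guard so a neighbor at distance 0 does not divide by zero


def k_nearest(train, query, k):
    """Return the k (distance, target) pairs closest to `query`.

    `train` is a list of (feature_tuple, target) pairs. Euclidean distance
    is an assumption; any other metric could be substituted.
    """
    pairs = [(math.dist(features, query), target) for features, target in train]
    pairs.sort(key=lambda pair: pair[0])  # sort by distance only
    return pairs[:k]


def knn_classify(train, query, k=3, weighted=False):
    """Majority vote among the k nearest neighbors (optionally 1/d weighted)."""
    votes = defaultdict(float)
    for dist, label in k_nearest(train, query, k):
        votes[label] += 1.0 / (dist + EPS) if weighted else 1.0
    return max(votes, key=votes.get)


def knn_regress(train, query, k=3, weighted=False):
    """Average of the k nearest targets (optionally 1/d weighted)."""
    neighbors = k_nearest(train, query, k)
    weights = [1.0 / (dist + EPS) if weighted else 1.0 for dist, _ in neighbors]
    total = sum(w * target for w, (_, target) in zip(weights, neighbors))
    return total / sum(weights)


if __name__ == "__main__":
    # Hypothetical toy data: two well-separated groups in the plane.
    points = [((0.0, 0.0), "a"), ((0.0, 1.0), "a"), ((1.0, 0.0), "a"),
              ((5.0, 5.0), "b"), ((5.0, 6.0), "b")]
    print(knn_classify(points, (0.5, 0.5), k=3))
    print(knn_classify(points, (5.0, 5.4), k=1))
```

There is no training step: every call scans the stored examples, which matches the "lazy learning" characterization. With `weighted=True`, a single very close neighbor can outvote several distant ones, which is the effect the 1/d scheme is meant to produce.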