Classification Algorithms for Data Mining: A Survey

... The unknown sample is assigned the most common class among its k nearest neighbors. When k=1, the unknown sample is assigned the class of the training sample that is closest to it in pattern space. Nearest neighbor classifiers are instance-based or lazy learners in that they store all of the trainin ...

Genetic-Algorithm-Based Instance and Feature Selection

... Instance Selection and Construction for Data Mining Ch. 6 H. Ishibuchi, T. Nakashima, and M. Nii ...

Weka: An open source tool for data analysis and

... Weka: An open-source tool for data analysis and mining with machine learning Quantitative Data Analysis Colloquium Centenary College of Louisiana Mark Goadrich ...

Slide 1

... Idea standing behind k-nn Aim - finding k-similar objects to the one we are analyzing and eventually assigning appropriate decision Method - calculating distance from analyzed object to the others in our database and finding the closest ones ...

Dissertation Defense: Association Rule Mining and Classification

... Apriori or FP-Growth) generate if-then rules (i.e., frequent itemsets) for each class separately, which are then used in a majority voting classification scheme. However, generating a large number of rules is computationally time-consuming as data (feature) size gets larger. In addition, most of gen ...

Association Rule Mining - Indian Statistical Institute

... Question: Can there be more than one optimal? ...

Extending the Classification Paradigm to Temporal Domains

... included treating each frame-channel pair as an attribute, then using instance-based learning; using entropy measures for each frame-channel pair as a bias to instance-based learning; extracting templates and using a χ² test to determine usefulness to classification. ...

Chapter 5: k-Nearest Neighbor Algorithm Supervised vs

... TOC: Main questions of the k-NN algorithm • How do we measure distance? • How do we combine the information from more than one observation? – Should all points be weighted equally, or should some ...

Data Mining Reference Books Supervised vs. Unsupervised Learning

... training set and the values (class labels) in a classifying attribute and uses it in classifying new data • Numeric Prediction • models continuous-valued functions, i.e., predicts unknown or missing values ...

INFS 6510 – Competitive Intelligence Systems

... 1. How do association rules differ from traditional production rules? How are they the same? ...

On Demand Classification of Data Streams

... a part of the training stream the class labels are known a priori Nearest neighbor procedure (X ∈ Qfit) Find the closest micro-cluster in N(tc,h) to X ...

slides - UCLA Computer Science

... Pre-assign classes to obtain an approximate result and provide simple models/rules. Decompose the feature space to make classification decisions. Akin to wavelets. ...

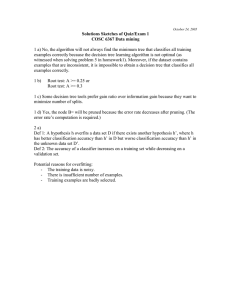

Exam/Quiz1

... examples correctly because the decision tree learning algorithm is not optimal (as witnessed when solving problem 5 in homework1). Moreover, if the dataset contains examples that are inconsistent, it is impossible to obtain a decision tree that classifies all examples correctly. 1 b) ...

Supervised Learning and k Nearest Neighbors

... Note that if k is too large, decision may be skewed Plain majority may unfairly skew decision Revise algorithm so that closer neighbors have greater “vote weight” ...

CSE5334 Data Mining

... – The set of stored records – Distance Metric to compute distance between records – The value of k, the number of nearest neighbors to retrieve ...

K-nearest neighbors algorithm

In pattern recognition, the k-nearest neighbors algorithm (k-NN for short) is a non-parametric method used for classification and regression. In both cases, the input consists of the k closest training examples in the feature space. The output depends on whether k-NN is used for classification or regression.

In k-NN classification, the output is a class membership. An object is classified by a majority vote of its neighbors: it is assigned to the class most common among its k nearest neighbors (k is a positive integer, typically small). If k = 1, the object is simply assigned to the class of its single nearest neighbor.

In k-NN regression, the output is the property value for the object. This value is the average of the values of its k nearest neighbors.

k-NN is a type of instance-based learning, or lazy learning, in which the function is only approximated locally and all computation is deferred until classification. The k-NN algorithm is among the simplest of all machine learning algorithms.

For both classification and regression, it can be useful to weight the contributions of the neighbors so that nearer neighbors contribute more to the result than more distant ones. For example, a common weighting scheme gives each neighbor a weight of 1/d, where d is the distance to the neighbor.

The neighbors are taken from a set of objects for which the class (for k-NN classification) or the object property value (for k-NN regression) is known. This set can be thought of as the training set for the algorithm, though no explicit training step is required.

A shortcoming of the k-NN algorithm is that it is sensitive to the local structure of the data. The algorithm is unrelated to, and should not be confused with, k-means, another popular machine learning technique.
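
The classification and regression rules described above can be illustrated with a minimal Python sketch. It is not a reference implementation. It assumes Euclidean distance, a training set given as a list of (feature tuple, target) pairs, and a small epsilon added to the distance so that the 1/d weight stays finite when a query coincides with a training point. The function names (`k_nearest`, `neighbor_weight`, `knn_classify`, `knn_regress`) are hypothetical and chosen only for illustration.

```python
import math
from collections import defaultdict


def k_nearest(train, query, k):
    """Return the k (distance, target) pairs closest to query.

    Assumption: Euclidean distance; train is a list of (features, target).
    """
    dists = [(math.dist(x, query), y) for x, y in train]
    # Sort on distance only, so targets never need to be comparable.
    return sorted(dists, key=lambda pair: pair[0])[:k]


def neighbor_weight(d, weighted):
    """1/d weight from the text, or a uniform weight of 1.

    Assumption: the 1e-12 term only guards against division by zero.
    """
    return 1.0 / (d + 1e-12) if weighted else 1.0


def knn_classify(train, query, k=3, weighted=False):
    """Majority vote (optionally distance-weighted) among the k neighbors."""
    votes = defaultdict(float)
    for d, label in k_nearest(train, query, k):
        votes[label] += neighbor_weight(d, weighted)
    return max(votes, key=votes.get)


def knn_regress(train, query, k=3, weighted=False):
    """Average (optionally distance-weighted) of the k neighbors' values."""
    neighbors = k_nearest(train, query, k)
    weights = [neighbor_weight(d, weighted) for d, _ in neighbors]
    total = sum(w * y for w, (_, y) in zip(weights, neighbors))
    return total / sum(weights)
```

With the training set `[((0.0,), "a"), ((2.0,), "b"), ((2.1,), "b")]`, the query `(0.1,)` and k = 3, the plain majority vote picks "b" (two votes to one). The 1/d weighting picks "a", because the single very close neighbor outweighs the two distant ones. Nothing is computed until a query arrives, which reflects the lazy-learning character noted above.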