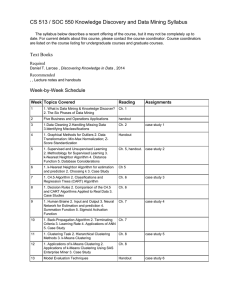

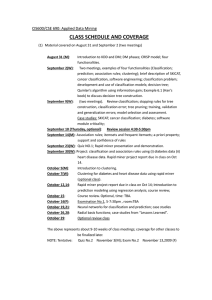

CS 513 / SOC 550 Knowledge Discovery and Data Mining Syllabus

... Ch. 7: 1. Back-Propagation Algorithm 2. Terminating Criteria 3. Learning Rate 4. Applications of ANN 5. Case Study ...

Data Mining and Machine Learning

... • However, same top-down, divide-and-conquer approach • Begin with the end values and then choose the attribute with the most “positive instances” ...

Discriminative Classifiers

... the test point x and the support vectors x_i • Solving the optimization problem also involves computing the inner products x_i · x_j between all pairs of training points (C. Burges, A Tutorial on Support Vector Machines for Pattern Recognition, Data Mining and Knowledge Discovery, 1998) ...
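
The snippet says that classifying a point only needs inner products between the test point and the support vectors. Here is a minimal Python sketch of a linear SVM decision function, f(x) = Σ_i α_i y_i (x_i · x) + b. It also builds the Gram matrix of pairwise inner products x_i · x_j. The function names and toy values are illustrative assumptions, not taken from the Burges tutorial. The multipliers α_i and the bias b are hard-coded rather than obtained by solving the optimization problem.

```python
def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def gram_matrix(points):
    """All pairwise inner products x_i . x_j, as used when solving the SVM dual."""
    return [[dot(xi, xj) for xj in points] for xi in points]


def svm_decision(x, support_vectors, labels, alphas, b):
    """Linear SVM decision value f(x) = sum_i alpha_i * y_i * (x_i . x) + b.

    The sign of the result is the predicted class (+1 or -1). Only inner
    products between x and the support vectors are required.
    """
    return sum(a * y * dot(sv, x)
               for sv, y, a in zip(support_vectors, labels, alphas)) + b
```

Consider the toy set (1, 1) labelled +1 and (-1, -1) labelled -1. For it, α_1 = α_2 = 0.25 with b = 0 is the maximum-margin solution (w = (0.5, 0.5)). So `svm_decision((2, 0), ...)` returns 1.0 and `svm_decision((-1, 0), ...)` returns -0.5. Because only inner products appear, swapping `dot` for a kernel function gives the kernelized form.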

Classification problem, case based methods, naïve Bayes

... Instances represented as points in a Euclidean space. Locally weighted regression Constructs local approximation Case-based reasoning Uses symbolic representations and knowledge-based inference ...
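
Locally weighted regression builds a separate local approximation around each query point. The Python sketch below shows one common variant for a single input variable. It assumes a Gaussian weighting kernel with bandwidth `tau` and fits a weighted least-squares line. The kernel, the `tau` default and the function name are assumptions for illustration, not details from the source.

```python
import math


def lwr_predict(x0, xs, ys, tau=1.0):
    """Locally weighted linear regression evaluated at query x0 (1-D inputs).

    Each training point gets a Gaussian weight exp(-(x_i - x0)^2 / (2 tau^2));
    a weighted least-squares line is then fitted and evaluated at x0.
    """
    ws = [math.exp(-((x - x0) ** 2) / (2 * tau ** 2)) for x in xs]
    sw = sum(ws)
    # Weighted means of x and y
    x_bar = sum(w * x for w, x in zip(ws, xs)) / sw
    y_bar = sum(w * y for w, y in zip(ws, ys)) / sw
    # Weighted slope; fall back to a flat line if the weighted x-variance is zero
    sxx = sum(w * (x - x_bar) ** 2 for w, x in zip(ws, xs))
    sxy = sum(w * (x - x_bar) * (y - y_bar) for w, x, y in zip(ws, xs, ys))
    slope = sxy / sxx if sxx > 0 else 0.0
    return y_bar + slope * (x0 - x_bar)
```

With noise-free data on y = 2x + 1, the local line reproduces the global one. So `lwr_predict(2.5, [0, 1, 2, 3, 4], [1, 3, 5, 7, 9])` returns 6.0 up to rounding. A smaller `tau` makes the fit more local; a larger `tau` approaches ordinary least squares.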

(I) Predictive Analytics (II) Inferential Statistics and Prescriptive

... Introduction to big data and challenges for big data analytics. (III) Lab work 7. Implementation of the following methods using R or Matlab (one of the class tests, with a weightage of 15 marks, will be used to examine these implementations): Simple and multiple linear regression, Logistic regression, Linear ...

Mining massive datasets

... The students will attain an in-depth understanding of machine learning and data mining techniques for massive data sets. They will be able to successfully apply machine learning algorithms when solving real problems concerning business intelligence, social networks, and web data description. They w ...

PDF

... – Applies only to binary classification problems (classes pos/neg) – Precision: Fraction (or percentage) of correct predictions among all examples predicted to be positive – Recall: Fraction (or percentage) of correct predictions among all positive examples ...
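
Precision and recall follow directly from three counts: true positives, predicted positives and actual positives. That is, precision = TP / (TP + FP) and recall = TP / (TP + FN). The minimal Python sketch below assumes labels are given as the strings "pos"/"neg". It returns 0.0 when a denominator is zero, which is a common convention rather than one stated in the source. The function name is hypothetical.

```python
def precision_recall(y_true, y_pred, positive="pos"):
    """Return (precision, recall) for a binary pos/neg problem."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    predicted_pos = sum(1 for p in y_pred if p == positive)  # TP + FP
    actual_pos = sum(1 for t in y_true if t == positive)     # TP + FN
    precision = tp / predicted_pos if predicted_pos else 0.0
    recall = tp / actual_pos if actual_pos else 0.0
    return precision, recall
```

For example, take `y_true = ["pos", "pos", "neg", "neg", "pos"]` and `y_pred = ["pos", "neg", "pos", "neg", "neg"]`. There is one true positive out of two predicted positives and three actual positives, so the function returns (0.5, 0.333...).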

On Comparing Classifiers: Pitfalls to Avoid and a Recommended

... • Data mining researchers often use classifiers to identify important classes of objects within a data repository. Classification is particularly useful when a database contains examples that can be used as the basis for future decision making; e.g., for assessing credit risks, for medical diagnosis ...

... • Data mining researchers often use classifiers to identify important classes of objects within a data repository. Classification is particularly useful when a database contains examples that can be used as the basis for future decision making; e.g., for assessing credit risks, for medical diagnosis ...

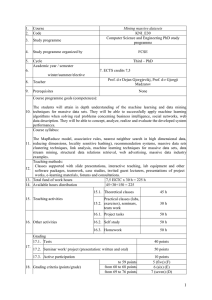

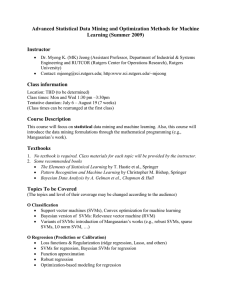

COURSE SYLLABUS

... Topics to be covered (the topics and the level of their coverage may be changed according to the audience): • Classification: Support vector machines (SVMs); Convex optimization for machine learning; Bayesian version of SVMs: Relevance vector machine (RVM); Variants of SVMs: introduction of Mangasaria ...

... Topics To be Covered (The topics and level of their coverage may be changed according to the audience) O Classification Support vector machines (SVMs), Convex optimization for machine learning Bayesian version of SVMs: Relevance vector machine (RVM) Variants of SVMs: introduction of Mangasaria ...

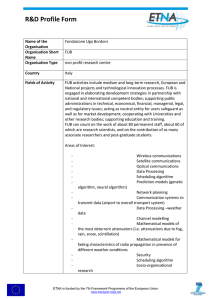

Name of the Organisation

... Mathematical models of the most deterrent attenuators (i.e. attenuations due to fog, rain, snow, scintillation) Mathematical models for fading characteristics of radio propagation in presence of different weather conditions Security Scheduling algorithm Socio-organisational research ...

Performance Evaluation of Different Data Mining Classification

... etc. The nearest-neighbors algorithm is considered a statistical learning algorithm; it is extremely simple to implement and lends itself to a wide variety of variations. The k-nearest neighbors algorithm is among the simplest of all machine learning algorithms. An object is classified by ...

... etc. Nearest neighbors algorithm is considered as statistical learning algorithms and it is extremely simple to implement and leaves itself open to a wide variety of variations. The k-nearest neighbors’ algorithm is amongest the simplest of all machine learning algorithms. An object is classified by ...

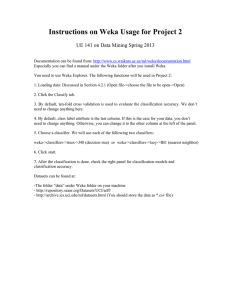

CSE/CIS 787: Analytical Data Mining

... 2. CRISP model; description of each phase. 3. Description of the four functionalities in DM: classification, prediction, association rules, clustering. Part B: Classification (similar to assignment No. 2) (50%) 1. Classification: the two steps of developing and using a classification model 2. Decision tree ...

... 2. CRISP model; description of each phase. 3. Description of the four functionalities in DM; classification; prediction; association rules; clustering. PartB: Classification (similar to assignment No.2) (50%) 1. Classification, 2-steps of development and use of classification model 2. Decision tree ...

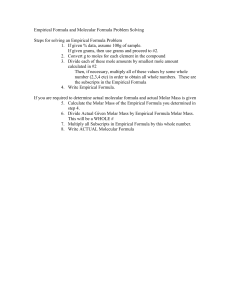

CS 634 DATA MINING QUESTION 1 [Time Series Data Mining] (A

... (B) Consider the task of building a decision tree classifier from random data, where the attribute values are generated randomly irrespective of the class labels. Assume the data set contains records from two classes, “+” and “−”. Half of the data set is used for training while the remaining half is ...

... (B) Consider the task of building a decision tree classifier from random data, where the attribute values are generated randomly irrespective of the class labels. Assume the data set contains records from two classes, “+” and “−”. Half of the data set is used for training while the remaining half is ...

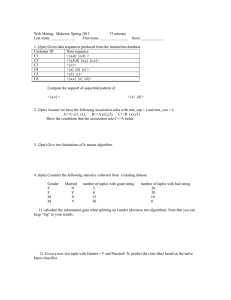

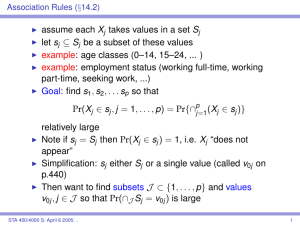

Homework 4

... 2. (2pts) Assume we have the following association rules with min_sup = s and min_con = c: A=>C (s1, c1), B=>A (s2, c2), C=>B (s3, c3). Show the conditions under which the association rule C=>A holds. ...
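
The exercise relies on the standard definitions of support and confidence: sup(X ⇒ Y) = sup(X ∪ Y) and conf(X ⇒ Y) = sup(X ∪ Y) / sup(X). The Python sketch below computes both over a list of transactions. The helper names and the transaction data are hypothetical. The code illustrates the definitions and does not answer the exercise.

```python
def support(itemset, transactions):
    """Fraction of transactions containing every item of `itemset`."""
    items = set(itemset)
    return sum(1 for t in transactions if items <= set(t)) / len(transactions)


def confidence(antecedent, consequent, transactions):
    """conf(X => Y) = sup(X ∪ Y) / sup(X); returns 0.0 if X never occurs."""
    sup_x = support(antecedent, transactions)
    if sup_x == 0:
        return 0.0
    return support(set(antecedent) | set(consequent), transactions) / sup_x


def rule_holds(antecedent, consequent, transactions, min_sup, min_conf):
    """X => Y holds when sup(X ∪ Y) >= min_sup and conf(X => Y) >= min_conf."""
    sup_rule = support(set(antecedent) | set(consequent), transactions)
    return (sup_rule >= min_sup
            and confidence(antecedent, consequent, transactions) >= min_conf)
```

Take the hypothetical transactions `[{"A", "B", "C"}, {"A", "C"}, {"B", "C"}, {"A", "B"}, {"C"}]`. The rule C=>A has support 2/5 = 0.4 and confidence 0.4 / 0.8 = 0.5. It therefore holds for min_sup = 0.3 and min_conf = 0.5, but not for min_conf = 0.6.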

Class Slides - Pitt Department of Biomedical Informatics

... Uses the logistic function, whose output lies between 0 and 1 ...
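
The logistic function σ(z) = 1 / (1 + e^(-z)) maps any real score into the interval (0, 1). That is why it can turn a linear score into a probability. Below is a small Python sketch. Branching on the sign of z to avoid overflow in `math.exp` is a common implementation choice, assumed here rather than taken from the slides.

```python
import math


def logistic(z):
    """Logistic (sigmoid) function 1 / (1 + e^(-z)).

    Mathematically the output is strictly between 0 and 1; in floating point,
    very large |z| rounds to exactly 0.0 or 1.0.
    """
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # For negative z, rewrite as e^z / (1 + e^z) so math.exp never overflows
    ez = math.exp(z)
    return ez / (1.0 + ez)
```

In logistic regression the score would be z = w · x + b, and `logistic(z)` is then read as the probability of the positive class. Note that `logistic(0)` is exactly 0.5 and that σ(-z) = 1 - σ(z).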

K-nearest neighbors algorithm

In pattern recognition, the k-nearest neighbors algorithm (k-NN for short) is a non-parametric method used for classification and regression. In both cases, the input consists of the k closest training examples in the feature space. The output depends on whether k-NN is used for classification or regression.

In k-NN classification, the output is a class membership. An object is classified by a majority vote of its neighbors and is assigned to the class most common among its k nearest neighbors (k is a positive integer, typically small). If k = 1, the object is simply assigned to the class of that single nearest neighbor. In k-NN regression, the output is the property value for the object. This value is the average of the values of its k nearest neighbors.

k-NN is a type of instance-based learning, or lazy learning, in which the function is only approximated locally and all computation is deferred until classification. The k-NN algorithm is among the simplest of all machine learning algorithms.

For both classification and regression, it can be useful to weight the contributions of the neighbors so that nearer neighbors contribute more to the average than more distant ones. For example, a common weighting scheme gives each neighbor a weight of 1/d, where d is the distance to that neighbor.

The neighbors are taken from a set of objects for which the class (for k-NN classification) or the object property value (for k-NN regression) is known. This can be thought of as the training set for the algorithm, though no explicit training step is required.

A shortcoming of the k-NN algorithm is that it is sensitive to the local structure of the data. The algorithm is unrelated to, and should not be confused with, k-means, another popular machine learning technique.
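
The classification and regression rules described above, including the optional 1/d weighting, can be sketched in a few lines of Python. This is a brute-force illustration under three assumptions:

- Distance is Euclidean, via `math.dist` (Python 3.8+).
- Ties in the vote go to the label whose first vote came from the nearest neighbor.
- An exact match (d = 0) short-circuits the 1/d weights.

The function names are hypothetical.

```python
import math
from collections import defaultdict


def k_nearest(query, points, k):
    """Return the k (distance, index) pairs closest to `query` (Euclidean distance)."""
    dists = sorted((math.dist(query, p), i) for i, p in enumerate(points))
    return dists[:k]


def knn_classify(query, points, labels, k=3, weighted=False):
    """Majority vote among the k nearest neighbors; optionally weight each vote by 1/d."""
    votes = defaultdict(float)
    for d, i in k_nearest(query, points, k):
        if weighted and d == 0:
            return labels[i]  # exact match: 1/d is undefined, so use its label
        votes[labels[i]] += 1.0 / d if weighted else 1.0
    # Neighbors are visited nearest-first, so on a tie max() keeps the label inserted first.
    return max(votes, key=votes.get)


def knn_regress(query, points, values, k=3, weighted=False):
    """Average of the k nearest neighbors' values; optionally a 1/d-weighted average."""
    neighbors = k_nearest(query, points, k)
    if not weighted:
        return sum(values[i] for _, i in neighbors) / len(neighbors)
    for d, i in neighbors:
        if d == 0:
            return values[i]  # exact match dominates the weighted average
    weights = [1.0 / d for d, _ in neighbors]
    total = sum(w * values[i] for w, (_, i) in zip(weights, neighbors))
    return total / sum(weights)
```

Consider a query at 0.25 between a point at 0.0 (value 0) and a point at 1.0 (value 12). With `weighted=True` the two neighbors get weights 4 and 4/3, giving a prediction of 3.0 instead of the unweighted 6.0. The linear scan keeps the sketch short; it is not tuned for large data sets.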
