Artificial Intelligence: - Computer Science, Stony Brook University

... The Gaussian mixture model for example, allows us to detect moving objects. This is done by classifying the captured objects and background pixels with different colors. Gaussin below:Image Source: http://www.mathworks.com/discovery/pattern-recognition.html?requestedDomain=www.mathworks.com Markow r ...

... The Gaussian mixture model for example, allows us to detect moving objects. This is done by classifying the captured objects and background pixels with different colors. Gaussin below:Image Source: http://www.mathworks.com/discovery/pattern-recognition.html?requestedDomain=www.mathworks.com Markow r ...

An Artificial Intelligence Approach Towards Sensorial

... resource-intensive approaches are called for. Especially if smart structures reacting to stimuli from their environment [5] are desired, a spatial distribution of numerous simple, miniaturized, low-power evaluation units over the entire structure seems advisable. As is the case for a human accidenta ...

... resource-intensive approaches are called for. Especially if smart structures reacting to stimuli from their environment [5] are desired, a spatial distribution of numerous simple, miniaturized, low-power evaluation units over the entire structure seems advisable. As is the case for a human accidenta ...

novel sequence representations Reliable prediction of T

... comparable performance. In the following we will use the Blosum50 matrix when we refer to Blosum sequence encoding. A last encoding scheme is defined in terms of a hidden Markov model. The details of this encoding are described later in section 3.3. The sparse versus the Blosum sequence-encoding sch ...

... comparable performance. In the following we will use the Blosum50 matrix when we refer to Blosum sequence encoding. A last encoding scheme is defined in terms of a hidden Markov model. The details of this encoding are described later in section 3.3. The sparse versus the Blosum sequence-encoding sch ...

Hebbian Learning of Bayes Optimal Decisions

... inference through an approximate implementation of the Belief Propagation algorithm (see [3]) in a network of spiking neurons. For reduced classes of probability distributions, [4] proposed a method for spiking network models to learn Bayesian inference with an online approximation to an EM algorith ...

... inference through an approximate implementation of the Belief Propagation algorithm (see [3]) in a network of spiking neurons. For reduced classes of probability distributions, [4] proposed a method for spiking network models to learn Bayesian inference with an online approximation to an EM algorith ...

A Feedback Model of Visual Attention

... modulate the activity of neurons rather than weights of synapses (Salinas and Thier, 2000; Salinas and Abbott, 1997). This mechanism has previously been modelled by allowing the activity generated by stimulation of the receptive field to be multiplicatively modulated by the response to a separate se ...

... modulate the activity of neurons rather than weights of synapses (Salinas and Thier, 2000; Salinas and Abbott, 1997). This mechanism has previously been modelled by allowing the activity generated by stimulation of the receptive field to be multiplicatively modulated by the response to a separate se ...

PDF file

... emphasizing the visual attention selection in a dynamic visual scene. Instead of directly using some low level features like orientation and intensity, they accommodated additional processing to find middle level features, e.g., symmetry and eccentricity, to build the feature map. Volitional shifts ...

... emphasizing the visual attention selection in a dynamic visual scene. Instead of directly using some low level features like orientation and intensity, they accommodated additional processing to find middle level features, e.g., symmetry and eccentricity, to build the feature map. Volitional shifts ...

Retrieval of the diffuse attenuation coefficient Kd(λ)

... Purely empirical method Non-linear inversion Universal approximator of any derivable function Can handle “easily” noise and outliers Taking more spectral information ...

... Purely empirical method Non-linear inversion Universal approximator of any derivable function Can handle “easily” noise and outliers Taking more spectral information ...

Expert System Used on Materials Processing

... specialists called it. This approach allows solving a problem by using a preset computing scheme which applies to some structures well-known for input information and produces a result that keep to program operations sequence made within computing scheme. Yet, there is another category of problems w ...

... specialists called it. This approach allows solving a problem by using a preset computing scheme which applies to some structures well-known for input information and produces a result that keep to program operations sequence made within computing scheme. Yet, there is another category of problems w ...

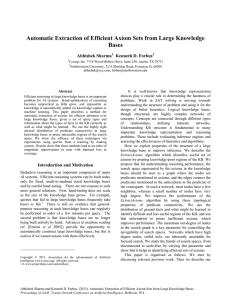

Automatic Extraction of Efficient Axiom Sets from Large Knowledge

... search space below it is less relevant7. Moreover genlPreds inference is typically easier than inference with normal axioms. Therefore the function AllFacts(p), defined above, provides a good measure for identifying predicates which should be included. Our results below show that this heuristic can ...

... search space below it is less relevant7. Moreover genlPreds inference is typically easier than inference with normal axioms. Therefore the function AllFacts(p), defined above, provides a good measure for identifying predicates which should be included. Our results below show that this heuristic can ...

The speed of learning instructed stimulus

... Keywords: Rapid instructed task learning, Pre-frontal cortex, Inferior-temporal Cortex, Hippocampus, synaptic learning Abstract Humans can learn associations between visual stimuli and motor responses from just a single instruction. This is known to be a fast process, but how fast is it? To answer t ...

... Keywords: Rapid instructed task learning, Pre-frontal cortex, Inferior-temporal Cortex, Hippocampus, synaptic learning Abstract Humans can learn associations between visual stimuli and motor responses from just a single instruction. This is known to be a fast process, but how fast is it? To answer t ...

*αí *ß>* *p "* " G6*ç"ê"ë"è"ï"î"ì"Ä"Å"É"æ"Æ"ô"ö"ò"û"ù"ÿ"Ö"Ü

... In the following sections of this report there is a description of how the components of the system architecture have been implemented. These modules and models were developed as collaborations between the computational partners of Nancy, Ulm and Sunderland. This was done based on motivation from th ...

... In the following sections of this report there is a description of how the components of the system architecture have been implemented. These modules and models were developed as collaborations between the computational partners of Nancy, Ulm and Sunderland. This was done based on motivation from th ...

Slides - Neural Network Research Group

... • Hidden states disambiguated through memory – Recurrency in neural networks 88 ...

... • Hidden states disambiguated through memory – Recurrency in neural networks 88 ...

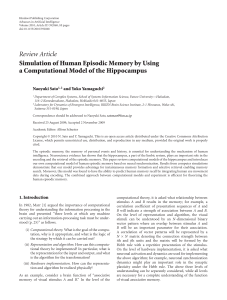

392868

... In this section, a computational model of the episodic memory based on neural synchronization of phase precession [43] is reviewed. 4.1. Representation of Object and Scene Information. Figure 2(a) shows the information flow of the model that follows experimental proposals [13, 17]. Retinal informati ...

... In this section, a computational model of the episodic memory based on neural synchronization of phase precession [43] is reviewed. 4.1. Representation of Object and Scene Information. Figure 2(a) shows the information flow of the model that follows experimental proposals [13, 17]. Retinal informati ...

Comparison of Handwriting characters Accuracy using

... including Thai handwritten characters (65 classes), Bangla numeric (10 classes) and MNIST (10 classes). The data sets are then classified by the k-Nearest Neighbors algorithm using the Euclidean distance as function for computing distances between data points. In that study, the classification rates ...

... including Thai handwritten characters (65 classes), Bangla numeric (10 classes) and MNIST (10 classes). The data sets are then classified by the k-Nearest Neighbors algorithm using the Euclidean distance as function for computing distances between data points. In that study, the classification rates ...

original

... bp/rp = number of black/red pieces; bk/rk = number of black/red kings; = number of black/red pieces threatened (can be taken on next turn) CIS 530 / 730: Artificial Intelligence ...

... bp/rp = number of black/red pieces; bk/rk = number of black/red kings; = number of black/red pieces threatened (can be taken on next turn) CIS 530 / 730: Artificial Intelligence ...

Removing some `A` from AI: Embodied Cultured Networks

... may be used to control a robot to handle a specific task. Using one of these response properties, we created a system that could achieve the goal [26]. Networks stimulated with pairs of electrical stimuli applied at different electrodes reliably produce a nonlinear response, as a function of inter-s ...

... may be used to control a robot to handle a specific task. Using one of these response properties, we created a system that could achieve the goal [26]. Networks stimulated with pairs of electrical stimuli applied at different electrodes reliably produce a nonlinear response, as a function of inter-s ...

Neuronal oscillations and brain wave dynamics in a LIF model

... also produce periodic output? Using the same model and configuration, the only thing that was changed was that the input string now determined the chance that the input neuron would fire. So a 5 would be a 50% of firing, and a 9 90%. Input strings were changed to match the amount of incoming charge ...

... also produce periodic output? Using the same model and configuration, the only thing that was changed was that the input string now determined the chance that the input neuron would fire. So a 5 would be a 50% of firing, and a 9 90%. Input strings were changed to match the amount of incoming charge ...

Ensemble Learning

... For example, even if there exists a unique best hypothesis, it might be difficult to achieve since running the algorithms result in sub-optimal hypotheses. Thus, ensembles can compensate for such imperfect search processes. The third reason is that, the hypothesis space being searched might not cont ...

... For example, even if there exists a unique best hypothesis, it might be difficult to achieve since running the algorithms result in sub-optimal hypotheses. Thus, ensembles can compensate for such imperfect search processes. The third reason is that, the hypothesis space being searched might not cont ...

Catastrophic interference

Catastrophic Interference, also known as catastrophic forgetting, is the tendency of a artificial neural network to completely and abruptly forget previously learned information upon learning new information. Neural networks are an important part of the network approach and connectionist approach to cognitive science. These networks use computer simulations to try and model human behaviours, such as memory and learning. Catastrophic interference is an important issue to consider when creating connectionist models of memory. It was originally brought to the attention of the scientific community by research from McCloskey and Cohen (1989), and Ractcliff (1990). It is a radical manifestation of the ‘sensitivity-stability’ dilemma or the ‘stability-plasticity’ dilemma. Specifically, these problems refer to the issue of being able to make an artificial neural network that is sensitive to, but not disrupted by, new information. Lookup tables and connectionist networks lie on the opposite sides of the stability plasticity spectrum. The former remains completely stable in the presence of new information but lacks the ability to generalize, i.e. infer general principles, from new inputs. On the other hand, connectionst networks like the standard backpropagation network are very sensitive to new information and can generalize on new inputs. Backpropagation models can be considered good models of human memory insofar as they mirror the human ability to generalize but these networks often exhibit less stability than human memory. Notably, these backpropagation networks are susceptible to catastrophic interference. This is considered an issue when attempting to model human memory because, unlike these networks, humans typically do not show catastrophic forgetting. Thus, the issue of catastrophic interference must be eradicated from these backpropagation models in order to enhance the plausibility as models of human memory.