ANOVA

... Within-Treatments Variability: MSwithin measures the size of the differences that exist inside each of the samples. Because all the individuals in a sample receive exactly the same treatment, any differences (or variance) within a sample cannot be caused by different treatments. Thus, these di ...
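As a minimal sketch (Python, with hypothetical data and a hypothetical function name `ms_within`), MS_within is the pooled within-sample sum of squares divided by its degrees of freedom, N − k. Adding a constant to every score in one sample, which mimics a treatment effect, leaves MS_within unchanged, because only deviations from each sample's own mean enter the calculation.

```python
def ms_within(groups):
    """Mean square within treatments: SS_within / (N - k).

    Each group is a list of scores from one treatment condition.
    Only deviations from each group's own mean are used, so the
    result does not depend on how far apart the group means are.
    """
    ss_within = 0.0
    n_total = 0
    for g in groups:
        mean = sum(g) / len(g)
        ss_within += sum((x - mean) ** 2 for x in g)
        n_total += len(g)
    df_within = n_total - len(groups)  # N - k
    return ss_within / df_within


# Hypothetical data: three treatments, three scores each.
groups = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
print(ms_within(groups))  # → 1.0

# Shifting one whole sample (a "treatment effect") leaves MS_within unchanged.
shifted = [[1, 2, 3], [4, 5, 6], [17, 18, 19]]
print(ms_within(shifted))  # → 1.0
```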

Power and Sample Size for the Two-sample t

... determining the required sample size to achieve a specified level of power for a given difference between the mean change under the stated H₀ and under the stated H₁, the standard deviation of the change, and the α-level. By varying these four quantities we can obtain a set of sample size curves that show ...
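How these quantities trade off can be sketched with the common normal-approximation formula for the per-group sample size in a two-sided, two-sample comparison of means, n = 2(z₁₋α/₂ + z₁₋β)²σ²/Δ², where Δ is the difference in means and σ the standard deviation. This is an assumed approximation, not necessarily the method the source uses: it ignores the t-distribution correction and so tends to come out slightly below an exact t-based calculation. The function name `n_per_group` is hypothetical.

```python
import math
from statistics import NormalDist


def n_per_group(delta, sigma, alpha=0.05, power=0.80):
    """Approximate per-group n for a two-sided two-sample test of means.

    Normal approximation: n = 2 * (z_{1-alpha/2} + z_{power})^2 * sigma^2 / delta^2,
    rounded up to the next whole subject.
    """
    z = NormalDist()  # standard normal
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    n = 2 * (z_alpha + z_beta) ** 2 * sigma ** 2 / delta ** 2
    return math.ceil(n)


# A small "sample size curve": n versus the difference to detect, sigma = 1.
for delta in (0.25, 0.5, 1.0):
    print(delta, n_per_group(delta, sigma=1.0))
```

Re-running the loop with a different `sigma`, `alpha`, or `power` produces the other curves in the family.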

Chapter 6

... Sampling Error • Sampling error: the difference between the point estimate and the actual population parameter value. • For μ, the sampling error is the difference x̄ − μ; μ is generally unknown, and x̄ varies from sample to sample ...
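In a simulation the population mean μ is known, so the sampling error x̄ − μ can be observed directly. The sketch below (Python; the population of the integers 1 to 100 and the function name `sampling_errors` are hypothetical) draws repeated random samples without replacement and records x̄ − μ for each one, showing that the error varies from sample to sample and vanishes when the "sample" is the entire population.

```python
import random
import statistics


def sampling_errors(population, n, reps, seed=0):
    """Return x_bar - mu for `reps` random samples of size n (without replacement)."""
    rng = random.Random(seed)  # seeded so the illustration is reproducible
    mu = statistics.fmean(population)  # known here only because we simulate
    return [statistics.fmean(rng.sample(population, n)) - mu for _ in range(reps)]


population = list(range(1, 101))  # hypothetical population, mu = 50.5
for err in sampling_errors(population, n=10, reps=5):
    print(round(err, 2))
```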

... Sampling Error Sampling error • The difference between the point estimate and the actual population parameter value. • For μ: the sampling error is the difference x – μ μ is generally unknown x varies from sample to sample ...

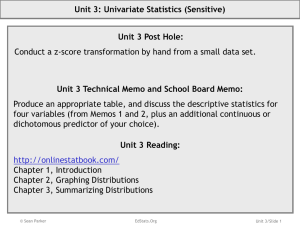

Unit 3 - Schools Count

... When discussing univariate descriptive statistics (or any statistics for that matter), make sure the audience has enough information to draw the right conclusion (or at least enough information to not draw the wrong conclusion!). ...

MINITAB BASICS

... members are a random sample taken from a population with mean 65, against the alternative that the mean is different from 65. Assume the population standard deviation is 2.5.
1. Choose Stat>Basic Statistics>1-Sample Z...
2. Specify FHTS in the Variables text box.
3. Select the Test mean option button.
4 ...
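For readers without Minitab, a minimal Python sketch of the same two-sided one-sample Z test follows; it is not Minitab's implementation or output format. It computes z = (x̄ − 65) / (2.5/√n) and a two-sided p-value from the standard normal distribution. The function name `one_sample_z` and the FHTS values are hypothetical placeholders, since the data are not given here.

```python
import math
from statistics import NormalDist, fmean


def one_sample_z(data, mu0, sigma):
    """Two-sided one-sample Z test with known population standard deviation."""
    n = len(data)
    se = sigma / math.sqrt(n)  # standard error of the mean
    z = (fmean(data) - mu0) / se
    p = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided p-value
    return z, p


# Hypothetical FHTS values (placeholders, not the source's data).
fhts = [64.1, 66.3, 63.8, 65.9, 67.2, 64.5, 66.0, 65.4]
z, p = one_sample_z(fhts, mu0=65, sigma=2.5)
print(f"z = {z:.3f}, p = {p:.3f}")
```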

Using SAS Perform the Analysis of Means for Variances Test

... (1997) for a complete discussion. The key points are ...

A Nonparametric Bayesian Estimation of Freeway Capacity

... at that point. It only suggests that it might not break down during the limited observation period. This problem was also encountered in the study by Brilon et al. (1). They could not obtain a complete distribution function, despite the large size of a one-year sample, since ...

Bootstrapping (statistics)

In statistics, bootstrapping can refer to any test or metric that relies on random sampling with replacement. Bootstrapping assigns measures of accuracy (defined in terms of bias, variance, confidence intervals, prediction error, or some other such measure) to sample estimates. This technique allows estimation of the sampling distribution of almost any statistic using random sampling methods. Generally, it falls in the broader class of resampling methods.

Bootstrapping is the practice of estimating properties of an estimator (such as its variance) by measuring those properties when sampling from an approximating distribution. One standard choice for an approximating distribution is the empirical distribution function of the observed data. Where a set of observations can be assumed to come from an independent and identically distributed population, this can be implemented by constructing a number of resamples of the observed dataset, drawn with replacement and each of equal size to the observed dataset.

It may also be used for constructing hypothesis tests. It is often used as an alternative to statistical inference based on the assumption of a parametric model when that assumption is in doubt, or where parametric inference is impossible or requires complicated formulas for the calculation of standard errors.
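A minimal sketch of the nonparametric bootstrap described above, assuming i.i.d. observations: each resample is drawn with replacement from the observed data (that is, from its empirical distribution function) and has the same size as the original dataset. The standard deviation of the resampled statistic estimates its standard error, and its 2.5th and 97.5th percentiles give a simple percentile confidence interval, which is only one of several bootstrap interval methods. The function names, the data, and the choice of B = 2000 resamples are illustrative assumptions.

```python
import random
import statistics


def bootstrap(data, stat, b=2000, seed=0):
    """Return `b` bootstrap replicates of stat(data).

    Each replicate applies `stat` to a resample drawn with replacement
    from `data`, of the same size as `data`.
    """
    rng = random.Random(seed)  # seeded for reproducibility
    n = len(data)
    return [stat(rng.choices(data, k=n)) for _ in range(b)]


def percentile_ci_95(reps):
    """95% percentile interval: the 2.5th and 97.5th percentiles of the replicates."""
    q = statistics.quantiles(reps, n=40)  # 39 cut points in steps of 2.5%
    return q[0], q[-1]


data = list(range(1, 21))  # hypothetical sample
reps = bootstrap(data, statistics.fmean)
print("bootstrap SE of the mean:", round(statistics.stdev(reps), 3))
print("95% percentile CI:", percentile_ci_95(reps))
```

Because `stat` is any function of a list, the same code bootstraps a median or a trimmed mean by passing, for example, `statistics.median`.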