2.5 Notes

... Algebra 2: Section 2.5 Linear Regression Notes. Calculator Reference: Linear Regression. If you have a data table, the calculator can help you find an equation or rule for the function. Example: Given the following data set, find an equation for Y. ...
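The calculator's linear-regression command returns the least-squares line. Here is a minimal Python sketch of that computation. It assumes ordinary least squares on a small hypothetical data table, not the data set from the notes. `fit_line` is an illustrative helper name, not a calculator function.

```python
def fit_line(xs, ys):
    """Return (intercept, slope) of the least-squares line y = a + b*x."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired observations")
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar  # the line passes through (x_bar, y_bar)
    return intercept, slope


# Hypothetical data table (not the one from the original notes).
xs = [1, 2, 3, 4, 5]
ys = [3, 5, 7, 9, 11]
a, b = fit_line(xs, ys)
print(f"y = {a:.2f} + {b:.2f}x")  # prints "y = 1.00 + 2.00x"
```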

simple linear regression model

... mean of Y for a unit change in x. Also, the variability of Y at a particular value of x is determined by the error variance, σ². This implies there is a distribution of Y-values at each x and that the variance of this distribution is the same at each x. ...
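The model assumes the same error variance σ² at every x. That is why the residuals from all x-values can be pooled into a single estimate, s² = SSE / (n - 2); two degrees of freedom go to estimating the intercept and slope. The sketch below assumes the usual least-squares fit. The data and the helper name `error_variance` are hypothetical.

```python
def error_variance(xs, ys):
    """Estimate sigma^2 in y = b0 + b1*x + e as SSE / (n - 2)."""
    n = len(xs)
    if n < 3:
        raise ValueError("need at least three observations")
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    b1 = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sxx
    b0 = y_bar - b1 * x_bar
    # Residuals from every x are pooled, which is valid only under constant variance.
    residuals = [y - (b0 + b1 * x) for x, y in zip(xs, ys)]
    sse = sum(r * r for r in residuals)
    return sse / (n - 2)


# Hypothetical data: fitted line is y = 1.9x, SSE = 0.7, so s^2 is about 0.35.
print(error_variance([1, 2, 3, 4], [2, 4, 5, 8]))
```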

Самойлова В.И.

... located on the LUKOIL website¹. In this work, the investigation is taken a step further for those cases in which there is a hypothesis that one variable depends on another. During the research, an interrelation between several indicators, revenue and total liabilities, was observed. By using the fo ...

SIMPLE LINEAR REGRESSION

... closer Cor(Y, X) is to 1 or -1, the stronger the relationship between Y and X. The sign of Cor(Y, X) indicates the direction of the relationship between Y and X. That is, Cor(Y, X) > 0 implies that Y and X are positively related. Conversely, Cor(Y, X) < 0 implies that Y and X are negatively r ...
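Here is a minimal Python sketch of the sample (Pearson) correlation, Cor(Y, X) = Sxy / sqrt(Sxx · Syy). It shows both the strength (closeness to ±1) and the sign. The function name and data are illustrative assumptions.

```python
import math


def correlation(xs, ys):
    """Sample (Pearson) correlation between two equal-length sequences."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    syy = sum((y - y_bar) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


print(correlation([1, 2, 3, 4], [2, 4, 6, 8]))  # 1.0: perfect positive relationship
print(correlation([1, 2, 3, 4], [8, 6, 4, 2]))  # -1.0: perfect negative relationship
```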

ch2 freq dist and histogram # R code

... would generally be older than single men, so they would have been in the workforce longer and therefore had more time to advance in their careers. 5.33 What’s my grade? In Professor Krugman’s economics course the correlation between the students’ total scores prior to the final examination and their ...

Logistic Regression on SPSS

... note that SPSS tests both of the coefficients to see if they are equal to zero with Wald chi-square tests. We can see that all of the coefficients are significantly different from zero. (p-values are ...
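For a single coefficient, the Wald chi-square statistic is (B / S.E.)² with 1 degree of freedom. This is the standard definition, and SPSS's Wald column is usually described this way. The sketch below computes the statistic and its p-value from a hypothetical coefficient and standard error, not values from any SPSS output. `wald_test` is an illustrative name.

```python
import math


def wald_test(coef, std_err):
    """Wald chi-square statistic (1 df) and p-value for H0: coefficient = 0."""
    if std_err <= 0:
        raise ValueError("standard error must be positive")
    w = (coef / std_err) ** 2
    # With 1 df, P(chi2 > w) = P(|Z| > sqrt(w)) = erfc(sqrt(w / 2)).
    p = math.erfc(math.sqrt(w / 2))
    return w, p


# Hypothetical coefficient and standard error.
w, p = wald_test(0.8, 0.25)
print(f"Wald = {w:.2f}, p = {p:.4f}")
```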

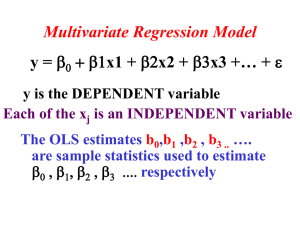

Multiple Regression

... Interpreting the Coefficients • b2 = 0.1541. In this model, for each additional point on exam 2, the final exam score increases on average by 0.1541 (assuming the other variables are held constant). • b3 = 0.2960. For each additional point on exam 3, the final exam score increases on average by 0.2 ...
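The phrase "assuming the other variables are held constant" can be checked directly. Change one predictor by one point, leave the others fixed, and the predicted final score moves by exactly that predictor's coefficient. The sketch below fits a multiple regression by solving the normal equations in plain Python. The exam scores are hypothetical and generated from a known rule, so the fitted coefficients are not the 0.1541 and 0.2960 from the notes. The helper names are also illustrative assumptions.

```python
def solve(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: predictors may be perfectly collinear")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_ols(rows, ys):
    """Return [b0, b1, ..., bp] minimizing squared error, via X'X b = X'y."""
    X = [[1.0] + [float(v) for v in r] for r in rows]  # leading 1 for the intercept
    k = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * y for row, y in zip(X, ys)) for i in range(k)]
    return solve(xtx, xty)


def predict(coefs, row):
    return coefs[0] + sum(b * x for b, x in zip(coefs[1:], row))


# Hypothetical (exam 1, exam 2, exam 3) scores; finals follow a known linear rule.
rows = [(70, 80, 75), (85, 60, 90), (90, 95, 70), (60, 70, 65), (75, 85, 95), (80, 65, 80)]
ys = [10 + 0.5 * e1 + 0.15 * e2 + 0.3 * e3 for e1, e2, e3 in rows]
coefs = fit_ols(rows, ys)
base = predict(coefs, (80, 70, 80))
bumped = predict(coefs, (80, 71, 80))  # exam 2 up one point, exams 1 and 3 held fixed
print(round(bumped - base, 4))  # equals the exam-2 coefficient, about 0.15
```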

Welcome ... to the course in Statistical Learning, 2011. Lectures

... $f(x) = P_x$. From any soft classifier we can get a natural hard classifier by taking $f_{\text{hard}}(x) = \arg\max_i f(x)(i)$. If $f(x) = P_x$, then $f_{\text{hard}} = f_B$ is the Bayes classifier again. Linear Regression: For $(X, Y)$ a pair of random variables with values in $\mathbb{R}^p \times \mathbb{R}$, we assume that $E(Y \mid X) = \beta_0 +$ ...
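Here is a minimal sketch of turning a soft classifier into a hard one by taking the argmax over class probabilities. The three-class `soft` function is a hypothetical stand-in for an estimate of P_x. Ties go to the lowest class index, because Python's `max` returns the first maximal element.

```python
from typing import Callable, Sequence


def harden(soft: Callable[[float], Sequence[float]]) -> Callable[[float], int]:
    """Wrap a soft classifier (x -> class probabilities) as a hard one (x -> argmax class)."""
    def hard(x: float) -> int:
        probs = soft(x)
        return max(range(len(probs)), key=lambda i: probs[i])
    return hard


# Hypothetical soft classifier over classes 0, 1, 2 (illustration only).
def soft(x: float) -> Sequence[float]:
    if x < 0:
        return [0.7, 0.2, 0.1]
    return [0.1, 0.3, 0.6]


hard = harden(soft)
print(hard(-1.0), hard(2.0))  # prints "0 2"
```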

Limitation of ridge regression

... the extra sum of squares associated with a predictor varies, depending on which other predictor variables are already included in the model; the estimated SDs of the regression coefficients become large; the estimated regression coefficients individually may not be statistically significant even tho ...
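One common way to quantify these inflated standard errors is the variance inflation factor, VIF_j = 1 / (1 - R_j²). Here R_j² comes from regressing predictor j on the other predictors. Compared with uncorrelated predictors, the sampling variance of b_j is multiplied by VIF_j. With exactly two predictors, R_j² is simply the squared correlation r² between them. The sketch below assumes that two-predictor case and uses hypothetical data.

```python
import math


def vif_two_predictors(x1, x2):
    """VIF shared by both predictors in a model with exactly two predictors.

    Regressing x1 on x2 gives R^2 = r^2 (their squared correlation),
    so VIF = 1 / (1 - r^2).
    """
    n = len(x1)
    m1 = sum(x1) / n
    m2 = sum(x2) / n
    s12 = sum((a - m1) * (b - m2) for a, b in zip(x1, x2))
    s11 = sum((a - m1) ** 2 for a in x1)
    s22 = sum((b - m2) ** 2 for b in x2)
    r2 = s12 * s12 / (s11 * s22)
    if r2 >= 1.0:
        return math.inf  # perfectly collinear: coefficients are not identifiable
    return 1.0 / (1.0 - r2)


# Hypothetical predictors with correlation r = 0.8.
print(vif_two_predictors([1, 2, 3, 4, 5], [2, 1, 4, 3, 5]))  # about 2.78
```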

Statistics for Science Fair Cheat Sheet

... Statistics is a scientist’s powerful ally. Used properly, statistics allows your students to interpret the results of their experiments and report conclusions with measured confidence. Statistics shouldn’t be scary—in fact, the basic ideas are quite simple. It’s the details that get messy. This hand ...

Abstract ( Kamakura Seminar )

... covariance is investigated. The covariance structure is deterministic and quite general, including piecewise constant structure or cyclical behaviors as special cases. In this framework we propose ordinary least squares (OLS), generalized least squares (GLS) and adaptive least squares (ALS) procedur ...
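When the error covariance Σ is known and diagonal (for example, piecewise constant across two regimes), GLS reduces to weighted least squares with weights 1/σ_i². The sketch below illustrates only that special case, for a straight-line model with hypothetical data and variances. It is not the authors' OLS/GLS/ALS procedure, whose details are cut off above. `gls_line_diagonal` is an illustrative name.

```python
def gls_line_diagonal(xs, ys, variances):
    """GLS fit of y = a + b*x when Cov(e) = diag(variances) is known.

    With a diagonal covariance, GLS is weighted least squares with
    weights w_i = 1 / variances[i]. Returns (intercept, slope).
    """
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")
    w = [1.0 / v for v in variances]
    sw = sum(w)
    x_bar = sum(wi * x for wi, x in zip(w, xs)) / sw  # weighted means
    y_bar = sum(wi * y for wi, y in zip(w, ys)) / sw
    sxx = sum(wi * (x - x_bar) ** 2 for wi, x in zip(w, xs))
    sxy = sum(wi * (x - x_bar) * (y - y_bar) for wi, x, y in zip(w, xs, ys))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


# Hypothetical piecewise-constant variance: 1 for the first three points, 4 for the rest.
xs = [1, 2, 3, 4, 5, 6]
ys = [3.1, 4.9, 7.2, 8.8, 11.5, 12.6]
print("OLS:", gls_line_diagonal(xs, ys, [1] * 6))  # equal variances reduce GLS to OLS
print("GLS:", gls_line_diagonal(xs, ys, [1, 1, 1, 4, 4, 4]))
```

Observations from the high-variance regime get a quarter of the weight, so they pull the fitted line less than under OLS.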

Forecasting with Regression Analysis Causal, Explanatory

... Real Estate Example • Why isn't more variation explained? • Multicollinearity exists: – X1 is correlated with X2 – We want independence of the X's (uncorrelated) [Figure: total variation, variation explained by X1, variation explained by X2] ...

II. Supervised latent Dirichlet allocation

... its review. Intuitively, good predictive topics will differentiate words like “excellent”, “terrible”, and “average,” without regard to genre. But topics estimated from an unsupervised model may correspond to genres, if that is the dominant structure in the corpus. The distinction between unsupervis ...

Review of Basic Statistical Concepts

... manufacturer, then a random variable X can be defined as the number of defective laptops in the lot. The possible values for X are the integers from 0 to 10. Continuous RV: A random variable that can take an uncountable range of values. For instance, if an experiment consists of measuring the amoun ...

Math and the Redesigned SAT

... • Calculate and interpret measures of center (mean, median) • Calculate and interpret measures of spread (range and standard deviation) ...
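Here is a minimal sketch of those four summaries using Python's standard `statistics` module. The scores are hypothetical, and `describe` is an illustrative name. `statistics.stdev` is the sample standard deviation (it divides by n - 1).

```python
import statistics


def describe(data):
    """Measures of center (mean, median) and of spread (range, sample standard deviation)."""
    return {
        "mean": statistics.mean(data),
        "median": statistics.median(data),
        "range": max(data) - min(data),
        # stdev divides by n - 1 (sample); statistics.pstdev divides by n (population).
        "stdev": statistics.stdev(data),
    }


print(describe([4, 8, 6, 5, 3, 7, 9]))  # hypothetical scores
```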

Linear regression

In statistics, linear regression is an approach for modeling the relationship between a scalar dependent variable y and one or more explanatory variables (or independent variables) denoted X. The case of one explanatory variable is called simple linear regression; for more than one explanatory variable, the process is called multiple linear regression. (This term should be distinguished from multivariate linear regression, where multiple correlated dependent variables are predicted, rather than a single scalar variable.)

In linear regression, data are modeled using linear predictor functions, and unknown model parameters are estimated from the data. Such models are called linear models. Most commonly, linear regression refers to a model in which the conditional mean of y given the value of X is an affine function of X. Less commonly, linear regression could refer to a model in which the median, or some other quantile, of the conditional distribution of y given X is expressed as a linear function of X. Like all forms of regression analysis, linear regression focuses on the conditional probability distribution of y given X, rather than on the joint probability distribution of y and X, which is the domain of multivariate analysis.

Linear regression was the first type of regression analysis to be studied rigorously and to be used extensively in practical applications. This is because models that depend linearly on their unknown parameters are easier to fit than models that are nonlinearly related to their parameters, and because the statistical properties of the resulting estimators are easier to determine.

Linear regression has many practical uses. Most applications fall into one of the following two broad categories:

- If the goal is prediction, forecasting, or error reduction, linear regression can be used to fit a predictive model to an observed data set of y and X values. After developing such a model, if an additional value of X is then given without its accompanying value of y, the fitted model can be used to make a prediction of the value of y.
- Given a variable y and a number of variables X1, ..., Xp that may be related to y, linear regression analysis can be applied to quantify the strength of the relationship between y and the Xj, to assess which Xj may have no relationship with y at all, and to identify which subsets of the Xj contain redundant information about y.

Linear regression models are often fitted using the least squares approach, but they may also be fitted in other ways. Examples include minimizing the "lack of fit" in some other norm (as with least absolute deviations regression) or minimizing a penalized version of the least squares loss function, as in ridge regression (L2-norm penalty) and lasso (L1-norm penalty). Conversely, the least squares approach can be used to fit models that are not linear models. Thus, although the terms "least squares" and "linear model" are closely linked, they are not synonymous.
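The sketch below makes the contrast between least squares and its penalized variants concrete. It fits a single-predictor line y = a + b·x by minimizing SSE + λ·P(b), with P(b) = b² for ridge and P(b) = |b| for lasso, and it leaves the intercept unpenalized. Setting λ = 0 gives ordinary least squares. Conventions differ between sources: some scale the SSE by 1/(2n), which changes what a given λ means. Treat this as an illustration of the stated objective, not any particular library's parameterization. `penalized_fit` is an illustrative name.

```python
import math


def penalized_fit(xs, ys, lam, penalty="ridge"):
    """Return (intercept, slope) minimizing SSE + lam * P(slope), intercept unpenalized."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    if penalty == "ridge":
        # d/db [Sxx*b^2 - 2*Sxy*b + lam*b^2] = 0  gives  b = Sxy / (Sxx + lam).
        slope = sxy / (sxx + lam)
    elif penalty == "lasso":
        # Soft-thresholding: the slope is exactly zero when |Sxy| <= lam / 2.
        slope = math.copysign(max(abs(sxy) - lam / 2, 0.0), sxy) / sxx
    else:
        raise ValueError("penalty must be 'ridge' or 'lasso'")
    return y_bar - slope * x_bar, slope


# Hypothetical data lying on y = 1 + 2x.
xs = [1, 2, 3, 4, 5]
ys = [3, 5, 7, 9, 11]
print(penalized_fit(xs, ys, 0.0))             # least squares: slope 2.0
print(penalized_fit(xs, ys, 10.0, "ridge"))   # slope shrunk toward 0
print(penalized_fit(xs, ys, 40.0, "lasso"))   # slope set exactly to 0
```

The ridge slope shrinks smoothly as λ grows but never reaches zero. The lasso slope hits zero once λ is large enough, which is why the L1 penalty is associated with dropping predictors.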