OLLSCOIL NA hÉIREANN MÁ NUAD

... (c) Local linear regression can be used to reduce such edge bias. How would the k-nearest neighbour pseudo-code need to be modified to implement a local linear regression estimator? [10 marks] (d) k-nearest neighbour estimators rely on the function being roughly constant over a ... [11 marks]

Logistic Regression & Survival Analysis

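... The Logistic Regression. The joint effect of all explanatory variables put together on the odds is $\text{Odds} = P/(1-P) = e^{\alpha + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_p X_p}$. Taking the logarithms of both sides gives $\log\{P/(1-P)\} = \alpha + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_p X_p$, that is, $\operatorname{logit} P = \alpha + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_p X_p$. The coefficients $\beta_1, \beta_2, \dots, \beta_p$ are such that the sums of th ...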

Weighted Ridge Estimation in a Collective Bargaining Context

... where X is the matrix of explanatory variable values, K is a diagonal matrix with the ridge biasing parameter down the diagonal and V is the vector of dependent variable values. Hoerl and Kennard (1970) have shown that if the X and V data are appropriately standardized to mean of zero and variance o ...

Summary of COS424

... • The problem of how to discover patterns in data—also called “learning”— can be formulated as an optimization of an appropriate objective function. • We focused on maximum likelihood estimation. – The model is indexed by parameters. – The observed data have a probability under each setting. – Find ...

Statistics 572 Midterm 1 Solutions

... model, $E[y_i] = X_i \beta = \beta_1 + \beta_2 x_2 + \cdots + \beta_k x_k$, is violated. Solution: A plot of residuals versus fitted values may show a pattern. 11. The generalized linear models we have seen, logistic regression and Poisson regression, have variants that include an overdispersion parameter. Why do we not need ...

The Standard Error of the Slope

... To understand that the slope of a regression line fitted from sample data will vary from sample to sample. To learn that having a wider spread in the values of x and a smaller spread in the values of y (for each x) decreases the variability of the slope b1. To learn that the variability in the ...

Classic Regression Analysis in SAS

... response that shuts off circulation to the skin, muscles, and internal organs, and diverts extra oxygen-carrying blood to the heart, lungs, and brain.) A research physician conducted an experiment to investigate the effects of various cold water temperatures on the pulse rates of 10 children with th ...

University of Warwick, Department of Sociology, 2012/13 SO 201

... duration data, proportional hazards models do not require a researcher to specify a form for the hazard curve (technically speaking, they are semiparametric rather than parametric). • These models are thus well-suited to situations where a researcher is more interested in the difference between the ...

Review of Hypothesis Testing

... The expected count in any cell of a two-way table when H0 is true is ...

Inference for least squares lines

... • Gather data for the two variables in the model. • Draw the scatter diagram to determine whether a linear model appears to be appropriate. • Determine the regression equation. • Check the required conditions for the errors. • Check the existenc ...

36-309/749

... ii) In randomized experiments, association can be interpreted as causation. iii) In observational studies, causation can be in either direction or due to a third variable. ...

HW 2 - Marriott School

... Promotion. These data describe promotional spending by a pharmaceutical company for a cholesterol-lowering drug. The data cover 39 consecutive weeks and isolate the area around Boston. The variables in this collection are shares. Marketing research often describes the level of promotion in terms of ...

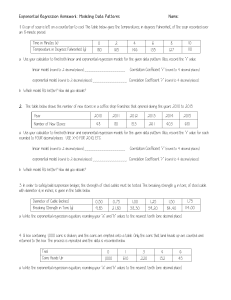

Exponential Regression Homework: Modeling Data Patterns Name

... 4. A box containing 1,000 coins is shaken, and the coins are emptied onto a table. Only the coins that land heads up are counted and returned to the box. The process is repeated and the data is recorded below. Trial Coins Heads Up ...

Linear regression

In statistics, linear regression is an approach for modeling the relationship between a scalar dependent variable y and one or more explanatory variables (or independent variables) denoted X. The case of one explanatory variable is called simple linear regression. For more than one explanatory variable, the process is called multiple linear regression. (This term should be distinguished from multivariate linear regression, where multiple correlated dependent variables are predicted, rather than a single scalar variable.)

In linear regression, data are modeled using linear predictor functions, and unknown model parameters are estimated from the data. Such models are called linear models. Most commonly, linear regression refers to a model in which the conditional mean of y given the value of X is an affine function of X. Less commonly, linear regression could refer to a model in which the median, or some other quantile of the conditional distribution of y given X, is expressed as a linear function of X. Like all forms of regression analysis, linear regression focuses on the conditional probability distribution of y given X, rather than on the joint probability distribution of y and X, which is the domain of multivariate analysis.

Linear regression was the first type of regression analysis to be studied rigorously, and to be used extensively in practical applications. This is because models that depend linearly on their unknown parameters are easier to fit than models that are non-linearly related to their parameters, and because the statistical properties of the resulting estimators are easier to determine.

Linear regression has many practical uses. Most applications fall into one of the following two broad categories:

• If the goal is prediction, or forecasting, or error reduction, linear regression can be used to fit a predictive model to an observed data set of y and X values. After developing such a model, if an additional value of X is then given without its accompanying value of y, the fitted model can be used to make a prediction of the value of y.

• Given a variable y and a number of variables X1, ..., Xp that may be related to y, linear regression analysis can be applied to quantify the strength of the relationship between y and the Xj, to assess which Xj may have no relationship with y at all, and to identify which subsets of the Xj contain redundant information about y.

Linear regression models are often fitted using the least squares approach, but they may also be fitted in other ways, such as by minimizing the "lack of fit" in some other norm (as with least absolute deviations regression), or by minimizing a penalized version of the least squares loss function as in ridge regression (L2-norm penalty) and lasso (L1-norm penalty). Conversely, the least squares approach can be used to fit models that are not linear models. Thus, although the terms "least squares" and "linear model" are closely linked, they are not synonymous.
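As a rough illustration of the fitting approaches mentioned above, the following sketch fits a linear model by ordinary least squares and by ridge regression (L2-norm penalty), then predicts y for a new X. The function names (`fit_ols`, `fit_ridge`, `predict`), the synthetic data, and the penalty value `lam` are illustrative assumptions and not part of the original text. Leaving the intercept unpenalised in the ridge fit is one common convention, not the only one.

```python
import numpy as np

def fit_ols(X, y):
    """Least squares fit of y ~ b0 + X @ b; returns [b0, b1, ..., bp]."""
    A = np.column_stack([np.ones(len(X)), X])  # prepend an intercept column
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef

def fit_ridge(X, y, lam):
    """Ridge fit: minimise ||y - A b||^2 + lam * ||b[1:]||^2.

    Assumption: the intercept b0 is left unpenalised.
    """
    A = np.column_stack([np.ones(len(X)), X])
    P = np.eye(A.shape[1])
    P[0, 0] = 0.0  # no penalty on the intercept
    return np.linalg.solve(A.T @ A + lam * P, A.T @ y)

def predict(coef, X):
    """Predict y for new rows of X from fitted coefficients."""
    return coef[0] + np.asarray(X) @ coef[1:]

# Synthetic data (hypothetical): y = 1 + 2*x1 - 3*x2 + small noise
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 2))
y = 1.0 + 2.0 * X[:, 0] - 3.0 * X[:, 1] + rng.normal(scale=0.1, size=200)

ols = fit_ols(X, y)
ridge = fit_ridge(X, y, lam=10.0)
y_new = predict(ols, [[0.5, -1.0]])  # prediction for an X given without its y
```

With `lam=0.0` the ridge fit reduces to the least squares fit. Larger values of `lam` shrink the slope coefficients toward zero, trading some bias for lower variance.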