Simple OLS - National University of Kaohsiung

... of the intercept or constant term can be shown to be an unbiased estimator of $\beta_1$ when the model is correctly specified. ...
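
Unbiasedness means that the intercept estimate averages out to the true intercept across repeated samples. A Monte Carlo experiment can illustrate this, although it is not a proof. The sketch below assumes the notation $y = \beta_1 + \beta_2 x + u$ (so $\beta_1$ is the intercept), a normal disturbance independent of x, and regressors held fixed across replications. The helper names `ols_simple` and `mean_intercept_estimate` are hypothetical.

```python
import random
import statistics


def ols_simple(x, y):
    """Return (b1, b2), the least-squares intercept and slope of y = b1 + b2*x."""
    x_bar = statistics.fmean(x)
    y_bar = statistics.fmean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b2 = sxy / sxx
    b1 = y_bar - b2 * x_bar
    return b1, b2


def mean_intercept_estimate(beta1, beta2, n=30, reps=2000, sigma=1.0, seed=0):
    """Average the OLS intercept over many samples drawn from a correctly specified model."""
    rng = random.Random(seed)
    x = [rng.uniform(0, 10) for _ in range(n)]  # regressors held fixed across replications
    estimates = []
    for _ in range(reps):
        # Assumed data-generating process: y = beta1 + beta2*x + u, u ~ N(0, sigma^2)
        y = [beta1 + beta2 * xi + rng.gauss(0, sigma) for xi in x]
        b1, _ = ols_simple(x, y)
        estimates.append(b1)
    return statistics.fmean(estimates)


print(mean_intercept_estimate(beta1=2.0, beta2=0.5))  # should be close to 2.0
```

Increasing `reps` tightens the average around the true intercept. Any single estimate can still be far from $\beta_1$; unbiasedness is a statement about the average.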

Worksheet of Nov. 22

... A common theme in statistical modeling is to think of each data point as consisting of two parts: the part that is explained by the model (called the fit) and the “leftover” part (called the residual), which is the result either of chance variation or of other variables not included in the model. ...
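
The fit-plus-residual decomposition can be computed directly for a least-squares line. The sketch below uses a simple OLS fit and small hypothetical data (not from the worksheet) to show that every observation equals its fit plus its residual, and that the residuals sum to zero when the line includes an intercept.

```python
import statistics


def fit_and_residuals(x, y):
    """Split each observation into its fitted value and its residual (simple OLS line)."""
    x_bar, y_bar = statistics.fmean(x), statistics.fmean(y)
    slope = (sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y))
             / sum((a - x_bar) ** 2 for a in x))
    intercept = y_bar - slope * x_bar
    fits = [intercept + slope * a for a in x]
    residuals = [b - f for b, f in zip(y, fits)]
    return fits, residuals


x = [1, 2, 3, 4, 5]
y = [2.1, 3.9, 6.2, 7.8, 10.1]  # hypothetical data
fits, residuals = fit_and_residuals(x, y)
for obs, f, r in zip(y, fits, residuals):
    # data = fit + residual holds for every point
    print(f"{obs:5.2f} = {f:6.3f} + {r:+.3f}")
print(sum(residuals))  # essentially 0 because the model includes an intercept
```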

GLS2014

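... The model is $y_t = x_t'\beta + \varepsilon_t$, or in matrix form $y = X\beta + \varepsilon$, where $\varepsilon_t$ is a random variable (a process). The assumptions are:
- $E\{\varepsilon_t\} = 0$: correct specification (and no errors in variables)
- $E\{\varepsilon_t^2\} = \sigma^2$: homoscedasticity
- $E\{\varepsilon_t \varepsilon_{t \pm k}\} = 0$ for all $k \neq 0$: no autocorrelation
- $E\{\varepsilon_t \mid X\} = 0$: weak exogeneity
- $\operatorname{Var}\{\varepsilon_t \mid X\} = \sigma^2$

We can add linearity and normality of $\varepsilon_t$. ...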
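
These assumptions are what deliver the standard properties of the OLS estimator. As a sketch, assume $X'X$ is invertible (no perfect multicollinearity), read the exogeneity condition for the whole vector as $E\{\varepsilon \mid X\} = 0$, and assume homoscedasticity and no autocorrelation hold conditionally on $X$, so that $\operatorname{Var}\{\varepsilon \mid X\} = \sigma^2 I$. Then:

```latex
\begin{aligned}
\hat\beta &= (X'X)^{-1}X'y = (X'X)^{-1}X'(X\beta + \varepsilon) = \beta + (X'X)^{-1}X'\varepsilon,\\
E\{\hat\beta \mid X\} &= \beta + (X'X)^{-1}X'\,E\{\varepsilon \mid X\} = \beta,\\
\operatorname{Var}\{\hat\beta \mid X\} &= (X'X)^{-1}X'\,\operatorname{Var}\{\varepsilon \mid X\}\,X(X'X)^{-1} = \sigma^2 (X'X)^{-1}.
\end{aligned}
```

Normality of $\varepsilon_t$ is not needed for either line. It is what makes the usual t and F statistics follow their exact distributions in finite samples.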

... yt = xt’βi + εt or matrix form y = x’ β + ε εt is a random variable (a process) E{εt} = 0 Correct specification (+No err in variable) E{εt εt} = σ2 Homoscedasticity E{εt εt±/-k} = 0 for all k ≠ t No autocorrelation E{εt | X} = 0 Weak exogeneity Var {εt | X} = 0 We can add linearity, Normality in εt ...

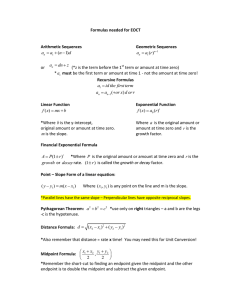

Formulas needed for EOCT Arithmetic Sequences Geometric

... table on your calculator under L1, L2, and L3 and enter a formula under L3. Finding linear and exponential models of best fit (regression): Enter all of the x data under L1 and the y data under L2 in your calculator. To run a linear regression on the TI-30XS MultiView, press 2nd, then data, and run 2 Varia ...
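
The calculator's linear and exponential regressions can be reproduced in a few lines. The sketch below treats `L1` and `L2` as the x and y lists, fits the line by least squares, and fits $y = a \cdot b^x$ by regressing $\ln y$ on $x$. The log-transform approach is an assumption: it is a common method, but check your calculator's manual for exactly how it computes its exponential model. The data are hypothetical.

```python
import math
import statistics


def linear_fit(xs, ys):
    """Least-squares line y = a + b*x; returns (a, b)."""
    x_bar, y_bar = statistics.fmean(xs), statistics.fmean(ys)
    b = (sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
         / sum((x - x_bar) ** 2 for x in xs))
    return y_bar - b * x_bar, b


def exponential_fit(xs, ys):
    """Fit y = a * b**x via a straight-line fit of ln(y) on x (every y must be > 0)."""
    ln_a, ln_b = linear_fit(xs, [math.log(y) for y in ys])
    return math.exp(ln_a), math.exp(ln_b)


# Names mirror the calculator lists: L1 holds x data, L2 holds y data.
L1 = [0, 1, 2, 3, 4]
L2 = [3.0, 6.0, 12.0, 24.0, 48.0]  # hypothetical data that double each step
print(linear_fit(L1, L2))       # approximately (-3.0, 10.8)
print(exponential_fit(L1, L2))  # approximately (3.0, 2.0)
```

Because these data double at each step, the exponential model recovers $a = 3$ and $b = 2$ exactly (up to rounding), while the straight line only approximates them.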

Slide 1

... 2. Do any observations near x = 0 exist in the data set? A value of 0 is reasonable for the drilling data (it indicates that drilling begins at the surface of the Earth). The smallest observation in the data set is x = 35 feet, which is reasonably close to 0. So, interpretation of the y-intercept is re ...

INTRODUCTION TO APPLIED STATISTICS FOR AGRI

... use contingency tables and the chi-square test.
- Simple Linear Regression and Correlation. Understanding concepts related to linear regression models, including the least squares method, correlation, Spearman's rank-order correlation, and inferences about the parameters in the linear regression model.
- Intr ...

Worksheet 4

... What pattern do you notice? By how many dollars is each prediction higher than the preceding one? Give a brief interpretation of the slope coefficient b for our regression line. 14. By how much does the regression line predict airfare to rise for each additional 50 miles that a destination is farthe ...
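
Because the fitted line is $\hat y = a + bx$, moving 50 miles farther raises the predicted airfare by exactly $50b$ dollars. The sketch below uses made-up coefficients `a = 100.0` and `b = 0.12` (hypothetical, not taken from the worksheet data) to show that predictions 50 miles apart differ by that constant amount. The function name `predict_airfare` is also hypothetical.

```python
def predict_airfare(distance, intercept, slope):
    """Predicted airfare in dollars from a fitted line: intercept + slope * distance (miles)."""
    return intercept + slope * distance


# Hypothetical coefficients for illustration only; they are not from the worksheet.
a, b = 100.0, 0.12
distances = [300, 350, 400, 450]  # destinations 50 miles apart
predictions = [predict_airfare(d, a, b) for d in distances]
steps = [later - earlier for earlier, later in zip(predictions, predictions[1:])]
print(steps)  # each step equals 50 * b (6.0 here, up to floating-point rounding)
```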

Math 137 Unit 4 and 5 Review

... 20) A researcher has collected data on the price of gasoline from 1992 to 2014 and has found that the price in dollars after t years can be predicted using the equation $y = -0.0256t^2 + 0.3584t + 1.90$. a) According to this model, what was the price of gas in 1990? b) Using this model, predict the price o ...
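
Evaluating the quadratic model is plain arithmetic once a year is converted to t. The sketch below assumes t = 0 corresponds to 1992, the first year of data; the snippet does not state this explicitly, so treat `BASE_YEAR` as an assumption. Under that assumption 1990 is t = -2, which lies outside the 1992 to 2014 data range, so the answer is an extrapolation. The vertex formula $t = -b/(2a)$ gives the year the model's price peaks.

```python
def gas_price(t):
    """Model price in dollars t years into the study: y = -0.0256 t^2 + 0.3584 t + 1.90."""
    return -0.0256 * t**2 + 0.3584 * t + 1.90


BASE_YEAR = 1992  # assumption: t = 0 corresponds to 1992, the first year of data


def gas_price_in(year):
    """Model price for a calendar year, under the BASE_YEAR assumption."""
    return gas_price(year - BASE_YEAR)


# A downward-opening parabola (negative t^2 coefficient) peaks at t = -b / (2a).
t_peak = -0.3584 / (2 * -0.0256)
print(round(gas_price_in(1990), 4))                # 1.0808
print(round(t_peak, 4), round(gas_price(t_peak), 4))  # 7.0 3.1544
```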

A researcher was investigating variables that might be associated

... 4. The first researcher concluded that because the coefficient for the variable $ Per Pupil was positive in his results, spending additional money on students would have a positive effect on SAT-M scores. This researcher therefore recommended more money be spent on students. The second researcher co ...

Lect.8 - Department of Engineering and Physics

... 5. The DF column gives the degrees of freedom. The degrees of freedom for regression equal the number of independent variables in the model. The degrees of freedom for “Residual Error” equal the number of observations minus the number of parameters estimated. The total degrees of freedom is the sum of th ...
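
The degrees-of-freedom rules above reduce to simple arithmetic. This sketch assumes the model includes an intercept, so the number of parameters estimated is the number of independent variables plus one; the function name is hypothetical.

```python
def anova_degrees_of_freedom(n_obs, n_predictors):
    """Return (regression, residual, total) degrees of freedom for a model with an intercept."""
    df_regression = n_predictors
    n_params = n_predictors + 1           # one slope per predictor plus the intercept
    df_residual = n_obs - n_params
    df_total = df_regression + df_residual  # works out to n_obs - 1
    return df_regression, df_residual, df_total


print(anova_degrees_of_freedom(25, 3))  # (3, 21, 24)
```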

Linear regression

In statistics, linear regression is an approach for modeling the relationship between a scalar dependent variable y and one or more explanatory variables (or independent variables) denoted X. The case of one explanatory variable is called simple linear regression; for more than one explanatory variable, the process is called multiple linear regression. (This term should be distinguished from multivariate linear regression, where multiple correlated dependent variables are predicted rather than a single scalar variable.)

In linear regression, data are modeled using linear predictor functions, and unknown model parameters are estimated from the data. Such models are called linear models. Most commonly, linear regression refers to a model in which the conditional mean of y given the value of X is an affine function of X. Less commonly, linear regression could refer to a model in which the median, or some other quantile, of the conditional distribution of y given X is expressed as a linear function of X. Like all forms of regression analysis, linear regression focuses on the conditional probability distribution of y given X, rather than on the joint probability distribution of y and X, which is the domain of multivariate analysis.

Linear regression was the first type of regression analysis to be studied rigorously and to be used extensively in practical applications. This is because models that depend linearly on their unknown parameters are easier to fit than models that are non-linearly related to their parameters, and because the statistical properties of the resulting estimators are easier to determine.

Linear regression has many practical uses. Most applications fall into one of the following two broad categories:

- If the goal is prediction, forecasting, or error reduction, linear regression can be used to fit a predictive model to an observed data set of y and X values. After developing such a model, if an additional value of X is then given without its accompanying value of y, the fitted model can be used to predict the value of y.
- Given a variable y and a number of variables $X_1, \ldots, X_p$ that may be related to y, linear regression analysis can be applied to quantify the strength of the relationship between y and the $X_j$, to assess which $X_j$ may have no relationship with y at all, and to identify which subsets of the $X_j$ contain redundant information about y.

Linear regression models are often fitted using the least squares approach, but they may also be fitted in other ways, such as by minimizing the "lack of fit" in some other norm (as with least absolute deviations regression), or by minimizing a penalized version of the least squares loss function, as in ridge regression (L2-norm penalty) and lasso (L1-norm penalty). Conversely, the least squares approach can be used to fit models that are not linear models. Thus, although the terms "least squares" and "linear model" are closely linked, they are not synonymous.
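
To make the difference between ordinary and penalized least squares concrete, here is a sketch for the single-predictor case only. It assumes an unpenalized intercept and an L2 (ridge) penalty on the slope alone. Minimizing $\sum (y_i - a - b x_i)^2 + \lambda b^2$ then gives $a = \bar y - b \bar x$ and $b = S_{xy} / (S_{xx} + \lambda)$, so $\lambda = 0$ recovers ordinary least squares. Lasso is omitted. The function names and data are hypothetical.

```python
import statistics


def fit_line(x, y, ridge_lambda=0.0):
    """Fit y = a + b*x; ridge_lambda > 0 adds an L2 penalty on the slope only.

    Minimizing sum((y - a - b*x)**2) + ridge_lambda * b**2 gives
    b = Sxy / (Sxx + ridge_lambda) and a = y_bar - b * x_bar.
    """
    x_bar, y_bar = statistics.fmean(x), statistics.fmean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b = sxy / (sxx + ridge_lambda)
    a = y_bar - b * x_bar
    return a, b


def predict(a, b, x_new):
    """Predict y for a new x value that came without its accompanying y."""
    return a + b * x_new


x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]  # hypothetical data
a_ols, b_ols = fit_line(x, y)                         # ordinary least squares
a_ridge, b_ridge = fit_line(x, y, ridge_lambda=10.0)  # slope shrunk toward 0
print(a_ols, b_ols, predict(a_ols, b_ols, 6))
print(a_ridge, b_ridge)
```

Here the ridge slope (0.3) is half the OLS slope (0.6) because $\lambda$ equals $S_{xx} = 10$. Larger penalties shrink the slope further toward zero.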