ICS 178 Introduction Machine Learning & data Mining

... • We can think of the problem as one where we are trying to find the probability distribution for P(Y|X). • We can write: Yn AXn b dn where d is the residual error pointing vertically from the line to the data-point. • d is a random vector and we may assume is has a Gaussian distribution. ...

... • We can think of the problem as one where we are trying to find the probability distribution for P(Y|X). • We can write: Yn AXn b dn where d is the residual error pointing vertically from the line to the data-point. • d is a random vector and we may assume is has a Gaussian distribution. ...

A Bootstrap Evaluation of the EM Algorithm for Censored Survival Data

... that are sometimes difficult to justify scientifically. In this paper, the EM algorithm is investigated as an alternative to SAS PROC LIFEREG. ...

... that are sometimes difficult to justify scientifically. In this paper, the EM algorithm is investigated as an alternative to SAS PROC LIFEREG. ...

See regression.R : solve(t(X01) %*% X01) %*% t(X01) %*% Y

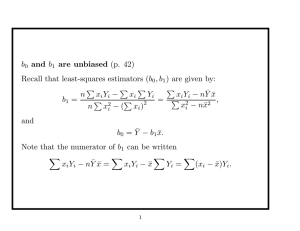

... In this model, the x values are assumed to be fixed and known. The Y values are random. Under this model, the Y values are independent, and the distribution of each Yi is normal: ...

... In this model, the x values are assumed to be fixed and known. The Y values are random. Under this model, the Y values are independent, and the distribution of each Yi is normal: ...

Aitchison Geometry for Probability and Likelihood Abstract K.Gerald van den Boogaart

... data analysis, such as Aitchison distance or centered log ratio transform. In this way very elaborated aspects of mathematical statistics can be understood easily in the light of a simple vector space structure and of compositional data analysis. E.g. combination of statistical information such as B ...

... data analysis, such as Aitchison distance or centered log ratio transform. In this way very elaborated aspects of mathematical statistics can be understood easily in the light of a simple vector space structure and of compositional data analysis. E.g. combination of statistical information such as B ...

Expectation–maximization algorithm

In statistics, an expectation–maximization (EM) algorithm is an iterative method for finding maximum likelihood or maximum a posteriori (MAP) estimates of parameters in statistical models, where the model depends on unobserved latent variables. The EM iteration alternates between performing an expectation (E) step, which creates a function for the expectation of the log-likelihood evaluated using the current estimate for the parameters, and a maximization (M) step, which computes parameters maximizing the expected log-likelihood found on the E step. These parameter-estimates are then used to determine the distribution of the latent variables in the next E step.