Multimedia Communication Systems: Techniques, Standards, Networks

Document related concepts

Policies promoting wireless broadband in the United States

Computer network

Wake-on-LAN

TV Everywhere

Net neutrality law

Recursive InterNetwork Architecture (RINA)

Airborne Networking

Video on demand

Asynchronous Transfer Mode

Cracking of wireless networks

List of wireless community networks by region

Piggybacking (Internet access)

Serial digital interface

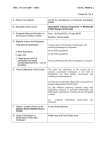

Transcript