* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download 61solutions5

Regression analysis wikipedia , lookup

Hardware random number generator wikipedia , lookup

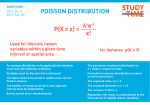

Birthday problem wikipedia , lookup

Vector generalized linear model wikipedia , lookup

Expectation–maximization algorithm wikipedia , lookup

Taylor's law wikipedia , lookup

Mean field particle methods wikipedia , lookup

Least squares wikipedia , lookup

Stat 61 - Homework #5 - SOLUTIONS (10/29/07)

1. Let X be a random variable with E(X) = 100 and Var(X) = 15. What are…

a. $E(X^2)$? (Not 10000.) Since $\mathrm{Var}(X) = E(X^2) - E(X)^2$, we have $15 = E(X^2) - 10000$, so $E(X^2) = 10015$.

b. $E(3X + 10) = 3E(X) + 10 = 310$.

c. $E(-X) = -100$.

d. Standard deviation of $-X$? It is $\sqrt{15} \approx 3.9$, NOT $-3.9$. (Negating X leaves the variance unchanged, and a standard deviation is never negative.)

2. Let X be the random variable with pdf
$$f_X(x) = \begin{cases} 2x & \text{if } 0 \le x \le 1 \\ 0 & \text{otherwise.} \end{cases}$$

a. What is the cdf, $F_X(a)$?
$$F_X(a) = \int_{-\infty}^{a} f_X(x)\,dx = \begin{cases} 0 & \text{if } a < 0 \\ a^2 & \text{if } 0 \le a \le 1 \\ 1 & \text{if } a > 1. \end{cases}$$

b. Let Y be another random variable defined by $Y = X^2$. What is its cdf, $F_Y(b)$? (This is possible because if you know $P(X \le a)$ for every a, you can get $P(Y \le b)$ for any b.)

$F_Y(b) = P(Y \le b) = P(X^2 \le b)$ is clearly zero if b < 0, so from here on assume $b \ge 0$. Then
$$\begin{aligned}
F_Y(b) &= P(Y \le b) && \text{(by definition of } F_Y\text{)} \\
&= P(X^2 \le b) && \text{(same event)} \\
&= P(X \le \sqrt{b}) && \text{(again the same event, since } X \ge 0 \text{ and } b \ge 0\text{)} \\
&= F_X(\sqrt{b}) && \text{(by definition of } F_X\text{)} \\
&= (\sqrt{b})^2 = b && \text{for } 0 \le b \le 1,
\end{aligned}$$
and $F_Y(b) = 1$ if $b \ge 1$, $F_Y(b) = 0$ if $b \le 0$.

c. What is the pdf, $f_Y$? It's the derivative of $F_Y$:
$$f_Y(b) = \begin{cases} 0 & \text{if } b < 0 \\ 1 & \text{if } 0 \le b \le 1 \\ 0 & \text{if } b > 1. \end{cases}$$
(So Y is uniform on [0, 1].)

d. Compute E(Y) directly from $F_Y$. (Use the secret formula; see the website if necessary.)
$$E(Y) = \int_0^{\infty} \bigl(1 - F_Y(y)\bigr)\,dy - \int_{-\infty}^{0} F_Y(y)\,dy = \int_0^1 (1 - y)\,dy - 0 = \tfrac12.$$

e. Compute E(Y) from $f_Y$.
$$E(Y) = \int_{-\infty}^{\infty} y\, f_Y(y)\,dy = \int_0^1 y \cdot 1\,dy = \tfrac12.$$

f. Compute $E(Y) = E(X^2)$ directly from $f_X$. (If your answers to d, e, and f don't agree, panic.)
$$E(Y) = E(X^2) = \int_{-\infty}^{\infty} x^2 f_X(x)\,dx = \int_0^1 x^2 (2x)\,dx = \tfrac12.$$

3. (Sum of random variables) Let X and Y be independent random variables with these pmfs:

$p_X(n) = 1/3$ for n = 1, 2, 3; else 0
$p_Y(n) = 1/2$ for n = 1, 2; else 0

Let Z = X + Y. Using the formula
$$p_Z(n) = \sum_{\text{all } m} p_X(m)\, p_Y(n - m)$$
[$= P(X=1)P(Y=n-1) + P(X=2)P(Y=n-2) + P(X=3)P(Y=n-3) + \cdots$], construct the pmf for Z.

$P(Z = 2) = P(X=1)P(Y=1) = (1/3)(1/2) = 1/6$
$P(Z = 3) = P(X=1)P(Y=2) + P(X=2)P(Y=1) = (1/3)(1/2) + (1/3)(1/2) = 2/6$
$P(Z = 4) = P(X=2)P(Y=2) + P(X=3)P(Y=1) = \cdots = 2/6$
$P(Z = 5) = P(X=3)P(Y=2) = \cdots = 1/6$

(I guess there are infinitely many other terms in each of these sums, but they are all zero.) So the pmf for Z is

z        2     3     4     5     total
p_Z(z)   1/6   2/6   2/6   1/6   1

4. Now let X and Y be independent Poisson random variables with means $\lambda_X$ and $\lambda_Y$, respectively. Let Z = X + Y. Using the formula from problem 3, derive the pmf for Z. (Hint: the binomial formula is $(a+b)^n = \sum_{m=0}^{n} \frac{n!}{m!\,(n-m)!}\, a^m b^{n-m}$.)

In what follows, x, y, and z are nonnegative integers (being typical values of X, Y, Z, respectively). The sum stops at x = z because $p_Y(z - x) = 0$ when $z - x < 0$.
$$\begin{aligned}
p_Z(z) &= \sum_{\text{all } x} p_X(x)\, p_Y(z - x) = \sum_{x=0}^{z} \frac{e^{-\lambda_X}\lambda_X^{x}}{x!} \cdot \frac{e^{-\lambda_Y}\lambda_Y^{z-x}}{(z-x)!} \\
&= e^{-(\lambda_X+\lambda_Y)} \sum_{x=0}^{z} \frac{\lambda_X^{x}\,\lambda_Y^{z-x}}{x!\,(z-x)!} \\
&= \frac{e^{-(\lambda_X+\lambda_Y)}}{z!} \sum_{x=0}^{z} \frac{z!}{x!\,(z-x)!}\,\lambda_X^{x}\,\lambda_Y^{z-x} \\
&= \frac{e^{-(\lambda_X+\lambda_Y)}\,(\lambda_X+\lambda_Y)^{z}}{z!}.
\end{aligned}$$

This is the Poisson pmf with mean $\lambda_X + \lambda_Y$. So: the sum of two independent Poisson random variables is itself a Poisson random variable, whose mean is the sum of the two means.

5. (The mother of all Poisson examples) Do problem 4.2.10, page 287. Either actually read the problem and do what it says, or do parts a and b below, which amount to the same thing. Specifically, given the following distribution…

k       Observed number of corps-years in which k fatalities occurred
0       109
1       65
2       22
3       3
4       1
≥5      0
total   200

[This is a frequency table describing 200 annual reports from pre-WWI Prussian cavalry corps, each giving the number of soldiers killed by being kicked by horses.] It is suggested that these values represent 200 independent draws from a Poisson distribution.

a. What is a reasonable estimate for the mean of the distribution? One reasonable estimate is the mean of the 200 observed values, which is
$$\hat\lambda = \frac{109(0) + 65(1) + 22(2) + 3(3) + 1(4)}{200} = \frac{122}{200} = 0.61.$$
(As we'll see soon, this is the method-of-moments estimator for the mean, which in this situation happens to agree with the maximum likelihood estimator.)

b. What would the entries in the right-hand column be if the 200 draws were exactly distributed according to this Poisson distribution? (The entries would not be integers.)

Using $\lambda = 0.61$, the theoretical count for k is $200 \cdot (0.61^k / k!) \cdot e^{-0.61}$; for example, for k = 0 it is $200 \cdot (0.61^0/0!) \cdot e^{-0.61} = 108.67$. The ≥5 entry is whatever is left of the 200.

k       observed   theoretical
0       109        108.67
1       65         66.29
2       22         20.22
3       3          4.11
4       1          0.63
≥5      0          0.08
total   200        200

(In November we'll address this question: comparing the original data to the theoretical values in part b, is it plausible that these really are draws from a Poisson distribution, or should we discard that theory?) This looks to me like a very good fit (even suspiciously good), but we may try a chi-square test later.

6. (Editing a Poisson distribution) At a certain boardwalk attraction, customers appear according to a Poisson process at a rate of $\lambda = 15$ customers/hour. So, if X is the number of customers appearing between noon and 1pm, X has a Poisson distribution with mean 15. Assume that each customer wins a prize with (independent) probability 1/5. Let Y be the number of customers winning prizes between noon and 1pm.

One way to understand Y is that if the value of X is given, then Y is binomial, with parameters n = [value of X] and p = 1/5. This reasoning gives us:
$$P(Y = k) = \sum_{n \ge k} P(X = n) \binom{n}{k} \left(\tfrac15\right)^{k} \left(\tfrac45\right)^{n-k}.$$
(The second part of the summand is the probability that Y = k given that X = n.)

a. Simplify this formula to show that Y is itself a Poisson random variable with mean 3.
$$\begin{aligned}
P(Y = k) &= \sum_{n \ge k} \frac{e^{-15}\,15^{n}}{n!} \cdot \frac{n!}{k!\,(n-k)!} \left(\tfrac15\right)^{k} \left(\tfrac45\right)^{n-k} \\
&= \frac{e^{-15}}{k!} \sum_{n \ge k} \frac{15^{n} (1/5)^{k} (4/5)^{n-k}}{(n-k)!} \\
&= \frac{e^{-15}\,3^{k}}{k!} \sum_{n \ge k} \frac{12^{n-k}}{(n-k)!},
\end{aligned}$$
since $15^{n} (1/5)^{k} (4/5)^{n-k} = 3^{n}\,4^{n-k} = (3^{k})(3^{n-k})(4^{n-k}) = 3^{k}\,12^{n-k}$. When we substitute m for n - k in the last sum, we get $\sum_{m=0}^{\infty} 12^{m}/m!$, which is the Taylor series for $e^{12}$; so
$$P(Y = k) = \frac{e^{-15}\,3^{k}}{k!}\, e^{12} = \frac{3^{k}}{k!}\, e^{-3},$$
as desired. (Or, if after getting off to a good start you find this problem too annoying, do part b instead.)

b. Find a much simpler explanation of why Y should be Poisson with mean 3.

If the probability of a customer arriving in a small interval is 15 times the length of the interval in hours, independently of what happens in other intervals, then the probability of a winner appearing during that small interval is 3 times the length of the interval, and is still independent of what happens in other intervals.
That means that the "winners" process is a Poisson process with rate 3 per hour, so the number of winners in one hour is Poisson with mean 3, and the result follows.

7. (Waiting times) Buses arrive according to a Poisson process with rate 1/10 per minute. Let W be the waiting time from time t = 0 until the arrival of the second bus.

a. What is E(W)? If X is the time we have to wait for the first bus, then X is exponentially distributed with mean 10. After the first bus arrives, we can start waiting for the second bus, and if Y is the time that takes, then Y also has mean 10. Now W = X + Y. It doesn't matter whether X and Y are independent (they are); in any case, E(W) = E(X) + E(Y) = 20 minutes. Part a is of no use in solving part b.

b. Can you construct a pdf or cdf for W? (Good start: $P(W \le t) = 1 - P(\text{exactly 0 buses or exactly 1 bus between times 0 and } t)$. Another approach: W is the sum of two independent one-bus waiting times.)

The number of buses in [0, t] is Poisson with mean t/10, so for $t \ge 0$
$$F_W(t) = P(W \le t) = 1 - e^{-t/10} - \frac{t}{10}\, e^{-t/10}.$$
If you want the density function $f_W(t)$, just differentiate $F_W(t)$; things cancel and you get
$$f_W(t) = \frac{t}{100}\, e^{-t/10}, \qquad t \ge 0.$$
This happens to be the density for (a special case of) the gamma distribution, with shape 2 and rate 1/10.

8. (The first boring normal tables problem) If Z is a standard normal variable, what are…

a. $P(-1.0 \le Z \le +1.0) = 0.68$
b. $P(-2.0 \le Z \le +2.0) = 0.95$
c. $P(-3.0 \le Z \le +3.0) = 0.997$

(by the "Rule of 68-95-99.7"; or, use Excel's NORMSDIST function to get 0.682689, 0.9545, 0.9973)

9. (The second boring normal tables problem) Let Z be a standard normal variable.

a. If $P(-a \le Z \le +a) = 0.95$, what is a? By symmetry each tail holds 0.025, so find z for which $\Phi(z) = 0.975$, namely z = 1.96. (Or: =NORMSINV(0.975) = 1.9600.)

b. If $P(-b \le Z \le +b) = 0.99$, what is b? Each tail holds 0.005, so find z for which $\Phi(z) = 0.995$, namely z = 2.58. (Or: =NORMSINV(0.995) = 2.575829.)

10. (The third boring normal tables problem)

a. If X is normal with mean 500 and standard deviation 110, what is $P(X \ge 800)$? If Z = (X - 500)/110, then Z is standard normal.
$$P(X \ge 800) = P\left(Z \ge \frac{800 - 500}{110}\right) = P(Z \ge 2.7273) \approx 1 - \Phi(2.73) = 0.0032.$$

b. If X is normal with mean 500 and standard deviation 120, what is $P(X \ge 800)$? If Z = (X - 500)/120, then Z is standard normal.
$$P(X \ge 800) = P\left(Z \ge \frac{800 - 500}{120}\right) = P(Z \ge 2.5) = 1 - \Phi(2.5) = 0.0062.$$
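For Problem 2 (d, e, f), the advice "if your answers don't agree, panic" can also be checked numerically. The sketch below is only an illustration, not part of the assigned solution: it swaps in a plain midpoint-rule integrator (the helper `midpoint` and the function names `F_Y`, `f_Y`, `f_X` are hypothetical) and evaluates the three integrals for E(Y) using the cdf and pdfs derived above.

```python
# Numerical check of Problem 2 (d, e, f): three routes to E(Y) for Y = X^2,
# where X has pdf f_X(x) = 2x on [0, 1]. Uses a simple midpoint rule.


def midpoint(g, lo, hi, n=100_000):
    """Approximate the integral of g over [lo, hi] using n midpoint panels."""
    h = (hi - lo) / n
    return h * sum(g(lo + (i + 0.5) * h) for i in range(n))


def F_Y(y):
    """cdf of Y = X^2 from part b: 0 below 0, y on [0, 1], 1 above 1."""
    if y < 0:
        return 0.0
    return min(y, 1.0)


def f_Y(y):
    """pdf of Y from part c: uniform on [0, 1]."""
    return 1.0 if 0 <= y <= 1 else 0.0


def f_X(x):
    """pdf of X: 2x on [0, 1], else 0."""
    return 2 * x if 0 <= x <= 1 else 0.0


# d. "Secret formula": Y >= 0, and 1 - F_Y(y) = 0 for y > 1, so integrate over [0, 1].
e_from_cdf = midpoint(lambda y: 1 - F_Y(y), 0.0, 1.0)
# e. E(Y) = integral of y * f_Y(y)
e_from_pdf = midpoint(lambda y: y * f_Y(y), 0.0, 1.0)
# f. E(X^2) = integral of x^2 * f_X(x)
e_from_x = midpoint(lambda x: x * x * f_X(x), 0.0, 1.0)

print(round(e_from_cdf, 6), round(e_from_pdf, 6), round(e_from_x, 6))
```

All three printed values should agree at 0.5, matching the hand calculations.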
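The convolution formula of Problem 3, $p_Z(n) = \sum_m p_X(m)\,p_Y(n-m)$, can be sketched in Python with exact fractions. This is an illustrative sketch, assuming pmfs are stored as dictionaries from value to probability (the function name `convolve_pmfs` is hypothetical); it only visits values with nonzero probability, so the zero terms of the infinite sum never appear.

```python
from collections import defaultdict
from fractions import Fraction


def convolve_pmfs(p_x, p_y):
    """pmf of Z = X + Y for independent X and Y.

    Implements p_Z(n) = sum over m of p_X(m) * p_Y(n - m): every pair
    (m, j) with nonzero probability contributes p_X(m) * p_Y(j) to n = m + j.
    """
    p_z = defaultdict(Fraction)  # Fraction() == 0
    for m, px in p_x.items():
        for j, py in p_y.items():
            p_z[m + j] += px * py
    return dict(sorted(p_z.items()))


p_X = {n: Fraction(1, 3) for n in (1, 2, 3)}
p_Y = {n: Fraction(1, 2) for n in (1, 2)}
p_Z = convolve_pmfs(p_X, p_Y)

for z, p in p_Z.items():
    print(z, p)
```

Note that `Fraction` reduces automatically, so 2/6 is printed as 1/3.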
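Problems 4 and 6 both conclude that a certain sum collapses to a Poisson pmf. A quick numerical sanity check, offered as a sketch rather than a proof: evaluate the convolution of Problem 4 and the truncated binomial-mixture sum of Problem 6, and compare each with the claimed Poisson pmf. The means 2.0 and 3.5 used for Problem 4 are arbitrary illustrative values, the truncation point `n_max` is an assumption, and `math.comb` requires Python 3.8 or later.

```python
import math


def poisson_pmf(k, lam):
    """Poisson(lam) pmf, computed in log space to avoid overflow for large k."""
    return math.exp(k * math.log(lam) - lam - math.lgamma(k + 1))


def sum_pmf(z, lam_x, lam_y):
    """Problem 4: p_Z(z) = sum_{x=0}^{z} p_X(x) p_Y(z - x) for independent Poissons."""
    return sum(poisson_pmf(x, lam_x) * poisson_pmf(z - x, lam_y) for x in range(z + 1))


def thinned_pmf(k, rate=15.0, p=1 / 5, n_max=150):
    """Problem 6: P(Y = k) = sum_{n >= k} P(X = n) C(n, k) p^k (1 - p)^(n - k).

    The infinite sum is truncated at n_max (assumed large enough that the
    Poisson(15) tail beyond it is negligible).
    """
    return sum(
        poisson_pmf(n, rate) * math.comb(n, k) * p**k * (1 - p) ** (n - k)
        for n in range(k, n_max + 1)
    )


# Illustrative means for Problem 4; any positive values would do.
lam_x, lam_y = 2.0, 3.5
err4 = max(abs(sum_pmf(z, lam_x, lam_y) - poisson_pmf(z, lam_x + lam_y)) for z in range(30))
# Problem 6 claims Y is Poisson with mean 15 * (1/5) = 3.
err6 = max(abs(thinned_pmf(k) - poisson_pmf(k, 3.0)) for k in range(20))

print(err4, err6)
```

Both printed discrepancies should be at the level of floating-point round-off.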
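The arithmetic of Problem 5 (the sample mean, then $200 \cdot e^{-0.61}\,0.61^k/k!$ for each k, with the ≥5 row taking whatever is left of the 200) can be reproduced with a few lines of Python. This is a sketch; the variable names are illustrative.

```python
import math

# Observed counts from the table, indexed by k = 0..4 (the ">= 5" row is 0).
observed = [109, 65, 22, 3, 1]
n_reports = 200

# Sample mean: (0*109 + 1*65 + 2*22 + 3*3 + 4*1) / 200 = 122 / 200
lam_hat = sum(k * c for k, c in enumerate(observed)) / n_reports

# Theoretical counts 200 * e^{-lam} * lam^k / k! for k = 0..4
expected = [
    n_reports * math.exp(-lam_hat) * lam_hat**k / math.factorial(k)
    for k in range(len(observed))
]
# ">= 5" row: the remaining expected count, so the column sums to 200
expected.append(n_reports - sum(expected))

print(lam_hat)
print([round(e, 2) for e in expected])
```

The ≥5 entry comes out to about 0.085, which is why it is so sensitive to rounding.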
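For Problem 7, a numerical sketch can confirm two things: that $f_W(t) = (t/100)e^{-t/10}$ really is the derivative of $F_W$ (via a central difference), and that integrating $t\,f_W(t)$ reproduces E(W) = 20 from part a. The step size `h`, the cutoff `upper` (beyond which the tail is assumed negligible), and the midpoint rule are illustrative choices, not part of the original solution.

```python
import math

RATE = 1 / 10  # buses per minute


def F_W(t):
    """P(W <= t) = 1 - P(0 buses in [0, t]) - P(1 bus in [0, t])."""
    if t < 0:
        return 0.0
    mu = RATE * t  # Poisson mean number of buses by time t
    return 1 - math.exp(-mu) - mu * math.exp(-mu)


def f_W(t):
    """Density from differentiating F_W: (t / 100) e^{-t/10} for t >= 0."""
    return (t / 100) * math.exp(-t / 10) if t >= 0 else 0.0


# Compare a central-difference slope of F_W with f_W at a few times.
h = 1e-5
derivative_gaps = [
    abs((F_W(t + h) - F_W(t - h)) / (2 * h) - f_W(t)) for t in (1.0, 10.0, 35.0)
]

# E(W) = integral of t f_W(t) over [0, upper], by the midpoint rule.
n, upper = 200_000, 300.0
dt = upper / n
mean_W = dt * sum((i + 0.5) * dt * f_W((i + 0.5) * dt) for i in range(n))

print(max(derivative_gaps), round(mean_W, 4))
```

The slope gaps should be tiny and the integrated mean should come out at 20 minutes, agreeing with part a.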
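As an alternative to tables or Excel's NORMSDIST/NORMSINV in Problems 8-10, Python's standard library offers `statistics.NormalDist` (Python 3.8 or later), whose `cdf` and `inv_cdf` methods play the roles of $\Phi$ and $\Phi^{-1}$. A minimal sketch, with illustrative variable names:

```python
from statistics import NormalDist

Z = NormalDist()  # standard normal (mu=0, sigma=1)

# Problem 8: P(-c <= Z <= c) = Phi(c) - Phi(-c)
central = {c: Z.cdf(c) - Z.cdf(-c) for c in (1.0, 2.0, 3.0)}

# Problem 9: each tail holds (1 - level) / 2, so the cutoff is Phi^{-1}(1 - (1 - level) / 2)
cutoffs = {level: Z.inv_cdf(1 - (1 - level) / 2) for level in (0.95, 0.99)}

# Problem 10: P(X >= 800) = 1 - P(X <= 800) for X ~ Normal(mean 500, sd sigma)
upper_tails = {
    sigma: 1 - NormalDist(mu=500, sigma=sigma).cdf(800) for sigma in (110, 120)
}

for name, table in (("central", central), ("cutoffs", cutoffs), ("upper tails", upper_tails)):
    print(name, {k: round(v, 4) for k, v in table.items()})
```

Working directly with X's own mean and standard deviation skips the standardization step, but it computes the same probability as $1 - \Phi((800 - 500)/\sigma)$.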