
Digital Music and Music Processing

Survey

Document related concepts

Transcript

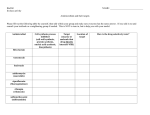

Music Processing
Roger B. Dannenberg

Overview
• Music Representation
• MIDI and Synthesizers
• Synthesis Techniques
• Music Understanding

Music Representation
• Acoustic Level: sound, samples, spectra
• Performance Information: timing, parameters
• Notation Information: parts, clefs, stem direction
• Compositional Structure: notes, chords, symbolic structure

Performance Information
• MIDI bandwidth is 3KB/s, or 180KB/min
• More typical: 3KB/minute, 180KB/hour
  • Complete Scott Joplin: 1MB
  • Output of 50 Composers (400 days of music): 500MB (1 CD-ROM)
• Synthesis of acoustic instruments is a problem

Music Notation
• Compact, symbolic representation
• Does not capture performance information
• Expressive "performance" not fully automated

Compositional Structure
Example: Nyquist (free software!)
; melody1: four notes in sequence, each stretched to a quarter note (q)
(defun melody1 ()
  (stretch q (seq (note a4) (note b4) (note cs5) (note d5))))
(defun counterpoint () …)
(defun composition () (sim (melody1) (counterpoint)))
; transpose the whole composition up 4 semitones and play it
(play (transpose 4 (composition)))

MIDI: Musical Instrument Digital Interface
Musical performance information:
• Piano keyboard key presses and releases
• "Instrument" selection (by number)
• Sustain pedal, switches
• Continuous controls: volume pedal, pitch bend, aftertouch
• Very compact (human gesture < 100Hz bandwidth)

MIDI (cont'd)
Point-to-point connections:
• MIDI IN, OUT, THRU
• Channels
No time stamps:
• (Almost) everything happens in real time
Asynchronous serial: 8-bit bytes + start + stop bits, 31.25K baud = 1MHz/32

MIDI Message Formats
Message                 Status  Data bytes
Key Up                  8 ch    key#, vel
Key Down                9 ch    key#, vel
Polyphonic Aftertouch   A ch    key#, press
Control Change          B ch    ctrl#, value
Program Change          C ch    index#
Channel Aftertouch      D ch    press
Pitch Bend              E ch    lo 7 bits, hi 7 bits
System Exclusive        F0      … DATA … F7

Standard MIDI Files
Key point: must encode timing information.
Interleave time differences with MIDI data.
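The message table and the key point about interleaving time differences with MIDI data can be made concrete with a short Python sketch. It assumes the status-byte layout in the table (message type in the high nibble, channel 0 to 15 in the low nibble) and the Standard MIDI File variable-length delta time (7 bits per byte, top bit set on every byte except the last). All function names are hypothetical helpers, not a library API. The sketch also checks the bandwidth figure: 31,250 baud at 10 bits per byte (start + 8 data + stop) gives 3,125 bytes/s, roughly 3KB/s.

```python
# Hypothetical helpers illustrating MIDI channel messages and SMF track events.

def note_on(channel: int, key: int, velocity: int) -> bytes:
    """Key Down: status 0x9n (n = channel), then key# and velocity (7 bits each)."""
    return bytes([0x90 | (channel & 0x0F), key & 0x7F, velocity & 0x7F])

def note_off(channel: int, key: int, velocity: int = 64) -> bytes:
    """Key Up: status 0x8n, then key# and release velocity."""
    return bytes([0x80 | (channel & 0x0F), key & 0x7F, velocity & 0x7F])

def pitch_bend(channel: int, value: int) -> bytes:
    """Pitch Bend: status 0xEn, then the low 7 bits and high 7 bits of a 14-bit value."""
    return bytes([0xE0 | (channel & 0x0F), value & 0x7F, (value >> 7) & 0x7F])

def encode_vlq(n: int) -> bytes:
    """Variable-length quantity: 7-bit groups, most significant first.
    Every byte except the last has its top bit set; zero is the single byte 00."""
    if n < 0:
        raise ValueError("delta time must be non-negative")
    out = [n & 0x7F]              # last byte: top bit clear
    n >>= 7
    while n:
        out.append(0x80 | (n & 0x7F))
        n >>= 7
    return bytes(reversed(out))

def track_event(delta_ticks: int, message: bytes) -> bytes:
    """<track event> = <delta time> <event>"""
    return encode_vlq(delta_ticks) + message

# 31,250 baud, 10 bits on the wire per byte (start + 8 data + stop).
BYTES_PER_SECOND = 31_250 // 10

if __name__ == "__main__":
    # Middle C (key 60) on channel 0, released 96 ticks later.
    track = track_event(0, note_on(0, 60, 100)) + track_event(96, note_off(0, 60))
    print(track.hex(" "))          # prints: 00 90 3c 64 60 80 3c 40
    print(BYTES_PER_SECOND, "bytes/s")
```

Note how the timing information costs as little as one byte per event: small deltas fit in a single VLQ byte, and only long gaps need more.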
<track data> = 1 or more <track event>
<track event> = <delta time> <event>
<event> = <MIDI data> or <meta event>
<meta event> = FF <type> <length> <data>
Delta times use a variable-length encoding. A delta time is always present; zero is stored as the single byte 00.

Standard MIDI Files (cont'd)
A header chunk, MThd <length> <header data>, holds the header info. It is followed by track chunks, each MTrk <length> <track data>; the data in a track can address all 16 channels.

Music Synthesis Introduction
Primary issue is control:
• No control: digital audio (start, stop, ...)
• Complete control: digital audio (S[0], S[1], S[2], ...)
• Parametric control: synthesis

Music Synthesis Introduction (cont'd)
What parameters?
• Pitch
• Loudness
• Timbre (e.g. which instrument)
• Articulation, expression, vibrato, etc.
• Spatial effects (e.g. reverberation)
Why synthesize?
• A high-level representation provides precision of specification and supports interactivity.

Additive Synthesis
$$S[i] = \sum_{j=0}^{n-1} A_j[i] \, \sin\left(\omega_j[i] \, i \, t\right)$$
where i is the sample index and t is the sample period. Amplitude A_j[i] and frequency ω_j[i] are specified for each partial (sinusoidal component): potentially 2n times as many control samples as signal samples!

Additive Synthesis (cont'd)
• Often uses piece-wise linear control envelopes to save space
• Still difficult to control, because there are so many parameters and the parameters do not match perceptual attributes

Table-Lookup Oscillators
If the signal is periodic, store one period.
Control parameters: pitch, amplitude, waveform
[Diagram: Frequency is added (+) to Phase each sample; Phase indexes the stored period; the output is multiplied (x) by Amplitude.]
(Note that phase and frequency are fixed-point or floating-point numbers.)
Efficient, but ... spectrum is static.

FM Synthesis
[Diagram: a modulator oscillator (MOD) summed (+) into a carrier oscillator (FREQ, AMPL)]
• Usually use sinusoids
• "Carrier" and "modulator" are both at audio frequencies
• If the frequencies are in a simple ratio (R), the output is periodic (harmonic spectrum)
• Output varies from a sinusoid to a complex signal as MOD increases
$$\text{out} = \text{AMPL} \cdot \sin\left(2\pi \cdot \text{FREQ} \cdot t + \text{MOD} \cdot \sin(2\pi R \cdot \text{FREQ} \cdot t)\right)$$

FM Synthesis (cont'd)
Interesting sounds, time-varying spectra, and ...
Low computation requirements
Often uses more than 2 oscillators
… but …
Hard to recreate a specific waveform
No successful analysis procedure

Sample-based Synthesis
• Samplers store waveforms for playback
• Sounds are "looped" to extend duration
• Spectrum is static (as in table lookup), so:
  • different samples are used for different pitches
  • simple effects are added: filter, vibrato, envelope
[Diagram: amplitude over time: Attack, Loop, Loop again, ...]

Physical Models
Additive, FM, and sampling are more or less perception-based.
Physical modeling is source-based: compute the wave equation and simulate attached reeds, bows, etc.
Example: Reed → Bore → Bell

Physical Models (cont'd)
Difficult to control, and ...
Can be very computationally intensive
… but …
Produce "characteristic" acoustic sounds.

Music Understanding Introduction
• Score Following, Computer Accompaniment
• Interactive Performance
• Style Recognition
• Conclusions

What Does Music Mean?
• Emotion
• Formal structures
• Abstract
• Physical

What is Music Understanding?
Translation? Recognition? (Of what?) Parsing? Pattern forming? Recognition of themes?
Music Understanding is the recognition of pattern and structure in music.

Computer Accompaniment
[Diagram: Performance → Input Processing → Matching (against the Score for Performer) → Accompaniment (using the Score for Accompaniment) → Music Synthesis → Accompaniment Performance]
(see Dannenberg '84)

Interactive Performance
Traditional Western composition is carefully composed, but the result is static. The composer is the central figure.
Jazz and other improvisations are not carefully composed (typically small structures), but the result is dynamic and spontaneous. The performer is the central figure.
Can we integrate these two?

A New Approach to Music Making
Computers let us put compositional theories into programs.
Music understanding helps us tie programs to live performance.
The result can be carefully composed and structured: composer-oriented.
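The Matching step in the computer-accompaniment diagram has to decide, note by note, where the performer is in the score despite wrong, extra, or skipped notes. One way to do this is a longest-common-subsequence style dynamic program, sketched below in Python. This is a simplified illustration under stated assumptions (pitches arrive as MIDI key numbers, timing is ignored); it is not necessarily the exact algorithm of Dannenberg '84, and the class and method names are hypothetical.

```python
from typing import List, Optional

class ScoreFollower:
    """Hypothetical LCS-style matcher: rates how many performed notes match the score, in order."""

    def __init__(self, score: List[int]) -> None:
        self.score = score                    # expected pitches (MIDI key numbers), in order
        self.row = [0] * (len(score) + 1)     # row[j]: best match count using score[:j]
        self.position: Optional[int] = None   # index of the last matched score note

    def hear(self, pitch: int) -> Optional[int]:
        """Process one performed pitch; return the matched score index, or None if unmatched."""
        prev = self.row
        new = [0] * len(prev)
        for j in range(1, len(prev)):
            # Skip score note j - 1, or leave the performed note unmatched (wrong/extra note).
            new[j] = max(new[j - 1], prev[j])
            if self.score[j - 1] == pitch:
                # Or match the performed note with score note j - 1.
                new[j] = max(new[j], prev[j - 1] + 1)
        self.row = new
        if new[-1] > prev[-1]:
            # The rating improved: report the earliest score note achieving the new best.
            j = next(k for k in range(1, len(new)) if new[k] == new[-1])
            self.position = j - 1
            return self.position
        return None

if __name__ == "__main__":
    follower = ScoreFollower([60, 62, 64, 65, 67])
    for p in [60, 62, 61, 65, 67]:   # 61 is a wrong note; 64 is skipped by the performer
        print(p, follower.hear(p))
```

Each performed note costs time proportional to the score length; a practical real-time matcher would typically restrict the computation to a window around the current position.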
Style Recognition
Everyone recognizes musical style: "I don't know anything about music, but I know what I like."
What makes something lyrical? Syncopated?

Experimental Setup
[Diagram: an unknown performance ("?") to be classified as Pointillistic, Lyrical, Frantic, or Syncopated]

Music Understanding Conclusions
• Music Understanding: the recognition of pattern or structure in music.
• Music Understanding is necessary for high-level interfaces between musicians and computers.

Music Summary
• Rich in representations
• Different representations support different tasks
• Active research in:
  • Synthesis
  • Understanding
Hardware to Software
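Returning to the Additive Synthesis slides: the sum of partials with piece-wise linear control envelopes can be sketched in Python as follows. This is a minimal illustration under assumptions: each envelope is a hypothetical list of (sample index, value) breakpoints, and frequencies are given in Hz. Instead of evaluating sin(ω_j[i] · i · t) directly, the sketch accumulates each partial's phase sample by sample. With ω = 2π × frequency, this gives the same result when the frequency is constant, and it avoids phase jumps when the frequency changes.

```python
import math
from bisect import bisect_right
from typing import List, Sequence, Tuple

# Hypothetical envelope format: (sample index, value) breakpoints, sorted by index.
Envelope = Sequence[Tuple[int, float]]

def pwl(env: Envelope, i: int) -> float:
    """Piece-wise linear envelope value at sample i; held constant outside the breakpoints."""
    k = bisect_right([x for x, _ in env], i)
    if k == 0:
        return env[0][1]
    if k == len(env):
        return env[-1][1]
    (x0, y0), (x1, y1) = env[k - 1], env[k]
    return y0 + (y1 - y0) * (i - x0) / (x1 - x0)

def additive(n_samples: int, sample_rate: float,
             partials: Sequence[Tuple[Envelope, Envelope]]) -> List[float]:
    """Sum of sinusoidal partials; each is (amplitude envelope, frequency envelope in Hz)."""
    phases = [0.0] * len(partials)
    out = []
    for i in range(n_samples):
        s = 0.0
        for j, (amp_env, freq_env) in enumerate(partials):
            s += pwl(amp_env, i) * math.sin(phases[j])
            # Accumulate phase so a changing frequency does not cause discontinuities.
            phases[j] += 2 * math.pi * pwl(freq_env, i) / sample_rate
        out.append(s)
    return out

if __name__ == "__main__":
    sr = 8000.0
    # A 440 Hz partial fading out while an 880 Hz partial fades in over one second.
    partials = [
        ([(0, 1.0), (8000, 0.0)], [(0, 440.0)]),
        ([(0, 0.0), (8000, 0.5)], [(0, 880.0)]),
    ]
    print(len(additive(8000, sr, partials)))
```

Even this toy example needs two envelopes per partial. That matches the slide's point: control data grows with the number of partials, and the parameters do not map directly onto perceptual attributes.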
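For the Table-Lookup Oscillators slide, here is a Python sketch of the frequency, phase, and amplitude structure: one stored period, a phase that advances by a frequency-dependent increment each sample, and an output scaled by amplitude. Phase is kept as a floating-point number, one of the two options the slide mentions. The linear interpolation between table entries is an added refinement that is not on the slide, and the example waveform in `make_table` is arbitrary. The sketch assumes a non-negative frequency.

```python
import math
from typing import List, Sequence

def make_table(size: int = 512) -> List[float]:
    """One stored period: a sine plus a weaker 3rd harmonic (an arbitrary example waveform)."""
    return [math.sin(2 * math.pi * k / size) + 0.3 * math.sin(2 * math.pi * 3 * k / size)
            for k in range(size)]

def table_osc(table: Sequence[float], freq: float, amp: float,
              sample_rate: float, n_samples: int) -> List[float]:
    """Table-lookup oscillator with a floating-point phase accumulator (assumes freq >= 0)."""
    size = len(table)
    phase = 0.0                          # current position in the table, in table entries
    incr = freq * size / sample_rate     # "Frequency": table entries to advance per sample
    out = []
    for _ in range(n_samples):
        k = int(phase)
        frac = phase - k
        # Linear interpolation between adjacent entries, wrapping at the end of the period.
        value = table[k] + frac * (table[(k + 1) % size] - table[k])
        out.append(amp * value)          # "x Amplitude"
        phase = (phase + incr) % size    # "Frequency + Phase", wrapped to one period
    return out

if __name__ == "__main__":
    tone = table_osc(make_table(), freq=440.0, amp=0.5, sample_rate=44100.0, n_samples=1000)
    print(len(tone))
```

Each output sample costs only a lookup, an interpolation, and a multiply, which is why the method is efficient. Because the same stored period is always read, the spectrum is static.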
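The FM Synthesis formula, out = AMPL · sin(2π · FREQ · t + MOD · sin(2π R · FREQ · t)), translates directly into a Python sketch that samples it at a given rate. The parameter names mirror the slide's symbols. Sampling the continuous formula directly, as done here, is a simplification; it is not the only way FM is implemented.

```python
import math
from typing import List

def fm(freq: float, ratio: float, mod: float, ampl: float,
       sample_rate: float, n_samples: int) -> List[float]:
    """out = AMPL * sin(2*pi*FREQ*t + MOD * sin(2*pi*R*FREQ*t)), sampled at sample_rate."""
    out = []
    for i in range(n_samples):
        t = i / sample_rate
        modulator = mod * math.sin(2 * math.pi * ratio * freq * t)
        out.append(ampl * math.sin(2 * math.pi * freq * t + modulator))
    return out

if __name__ == "__main__":
    sr = 8000.0
    plain = fm(100.0, 2.0, 0.0, 1.0, sr, 80)   # MOD = 0: a pure sinusoid
    rich = fm(100.0, 2.0, 3.0, 1.0, sr, 80)    # MOD = 3: a more complex waveform
    print(len(plain), len(rich))
```

With FREQ = 100 Hz and R = 2 (a simple ratio), the output repeats every 1/100 s, that is, every 80 samples at 8 kHz, whatever the value of MOD. Raising MOD changes the waveform within each period from a sinusoid to a more complex shape.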
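For the Sample-based Synthesis slide, the "Attack, Loop, Loop again" idea can be sketched in Python as follows. The attack portion of a stored sample plays once, then a loop region repeats until the requested duration is reached. The loop points are assumed to be given as sample indices, and the function name is hypothetical. In this simplified version, material after `loop_end` (for example a release) is never played.

```python
from typing import List, Sequence

def play_looped(sample: Sequence[float], loop_start: int, loop_end: int,
                n_out: int) -> List[float]:
    """Play sample[0:loop_end] once, then repeat sample[loop_start:loop_end] until n_out samples."""
    if not 0 <= loop_start < loop_end <= len(sample):
        raise ValueError("need 0 <= loop_start < loop_end <= len(sample)")
    out = []
    i = 0
    while len(out) < n_out:
        out.append(sample[i])
        i += 1
        if i == loop_end:       # end of the loop region: jump back ("loop again")
            i = loop_start
    return out

if __name__ == "__main__":
    # Attack = [1, 2], loop region = [3, 4]
    print(play_looped([1, 2, 3, 4, 5], loop_start=2, loop_end=4, n_out=9))
```

Because the looped segment never changes, the spectrum stays static. Samplers compensate with the measures listed on the slide: different samples for different pitches, plus filter, vibrato, and envelope effects.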