The University of Queensland
School of Information Technology and Electrical Engineering
Semester 2, 2014

COMS4200/7200 – Tutorial 1, Answers

Q1) What are some of the key motivations behind SDN, i.e. what are the limitations of current networks and network architectures?

Answer:

• Ossification of current networks, slow innovation
• Networks are increasingly large and complex, and are increasingly hard to configure and manage
  o Lack of control abstractions, lots of low-level, individual device configuration (Analogy: like programming without an OS)
  o This makes it hard to adapt networks to changing demands
• Current networks do not support network virtualisation well enough
• Vertically integrated solutions (mainframe analogy), vendor lock-in

Q2) What is the key technical difference of SDN compared to traditional networks?

Answer:

The key difference of SDN is the separation of the control and data plane, and the logically centralised control of the network. This is in contrast to the control via distributed protocols (e.g. routing protocols) in traditional IP networks.

Q3) What are potential key benefits of SDN?

Answer:

• Increased rate of innovation
• Greater flexibility and agility through programmability
• Simplified network control and management
• Increased efficiency and scalability
• Lowered cost

Q4) Based on the talk by Scott Shenker watched during the lecture, what are the 3 key abstractions in SDN, and how are they implemented?

Talk: http://www.youtube.com/watch?v=YHeyuD89n1Y

Answer:

Forwarding abstraction:

Should provide an abstraction of the switch functionality, but should not be vendor or implementation specific. This is essentially the southbound interface.

OpenFlow provides a forwarding abstraction (not the only one, but currently the predominant one).

Distributed state abstraction:

The Network OS provides a global view of the network (in a logically centralised way) and presents it as an (annotated) ‘network graph’ to the network applications.

The Network Operating System functionality is provided by so-called ‘Network Controllers’ or ‘Network Controller Platforms’. The original attempt to implement this was NOX, and many more have been developed more recently.

NOX paper:

Gude, Natasha, et al. "NOX: towards an operating system for networks." ACM SIGCOMM Computer Communication Review 38.3 (2008): 105-110.

http://yuba.stanford.edu/~casado/nox-ccr-final.pdf

Configuration abstraction or specification abstraction:

A simplified, high-level abstraction of how to configure the network: it specifies ‘what’ the network should do (goals, policies), not ‘how’ it should be done.

Example: cross-bar model for access control

This configuration or specification abstraction is the northbound interface in the SDN architecture.

There is currently no clear consensus (or standard) on what this should look like.

Q5) Based on the talk by Scott Shenker, what’s the key value proposition of SDN, in three words?

Answer:

Innovation through abstraction.

Q6) Given that the Network Operating System provides the abstraction of a global view of the network as a ‘network graph’, explain how this can be used to implement shortest path routing.

Answer:

If we have a global view of the network, i.e. the network topology as a graph, computing the shortest paths between any two nodes is relatively easy, e.g. by using Dijkstra’s algorithm. Once routes are computed by the SDN controller, they need to be installed at the corresponding switches, typically by sending the corresponding OpenFlow messages.

Q7) The ONF Whitepaper on SDN provides a brief introduction to OpenFlow, and provides the definition of a ‘flow’. Compare the granularity of control that OpenFlow provides with traditional IP routing.

Answer:

In traditional IP routing, all packets between a source and destination follow the same path through the network. This is due to the fact that forwarding decisions at each hop are based on the destination IP address in the packet. In contrast, the matching rules in flow tables of SDN switches are much more fine-grained. A flow is defined by a match rule consisting of a large number of packet header fields, including MAC source and destination address, IP source and destination address, UDP/TCP port numbers, etc. A separate forwarding action can be defined for each unique flow, i.e. each unique combination of values of all these header fields.
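The shortest-path computation described in the answer to Q6 of Tutorial 1 can be sketched as follows. This is only a minimal illustration, not a controller implementation: the topology, the switch names (`s1`..`s4`), the link costs and the helper `forwarding_entries` are all made up for the example, and the step of sending OpenFlow messages is represented only by a list of (switch, next hop) pairs.

```python
import heapq


def shortest_paths(graph, source):
    """Dijkstra's algorithm on a graph given as {node: {neighbour: cost}}."""
    dist = {source: 0}
    prev = {}
    heap = [(0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist.get(u, float("inf")):
            continue  # stale heap entry, a shorter path to u was already found
        for v, cost in graph[u].items():
            nd = d + cost
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                prev[v] = u
                heapq.heappush(heap, (nd, v))
    return dist, prev


def path_to(prev, source, target):
    """Rebuild the path source -> target from the predecessor map."""
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return path[::-1]


def forwarding_entries(path):
    """Hypothetical helper: (switch, next_hop) pairs a controller would install."""
    return list(zip(path[:-1], path[1:]))


# Assumed example topology (the 'network graph' held by the controller).
topology = {
    "s1": {"s2": 1, "s3": 4},
    "s2": {"s1": 1, "s3": 1, "s4": 5},
    "s3": {"s1": 4, "s2": 1, "s4": 1},
    "s4": {"s2": 5, "s3": 1},
}

dist, prev = shortest_paths(topology, "s1")
route = path_to(prev, "s1", "s4")
print(route, dist["s4"], forwarding_entries(route))
```

In a real deployment each pair returned by `forwarding_entries` would correspond to a flow rule pushed to the named switch; how that is done depends on the controller platform in use.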

1

2

Q3)

a) In an M/M/1 System with arrival rate sand service rate =5 [1/s], to what

percentage is the server utilised on average?

The University of Queensland

School of Information Technology and Electrical Engineering

Answer:

4/5=0.8 80%

Semester 2, 2014

b) When the system is started, what’s the probability that there are no customers arriving

within the next 2 seconds? What’s probability of no customers arriving in the next 2

seconds after the system has been running for 2 minutes?

COMS4200/7200 – Tutorial 2, Answers

Answer:

We have a Poisson process here, which has no memory, i.e. the probability of k customers arriving in an

interval of t seconds does not depend on the position of this interval on the time axis.

Q1) Classify the following Queuing Systems using Kendall’s Notation:

P (t , k )

a) Data packets arrive at a router with exponential inter-arrival time. The packet length is

independent from the arrival time and has also an exponential distribution. The time it

takes to forward a packet is proportional to its length. We assume that the router can

buffer an unlimited number of packets.

e t ( t ) k

k!

In this case we have: t= 2s, k=0, =4

P(2,0)=e-t

Answer:

M/M/1

(M/M/1/FIFO/∞/∞)

= e-

c) What’s the probability that there are more than two customers in the system?

b) Consider the express checkout line at a supermarket with 5 checkout operators.

Customers arrive according to a Poisson process and the service time has an unknown

distribution. We assume that the queue can fit a maximum of 100 customers (including

customers being served).

Answer:

General M/M/1 results:

P0 = 1-0.2

Pk = (1-)k

P1= (1-)1 = 0.16

P2 = (1-)2 = 0.128

Answer:

M/G/5/100

(M/G/5/FIFO/100/∞)

Probability that there are up to 2 customers in the system: P0 + P1 + P2 = 0.488

Probability that there are more than 2 customers in the system: 1-(P0 + P1 + P2) = 0.512

c) Cars arrive at a car wash station with an average rate of 5 per hour. It takes exactly 5

minutes for each car to be washed by the single station. There is unlimited space for cars

to queue.

Q4) Suppose we have an M/M/2/2 system where a maximum of two customers are

allowed in the system. Since we have two servers, this means we have no queuing space.

Answer:

G/D/1

(G/D/1/FIFO/∞/∞)

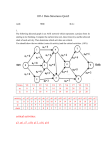

a) Draw a state transition diagram for this scenario (Markov chain)

Q2) Skiers arrive at a Ski lift with an average rate of 10 per minute. (The inter-arrival times

follow a normal distribution.) The lift can take 1 Skier every 4 seconds. Observation of the

queue shows that on average, there are 100 people queuing. How long is the average wait

in the queue?

Answer:

0

Answer:

We can use Little’s Law, which is independent of the distribution of arrival and service times.

Little: N=λT

Since we can apply Little’s Law to any sub-system, we apply it to the queue.

NQ=λTQ

TQ= NQ/λ = 100/10=10 minutes

1

1

2

2

b) Find the probabilities for each of the states of the system.

Answer:

Equilibrium conditions (with ρ = λ/µ):

$$\text{State 0:}\quad P_0\lambda = P_1\mu \;\Rightarrow\; P_1 = P_0\frac{\lambda}{\mu}$$

$$\text{State 1:}\quad P_1\lambda + P_1\mu = P_0\lambda + P_2 \cdot 2\mu$$

$$P_2 = \frac{P_1(\lambda+\mu) - P_0\lambda}{2\mu} = \frac{P_0\frac{\lambda}{\mu}(\lambda+\mu) - P_0\lambda}{2\mu} = 0.5\,P_0\left(\frac{\lambda}{\mu}\right)^2$$

Normalisation: P0+P1+P2 = 1

P0 + P0 ρ + 0.5 P0 ρ^2 = 1

P0 = 1/(1+ρ+0.5 ρ^2)

P1 = ρ/(1+ρ+0.5 ρ^2)

P2 = 0.5 ρ^2/(1+ρ+0.5 ρ^2)

Q5) A communication line capable of transmitting at a rate of 50 Kbit/s will be used to accommodate 10 sessions, each generating Poisson traffic at a rate of 150 packets/min. Packet lengths are exponentially distributed with a mean of 1000 bits.

a) In a first scenario, we split the channel into 10 TDM sub-channels of equal capacity, with 5 Kbit/s each. Each sub-channel is allocated to a session. What’s the average delay T that packets experience in this scenario?

Answer:

We can model this scenario as 10 separate M/M/1 queues, with λ = 150/min = 2.5/s. The transmission rate of each sub-channel is 5 Kbit/s; with an average packet size of 1000 bits, this results in a service rate of µ = 5/s.

From our analysis of M/M/1 queues we know T = 1/(µ-λ)

T = 1/(5-2.5) [s] = 0.4 s

b) In this scenario, the 10 sessions share the 50 Kbit/s channel via statistical multiplexing, i.e. there is one single queue where packets from all the 10 sessions are queued (e.g. in a router). The single server (transmission line) is fed from this queue. What’s the average delay T that packets experience in this scenario?

Answer:

We can model Scenario b) as a single M/M/1 queue with parameters λ = 1500/min = 25/s and µ = 50/s.

T = 1/(µ-λ) = 1/(50-25) [s] = 0.04 s

(We use the fact that merging Poisson processes results in a new Poisson process.)

This shows that decreased delay is one of the general benefits of packet switching over circuit switching.

The same model and argument can be applied in any situation where we evaluate the use of one fast server versus multiple slower servers (and individual queues) with equal total capacity.

COMS4200/7200 – Tutorial 3, Answers

Q1) Classify the following Queuing Systems using Kendall’s Notation:

A call centre has 10 phone lines and employs 4 operators during daytime. The time between calls is assumed to have an exponential distribution. The distribution of the call durations is not known.

Answer:

When all operators are busy, a caller is put on hold. If all 10 phone lines are busy, a caller is rejected, i.e. gets the busy tone. We can assume a FIFO queue.

M/G/4/10

Q2) What’s the ‘offered load’ in Erlang for the following system?

a) Calls arrive with an average rate of 10 per minute. We assume the average call duration is 30 seconds.

Answer: Offered load = average number of circuits (servers) that would be used if no calls were rejected.

A=λ/µ=10/(1/0.5) = 5 Erlang

Q3) We have a call centre with the following properties:

• Two operators
• Two phone lines
• Call arrivals are Poisson, with an average rate of 20 per hour
• Service time (for each operator) has exponential distribution with Ts = 3 min

a) What type of queuing system do we have?

Answer: M/M/2/2

b) If we ring the call centre, what’s the probability of an operator answering the phone, instead of getting a busy tone?

Answer: We need to find the “Blocking Probability” PB. The Erlang B formula gives us this result.

m=2, λ=20/hour, µ=1/3min = 20/hour

λ/µ=ρ=20/20=1

$$P_B = \frac{(\lambda/\mu)^m / m!}{\sum_{n=0}^{m} (\lambda/\mu)^n / n!} = \frac{1^2/2!}{1^0/0! + 1^1/1! + 1^2/2!} = \frac{0.5}{2.5} = 0.2 = 20\%$$

The probability of getting an operator to answer our call is 1-PB = 1 – 0.2 = 0.8.

c) What’s the probability that there are two customers in the system, i.e. P2=?

Answer: This is exactly the blocking probability! The probability that a customer is rejected is the probability that both servers are busy, which is P2.

We also could have used the results from the previous Tutorial, Question 4. There we found for an M/M/2/2 system:

ρ=λ/µ

P0 = 1/(1+ρ+0.5 ρ^2)

P1 = ρ/(1+ρ+0.5 ρ^2)

P2 = 0.5 ρ^2/(1+ρ+0.5 ρ^2)

For ρ=λ/µ = 20/20 = 1, we get P2 = 0.5/(1+1+0.5) = 0.2

Q4) Alice and Bob are 2 checkout operators at Coles. On average they are both able to handle 10 customers per hour, with customers arriving with exponentially distributed inter-arrival times (with the same average arrival rate of 9 customers per hour at both terminals). The store management notices that the queue at Bob’s checkout terminal is on average significantly longer than the one at Alice’s terminal.

a) How can you explain this?

Answer: We have 2 individual M/G/1 systems with the same λ and µ.

The Pollaczek-Khinchin formula tells us that for M/G/1 systems, a high variance of service times increases the average Queuing Delay and the average Queue Length. We can therefore say that Alice works more consistently whereas Bob has bursts of intense activity and periods where he takes it easy.

I.e. Alice’s service time has a lower variance than Bob’s.

M/G/1:

$$\text{Utilisation factor: } \rho = \frac{\lambda}{\mu}$$

Pollaczek-Khinchin formula:

$$T_Q = \frac{\lambda\,\mathrm{Var}(T_S) + \lambda/\mu^2}{2(1-\rho)}$$

$$N_Q = \frac{\lambda^2\,\mathrm{Var}(T_S) + \rho^2}{2(1-\rho)}$$

b) Through observation over a few days, you find that the variance of Alice’s service time is 0.01 [h²]. Bob’s service time has a variance of 0.04 [h²]. How much longer is the queue in front of Bob’s terminal on average?

Answer:

We use the Pollaczek-Khinchin formula.

λ=9 per hour

µ=10 per hour

Alice:

$$N_Q = \frac{\lambda^2\,\mathrm{Var}(T_S) + \rho^2}{2(1-\rho)} = \frac{81 \cdot 0.01 + 0.9^2}{0.2} = 8.1$$

Bob:

$$N_Q = \frac{\lambda^2\,\mathrm{Var}(T_S) + \rho^2}{2(1-\rho)} = \frac{81 \cdot 0.04 + 0.9^2}{0.2} = 20.25$$

Difference: 20.25 – 8.1 = 12.15

Bob’s queue has on average 12.15 more customers waiting than Alice’s queue.

You can verify the results with the Excel spreadsheet available on the course web site.
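The Erlang B result from Q3 and the Pollaczek-Khinchin queue lengths from Q4 of Tutorial 3 can be checked numerically. The sketch below is only a small calculator for those two formulas; the function names `erlang_b` and `pk_queue_length` are chosen for this example and do not come from the course material.

```python
from math import factorial


def erlang_b(offered_load, servers):
    """Blocking probability of an M/M/m/m system (Erlang B formula).

    offered_load is A = lambda / mu in Erlang, servers is m.
    """
    numerator = offered_load ** servers / factorial(servers)
    denominator = sum(offered_load ** n / factorial(n) for n in range(servers + 1))
    return numerator / denominator


def pk_queue_length(arrival_rate, service_rate, service_time_variance):
    """Mean number waiting in an M/G/1 queue (Pollaczek-Khinchin formula)."""
    rho = arrival_rate / service_rate
    if rho >= 1:
        raise ValueError("queue is unstable for rho >= 1")
    return (arrival_rate ** 2 * service_time_variance + rho ** 2) / (2 * (1 - rho))


# Q3: two operators, lambda = mu = 20 per hour, so A = 1 Erlang.
blocking = erlang_b(1.0, 2)

# Q4: lambda = 9/h, mu = 10/h, variances given in h^2.
alice = pk_queue_length(9, 10, 0.01)
bob = pk_queue_length(9, 10, 0.04)

print(f"P_B = {blocking:.3f}")
print(f"N_Q Alice = {alice:.2f}, Bob = {bob:.2f}, difference = {bob - alice:.2f}")
```

Both functions take rates in the same time unit (here per hour), which is why the variances must be expressed in h².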

COMS4200/7200 – Tutorial 4, Answers

Q1) What are the 5 conceptual areas of network management as defined by ISO?

Answer:

• Performance Management
• Fault Management
• Configuration Management
• Accounting Management
• Security Management

Q2) What are the components (entities) of a general network management architecture?

Answer:

• Management station
• Managed entity
• Agent
• Management Protocol
• Management Data (Management Information)

Q3) What is the following OID: {1 3 6 1 2 1 1}?

Answer:

System in MIB-II

Q4) Encode the following in ASN.1 BER Transfer Syntax.

Type: Counter32
Value: 1000 (decimal)

Answer:

T: 01 0 00001 (Application Specific, Primitive, Code 1: Counter32)
L: 00000100 (Length 4 bytes, i.e. 32 bits)
V: 00000000 00000000 00000011 11101000 (Value 1000 in binary representation)

Q5) Decode the following ‘object’ encoded in ASN.1 BER Transfer Syntax.

00000110 00000111 00101011 00000110 00000001 00000010 00000001 00000001 00000011

The following partial view of the OID tree is provided:

1 – ISO
1.3 – ISO Identified Organization
1.3.6 – US DoD
1.3.6.1 – Internet
1.3.6.1.2 – Management
1.3.6.1.2.1 – MIB-II
1.3.6.1.2.1.1 – System
1.3.6.1.2.1.1.1 – SysDescription
1.3.6.1.2.1.1.3 – SysUptime
1.3.6.1.2.1.2 – Interface
1.3.6.1.2.1.2.2.1.2.1 – IfDescription1
1.3.6.1.2.1.2.2.1.2.2 – IfDescription2
1.3.6.1.2.1.2.2.1.4.1 – IfMTU1
1.3.6.1.2.1.2.2.1.4.2 – IfMTU2
1.3.6.1.2.1.2.2.1.5.1 – IfSpeed1
1.3.6.1.2.1.2.2.1.5.2 – IfSpeed2
1.3.6.1.2.1.2.2.1.6.1 – IfIpAddress1

Answer:

T: 00 0 00110 (Universal, primitive type, Object Identifier)
L: 00000111 (L = 7)
V: 00101011 (1,3) 00000110 (6) 00000001 (1) 00000010 (2) 00000001 (1) 00000001 (1) 00000011 (3)

V: {1 3 6 1 2 1 1 3}

System Uptime object in MIB-II

Q6) Decode the following ‘object’ encoded in ASN.1 BER Transfer Syntax.

00000011 00000010 00000111 10000001

Answer:

00000011: Bit String
00000010: length = 2
00000111: last 7 bits unused
10000001: value bits, of which only the first bit (1) is used

Bit String = ‘1’
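The BER encodings in Q4 to Q6 of Tutorial 4 can be reproduced with a few lines of code. The sketch below is a deliberately limited illustration, not a general BER library: it assumes short-form lengths, an OID whose first arc is 0 or 1, and, for Counter32, the fixed 4-byte value layout used in the tutorial answer (BER integer encodings normally use the minimal number of octets). All function names are invented for this example.

```python
def bits_to_bytes(bit_string):
    """Convert '00000110 00000111 ...' into a bytes object."""
    return bytes(int(octet, 2) for octet in bit_string.split())


def bytes_to_bits(data):
    """Convert bytes into space-separated groups of 8 bits."""
    return " ".join(f"{octet:08b}" for octet in data)


def decode_oid(data):
    """Decode a BER OBJECT IDENTIFIER (tag 0x06, short-form length only)."""
    if data[0] != 0x06:
        raise ValueError("not an OBJECT IDENTIFIER")
    body = data[2:2 + data[1]]
    # The first octet packs the first two arcs as 40 * X + Y (valid for X in {0, 1}).
    arcs = [body[0] // 40, body[0] % 40]
    value = 0
    for octet in body[1:]:
        value = (value << 7) | (octet & 0x7F)  # base-128, high bit = "more octets follow"
        if not octet & 0x80:
            arcs.append(value)
            value = 0
    return arcs


def decode_bit_string(data):
    """Decode a BER BIT STRING (tag 0x03) into a string of '0'/'1' characters."""
    if data[0] != 0x03:
        raise ValueError("not a BIT STRING")
    body = data[2:2 + data[1]]
    unused = body[0]  # number of unused bits in the final octet
    bits = "".join(f"{octet:08b}" for octet in body[1:])
    return bits[:len(bits) - unused]


def encode_counter32_fixed(value):
    """Encode Counter32 as in the tutorial answer: tag 0x41, length 4, 4 value bytes."""
    return bytes([0x41, 0x04]) + value.to_bytes(4, "big")


q5 = decode_oid(bits_to_bytes(
    "00000110 00000111 00101011 00000110 00000001 "
    "00000010 00000001 00000001 00000011"))
q6 = decode_bit_string(bits_to_bytes("00000011 00000010 00000111 10000001"))
q4 = bytes_to_bits(encode_counter32_fixed(1000))
print(q5, q6, q4)
```

The decoded OID can then be looked up in the partial OID tree given in Q5.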

Q7) What are the main improvements of SNMPv2c over SNMPv1?

Answer:

Adds GetBulk message
Allows downloading multiple objects (e.g. a table) with one request

Adds Inform message
For sending trap messages from manager to manager. Acknowledged.

Adds RMON functionality
Remote Monitoring probes (“super SNMP agent”) can be deployed to monitor entire subnets continuously and independently of the manager

Q8) What is the key difference between SNMP and OSI Network Management (CMIP) in terms of data model and transport protocol?

Answer:

SNMP:

• Static database
• No support for object oriented data model (no methods, no inheritance)
• Cannot express relationships between objects
• Connection-less, unreliable transport protocol (UDP)

OSI NM:

• Dynamic database
• Support of OO features, e.g. inheritance
• Can express relationships between objects
• Uses connection-oriented, reliable transport protocol

COMS4200/7200 – Tutorial 5, Answers

Q1) Describe two major differences between the ECN method and the RED method of congestion avoidance.

Answer:

First, the ECN method explicitly sends a congestion notification to the source by setting a bit, whereas RED implicitly notifies the source by simply dropping one of its packets. Second, the ECN method drops a packet only when there is no buffer space left, whereas RED drops packets before all the buffers are exhausted.

Q2) A token bucket scheme is used for traffic shaping. A new token is put into the bucket every 5 µsec. Each token is good for one short packet, which contains 48 bytes of data. What is the maximum sustainable data rate?

Answer:

With a token every 5 µsec, 200,000 packets/sec can be sent. Each packet holds 48 data bytes or 384 bits. The data rate is then 76.8 Mbps.

Q3) A computer on a 6-Mbps network is regulated by a token bucket. The token bucket is filled at a rate of 1 Mbps. It is initially filled to capacity with 8 megabits. How long can the computer transmit at the full 6 Mbps?

Answer:

The naive answer says that at 6 Mbps it takes 4/3 sec to drain an 8 megabit bucket. However, this answer is wrong, because during that interval, more tokens are generated. The correct answer is S = B/(M − R), where B is the bucket size, M the network data rate and R the token arrival rate (the average rate of the flow). Substituting, we get S = 8/(6 − 1) or 1.6 sec.
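The two token bucket calculations in Q2 and Q3 of Tutorial 5 can be written as small functions. This is just a sketch of the arithmetic used in the answers; the function and parameter names are chosen for illustration.

```python
def sustainable_rate_bps(token_interval_s, bits_per_token):
    """Long-term data rate when one token (worth bits_per_token) arrives every interval."""
    return bits_per_token / token_interval_s


def max_burst_duration_s(bucket_bits, line_rate_bps, token_rate_bps):
    """Time S = B / (M - R) a full bucket lets the sender transmit at line rate.

    While the sender drains the bucket at M, new tokens keep arriving at R,
    so the bucket empties at the net rate M - R.
    """
    if line_rate_bps <= token_rate_bps:
        raise ValueError("bucket never empties if tokens arrive at line rate or faster")
    return bucket_bits / (line_rate_bps - token_rate_bps)


# Q2: one token every 5 microseconds, each worth 48 bytes = 384 bits.
rate = sustainable_rate_bps(5e-6, 48 * 8)

# Q3: 8 megabit bucket, 6 Mbps line rate, 1 Mbps token rate.
burst = max_burst_duration_s(8e6, 6e6, 1e6)

print(f"{rate / 1e6:.1f} Mbps, {burst:.1f} s")
```

Setting `token_rate_bps` to 0 reproduces the naive 4/3 s answer, which shows where the two results differ.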

Q4) Consider the use of differentiated services with expedited forwarding. Is there a guarantee that expedited packets experience a shorter delay than regular packets? Why or why not?

Answer:

There is no guarantee. If too many packets are expedited, their channel may have even worse performance than the regular channel. DiffServ does not guarantee quality of service. It only reserves resources in routers (bandwidth, buffers, CPU) for a particular traffic class, but if the offered load exceeds the reserved resources, the quality of service will be poor.

Q5) In the figure below suppose a new flow E is added that takes a path from R1 to R2 to R6. How does the max-min bandwidth allocation change for the five flows?

Answer:

Allocation for flow A will be 1/2 on links R1R2 and R2R3. Allocation for flow E will be 1/2 on links R1R2 and R2R6. All other allocations remain the same.

Q6) Discuss the advantages and disadvantages of credits versus sliding window protocols.

Answer:

The sliding window is simpler, having only one set of parameters (the window edges) to manage. Windows are already used for flow control, so congestion control windows can be easily added. However, the credit scheme is more flexible, allowing a dynamic management of the buffering, separate from the acknowledgements.

Q7) Explain why congestion avoidance algorithms (congestion control laws) decide on how much bandwidth is given to each flow. Also discuss why efficiency, fairness, and convergence are important in these algorithms.

Answer:

The algorithms decide how much to decrease or increase the current bandwidth, so they decide about the bandwidth allocation. The allocation for all flows (aggregate bandwidth) should be efficient (close to the link capacity) but it also needs to be fair for the flows (or at least not starve some flows). The algorithms need to converge to the operating point in order to avoid oscillation in bandwidth allocation.

Q8) Some policies for fairness in congestion control are Additive Increase Additive Decrease (AIAD), Multiplicative Increase Additive Decrease (MIAD), and Multiplicative Increase Multiplicative Decrease (MIMD). Discuss these three policies in terms of convergence and stability.

Answer:

In AIAD and MIMD, the users will oscillate along the efficiency line, but will not converge. MIAD will converge just like AIMD. None of these policies are stable. Decrease policy in AIAD and MIAD is not aggressive, and increase policy in MIAD and MIMD is not gentle.

COMS4200/7200 – Tutorial 6, Answers

Q1) For the following Chord ring with m=6, write down the finger tables for Node 20 and for Node 41.

[Figure: Chord ring with nodes 6, 10, 14, 20, 25, 30, 34, 41, 44, 50 and 60]

Answer:

Finger table entries for Node n:

Finger table entry i: successor(n + 2^i)

For i=0..m-1

All calculations are done modulo 2^m=64

Node 20:

i=0: successor(20 + 1) = 25
i=1: successor(20 + 2) = 25
i=2: successor(20 + 4) = 25
i=3: successor(20 + 8) = 30
i=4: successor(20 + 16) = 41
i=5: successor(20 + 32) = 60

Node 41:

i=0: successor(41 + 1) = 44
i=1: successor(41 + 2) = 44
i=2: successor(41 + 4) = 50
i=3: successor(41 + 8) = 50
i=4: successor(41 + 16) = 60
i=5: successor(41 + 32) = 10
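The Chord finger tables from Q1 of Tutorial 6 follow mechanically from the rule "entry i = successor(n + 2^i) modulo 2^m". The sketch below applies that rule to the node identifiers that appear as labels of the ring figure (6, 10, 14, 20, 25, 30, 34, 41, 44, 50, 60); the function names are chosen for this example, and a real Chord node would of course not have the full node list available.

```python
def successor(nodes, key, m):
    """First node whose identifier is >= key on the ring of size 2**m (wrapping around)."""
    key %= 2 ** m
    for node in sorted(nodes):
        if node >= key:
            return node
    return min(nodes)  # wrapped past the highest identifier


def finger_table(nodes, n, m):
    """Finger table of node n: entry i points to successor(n + 2**i)."""
    return [successor(nodes, n + 2 ** i, m) for i in range(m)]


ring = [6, 10, 14, 20, 25, 30, 34, 41, 44, 50, 60]
fingers_20 = finger_table(ring, 20, 6)
fingers_41 = finger_table(ring, 41, 6)

for i, (a, b) in enumerate(zip(fingers_20, fingers_41)):
    print(f"i={i}: node 20 -> {a}, node 41 -> {b}")
```

The last entry for Node 41 illustrates the wrap-around: 41 + 32 = 73, which is 9 modulo 64, and the first node at or after 9 is 10.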

Q2) For a wireless link with the following parameters, what is the maximum distance between sender and receiver (Free Space Loss Model)?

Frequency: 900 MHz
Transmitter Antenna Gain (TxG): 15 dBi
Transmitter losses (TxL): 2 dB
Transmitter output power (TxP): 100 milliwatts
Receiver Losses (RxL): 1 dB
Receiver Antenna Gain (RxG): 15 dBi
Miscellaneous losses (ML): 4 dB
Receiver Sensitivity (RxS): -75 dBm
Link Margin (LM): 10 dB

Answer:

RxP = RxS + Link Margin = -75 dBm + 10 dB = -65 dBm

RxP = TxP + TxG - TxL - FSL - ML + RxG - RxL

FSL = RxG - RxP + TxP + TxG - TxL - ML - RxL

FSL = 15 dBi + 65 dBm + 20 dBm + 15 dBi - 2 dB - 4 dB - 1 dB = 108 dB

Convert to non-dB ratio:

X dB = 10 log10(P1/P2)

(P1/P2) = 10^(X/10)

108 dB:

(P1/P2) = 10^(108/10) = 10^10.8

Free Space Loss Model:

L = (4πdf/c)^2 = 10^10.8

d = L^0.5 * c/(4πf)

Speed of Light (approximately):

c = 3 * 10^8 m/s

d = 10^5.4 * 3*10^8 / (4 * 3.1416 * 900*10^6) = 6.66 km

Q3) Under what condition would it be possible to use CSMA/CD for IEEE 802.11 LANs?

Answer:

Wireless transceivers would need to be full-duplex, i.e. able to send and listen at the same time, in order to detect collisions.

The hidden node problem, which prevents sending nodes from detecting collisions that happen at remote receivers, would need to be solved somehow. This however is a fundamental problem of the wireless medium.

Q4) Explain why IEEE 802.11 uses acknowledgements.

Answer:

The wireless medium is much more unreliable than, for example, a wired Ethernet. Reasons for this are interference, multi-path propagation, signal attenuation and collisions (802.11 has no way of knowing if there was a collision or not, except via ACKs).

Leaving the responsibility of implementing reliability entirely to higher layers (i.e. TCP) would be very costly. Lost frames on the wireless link would incur end-to-end retransmissions at the transport layer.

Q5) At the moment, station A is transmitting a frame using IEEE 802.11 (DCF mode). Stations B and C are within range of A and of each other. Both want to send a frame and are waiting for the channel to become free.

The size of the contention window is currently 31 slots. What is the probability that B and C will collide twice in a row after the channel becomes free?

Answer:

The probability of a first collision PC1 is the probability that B and C pick the same random number in the range 1..31. PC1 = 1/31 ≈ 0.0323.

After the collision, the contention window size is increased to 63 slots.

(The size of the contention window is 2^n - 1, with n being increased by one each time a collision happens, up to a maximum of 1023: 31, 63, 127, 255, 511, 1023.)

The probability of a second collision is the probability that B and C pick the same random number in the range 1..63.

PC2 = 1/63 ≈ 0.0159

Since the two events are independent, the probability of two collisions in a row is PC12 = PC1 * PC2 ≈ 0.0005
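The link budget calculation for the 900 MHz link in Q2 can be checked with a short script. It follows the same steps as the answer (required received power, maximum tolerable free space loss, then distance from the free space loss model) and uses the same approximation c = 3 * 10^8 m/s; the function names are made up for this sketch.

```python
import math

SPEED_OF_LIGHT = 3e8  # m/s, approximation used in the answer


def mw_to_dbm(power_mw):
    """Convert a power in milliwatts to dBm."""
    return 10 * math.log10(power_mw)


def max_free_space_loss_db(tx_dbm, tx_gain, tx_loss, rx_gain, rx_loss,
                           misc_loss, rx_sensitivity_dbm, link_margin_db):
    """Largest free space loss (dB) that still leaves the required link margin."""
    required_rx_dbm = rx_sensitivity_dbm + link_margin_db
    return tx_dbm + tx_gain - tx_loss + rx_gain - rx_loss - misc_loss - required_rx_dbm


def free_space_distance_m(loss_db, frequency_hz):
    """Invert L = (4 * pi * d * f / c)^2 for d, with L given in dB."""
    linear_loss = 10 ** (loss_db / 10)
    return math.sqrt(linear_loss) * SPEED_OF_LIGHT / (4 * math.pi * frequency_hz)


fsl_db = max_free_space_loss_db(
    tx_dbm=mw_to_dbm(100), tx_gain=15, tx_loss=2,
    rx_gain=15, rx_loss=1, misc_loss=4,
    rx_sensitivity_dbm=-75, link_margin_db=10)
distance_m = free_space_distance_m(fsl_db, 900e6)

print(f"FSL = {fsl_db:.0f} dB, d = {distance_m / 1000:.2f} km")
```

Changing `link_margin_db` shows how strongly the margin affects range: every 6 dB of extra allowable loss roughly doubles the distance in this model.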

Q6) The Hidden Node Problem was briefly discussed during the Lecture. Due to limited

transmission range, nodes believe the channel is free when in effect another node is already

transmitting to the intended destination node. The end result is a collision at the receiver

which is not detected by either of the two senders.

a) Can you think of a scenario where the opposite is happening, i.e. a node believes that the

channel is not free, when in effect it could send to the destination and no collision would occur

(at the destination). Draw a figure that shows such a scenario.

Answer:

This is called the “Exposed Terminal Problem”. In the scenario shown below, A is transmitting to B and C wants to transmit to D. When C listens to the channel, it detects that it is busy (due to A transmitting), and therefore will defer transmission. However, in this case A could transmit to B at the same time as C is transmitting to D and no collision would occur at the receivers, since B is out of range of C and D is out of range of A. This problem is not destructive in the sense that it does not cause any collisions. However, it causes underutilisation of the medium.

[Figure: exposed terminal scenario showing nodes A, B, C and D together with the transmission ranges of A and C]

b) Explain how RTS/CTS can be used to address this problem?

Answer:

When a node hears an RTS from a neighbouring node, but not the corresponding CTS, that node can deduce that it is an exposed node (i.e. it is out of range of the receiver) and is permitted to transmit to other neighbouring nodes.
