* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download –

Survey

Document related concepts

Transcript

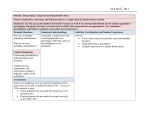

Parameter = describes a pop.; statistic = describes a sample
Descriptive stats = summarize, organize, and simplify data (graphs, tables, etc.)
Inferential stats = use sample data to make general conclusions about the pop. (sample stats are generally not perfect representations of pop. parameters)
Sampling error = the discrepancy b/w a sample statistic and the corresponding pop. parameter
Correlational = measures 2 variables to find the relationship between them (ex. sleep and academic performance); no manipulation, just observation, so no cause and effect
Experimental = I.V. is manipulated and D.V. is observed; tries to control other variables that might influence the result (cause & effect)
Non-experimental/quasi-experimental = compares pre-existing groups (ex. boys/girls); no manipulation/control (no cause & effect)
Nominal scale = not quantitative, just categories with different names (can't determine the direction/distance of a difference); use the mode!
Ordinal = categories ranked by size (shows direction but not distance; can't tell how much bigger something is)
Interval = intervals of the same size, but the zero point is arbitrary (ex. temperature); shows distance and direction of a difference
Ratio = interval scale with an absolute zero point (ex. height); shows distance and direction
Freq. dist. = shows how individuals are distributed on the scale (number of indiv's in each category); the frequencies add up to N
Proportion = p = f/N (relative frequency; proportions sum to 1); percentage = p(100) = (f/N)(100)
Grouped freq. dist. tables = used when scores cover a wide range; group scores into class intervals (in a histogram the bars touch, no gaps)
Graphs: scores on the x-axis (horizontal, IV) and frequencies on the y-axis (vertical, DV); use real limits for continuous variables (no gaps)
Class interval width = (highest - lowest)/10, i.e. range/10 (aiming for roughly 10 intervals)
Relative frequency = for populations where the exact frequency is unknown, use a smooth curve to show it's an estimate
Tail = where scores taper off at the end of the dist.; (+) skewed = tail on the right, (-) skewed = tail on the left
Percentile rank = percent of indiv's at or below a score (rank refers to a percent, percentile refers to a score)
Cumulative freq. (cf) = used to determine percentiles; convert to cumulative percentage: c% = (cf/N)(100)
Stem and leaf = stem is the first digit(s), leaf is the last digit
** Not noisy data = same values; noisy data = random scores
Central tendency = the single score that best represents the entire dist. (central point of the distribution; average score)
Mean: µ = ΣX/N (population) and M = ΣX/n (sample); the balance point of the dist., like a seesaw. If a constant is added to (or subtracted from, multiplied by, divided into) every score, the same is done to the mean. The mean balances the distances of the scores on either side of it, so it is greatly influenced by extreme scores/skewed dist's
Weighted mean: M = (ΣX₁ + ΣX₂)/(n₁ + n₂) (combines two sets of data to find the overall mean)
Median: divides the distribution in half (midpoint), with half of the scores on either side; relatively unaffected by extreme scores
Mode: score with the greatest frequency; 2 modes = bimodal (unequal f = major and minor mode), more than 2 = multimodal
Use median = extreme scores/skewed dist., undetermined/unknown values, open-ended dist., ordinal scale (no distance, so no mean)
Use mode = nominal scale, discrete variables (ex. a mean of 2.4 kids isn't possible), describing shape (location of the peak)
Symmetrical dist. = mean and median in the middle (same value); mode at the highest peak, in the centre
Skewed dist. = ex. for (+): left to right = mode, median, mean (opposite for negatively skewed)
Variability = describes how scores are scattered around the central point/average (if all scores are the same, there is no variability)
Range = distance from the largest to the smallest score: range = URL for Xmax - LRL for Xmin, or highest - lowest + 1 for whole-number scores (interval/ratio scales); shows how spread out scores are
Interquartile range = range of the middle 50% of the dist. (avoids extreme scores) = Q3 - Q1; when used for variability, it's transformed to the semi-interquartile range = (Q3 - Q1)/2
Deviation = distance from the mean = X - µ (the sign tells us whether the score is above or below the mean)
S.d. = the standard (typical) distance between the scores and the mean; shows how spread out scores are; only used with interval and ratio scales (SS = sum of squared deviations)
Variance = σ² = SS/N = Σ(X - µ)²/N (mean squared deviation); standard deviation = σ = √σ² = √(SS/N) (sample s.d. = s)
Definitional: SS = Σ(X - M)² (when the mean is a whole # and there are few scores); computational: SS = ΣX² - (ΣX)²/n (when the mean isn't a whole #)
Sample variance: s² = SS/(n - 1); divide by n - 1 (instead of N) to correct for sample bias; degrees of freedom = n - 1
Adding a constant to every score doesn't change the s.d. (distances don't change, the dist. just shifts), but multiplying every score by a constant multiplies the s.d. by that constant
Extreme scores affect the range, s.d. and variance (the semi-interquartile range is fairly resistant though); an open-ended dist. can only use the semi-interquartile range (the others can't be computed)
Z-scores = standard scores; identify the exact location of a score (allows distances to be compared); count the # of s.d.'s b/w a score & the mean
z = (X - µ)/σ OR z = (X - M)/s; the standardized dist. has the same shape as the original data, a mean of 0 (reference point) and a s.d. of 1 (shows direction and distance from the mean); useful for both descriptive and inferential stats b/c it identifies exact location
Probability of A = number of outcomes classified as A/total number of possible outcomes; ex. p(king) = ...
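The descriptive formulas above can be checked with a short Python sketch using only the standard library. The function names (`mean`, `ss_definitional`, etc.) and the score lists are hypothetical, made up for illustration so the arithmetic comes out in round numbers. The sketch shows that the definitional and computational SS formulas agree, and that adding a constant leaves σ alone while multiplying rescales it.

```python
import math

def mean(scores):
    # M = ΣX / n (µ = ΣX / N uses the same arithmetic for a whole population)
    return sum(scores) / len(scores)

def weighted_mean(group1, group2):
    # M = (ΣX₁ + ΣX₂) / (n₁ + n₂): combine both totals and both counts
    return (sum(group1) + sum(group2)) / (len(group1) + len(group2))

def ss_definitional(scores):
    # SS = Σ(X - M)²: square each deviation from the mean, then add them up
    m = mean(scores)
    return sum((x - m) ** 2 for x in scores)

def ss_computational(scores):
    # SS = ΣX² - (ΣX)² / n: avoids computing each deviation
    n = len(scores)
    return sum(x * x for x in scores) - sum(scores) ** 2 / n

def population_variance(scores):
    # σ² = SS / N
    return ss_definitional(scores) / len(scores)

def population_sd(scores):
    # σ = √(SS / N)
    return math.sqrt(population_variance(scores))

def sample_variance(scores):
    # s² = SS / (n - 1) = SS / df
    return ss_definitional(scores) / (len(scores) - 1)

def z_score(x, center, spread):
    # z = (X - µ) / σ  or  z = (X - M) / s
    return (x - center) / spread

# Made-up scores chosen so the arithmetic comes out in whole numbers.
scores = [2, 4, 4, 4, 5, 5, 7, 9]
print(mean(scores))                                        # 5.0
print(ss_definitional(scores), ss_computational(scores))   # 32.0 32.0
print(population_variance(scores), population_sd(scores))  # 4.0 2.0
print(z_score(9, mean(scores), population_sd(scores)))     # 2.0

# Adding a constant shifts the dist. but leaves σ alone; multiplying rescales σ.
print(population_sd([x + 10 for x in scores]))             # 2.0
print(population_sd([x * 3 for x in scores]))              # 6.0

# Weighted mean of two made-up groups vs. the simple average of the two means.
print(weighted_mean([2, 4, 6], [10, 10]))                  # 6.4
print((mean([2, 4, 6]) + mean([10, 10])) / 2)              # 7.0
```

The last two lines show why the weighted mean is needed: averaging the two group means ignores that the groups have different sizes.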
Random sample = each individual has an equal chance of being selected; requires sampling with replacement
Normal distribution for z transformation: 0 to 1 = 34.13%, 1 to 2 = 13.59%, past 2 = 2.28%, so p(z > 2.00) = 2.28%
ex. p(X < 130) = p(z < 2.00) = 0.9772 (or 97.72%)
Between 2 scores (use column D): p(22 < X < 65) = p(-0.30 < z < +0.70) = 0.1179 + 0.2580 = 0.3759 ≈ 0.38
Binomial distribution = 2 categories on the measurement scale (ex. male and female); approximately normal if pn and qn are both ≥ 10
Binomial mean: µ = pn; standard deviation: σ = √(npq); z = (X - µ)/σ = (X - pn)/√(npq)
Ex. predicting the suit 14 out of 48 times: use real limits; find z for X = 13.5 and for X = 14.5, convert both with the table, and subtract
Percentile rank = % of indiv's with scores at/below a given X value (proportion to the left); percentile = the X value identified by its rank
Dist. of sample means = collection of sample means for all possible random samples of a particular size (n) from a pop.; used to predict sample characteristics
Sampling distribution = dist. of stats obtained by selecting all possible samples of a specific size from a pop. (the values are stats, not scores)
Central limit theorem = for any pop. with mean µ and s.d. σ, the dist. of sample means for sample size n will have a mean of µ and a s.d. of σ/√n, and will approach a normal dist. as n approaches infinity
The dist. of sample means is normal if the pop. is normally distributed or if the number of scores in each sample is relatively large (around 30 or more)
Expected value of M = the mean of the dist. of sample means; equal to the pop. mean µ
Standard error of M (σM) = s.d. of the dist. of sample means (if it's small, sample means are close together; if it's large, sample means are scattered); measures how much distance to expect b/w a sample mean (M) and the pop. mean (µ); larger sample size = sample mean closer to µ
Standard error formula: σM = σ/√n = √(σ²/n); tells how much error to expect when using a sample mean
Use the dist. of sample means to find the probability of any specific sample (probability = proportion); location of a sample mean: z = (M - µ)/σM
Statistical inference = using sample stats to make general conclusions about a pop. parameter
Hypothesis testing = inferential procedure that uses sample data to evaluate a hypothesis about a pop.
Steps: (1) state the hypotheses and select alpha; (2) set the criteria for a decision: the alpha level (.05, .01, .001) defines very unlikely sample outcomes if the null is true, and the critical region consists of extreme sample values that are unlikely if the null is true, with boundaries determined by the alpha level (alpha = .05 means the critical region is the most extreme 5% of the dist.; if the data fall there, the null is rejected); (3) collect data and compute the z-score (the test statistic, z = (M - µ)/σM); (4) reject or fail to reject the null
Type I error = rejecting a null that's true, i.e. concluding the treatment has an effect when it has none; its probability is the alpha level
Type II error = failing to reject a false null, i.e. failing to detect a real treatment effect; generally considered less serious than a type I error; likely when the effect is very small; its probability is β
A large z indicates it's unlikely that M occurred with no treatment effect, so reject the null
Factors influencing a hyp. test = size of the mean difference (the larger the diff., the larger the z and the greater the chance of finding an effect), variability of scores (larger variability lowers the chance of finding an effect), number of scores in the sample (larger n, greater chance of finding an effect)
Assumptions of the z test = random sampling, independent observations, value of the s.d. is unchanged by the treatment, normal sampling dist.
Directional/one-tailed test = critical region located in one tail of the dist., not two; can reject the null when the diff. b/w sample & pop. is relatively small
Effect size = measurement of the magnitude of an effect, independent of the sample size
Cohen's d = mean difference/standard deviation = (µtreatment - µno treatment)/σ; estimated Cohen's d = (Mtreatment - µno treatment)/σ
Power = probability the test will correctly reject a false null; to find it, identify the treatment and null distributions, specify the magnitude of the effect, and locate the critical region; power is the portion of the treatment distribution located beyond the critical region
Power increases as the size of the effect increases; increasing alpha increases power; a one-tailed test has greater power than a two-tailed test; a large sample gives more power than a small sample
Goal of a hypothesis test with the t stat = determine whether the diff. b/w the data and the hypothesis is greater than would be expected by chance
Estimated standard error: sM = s/√n = √(s²/n); used when the value of σ is unknown; estimate of the standard distance b/w M and µ
t statistic = tests hypotheses about an unknown µ when σ is unknown: t = (M - µ)/sM; if your df isn't listed in the t table, use the next smaller df listed (the larger critical t)
Degrees of freedom = number of scores in the sample that are independent and free to vary: df = n - 1 (the larger the sample, the better it represents the pop.)
The t dist. is flatter and more spread out than the standard normal dist.; as df gets very large, the t dist. gets closer to the normal dist.
Ex. non-directional: H0: µattractive = 10 seconds (no preference); the alternative states there's a preference: H1: µattractive ≠ 10 seconds
Critical region: find df, know whether the test is directional or not, know alpha, then look up the t table (for a regular z hyp. test, use the unit normal table)
Calculating the t stat: compute the sample variance (s² = SS/df), then the estimated standard error (sM = √(s²/n)), then t = (M - µ)/sM
Assumptions = values must be independent observations; the population sampled must be normal
Estimated d = mean difference/sample standard deviation = (M - µ)/s, where s = √(SS/df); the numerator measures the magnitude of the treatment effect as the difference between the mean for the treated sample and the mean for the untreated population (µ from H0)
Effect size can also be measured by how much of the variability in the scores is explained by the treatment effect: r² = t²/(t² + df), called the percentage of variance accounted for by the treatment
Interpreting r²: 0.01 = small effect, 0.09 = medium, 0.25 = large
Independent-measures/between-subjects design = uses a separate sample for each treatment condition (for each pop.)
H0: µ₁ - µ₂ = 0 OR µ₁ = µ₂ (no difference between the pop. means) and H1: µ₁ - µ₂ ≠ 0 OR µ₁ ≠ µ₂ (there is a mean difference)
Uses the difference between 2 sample means to evaluate the diff. b/w 2 pop. means: t = [(M₁ - M₂) - (µ₁ - µ₂)]/s(M₁ - M₂)
Standard error: s(M₁ - M₂) = √(s₁²/n₁ + s₂²/n₂); combines the error for the 1st sample mean and the error for the 2nd sample mean
Pooled variance: s²p = (SS₁ + SS₂)/(df₁ + df₂); with equal sample sizes it's halfway b/w the 2 sample variances; with different sample sizes each variance is weighted by its df, so s²p is closer to the variance of the larger sample
Estimated standard error of M₁ - M₂ = s(M₁ - M₂) = √(s²p/n₁ + s²p/n₂); df for the t statistic = df₁ + df₂ = (n₁ - 1) + (n₂ - 1)
Steps: state the hypotheses and alpha level, find df and the critical region, compute t (pooled variance, est. standard error, t stat), make a decision
For independent-measures, the diff. between the two sample means is used for the mean difference, and the pooled standard deviation is used to estimate the pop. standard deviation: estimated d = estimated mean difference/estimated standard deviation = (M₁ - M₂)/√s²p
Assumptions = observations are independent, populations must be normal, populations must have equal variances (homogeneity of variance)
Hartley's F-max test: compute the sample variance, s² = SS/df, for each of the separate samples, then select the largest and smallest sample variances and compute F-max = s²(largest)/s²(smallest); if the value is larger than the critical value, the variances differ and homogeneity isn't valid
Repeated-measures/within-subjects design = a single sample measured more than once; the same subjects are used in all treatments
Matched-subjects = each individual in one sample is matched with an indiv. in the other sample so they're equivalent or nearly so (ex. match IQ, gender, age)
Difference scores: D = X₂ - X₁; we want to see if there's a difference b/w 2 treatment conditions: H0: µD = 0, H1: µD ≠ 0
Sample variance: s² = SS/(n - 1), OR s = √(SS/df) (standard dev.); estimated standard error: sMD = √(s²/n) or sMD = s/√n; t stat: t = (MD - µD)/sMD
Cohen's d = measures the size of the mean difference between treatments; estimated d = sample mean difference/sample standard deviation = MD/s
Independent-measures uses two separate samples; repeated-measures uses one sample with the same individuals
Repeated-measures requires fewer subjects, is more efficient, is good for studying learning and development (over time), and reduces problems caused by individual differences
With repeated-measures though, participating the first time can change results the second time, so counterbalance the order of treatments (one group does the 1st then the 2nd, the other group does the 2nd then the 1st); also, outside factors such as mood, health, or weather may have changed between measurements
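As a hedged sketch of the t-test formulas above, the Python below computes the one-sample, independent-measures (pooled variance) and repeated-measures t statistics, plus estimated d, r², and Hartley's F-max. It only computes the statistics; it does not look up critical values, so the decision step still needs a t table. The function names and all data are hypothetical, made up so the numbers come out round.

```python
import math

def _ss(scores):
    # SS = Σ(X - M)²
    m = sum(scores) / len(scores)
    return sum((x - m) ** 2 for x in scores)

def one_sample_t(scores, mu):
    # t = (M - µ) / sM with sM = √(s²/n); df = n - 1
    n = len(scores)
    m = sum(scores) / n
    df = n - 1
    s2 = _ss(scores) / df              # sample variance s² = SS/df
    sm = math.sqrt(s2 / n)             # estimated standard error
    t = (m - mu) / sm
    d = (m - mu) / math.sqrt(s2)       # estimated d = (M - µ)/s
    r2 = t ** 2 / (t ** 2 + df)        # percentage of variance accounted for
    return t, df, d, r2

def independent_t(group1, group2):
    # Independent-measures t with pooled variance; H0: µ₁ - µ₂ = 0
    n1, n2 = len(group1), len(group2)
    df1, df2 = n1 - 1, n2 - 1
    sp2 = (_ss(group1) + _ss(group2)) / (df1 + df2)  # s²p = (SS₁ + SS₂)/(df₁ + df₂)
    se = math.sqrt(sp2 / n1 + sp2 / n2)              # s(M₁ - M₂)
    diff = sum(group1) / n1 - sum(group2) / n2       # M₁ - M₂
    t = (diff - 0) / se                              # (µ₁ - µ₂) = 0 under H0
    d = diff / math.sqrt(sp2)                        # estimated d = (M₁ - M₂)/√s²p
    return t, df1 + df2, d

def hartley_fmax(*groups):
    # F-max = s²(largest) / s²(smallest), each s² = SS/df
    variances = [_ss(g) / (len(g) - 1) for g in groups]
    return max(variances) / min(variances)

def repeated_t(first, second):
    # Repeated-measures t: difference scores D = X₂ - X₁, H0: µD = 0
    diffs = [x2 - x1 for x1, x2 in zip(first, second)]
    t, df, d, _ = one_sample_t(diffs, 0)
    return t, df, d

# Made-up data chosen so the arithmetic comes out in round numbers.
print(one_sample_t([9, 13, 13, 13], mu=10))       # t = 2.0, df = 3, d = 1.0, r² = 4/7
print(independent_t([4, 6, 6, 8], [1, 1, 3, 3]))  # t = 4.0, df = 6, d = 4/√2
print(hartley_fmax([4, 6, 6, 8], [1, 1, 3, 3]))   # 2.0
print(repeated_t([10, 8, 12, 9], [11, 13, 17, 14]))  # t = 4.0, df = 3, d = 2.0
```

Reusing `one_sample_t` on the difference scores reflects that the repeated-measures t is a one-sample t computed on D with µD = 0. Comparing each t to the critical value for its df and alpha completes the hypothesis test.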