* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download Introduction to Vectors and Matrices

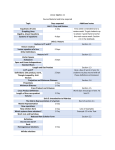

Rotation matrix wikipedia , lookup

Jordan normal form wikipedia , lookup

Determinant wikipedia , lookup

Matrix (mathematics) wikipedia , lookup

Laplace–Runge–Lenz vector wikipedia , lookup

System of linear equations wikipedia , lookup

Eigenvalues and eigenvectors wikipedia , lookup

Cross product wikipedia , lookup

Perron–Frobenius theorem wikipedia , lookup

Non-negative matrix factorization wikipedia , lookup

Orthogonal matrix wikipedia , lookup

Exterior algebra wikipedia , lookup

Cayley–Hamilton theorem wikipedia , lookup

Gaussian elimination wikipedia , lookup

Singular-value decomposition wikipedia , lookup

Vector space wikipedia , lookup

Euclidean vector wikipedia , lookup

Covariance and contravariance of vectors wikipedia , lookup

Matrix multiplication wikipedia , lookup

A Linear Algebra Primer

James Baugh

Introduction to Vectors and Matrices

Vectors and Vector Spaces

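Vectors are elements of a vector space, which is a set of mathematical objects that can be added and multiplied by numbers (scalars) subject to the following axiomatic requirements:

• Addition must be associative: $\mathbf{u} + (\mathbf{v} + \mathbf{w}) = (\mathbf{u} + \mathbf{v}) + \mathbf{w}$.

• Addition must be commutative: $\mathbf{u} + \mathbf{v} = \mathbf{v} + \mathbf{u}$.

• Addition must have a unique identity (the zero vector): $\mathbf{v} + \mathbf{0} = \mathbf{v}$.

• Every element must have an additive inverse: $\mathbf{v} + (-\mathbf{v}) = \mathbf{0}$.

• Under scalar multiplication 1 must act as a multiplicative identity: $1\mathbf{v} = \mathbf{v}$.

• Scalar multiplication must be distributive with addition: $a(\mathbf{u} + \mathbf{v}) = a\mathbf{u} + a\mathbf{v}$, and $(a + b)\mathbf{v} = a\mathbf{v} + b\mathbf{v}$.

• Another requirement is closure, which we can express singly as closure of linear combinations: if $\mathbf{u}$ and $\mathbf{v}$ are in the space then so too is $a\mathbf{u} + b\mathbf{v}$ for any numbers $a$ and $b$.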

What does all that mean? It simply means that vectors behave just like numbers as far as doing algebra is concerned, except that we don’t (as yet) define multiplication of vectors times vectors. (Later we will see several distinct types of vector multiplication.)
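As a quick sanity check, the axioms can be tested numerically on one concrete model of vectors. The sketch below assumes vectors are stored as Python tuples of numbers; the helper names `add` and `scale` are introduced here purely for illustration and are not part of the primer.

```python
from fractions import Fraction

def add(u, v):
    """Add two vectors given as equal-length tuples."""
    return tuple(x + y for x, y in zip(u, v))

def scale(a, v):
    """Multiply the vector v by the scalar a."""
    return tuple(a * x for x in v)

# Sample vectors and scalars (arbitrary choices).
u, v, w = (1, 2), (3, -1), (0, 5)
zero = (0, 0)
a, b = Fraction(1, 2), 3

assert add(u, add(v, w)) == add(add(u, v), w)                  # associativity
assert add(u, v) == add(v, u)                                  # commutativity
assert add(v, zero) == v                                       # additive identity
assert add(v, scale(-1, v)) == zero                            # additive inverse
assert scale(1, v) == v                                        # 1 acts as identity
assert scale(a, add(u, v)) == add(scale(a, u), scale(a, v))    # distributivity
assert scale(a + b, v) == add(scale(a, v), scale(b, v))        # distributivity
```

Passing checks on a few samples do not prove the axioms, of course; they only illustrate what each axiom says.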

Examples of Vector Spaces: Arrows (displacements)

The typical first example of vectors is arrows, which we may think of as acts of displacement, i.e. the action of moving a point on a plane or in space in a certain direction over a certain distance.

[Figure: the vector $\mathbf{v}$ drawn as an arrow mapping one point to another.]

The arrow should be considered apart from any specific point but rather as an action we may apply to

arbitrary points. In a sense the arrow is a function acting on points. In this context then we define

addition of arrows as composition of actions and scalar multiplication as scaling of actions:

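[Figure: arrows $\mathbf{u}$, $\mathbf{v}$ and $\mathbf{w}$ illustrating addition as composition of displacements, and the arrow $0.75\,\mathbf{v}$ illustrating scalar multiplication (75% of the original length of $\mathbf{v}$).]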

A good exercise is to verify that the various axiomatic rules for vectors hold in this example.

When we interpret arrows in this way (as point motions) we refer to them as displacement vectors.

Note that if we fix an origin point in space (or in the plane) we can then identify any point with the displacement vector which moves the origin to that point. This is what we then mean by a position vector.


Examples of Vector Spaces: Function Spaces

Consider now a totally different type of vector space. Let $V$ be the set of all continuous functions with domain the unit interval $[0,1]$. We can add functions to get functions and we can multiply functions by numbers to get functions. It is simple enough then to verify that if $f$ and $g$ are in $V$ then the function $h$ defined by $h(x) = a f(x) + b g(x)$ also has domain $[0,1]$ and is also continuous, and thus is also in $V$.
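The closure statement can be mirrored in code by treating functions as values. This is only a sketch: the name `lin_comb` and the sample functions are assumptions made for illustration.

```python
import math

def lin_comb(a, f, b, g):
    """Return the function h with h(x) = a*f(x) + b*g(x)."""
    def h(x):
        return a * f(x) + b * g(x)
    return h

f = math.sin                 # continuous on [0, 1]
g = lambda x: x * x          # continuous on [0, 1]
h = lin_comb(2.0, f, -3.0, g)

# h is again an ordinary function defined on all of [0, 1].
print(h(0.0), h(0.5), h(1.0))
```

The result of `lin_comb` is itself a function that can be fed back into `lin_comb`, which is the practical meaning of "the linear combination is again in the space".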

Another example of a function space is the space of polynomials in one variable. This space is denoted $\mathbb{R}[x]$, indicating polynomials in the variable $x$ with real coefficients. Again we can always add polynomials and multiply them by scalars.

A third vector space we can define is the set of linear functions of a fixed number of variables.

Examples of Vector Spaces: Matrices

Matrices are arrays of numbers with a specific number of rows and columns (the dimensions of the matrix). For example:

$$\begin{pmatrix} 3 & 2 & 5 \\ 1 & 1 & \sqrt{7} \end{pmatrix}$$

Here is a $2 \times 3$ (“two by three”) matrix. We use the convention of specifying first the number of rows and then the number of columns. (To remember this, the traditional mnemonic is “RC cola!”)

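We may add matrices with the same dimensions by simply adding corresponding entries.

We multiply a matrix by a number (scalar) by multiplying each entry by that number:

$$\begin{pmatrix} 1 & 2 & 4 \\ 3 & 1 & 0 \end{pmatrix} + 3\begin{pmatrix} 0 & 2 & 0 \\ 0 & 3 & 2 \end{pmatrix} = \begin{pmatrix} 1 & 2 & 4 \\ 3 & 1 & 0 \end{pmatrix} + \begin{pmatrix} 0 & 6 & 0 \\ 0 & 9 & 6 \end{pmatrix} = \begin{pmatrix} 1 & 8 & 4 \\ 3 & 10 & 6 \end{pmatrix}$$

Thus the set of all $m \times n$ matrices forms a vector space.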
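Entrywise addition and scalar multiplication are short to write out. The sketch below assumes matrices are stored as lists of rows; `mat_add` and `mat_scale` are illustrative names, not functions from any library.

```python
def mat_add(A, B):
    """Add two matrices of the same dimensions entry by entry."""
    if len(A) != len(B) or any(len(ra) != len(rb) for ra, rb in zip(A, B)):
        raise ValueError("matrices must have the same dimensions")
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def mat_scale(c, A):
    """Multiply every entry of A by the scalar c."""
    return [[c * a for a in row] for row in A]

A = [[1, 2, 4],
     [3, 1, 0]]
B = [[0, 2, 0],
     [0, 3, 2]]

print(mat_add(A, mat_scale(3, B)))   # [[1, 8, 4], [3, 10, 6]]
```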

Basis, Span, Independence

A basis of a vector space is a set of linearly independent vectors which span the space. To understand

this of course we must understand the meaning of span and linear independence.

• The span of a set of vectors is the set of all linear combinations of those vectors.

Example: $\operatorname{span}\{\mathbf{u}, \mathbf{v}\} = \{a\mathbf{u} + b\mathbf{v} : \text{for all scalar values of } a \text{ and } b\}$.

One can easily show that the span of a set of vectors is itself a vector space (it will be a subspace

of the original vector space).

• There are two basic (equivalent) ways to define linear independence.

A set of vectors is linearly independent if no element of the set is a linear combination of the

remaining elements (it isn’t in the span of the set of remaining elements), or equivalently if no

non-trivial linear combination of elements equals the zero vector. (The trivial linear

combination would be the sum of zero times each element.)

The main role of a basis is to span the space, i.e. it provides a way to express all vectors in terms of the

basis set. The linear independence tells us that we have no more elements in the basis than we actually

need.
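For vectors given by numerical components, linear independence can be tested by row reduction: the vectors are independent exactly when the number of pivots equals the number of vectors. The following is a minimal sketch using exact rational arithmetic; the function names `rank` and `is_independent` are chosen here for illustration.

```python
from fractions import Fraction

def rank(vectors):
    """Number of pivots found by Gauss-Jordan elimination on the given rows."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    ncols = len(rows[0]) if rows else 0
    r = 0  # index of the next pivot row
    for col in range(ncols):
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(len(rows)):
            if i != r and rows[i][col] != 0:
                factor = rows[i][col] / rows[r][col]
                rows[i] = [x - factor * y for x, y in zip(rows[i], rows[r])]
        r += 1
    return r

def is_independent(vectors):
    """True when no non-trivial linear combination gives the zero vector."""
    return rank(vectors) == len(vectors)

print(is_independent([(1, 0), (0, 1)]))                    # True
print(is_independent([(1, 2), (2, 4)]))                    # False
print(is_independent([(1, 0, 0), (0, 1, 0), (1, 1, 0)]))   # False
```

The same `rank` function gives the dimension of the span of the vectors, which ties the two definitions in this section together.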

Example: Position vectors (or displacement vectors) in the plane can always be expressed in terms of horizontal and vertical displacements. We define the standard basis as $\{\hat{\imath}, \hat{\jmath}\}$ where $\hat{\imath}$ is the displacement one unit to the right (in the x-direction) and $\hat{\jmath}$ is the unit displacement upward (in the y-direction).

[Figure: the standard basis vectors $\hat{\imath}$ and $\hat{\jmath}$ together with the vector $3\hat{\imath} + 2\hat{\jmath}$.]

Note then that to express a position vector for the point $(x, y)$ we need only note that this is the point obtained by displacing the origin to the right by a distance $x$ and up a distance $y$. It thus corresponds to the position vector $\mathbf{r} = x\hat{\imath} + y\hat{\jmath}$. The standard basis thus exactly corresponds to the use of rectangular coordinates. When we expand a vector as a linear combination of basis elements then we refer to the coefficients as linear coordinates.

Now many different bases (pronounced “bayseez”) are possible for the same vector space, but the size (number of elements) is always the same, and this defines the dimension of the space. The planar displacements have a standard basis of two elements and so have dimension two. We can extend this to three dimensional displacements in space with basis $\{\hat{\imath}, \hat{\jmath}, \hat{k}\}$ corresponding to unit displacements in the x, y, and z-directions respectively.

When we expand a vector in terms of an established basis (e.g. $\mathbf{r} = x\hat{\imath} + y\hat{\jmath} + z\hat{k}$) we can simply give the coefficients, in which case we use angle brackets. Example: $\mathbf{r} = x\hat{\imath} + y\hat{\jmath} + z\hat{k} = \langle x, y, z\rangle$. When working with multiple bases we may use a subscript to indicate which basis is being used: $\mathbf{r} = \langle x, y, z\rangle_B$ where $B = \{\hat{\imath}, \hat{\jmath}, \hat{k}\}$. We should however be a bit careful here since our definition of a basis is as a set of vectors. Sets do not indicate order. We can be clear by defining an ordered basis as a sequence instead of a set but otherwise equivalent to the above definition.

Matrices as Vectors, Vectors as Matrices

As was just mentioned we may view matrices as vectors. As it turns out matrix algebra is a good standard language for all vectors. We will make special use of matrices which have only one column (column vectors) and matrices which have only one row (row vectors), such as:

$$\begin{pmatrix} x \\ y \\ z \end{pmatrix} \quad\text{or}\quad \begin{pmatrix} x & y & z \end{pmatrix}$$

First let us define an ordered basis, which is simply a basis (set) rewritten as a sequence of basis elements, for example $\{\hat{\imath}, \hat{\jmath}, \hat{k}\} \to \begin{pmatrix} \hat{\imath} & \hat{\jmath} & \hat{k} \end{pmatrix}$. We treat these formally as a matrix (row vector).

The reason for ordering a basis is so we can reference elements (and coefficients) by their positions rather than their implicit identities. This is important for example if we consider a nontrivial transformation which, say, cycles the basis elements without changing the basis as a set. (A 120° rotation about the line x=y=z will cycle the x, y, and z-axes. This can be expressed by a change of ordered basis $\begin{pmatrix} \hat{\imath} & \hat{\jmath} & \hat{k} \end{pmatrix} \to \begin{pmatrix} \hat{k} & \hat{\imath} & \hat{\jmath} \end{pmatrix}$, but it leaves the basis set unchanged.)

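We then write a general vector as a product of a row matrix and column matrix:

$$\mathbf{r} = x\hat{\imath} + y\hat{\jmath} + z\hat{k} = \begin{pmatrix} \hat{\imath} & \hat{\jmath} & \hat{k} \end{pmatrix}\begin{pmatrix} x \\ y \\ z \end{pmatrix}$$

We take this as the definition of multiplication of a row times a column, be it rows of vectors or numbers (or later differential operations).

The point here is that once we have decided upon a particular (ordered) basis we may work purely with the column vectors of coordinates:

$$\mathbf{r} \cong \begin{pmatrix} x \\ y \\ z \end{pmatrix}$$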

So we have three ways of expressing a vector in terms of a given basis:

i. Explicitly, as in: $\mathbf{r} = x\hat{\imath} + y\hat{\jmath} + z\hat{k}$,

ii. Using the angle bracket notation: $\mathbf{r} = \langle x, y, z\rangle$,

iii. Using a column vector (matrix): $\mathbf{r} \cong \begin{pmatrix} x \\ y \\ z \end{pmatrix}$.

We write the first two as equations because they are identifications. The last however is not quite an identification, since matrices are defined as their own type of mathematical objects. We are identifying them by equivalent mathematical behavior rather than by identical meaning.

Dual Vectors and Matrix Multiplication

Dual Vectors and Row Vectors

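If we consider a vector space $V$ then a linear functional is a function mapping vectors in $V$ to scalars obeying the linearity property (see below). Since functionals are just functions we can add them and multiply them by scalars, so they form yet another vector space. We denote the space of linear functionals by $V^*$, the dual space of $V$. We thus also call these linear functionals dual vectors.

Linearity: $\omega : V \xrightarrow{\text{linear}} \mathbb{R}$ ($\omega$ is a linear mapping from $V$ to $\mathbb{R}$) means that:

$$\omega(a\mathbf{u} + b\mathbf{v}) = a\,\omega(\mathbf{u}) + b\,\omega(\mathbf{v}) \quad \text{for all } a, b \text{ in } \mathbb{R} \text{ and for all } \mathbf{u}, \mathbf{v} \text{ in } V.$$

Said in English: “$\omega$ is linear” means $\omega$ of a linear combination of objects equals the same linear combination of $\omega$ of each object.

If we combine this with the use of a basis then we can express any linear functional uniquely by how it acts on basis elements.

If $\omega(\hat{\imath}) = a$ and $\omega(\hat{\jmath}) = b$ and $\omega(\hat{k}) = c$ then

$$\omega(\mathbf{r}) = \omega(x\hat{\imath} + y\hat{\jmath} + z\hat{k}) = x\,\omega(\hat{\imath}) + y\,\omega(\hat{\jmath}) + z\,\omega(\hat{k}) = ax + by + cz$$

What’s more, by moving to the column vector representation of a vector (in the standard basis) we can express dual vectors (linear functionals) using row vectors:

$$\omega(\mathbf{r}) = \begin{pmatrix} a & b & c \end{pmatrix}\begin{pmatrix} x \\ y \\ z \end{pmatrix} = ax + by + cz$$

We can then entirely drop the function notation and write the functional evaluation as a matrix product:

$$\omega(\mathbf{r}) = \boldsymbol{\omega}\,\mathbf{r}, \quad \text{where} \quad \boldsymbol{\omega} \cong \begin{pmatrix} a & b & c \end{pmatrix}$$

A Side Note: This form of multiplication is contracted, which means we reduce dimensions by summing over terms (also the dimensions must be equal, or rather dual but of equal size). Compare this with scalar multiplication, which is a form of distributed multiplication:

$$a\begin{pmatrix} x \\ y \\ z \end{pmatrix} = \begin{pmatrix} ax \\ ay \\ az \end{pmatrix}$$

Distributed multiplication preserves dimension. I mention this to clarify its use later.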
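The contracted row-times-column product is a one-line sum. In the sketch below a dual vector is stored as a tuple of its three coefficients and a vector as a tuple of its coordinates; the name `row_times_col` and the sample numbers are assumptions made for illustration.

```python
def row_times_col(row, col):
    """Contracted product: sum of products of corresponding entries."""
    if len(row) != len(col):
        raise ValueError("row and column must have the same length")
    return sum(r * c for r, c in zip(row, col))

omega = (2, -1, 3)     # hypothetical coefficients of a linear functional
r = (1, 4, 2)          # coordinates of a vector
s = (0, 5, -1)

print(row_times_col(omega, r))   # 2*1 - 1*4 + 3*2 = 4

# Linearity: omega(2r + 3s) equals 2*omega(r) + 3*omega(s).
combo = tuple(2 * ri + 3 * si for ri, si in zip(r, s))
assert row_times_col(omega, combo) == 2 * row_times_col(omega, r) + 3 * row_times_col(omega, s)
```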


Multiplying Matrices times Column Vectors

Now that we can multiply a row vector times a column vector to get a scalar, we can use this to define

general matrix multiplication. A general matrix may be simultaneously considered as a row vector of

column vectors or vice versa.

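$$\begin{pmatrix} 1 & 2 & 4 \\ 3 & 1 & 0 \end{pmatrix} = \begin{pmatrix} \begin{pmatrix} 1 \\ 3 \end{pmatrix} & \begin{pmatrix} 2 \\ 1 \end{pmatrix} & \begin{pmatrix} 4 \\ 0 \end{pmatrix} \end{pmatrix} = \begin{pmatrix} \begin{pmatrix} 1 & 2 & 4 \end{pmatrix} \\ \begin{pmatrix} 3 & 1 & 0 \end{pmatrix} \end{pmatrix}$$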

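So we can multiply an $m \times n$ matrix by a column vector of length $n$ (an $n \times 1$ matrix) as follows: treat the $m \times n$ matrix as a row vector (with $n$ columns) of column vectors (with $m$ rows) and apply the row times column multiplication. The result will be an $m \times 1$ matrix:

$$\begin{aligned}
\begin{pmatrix} 1 & 2 & 4 \\ 3 & 1 & 0 \end{pmatrix}\begin{pmatrix} x \\ y \\ z \end{pmatrix}
&= \begin{pmatrix} \begin{pmatrix} 1 \\ 3 \end{pmatrix} & \begin{pmatrix} 2 \\ 1 \end{pmatrix} & \begin{pmatrix} 4 \\ 0 \end{pmatrix} \end{pmatrix}\begin{pmatrix} x \\ y \\ z \end{pmatrix}
= x\begin{pmatrix} 1 \\ 3 \end{pmatrix} + y\begin{pmatrix} 2 \\ 1 \end{pmatrix} + z\begin{pmatrix} 4 \\ 0 \end{pmatrix} \\
&= \begin{pmatrix} x \\ 3x \end{pmatrix} + \begin{pmatrix} 2y \\ y \end{pmatrix} + \begin{pmatrix} 4z \\ 0 \end{pmatrix}
= \begin{pmatrix} x + 2y + 4z \\ 3x + y \end{pmatrix}
\end{aligned}$$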

We describe this as contracting outer multiplication combined with distributed inner multiplication.

Now this works but there is another way to go about it. Treat the matrix instead as a column of rows

and multiply the column vector on the right times each row. “Column of rows times column = column of

(row times column)”

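$$\begin{pmatrix} 1 & 2 & 4 \\ 3 & 1 & 0 \end{pmatrix}\begin{pmatrix} x \\ y \\ z \end{pmatrix}
= \begin{pmatrix} \begin{pmatrix} 1 & 2 & 4 \end{pmatrix} \\ \begin{pmatrix} 3 & 1 & 0 \end{pmatrix} \end{pmatrix}\begin{pmatrix} x \\ y \\ z \end{pmatrix}
= \begin{pmatrix} \begin{pmatrix} 1 & 2 & 4 \end{pmatrix}\begin{pmatrix} x \\ y \\ z \end{pmatrix} \\ \begin{pmatrix} 3 & 1 & 0 \end{pmatrix}\begin{pmatrix} x \\ y \\ z \end{pmatrix} \end{pmatrix}
= \begin{pmatrix} x + 2y + 4z \\ 3x + y \end{pmatrix}$$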

This is a more often used sequence and it allows us to then generalize consistently. You can view this as

distributed outer multiplication with contracting inner multiplication.
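Both readings of the matrix-times-column product can be coded directly and compared. This is a sketch with matrices stored as lists of rows; the names `by_columns` and `by_rows` are introduced here only to label the two approaches.

```python
def by_columns(A, v):
    """Row of columns: add up v[j] times column j of A."""
    result = [0] * len(A)
    for j, coeff in enumerate(v):
        for i in range(len(A)):
            result[i] += coeff * A[i][j]
    return result

def by_rows(A, v):
    """Column of rows: each output entry is (row of A) times (column v)."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

A = [[1, 2, 4],
     [3, 1, 0]]
v = [1, 2, 3]          # sample values for x, y, z

print(by_columns(A, v))   # [17, 5]
print(by_rows(A, v))      # [17, 5]
```

With x = 1, y = 2, z = 3 the entries are x + 2y + 4z = 17 and 3x + y = 5 either way.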

In a similar way we can multiply a row vector times a matrix to yield another row vector.

Matrix Multiplication

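To multiply two general matrices the number of columns of the left matrix must equal the number of rows of the right matrix. Using the dimensions (remember RC cola) we see then that we can multiply a $k \times m$ matrix times an $m \times n$ matrix and the result is a $k \times n$ matrix. In short, $[k \times m][m \times n] = [k \times n]$.

Treat the left matrix as a column vector of row vectors and the right as a row vector of column vectors…

Example:

$$\begin{pmatrix} 1 & 2 & 3 \\ 4 & 5 & 6 \end{pmatrix}\begin{pmatrix} 0 & 1 & 2 & 3 \\ 4 & 5 & 6 & 7 \\ 8 & 9 & a & b \end{pmatrix}
= \begin{pmatrix} \begin{pmatrix} 1 & 2 & 3 \end{pmatrix} \\ \begin{pmatrix} 4 & 5 & 6 \end{pmatrix} \end{pmatrix}
\begin{pmatrix} \begin{pmatrix} 0 \\ 4 \\ 8 \end{pmatrix} & \begin{pmatrix} 1 \\ 5 \\ 9 \end{pmatrix} & \begin{pmatrix} 2 \\ 6 \\ a \end{pmatrix} & \begin{pmatrix} 3 \\ 7 \\ b \end{pmatrix} \end{pmatrix}$$

and use distributed multiplication, except contract at the innermost level:

$$= \begin{pmatrix}
\begin{pmatrix} 1 & 2 & 3 \end{pmatrix}\begin{pmatrix} 0 \\ 4 \\ 8 \end{pmatrix} &
\begin{pmatrix} 1 & 2 & 3 \end{pmatrix}\begin{pmatrix} 1 \\ 5 \\ 9 \end{pmatrix} &
\begin{pmatrix} 1 & 2 & 3 \end{pmatrix}\begin{pmatrix} 2 \\ 6 \\ a \end{pmatrix} &
\begin{pmatrix} 1 & 2 & 3 \end{pmatrix}\begin{pmatrix} 3 \\ 7 \\ b \end{pmatrix} \\
\begin{pmatrix} 4 & 5 & 6 \end{pmatrix}\begin{pmatrix} 0 \\ 4 \\ 8 \end{pmatrix} &
\begin{pmatrix} 4 & 5 & 6 \end{pmatrix}\begin{pmatrix} 1 \\ 5 \\ 9 \end{pmatrix} &
\begin{pmatrix} 4 & 5 & 6 \end{pmatrix}\begin{pmatrix} 2 \\ 6 \\ a \end{pmatrix} &
\begin{pmatrix} 4 & 5 & 6 \end{pmatrix}\begin{pmatrix} 3 \\ 7 \\ b \end{pmatrix}
\end{pmatrix}$$

and now contracting products (row times column):

$$= \begin{pmatrix}
0 + 8 + 24 & 1 + 10 + 27 & 2 + 12 + 3a & 3 + 14 + 3b \\
0 + 20 + 48 & 4 + 25 + 54 & 8 + 30 + 6a & 12 + 35 + 6b
\end{pmatrix}
= \begin{pmatrix}
32 & 38 & 14 + 3a & 17 + 3b \\
68 & 83 & 38 + 6a & 47 + 6b
\end{pmatrix}$$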
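The same "rows of the left times columns of the right" recipe can be sketched in a few lines of code. The function name `matmul` is an illustrative choice, and the last two entries of the third row of the right-hand matrix are set to 10 and 11 here, an assumption made only so that every entry is numeric.

```python
def matmul(A, B):
    """Multiply a k-by-m matrix A by an m-by-n matrix B (lists of rows)."""
    if len(A[0]) != len(B):
        raise ValueError("columns of the left matrix must equal rows of the right")
    columns = list(zip(*B))   # the right matrix viewed as a row of columns
    return [[sum(x * y for x, y in zip(row, col)) for col in columns]
            for row in A]

a, b = 10, 11                 # assumed numeric stand-ins
A = [[1, 2, 3],
     [4, 5, 6]]
B = [[0, 1, 2, 3],
     [4, 5, 6, 7],
     [8, 9, a, b]]

print(matmul(A, B))
# [[32, 38, 44, 50], [68, 83, 98, 113]]
```

With these stand-in values the last two columns are 14 + 3·10 = 44, 17 + 3·11 = 50, 38 + 6·10 = 98 and 47 + 6·11 = 113.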


Things to note:

Matrix multiplication is not commutative. That is, given the matrix product $AB$, the reverse product $BA$ may not even be defined; if defined, it may not yield a matrix with the same dimensions as $AB$; and even in the special case (square matrices) where it does, it will not in general yield the same matrix.
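A small numerical case makes the failure of commutativity concrete. The two square matrices below are arbitrary choices for illustration, and `matmul` is a hypothetical helper written out so the example is self-contained.

```python
def matmul(A, B):
    """Multiply matrices stored as lists of rows."""
    columns = list(zip(*B))
    return [[sum(x * y for x, y in zip(row, col)) for col in columns]
            for row in A]

A = [[1, 2],
     [3, 4]]
B = [[0, 1],
     [1, 0]]

print(matmul(A, B))   # [[2, 1], [4, 3]]  (columns of A swapped)
print(matmul(B, A))   # [[3, 4], [1, 2]]  (rows of A swapped)
```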

There are some interesting special cases one of which is square matrices which are matrices with the

same number of rows and columns. Multiplication by square matrices of the same dimension yields

again square matrices of the same dimension.

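Consider the following square ($3 \times 3$) matrix:

$$I_3 = \begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{pmatrix}$$

It is called the ($3 \times 3$) identity matrix because multiplication by this matrix (when defined) will leave the other matrix unchanged. Examples:

$$\begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{pmatrix}\begin{pmatrix} x \\ y \\ z \end{pmatrix} = \begin{pmatrix} x \\ y \\ z \end{pmatrix}$$

and

$$\begin{pmatrix} a & b & c \\ d & e & f \end{pmatrix}\begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{pmatrix} = \begin{pmatrix} a & b & c \\ d & e & f \end{pmatrix}$$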

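We can define the inverse of a square matrix $A$ to be the square matrix $A^{-1}$ (if it exists) such that

$$A^{-1}A = AA^{-1} = I$$

As the identity behaves like multiplication by 1, the inverse is analogous to the reciprocal, hence the $-1$ power notation.

Example:

$$\begin{pmatrix} 1 & 3 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 2 \end{pmatrix}^{-1} = \begin{pmatrix} 1 & -3 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1/2 \end{pmatrix}$$

Formula for $2 \times 2$ matrices:

$$\begin{pmatrix} a & b \\ c & d \end{pmatrix}^{-1} = \frac{1}{ad - bc}\begin{pmatrix} d & -b \\ -c & a \end{pmatrix}$$

provided $ad - bc \neq 0$. If this quantity (the determinant) is equal to zero then the matrix has no inverse.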
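The two-by-two inverse formula translates directly into code. The sketch below uses exact fractions so that the check against the identity is not disturbed by rounding; `inverse_2x2` and `matmul` are illustrative names.

```python
from fractions import Fraction

def inverse_2x2(M):
    """Inverse of [[a, b], [c, d]] via the formula (1/(ad - bc)) [[d, -b], [-c, a]]."""
    (a, b), (c, d) = M
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix has no inverse (determinant is zero)")
    return [[Fraction(d) / det, Fraction(-b) / det],
            [Fraction(-c) / det, Fraction(a) / det]]

def matmul(A, B):
    """Multiply matrices stored as lists of rows."""
    columns = list(zip(*B))
    return [[sum(x * y for x, y in zip(row, col)) for col in columns]
            for row in A]

M = [[1, 2],
     [3, 4]]                       # determinant 1*4 - 2*3 = -2
M_inv = inverse_2x2(M)             # [[-2, 1], [3/2, -1/2]]

identity = [[1, 0], [0, 1]]
print(matmul(M, M_inv) == identity)   # True
print(matmul(M_inv, M) == identity)   # True
```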

Transpose, Adjoint, and Inner (dot) Products

Given a vector space $V$, an inner product (dot product) is a symmetric positive definite bilinear form, $g$.

• As a bilinear form it is a function mapping two vectors to a scalar ($g(\mathbf{u}, \mathbf{v})$ in $\mathbb{R}$) in such a way that the function is linear with respect to each of the two vectors (remember: action on a linear combination equals linear combination of actions): $g(a\mathbf{u} + b\mathbf{v}, \mathbf{w}) = a\,g(\mathbf{u}, \mathbf{w}) + b\,g(\mathbf{v}, \mathbf{w})$, and likewise with the other argument.

• The positive definiteness means that when we apply the form to two copies of the same (nonzero) vector we get a positive number, $g(\mathbf{v}, \mathbf{v}) > 0$, and if $g(\mathbf{v}, \mathbf{v}) = 0$ then $\mathbf{v} = \mathbf{0}$.

• By symmetric we mean that exchanging the two vector arguments doesn’t change the value: $g(\mathbf{u}, \mathbf{v}) = g(\mathbf{v}, \mathbf{u})$.

There are various notations for an inner product:

$$g(\mathbf{u}, \mathbf{v}) \quad\text{or}\quad \langle \mathbf{u} \,|\, \mathbf{v} \rangle \quad\text{or}\quad \mathbf{u} \cdot \mathbf{v} \quad\text{or}\quad \langle \mathbf{u}, \mathbf{v} \rangle$$

($g$ here is the name of the bilinear form as a function.)

SIDE NOTE: This definition assumes we’re using real scalars. The extension to complex numbers

forks in either of two ways. We can maintain symmetry (orthogonal form) or maintain positivity

(Hermitian form) but not both.

We will mostly here use the dot notation and call the inner product the dot product. But one should be

aware that more than one inner product can be defined on the same space.

Inner (dot) products provide us with a sense of the length or size of a vector (we call this a norm of the vector) in that dotting a vector with itself may be considered as the squared magnitude:

$$\mathbf{v} \cdot \mathbf{v} = |\mathbf{v}|^2 \quad\text{or}\quad |\mathbf{v}| = \sqrt{\mathbf{v} \cdot \mathbf{v}}$$

This is the reason we insist on the positive definiteness of the inner product, so we can take the square root to get a positive real valued norm. One may show that if we start with a norm satisfying the parallelogram law we can define a corresponding inner product, so in that setting the two ideas are equivalent. We thus also refer to the inner product as a metric (specifically a positive definite metric).

In the example of displacement vectors (arrows) the norm defines (or is defined by) the length of the

vector which is the distance it moves the points to which it is applied.

SIDE NOTE: Sometimes we relax this positive definiteness requirement in which case we end up

with a pseudo-norm. For example special relativity unifies space and time into a space-time in

which the metric is not positive definite. This yields vectors some of which have real length,

some of which have an “imaginary length” and some of which have zero length while not being

the zero vector (null vectors).

THE dot product (between two displacement vectors)

There is a specific geometric inner product, the dot product, defined for arrows or displacements. It is

defined as the product of the magnitudes of the two vectors times the cosine of their relative angle:

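[Figure: two arrows $\mathbf{u}$ and $\mathbf{v}$ separated by the angle $\theta$.]

$$\mathbf{u} \cdot \mathbf{v} = |\mathbf{u}|\,|\mathbf{v}| \cos\theta$$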

Note that in the case where we dot a vector with itself, the relative angle is zero and so the cosine is 1.

Thus a vector dotted with itself yields the square of its magnitude.

Orthonormal Basis

Since the inner products are bilinear we can expand their action in terms of the action on basis

elements. Once we know the dot products between all pairs of basis elements we can apply this to dot

products between any vectors when expanded in terms of the basis. Observe:

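For $\mathbf{u} = a\hat{\imath} + b\hat{\jmath} + c\hat{k}$ and $\mathbf{r} = x\hat{\imath} + y\hat{\jmath} + z\hat{k}$ the linearity of the dot product gives us:

$$\begin{aligned}
\mathbf{u} \cdot \mathbf{r} &= (a\hat{\imath} + b\hat{\jmath} + c\hat{k}) \cdot (x\hat{\imath} + y\hat{\jmath} + z\hat{k}) \\
&= x\,(a\hat{\imath} + b\hat{\jmath} + c\hat{k}) \cdot \hat{\imath} + y\,(a\hat{\imath} + b\hat{\jmath} + c\hat{k}) \cdot \hat{\jmath} + z\,(a\hat{\imath} + b\hat{\jmath} + c\hat{k}) \cdot \hat{k} \\
&= ax\,\hat{\imath} \cdot \hat{\imath} + bx\,\hat{\jmath} \cdot \hat{\imath} + cx\,\hat{k} \cdot \hat{\imath} + ay\,\hat{\imath} \cdot \hat{\jmath} + by\,\hat{\jmath} \cdot \hat{\jmath} + cy\,\hat{k} \cdot \hat{\jmath} + az\,\hat{\imath} \cdot \hat{k} + bz\,\hat{\jmath} \cdot \hat{k} + cz\,\hat{k} \cdot \hat{k}
\end{aligned}$$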

Rather tedious but note we are just applying the regular algebra skills just as if we were expanding a

product of two polynomials. (Recall that we can think of polynomials as vectors.) The main point here is


that we have expressed the original dot product as a sum of multiples of the dot products of basis

elements. Once we know these we can calculate the dot product readily. In fact we will shortly show

how to use matrix notation to help keep track of all the pieces of this calculation. But for now…

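Recall that our standard basis for displacements consisted of the unit (length 1) displacements along the x, y, and z axes. So the angle between different basis elements is 90°, which has cosine 0. This gives us:

$$\hat{\imath} \cdot \hat{\imath} = \hat{\jmath} \cdot \hat{\jmath} = \hat{k} \cdot \hat{k} = 1 \cdot 1 \cdot \cos 0 = 1$$

$$\hat{\imath} \cdot \hat{\jmath} = \hat{\imath} \cdot \hat{k} = \hat{\jmath} \cdot \hat{k} = 1 \cdot 1 \cdot \cos 90° = 0$$

The above tedious dot product calculation then reduces to:

$$\begin{aligned}
\mathbf{u} \cdot \mathbf{r} &= ax\,\hat{\imath} \cdot \hat{\imath} + bx\,\hat{\jmath} \cdot \hat{\imath} + cx\,\hat{k} \cdot \hat{\imath} + ay\,\hat{\imath} \cdot \hat{\jmath} + by\,\hat{\jmath} \cdot \hat{\jmath} + cy\,\hat{k} \cdot \hat{\jmath} + az\,\hat{\imath} \cdot \hat{k} + bz\,\hat{\jmath} \cdot \hat{k} + cz\,\hat{k} \cdot \hat{k} \\
&= ax(1) + bx(0) + cx(0) + ay(0) + by(1) + cy(0) + az(0) + bz(0) + cz(1) = ax + by + cz
\end{aligned}$$

So (having used the standard basis)

$$\mathbf{u} \cdot \mathbf{r} = ax + by + cz$$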

The dot product is just the sum of the products of corresponding components. This is true only because

of the form of the standard basis. Note that each basis element is of unit length and orthogonal

(perpendicular) to all the others. This property of the basis is called orthonormality. That is to say it

means we have an orthonormal basis. For arbitrary bases the dot product is a bit more complicated but

not too bad if we use matrices consistently. We’ll see that shortly.
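In an orthonormal basis the component formula, the norm, and the angle between two vectors fit in a few lines. This is a sketch under that orthonormality assumption; the helper names `dot`, `norm` and `angle` are chosen here for illustration.

```python
import math

def dot(u, v):
    """Sum of products of corresponding components (orthonormal basis assumed)."""
    return sum(a * b for a, b in zip(u, v))

def norm(v):
    """Length of v: the square root of v dotted with itself."""
    return math.sqrt(dot(v, v))

def angle(u, v):
    """Angle in radians recovered from u.v = |u||v| cos(theta)."""
    cosine = dot(u, v) / (norm(u) * norm(v))
    return math.acos(max(-1.0, min(1.0, cosine)))   # clamp against rounding

u = (1, 0, 0)
v = (1, 1, 0)
print(dot(u, v))                   # 1
print(norm(v))                     # square root of 2
print(math.degrees(angle(u, v)))   # approximately 45
```

Clamping the cosine is a defensive choice: rounding can push the quotient slightly outside the interval from −1 to 1, where `math.acos` would raise an error.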

Adjoint and Transpose

There’s an easy way to express the dot product (given an orthonormal basis) in terms of matrices. Given

$$\mathbf{u} \cong \begin{pmatrix} a \\ b \\ c \end{pmatrix}, \quad \mathbf{r} \cong \begin{pmatrix} x \\ y \\ z \end{pmatrix}$$

their dot product can be written as a matrix product:

$$\mathbf{u} \cdot \mathbf{r} = \begin{pmatrix} a & b & c \end{pmatrix}\begin{pmatrix} x \\ y \\ z \end{pmatrix}$$

Two points to note here. Firstly, to be consistent we need to express the operation of changing a column into a row. This (and the reverse) we call transposing a matrix. Secondly, note that the action of taking the dot product with respect to a given vector defines a linear functional (linear mapping from vector to scalar).

Let’s take that second point first. We can consistently (re)interpret the dot product notation by grouping the dot symbol with the first vector and calling the result a dual vector:

$$\mathbf{u} \cdot \mathbf{r} = (\mathbf{u}\,\cdot)\,\mathbf{r} = \begin{pmatrix} a & b & c \end{pmatrix}\begin{pmatrix} x \\ y \\ z \end{pmatrix}$$

We take $\mathbf{u}\,\cdot$ to be a dual vector or linear functional with a corresponding row vector representation:

$$\mathbf{u} \cong \begin{pmatrix} a \\ b \\ c \end{pmatrix}, \quad \mathbf{u}\,\cdot \cong \begin{pmatrix} a & b & c \end{pmatrix}$$

Another way of interpreting the dot product is as a linear transformation mapping vectors to dual vectors. This type of mapping is also known as an adjoint, which we indicate using a dagger superscript:

$$\mathbf{u}^\dagger = \mathbf{u}\,\cdot$$

Hence we can write the dot product in the form:

$$\mathbf{u} \cdot \mathbf{r} = \mathbf{u}^\dagger \mathbf{r}$$

We extend the adjoint to apply to both vectors and dual vectors (and later matrices) so that when we apply the adjoint twice we end up back where we started: $(\mathbf{u}^\dagger)^\dagger = \mathbf{u}$.

Now recall we have a very simple form because we used an orthonormal basis. In the matrix representation the adjoint is just the transpose. The transpose of a matrix is the matrix we obtain by interchanging rows with columns.

Example:

$$\begin{pmatrix} a & b & c \\ 1 & 2 & 3 \end{pmatrix}^{\top} = \begin{pmatrix} a & 1 \\ b & 2 \\ c & 3 \end{pmatrix}$$

Side Note: When we generalize to complex vectors (and matrices) the adjoint will in fact be the complex conjugate of the transpose…(which defines a Hermitian inner product).

Finally note that the transpose when applied to products of two or more matrices will reverse the order of multiplication: $(AB)^{\top} = B^{\top}A^{\top}$. This you can confirm by working out examples.
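One such worked example can be checked by machine. The matrices below are arbitrary illustrative choices and the helpers `transpose` and `matmul` are hypothetical names written out so the snippet stands alone.

```python
def transpose(A):
    """Interchange rows and columns of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*A)]

def matmul(A, B):
    """Multiply matrices stored as lists of rows."""
    columns = list(zip(*B))
    return [[sum(x * y for x, y in zip(row, col)) for col in columns]
            for row in A]

A = [[1, 2, 3],
     [4, 5, 6]]          # 2 x 3
B = [[1, 0],
     [2, 1],
     [0, 3]]             # 3 x 2

left = transpose(matmul(A, B))               # transpose of the product
right = matmul(transpose(B), transpose(A))   # reversed product of transposes
print(left)            # [[5, 14], [11, 23]]
print(left == right)   # True
```

Note that the product of the transposes in the original order would multiply a 3 × 2 matrix by a 2 × 3 matrix and give a 3 × 3 result, so the reversal is forced by the dimensions alone.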

Adjoint and Metric with non-orthonormal bases

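For real vectors expanded in an orthonormal basis, the inner product was represented in matrix form simply as multiplication by the transpose. To see how to work with a general basis we go back and consider how we expanded the vector in a basis using matrices. Recall that we used a row vector of basis elements for the ordered basis and used it as follows:

$$\mathbf{r} = x\hat{\imath} + y\hat{\jmath} + z\hat{k} = \begin{pmatrix} \hat{\imath} & \hat{\jmath} & \hat{k} \end{pmatrix}\begin{pmatrix} x \\ y \\ z \end{pmatrix}$$

Let’s use an arbitrary basis expansion for two vectors:

$$\mathbf{u} = \begin{pmatrix} \mathbf{e}_1 & \mathbf{e}_2 & \mathbf{e}_3 \end{pmatrix}\begin{pmatrix} u_1 \\ u_2 \\ u_3 \end{pmatrix}, \quad \mathbf{v} = \begin{pmatrix} \mathbf{e}_1 & \mathbf{e}_2 & \mathbf{e}_3 \end{pmatrix}\begin{pmatrix} v_1 \\ v_2 \\ v_3 \end{pmatrix}$$

We express the dot product $\mathbf{u} \cdot \mathbf{v}$ using the transpose, or more properly the adjoint, and applying matrix multiplication. Note that the adjoint of the basis vectors will be “take the dot product with” operations, so we have:

$$\mathbf{u} \cdot \mathbf{v} = \mathbf{u}^\dagger \mathbf{v} = \begin{pmatrix} u_1 & u_2 & u_3 \end{pmatrix}\begin{pmatrix} \mathbf{e}_1\,\cdot \\ \mathbf{e}_2\,\cdot \\ \mathbf{e}_3\,\cdot \end{pmatrix}\begin{pmatrix} \mathbf{e}_1 & \mathbf{e}_2 & \mathbf{e}_3 \end{pmatrix}\begin{pmatrix} v_1 \\ v_2 \\ v_3 \end{pmatrix}$$

Now apply matrix multiplication between the basis column and row:

$$\begin{pmatrix} \mathbf{e}_1\,\cdot \\ \mathbf{e}_2\,\cdot \\ \mathbf{e}_3\,\cdot \end{pmatrix}\begin{pmatrix} \mathbf{e}_1 & \mathbf{e}_2 & \mathbf{e}_3 \end{pmatrix} = \begin{pmatrix} \mathbf{e}_1 \cdot \mathbf{e}_1 & \mathbf{e}_1 \cdot \mathbf{e}_2 & \mathbf{e}_1 \cdot \mathbf{e}_3 \\ \mathbf{e}_2 \cdot \mathbf{e}_1 & \mathbf{e}_2 \cdot \mathbf{e}_2 & \mathbf{e}_2 \cdot \mathbf{e}_3 \\ \mathbf{e}_3 \cdot \mathbf{e}_1 & \mathbf{e}_3 \cdot \mathbf{e}_2 & \mathbf{e}_3 \cdot \mathbf{e}_3 \end{pmatrix}$$

So we have:

$$\mathbf{u} \cdot \mathbf{v} = \begin{pmatrix} u_1 & u_2 & u_3 \end{pmatrix}\begin{pmatrix} \mathbf{e}_1 \cdot \mathbf{e}_1 & \mathbf{e}_1 \cdot \mathbf{e}_2 & \mathbf{e}_1 \cdot \mathbf{e}_3 \\ \mathbf{e}_2 \cdot \mathbf{e}_1 & \mathbf{e}_2 \cdot \mathbf{e}_2 & \mathbf{e}_2 \cdot \mathbf{e}_3 \\ \mathbf{e}_3 \cdot \mathbf{e}_1 & \mathbf{e}_3 \cdot \mathbf{e}_2 & \mathbf{e}_3 \cdot \mathbf{e}_3 \end{pmatrix}\begin{pmatrix} v_1 \\ v_2 \\ v_3 \end{pmatrix} = \begin{pmatrix} u_1 & u_2 & u_3 \end{pmatrix} G \begin{pmatrix} v_1 \\ v_2 \\ v_3 \end{pmatrix}$$

We end up with the transpose of the column matrix for $\mathbf{u}$ times a square matrix $G$ times the column vector for $\mathbf{v}$.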


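The matrix

$$G = \begin{pmatrix} \mathbf{e}_1 \cdot \mathbf{e}_1 & \mathbf{e}_1 \cdot \mathbf{e}_2 & \mathbf{e}_1 \cdot \mathbf{e}_3 \\ \mathbf{e}_2 \cdot \mathbf{e}_1 & \mathbf{e}_2 \cdot \mathbf{e}_2 & \mathbf{e}_2 \cdot \mathbf{e}_3 \\ \mathbf{e}_3 \cdot \mathbf{e}_1 & \mathbf{e}_3 \cdot \mathbf{e}_2 & \mathbf{e}_3 \cdot \mathbf{e}_3 \end{pmatrix} = \begin{pmatrix} g_{11} & g_{12} & g_{13} \\ g_{21} & g_{22} & g_{23} \\ g_{31} & g_{32} & g_{33} \end{pmatrix}$$

of basis dot products $g_{ij} = \mathbf{e}_i \cdot \mathbf{e}_j$ is called the metric. Note that when the basis is orthonormal it takes the simple form of the identity matrix:

$$G = \begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{pmatrix}$$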

For general cases it will be either symmetric (equal to its transpose) or when we generalize to complex

vectors it will be Hermitian (equal to its complex conjugate transpose).
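To see the metric at work, the sketch below takes a deliberately non-orthonormal basis of the plane, builds the matrix of basis dot products, and checks that contracting coordinates through that matrix reproduces the ordinary dot product. The basis, the coordinates and all function names are assumptions chosen for illustration.

```python
def dot(u, v):
    """Ordinary dot product of standard (orthonormal) components."""
    return sum(a * b for a, b in zip(u, v))

def metric(basis):
    """Matrix of basis dot products g[i][j] = e_i . e_j."""
    return [[dot(ei, ej) for ej in basis] for ei in basis]

def dot_in_basis(u_coords, v_coords, G):
    """Row of u-coordinates times G times column of v-coordinates."""
    n = len(G)
    return sum(u_coords[i] * G[i][j] * v_coords[j]
               for i in range(n) for j in range(n))

def to_standard(coords, basis):
    """Expand coordinates in the given basis into standard components."""
    dim = len(basis[0])
    return tuple(sum(c * e[k] for c, e in zip(coords, basis)) for k in range(dim))

basis = [(1, 0), (1, 1)]       # hypothetical skew basis e_1, e_2
G = metric(basis)              # [[1, 1], [1, 2]], symmetric but not the identity
u, v = (2, 1), (-1, 3)         # coordinates with respect to `basis`

print(dot_in_basis(u, v, G))                                  # 9
print(dot(to_standard(u, basis), to_standard(v, basis)))      # 9
```

Multiplying the coordinate tuples directly, without the metric, would give 2·(−1) + 1·3 = 1, which is not the dot product of the underlying vectors.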

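Note then we can express the adjoint of a column matrix corresponding to a vector $\mathbf{u} \cong \begin{pmatrix} a \\ b \\ c \end{pmatrix}$, expanded in an arbitrary basis, by

$$\mathbf{u}^\dagger \cong \begin{pmatrix} a & b & c \end{pmatrix} G = \begin{pmatrix} a & b & c \end{pmatrix}\begin{pmatrix} g_{11} & g_{12} & g_{13} \\ g_{21} & g_{22} & g_{23} \\ g_{31} & g_{32} & g_{33} \end{pmatrix}$$

where $G$ is the matrix of the metric, with entries $g_{ij}$ equal to the dot products of the basis elements.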

This tells us in a general basis how to expand the dot product of two vectors using the adjoint of one.

But given the adjoint of the adjoint gets us back where we started we also have a dual metric defining a

dot product for dual vectors.

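The dual metric also has a matrix representation (when we express dual vectors in terms of row vectors) and it will be the inverse transpose $(G^{-1})^{\top} = (G^{\top})^{-1}$ of the matrix representation $G$ of the metric. In short, given a dual vector $\boldsymbol{\omega} \cong \begin{pmatrix} a & b & c \end{pmatrix}$, we have $\boldsymbol{\omega}^\dagger \cong (G^{-1})^{\top}\begin{pmatrix} a & b & c \end{pmatrix}^{\top}$.

Putting this all together we can then see that the adjoint of the adjoint gets us back where we started. For a vector $\mathbf{u}$ with column matrix $u$:

$$(\mathbf{u}^\dagger)^\dagger \cong (G^{-1})^{\top}\,(u^{\top} G)^{\top} = (G^{-1})^{\top} G^{\top} (u^{\top})^{\top} = (G^{\top})^{-1} G^{\top} u = u$$

To follow this string of operations remember the transpose of a product is the reversed product of the transposes and that a matrix times its inverse is the identity matrix and so cancels.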

For a (real) square matrix $A$ we can also define an adjoint:

$$A^\dagger = (G^{-1})^{\top} A^{\top} G$$

where $G$ is the matrix of the metric. If the matrix is complex we must also take the complex conjugate: $A^\dagger = (G^{-1})^{\top} A^{*\top} G$. It is so much easier if we work in an orthonormal basis where both metric and dual metric matrices are the identity: $G = (G^{-1})^{\top} = I$. Thus you will usually find the adjoint defined simply as the conjugate transpose. This however is a basis dependent definition and when working in general bases we must remember to account for these extra metric factors.
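The role of the extra metric factors can be checked on a small real example. The sketch below assumes a real symmetric metric matrix G (so that the inverse transpose of G equals its inverse) and takes the adjoint of A to be G⁻¹AᵀG, then tests the defining property that the inner product of Au with v equals the inner product of u with the adjoint applied to v. The matrices, the two-by-two restriction and all helper names are assumptions made for illustration.

```python
from fractions import Fraction

def matmul(A, B):
    """Multiply matrices stored as lists of rows."""
    columns = list(zip(*B))
    return [[sum(x * y for x, y in zip(row, col)) for col in columns]
            for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def inverse_2x2(M):
    (a, b), (c, d) = M
    det = a * d - b * c
    if det == 0:
        raise ValueError("metric must be invertible")
    return [[Fraction(d) / det, Fraction(-b) / det],
            [Fraction(-c) / det, Fraction(a) / det]]

def adjoint(A, G):
    """G^{-1} A^T G, assuming G is real and symmetric."""
    return matmul(inverse_2x2(G), matmul(transpose(A), G))

def apply(A, v):
    """Matrix A times the column with entries v, returned as a list."""
    return [row[0] for row in matmul(A, [[x] for x in v])]

def inner(u, v, G):
    """Inner product u^T G v of two coordinate columns."""
    return sum(ui * gv for ui, gv in zip(u, apply(G, v)))

G = [[1, 1], [1, 2]]       # metric of a hypothetical skew basis
A = [[1, 2], [0, 3]]
A_dag = adjoint(A, G)      # [[-3, -6], [4, 7]], not simply the transpose of A

u, v = [2, 1], [-1, 3]
print(inner(apply(A, u), v, G))        # 23
print(inner(u, apply(A_dag, v), G))    # 23
```

With G equal to the identity the same `adjoint` function returns the plain transpose, which is the orthonormal special case.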

THAT’S ALL FOR NOW… I intend to add more later including for example how to define cross products

in terms of matrices, tensors and tensor products,