
Statistical inference for nonparametric GARCH models

Alexander Meister, Jens-Peter Kreiß

May 15, 2015

Survey

Document related concepts

Transcript

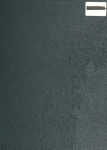

Alexander Meister: Institut für Mathematik, Universität Rostock, D-18051 Rostock, Germany, email: alexander.meister@uni-rostock.de
Jens-Peter Kreiß: Institut für Mathematische Stochastik, TU Braunschweig, D-38092 Braunschweig, Germany, email: [email protected]

Abstract. We consider extensions of the well-known GARCH(1,1) model in which the recursive equation for the volatilities is specified not by a parametric link but by a smooth autoregression function. Our goal is to estimate this function under nonparametric constraints when the volatilities are observed with multiplicative innovation errors. We construct an estimation procedure whose risk attains the usual convergence rates for bivariate nonparametric regression estimation. Furthermore, those rates are shown to be optimal in the minimax sense. Numerical simulations are provided for a parametric submodel.

Keywords: autoregression; financial time series; inference for stochastic processes; minimax rates; nonparametric regression.

AMS subject classification 2010: 62M10; 62G08.

1 Introduction

Over the last decades, GARCH time series have become a popular and widely studied model for the analysis of financial data, e.g. for the investigation of stock market indices. Since the development of the basic ARCH processes by Engle (1982) and their generalization to GARCH time series by Bollerslev (1986), a considerable body of literature has dealt with statistical inference, in particular parameter estimation, for those models. Early work goes back to Lee and Hansen (1994), who consider a quasi-maximum likelihood estimator for the GARCH(1,1) parameters, and to Lumsdaine (1996), who proves consistency and asymptotic normality of such methods. In a related setting, Berkes et al. (2003) and Berkes and Horváth (2004) establish $n^{-1/2}$-consistency, asymptotic normality and asymptotic efficiency of the quasi-maximum likelihood estimator of the GARCH parameters. Hall and Yao (2003) study GARCH models under heavy-tailed errors. More recent approaches to parameter estimation in the GARCH setting include Robinson and Zaffaroni (2006), Francq et al. (2011) and the manuscript of Fan et al. (2012). We also refer to the books of Fan and Yao (2003) and Francq and Zakoïan (2010) for comprehensive overviews of estimation procedures in general nonlinear time series and GARCH models, respectively. Moreover, Buchmann and Müller (2012) prove asymptotic equivalence of the GARCH model and a time-continuous COGARCH experiment in Le Cam's sense.

Besides the standard GARCH time series, many related models have been derived, e.g. AGARCH (Ding et al. (1993)) and EGARCH (Nelson (1991)). Straumann and Mikosch (2006) consider quasi-maximum likelihood estimation in those settings, among others. Linton and Mammen (2005) investigate kernel methods in semiparametric ARCH(∞) models. Hörmann (2008) deduces asymptotic properties of augmented GARCH processes. Čížek et al. (2009) study various parametric GARCH-related models whose parameters may vary nonparametrically in time, so that the data are non-stationary. Other extensions concern GARCH-in-mean models. Recently, Christensen et al. (2012) consider semiparametric estimation in that model, where the recursive equation for the volatilities is still parametric while an additional function occurs in the recursion formula for the observations.
Conrad and Mammen (2009) study testing for parametric specifications of the mean function in those models and introduce an iterative kernel estimator for the mean curve.

In the current note we study a general extension of the GARCH(1,1) model in which the recursive equation for the volatilities is specified by a link function that is not determined by finitely many real-valued parameters but is subject only to nonparametric constraints. More specifically, we introduce the stochastic processes $(X_n)_{n\ge1}$ and $(Y_n)_{n\ge1}$ driven by the recursive formula

$$Y_n = X_n\varepsilon_n, \qquad X_{n+1} = m(X_n, Y_n), \tag{1.1}$$

with i.i.d. nonnegative random variables $\{\varepsilon_n\}_{n\ge0}$ which satisfy $E\varepsilon_n = 1$ and $R_\varepsilon := \operatorname{ess\,sup}\varepsilon_n < \infty$, and a measurable autoregression function $m:[0,\infty)^2\to[0,\infty)$. The data $Y_j$, $j=1,\dots,n$, are observed, while the $X_n$ are not accessible. We assume that $(X_n)_n$ is strictly stationary and causal, i.e. for any fixed finite $T\subseteq\mathbb N_0$, the marginal distributions of $(X_t)_{t-s\in T}$ coincide for every integer shift $s\ge0$; $X_n$ is measurable with respect to the σ-field generated by $X_1,\varepsilon_1,\dots,\varepsilon_{n-1}$; and $X_1,\varepsilon_1,\dots,\varepsilon_{n-1}$ are independent. The random variables $X_n$ are nonnegative as well. Our goal is to estimate the link function $m$ without any parametric pre-specification. The GARCH(1,1) setting is still included by putting $m(x,y) = \alpha_0 + \beta_1x + \alpha_1y$, where the $X_n$ represent the squared volatilities and the $Y_n$ the squared returns of a financial asset. On the one hand, letting $m$ depend on the lagged return only through its square reduces the technical effort needed to establish our main result, namely to show rigorously that in nonparametric GARCH settings the usual convergence rates for the nonparametric estimation of smooth bivariate regression functions can be achieved. On the other hand, relevant extensions of the standard GARCH model that are designed to include asymmetry in the volatility function with respect to the lagged return are not covered by our model.
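To make the recursion (1.1) concrete, the following Python sketch simulates the observed series $Y_n$ together with the latent $X_n$ for a user-supplied link $m$. It is only an illustration under assumptions of our own: the names `simulate`, `sample_eps` and `garch_link` are hypothetical, the errors are drawn by inversion from the density $f_\varepsilon(x)=\frac34(1-x/4)^2$ on $[0,4]$ that is used in the simulations of Section 5 (so that $E\varepsilon_n=1$ and $R_\varepsilon=4$), and a burn-in period stands in for starting the chain in its stationary distribution.

```python
import numpy as np


def sample_eps(size, rng):
    """Draw errors with density f(x) = 3/4 * (1 - x/4)**2 on [0, 4] by inversion.

    The CDF is F(x) = 1 - (1 - x/4)**3, hence F^{-1}(u) = 4 * (1 - (1 - u)**(1/3)).
    This density has mean 1 and essential supremum R_eps = 4.
    """
    u = rng.uniform(size=size)
    return 4.0 * (1.0 - (1.0 - u) ** (1.0 / 3.0))


def simulate(m, n, x_start=0.0, burn_in=500, seed=0):
    """Simulate Y_t = X_t * eps_t and X_{t+1} = m(X_t, Y_t), i.e. model (1.1).

    Returns the latent X_1..X_n and the observations Y_1..Y_n; the first
    `burn_in` steps are discarded to approximate the stationary regime.
    """
    rng = np.random.default_rng(seed)
    eps = sample_eps(burn_in + n, rng)
    x = np.empty(burn_in + n)
    y = np.empty(burn_in + n)
    x_cur = x_start
    for t in range(burn_in + n):
        x[t] = x_cur
        y[t] = x_cur * eps[t]       # Y_t = X_t * eps_t
        x_cur = m(x_cur, y[t])      # X_{t+1} = m(X_t, Y_t)
    return x[burn_in:], y[burn_in:]


def garch_link(alpha0, alpha1, beta1):
    """GARCH(1,1) special case m(x, y) = alpha0 + beta1 * x + alpha1 * y."""
    return lambda x, y: alpha0 + beta1 * x + alpha1 * y
```

For example, `simulate(garch_link(0.2, 0.2, 0.3), n=5000)` produces a path whose sample mean of $X_n$ should lie near the stationary mean $\alpha_0/(1-\alpha_1-\beta_1)=0.4$ of the GARCH(1,1) recursion, since $E\varepsilon_n=1$.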
Threshold GARCH (TGARCH) models have also been introduced in order to capture asymmetry (i.e. with threshold zero). If one instead considers a positive threshold, at which the GARCH parameters change depending on whether the lagged squared return is smaller or larger than the threshold, then (1.1) covers rather similar situations with smooth transitions (depending on squared or absolute values of lagged returns) between different GARCH models. Such general models have been mentioned e.g. in Bühlmann and McNeil (2002) or Audrino and Bühlmann (2001, 2009), where some iterative estimation and forecasting algorithms are proposed. However, to the best of our knowledge, a rigorous asymptotic minimax approach to the nonparametric estimation of the autoregression function $m$ has not been studied so far. The current paper intends to fill that gap by introducing a nonparametric minimum contrast estimator of $m$ (Section 3), deriving the convergence rates of its risk and showing their minimax optimality (Section 4). Numerical simulations are provided in Section 5. The proofs are deferred to Section 6, while in Section 2 some important properties of stationary distributions of the volatilities are established.

2 Properties of stationary distributions

We introduce the function $\tilde m(x,y) := m(x,xy)$, so that the second equation of (1.1) can be written as $X_{n+1} = \tilde m(X_n,\varepsilon_n)$. We impose

Condition A
(A1) $m$ is continuously differentiable, maps the domain $[0,\infty)^2$ into $[0,\infty)$ and satisfies $\|m_x\|_\infty + \|m_y\|_\infty R_\varepsilon \le c_1 < 1$, where $m_x, m_y$ denote the partial derivatives of $m$, the supremum norm is taken over $[0,\infty)^2$, and $R_\varepsilon = \operatorname{ess\,sup}\varepsilon_1$.
(A2) $m$ is bounded by linear functions from above and below, i.e.
$$\alpha_0' + \alpha_1'x + \beta_1'y \le m(x,y) \le \alpha_0'' + \alpha_1''x + \beta_1''y, \qquad \forall (x,y)\in[0,\infty)^2,$$
for some positive finite constants $\alpha_0', \alpha_0'', \alpha_1', \alpha_1'', \beta_1', \beta_1''$ with $\alpha_1'' < 1$.
(A3) $m$ is monotonically increasing in both components; concretely,
$$m(x,y) \le m(x',y'), \qquad \forall\, x'\ge x\ge 0,\ y'\ge y\ge 0.$$
(A4) $\varepsilon_1$ has a bounded Lebesgue density $f_\varepsilon$ which is monotonically decreasing on $(0,\infty)$ and satisfies $r_\varepsilon := \inf_{x\in[0,R_\varepsilon/2]} f_\varepsilon(x) > 0$. Moreover, $E\varepsilon_1 = 1$.

The existence of stationary solutions in modified GARCH models has been shown by Bougerol (1993) and by Straumann and Mikosch (2006). Diaconis and Freedman (1999) and Wu and Shao (2004) also study the existence and properties of stationary solutions for similar iterated random functions. Still, for the purpose of our work, some additional properties of the stationary distribution are required, such as constraints on its support. Therefore, we modify the proof of Bougerol (1993) in order to obtain part (a) of the following proposition. Part (b) provides a fixed point equation for the left endpoint of stationary distributions. Part (c) gives a lower bound on the small ball probabilities of stationary distributions; as a notable side result, it yields a lower bound on the tail of this distribution at its left endpoint.

Proposition 2.1
(a) (modification of the result of Bougerol (1993)) Assume that Condition A holds true. Then there exists a strictly stationary and causal process $(X_n)_n$ with $X_n \ge 0$ a.s. and
$$R_X := \operatorname{ess\,sup} X_n \le \alpha_0''/(1-c_1) < \infty,$$
which is a solution to (1.1) for a given sequence $(\varepsilon_n)_n$. Under the assumptions of strict stationarity and causality, the distribution of $X_1$ is unique for any given $m$ and $f_\varepsilon$.
(b) Assume that $(X_n)_n$ has all properties stated in part (a). Denote the left endpoint of the stationary distribution of $X_1$ by $L_X := \sup\{x\ge0 : X_1\ge x \text{ a.s.}\}$. Then $L_X$ is the only fixed point of the mapping $x\mapsto m(x,0)$, $x\in[0,\infty)$.
(c) Assume that $(X_n)_n$ has all properties stated in part (a) and that
$$\frac{1-\alpha_1'}{\beta_1'} - \frac{\alpha_0'(1-\alpha_1'')}{\beta_1'\alpha_0''} \le \frac{R_\varepsilon}{8}. \tag{2.1}$$
Then, for any $z\in(L_X, L_X + R_\varepsilon\beta_1'\alpha_0'/4)$ and $\delta\in(0,\alpha_0'/8)$, we have
$$P\big[|X_1 - z|\le\delta\big] \ge \delta\cdot\mu_1(z-L_X)^{\mu_2}\exp\big(\mu_3\log^2(z-L_X)\big),$$
for some real-valued constants $\mu_1>0$, $\mu_2>0$ and $\mu_3<0$ which depend only on $c_1, r_\varepsilon, R_\varepsilon, \alpha_0', \alpha_0'', \beta_1'$.

It follows immediately from Proposition 2.1(a) and Condition A that $(Y_n)_n$ with $Y_n := X_n\varepsilon_n$ is a strictly stationary and causal series as well and consists of nonnegative, essentially bounded random variables; note that $X_n$ and $\varepsilon_n$ are independent. Throughout this work we assume that $(X_n)_n$ is strictly stationary and causal and has all properties stated in Proposition 2.1(a).

3 Methodology

In this section we construct a nonparametric estimator of the function $m$ and suggest how to choose the approximation space used in its definition.

3.1 Construction of the estimation procedure

Here and elsewhere, we use the following notation: given a measurable basic function $f:[a,\infty)^2\to[a,\infty)$ or $f:\mathbb R^2\to\mathbb R$, we define the functions $f^{[k]}:[a,\infty)^{k+1}\to[a,\infty)$ or $\mathbb R^{k+1}\to\mathbb R$, respectively, $a\in\mathbb R$, integer $k\ge1$, via the recursive scheme
$$f^{[1]}(x,y_1) := f(x,y_1), \qquad f^{[k+1]}(x,y_1,\dots,y_{k+1}) := f\big(f^{[k]}(x,y_1,\dots,y_k),\,y_{k+1}\big). \tag{3.1}$$
In the sequel, let $j\ge k$ be arbitrary. Iterating the second equation of (1.1) $k$-fold, we obtain
$$X_{j+1} = m^{[k]}(X_{j-k+1}, Y_{j-k+1},\dots,Y_j). \tag{3.2}$$
By the causality of the solution $(X_n)_n$, $\varepsilon_{j+1}$ is independent of $(X_{j-k+1}, Y_{j-k+1},\dots,Y_j)$. Since $Y_{j+1} = X_{j+1}\varepsilon_{j+1}$ and $E\varepsilon_{j+1}=1$, this gives $E[Y_{j+1}\mid X_{j-k+1},Y_{j-k+1},\dots,Y_j] = X_{j+1}$ almost surely, and taking the conditional expectation with respect to the smaller σ-field generated by $Y_{j-k+1},\dots,Y_j$ leads to
$$E[Y_{j+1}\mid Y_{j-k+1},\dots,Y_j] = E[X_{j+1}\mid Y_{j-k+1},\dots,Y_j] = E\big[m^{[k]}(X_{j-k+1},Y_{j-k+1},\dots,Y_j)\,\big|\,Y_{j-k+1},\dots,Y_j\big] \quad\text{a.s.} \tag{3.3}$$

The result (3.3) can be viewed as a pseudo estimation equation, since the left-hand side is accessible from the data while the right-hand side contains the target curve $m$. Unfortunately, the right-hand side also depends on the unobserved $X_{j-k+1}$. However, the following lemma shows that this dependence is of minor importance for large $k$.

Lemma 3.1 Assume Condition A and $j\ge k>1$. Let $f:[0,\infty)^2\to[0,\infty)$ or $f:\mathbb R^2\to\mathbb R$ be a continuously differentiable function with bounded partial derivative with respect to $x$. For an arbitrary deterministic $x_0\ge0$, we have
$$\big|f^{[k]}(X_{j-k+1},Y_{j-k+1},\dots,Y_j) - f^{[k]}(x_0,Y_{j-k+1},\dots,Y_j)\big| \le \|f_x\|_\infty^k\,|X_{j-k+1}-x_0|.$$

Condition (A1) guarantees that $m$ satisfies the assumptions on $f$ in Lemma 3.1 and, in addition, that $\|m_x\|_\infty\le c_1<1$. Combining (3.2) and Lemma 3.1, we see that $m^{[k]}(x_0,Y_{j-k+1},\dots,Y_j)$ can be employed as a proxy of $X_{j+1}$. Concretely,
$$E\big|X_{j+1} - m^{[k]}(x_0,Y_{j-k+1},\dots,Y_j)\big|^2 \le \|m_x\|_\infty^{2k}\,E|X_1-x_0|^2, \tag{3.4}$$
where the strict stationarity of $(X_n)_n$ has been used in the last step. Furthermore, as a consequence of (3.2), (3.4) and the conditional Jensen inequality, we deduce that
$$\begin{aligned}
&E\Big|E\big[m^{[k]}(X_{j-k+1},Y_{j-k+1},\dots,Y_j)\,\big|\,Y_{j-k+1},\dots,Y_j\big] - m^{[k]}(x_0,Y_{j-k+1},\dots,Y_j)\Big|^2\\
&\quad= E\Big|E\big[m^{[k]}(X_{j-k+1},Y_{j-k+1},\dots,Y_j) - m^{[k]}(x_0,Y_{j-k+1},\dots,Y_j)\,\big|\,Y_{j-k+1},\dots,Y_j\big]\Big|^2\\
&\quad\le E\big|m^{[k]}(X_{j-k+1},Y_{j-k+1},\dots,Y_j) - m^{[k]}(x_0,Y_{j-k+1},\dots,Y_j)\big|^2 \le \|m_x\|_\infty^{2k}\,E|X_1-x_0|^2.
\end{aligned} \tag{3.5}$$
Thus it seems reasonable to use $m^{[k]}(x_0,Y_{j-k+1},\dots,Y_j)$, for an arbitrary fixed deterministic $x_0\ge0$, as a proxy of the right-hand side of (3.3). The left-hand side of (3.3) is the best least squares approximation of $Y_{j+1}$ among all measurable functions of $Y_{j-k+1},\dots,Y_j$. This motivates us to take as the estimator $\hat m = \hat m(Y_1,\dots,Y_n)$ of $m$ the element $g\in\mathcal G$ which minimizes the (nonlinear) contrast functional
$$\Phi_n(g) := \frac{1}{n-K-1}\sum_{j=K}^{n-2}\Big\{\big|Y_{j+1} - g^{[K]}(x_0,Y_{j-K+1},\dots,Y_j)\big|^2 + \big|Y_{j+2} - g^{[K+1]}(x_0,Y_{j-K+1},\dots,Y_{j+1})\big|^2\Big\},$$
where $\mathcal G$ is some fixed finite collection of candidates for the true regression function $m$. The parameter $K\le n-2$ remains to be chosen. We fit $g^{[j]}$ to $Y_{j+1}$ in two steps, i.e. for $j=K$ and $j=K+1$, in order to reconstruct $g$ from $g^{[j]}$; this is made precise in Section 4, where Lemma 4.1 clarifies the merit of this double least squares procedure.

3.2 Selection of the approximation set $\mathcal G$

We now focus on the choice of the approximation set $\mathcal G$. In addition to Condition A, we impose

Condition B The restriction of the true regression function $m$ to the domain $I := [0,R'']\times[0,R''R_\varepsilon]$ can be continued to a function on $[-1,R''+1]\times[-1,R''R_\varepsilon+1]$ which is $\lfloor\beta\rfloor$-fold continuously differentiable, and all partial derivatives of $m$ of order $\le\lfloor\beta\rfloor$ are bounded by a uniform constant $c_M$ on the enlarged domain. Here we write $R'' := \alpha_0''/(1-c_1)$ with $c_1$ as in Condition (A1). Moreover, for non-integer $\beta$, the Hölder condition
$$\Big|\frac{\partial^{\lfloor\beta\rfloor} m}{(\partial x)^k(\partial y)^{\lfloor\beta\rfloor-k}}(x_1,y_1) - \frac{\partial^{\lfloor\beta\rfloor} m}{(\partial x)^k(\partial y)^{\lfloor\beta\rfloor-k}}(x_2,y_2)\Big| \le c_M\,\big|(x_1,y_1)-(x_2,y_2)\big|^{\beta-\lfloor\beta\rfloor}$$
is satisfied for all $k=0,\dots,\lfloor\beta\rfloor$ and all $(x_1,y_1),(x_2,y_2)$ in $[-1,R''+1]\times[-1,R''R_\varepsilon+1]$. We assume that $\beta>2$.

Note that, by Condition A and Proposition 2.1(a), $R_X\le R''$, so that the smoothness region of $m$ contains the entire support of the distribution of $(X_1,Y_1)$. Condition B represents classical smoothness assumptions on $m$, where $\beta$ describes the smoothness level of $m$. All admitted regression functions, i.e. all functions which satisfy the constraints imposed on $m$ in Conditions A and B, are collected in the function class
$$\mathcal M = \mathcal M(c_1,\alpha_0',\alpha_1',\beta_1',\alpha_0'',\alpha_1'',\beta_1'',\beta,c_M).$$
In contrast, the distribution of the error $\varepsilon_1$ is assumed to be unknown but fixed.
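The iteration (3.1) and the contrast $\Phi_n$ translate directly into code. The Python sketch below is a minimal illustration, not the authors' implementation (their code is in MATLAB): the hypothetical helper `iterate` evaluates $g^{[k]}(x_0,y_1,\dots,y_k)$ for many windows at once with NumPy, and `contrast` evaluates $\Phi_n(g)$ for a candidate $g$ that accepts NumPy arrays. The names and the vectorization over $j$ are our own choices.

```python
import numpy as np


def iterate(g, x0, y_windows):
    """Evaluate g^{[k]}(x0, y_1, ..., y_k) from (3.1) for every row of y_windows.

    y_windows has shape (num_windows, k); row r holds (y_1, ..., y_k).
    Starting from x0, the columns are fed in turn: state <- g(state, y_i).
    """
    state = np.full(y_windows.shape[0], float(x0))
    for i in range(y_windows.shape[1]):
        state = g(state, y_windows[:, i])
    return state


def contrast(g, y, K, x0=0.0):
    """Contrast functional Phi_n(g) for data y = (Y_1, ..., Y_n).

    For j = K, ..., n-2 (1-based) it averages
      |Y_{j+1} - g^{[K]}(x0, Y_{j-K+1}, ..., Y_j)|^2
    + |Y_{j+2} - g^{[K+1]}(x0, Y_{j-K+1}, ..., Y_{j+1})|^2 .
    """
    y = np.asarray(y, dtype=float)
    n = y.size
    # 0-based position of Y_{j-K+1} is j - K, which runs over 0, ..., n-K-2.
    starts = np.arange(0, n - K - 1)
    idx = starts[:, None] + np.arange(K + 1)       # positions of Y_{j-K+1}, ..., Y_{j+1}
    windows = y[idx]
    fit_k = iterate(g, x0, windows[:, :K])         # g^{[K]}(x0, Y_{j-K+1}, ..., Y_j)
    fit_k1 = g(fit_k, windows[:, K])               # g^{[K+1]} = g(g^{[K]}, Y_{j+1})
    resid_1 = y[starts + K] - fit_k                # Y_{j+1} sits at 0-based position j
    resid_2 = y[starts + K + 1] - fit_k1           # Y_{j+2} sits at 0-based position j+1
    return np.mean(resid_1 ** 2 + resid_2 ** 2)    # average over n - K - 1 terms
```

For a linear candidate $g(x,y)=\alpha_0+\beta_1x+\alpha_1y$, `iterate` started at two different values differs by exactly $\beta_1^K$ times the initial gap, which is the equality case of Lemma 3.1; the estimator $\hat m$ is then simply the candidate in a finite list with the smallest `contrast` value.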
By $\|\cdot\|_2$ we denote the Hilbert space norm
$$\|m\|_2^2 := \int m^2(x,y)\,dP_{X_1,Y_1}(x,y),$$
for any measurable function $m:[0,\infty)^2\to\mathbb R$ for which this expression is finite, where $P_{X_1,Y_1}$ is the stationary distribution of $(X_1,Y_1)$ when $m$ is the true regression function. We now formulate the requirements on the approximation space $\mathcal G$ that are needed for the asymptotic results in Section 4.

Condition C $\mathcal G$ consists of finitely many measurable mappings $g:[0,\infty)^2\to[0,\infty)$ or $g:\mathbb R^2\to\mathbb R$. In addition, each $g\in\mathcal G$ is continuously differentiable and satisfies $\|g_x\|_\infty\le c_1$ with the constant $c_1$ as in Condition A, $\|g_y\|_\infty\le c_G$, as well as $\sup_{(x,y)\in[0,R'']\times[0,R''R_\varepsilon]}|g(x,y)|\le c_G$ for some constant $c_G>c_M$. Furthermore, every $m\in\mathcal M$ is well approximable in $\mathcal G$; more specifically,
$$\sup_{m\in\mathcal M}\,\min_{g\in\mathcal G}\|m-g\|_2^2 = O\big(n^{-\beta/(1+\beta)}\big). \tag{3.6}$$
On the other hand, the cardinality of $\mathcal G$ is restricted by
$$\#\mathcal G \le \exp\big(c_N\cdot n^{1/(1+\beta)}\big)$$
for some finite constant $c_N$. Here $\beta$ and $R''$ are as in Condition B.

Lemma 3.2 For $c_G$ sufficiently large, there always exists a set $\mathcal G$ such that Condition C is satisfied.

The cover provided in Lemma 3.2 depends on the smoothness level $\beta$ (see Conditions B and C), so that the estimator $\hat m$ depends on $\beta$. We therefore denote it by $\hat m_\beta$ when this dependence matters and suppress the index elsewhere. This motivates a cross-validation selector for the value of $\beta$ used in the construction of the estimator. The integrated squared error of our estimator is given by
$$\|\hat m_\beta - m\|_2^2 = \|\hat m_\beta\|_2^2 - 2\iint \hat m_\beta(x,y)\,m(x,y)\,dP_{(X,Y)}(x,y) + \|m\|_2^2. \tag{3.7}$$
While the last term in (3.7) does not depend on the estimator, we have to mimic the first two terms. For that purpose we introduce $0<n_1<n_2<n$ and compute the estimator from the subsample $Y_1,\dots,Y_{n_1}$ only. Then $\|\hat m_\beta\|_2^2$ can be estimated by
$$\frac{1}{n-n_2}\sum_{j=n_2+1}^{n}\big|\hat m_\beta^{[j-n_1]}(x_0,Y_{n_1+1},\dots,Y_j)\big|^2,$$
in the notation (3.1), and the second term in (3.7) is estimated by
$$\frac{2}{n-n_2-1}\sum_{j=n_2+1}^{n-1} Y_{j+1}\cdot\hat m_\beta^{[j-n_1]}(x_0,Y_{n_1+1},\dots,Y_j).$$
Replacing the first two terms in (3.7) by these empirical proxies and omitting the third one yields an empirically accessible version of (3.7). Instead of the true integrated squared error, this version can be minimized with respect to $\beta$ over some discrete grid, and the minimizing value is suggested as $\hat\beta$. Still, we have to leave the question of adaptivity open for future research.

Finally, we mention that the estimator derived in this section can also be applied in parametric approaches, although we do not focus on such models in the current paper. In the simulation section we consider such a parametric subclass.

4 Asymptotic properties

In this section we investigate the asymptotic quality of our estimator $\hat m$ as defined in Section 3. To evaluate this quality we consider the mean integrated squared error (MISE) $E\|\hat m - m\|_2^2$. In Subsection 4.1 we derive upper bounds on the convergence rates of the MISE as the sample size $n$ tends to infinity, while in Subsection 4.2 we show that those rates are optimal in a minimax sense with respect to any estimator based on the given observations. Note that we consider the MISE uniformly over the function class $m\in\mathcal M$, while the error density $f_\varepsilon$ is viewed as unknown but fixed; in particular, it does not change with $n$.

4.1 Convergence rates – upper bounds

We fix an arbitrary $g\in\mathcal G$ and study the expectation of the functional $\Phi_n(g)$. Exploiting (3.3) and the strict stationarity of $(Y_n)_n$, we obtain
$$\begin{aligned}
E\Phi_n(g) &= E\big|Y_{K+1} - g^{[K]}(x_0,Y_1,\dots,Y_K)\big|^2 + E\big|Y_{K+2} - g\big(g^{[K]}(x_0,Y_1,\dots,Y_K),Y_{K+1}\big)\big|^2\\
&= E\operatorname{var}(Y_{K+1}\mid Y_1,\dots,Y_K) + E\operatorname{var}(Y_{K+2}\mid Y_1,\dots,Y_{K+1}) + E|W_K - G_K(g)|^2 + E|W_{K+1}-G_{K+1}(g)|^2,
\end{aligned}$$
where we define
$$W_j := E\big[m^{[j]}(X_1,Y_1,\dots,Y_j)\,\big|\,Y_1,\dots,Y_j\big], \qquad G_j(g) := g^{[j]}(x_0,Y_1,\dots,Y_j),\quad g\in\mathcal G,$$
for $j=K,K+1$. For any $g_1,g_2\in\mathcal G$ we deduce that
$$\begin{aligned}
E\Phi_n(g_1) - E\Phi_n(g_2) &= E|G_K(g_1)|^2 + E|G_{K+1}(g_1)|^2 - E|G_K(g_2)|^2 - E|G_{K+1}(g_2)|^2\\
&\quad - 2\big\{EW_KG_K(g_1) + EW_{K+1}G_{K+1}(g_1) - EW_KG_K(g_2) - EW_{K+1}G_{K+1}(g_2)\big\}.
\end{aligned}$$
For $l=1,2$ and $j=K,K+1$, we combine (3.4), (3.5), the Cauchy-Schwarz inequality and the triangle inequality in $L_2(\Omega,\mathcal A,P)$ to show that
$$\big|EW_jG_j(g_l) - EX_{j+1}G_j(g_l)\big| \le 2\|m_x\|_\infty^{j}\,(E|X_1-x_0|^2)^{1/2}\,\{E|G_j(g_l)|^2\}^{1/2}.$$
Then an elementary calculation yields
$$\big|E\Phi_n(g_1) - E\Phi_n(g_2) - \vartheta_K(g_1) + \vartheta_K(g_2)\big| \le 2\|m_x\|_\infty^K\,(E|X_1-x_0|^2)^{1/2}\sum_{l=1}^{2}\Big[\{E|G_K(g_l)|^2\}^{1/2} + \|m_x\|_\infty\{E|G_{K+1}(g_l)|^2\}^{1/2}\Big], \tag{4.1}$$
where
$$\vartheta_K(g) := E|X_{K+1}-G_K(g)|^2 + E|X_{K+2}-G_{K+1}(g)|^2, \qquad g\in\mathcal G.$$
This functional is studied in the following lemma. In particular, we provide both an upper and a lower bound on $\vartheta_K(g)$, where the right-hand sides of both inequalities contain the $\|\cdot\|_2$-distance between $g$ and the true target function $m$.

Lemma 4.1 Assume Conditions A, B and C. Then, for any $g\in\mathcal G$,
$$\vartheta_K^{1/2}(g) \le 2\|m-g\|_2/\big(1-\|g_x\|_\infty\big) + \|g_x\|_\infty^K\{1+\|g_x\|_\infty\}(E|X_1-x_0|^2)^{1/2},$$
as well as $\vartheta_K(g)\ge\|m-g\|_2^2/2$.

As a side result of Lemma 4.1, we deduce that under Conditions A and C
$$\begin{aligned}
(E|G_K(g)|^2)^{1/2} &\le (EX_1^2)^{1/2} + \vartheta_K^{1/2}(g) \le (EX_1^2)^{1/2} + 2(E|X_1-x_0|^2)^{1/2} + 2\|m-g\|_2/(1-\|g_x\|_\infty)\\
&\le (EX_1^2)^{1/2} + 2(E|X_1-x_0|^2)^{1/2} + 2\{(EX_1^2)^{1/2}+\|g\|_2\}/(1-\|g_x\|_\infty)\\
&\le 3R_X + x_0 + 2\{R_X+c_G\}/(1-c_1),
\end{aligned}$$
using the equality $X_2 = m(X_1,Y_1)$ and the strict stationarity of $(X_n)_n$. Applying this to (4.1), we obtain
$$\big|E\Phi_n(g_1) - E\Phi_n(g_2) - \vartheta_K(g_1) + \vartheta_K(g_2)\big| \le S_K, \qquad\text{with}\quad S_K := 4c_1^K(R_X+x_0)\cdot\Big\{6R_X + 4x_0 + 4\,\frac{R_X+c_G}{1-c_1}\Big\}.$$
Now we introduce $g_0 := \arg\min_{g\in\mathcal G}\|m-g\|_2$.
Then, for any $g\in\mathcal G$, we obtain under Conditions A and C by Lemma 4.1 that
$$E\Phi_n(g) - E\Phi_n(g_0) \ge \vartheta_K(g) - \vartheta_K(g_0) - S_K \ge \frac12\|m-g\|_2^2 - \frac{4}{(1-c_1)^2}\|m-g_0\|_2^2 - R_K, \tag{4.2}$$
where
$$R_K := \frac{8(c_G+\alpha_0''+\alpha_1''R_X+\beta_1''R_XR_\varepsilon)}{1-c_1}(R_X+x_0)c_1^K + 4c_1^{2K}(R_X+x_0)^2 + S_K \le \Lambda_1\cdot c_1^K, \tag{4.3}$$
and, by Proposition 2.1(a), the positive finite constant $\Lambda_1$ depends only on $\alpha_0'',\alpha_1'',\beta_1'',R_\varepsilon,x_0,c_G,c_1$. Inequality (4.2) shows that the difference between the expectations of $\Phi_n$ at $g$ and at $g_0$, the best approximation of $m$ within $\mathcal G$, grows with the order $\|m-g\|_2^2$ once this distance is large enough, so that the expected value of $\Phi_n(g)$ really distinguishes $g$ from $g_0$ with respect to their $\|\cdot\|_2$-distances to the target function $m$. This is essential for the proof of the following theorem.

Theorem 4.1 Consider the model (1.1) under Conditions A and B, where the series $(X_n)_n$ is assumed to be strictly stationary and causal. Choose $K\asymp n^{\iota}$ for some $\iota\in(0,1)$ and the approximation set $\mathcal G$ such that Condition C is satisfied. Then the estimator $\hat m$ from Section 3 satisfies
$$\sup_{m\in\mathcal M} E\|\hat m-m\|_2^2 = O\big(n^{-\beta/(\beta+1)}\big).$$

Thus our estimator attains the usual convergence rates for the nonparametric estimation of smooth bivariate regression functions.

Remark 1 An inspection of the proof of Theorem 4.1 shows that the result remains valid when $\mathcal M$ is replaced by one of its subsets, in the theorem as well as in Condition C.

4.2 Convergence rates – lower bounds

In this subsection we ask whether the rates established in Theorem 4.1 are optimal in a minimax sense with respect to an arbitrary estimator sequence $(\hat m_n)_n$, where $\hat m_n$ is based on the data $Y_1,\dots,Y_n$. In preparation for this investigation we provide

Condition C' The partial derivative $m_y$ satisfies $\inf_{(x,y)\in[0,\infty)^2} m_y(x,y)\ge c_M'$ for some uniform constant $c_M'>0$. All $m\in\mathcal M$ which satisfy this constraint are collected in the set $\mathcal M'$.
and the following lemma.

Lemma 4.2 Consider the model (1.1) under Conditions A, B and C'. Then the stationary distribution of $X_1$ is absolutely continuous and has a Lebesgue density $f_X$ which is bounded from above by $\|f_\varepsilon\|_\infty/(c_M'\alpha_0')$. By $\|\cdot\|_\lambda$ we denote the norm
$$\|f\|_\lambda := \Big(\int_{x=\alpha_0'}^{R''}\int_{y=0}^{R''R_\varepsilon} f^2(x,y)\,dy\,dx\Big)^{1/2}$$
of the Hilbert space of all measurable, square-integrable functions from $[\alpha_0',R'']\times[0,R''R_\varepsilon]$ to $\mathbb C$ with respect to the Lebesgue measure $\lambda$. For all functions $f$ in this space, we have
$$\|f\|_2^2 \le \|f\|_\lambda^2\cdot\|f_\varepsilon\|_\infty^2/\{c_M'(\alpha_0')^2\}.$$

We consider the model (1.1) under Conditions A and B where, in addition, we stipulate

Condition D We have $0<\alpha_0'=\alpha_0''=:\alpha_0$, $\alpha_1''>\alpha_1'$ and $\beta_1''>\beta_1'$. Also, $\alpha_1''+\beta_1''R_\varepsilon<c_1$ and $\alpha_1''-\alpha_1'\le R_\varepsilon\beta_1'/8$.

For a given $R_\varepsilon>1$, the parameters $\alpha_0,\alpha_1',\alpha_1'',\beta_1'$ and $\beta_1''$ can be selected such that Condition D holds while these constraints remain consistent with Condition A. For any $m\in\mathcal M$ satisfying Condition D, we consider the interval $\mathcal J(m) := (L_X + R_\varepsilon\beta_1'\alpha_0/16,\ L_X + R_\varepsilon\beta_1'\alpha_0/8]$. Since $R_\varepsilon\beta_1'<1$ by Condition D, it follows from Proposition 2.1(c) that
$$P[X_1\in I] \ge \frac{\mu_1}{2}\cdot\Big(\frac{R_\varepsilon\beta_1'\alpha_0}{16}\Big)^{\mu_2}\cdot\exp\Big(\mu_3\Big[\log^2\frac{R_\varepsilon\beta_1'\alpha_0}{16} + \log^2\frac{R_\varepsilon\beta_1'\alpha_0}{8}\Big]\Big)\cdot\lambda(I) \ge \mu_6\cdot\lambda(I), \tag{4.4}$$
for all closed intervals $I\subseteq\mathcal J(m)$, where the constant $\mu_6>0$ depends only on $c_1, r_\varepsilon, R_\varepsilon, \alpha_0, \beta_1'$, and $\lambda$ denotes the one-dimensional Lebesgue measure. We give the following lemma.

Lemma 4.3 Let $I=(i_1,i_2]$ be a left-open, non-void, bounded interval and $c>0$ some constant. Let $Q$ be some fixed probability measure on the Borel σ-field of $I$. Assume that $Q([a,b])\ge c\cdot\lambda([a,b])$ for all $[a,b]\subseteq I$. Then, for all nonnegative measurable functions $\varphi$ on $I$,
$$\int\varphi(x)\,dQ(x) \ge c\cdot\int\varphi(x)\,d\lambda(x).$$
If, in addition, $Q$ has a Lebesgue-Borel density $q$ on $I$, then $q(x)\ge c$ holds for Lebesgue-almost all $x\in I$.
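To see that Condition D can indeed be met, the short Python sketch below checks its inequalities for one concrete choice of our own (not taken from the paper): $R_\varepsilon=4$, as for the error density used in Section 5, $c_1=0.9$, $\alpha_1'=0.20$, $\alpha_1''=0.24$, $\beta_1'=0.10$ and $\beta_1''=0.15$. The function name `satisfies_condition_d` is hypothetical.

```python
def satisfies_condition_d(alpha0, a1_lo, a1_hi, b1_lo, b1_hi, r_eps, c1):
    """Check the inequalities of Condition D (with alpha_0' = alpha_0'' = alpha0).

    a1_lo, a1_hi, b1_lo, b1_hi stand for alpha_1', alpha_1'', beta_1', beta_1''.
    Also checks positivity of the constants, as required in (A2), and c1 < 1,
    as required in (A1).
    """
    return (
        alpha0 > 0
        and a1_lo > 0
        and b1_lo > 0
        and a1_hi > a1_lo
        and b1_hi > b1_lo
        and a1_hi + b1_hi * r_eps < c1 < 1
        and a1_hi - a1_lo <= r_eps * b1_lo / 8
    )
```

For this choice, $R_\varepsilon\beta_1'=0.4<1$, as used in (4.4), and every GARCH link $\alpha_0+\alpha_1x+\beta_1y$ with $\alpha_1\in(\alpha_1',\alpha_1'')$ and $\beta_1\in(\beta_1',\beta_1'')$ has $\alpha_1+\beta_1R_\varepsilon<0.84\le c_1$, in line with (A1).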
Combining Lemma 4.3 and (4.4), we conclude by Fubini's theorem that
$$\|m\|_2^2 \ge \int\Big[\int m^2(x,xt)\cdot 1_{\mathcal J(m)}(x)\,dP_{X_1}(x)\Big] f_\varepsilon(t)\,dt \ge \mu_6\cdot\int\Big[\int_{\mathcal J(m)} m^2(x,xt)\,dx\Big] f_\varepsilon(t)\,dt, \tag{4.5}$$
for any measurable function $m:[0,\infty)^2\to\mathbb R$ with $Em^2(X_1,Y_1)<\infty$. Note that the $\|\cdot\|_2$-norm is constructed with respect to the stationary distribution of $(X_1,Y_1)$ when $m$ is the true autoregression function; $P_{X_1}$ denotes the marginal distribution of $X_1$.

We define $m_G(x,y) = \alpha_0 + \alpha_1x + \beta_1y$ for $\alpha_1\in(\alpha_1',\alpha_1'')$ and $\beta_1\in(\beta_1',\beta_1'')$. Thus $m = m_G$ leads to a standard GARCH(1,1) time series. Since $(m_G)_x\equiv\alpha_1$ and $(m_G)_y\equiv\beta_1$, the function $m=m_G$ fulfills Condition A. Choosing $c_M$ sufficiently large with respect to any $\beta>2$, Condition B is satisfied as well, since all derivatives of $m_G$ of order $\ge2$ vanish. Hence $m_G$ lies in $\mathcal M$. By Proposition 2.1(b), the left endpoint $L_X$ of the distribution of $X_1$ under $m=m_G$ equals $L_X = \alpha_0/(1-\alpha_1)$. We introduce the points
$$\xi_{j,n} := \frac{\alpha_0}{1-\alpha_1} + \frac{R_\varepsilon\beta_1'\alpha_0}{16}\Big(1+\frac{j}{b_n}\Big), \qquad j=0,\dots,b_n,$$
for some sequence $(b_n)_n\uparrow\infty$ of even integers. Furthermore, we define the grid points
$$i_{j,k} := \Big(\tfrac12(\xi_{j,n}+\xi_{j+1,n}),\ (k+1/2)R_\varepsilon/(2b_n)\Big), \qquad j,k=0,\dots,b_n-1,$$
with components $i_{j,k,1}$ and $i_{j,k,2}$. The function $H(x,y) := \exp\big(\frac{1}{x^2-1} + \frac{1}{y^2-1}\big)$ for $\max\{|x|,|y|\}<1$ and $H(x,y):=0$ otherwise is supported on $[-1,1]^2$ and infinitely often differentiable on $\mathbb R^2$. We specify the following parameterized family of functions
$$\tilde m_\theta(x,y) := \tilde m_G(x,y) + c_Hb_n^{-\beta}\sum_{j,k=0}^{b_n-1}\theta_{j,k}\cdot H\big(64b_n(x-i_{j,k,1})/(R_\varepsilon\beta_1'\alpha_0),\ 8b_n(y-i_{j,k,2})/R_\varepsilon\big),$$
where $\theta=\{\theta_{j,k}\}_{j,k=0,\dots,b_n-1}$ denotes a binary matrix, $c_H>0$ is a constant viewed as sufficiently small compared to all other constants, and $\beta$ is as in Condition B. Accordingly, we define
$$m_\theta(x,y) := \tilde m_\theta(x,y/x). \tag{4.6}$$
This provides the family of competing regression functions which will be used to prove the minimax lower bound in the following theorem.
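The construction of the competing functions can be reproduced numerically. The Python sketch below is a minimal illustration under assumptions of our own: the helper names `bump` and `make_m_theta` are hypothetical, and the constants in the usage check are arbitrary illustrative values, not values from the paper. It evaluates $H$ and $\tilde m_\theta$ on the grid $i_{j,k}$ and converts to $m_\theta$ via (4.6); whether a given parameter choice satisfies Conditions A, B and D has to be checked separately.

```python
import numpy as np


def bump(x, y):
    """H(x, y) = exp(1/(x^2-1) + 1/(y^2-1)) if max(|x|, |y|) < 1, and 0 elsewhere."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    inside = (np.abs(x) < 1) & (np.abs(y) < 1)
    # Replace outside points by 0 first so that no division by zero occurs.
    xs = np.where(inside, x, 0.0)
    ys = np.where(inside, y, 0.0)
    return np.where(inside, np.exp(1.0 / (xs ** 2 - 1.0) + 1.0 / (ys ** 2 - 1.0)), 0.0)


def make_m_theta(theta, alpha0, alpha1, beta1, beta1_lo, r_eps, c_h, beta):
    """Build m_tilde_theta and m_theta(x, y) = m_tilde_theta(x, y / x) as in (4.6).

    theta is a binary (b_n x b_n) array; beta1_lo plays the role of beta_1'.
    Returns (m_tilde_theta, m_theta, centers_x, centers_y).
    """
    theta = np.asarray(theta)
    b_n = theta.shape[0]
    scale = r_eps * beta1_lo * alpha0                        # R_eps * beta_1' * alpha_0
    xi = alpha0 / (1 - alpha1) + scale / 16 * (1 + np.arange(b_n + 1) / b_n)
    centers_x = 0.5 * (xi[:-1] + xi[1:])                     # i_{j,k,1}, j = 0..b_n-1
    centers_y = (np.arange(b_n) + 0.5) * r_eps / (2 * b_n)   # i_{j,k,2}, k = 0..b_n-1

    def m_tilde_theta(x, e):
        value = alpha0 + alpha1 * x + beta1 * x * e          # m_tilde_G(x, e) = m_G(x, x e)
        for j in range(b_n):
            for k in range(b_n):
                if theta[j, k]:
                    value = value + c_h * b_n ** (-beta) * bump(
                        64 * b_n * (x - centers_x[j]) / scale,
                        8 * b_n * (e - centers_y[k]) / r_eps,
                    )
        return value

    def m_theta(x, y):
        return m_tilde_theta(x, y / x)

    return m_tilde_theta, m_theta, centers_x, centers_y
```

Because the bumps have half-widths of one quarter of the grid spacing in both directions, their supports are disjoint, so each $\theta_{j,k}$ switches exactly one local perturbation of height $c_Hb_n^{-\beta}e^{-2}$ on or off.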
Theorem 4.2 Consider the model (1.1) under Conditions A, B and D with $c_M$ sufficiently large. Furthermore, assume that $f_\varepsilon$ is continuously differentiable and that the Fisher information of the density $\bar f_\varepsilon$ of $\log\varepsilon_1$ satisfies
$$E\big|\bar f_\varepsilon'(\log\varepsilon_1)/\bar f_\varepsilon(\log\varepsilon_1)\big|^2 \le F_\varepsilon, \tag{4.7}$$
for some $F_\varepsilon<\infty$. Let $\{\hat m_n\}_n$ be an arbitrary estimator sequence, where $\hat m_n$ is based on the data $Y_1,\dots,Y_n$. Then
$$\liminf_{n\to\infty}\, n^{\beta/(\beta+1)}\sup_{m\in\mathcal M} E\|\hat m_n-m\|_2^2 > 0.$$

As $\bar f_\varepsilon(x) = f_\varepsilon(\exp(x))\cdot\exp(x)$, condition (4.7) holds for some finite $F_\varepsilon$ whenever
$$E\,\varepsilon_1^2\big|f_\varepsilon'(\varepsilon_1)/f_\varepsilon(\varepsilon_1)\big|^2 < \infty.$$
Thus, Theorem 4.2 ensures the optimality of the convergence rates established for our estimator in Theorem 4.1.

5 Implementation and numerical simulations

We consider the implementation of our estimator and the perspectives for its use in practice. The main difficulty is the selection of the approximation set $\mathcal G$. Nevertheless, we show that our method can be used beyond the framework of Conditions A–C, whose main purpose is to guarantee the optimal nonparametric convergence rates considered in the previous section. As mentioned in Subsection 3.2, we concentrate on a parametric type of $\mathcal G$ for the numerical simulation. Concretely, we consider the functions
$$m_{\alpha_0,\alpha_1,\beta_1,\beta_p}(x,y) = \alpha_0 + \beta_1x + \alpha_1y + \beta_p\big(\sin(2\pi x)+\cos(2\pi y)\big),$$
which represent the GARCH model with a trigonometric perturbation. Figures 1 and 2 show plots of these functions for the values $\alpha_0=0.2$, $\alpha_1=0.2$, $\beta_1=0.3$, $\beta_p=-0.03$ and $\alpha_0=0.3$, $\alpha_1=0.2$, $\beta_1=0.3$, $\beta_p=0$, respectively.
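As a worked check of the moment condition stated after (4.7) (our own computation, not part of the original text), take the error density $f_\varepsilon(x)=\frac34(1-x/4)^2\,1_{[0,4]}(x)$ used in the simulations of this section. On $(0,4)$,
$$f_\varepsilon'(x) = -\frac38\Big(1-\frac x4\Big), \qquad \frac{f_\varepsilon'(x)}{f_\varepsilon(x)} = -\frac{2}{4-x},$$
$$E\,\varepsilon_1^2\Big|\frac{f_\varepsilon'(\varepsilon_1)}{f_\varepsilon(\varepsilon_1)}\Big|^2 = \int_0^4 x^2\,\frac{4}{(4-x)^2}\cdot\frac34\cdot\frac{(4-x)^2}{16}\,dx = \frac{3}{16}\int_0^4 x^2\,dx = 4 < \infty.$$
So this density satisfies the moment condition; the remaining assumptions of Theorem 4.2, such as the continuous differentiability of $f_\varepsilon$, are not addressed by this computation. The substitution $x=4u$ also confirms $E\varepsilon_1 = 12\int_0^1 u(1-u)^2\,du = 1$.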
As large sample sizes are usually available in financial econometrics, we base our simulations on 5,000 observations $Y_1,\dots,Y_{5000}$. For our estimator we choose
$$\mathcal G = \big\{m_{\alpha_0,\alpha_1,\beta_1,\beta_p} : \alpha_0,\alpha_1,\beta_1\in\{0.1,0.15,\dots,0.3\},\ \beta_p\in\{-0.03,-0.02,\dots,0.03\}\big\},$$
under the true $m$ from Figure 1 as well as from Figure 2. Note that the existence of a stationary solution is ensured for each $g\in\mathcal G$ by the results of Bougerol (1993). We have executed 10 independent simulation runs for each setting. We select the parameters $K=5$ and $x_0=0$. Moreover, in our simulations the error variables $\varepsilon_j$ have the Lebesgue density
$$f_\varepsilon(x) = \frac34\Big(1-\frac x4\Big)^2 1_{[0,4]}(x).$$
The results of all simulations for $m$ as in Figure 1 and Figure 2 are given in Tables 1 and 2, respectively.

[Figure 1: trigonometric perturbation] [Figure 2: pure GARCH surface]

Table 1: results corresponding to Fig. 1 (true values $\alpha_0=0.2$, $\alpha_1=0.2$, $\beta_1=0.3$, $\beta_p=-0.03$)

| Run | $\alpha_0$ | $\alpha_1$ | $\beta_1$ | $\beta_p$ |
|---|---|---|---|---|
| 1 | 0.2000 | 0.2000 | 0.3000 | −0.0300 |
| 2 | 0.2000 | 0.2500 | 0.2500 | −0.0300 |
| 3 | 0.2000 | 0.2000 | 0.3000 | −0.0300 |
| 4 | 0.2000 | 0.2000 | 0.3000 | −0.0300 |
| 5 | 0.2000 | 0.2000 | 0.3000 | −0.0300 |
| 6 | 0.2000 | 0.2000 | 0.3000 | −0.0300 |
| 7 | 0.2000 | 0.2000 | 0.3000 | −0.0300 |
| 8 | 0.2000 | 0.2000 | 0.3000 | −0.0300 |
| 9 | 0.2000 | 0.2000 | 0.3000 | −0.0300 |
| 10 | 0.2000 | 0.2000 | 0.3000 | −0.0300 |

Table 2: results corresponding to Fig. 2 (true values $\alpha_0=0.3$, $\alpha_1=0.2$, $\beta_1=0.3$, $\beta_p=0$)

| Run | $\alpha_0$ | $\alpha_1$ | $\beta_1$ | $\beta_p$ |
|---|---|---|---|---|
| 1 | 0.3000 | 0.2000 | 0.3000 | 0 |
| 2 | 0.3000 | 0.2000 | 0.3000 | 0 |
| 3 | 0.3000 | 0.2500 | 0.2500 | 0.0100 |
| 4 | 0.3000 | 0.2000 | 0.3000 | 0 |
| 5 | 0.3000 | 0.2000 | 0.3000 | −0.0100 |
| 6 | 0.3000 | 0.2000 | 0.3000 | 0 |
| 7 | 0.3000 | 0.2000 | 0.3000 | 0.0100 |
| 8 | 0.3000 | 0.2000 | 0.3000 | 0 |
| 9 | 0.3000 | 0.2000 | 0.3000 | −0.0100 |
| 10 | 0.3000 | 0.2000 | 0.3000 | −0.0100 |

We observe that our procedure performs well for the considered estimation problem. However, refining the equidistant grid from step width 0.05 to e.g. 0.01 will considerably increase the computational effort and also deteriorate the estimation results, due to the increased variance of the estimator under an enlarged $\mathcal G$.
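A minimal Python sketch of this simulation study follows, assuming NumPy. It is not the authors' MATLAB code, which is only available on request: data are generated from (1.1) with the link $m_{\alpha_0,\alpha_1,\beta_1,\beta_p}$ and the error density $f_\varepsilon$ above (sampled by inverting $F(x)=1-(1-x/4)^3$), and $\hat m$ is the minimizer of $\Phi_n$ over the grid $\mathcal G$ with $K=5$ and $x_0=0$. The function names, the burn-in length and the random seed are our own choices, so the output is not expected to reproduce Tables 1 and 2 exactly.

```python
import itertools

import numpy as np


def link(a0, a1, b1, bp):
    """m_{a0,a1,b1,bp}(x, y) = a0 + b1*x + a1*y + bp*(sin(2 pi x) + cos(2 pi y))."""
    return lambda x, y: a0 + b1 * x + a1 * y + bp * (np.sin(2 * np.pi * x) + np.cos(2 * np.pi * y))


def simulate_y(m, n, burn_in=500, seed=0):
    """Observations Y_1..Y_n from (1.1) with eps ~ 3/4 (1 - x/4)^2 on [0, 4]."""
    rng = np.random.default_rng(seed)
    eps = 4.0 * (1.0 - (1.0 - rng.uniform(size=burn_in + n)) ** (1.0 / 3.0))
    x, ys = 0.0, np.empty(burn_in + n)
    for t in range(burn_in + n):
        ys[t] = x * eps[t]      # Y_t = X_t * eps_t
        x = m(x, ys[t])         # X_{t+1} = m(X_t, Y_t)
    return ys[burn_in:]


def contrast(g, y, K, x0=0.0):
    """Phi_n(g): mean over j = K..n-2 of the two squared one-step residuals."""
    n = y.size
    starts = np.arange(n - K - 1)
    windows = y[starts[:, None] + np.arange(K + 1)]   # Y_{j-K+1}, ..., Y_{j+1}
    state = np.full(starts.size, x0)
    for i in range(K):
        state = g(state, windows[:, i])               # g^{[K]}(x0, Y_{j-K+1}, ..., Y_j)
    state_next = g(state, windows[:, K])              # g^{[K+1]}(x0, ..., Y_{j+1})
    r1 = y[starts + K] - state                        # Y_{j+1} - g^{[K]}
    r2 = y[starts + K + 1] - state_next               # Y_{j+2} - g^{[K+1]}
    return np.mean(r1 ** 2 + r2 ** 2)


def grid_estimate(y, K=5, x0=0.0, coef_grid=None, bp_grid=None):
    """Minimize Phi_n over the parametric grid G; returns (parameters, Phi_n value)."""
    if coef_grid is None:
        coef_grid = np.round(np.arange(0.1, 0.3 + 1e-9, 0.05), 2)    # 0.1, 0.15, ..., 0.3
    if bp_grid is None:
        bp_grid = np.round(np.arange(-0.03, 0.03 + 1e-9, 0.01), 2)   # -0.03, ..., 0.03
    best, best_val = None, np.inf
    for a0, a1, b1, bp in itertools.product(coef_grid, coef_grid, coef_grid, bp_grid):
        val = contrast(link(a0, a1, b1, bp), y, K, x0)
        if val < best_val:
            best, best_val = (a0, a1, b1, bp), val
    return best, best_val
```

For instance, `grid_estimate(simulate_y(link(0.2, 0.2, 0.3, -0.03), n=5000))` runs the Figure 1 setting once. Note that with $R_\varepsilon=4$ the GARCH part of both true links already gives $\beta_1+\alpha_1R_\varepsilon=0.3+0.8=1.1>1$, so these settings lie outside Condition (A1), consistent with the remark above that the method is used beyond Conditions A–C.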
We note critically that the appropriate selection of $\mathcal G$ remains a challenging problem in practice, as a curse of dimensionality has to be avoided when many coefficients are involved. The MATLAB code is available from the authors upon request.

6 Proofs

Proof of Proposition 2.1: (a) Inspired by the proof of Bougerol (1993), we introduce random variables $\varepsilon_n$ with integer $n<0$ such that $\varepsilon_n$, $n\in\mathbb Z$, are i.i.d. random variables on a joint probability space $(\Omega,\mathcal A,P)$. Furthermore, we define the random variables $Z_{t,0} := 0$ for all integer $t\ge0$ and, recursively, $Z_{t,s+1} := \tilde m(Z_{t,s},\varepsilon_{s-t})$. We deduce that
$$|Z_{t+1,s+1} - Z_{t,s}| = \big|\tilde m(Z_{t+1,s},\varepsilon_{s-t-1}) - \tilde m(Z_{t,s-1},\varepsilon_{s-t-1})\big| \le \|\tilde m_x(\cdot,\varepsilon_{s-t-1})\|_\infty\,|Z_{t+1,s} - Z_{t,s-1}|$$
for any $t\ge0$, $s\ge1$, by the mean value theorem. Repeated application of this inequality yields
$$|Z_{t+1,s+1} - Z_{t,s}| \le \prod_{j=0}^{s-1}\|\tilde m_x(\cdot,\varepsilon_{j-t})\|_\infty\cdot\alpha_0''.$$
Since $\tilde m_x(x,e) = m_x(x,xe) + e\,m_y(x,xe)$, the random variables $\|\tilde m_x(\cdot,\varepsilon_{j-t})\|_\infty$, $j=0,\dots,s-1$, are bounded from above by $\|m_x\|_\infty + \|m_y\|_\infty R_\varepsilon$ almost surely, and we obtain
$$\operatorname{ess\,sup}|Z_{t+1,s+1} - Z_{t,s}| \le \big(\|m_x\|_\infty + \|m_y\|_\infty R_\varepsilon\big)^s\alpha_0'' \le c_1^s\alpha_0''. \tag{6.1}$$
As a side result of this inequality we derive by induction that all random variables $Z_{t+n,n}$, $t,n\ge0$, lie in $L_\infty(\Omega,\mathcal A,P)$, i.e. the Banach space of all random variables $X$ on $(\Omega,\mathcal A,P)$ with $\operatorname{ess\,sup}|X|<\infty$. Moreover, Condition (A1) and the nonnegativity of the $\varepsilon_n$ provide, again by induction, that $Z_{t+n,n}\ge0$ almost surely for all $t,n\ge0$. Since $\|m_x\|_\infty + \|m_y\|_\infty R_\varepsilon\le c_1<1$ and (6.1) holds true, we easily conclude by the triangle inequality that $(Z_{t+n,n})_{n\ge0}$ are Cauchy sequences in $L_\infty(\Omega,\mathcal A,P)$ for all integer $t\ge0$. By the geometric summation formula, we also deduce that
$$\operatorname{ess\,sup} Z_{t+n,n} \le \alpha_0''/(1-c_1) \tag{6.2}$$
for all $t,n\ge0$. The completeness of $L_\infty(\Omega,\mathcal A,P)$ implies convergence of each $(Z_{t+n,n})_{n\ge0}$ to some $Z_t^*\in L_\infty(\Omega,\mathcal A,P)$ with respect to the essential supremum norm.
The nonnegative random variables on $(\Omega,\mathcal{A},P)$ that are bounded from above by $\alpha_0''/(1-c_1)$ almost surely form a closed subset of $L^\infty(\Omega,\mathcal{A},P)$, as $L^\infty$-convergence implies convergence in distribution. Hence (6.2) carries over to the limit variables, so that $Z_t^* \in [0, \alpha_0''/(1-c_1)]$ almost surely for all $t \ge 0$. Since $L^\infty$-convergence also implies convergence in probability, the inequality (6.1) yields
$$P\big(|Z_{t+n,n} - Z_t^*| > \delta\big) \le \limsup_{m\to\infty} P\big(|Z_{t+n,n} - Z_{t+m,m}| > \delta/2\big) \le 2\delta^{-1}\sum_{m=n}^{\infty} \operatorname{ess\,sup}|Z_{t+m,m} - Z_{t+m+1,m+1}| \le 2\delta^{-1}\alpha_0''\, c_1^n/(1-c_1)$$
by Markov's inequality for any $\delta > 0$, so that the sum of these probabilities over all $n \in \mathbb{N}$ is finite. Hence, by the Borel–Cantelli lemma, $(Z_{t+n,n})_n$ converges to $Z_t^*$ almost surely as well. By the recursive definition of the $Z_{t,s}$ and the common initial values $Z_{t,0} = 0$, for any fixed $n$ the random variables $Z_{t+n,n}$, $t \ge 0$, are identically distributed. Consequently, all limit variables $Z_t^*$ have the same distribution. As $\tilde m$ is continuous and $Z_{t+n+1,n+1} = \tilde m(Z_{t+n+1,n}, \varepsilon_{-t-1})$, the established almost sure convergence implies
$$Z_t^* = \tilde m(Z_{t+1}^*, \varepsilon_{-t-1}) = m(Z_{t+1}^*, Z_{t+1}^*\varepsilon_{-t-1})$$
almost surely for all $t \ge 0$. The variable $Z_{t+1+n,n}$ is measurable with respect to the σ-algebra generated by $Z_{t+1+n,0}, \varepsilon_{-t-2}, \varepsilon_{-t-3},\dots,\varepsilon_{-t-n-1}$. Hence, an appropriate version of the almost sure limit $Z_{t+1}^*$ is measurable with respect to the σ-algebra generated by all $\varepsilon_s$, $s \le -t-2$, and is therefore independent of $\varepsilon_{-t-1}$. Putting $X_0 := Z_0^*$, we conclude that the induced sequence $(X_n)_n$, defined by the recursive relation (1.1), is strictly stationary and causal: since $X_0, \varepsilon_0, \varepsilon_1,\dots$ are independent, all marginal distributions of $(X_n)_{n-s\in T}$, for any finite $T \subseteq \mathbb{N}_0$, do not depend on the integer shift $s \ge 0$. Also, all $X_n$ have the same distribution as the $Z_t^*$, so that $X_n \in [0, \alpha_0''/(1-c_1)]$ almost surely.
Uniqueness of the stationary distribution can be seen as follows. In the notation (3.1) we have $Z_{t+n,n} = \tilde m^{[n]}(0, \varepsilon_{-n-t},\dots,\varepsilon_{-1-t})$ for all $t$ and $n$. Now let $(X_n)_n$ be a strictly stationary and causal time series where $X_1$ is independent of all $\varepsilon_s$, $s \in \mathbb{Z}$. Again, by the mean value theorem and induction,
$$\big|Z_{t+n,n} - \tilde m^{[n]}(X_1, \varepsilon_{-n-t},\dots,\varepsilon_{-1-t})\big| \le \alpha_0''\, c_1^n,$$
so that the stochastic process $\big(\tilde m^{[n]}(X_1, \varepsilon_{-n-t},\dots,\varepsilon_{-1-t})\big)_n$ converges to $Z_t^*$ almost surely and, hence, in distribution. As we assume that $(X_n)_n$ is a strictly stationary and causal solution of (1.1), all the random variables $\tilde m^{[n]}(X_1, \varepsilon_{-n-t},\dots,\varepsilon_{-1-t})$ have the same distribution as $X_1$. Therefore the distributions of $X_1$ and $Z_t^*$ coincide, so that the distribution of $Z_t^*$ is indeed the unique strictly stationary distribution when $m$ is the autoregression function and $f_\varepsilon$ is the error density.

(b) Since $\{X_1 \ge L_X\} = \bigcap_{n\in\mathbb{N}}\{X_1 \ge L_X - 1/n\}$, we have $X_1 \ge L_X$ almost surely. The monotonicity constraints in Condition (A3) imply that $X_2 = \tilde m(X_1,\varepsilon_1) \ge \tilde m(L_X, 0) = m(L_X, 0)$ a.s. The strict stationarity of $(X_n)_n$ provides that $X_1 \ge m(L_X, 0)$ a.s. as well, and by the definition of $L_X$ it follows that $L_X \ge m(L_X, 0)$. Now assume that $L_X > m(L_X, 0) = \tilde m(L_X, 0)$. As $\tilde m$ is continuous, there exists some $\rho > 0$ with $L_X > \tilde m(L_X + \rho, \rho)$. Thus,
$$P[X_1 \le L_X + \rho,\ \varepsilon_1 \le \rho] \le P\big[X_2 = \tilde m(X_1,\varepsilon_1) \le \tilde m(L_X+\rho, \rho)\big] \le P[X_1 < L_X] = 0$$
due to Condition (A3), the strict stationarity and the definition of $L_X$. On the other hand, by the independence of $X_1$ and $\varepsilon_1$, this probability equals $P[X_1 \le L_X + \rho]\cdot P[\varepsilon_1 \le \rho]$, which is strictly positive by Condition (A4) and the definition of $L_X$; a contradiction. We conclude that $L_X = m(L_X, 0)$. If another solution $x \ge 0$ of $x = m(x,0)$ existed, we would have
$$|x - L_X| = |m(x,0) - m(L_X,0)| \le \|m_x\|_\infty\,|x - L_X| \le c_1|x - L_X|,$$
which is a contradiction since $c_1 < 1$.

(c) The constraints in Conditions (A1) and (A2) give us that $L_X \ge \alpha_0' > 0$.
Writing $F_X$ for the distribution function of $X_1$, we deduce for any $x > L_X$ that
$$F_X(x) = P[\tilde m(X_1,\varepsilon_1) \le x] = \int_{t\ge L_X} P[\tilde m(t,\varepsilon_1) \le x]\, dF_X(t), \tag{6.3}$$
where we exploit the independence of $X_1$ and $\varepsilon_1$. Let us consider the probability $P[\tilde m(t,\varepsilon_1) \le x]$ for any fixed $t \ge L_X$. We have
$$P[\tilde m(t,\varepsilon_1) \le x] = P[\tilde m(t,\varepsilon_1) - \tilde m(t,0) \le x - m(t,0)] \ge P\big[\|m_y\|_\infty\, t\varepsilon_1 \le x - m(t,0)\big] \ge P\big[\varepsilon_1 \le (x - m(t,0))/(c_1 t)\big]$$
by the mean value theorem and Condition (A1), which provides via $R_\varepsilon \ge E\varepsilon_1 = 1$ that $1 > c_1 \ge \|m_y\|_\infty R_\varepsilon \ge \|m_y\|_\infty$. The mean value theorem also yields
$$x - m(t,0) = x - L_X + m(L_X,0) - m(t,0) \ge x - L_X - \|m_x\|_\infty(t - L_X) \ge x - L_X - c_1(t - L_X), \tag{6.4}$$
so that
$$P[\tilde m(t,\varepsilon_1) \le x] \ge F_\varepsilon\big((x - L_X - c_1(t - L_X))/(c_1 t)\big),$$
where $F_\varepsilon$ denotes the distribution function of $\varepsilon_1$. Moreover, if $t - L_X \le c_1^{-1}(x - L_X)$, then $x \ge m(t,0)$ and $c_1 t \le x$. Writing $G_X(y) := F_X(y + L_X)$, $y \in \mathbb{R}$, and inserting these results into (6.3), we obtain
$$G_X(x - L_X) \ge \int 1_{[0,\,c_1^{-1}(x - L_X)]}(s)\, F_\varepsilon\big((x - L_X - c_1 s)/x\big)\, dG_X(s).$$
We define $c_1^* := (1+c_1)/2$ and conclude that
$$G_X(y) \ge \int 1_{[0,\,y/c_1^*]}(s)\, F_\varepsilon\big(y(1 - c_1/c_1^*)/(y + L_X)\big)\, dG_X(s) \ge F_\varepsilon\Big(\frac{1-c_1}{1+c_1}\cdot\frac{y}{y + L_X}\Big)\cdot G_X(y/c_1^*)$$
for all $y > 0$. Iterating this inequality, we obtain by induction that
$$G_X(x - L_X) \ge G_X\big((x - L_X)/(c_1^*)^n\big)\prod_{j=0}^{n-1} F_\varepsilon\Big(\frac{1-c_1}{1+c_1}\cdot\frac{x - L_X}{(c_1^*)^j L_X + x - L_X}\Big) \ge G_X\big((x - L_X)/(c_1^*)^n\big)\cdot F_\varepsilon^n\Big(\frac{1-c_1}{1+c_1}\cdot\frac{x - L_X}{x_1}\Big)$$
for all integer $n \ge 2$ and $x \in (L_X, x_1)$, where we put $x_1 := 2\alpha_0''/(1-c_1)$. By the upper bound on $R_X$ in part (a), we derive that $G_X(x_1 - L_X) = F_X(x_1) = 1$, which also shows that $x_1 > L_X$. Thus, for all integer $n \ge -\log\big((x_1 - L_X)/(x - L_X)\big)/\log c_1^*$ and $x \in (L_X, x_1)$, we have
$$G_X(x - L_X) \ge F_\varepsilon^n\Big(\frac{1-c_1}{1+c_1}\cdot\frac{x - L_X}{x_1}\Big).$$
Specifying $n := \big\lceil -\log\big((x_1 - L_X)/(x - L_X)\big)/\log c_1^* \big\rceil$, we obtain
$$G_X(x - L_X) \ge \exp\Big\{-\Big(\frac{\log(x_1 - L_X) - \log(x - L_X)}{\log c_1^*} - 1\Big)\cdot\log F_\varepsilon\Big(\frac{1-c_1}{1+c_1}\cdot\frac{x - L_X}{x_1}\Big)\Big\}.$$
The lower bound imposed on $f_\varepsilon$ in Condition (A4) implies that $F_\varepsilon(t) \ge r_\varepsilon t$ for all $t \in [0, R_\varepsilon/2]$, so that elementary calculation and bounding yield
$$F_X(x) \ge \mu_5\,(x - L_X)^{\mu_3}\exp\big(\mu_4\log^2(x - L_X)\big), \qquad \forall x \in \Big(L_X,\ \min\Big\{x_1,\ L_X + \frac{(1+c_1)R_\varepsilon x_1}{2 - 2c_1}\Big\}\Big), \tag{6.5}$$
where $\mu_3, \mu_4, \mu_5$ are real-valued constants which only depend on $c_1, r_\varepsilon, c_\varepsilon, \alpha_0', \alpha_0''$. In particular, $\mu_4 = 1/\log c_1^* < 0$ and $\mu_3, \mu_5 > 0$.

Now we fix some arbitrary $z > L_X$ and $\delta > 0$. Using the arguments from above (see (6.3)), we deduce that
$$P\big[|X_1 - z| \le \delta\big] \ge \int_{t=L_X}^{x_2} P\big[|\tilde m(t,\varepsilon_1) - z| \le \delta\big]\, dF_X(t) \tag{6.6}$$
for some $x_2 \ge L_X$ which remains to be specified. We learn from Conditions (A2) and (A3) that the continuous, monotonically increasing functions $s \mapsto \tilde m(t,s)$, $s \in [0,\infty)$, tend to $+\infty$ as $s \to +\infty$ and therefore range from $m(t,0)$ to $+\infty$. We focus on the case $z \ge m(t,0)$. Then there exists some $s(t,z) \ge 0$ such that $\tilde m(t, s(t,z)) = z$. Since $\|m_y\|_\infty \le c_1/R_\varepsilon < 1/R_\varepsilon$, the mean value theorem provides $|\tilde m(t,s) - z| \le \delta$ for all $s \ge 0$ with $|s - s(t,z)| \le R_\varepsilon\delta/x_2$. We conclude that
$$P\big[|\tilde m(t,\varepsilon_1) - z| \le \delta\big] \ge P\big[|\varepsilon_1 - s(t,z)| \le R_\varepsilon\delta/x_2\big] \ge R_\varepsilon\delta x_2^{-1} f_\varepsilon\big(s(t,z) + R_\varepsilon\delta/x_2\big)$$
by Condition (A4). The lower bound on $m$ in Condition (A2) yields $s(t,z) \le (z - \alpha_0' - \alpha_1' t)/(\beta_1' t)$; note that $z \ge m(t,0) \ge \alpha_0' + \alpha_1' t$. We obtain
$$P\big[|\tilde m(t,\varepsilon_1) - z| \le \delta\big] \ge R_\varepsilon\delta x_2^{-1} f_\varepsilon\Big(\frac{z - \alpha_0'}{\beta_1' L_X} - \frac{\alpha_1'}{\beta_1'} + R_\varepsilon\delta x_2^{-1}\Big).$$
Inserting this lower bound, which does not depend on $t$, into (6.6) for all $t \in [L_X, x_2]$ with $m(t,0) \le z$, we deduce that
$$P\big[|X_1 - z| \le \delta\big] \ge P\big[X_1 \le x_2,\ m(X_1,0) \le z\big]\cdot R_\varepsilon\delta x_2^{-1} f_\varepsilon\Big(\frac{z - \alpha_0'}{\beta_1'\alpha_0'} + R_\varepsilon\delta/\alpha_0'\Big) \ge F_X(x_2)\cdot 1_{[0,z]}\big(m(x_2,0)\big)\cdot R_\varepsilon\delta x_2^{-1} f_\varepsilon\Big(\frac{z - L_X}{\beta_1'\alpha_0'} - \frac{\alpha_1'' - \alpha_1'}{\beta_1'} + R_\varepsilon\delta/\alpha_0'\Big).$$
Here we have used that $x_2 \ge L_X \ge \alpha_0'$ and that $L_X = m(L_X, 0) \le \alpha_0'' + \alpha_1'' L_X$ by part (b) of the proposition, which leads to $L_X \le \alpha_0''/(1 - \alpha_1'')$.
Using the arguments from (6.4) with $t$ replaced by $x_2$ and $x$ replaced by $z$, we derive that the choice $x_2 = L_X + (z - L_X)/\dot c_1$ implies $m(x_2, 0) \le z$ for any $\dot c_1 > \max\{1, \beta_1'/4(1 + R_\varepsilon(1 - c_1))\}$, which finally leads to
$$P\big[|X_1 - z| \le \delta\big] \ge z^{-1}\delta\,\dot c_1^{-\mu_3}\mu_5\exp\big(\mu_4\log^2\dot c_1\big)\,(z - L_X)^{\mu_3 - 2\mu_4\log\dot c_1}\exp\big(\mu_4\log^2(z - L_X)\big)\cdot f_\varepsilon\Big(\frac{z - L_X}{\beta_1'\alpha_0'} - \frac{\alpha_1'' - \alpha_1'}{\beta_1'} + R_\varepsilon\delta/\alpha_0'\Big)$$
when combining these findings with (6.5) and $x_2 \le z$. The assumptions on $z$ and $\delta$ along with (2.1) yield
$$\frac{z - L_X}{\beta_1'\alpha_0'} - \frac{\alpha_1'' - \alpha_1'}{\beta_1'} + R_\varepsilon\delta/\alpha_0' < R_\varepsilon/2,$$
so that Condition (A4) completes the proof of the proposition.

Proof of Lemma 3.1: From the recursive definition of the $f^{[k]}$ we learn that
$$\begin{aligned} &\big|f^{[k]}(X_{j-k+1}, Y_{j-k+1},\dots,Y_j) - f^{[k]}(x_0, Y_{j-k+1},\dots,Y_j)\big| \\ &= \big|f\big(f^{[k-1]}(X_{j-k+1}, Y_{j-k+1},\dots,Y_{j-1}), Y_j\big) - f\big(f^{[k-1]}(x_0, Y_{j-k+1},\dots,Y_{j-1}), Y_j\big)\big| \\ &\le \|f_x\|_\infty\,\big|f^{[k-1]}(X_{j-k+1}, Y_{j-k+1},\dots,Y_{j-1}) - f^{[k-1]}(x_0, Y_{j-k+1},\dots,Y_{j-1})\big| \end{aligned}$$
by the mean value theorem. Repeated use of this inequality (reducing $j$ and $k$ by 1 in each step) proves the claim of the lemma.

Proof of Lemma 3.2: We define $M_0$ as the set of the restrictions of $m$ to the domain $I := [0, R''] \times [0, R''R_\varepsilon]$ for all $m \in M$. We learn from Theorem 2.7.1, p. 155 in van der Vaart and Wellner (1996) that the κ-covering number $N(\kappa)$ of $M_0$ with respect to the uniform metric, i.e. the minimal number of closed balls in $C_0(I)$ with radius κ which cover $M_0$, is bounded from above by $\exp(c_N\,\kappa^{-2/\beta})$ for all $\kappa > 0$, where the finite constant $c_N$ only depends on $R''$, $R_\varepsilon$, $c_M$ and β. Related results on covering numbers can be found in the book of van de Geer (2000). Here $C_0(I)$ denotes the Banach space of all continuous functions on $I$, equipped with the supremum norm. If, in addition, we require the centers of the covering balls in the definition of $N(\kappa)$ to lie in $M_0$, we call the minimal number of such balls the intrinsic covering number $N(M_0, \kappa)$.
Now assume that $M_1$ is a κ/2-cover of $M_0$ with $\#M_1 = N(\kappa/2)$. Then, for any $m_1 \in M_1$, there exists some $m_0 \in M_0$ such that $\|m_1 - m_0\|_\infty \le \kappa/2$; otherwise $M_1\setminus\{m_1\}$ would be a κ/2-cover of $M_0$ with cardinality $N(\kappa/2) - 1$. Therefore we can define a mapping Ψ from the finite set $M_1$ to $M_0$ which maps $m_1 \in M_1$ to some fixed $m_0 \in M_0$ with $\|m_1 - m_0\|_\infty \le \kappa/2$. By the triangle inequality, $\{\Psi(m_1) : m_1 \in M_1\}$ is an intrinsic κ-cover of $M_0$, so that $N(M_0, \kappa) \le \exp\big(c_N 4^{1/\beta}\kappa^{-2/\beta}\big)$.

Now we select the set $G$ such that $G_0 := \{g|_I : g \in G\}$ is an intrinsic $\kappa_n$-cover of $M_0$ with $\#G \le \exp\big(c_N 4^{1/\beta}\kappa_n^{-2/\beta}\big)$. Note that $\|g_x\|_\infty \le c_1$ follows from Condition A for all $g \in G \subseteq M$. When the constant $c_G$ is chosen sufficiently large with respect to $R_\varepsilon, R'', c_1, \alpha_0'', \alpha_1'', \beta_1''$, the other constraints contained in Condition B are also fulfilled. When the sequence $(\kappa_n)_n$ is chosen such that $\kappa_n \asymp n^{-\beta/(2\beta+2)}$, Condition C is satisfied since
$$\|f\|_2^2 \le \sup_{(x,y)\in I} f^2(x,y)$$
for all measurable functions $f : [0,\infty)^2 \to \mathbb{R}$ with $Ef^2(X_1,Y_1) < \infty$.

Proof of Lemma 4.1: We deduce by (3.2) that
$$\begin{aligned} \big(E|X_{j+1} - g^{[j]}(X_1,Y_1,\dots,Y_j)|^2\big)^{1/2} &\le \big(E|m(X_j,Y_j) - g(X_j,Y_j)|^2\big)^{1/2} + \big(E|g(X_j,Y_j) - g(g^{[j-1]}(X_1,Y_1,\dots,Y_{j-1}), Y_j)|^2\big)^{1/2} \\ &\le \|m - g\|_2 + \|g_x\|_\infty\big(E|X_j - g^{[j-1]}(X_1,Y_1,\dots,Y_{j-1})|^2\big)^{1/2} \end{aligned}$$
by the strict stationarity of the sequences $(X_n)_n$ and $(Y_n)_n$. Iterating this inequality, replacing $j$ by $j-1$ in each step, we conclude that
$$\big(E|X_{j+1} - g^{[j]}(X_1,Y_1,\dots,Y_j)|^2\big)^{1/2} \le \|m - g\|_2\sum_{k=0}^{j-1}\|g_x\|_\infty^k \le \|m - g\|_2/(1 - \|g_x\|_\infty)$$
for all integer $j \ge 1$. Moreover, we obtain
$$\begin{aligned} \big(E|X_{j+1} - G_j(g)|^2\big)^{1/2} &\le \|m - g\|_2/(1 - \|g_x\|_\infty) + \big(E|g^{[j]}(X_1,Y_1,\dots,Y_j) - g^{[j]}(x_0,Y_1,\dots,Y_j)|^2\big)^{1/2} \\ &\le \|m - g\|_2/(1 - \|g_x\|_\infty) + \|g_x\|_\infty^j\big(E|X_1 - x_0|^2\big)^{1/2}, \end{aligned}$$
so that the proposed upper bound on $\vartheta(g)$ has been established.
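Lemma 3.1 and the upper bound of Lemma 4.1 rest on the iterated map $f^{[k]}$ and on the predictor $g^{[j]}(x_0, Y_1,\dots,Y_j)$ (which plays the role of $G_j(g)$ in the proof), started at a deterministic value $x_0$ instead of the unobserved $X_1$. A small Python sketch, assuming a hypothetical autoregression function with $\|m_x\|_\infty \le 0.32$ as a stand-in for $m$: in the correctly specified case $g = m$, the exact identity $m^{[j]}(X_1, Y_1,\dots,Y_j) = X_{j+1}$ and Lemma 3.1 give $|X_{j+1} - m^{[j]}(x_0, Y_1,\dots,Y_j)| \le \|m_x\|_\infty^j\,|X_1 - x_0|$, which the loop checks along one simulated path.

```python
import math
import random


def iterate_map(f, x_start, ys):
    """Return f^[k](x_start, y_1, ..., y_k) by applying x <- f(x, y) for each y in ys."""
    x = x_start
    for y in ys:
        x = f(x, y)
    return x


def m(x, y):
    # Hypothetical autoregression function with sup|m_x| <= 0.32 (illustration only).
    return 0.2 + 0.3 * x + 0.1 * y + 0.02 * math.sin(x)


MX_SUP = 0.32


def simulate(n, x1, seed):
    """Return X_1..X_{n+1} and Y_1..Y_n with Y_j = X_j * eps_j and X_{j+1} = m(X_j, Y_j)."""
    rng = random.Random(seed)
    xs, ys = [x1], []
    for _ in range(n):
        # error with density (3/4)(1 - x/4)^2 on [0, 4], drawn by inverse-CDF sampling
        eps = 4.0 * (1.0 - (1.0 - rng.random()) ** (1.0 / 3.0))
        ys.append(xs[-1] * eps)
        xs.append(m(xs[-1], ys[-1]))
    return xs, ys


xs, ys = simulate(30, x1=0.5, seed=7)
x0 = 0.0  # deterministic start value of the predictor
for j in range(1, 31):
    pred = iterate_map(m, x0, ys[:j])  # predicts X_{j+1}, stored in xs[j]
    # Lemma 3.1: the effect of the wrong start value decays like MX_SUP**j.
    assert abs(xs[j] - pred) <= MX_SUP ** j * abs(xs[0] - x0) + 1e-12
```

For a misspecified $g \ne m$, the same recursion also accumulates the term $\|m - g\|_2/(1 - \|g_x\|_\infty)$ from Lemma 4.1, which this sketch does not simulate.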
In order to prove the lower bound, we consider that
$$\begin{aligned} &|X_{K+1} - G_K(g)|^2 + |m(X_{K+1},Y_{K+1}) - g(G_K(g),Y_{K+1})|^2 \\ &\ge |X_{K+1} - G_K(g)|^2 + |m(X_{K+1},Y_{K+1}) - g(X_{K+1},Y_{K+1})|^2 - 2|g_x(\xi_K,Y_{K+1})|\cdot|X_{K+1} - G_K(g)|\cdot|m(X_{K+1},Y_{K+1}) - g(X_{K+1},Y_{K+1})| \\ &\qquad + |g_x(\xi_K,Y_{K+1})|^2\cdot|X_{K+1} - G_K(g)|^2 \\ &\ge \min_{\lambda\in[0,1]}\Big\{|X_{K+1} - G_K(g)|^2 + |m(X_{K+1},Y_{K+1}) - g(X_{K+1},Y_{K+1})|^2 - 2\lambda|X_{K+1} - G_K(g)|\cdot|m(X_{K+1},Y_{K+1}) - g(X_{K+1},Y_{K+1})| + \lambda^2|X_{K+1} - G_K(g)|^2\Big\} \end{aligned}$$
by the mean value theorem, where $\xi_K$ denotes some value between $X_{K+1}$ and $G_K(g)$ (recall that $\|g_x\|_\infty < 1$). To determine this minimum, only the values $\lambda \in \{0, 1, |m(X_{K+1},Y_{K+1}) - g(X_{K+1},Y_{K+1})|/|X_{K+1} - G_K(g)|\}$ have to be considered, where the last point is relevant only if $|m(X_{K+1},Y_{K+1}) - g(X_{K+1},Y_{K+1})| \le |X_{K+1} - G_K(g)|$. In all of these cases, the above term is bounded from below by $|m(X_{K+1},Y_{K+1}) - g(X_{K+1},Y_{K+1})|^2/2$. Taking the expectation of these random variables yields the desired result.

Proof of Theorem 4.1: By Condition C we have $\|g\|_2^2 \le c_G^2$ for all $g \in G$. As $\hat m \in G$ by the definition of $\hat m$, we have $\|\hat m\|_2^2 \le c_G^2$ almost surely. Condition B yields $\|m\|_2^2 \le c_M^2$ for the true regression function $m$, since $|m|$ is bounded by $c_M$ on the entire support of $(X_1,Y_1)$. Therefore, $\|\hat m - m\|_2 \le c_M + c_G$ almost surely and
$$E\|\hat m - m\|_2^2 = \int_{0}^{\infty} P\big(\|\hat m - m\|_2^2 > \delta\big)\,d\delta \le \delta_n + \int_{\delta_n}^{(c_M+c_G)^2} P\big(\|\hat m - m\|_2^2 > \delta\big)\,d\delta, \tag{6.7}$$
where $\delta_n := c_\delta\cdot n^{-\beta/(\beta+1)}$ for some constant $c_\delta > 1$ still to be chosen. Clearly, $0 < \delta_n < (c_M + c_G)^2$ for $n$ sufficiently large. By the definition of $\hat m$, $\Phi_n(\hat m) \le \Phi_n(g_0)$ holds almost surely. Writing $\Delta_n(g) := \Phi_n(g) - E\Phi_n(g)$, equation (4.2) provides that $\|\hat m - m\|_2^2 > \delta$ implies the existence of some $g \in G$ with $\|m - g\|_2^2 > \delta$ such that
$$\Delta_n(g) - \Delta_n(g_0) \le -\tfrac14\|m - g\|_2^2 - \tfrac14\delta + \frac{4}{(1-c_1)^2}\|m - g_0\|_2^2 + R_K.$$
Condition C yields $\sup_{m\in M}\|m - g_0\|_2^2 = O\big(n^{-\beta/(1+\beta)}\big)$. Therefore $c_\delta$ can be selected sufficiently large (uniformly for all $m \in M$) such that
$$|\Delta_n(g) - \Delta_n(g_0)| \ge \tfrac14\|m - g\|_2^2$$
for all $\delta > \delta_n$. Note that, by (4.3) and the imposed choice of $K$, the term $R_K$ is asymptotically negligible uniformly with respect to $m$. Hence, we have shown that
$$P\big(\|\hat m - m\|_2^2 > \delta\big) \le P\big(\exists g \in G : \|m - g\|_2^2 > \delta,\ |\Delta_n(g) - \Delta_n(g_0)| \ge \tfrac14\|m - g\|_2^2\big) \le P\big(\exists g \in G : |\Delta_n(g) - \Delta_n(g_0)| \ge \tfrac14\max\{\delta, \|m - g\|_2^2\}\big)$$
holds true for all $\delta \in (\delta_n, (c_G + c_M)^2)$ and for all $n > N$, with some $N$ which does not depend on $m$ but only on the function class $M$. By the representation
$$\Delta_n(g) - \Delta_n(g_0) = \frac{1}{n-K-1}\sum_{j=K}^{n-2}\big[V_{j,K}(g) + V_{j+1,K+1}(g) - V_{j,K}(g_0) - V_{j+1,K+1}(g_0) - 2U_{j,K}(g) + 2U_{j,K}(g_0) - 2U_{j+1,K+1}(g) + 2U_{j+1,K+1}(g_0)\big],$$
where
$$V_{j,K}(g) := \big(m^{[K]}(X_{j-K+1},Y_{j-K+1},\dots,Y_j) - g^{[K]}(x_0,Y_{j-K+1},\dots,Y_j)\big)^2 - E\big(m^{[K]}(X_{j-K+1},Y_{j-K+1},\dots,Y_j) - g^{[K]}(x_0,Y_{j-K+1},\dots,Y_j)\big)^2,$$
$$U_{j,K}(g) := X_{j+1}\cdot\big(m^{[K]}(X_{j-K+1},Y_{j-K+1},\dots,Y_j) - g^{[K]}(x_0,Y_{j-K+1},\dots,Y_j)\big)\cdot(\varepsilon_{j+1} - 1),$$
we deduce that
$$\begin{aligned} P\big(\|\hat m - m\|_2^2 > \delta\big) &\le \sum_{l=0}^{1}P\Big(\sup_{g\in G}\Big|\frac{1}{n-K-1}\sum_{j=K}^{n-2}\frac{V_{j+l,K+l}(g)}{\max\{\delta,\|m-g\|_2^2\}}\Big| \ge \frac{1}{32}\Big) + \sum_{l=0}^{1}P\Big(\sup_{g\in G}\Big|\frac{1}{n-K-1}\sum_{j=K}^{n-2}\frac{U_{j+l,K+l}(g)}{\max\{\delta,\|m-g\|_2^2\}}\Big| \ge \frac{1}{64}\Big) \\ &\le \sum_{g\in G}\sum_{l=0}^{1}P\Big(\Big|\sum_{j=K}^{n-2}\frac{V_{j+l,K+l}(g)}{\max\{\delta,\|m-g\|_2^2\}}\Big| \ge \frac{n-K-1}{32}\Big) + \sum_{g\in G}\sum_{l=0}^{1}P\Big(\Big|\sum_{j=K}^{n-2}\frac{U_{j+l,K+l}(g)}{\max\{\delta,\|m-g\|_2^2\}}\Big| \ge \frac{n-K-1}{64}\Big) \end{aligned}\tag{6.8}$$
for all $\delta \in (\delta_n, (c_G + c_M)^2)$ and $n > N$.

To continue bounding $P(\|\hat m - m\|_2^2 > \delta)$, we require an exponential inequality for weakly dependent, identically distributed random variables. In the following we utilize a result from Merlevède et al. (2011). For that purpose, we introduce the stochastic processes $(X_j^*)_j$ and $(Y_j^*)_j$ by
$$X_j^* := \begin{cases} x_0, & j \le p+2,\\ \tilde m^{[j-p-2]}(x_0, \varepsilon_{p+2},\dots,\varepsilon_{j-1}), & \text{otherwise},\end{cases} \qquad Y_j^* := \begin{cases} x_0, & j \le p+2,\\ X_j^*\varepsilon_j, & \text{otherwise}.\end{cases}$$
Accordingly, we define the random variables $V_{j,K}^*(g)$ and $U_{j,K}^*(g)$ by
$$V_{j,K}^*(g) := \big(m^{[K]}(X_{j-K+1}^*,Y_{j-K+1}^*,\dots,Y_j^*) - g^{[K]}(x_0,Y_{j-K+1}^*,\dots,Y_j^*)\big)^2 - E\big(m^{[K]}(X_{j-K+1},Y_{j-K+1},\dots,Y_j) - g^{[K]}(x_0,Y_{j-K+1},\dots,Y_j)\big)^2,$$
$$U_{j,K}^*(g) := X_{j+1}^*\cdot\big(m^{[K]}(X_{j-K+1}^*,Y_{j-K+1}^*,\dots,Y_j^*) - g^{[K]}(x_0,Y_{j-K+1}^*,\dots,Y_j^*)\big)\cdot(\varepsilon_{j+1} - 1).$$
All variables $X_j^*$ and $Y_j^*$ are measurable with respect to the σ-field generated by $\varepsilon_{p+2}, \varepsilon_{p+3},\dots$, and so are $V_{j,K}^*(g)$ and $U_{j,K}^*(g)$ for all $j \ge p+1$. Moreover, by $\mathcal{M}_{p,s}$, $s = 0,1$, we denote the σ-fields induced by the $V_{j+l,K+l}(g)$, $j \le p$, and by the $U_{j+l,K+l}(g)$, $j \le p$, respectively, which are both contained in $\sigma\big((X_{j+l},\varepsilon_{j+l}) : j \le p\big)$. Hence, all $V_{j,K}^*(g)$ and $U_{j,K}^*(g)$, $j \ge p+1$, are independent of $\mathcal{M}_{p,s}$, $s = 0,1$, respectively, so that
$$E\big[f(f_L(V^*_{j_1+l,K+l},\dots,V^*_{j_L+l,K+l}))\,\big|\,\mathcal{M}_{p,0}\big] = E f(f_L(V^*_{j_1+l,K+l},\dots,V^*_{j_L+l,K+l})),$$
$$E\big[f(f_L(U^*_{j_1+l,K+l},\dots,U^*_{j_L+l,K+l}))\,\big|\,\mathcal{M}_{p,1}\big] = E f(f_L(U^*_{j_1+l,K+l},\dots,U^*_{j_L+l,K+l}))$$
hold almost surely for $p+1 \le j_1 < \dots < j_L$. Here $f$ and $f_L$ denote arbitrary 1-Lipschitz functions from $\mathbb{R}$ and $\mathbb{R}^L$, respectively, to $\mathbb{R}$. From this we learn that
$$\begin{aligned} &\big|E[f(f_L(V_{j_1+l,K+l},\dots,V_{j_L+l,K+l}))\,|\,\mathcal{M}_{p,0}] - E f(f_L(V_{j_1+l,K+l},\dots,V_{j_L+l,K+l}))\big| \\ &\le \big|E[f(f_L(V_{j_1+l,K+l},\dots,V_{j_L+l,K+l})) - f(f_L(V^*_{j_1+l,K+l},\dots,V^*_{j_L+l,K+l}))\,|\,\mathcal{M}_{p,0}]\big| + \big|E[f(f_L(V_{j_1+l,K+l},\dots,V_{j_L+l,K+l})) - f(f_L(V^*_{j_1+l,K+l},\dots,V^*_{j_L+l,K+l}))]\big| \\ &\le \sum_{r=1}^{L}E|V_{j_r+l,K+l} - V^*_{j_r+l,K+l}| + \sum_{r=1}^{L}E\big[|V_{j_r+l,K+l} - V^*_{j_r+l,K+l}|\,\big|\,\mathcal{M}_{p,0}\big]. \end{aligned}\tag{6.9}$$
Analogously, we deduce that
$$\big|E[f(f_L(U_{j_1+l,K+l},\dots,U_{j_L+l,K+l}))\,|\,\mathcal{M}_{p,1}] - E f(f_L(U_{j_1+l,K+l},\dots,U_{j_L+l,K+l}))\big| \le \sum_{r=1}^{L}E|U_{j_r+l,K+l} - U^*_{j_r+l,K+l}| + \sum_{r=1}^{L}E\big[|U_{j_r+l,K+l} - U^*_{j_r+l,K+l}|\,\big|\,\mathcal{M}_{p,1}\big]. \tag{6.10}$$
Now we consider that
$$|V_{j_r+l,K+l} - V^*_{j_r+l,K+l}| \le \Delta^2 + 2(R'' + c_G)\Delta,$$
$$|U_{j_r+l,K+l} - U^*_{j_r+l,K+l}| \le (R_\varepsilon + 1)\big[R''\Delta + (R'' + c_G)|X_{j_r+l+1} - X^*_{j_r+l+1}| + \Delta|X^*_{j_r+l+1} - X_{j_r+l+1}|\big], \tag{6.11}$$
where $R'' \ge R_X$ is as in Condition B and
$$\Delta := \big|m^{[K]}(X^*_{j_r-K+1},Y^*_{j_r-K+1},\dots,Y^*_{j_r+l}) - m^{[K]}(X_{j_r-K+1},Y_{j_r-K+1},\dots,Y_{j_r+l})\big| + \big|g^{[K]}(x_0,Y^*_{j_r-K+1},\dots,Y^*_{j_r+l}) - g^{[K]}(x_0,Y_{j_r-K+1},\dots,Y_{j_r+l})\big|.$$
We have
$$\begin{aligned} &\big|m^{[K]}(X^*_{j_r-K+1},Y^*_{j_r-K+1},\dots,Y^*_{j_r+l}) - m^{[K]}(X_{j_r-K+1},Y_{j_r-K+1},\dots,Y_{j_r+l})\big| \\ &\le \|m_x\|_\infty\cdot\big|m^{[K-1]}(X^*_{j_r-K+1},Y^*_{j_r-K+1},\dots,Y^*_{j_r+l-1}) - m^{[K-1]}(X_{j_r-K+1},Y_{j_r-K+1},\dots,Y_{j_r+l-1})\big| + \|m_y\|_\infty\cdot|Y^*_{j_r+l} - Y_{j_r+l}| \\ &\;\;\vdots \\ &\le \|m_y\|_\infty\sum_{q=0}^{K+l-1}\|m_x\|_\infty^q\,|Y^*_{j_r+l-q} - Y_{j_r+l-q}| + \|m_x\|_\infty^{K+l}\,|X^*_{j_r-K+1} - X_{j_r-K+1}| \end{aligned}\tag{6.12}$$
by iterating the first inequality, which follows from the mean value theorem. We obtain the corresponding inequality
$$\big|g^{[K]}(x_0,Y^*_{j_r-K+1},\dots,Y^*_{j_r+l}) - g^{[K]}(x_0,Y_{j_r-K+1},\dots,Y_{j_r+l})\big| \le \|g_y\|_\infty\sum_{q=0}^{K+l-1}\|g_x\|_\infty^q\,|Y^*_{j_r+l-q} - Y_{j_r+l-q}|. \tag{6.13}$$
For the distances between the variables and their proxies, we deduce that
$$|X^*_{j_r-K+1} - X_{j_r-K+1}| \le (x_0 + R'')\,c_1^{(j_r-K-p-1)_+},$$
since $\|\tilde m_x(\cdot,\varepsilon_j)\|_\infty \le c_1$ for all integer $j$ and $X_{j_r-K+1} = \tilde m^{[j_r-K-p-1]}(X_{p+2},\varepsilon_{p+2},\dots,\varepsilon_{j_r-K})$ for $j_r - K - p \ge 2$. Also, we have
$$|Y^*_{j_r+l-q} - Y_{j_r+l-q}| \le \begin{cases} x_0 + R_\varepsilon R'', & q \ge j_r + l - p - 2,\\ R_\varepsilon(x_0 + R'')\,c_1^{j_r+l-q-p-2}, & \text{otherwise}.\end{cases}$$
Inserting these inequalities into (6.12), we obtain
$$\begin{aligned} &\big|m^{[K]}(X^*_{j_r-K+1},Y^*_{j_r-K+1},\dots,Y^*_{j_r+l}) - m^{[K]}(X_{j_r-K+1},Y_{j_r-K+1},\dots,Y_{j_r+l})\big| \\ &\le c_1(x_0 + R'')\,c_1^{j_r+l-p-2}\min\{K+l, (j_r+l-p-2)_+\} + c_1\frac{x_0 + R_\varepsilon R''}{R_\varepsilon}\sum_{q=j_r+l-p-2}^{K+l-1}c_1^q + (x_0 + R'')\,c_1^{K+l+(j_r-K-p-1)_+} \\ &\le (x_0 + R'')(j_r+l-p-2)_+\,c_1^{j_r+l-p-1} + \frac{x_0 + R_\varepsilon R''}{R_\varepsilon(1-c_1)}\,c_1^{j_r+l-p-1} + (x_0 + R'')\,c_1^{j_r+l-p-1} \\ &\le \text{const}\cdot(j_r - p)\,c_1^{j_r-p}, \end{aligned}$$
where we arrange that the constant "const." only depends on $c_1, x_0, R'', R_\varepsilon, c_G$. Note that this deterministic constant may change its value from line to line as long as its sole dependence on the above quantities is not affected. With respect to (6.13), we analogously derive that
$$\big|g^{[K]}(x_0,Y^*_{j_r-K+1},\dots,Y^*_{j_r+l}) - g^{[K]}(x_0,Y_{j_r-K+1},\dots,Y_{j_r+l})\big| \le c_G R_\varepsilon(x_0 + R'')(j_r+l-p-2)_+\,c_1^{j_r+l-p-2} + c_G(x_0 + R_\varepsilon R'')\frac{c_1^{j_r+l-p-1}}{1-c_1} \le \text{const}\cdot(j_r - p)\,c_1^{j_r-p}.$$
Thus, Δ also admits the upper bound $\text{const}\cdot(j_r - p)\,c_1^{j_r-p}$. As above, we deduce that
$$|X^*_{j_r+l+1} - X_{j_r+l+1}| \le (x_0 + R'')\,c_1^{(j_r+l-p-1)_+}.$$
Combining these findings with the inequalities (6.11), we obtain
$$\max\big\{|V_{j_r+l,K+l} - V^*_{j_r+l,K+l}|,\ |U_{j_r+l,K+l} - U^*_{j_r+l,K+l}|\big\} \le \text{const}\cdot(j_r - p)\,c_1^{j_r-p}.$$
The inequalities (6.9) and (6.10) yield
$$\begin{aligned} &\big\|E[f(f_L(V_{j_1+l,K+l},\dots,V_{j_L+l,K+l}))\,|\,\mathcal{M}_{p,0}] - E f(f_L(V_{j_1+l,K+l},\dots,V_{j_L+l,K+l}))\big\|_\infty \\ &\le \text{const}\cdot\sum_{r=1}^{L}(j_r - p)\,c_1^{j_r-p} \le \text{const}\cdot\sum_{i=j_1-p}^{\infty}i\,c_1^i = \text{const}\cdot c_1\frac{d}{dc_1}\frac{c_1^{j_1-p}}{1-c_1} = \text{const}\cdot c_1^{j_1-p}\big((j_1-p)(1-c_1) + c_1\big), \end{aligned}\tag{6.14}$$
while $\big\|E[f(f_L(U_{j_1+l,K+l},\dots,U_{j_L+l,K+l}))\,|\,\mathcal{M}_{p,1}] - E f(f_L(U_{j_1+l,K+l},\dots,U_{j_L+l,K+l}))\big\|_\infty$ admits the same upper bound. Note that this upper bound does not depend on $L$. In the notation of Merlevède et al. (2011), in particular their eq. (2.3), we have established that
$$\tau(x) \le \text{const}\cdot c_1^{\lfloor x\rfloor}\big(\lfloor x\rfloor(1-c_1) + c_1\big)$$
for all $x \ge 1$. Since $c_1 \in (0,1)$, the sequence $c_1^{n/2}\big(n(1-c_1) + c_1\big)$, $n \in \mathbb{N}$, is bounded, so that
$$\tau(x) \le \text{const}\cdot\exp\big(x\cdot(\log c_1)/2\big),$$
where $\log c_1 < 0$. Therefore, condition (2.6) in Merlevède et al. (2011) is fulfilled when putting $c := -(\log c_1)/2$, $\gamma_1 := 1$ and choosing $a$ sufficiently large (only depending on $c_1, x_0, R'', R_\varepsilon$).
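The series evaluation in (6.14) and the geometric decay of the resulting bound on τ can be checked numerically. The Python sketch below compares truncated sums of $\sum_{i\ge a} i\,c^i$ with the closed form $c^a\big(a(1-c)+c\big)/(1-c)^2$ (the factor $(1-c_1)^{-2}$ is absorbed into "const." in (6.14)), and computes $C(c) = \max_n c^{n/2}\big(n(1-c)+c\big)$, so that $c^n\big(n(1-c)+c\big) \le C(c)\,c^{n/2}$, i.e. the bound decays geometrically at rate $\sqrt{c}$. The values of $c$ and $a$ are arbitrary test choices.

```python
def tail_sum(a, c, terms=10_000):
    """Truncated series sum_{i=a}^{a+terms-1} i * c**i."""
    return sum(i * c ** i for i in range(a, a + terms))


def closed_form(a, c):
    """Value of the full series sum_{i>=a} i * c**i = c**a * (a*(1-c) + c) / (1-c)**2."""
    return c ** a * (a * (1 - c) + c) / (1 - c) ** 2


def decay_constant(c, n_max=2000):
    """C(c) = max_{1<=n<=n_max} c**(n/2) * (n*(1-c) + c).

    The sequence increases and then decreases in n, so this maximum is its supremum;
    hence c**n * (n*(1-c) + c) <= C(c) * c**(n/2) for all n >= 1.
    """
    return max(c ** (n / 2) * (n * (1 - c) + c) for n in range(1, n_max + 1))


for c in (0.3, 0.72, 0.9):
    for a in (1, 5, 20):
        assert abs(tail_sum(a, c) - closed_form(a, c)) <= 1e-9 * closed_form(a, c)
    print(c, round(decay_constant(c), 4))
```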
Moreover, condition (2.7) of that paper is satisfied for any $\gamma_2 > 0$ and an appropriate constant $b$ with respect to $\gamma_2$, since the supports of the distributions of the random variables $V_{j,K}(g)$ and $U_{j,K}(g)$ are contained in the intervals $[-2(c_G + R'')^2, 2(c_G + R'')^2]$ and $[-R''(c_G + R'')(R_\varepsilon + 1), R''(c_G + R'')(R_\varepsilon + 1)]$, respectively. Then the constraint (2.8) of Merlevède et al. (2011) is also satisfied for $\gamma := \gamma_2/(1+\gamma_2) \in (0,1)$.

Now let us consider the covariance of $V_{j+l,K+l}(g)$ and $V_{i+l,K+l}(g)$ for $j > i$. We have
$$\operatorname{cov}\big(V_{j+l,K+l}(g), V_{i+l,K+l}(g)\big) = E\,V_{j+l,K+l}(g)V_{i+l,K+l}(g) = E\big[V_{i+l,K+l}(g)\,E\big(V_{j+l,K+l}(g)\,|\,\mathcal{M}_{i,0}\big)\big].$$
We obtain that
$$\big|\operatorname{cov}\big(V_{j+l,K+l}(g), V_{i+l,K+l}(g)\big)\big| \le E|V_{i+l,K+l}(g)|\cdot\operatorname{ess\,sup}\big|E\big(V_{j+l,K+l}(g)\,|\,\mathcal{M}_{i,0}\big)\big|.$$
Let us put $f(x) := x$ and $f_1(x) := x$ with $L := 1$ in (6.14). Then this inequality yields
$$\operatorname{ess\,sup}\big|E\big(V_{j+l,K+l}(g)\,|\,\mathcal{M}_{i,0}\big)\big| \le \text{const}\cdot c_1^{j-i}\big((j-i)(1-c_1) + c_1\big).$$
Here we have used that $V_{j+l,K+l}(g)$ is centered. The quantity $V$ in eq. (1.12) of Merlevède et al. (2011), with respect to the sum of the $V_{j+l,K+l}(g)/\max\{\delta, \|m - g\|_2^2\}$, turns out to be bounded from above by
$$\frac{E V_{K+l,K+l}^2(g)}{\max\{\delta^2, \|m-g\|_2^4\}} + \text{const}\cdot\sum_{j>K}\frac{E|V_{K+l,K+l}(g)|\,c_1^{j-K}\big((j-K)(1-c_1) + c_1\big)}{\max\{\delta^2, \|m-g\|_2^4\}} \le \text{const}\cdot\frac{E|V_{K+l,K+l}(g)|}{\max\{\delta^2, \|m-g\|_2^4\}}.$$
Note that the $V_{K+l,K+l}$ are identically distributed for all $l$ and that $|V_{K+l,K+l}|$ is essentially bounded by $2(c_G + R'')^2$. We consider that
$$\begin{aligned} E|V_{K+l,K+l}(g)| &\le 2E\big(m^{[K]}(X_1,Y_1,\dots,Y_{K+l}) - g^{[K]}(x_0,Y_1,\dots,Y_{K+l})\big)^2 \\ &\le 2E\Big(\|g_x\|_\infty\cdot\big|m^{[K-1]}(X_1,Y_1,\dots,Y_{K-1+l}) - g^{[K-1]}(x_0,Y_1,\dots,Y_{K-1+l})\big| + \big|m(X_{K+l},Y_{K+l}) - g(X_{K+l},Y_{K+l})\big|\Big)^2 \\ &\;\;\vdots \\ &\le 2E\Big(\sum_{i=0}^{K+l-1}c_1^i\,\big|m(X_{K+l-i},Y_{K+l-i}) - g(X_{K+l-i},Y_{K+l-i})\big| + c_1^{K+l}|X_1 - x_0|\Big)^2 \\ &\le \frac{4}{1-c_1}\sum_{i=0}^{K+l-1}c_1^i\,E\big|m(X_{K+l-i},Y_{K+l-i}) - g(X_{K+l-i},Y_{K+l-i})\big|^2 + 4(R'' + x_0)^2c_1^{2(K+l)} \\ &\le \frac{4}{(1-c_1)^2}\,\|m - g\|_2^2 + 4(R'' + x_0)^2c_1^{2(K+l)} \end{aligned}\tag{6.15}$$
by the Cauchy–Schwarz inequality and the strict stationarity of the process $(X_i, Y_i)$, $i \in \mathbb{Z}$.

Focusing on the covariance of $U_{j+l,K+l}(g)$ and $U_{i+l,K+l}(g)$ for $j > i$, we realize that
$$E\,U_{j+l,K+l}U_{i+l,K+l} = E\Big[U_{i+l,K+l}X_{j+1+l}\cdot\big(m^{[K]}(X_{j-K+1},Y_{j-K+1},\dots,Y_{j+l}) - g^{[K]}(x_0,Y_{j-K+1},\dots,Y_{j+l})\big)\Big]\cdot E(\varepsilon_{j+l+1} - 1) = 0.$$
The $U_{j+l,K+l}(g)$ are centered, so that $\operatorname{cov}(U_{j+l,K+l}, U_{i+l,K+l})$ vanishes for all $j > i$. Hence, the quantity $V$ in eq. (1.12) of Merlevède et al. (2011), with respect to the sum of the $U_{j+l,K+l}(g)/\max\{\delta, \|m - g\|_2^2\}$, equals $E U_{K+l,K+l}^2(g)/\max\{\delta^2, \|m - g\|_2^4\}$, since the $|U_{j+l,K+l}|$ are essentially bounded by $R''(R_\varepsilon + 1)(c_G + R'')$. We consider that
$$E U_{K+l,K+l}^2(g) \le (R'')^2(R_\varepsilon + 1)^2\cdot E\big(m^{[K]}(X_{j-K+1},Y_{j-K+1},\dots,Y_{j+l}) - g^{[K]}(x_0,Y_{j-K+1},\dots,Y_{j+l})\big)^2 \le (R'')^2(R_\varepsilon + 1)^2\cdot\frac{2\|m - g\|_2^2}{(1-c_1)^2} + 2(R'')^2(R_\varepsilon + 1)^2(R'' + x_0)^2c_1^{2(K+l)}, \tag{6.16}$$
where we have used the upper bound from (6.15). Thus, the quantity $V$ in the notation of Merlevède et al. (2011) is bounded from above by
$$d_1/\max\{\delta, \|m - g\|_2^2\} + d_2c_1^{2K}/\max\{\delta^2, \|m - g\|_2^4\} \le d_1/\delta + d_2c_1^{2K}/\delta^2,$$
where the finite positive constants $d_1$ and $d_2$ only depend on $c_1$, $R''$ and $R_\varepsilon$. Applying Theorem 1 of Merlevède et al. (2011) to (6.8) yields
$$\begin{aligned} P\big(\|\hat m - m\|_2^2 > \delta\big) \le 4\,\#G\cdot\Big[&(n-K-1)\exp\big(-D_1(n-K+1)^\gamma\big) + \exp\big(-D_2(n-K-1)^2/3\big) + \exp\Big(-\frac{D_2}{3d_1}(n-K-1)\delta\Big) \\ &+ \exp\Big(-\frac{D_2}{3d_2}(n-K-1)c_1^{-2K}\delta^2\Big) + \exp\Big(-D_3(n-K-1)\cdot\exp\Big(D_4\frac{(n-K-1)^{\gamma(1-\gamma)}}{\log^\gamma(n-K-1)}\Big)\Big)\Big] \end{aligned}\tag{6.17}$$
for all $\delta \in (\delta_n, (c_G + c_M)^2)$ and $n > \max\{4, N\}$, where the positive finite constants $D_1,\dots,D_4$ only depend on $c_1, x_0, R'', R_\varepsilon, c_G$ and $\gamma_2$.
Considering the upper bound on the cardinality of $G$ provided by Condition C, we apply the inequality (6.17) to (6.7) and obtain
$$\begin{aligned} \sup_{m\in M}E\|\hat m - m\|_2^2 \le \delta_n &+ 4(c_M + c_G)^2(n-K-1)\exp\big(c_N n^{1/(1+\beta)} - D_1(n-K+1)^\gamma\big) \\ &+ 4(c_M + c_G)^2\exp\big(c_N n^{1/(1+\beta)} - D_2(n-K+1)^2/3\big) \\ &+ 4(c_M + c_G)^2\exp\Big(c_N n^{1/(1+\beta)} - D_3(n-K-1)\cdot\exp\Big(D_4\frac{(n-K-1)^{\gamma(1-\gamma)}}{\log^\gamma(n-K-1)}\Big)\Big) \\ &+ 4(c_M + c_G)^2\exp\Big(c_N n^{1/(1+\beta)} - \frac{D_2}{3d_2}(n-K-1)c_1^{-2K}\delta_n^2\Big) \\ &+ \frac{12d_1}{D_2(n-K-1)}\exp\Big(c_N n^{1/(1+\beta)} - \frac{D_2}{3d_1}(n-K-1)\delta_n\Big) \end{aligned}$$
for all $n > \max\{4, N\}$. Since $\delta_n = c_\delta\cdot n^{-\beta/(1+\beta)}$ with $c_\delta$ sufficiently large, and in view of the selection of $K$ and the fact that γ can be chosen arbitrarily close to 1 (select $\gamma_2$ large enough) while $\beta > 1$ by Condition C, the first addend $\delta_n$ dominates asymptotically in the above inequality, which finally leads to the desired rate.

Proof of Lemma 4.2: The derivative of $\tilde m_{(x)}(y) := \tilde m(x,y)$ with respect to $y$ is bounded from below by $c_M'\alpha_0'$ for any $m \in M_0$ and $x \ge \alpha_0'$, so that the functions $\tilde m_{(x)} : [0,\infty) \to [m(x,0),\infty)$, $x \ge \alpha_0'$, increase strictly monotonically and are therefore invertible. For any $c \ge 0$ we have $\{(x,z) : \tilde m_{(x)}^{-1}(z) \le c\} = \{(x,z) : \tilde m(x,c) - z \ge 0\}$, so that Borel measurability of the function $(x,z) \mapsto \tilde m_{(x)}^{-1}(z)$ follows from that of $\tilde m$. By Fubini's theorem, it follows that
$$P[X_1 \le z] = \int P[\tilde m(x,\varepsilon_1) \le z]\,dP_{X_1}(x) = \int P\big[\varepsilon_1 \le \tilde m_{(x)}^{-1}(z)\big]\,dP_{X_1}(x) = \int\int_{t=0}^{\tilde m_{(x)}^{-1}(z)} f_\varepsilon(t)\,dt\,dP_{X_1}(x) = \int_{s=0}^{z}\int\frac{f_\varepsilon\big(\tilde m_{(x)}^{-1}(s)\big)}{\tilde m_y\big(x, \tilde m_{(x)}^{-1}(s)\big)}\,dP_{X_1}(x)\,ds$$
for all $z \ge 0$, so that $P_{X_1}$ is absolutely continuous with Lebesgue density
$$f_X(z) = E\Big[\frac{f_\varepsilon\big(\tilde m_{(X_1)}^{-1}(z)\big)}{\tilde m_y\big(X_1, \tilde m_{(X_1)}^{-1}(z)\big)}\Big],$$
which is bounded from above by $\|f_\varepsilon\|_\infty/(c_M'\alpha_0')$, as $X_1 \ge \alpha_0'$ holds almost surely. Moreover, the support of $f_X$ is included in $[\alpha_0', R'']$ with $R''$ as in Condition B.
Therefore we have
$$\|f\|_2^2 = \iint f^2(x, xt)\,f_X(x)f_\varepsilon(t)\,dx\,dt \le \iint_{[\alpha_0',R'']\times[0,R_\varepsilon]} f^2(x, xt)\,dx\,dt\cdot\|f_\varepsilon\|_\infty^2/(c_M'\alpha_0') \le \iint_{[\alpha_0',R'']\times[0,R''R_\varepsilon]} f^2(x,y)\,dx\,dy\cdot\|f_\varepsilon\|_\infty^2/\{c_M'(\alpha_0')^2\},$$
so that $\|\cdot\|_2$ is dominated by $\|\cdot\|_\lambda$ multiplied by a uniform constant.

Proof of Lemma 4.3: It follows immediately that $Q((a,b]) \ge c\cdot\lambda((a,b])$ for all left-open intervals $(a,b] \subseteq I$, since
$$Q((a,b]) \ge Q([a + 1/m, b]) \ge c\cdot\lambda([a + 1/m, b]) \to c(b - a) = c\,\lambda((a,b])$$
as $m \to \infty$, for all $(a,b] \subseteq I$. Let $F$ denote the collection of all unions of finitely many intervals $(a,b] \subseteq I$. One can verify that $F$ forms a ring of subsets of $I$ which is closed with respect to the relative complement in $I$. Moreover, $F$ can equivalently be defined as the collection of all unions of finitely many pairwise disjoint intervals $(a,b] \subseteq I$. For all $f \in F$ we conclude that
$$Q(f) = Q\Big(\bigcup_{k=1}^{n}(a_k,b_k]\Big) = \sum_{k=1}^{n}Q((a_k,b_k]) \ge c\cdot\sum_{k=1}^{n}\lambda((a_k,b_k]) = c\cdot\lambda(f),$$
where the intervals $(a_k,b_k]$ are assumed to be pairwise disjoint. The σ-field generated by the ring $F$ equals the Borel σ-field of $I$, as $F$ contains all left-open intervals of $I$. As $Q$ and λ are finite measures on that Borel σ-field, their restrictions to $F$ are finite pre-measures. By Carathéodory's extension theorem, $Q$ and λ fulfil
$$Q(g) = \inf\Big\{\sum_{j=1}^{\infty}Q(f_j) : g \subseteq \bigcup_{j\in\mathbb{N}}f_j,\ f_j \in F\Big\}, \qquad \lambda(g) = \inf\Big\{\sum_{j=1}^{\infty}\lambda(f_j) : g \subseteq \bigcup_{j\in\mathbb{N}}f_j,\ f_j \in F\Big\}.$$
Since $\sum_{j=1}^{\infty}Q(f_j) \ge c\cdot\sum_{j=1}^{\infty}\lambda(f_j)$, the outer measure representation of $Q$ and λ yields $Q(g) \ge c\cdot\lambda(g)$ for all Borel subsets $g$ of $I$. Clearly, for all elementary non-negative measurable mappings $\varphi = \sum_{k=1}^{n}\varphi_k\cdot 1_{g_k}$ on $I$, with real numbers $\varphi_k \ge 0$ and pairwise disjoint Borel subsets $g_k$, $k = 1,\dots,n$, of $I$, it follows that
$$\int\varphi(x)\,dQ(x) \ge c\cdot\int\varphi(x)\,d\lambda(x).$$
This inequality extends to all non-negative measurable functions φ by the monotone convergence theorem, as any such function is the pointwise limit of a monotonically increasing sequence of elementary non-negative measurable mappings on $I$. Now assume that the Lebesgue density $q$ of $Q$ on $I$ exists. We put φ equal to the indicator function of the preimage $q^{-1}((-\infty, c)) \subseteq I$, so that
$$\int(q(x) - c)\varphi(x)\,d\lambda(x) = \int\varphi(x)\,dQ(x) - c\cdot\int\varphi(x)\,d\lambda(x) \ge 0.$$
As the function $(q - c)\varphi$ is non-positive, it follows that, for Lebesgue almost all $x \in I$, we have $q(x) = c$ or $\varphi(x) = 0$. Thus, for those $x$, we have $q(x) \ge c$.

Proof of Theorem 4.2: The statistical experiment in which one observes the data $\bar X_1, \bar Y_1,\dots,\bar Y_n$ with $\bar X_1 = \log X_1$ and $\bar Y_j = \log Y_j$ from model (1.1) is more informative than the model under consideration, in which only $Y_1,\dots,Y_n$ are accessible. Hence, as we are proving a lower bound, we may assume that $\log X_1$ is additionally observed and that the $\bar Y_j$ are recorded instead of the $Y_j$. First, let us verify that, for all binary matrices θ, the functions $m_\theta$ from (4.6) lie in $M$. The support of the function $m_\theta - m_G$ is included in
$$\Big\{(x,y) \in \mathbb{R}^2 : x \in \alpha_0/(1-\alpha_1) + \tfrac{1}{16}R_\varepsilon\beta_1'\alpha_0\cdot[1,2],\ 0 \le y \le R_\varepsilon x/2\Big\}.$$
Thus we derive that
$$\|(m_\theta)_x - (m_G)_x\|_\infty \le 8c_H b_n^{1-\beta}\cdot\Big\{\frac{8}{R_\varepsilon\beta_1'\alpha_0}\|H_x\|_\infty + \frac{4}{\alpha_0}\|H_y\|_\infty\Big\}, \qquad \|(m_\theta)_y - (m_G)_y\|_\infty \le \frac{8c_H\|H_y\|_\infty}{R_\varepsilon\alpha_0}\cdot b_n^{1-\beta}, \tag{6.18}$$
while $(m_G)_x \equiv \alpha_1$ and $(m_G)_y \equiv \beta_1$. By Condition D we have $\alpha_1 + \beta_1 R_\varepsilon < c_1$, so that Condition (A1) holds true for all $m_\theta$ if $c_H > 0$ is sufficiently small. All functions $m_\theta$ coincide with $m_G$ on their restriction to
$$\Big[0,\ \alpha_0/(1-\alpha_1) + \tfrac{1}{16}R_\varepsilon\beta_1'\alpha_0\Big]\times[0,\infty), \tag{6.19}$$
while, for $x > \alpha_0/(1-\alpha_1) + R_\varepsilon\beta_1'\alpha_0/16$, the upper bound $\|m_\theta - m_G\|_\infty \le c_H b_n^{-\beta}\|H\|_\infty$, for $c_H$ small enough, suffices to verify the envelope Condition (A2).
By (6.18) and the smallness of $c_H$, we also establish that
$$\inf_{x\ge0,\,y\ge0}(m_\theta)_x \ge \alpha_1/2 > 0, \qquad \inf_{x\ge0,\,y\ge0}(m_\theta)_y \ge \beta_1/2 > 0$$
for all θ, which guarantees the monotonicity Condition (A3). Moreover, the above inequalities guarantee Condition C', so that by Lemma 4.2 the stationary distribution of $(X_n)_n$ under any $m_\theta$ has a bounded Lebesgue density $f_{X,\theta}$. Also, as $c_H$ is sufficiently small with respect to all other constants, we can verify the smoothness constraint in Condition B for all admitted values of θ. Having shown that $M_P := \{m_\theta : \theta \in \Theta_n\} \subseteq M$, where $\Theta_n$ denotes the set of all binary $(b_n \times b_n)$-matrices, we follow a usual strategy of minimax theory and put an a-priori distribution on $M_P$. Concretely, we assume that all components of the binary matrix θ are independent with $P[\theta_{j,k} = 1] = 1/2$, and we bound the minimax risk from below by the corresponding Bayes risk. However, we face the problem that the loss function depends on the stationary distribution of the data and, hence, on $m_\theta$. As all $m_\theta$ equal $m_G$ on the set (6.19), we have $\alpha_0/(1-\alpha_1) = m_\theta\big(\alpha_0/(1-\alpha_1), 0\big)$. By Proposition 2.1(b), the left endpoint of the stationary distribution under the regression function $m_\theta$ equals $\alpha_0/(1-\alpha_1)$ for all $\theta \in \Theta_n$, so that
$$J := J(m_\theta) = \big(\alpha_0/(1-\alpha_1) + R_\varepsilon\beta_1'\alpha_0/16,\ \alpha_0/(1-\alpha_1) + R_\varepsilon\beta_1'\alpha_0/8\big]$$
for all $\theta \in \Theta_n$. The closure of this interval contains the projection of the support of $m_\theta - m_G$ onto the $x$-axis for all $\theta \in \Theta_n$. Then (4.5) yields
$$\|\hat m_n - m_\theta\|_2^2 \ge \mu_6 r_\varepsilon\cdot\int_0^{R_\varepsilon/2}\int_J\big(\hat m_n(x, xt) - \tilde m_\theta(x,t)\big)^2\,dx\,dt,$$
where the $\|\cdot\|_2$-norm depends on θ. We introduce the intervals
$$I_{j,k} := i_{j,k} + \big(-R_\varepsilon\beta_1'\alpha_0/(64b_n),\ R_\varepsilon\beta_1'\alpha_0/(64b_n)\big)\times\big(-R_\varepsilon/(8b_n),\ R_\varepsilon/(8b_n)\big), \qquad j,k = 0,\dots,b_n - 1,$$
which are pairwise disjoint subsets of $J \times [0, R_\varepsilon/2]$.
We deduce that
$$\begin{aligned} \sup_{m\in M}E_m\|\hat m_n - m\|_2^2 &\ge \mu_6 r_\varepsilon\,E_\theta E_{m_\theta}\int_0^{R_\varepsilon/2}\int_J\big(\hat m_n(x, xt) - \tilde m_\theta(x,t)\big)^2\,dx\,dt \\ &\ge \frac12\mu_6 r_\varepsilon\sum_{j,k=0}^{b_n-1}E_{\theta,j,k}\Big[E_{m_{\theta,j,k,0}}\iint_{I_{j,k}}\big(\hat m_n(x, xt) - \tilde m_{\theta,j,k,0}(x,t)\big)^2\,dx\,dt + E_{m_{\theta,j,k,1}}\iint_{I_{j,k}}\big(\hat m_n(x, xt) - \tilde m_{\theta,j,k,1}(x,t)\big)^2\,dx\,dt\Big] \\ &\ge \frac14\mu_6 r_\varepsilon\sum_{j,k=0}^{b_n-1}E_{\theta,j,k}\iint_{I_{j,k}}\big(\tilde m_{\theta,j,k,1}(x,t) - \tilde m_{\theta,j,k,0}(x,t)\big)^2\,dx\,dt\int\min\{L_{n,\theta,j,k,0}(y), L_{n,\theta,j,k,1}(y)\}\,dy \\ &\ge \frac{\mu_6 r_\varepsilon R_\varepsilon^2\beta_1' c_H^2\alpha_0}{2048}\cdot b_n^{-2\beta-2}\cdot\iint H^2(x,y)\,dx\,dy\cdot\sum_{j,k=0}^{b_n-1}E_{\theta,j,k}\Big(1 - \sqrt{1 - A^2(L_{n,\theta,j,k,0}, L_{n,\theta,j,k,1})}\Big), \end{aligned}$$
where $E_m$, $E_{m_\theta}$, $E_{m_{\theta,j,k,b}}$, $b \in \{0,1\}$, denote the expectation with respect to the data when $m$, $m_\theta$, $m_{\theta,j,k,b}$, respectively, is the true autoregression function, and we write $m_{\theta,j,k,b}$ for the function $m_\theta$ when $\theta_{j,k} = b$ and all other components of θ are random; $E_\theta$ stands for the expectation with respect to the a-priori distribution of θ; $E_{\theta,j,k}$ denotes that expectation with respect to all components of θ except $\theta_{j,k}$; $L_{n,\theta,j,k,b}$, $b \in \{0,1\}$, denotes the Lebesgue density of the data under the true autoregression function $m_{\theta,j,k,b}$; finally,
$$A(f_1,f_2) := \int\sqrt{f_1(x)f_2(x)}\,dx$$
stands for the Hellinger affinity between two densities $f_1$ and $f_2$. We have used LeCam's inequality in the last step. Therefore, we obtain the lower bound $b_n^{-2\beta}$ on the convergence rate if we verify that
$$\inf_{n\ge N}\ \inf_{\theta\in\Theta_n}\ \inf_{j,k=0,\dots,b_n-1}A(L_{n,\theta,j,k,0}, L_{n,\theta,j,k,1}) > 0 \tag{6.20}$$
for some fixed integer $N$. For the likelihood function, i.e. the data density with respect to the $(n+1)$-dimensional Lebesgue measure, we have the recursive equations
$$L_{0,\theta,j,k,b}(x_1) = \bar f_{X,\theta,j,k,b}(x_1), \qquad L_{l+1,\theta,j,k,b}(x_1,y_1,\dots,y_{l+1}) = \bar f_\varepsilon\big(y_{l+1} - \bar m_{\theta,j,k,b}(x_l,y_l)\big)\cdot L_{l,\theta,j,k,b}(x_1,y_1,\dots,y_l)$$
for $l = 0,\dots,n-1$. Here $\bar m_{\theta,j,k,b}(x,y) = \log m_{\theta,j,k,b}(\exp(x),\exp(y))$, and $\bar f_\varepsilon$, $\bar f_{X,\theta,j,k,b}$ denote the density of $\log\varepsilon_1$ and the stationary density of $(\log X_n)_n$ under $m = m_{\theta,j,k,b}$, respectively. Also, $x_2,\dots,x_l$ are recursively defined by $x_{r+1} = \bar m_{\theta,j,k,b}(x_r, y_r)$.
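A standard form of LeCam's inequality bounds the overlap $\int\min\{f_1,f_2\}$ of two densities from below by $1 - \sqrt{1 - A(f_1,f_2)^2}$, which is why a uniform lower bound on the affinities as in (6.20) keeps every summand above away from zero. A quick Python sanity check of this inequality on random discrete distributions; the discrete setting, the seed and the dimensions are illustrative assumptions and not part of the proof.

```python
import math
import random


def affinity(p, q):
    """Hellinger affinity A(p, q) = sum_i sqrt(p_i * q_i) of two discrete densities."""
    return sum(math.sqrt(a * b) for a, b in zip(p, q))


def overlap(p, q):
    """sum_i min(p_i, q_i), the discrete analogue of the integral of min{L_0, L_1}."""
    return sum(min(a, b) for a, b in zip(p, q))


def lecam_bound(p, q):
    """Lower bound 1 - sqrt(1 - A(p, q)^2) on the overlap of p and q."""
    a = affinity(p, q)
    return 1.0 - math.sqrt(max(0.0, 1.0 - a * a))  # max() guards against rounding A > 1


def random_density(k, rng):
    w = [rng.random() + 1e-12 for _ in range(k)]
    total = sum(w)
    return [x / total for x in w]


rng = random.Random(11)
for _ in range(1000):
    k = rng.randint(2, 8)
    p, q = random_density(k, rng), random_density(k, rng)
    assert overlap(p, q) >= lecam_bound(p, q) - 1e-12
```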
Writing

$$H(f_1,f_2) := \Big(\int\big(\sqrt{f_1(x)} - \sqrt{f_2(x)}\big)^2\,dx\Big)^{1/2}$$

for the Hellinger distance between densities $f_1$ and $f_2$, we deduce that

$$\begin{aligned}
A_{l+1} &:= A(L_{l+1,\theta,j,k,0},\, L_{l+1,\theta,j,k,1})\\
&= \int\cdots\int \Big(\int \sqrt{\bar f_\varepsilon\big(y_{l+1} - \bar m_{\theta,j,k,0}(x_l,y_l)\big)\,\bar f_\varepsilon\big(y_{l+1} - \bar m_{\theta,j,k,1}(x_l,y_l)\big)}\,dy_{l+1}\Big)\\
&\qquad\cdot\sqrt{L_{l,\theta,j,k,0}(x_1,y_1,\dots,y_l)\cdot L_{l,\theta,j,k,1}(x_1,y_1,\dots,y_l)}\,dx_1\,dy_1\cdots dy_l\\
&= \int\cdots\int \Big(1 - \frac12 H^2\big(\bar f_\varepsilon(\cdot - \bar m_{\theta,j,k,0}(x_l,y_l)),\, \bar f_\varepsilon(\cdot - \bar m_{\theta,j,k,1}(x_l,y_l))\big)\Big)\\
&\qquad\cdot\sqrt{L_{l,\theta,j,k,0}(x_1,y_1,\dots,y_l)\cdot L_{l,\theta,j,k,1}(x_1,y_1,\dots,y_l)}\,dx_1\,dy_1\cdots dy_l\\
&= A_l - \frac12\,\Delta_{n,\theta,j,k},
\end{aligned}\tag{6.21}$$

with

$$\begin{aligned}
\Delta_{n,\theta,j,k} \le \Big(&E_{m_{\theta,j,k,0}} H^2\big(\bar f_\varepsilon(\cdot - \bar m_{\theta,j,k,0}(\bar X_l,\bar Y_l)),\, \bar f_\varepsilon(\cdot - \bar m_{\theta,j,k,1}(\bar X_l,\bar Y_l))\big)\\
&\cdot E_{m_{\theta,j,k,1}} H^2\big(\bar f_\varepsilon(\cdot - \bar m_{\theta,j,k,0}(\bar X_l,\bar Y_l)),\, \bar f_\varepsilon(\cdot - \bar m_{\theta,j,k,1}(\bar X_l,\bar Y_l))\big)\Big)^{1/2}
\end{aligned}$$

by the Cauchy-Schwarz inequality, where $\bar X_l = \log X_l$ and $\bar Y_l = \log Y_l$. For any $a,b\in\mathbb R$ the regularity constraint (4.7) implies that $H^2\big(\bar f_\varepsilon(\cdot-a),\, \bar f_\varepsilon(\cdot-b)\big) \le F_\varepsilon\cdot(a-b)^2$, so that

$$\Delta_{n,\theta,j,k} \le F_\varepsilon\cdot\max_{b=0,1}\big\|\log m_{\theta,j,k,0} - \log m_{\theta,j,k,1}\big\|_{2,b}^2,$$

where we write $\|\cdot\|_{2,b}$ for the $\|\cdot\|_2$-norm under the true autoregression function $m_{\theta,j,k,b}$. According to Lemma 4.2, we have

$$\begin{aligned}
\big\|\log m_{\theta,j,k,0} - \log m_{\theta,j,k,1}\big\|_{2,b}^2
&= \iint \big(\log\tilde m_{\theta,j,k,0}(x,t) - \log\tilde m_{\theta,j,k,1}(x,t)\big)^2 f_{X,\theta,j,k,b}(x)\,f_\varepsilon(t)\,dx\,dt\\
&\le \frac{2\|f_\varepsilon\|_\infty^2 c_H^2}{\beta_1\alpha_0^2}\cdot b_n^{-2\beta}\cdot\iint_{I_{j,k}} H^2\Big(\frac{64 b_n(x - i_{j,k,1})}{R_\varepsilon\beta_1'\alpha_0},\, \frac{8b_n(t - i_{j,k,2})}{R_\varepsilon}\Big)\,dx\,dt\\
&\le \frac{\|f_\varepsilon\|_\infty^2 R_\varepsilon^2\beta_1' c_H^2}{256\,\beta_1\alpha_0}\cdot\iint H^2(x,y)\,dx\,dy\cdot b_n^{-2\beta-2},
\end{aligned}$$

which provides an upper bound on $\Delta_{n,\theta,j,k}$ (the last step is the change of variables on $I_{j,k}$, whose Jacobian is $R_\varepsilon^2\beta_1'\alpha_0/(512\,b_n^2)$). Then it follows from (6.21) by induction that

$$A_n \ge A_0 - \frac{F_\varepsilon\|f_\varepsilon\|_\infty^2 R_\varepsilon^2\beta_1' c_H^2}{512\,\beta_1\alpha_0}\cdot\iint H^2(x,y)\,dx\,dy\cdot n\, b_n^{-2\beta-2}. \tag{6.22}$$

Next we consider

$$\begin{aligned}
A_0 &= \int\sqrt{\bar f_{X,\theta,j,k,0}(x)\,\bar f_{X,\theta,j,k,1}(x)}\,dx = \int\sqrt{f_{X,\theta,j,k,0}(\exp(x))\,f_{X,\theta,j,k,1}(\exp(x))}\,\exp(x)\,dx\\
&= \int\sqrt{f_{X,\theta,j,k,0}(x)\,f_{X,\theta,j,k,1}(x)}\,dx \ \ge\ \int_J\sqrt{f_{X,\theta,j,k,0}(x)\,f_{X,\theta,j,k,1}(x)}\,dx \ \ge\ \mu_6 R_\varepsilon\beta_1'\alpha_0/16 > 0,
\end{aligned}$$

using (4.4) and Lemma 4.3; this lower bound does not depend on $c_H$. Viewing $c_H$ as sufficiently small and choosing $b_n = n^{1/(2\beta+2)}$, so that $n\,b_n^{-2\beta-2} = 1$ and the subtracted term in (6.22) is a multiple of $c_H^2$ uniformly in $n$, $\theta$, $j$, $k$, inequality (6.22) finally proves (6.20), which establishes $b_n^{-2\beta} = n^{-\beta/(\beta+1)}$ as a lower bound on the convergence rate.

References

[1] Audrino, F. and Bühlmann, P. (2001). Tree-structured generalized autoregressive conditional heteroscedastic models. J. Roy. Statist. Soc., Ser. B 63, 727–744.

[2] Audrino, F. and Bühlmann, P. (2009). Splines for financial volatility. J. Roy. Statist. Soc., Ser. B 71, 655–670.

[3] Berkes, I., Horváth, L. and Kokoszka, P.S. (2003). GARCH processes: structure and estimation. Bernoulli 9, 201–227.

[4] Berkes, I. and Horváth, L. (2004). The efficiency of the estimators of the parameters in GARCH processes. Ann. Statist. 32, 633–655.

[5] Bollerslev, T. (1986). Generalized autoregressive conditional heteroscedasticity. J. Econometrics 31, 307–327.

[6] Bougerol, P. and Picard, N. (1992). Stationarity of GARCH processes and of some non-negative time series. J. Econometrics 52, 115–127.

[7] Bougerol, P. (1993). Kalman filtering with random coefficients and contractions. SIAM J. Control Optim. 31, 942–959.

[8] Buchmann, B. and Müller, G. (2012). Limit experiments of GARCH. Bernoulli 18, 64–99.

[9] Bühlmann, P. and McNeil, A.J. (2002). An algorithm for nonparametric GARCH modelling. Comp. Statist. Data Anal. 40, 665–683.

[10] Čížek, P., Härdle, W. and Spokoiny, V. (2009). Adaptive pointwise estimation in time-inhomogeneous conditional heteroscedasticity models. Econom. J. 12, 248–271.

[11] Conrad, C. and Mammen, E. (2009). Nonparametric regression on latent covariates with an application to semiparametric GARCH-in-Mean models. Discussion Paper No. 473, University of Heidelberg, Department of Economics.

[12] Christensen, B.J., Dahl, C.M. and Iglesias, E. (2012). Semiparametric inference in a GARCH-in-mean model. J. Econometrics 167, 458–472.

[13] Diaconis, P. and Freedman, D. (1999). Iterated random functions. SIAM Review 41, 45–76.

[14] Ding, Z., Granger, C.W.J. and Engle, R. (1993). A long memory property of stock market returns and a new model. J. Empirical Finance 1, 83–106.

[15] Engle, R. (1982).
Autoregressive conditional heteroscedasticity with estimates of the variance of United Kingdom inflation. Econometrica 50, 987–1007.

[16] Fan, J., Qi, L. and Xiu, D. (2014). Quasi maximum likelihood estimation of GARCH models with heavy-tailed likelihoods. J. Bus. Econ. Stat. 32, 178–205.

[17] Fan, J. and Yao, Q. (2003). Nonlinear Time Series: Nonparametric and Parametric Methods. Springer.

[18] Francq, C. and Zakoïan, J.-M. (2010). GARCH Models: Structure, Statistical Inference and Financial Applications. Wiley.

[19] Francq, C., Lepage, G. and Zakoïan, J.-M. (2011). Two-stage non Gaussian QML estimation of GARCH models and testing the efficiency of the Gaussian QMLE. J. Econometrics 165, 246–257.

[20] Hall, P. and Yao, Q. (2003). Inference in ARCH and GARCH models with heavy-tailed errors. Econometrica 71, 285–317.

[21] Hörmann, S. (2008). Augmented GARCH sequences: dependence structure and asymptotics. Bernoulli 14, 543–561.

[22] Lee, S.-W. and Hansen, B.E. (1994). Asymptotic theory for the GARCH(1, 1) quasi-maximum likelihood estimator. Econometric Theory 10, 29–52.

[23] Linton, O. and Mammen, E. (2005). Estimating semiparametric ARCH(∞) models by kernel smoothing methods. Econometrica 73, 771–836.

[24] Lumsdaine, R.L. (1996). Consistency and asymptotic normality of the quasi-maximum likelihood estimator in IGARCH(1, 1) and covariance stationary GARCH(1, 1) models. Econometrica 64, 575–596.

[25] Merlevède, F., Peligrad, M. and Rio, E. (2011). A Bernstein type inequality and moderate deviations for weakly dependent sequences. Probab. Theory Related Fields 151, 435–474.

[26] Nelson, D. (1991). Conditional heteroskedasticity in asset returns: A new approach. Econometrica 59, 347–370.

[27] Robinson, P.M. and Zaffaroni, P. (2006). Pseudo-maximum-likelihood estimation of ARCH(∞) models. Ann. Statist. 34, 1049–1074.

[28] Straumann, D. and Mikosch, Th. (2006).
Quasi-maximum-likelihood estimation in conditionally heteroscedastic time series: A stochastic recurrence equations approach. Ann. Statist. 34, 2449–2495.

[29] van de Geer, S.A. (2000). Empirical Processes in M-Estimation: Applications of Empirical Process Theory. Cambridge Series in Statistical and Probabilistic Mathematics 6. Cambridge University Press.

[30] van der Vaart, A.W. and Wellner, J.A. (1996). Weak Convergence and Empirical Processes with Applications to Statistics. Springer.

[31] Wu, W.B. and Shao, X. (2004). Limit theorems for iterated random functions. J. Appl. Prob. 41, 425–436.

A MATLAB code – Supplement not to be published

In this section we provide the MATLAB code for the estimators used in the simulations of Section 5 of the main article.

A.1 Computation of the function $m_{\alpha_0,\alpha_1,\beta_1,\beta_p}$

```matlab
function erg = linsin(alpha0, alpha1, beta, betap, x, y)
% here "beta" = beta_1
% x, y: row vectors (or scalars); erg(i,j) = m(x(i), y(j)), a length(x)-by-length(y) matrix
% periodic perturbation betap*(sin(2*pi*x) + cos(2*pi*y))
stoerung = betap * sin(2*pi*x') * ones(1, length(y)) + betap * ones(1, length(x))' * cos(2*pi*y);
% linear part alpha0 + beta*x + alpha1*y plus the perturbation
erg = alpha0 * ones(length(x), length(y)) + beta * x' * ones(1, length(y)) ...
    + alpha1 * ones(1, length(x))' * y + stoerung;
end
```

A.2 Computation of the contrast functional Φ

```matlab
function erg = Phi(alpha0, alpha1, beta, betap, K, x0, Daten)
% insert the observed data as "Daten"
n = length(Daten);
summe = 0;
for j = K:n-2
    % g1: K-step recursion started at x0 and driven by Daten(j-K+1), ..., Daten(j);
    % it serves as the prediction of Daten(j+1)
    g1 = linsin(alpha0, alpha1, beta, betap, x0, Daten(j-K+1));
    for l = 2:K
        g1 = linsin(alpha0, alpha1, beta, betap, g1, Daten(j-K+l));
    end
    % g2: one further step, driven by Daten(j+1); prediction of Daten(j+2)
    g2 = linsin(alpha0, alpha1, beta, betap, g1, Daten(j+1));
    delta1 = (Daten(j+1) - g1)^2;
    delta2 = (Daten(j+2) - g2)^2;
    summe = summe + delta1 + delta2;
end
erg = summe / (n-K-1);   % average over the n-K-1 indices j = K, ..., n-2
end
```

A.3 Computation of the final
coefficient estimators

```matlab
function erg = mSchaetzer(K, x0, Daten)
% insert the observed data as "Daten"
% grid search: row 1 of Minimum holds the current minimizer [alpha0 alpha1 beta betap],
% entry Minimum(2,4) holds the corresponding (smallest so far) value of Phi
Minimum = [0.1 0.1 0.1 0; 0 0 0 Phi(0.1, 0.1, 0.1, 0, K, x0, Daten)];
for beta = 0.1:0.05:0.3                 % here "beta" = beta_1
    for alpha1 = 0.1:0.05:0.3
        for alpha0 = 0.1:0.05:0.3
            for betap = -0.03:0.01:0.03
                Wert = Phi(alpha0, alpha1, beta, betap, K, x0, Daten);
                if Wert < Minimum(2,4)
                    Minimum = [alpha0 alpha1 beta betap; 0 0 0 Wert];
                end
            end
        end
    end
end
erg = Minimum(1,:);                     % returns [alpha0 alpha1 beta betap]
end
```
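For readers without MATLAB, the quantities computed by `linsin`, `Phi` and `mSchaetzer` can be written out as formulas. The display below is read off directly from the code above; the notation $Y_i$ for `Daten(i)` and $g_j^{(l)}$ for the intermediate recursion values is ours, and whether `Phi` coincides term by term with the contrast functional $\Phi$ as defined in Section 5 should be checked against the main article.

$$m_{\alpha_0,\alpha_1,\beta_1,\beta_p}(x,y) = \alpha_0 + \beta_1 x + \alpha_1 y + \beta_p\big(\sin(2\pi x) + \cos(2\pi y)\big),$$

$$g_j^{(0)} = x_0,\qquad g_j^{(l)} = m_{\alpha_0,\alpha_1,\beta_1,\beta_p}\big(g_j^{(l-1)},\, Y_{j-K+l}\big),\quad l = 1,\dots,K,$$

$$\Phi(\alpha_0,\alpha_1,\beta_1,\beta_p) = \frac{1}{n-K-1}\sum_{j=K}^{n-2}\Big[\big(Y_{j+1} - g_j^{(K)}\big)^2 + \Big(Y_{j+2} - m_{\alpha_0,\alpha_1,\beta_1,\beta_p}\big(g_j^{(K)},\, Y_{j+1}\big)\Big)^2\Big].$$

`mSchaetzer` returns the minimizer of $\Phi$ over $(\beta_1,\alpha_1,\alpha_0)\in\{0.1, 0.15,\dots,0.3\}^3$ and $\beta_p\in\{-0.03,-0.02,\dots,0.03\}$, that is, over $5^3\cdot 7 = 875$ grid points (plus one initial evaluation at $(0.1, 0.1, 0.1, 0)$). Each evaluation of $\Phi$ costs $(n-K-1)(K+1)$ calls of `linsin`; because the comparison is strict, ties are resolved in favour of the first minimizer in loop order.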