* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download Bivariate Regression Analysis

Survey

Document related concepts

Transcript

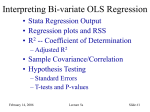

Week 4, 2007 Lecture 4

Slide #1: Interpreting Bi-variate OLS Regression
• Stata Regression Output
• Regression plots and RSS
• $R^2$ -- Coefficient of Determination
– Adjusted $R^2$
• Sample Covariance/Correlation
• Hypothesis Testing
– Standard Errors
– T-tests and P-values

Slide #2: Data
• Use the “caschool.dat” file
• Data description:
– CaliforniaTestScores.pdf
• Build a Stata do-file as you go
• Model:
– Test score = f(student/teacher ratio)

Slide #3: Stata Regression Model: Regressing Test Score on Student Teacher Ratio

    histogram str, percent normal

[Figure: histogram of str with normal overlay; x-axis: Student Teacher Ratio, y-axis: Percent]

    histogram testscr, percent normal

[Figure: histogram of testscr with normal overlay; x-axis: Test Score, y-axis: Percent]

Slide #4: Regression Output

    regress testscr str

          Source |       SS       df       MS              Number of obs =     420
    -------------+------------------------------           F(  1,   418) =   22.58
           Model |  7794.11004     1  7794.11004           Prob > F      =  0.0000
        Residual |  144315.484   418  345.252353           R-squared     =  0.0512
    -------------+------------------------------           Adj R-squared =  0.0490
           Total |  152109.594   419  363.030056           Root MSE      =  18.581

    ------------------------------------------------------------------------------
         testscr |      Coef.   Std. Err.      t    P>|t|                     Beta
    -------------+----------------------------------------------------------------
             str |  -2.279808   .4798256    -4.75   0.000                -.2263628
           _cons |    698.933   9.467491    73.82   0.000                        .
    ------------------------------------------------------------------------------

Slide #5: Regression Descriptive Statistics

    cor testscr str, means

        Variable |         Mean    Std. Dev.          Min          Max
    -------------+----------------------------------------------------
         testscr |     654.1565     19.05335       605.55       706.75
             str |     19.64043     1.891812           14         25.8

                 |  testscr      str
    -------------+------------------
         testscr |   1.0000
             str |  -0.2264   1.0000

Slide #6: Regression Plot

    twoway (scatter testscr str) (lfitci testscr str)

[Figure: scatter of testscr against str with fitted line; legend: 95% CI, Fitted values, testscr]

Slide #7: Measuring “Goodness of Fit”
• Root of Mean Squared Error (“Root MSE”)

$$s_e = \sqrt{\frac{RSS}{n-K}}, \quad \text{where } RSS = \sum e_i^2 \text{ and } K = \text{number of parameters}$$

– Measures spread around the regression line
• Coefficient of Determination ($R^2$)

$$ESS = \sum (\hat{Y}_i - \bar{Y})^2 \quad \text{(“model” or explained sum of squares)}$$

$$TSS = \sum (Y_i - \bar{Y})^2 \quad \text{(“total” sum of squares)}$$

$$R^2 = \frac{ESS}{TSS} \quad \text{and} \quad (1 - R^2) = \frac{RSS}{TSS} = \frac{\sum e_i^2}{\sum (Y_i - \bar{Y})^2}$$

Slide #8: Explaining $R^2$
For each observation $Y_i$, variation around the mean can be decomposed into that which is “explained” by the regression and that which is not.

[Figure: deviation of Y from its mean split into an explained deviation and an unexplained deviation; labels: Y, Ŷ]

Book terminology:
• TSS = $\sum$(all)$^2$
• RSS = $\sum$(unexplained)$^2$
• ESS = $\sum$(explained)$^2$

Stata terminology:
• Residual = $\sum$(unexplained)$^2$
• Model = $\sum$(explained)$^2$
• Total = $\sum$(all)$^2$

Slide #9: Sample Covariance & Correlation
• Sample covariance for a bivariate model is defined as:

$$s_{XY} = \frac{\sum (X_i - \bar{X})(Y_i - \bar{Y})}{n - 1}$$

• Sample correlations (r) “standardize” covariance by dividing by the product of the X and Y standard deviations:

$$r = \frac{s_{XY}}{s_X s_Y}$$

Sample correlations range from -1 (perfect negative relationship) to +1 (perfect positive relationship).

Slide #10: Standardized Regression Coefficients (aka “Beta Weights” or “Betas”)
• Formula:

$$b_1^* = b_1 \frac{s_X}{s_Y}$$

• In our example:

$$-2.28 \times \frac{1.892}{19.053} = -0.226$$

• Interpretation: the number of standard deviations by which Y is expected to change for a one-standard-deviation change in X.
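The goodness-of-fit and beta-weight formulas above can be checked by hand against the Stata output. The following is a minimal sketch in Python (not Stata), assuming only the sums of squares, coefficient, and standard deviations printed on Slides #4 and #5; the variable names are illustrative and not part of the lecture. The adjusted $R^2$ and F formulas used here are the standard ones for OLS and are not spelled out on the slides.

```python
import math

# Values copied from the regression output (Slide #4) and summary table (Slide #5).
n = 420                # number of observations
k = 2                  # estimated parameters: intercept b0 and slope b1
ess = 7794.11004       # "Model" sum of squares (ESS)
rss = 144315.484       # "Residual" sum of squares (RSS)
tss = ess + rss        # "Total" sum of squares (TSS)

# Root MSE: spread of the observations around the regression line.
root_mse = math.sqrt(rss / (n - k))

# Coefficient of determination and its degrees-of-freedom adjusted version.
r2 = ess / tss
adj_r2 = 1 - (1 - r2) * (n - 1) / (n - k)

# Overall F statistic: model mean square over residual mean square.
f_stat = (ess / (k - 1)) / (rss / (n - k))

# Standardized coefficient ("beta weight"): b1 * s_X / s_Y.
b1 = -2.279808
s_x = 1.891812         # std. dev. of str
s_y = 19.05335         # std. dev. of testscr
beta = b1 * s_x / s_y

print(f"Root MSE = {root_mse:.3f}")
print(f"R2       = {r2:.4f}")
print(f"Adj R2   = {adj_r2:.4f}")
print(f"F        = {f_stat:.2f}")
print(f"Beta     = {beta:.4f}")
```

In a bivariate model the beta weight equals the sample correlation r, so the value computed here should match the -0.2264 reported by `cor`, and its square should match $R^2$.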
Slide #11: Hypothesis Tests for Regression Coefficients
• For our model: $Y_i = 698.933 - 2.279808 X_i + e_i$
• Another sample of 420 observations would lead to different estimates for $b_0$ and $b_1$. If we drew many such samples, we’d get the sampling distribution of the estimates
• We need to estimate the sampling distribution (because we usually can’t see it) based on our sample size and variance

Slide #12: To do that we calculate $SE_b$s (Bivariate case only)

$$SE_{b_1} = \frac{s_e}{\sqrt{TSS_X}}, \quad \text{where } TSS_X = \sum (X_i - \bar{X})^2$$

$$SE_{b_0} = s_e \sqrt{\frac{1}{n} + \frac{\bar{X}^2}{TSS_X}}$$

Slide #13: Interpreting Standard Errors
• For our model:
– $b_0$ = 698.933, and $SE_{b_0}$ = 9.467
– $b_1$ = -2.28, and $SE_{b_1}$ = .4798

Assuming that we estimated the standard error correctly, we can identify how many standard errors our estimate is away from zero. The T-test reports the number of standard errors our estimate falls away from zero. Thus, the “T” for $b_1$ is -4.75 for our model, that is, 4.75 standard errors below zero. (rounding!)

[Figure: Estimated Sampling Distribution for $b_1$; labels: $b_1$ = -2.28, $b_1 - SE_{b_1}$ = -2.76, $b_1 + SE_{b_1}$ = -1.8, and 0 (which is 4.75 $SE_{b_1}$ “units” away from $b_1$)]

Slide #14: Classical Hypothesis Testing
Assume that $b_1$ is zero. What is the probability that your sample would have resulted in an estimate for $b_1$ that is 4.75 $SE_{b_1}$’s away from zero?

To find out, determine the probability mass of the estimated sampling distribution that falls more than 4.75 $SE_{b_1}$’s away from zero.

See Table 2, page 757, in Stock & Watson. It reports discrete “p-values”, given the sample size and t-values. Note the distinction between 1 and 2 sided tests.

In general, if the absolute value of the t-stat is above 2, the p-value will be <0.05 -- which is the conventional upper limit in a classical hypothesis test.

Note: in Stata-speak, a p-value is a “P>|t|”

[Figure: estimated sampling distribution under the null; labels: Assume that $b_1$ = 0.0 (null hypothesis); Estimated $b_1$ = -2.28 (working hypothesis)]

Slide #15: Coming up...
• For Next Week
– Use the caschool.dta dataset
– Run a model in Stata using Average Income (avginc) to predict Average Test Scores (testscr)
– Examine the univariate distributions of both variables and the residuals
• Walk through the entire interpretation
• Build a Stata do-file as you go
• For Next Week:
– Read Chapter 8 of Stock & Watson
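As a check on the standard-error formulas for the bivariate case and the t-statistic discussed earlier in the lecture, the following Python sketch (not Stata) rebuilds both standard errors from the summary statistics in the slides. The variable names are illustrative. Two assumptions are worth flagging: $TSS_X$ is recovered as $(n-1)s_X^2$ from the reported standard deviation of str, and the two-sided p-value uses a standard normal approximation via `math.erfc` rather than the exact t distribution with 418 degrees of freedom, so it is only approximate.

```python
import math

# Values copied from the Stata output and summary table in the slides.
n = 420
rss = 144315.484       # residual sum of squares
b1 = -2.279808         # estimated slope on str
x_bar = 19.64043       # mean of str
s_x = 1.891812         # std. dev. of str

# Root MSE (s_e) with K = 2 estimated parameters.
s_e = math.sqrt(rss / (n - 2))

# Assumption: TSS_X is recovered from the sample variance, TSS_X = (n - 1) * s_X^2.
tss_x = (n - 1) * s_x ** 2

# Standard errors for the bivariate case.
se_b1 = s_e / math.sqrt(tss_x)
se_b0 = s_e * math.sqrt(1 / n + x_bar ** 2 / tss_x)

# t-statistic: how many standard errors b1 lies from zero.
t_b1 = b1 / se_b1

# Approximate two-sided p-value using the standard normal distribution
# (an approximation to the t distribution with n - 2 degrees of freedom).
p_approx = math.erfc(abs(t_b1) / math.sqrt(2))

# One standard error either side of b1, as marked on the sampling-distribution figure.
lower, upper = b1 - se_b1, b1 + se_b1

print(f"SE(b1) = {se_b1:.4f}")
print(f"SE(b0) = {se_b0:.3f}")
print(f"t(b1)  = {t_b1:.2f}")
print(f"approx. two-sided p = {p_approx:.2g}")
print(f"b1 -/+ SE = {lower:.2f}, {upper:.2f}")
```

The approximate p-value is far below 0.05, consistent with the 0.000 that Stata prints under P>|t|.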