Agents-part1 - Dr Shahriar Bijani

Survey

Document related concepts

Wizard of Oz experiment wikipedia , lookup

Existential risk from artificial general intelligence wikipedia , lookup

Soar (cognitive architecture) wikipedia , lookup

Incomplete Nature wikipedia , lookup

Ecological interface design wikipedia , lookup

Ethics of artificial intelligence wikipedia , lookup

History of artificial intelligence wikipedia , lookup

Adaptive collaborative control wikipedia , lookup

Human–computer interaction wikipedia , lookup

Agent-based model in biology wikipedia , lookup

Agent-based model wikipedia , lookup

Embodied cognitive science wikipedia , lookup

Transcript

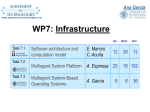

ARTIFICIAL INTELLIGENCE: INTELLIGENT AGENTS
Dr. Shahriar Bijani, Shahed University, Spring 2017

SLIDES’ REFERENCES
- M. Rovatsos, Agent-Based Systems, University of Edinburgh, 2016.
- S. Russell and P. Norvig, Artificial Intelligence: A Modern Approach, 3rd ed., Chapter 2, Prentice Hall, 2010.

OUTLINE
- Introduction
- Agent & Multi-agent Systems
- Agents and environments
- Rationality
- PEAS
- Environment types
- Agent types

NEW CHALLENGES FOR COMPUTER SYSTEMS
Traditional design problem: How can I build a system that produces the correct output given some input?
Modern-day design problem: How can I build a system that can operate independently on my behalf in a networked, distributed, large-scale environment in which it will need to interact with various other components?
Distributed systems in which different components have different goals and need to cooperate were not studied until recently.

WHAT IS AN AGENT? (1)
An agent is anything that can perceive its environment through sensors and act upon that environment through actuators (effectors).
- Human agent: eyes, ears, and other organs for sensors; hands, legs, mouth, and other body parts for actuators.
- Robotic agent: cameras and infrared range finders for sensors; various motors for actuators.
The agent can only influence the environment, not fully control it (e.g., because of sensor/actuator failure or nondeterminism).

WHAT IS AN AGENT? (2)
Definition from the agents community: An agent is a computer system that is situated in some environment and that is capable of autonomous action in this environment in order to meet its design objectives.
This adds a second dimension to the agent definition: the relationship between the agent and its designer/user.
- The agent is capable of independent action.
- The agent’s action is purposeful.

AGENT AUTONOMY
Autonomy is a prerequisite for:
- delegating complex tasks to agents;
- ensuring flexible action in unpredictable environments.
A system may be called autonomous if:
- it can learn and adapt;
- its behavior is determined by its own experience;
- it requires little help from the human user;
- we don’t have to tell it what to do step by step;
- it can choose its own goal and the way to achieve it;
- we don’t understand its internal workings.
Autonomy dilemma: how can we make the agent smart without losing control over it?

AGENTS AND ENVIRONMENTS
The agent function maps percept histories to actions: f: P* → A.
The agent program runs on the physical architecture to produce f:
agent = architecture + program

VACUUM-CLEANER WORLD
Percepts: location (A or B) and contents, e.g., [A, Dirty].
Actions: Left, Right, Suck, NoOp.

MULTIAGENT SYSTEMS (MAS)
Two fundamental ideas:
- Individual agents are capable of autonomous action to a certain extent (they don’t need to be told exactly what to do).
- These agents interact with each other in multiagent systems (and may represent users with different goals).
Foundational problems of multiagent systems research:
- The agent design problem: how should agents act to carry out their tasks?
- The society design problem: how should agents interact to carry out their tasks?
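The agent function f: P* → A and the vacuum-cleaner world can be made concrete in a few lines. The following is a minimal Python sketch in the spirit of Russell and Norvig's Chapter 2, not code from the slides; the type aliases, the function names, and the NoOp fallback for histories missing from the table are assumptions made for illustration. It contrasts an agent program that stores the agent function as an explicit table over percept histories with a reflex program that looks only at the current percept.

```python
from typing import Callable, Dict, List, Tuple

# A percept in the two-square vacuum world: (location, status), e.g. ("A", "Dirty").
Percept = Tuple[str, str]
# One of "Left", "Right", "Suck", "NoOp".
Action = str


def make_table_driven_agent(
    table: Dict[Tuple[Percept, ...], Action]
) -> Callable[[Percept], Action]:
    """Return an agent program that implements f: P* -> A literally:
    it remembers the whole percept history and looks it up in a table."""
    history: List[Percept] = []

    def program(percept: Percept) -> Action:
        history.append(percept)
        # Assumption: histories missing from this partial table map to NoOp.
        return table.get(tuple(history), "NoOp")

    return program


def reflex_vacuum_agent(percept: Percept) -> Action:
    """Agent program that looks only at the current percept, not the history."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    return "Right" if location == "A" else "Left"


# Usage: a tiny hand-written (hypothetical) table covering two histories.
table = {
    (("A", "Dirty"),): "Suck",
    (("A", "Dirty"), ("A", "Clean")): "Right",
}
agent = make_table_driven_agent(table)
print(agent(("A", "Dirty")))                # Suck
print(agent(("A", "Clean")))                # Right
print(reflex_vacuum_agent(("B", "Clean")))  # Left
```

The table-driven version mirrors the definition of f directly, but it needs one entry for every possible percept history, so the table grows without bound as the agent lives longer. That is why practical agent programs, like the reflex rule, compute an action from the percepts instead of storing one per history.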
APPLICATIONS OF MULTI-AGENT SYSTEMS
Two types of agent applications:
- distributed systems (agents as processing nodes);
- personal software assistants (agents aiding a user).
Agents have been applied to various application areas:
- workflow/business process management
- distributed sensing
- information retrieval and management
- electronic commerce
- human-computer interfaces
- virtual environments
- social simulation

RATIONAL AGENTS
An agent should try to “do the right thing”, based on what it can perceive and the actions it can perform. The “right action” is the one that will cause the agent to be most successful.
Performance measure: an objective criterion for the success of an agent’s behavior. E.g., for a vacuum-cleaner agent: amount of dirt cleaned up, time taken, electricity consumed, noise generated, etc.

RATIONAL AGENTS
Rational agent: for each possible percept sequence, a rational agent should select an action that is expected to maximize its performance measure, given the evidence provided by the percept sequence and whatever built-in knowledge the agent has.

RATIONAL AGENTS
Rationality is distinct from omniscience (knowing everything): a rational agent maximizes expected performance given what it has perceived, not the actual outcome, which it cannot know in advance.
Agents can perform actions in order to modify future percepts so as to obtain useful information (information gathering, exploration).

WHAT IS AGENT TECHNOLOGY?
- Agents as a software engineering paradigm: interaction is the most important aspect of complex software systems, and agents are ideal for loosely coupled “black-box” components.
- Agents as a tool for understanding human societies: human society is very complex, so computer simulation can be useful. This has given rise to the field of (agent-based) social simulation.
- Agents vs. distributed systems: there is a long tradition of distributed systems research, but MAS are not simply distributed systems, because their components may have different goals.
- Agents vs. economics/game theory: distributed rational decision making has been studied extensively in economics, and game theory is very popular; this approach has many strengths but also faces objections.

AGENTS VS AI
Agents grew out of “distributed” AI. There is much debate over whether MAS is a subfield of AI or vice versa.
AI is mostly concerned with the building blocks of intelligence: reasoning and problem-solving, planning and learning, perception and action.
The agents field is more concerned with:
- combining these components (this may mean we have to solve all the problems of AI, but agents can also be built without any AI);
- social interaction, which has mostly been ignored by standard AI (and is an important part of human intelligence).
Agents are largely about integration (of abilities in one agent, or of agents in one environment).
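The idea of a performance measure as an objective criterion, and of rationality as maximizing it, can be tested by simulation: the score is computed from the sequence of environment states, not from the agent's own opinion of how it did. The Python sketch below is hypothetical, not from the slides. It assumes a two-square vacuum world in which dirt never reappears, scores +1 per clean square per time step (the measure Russell and Norvig use for this world), and adds an assumed `move_cost` penalty per Left/Right move as a stand-in for electricity consumed. The names `run_vacuum_world` and `make_model_based_agent` are illustrative only.

```python
from typing import Callable, Dict, Set, Tuple

Percept = Tuple[str, str]   # (location, status), e.g. ("A", "Dirty")
Action = str                # "Left", "Right", "Suck" or "NoOp"
AgentProgram = Callable[[Percept], Action]


def reflex_vacuum_agent(percept: Percept) -> Action:
    """Reacts to the current percept only, so it keeps moving once all squares are clean."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    return "Right" if location == "A" else "Left"


def make_model_based_agent() -> AgentProgram:
    """Hypothetical agent that remembers which squares it has seen cleaned
    and stops moving (NoOp) once it believes both are clean."""
    known_clean: Set[str] = set()

    def program(percept: Percept) -> Action:
        location, status = percept
        # Assumes Suck always works, so the current square is clean afterwards.
        known_clean.add(location)
        if status == "Dirty":
            return "Suck"
        if known_clean == {"A", "B"}:
            return "NoOp"
        return "Right" if location == "A" else "Left"

    return program


def run_vacuum_world(agent: AgentProgram, dirty: Dict[str, bool],
                     location: str = "A", steps: int = 10,
                     move_cost: float = 0.0) -> float:
    """Simulate the two-square world and return the performance measure:
    +1 per clean square after each step, minus move_cost per Left/Right move."""
    dirty = dict(dirty)  # copy so the caller's initial state is not modified
    score = 0.0
    for _ in range(steps):
        percept = (location, "Dirty" if dirty[location] else "Clean")
        action = agent(percept)
        if action == "Suck":
            dirty[location] = False
        elif action in ("Left", "Right"):
            location = "A" if action == "Left" else "B"
            score -= move_cost
        # NoOp leaves the world unchanged; dirt never reappears in this sketch.
        score += sum(1 for square in ("A", "B") if not dirty[square])
    return score


start = {"A": True, "B": True}
print(run_vacuum_world(reflex_vacuum_agent, start))                      # 18.0
print(run_vacuum_world(make_model_based_agent(), start))                 # 18.0
print(run_vacuum_world(reflex_vacuum_agent, start, move_cost=0.5))       # 14.0
print(run_vacuum_world(make_model_based_agent(), start, move_cost=0.5))  # 17.5
```

With `move_cost=0` both agents score 18 over 10 steps. With `move_cost=0.5` the reflex agent, which keeps shuttling between A and B after both are clean, drops to 14, while the agent that uses its percept history to stop moving scores 17.5. Changing the performance measure changes which agent behaves more rationally, which is why the measure has to be fixed before agents are compared.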