* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download chapter 8—sampling distributions

Survey

Document related concepts

Transcript

Chapter 8--Sampling Distributions.Doc

STATISTICS 301—APPLIED STATISTICS, Statistics for Engineers and Scientists, Walpole, Myers, Myers, and Ye, Prentice Hall

Goal:

We next investigate some sample statistics (eg X --the Sample Average and p̂ --the

Sample Proportion or X1 - X2 and pˆ1 - pˆ2 ) as Random Variables and further investigate

the distributions of these random variables. Lastly we use this information to

“ESTIMATE” a population parameter.

Consider the first few paragraphs from an article in the New York Times.

Trends: Halloween, for Skinnier Skeletons

By JOHN O'NEIL

Published: October 21, 2003

Trick-or-treaters can be satisfied with something other than candy, say researchers who conducted an

experiment last Halloween that arose from concern about the nation's obesity epidemic.

In the study, test households in five Connecticut neighborhoods offered children two bowls: one with

lollipops or fruit candy and one containing small, inexpensive Halloween toys, like plastic bugs that glow

in the dark.

Of the 283 children from 3 to 14 whose reactions were tallied, 148 chose candy and 135 toys; the

difference was statistically insignificant, the researchers reported in The Journal of Nutrition

Education and Behavior in the July-August issue.

Consider the following questions:

1. What is the population?

2. What is the parameter?

3. What is the sample?

4. What is the statistic?

5. Can we say anything about the theoretical “distribution” of the statistic?

So our goal is two-fold: Determine the distribution of statistics and then use this

information to provide information about a population parameter.

D:\582745739.doc

5/7/2017

1

Chapter 9—Estimation.Doc

STATISTICS 301—APPLIED STATISTICS, Statistics for Engineers and Scientists, Walpole, Myers, Myers, and Ye, Prentice Hall

Goal:

In this section we will investigate the concept of “Estimation” in which our goal is to use

sample information (assumed to be a random sample from the population of interest) to

arrive at a reasonable guess of a population parameter. Estimation is done in two ways—

point estimation (or single value) and interval estimation (an interval or range of likely

values). We will tackle POINT ESTIMATION at this point and leave INTERVAL

ESTIMATION to the next chapter.

Background

We assume that we are interested in one (or more) population parameter(s), such as the

mean (), the variance (2), the standard deviation (), the median ( μ ), or proportion (p).

In every case we assume the parameter is unknown and the population is so large that it

precludes our measuring every element of the population to obtain the parameter. So we

take a random sample from the population, of size n, and calculate the appropriate sample

statistic.

POINT ESTIMATION

Below we provide a table with each of the population parameters we have discussed along

with the corresponding point estimate or sample statistic of that parameter.

Population

Parameter

μ

Estimator

Estimate

X

x

σ2

S2

σ

S

# of obs with characteristic

ˆ

p=

total

p

D:\582745739.doc

s2 =

1

(xi -x)2

n-1

s = s2

# of obs with characteristic

ˆ

p=

total

5/7/2017

2

EXAMPLES

Miami Undergraduates and Graduate School Plans

Miami’s Graduate Dean contacts me about the percentage of students who plan on

pursuing a graduate degree. Using the information that I have collected on STA 301

students, I tell the Dean of Miami’s Graduate School that of the 123 students I

have surveyed 74 indicated they plan to attend graduate school. Hence we would

estimate the proportion of MU undergrads that plan to attend graduate school as

74

pˆ =

= 60.2% .

123

Underweight Milky Way Candy Bars

Recall that we found 20 of 85 candy bars weighed less than Milky Way’s claim that

none should weigh less than 58.1 gms. Hence we would estimate the proportion of

19

= 22.4% , or nearly one quarter!

underweight Milky Ways to be pˆ =

85

62.2

61.6

61.3

58.6

60.5

59.1

57.7

59.6

64.5

57.2

61.9

61.3

57.2

58.1

60.4

57.4

58.4

60.3

60.1

58.4

56.8

59.7

56.0

62.1

60.7

59.4

58.5

58.1

62.4

60.2

59.6

60.0

60.0

60.1

57.0

57.1

60.5

58.3

58.2

60.5

57.5

58.5

61.5

61.3

57.1

57.7

63.4

57.9

58.3

64.6

59.2

58.7

53.2

59.5

55.3

59.4

61.6

59.7

58.6

58.7

59.1

59.7

59.8

59.5

60.7

61.5

61.9

60.6

60.6

59.7

59.7

59.1

59.9

58.8

57.5

57.7

59.7

54.1

57.4

58.4

60.0

60.9

60.8

60.5 56.1

“High School Mathematics Course-Taking by Gender and Ethnicity,” American

Educational Research Journal, Fall 1998, Volume 35, Number 3, pages 497-514.

In this example one of the goals was to determine the mean number of Carnegie

units earned by high-school graduates in seven categories of mathematics courses.

Among all students in the study ( the sample size was 21,784 ), the average number

of math CU’s earned was x = 3.11. Thus we would estimate the mean number of math

CU’s earned by all high-school graduates is 3.11.

While not part of the question or purpose of the study, the authors also report the

sample standard deviation of the number of math CU’s earned, s = 0.93. This is an

estimate the population standard deviation (σ) of the number of math CU’s earned

by HS students.

D:\582745739.doc

5/7/2017

3

METHODS OF ESTIMATION

GOAL: Given a random sample (ie the X1, X2, …, Xn) from a population with mean = popln and

variance = 2popln,

HOW DO WE MANIPULATE THE X’s SO THAT WE ARRIVE AT A “GOOD” GUESS OF THE

POPULATION PARAMETER OF INTEREST? That is:

Sample

X1

X2

.

.

.

Xn

For example, if our goal is to estimate the population mean, popln, what function of the X’s

do we use to arrive at our ESTIMATOR of popln?

Or what function of the X’s do we use to arrive at our ESTIMATOR of 2popln?

DIFFERENT METHODS OF ESTIMATION

There are many different methods of estimation and all yield the function of the X’s that is

used to estimate a population parameter. The three most commonly used methods are:

1. Method of Least Squares Estimation (LSE): you’ll see this used in the regression

and design of experiments courses (STA 463 and 466, respectively).

2. Method of Maximum Likelihood Estimation (MLE): this method will be seen in STA

401 and other more advanced statistical theory classes.

3. Method of Moments: to be seen in STA 401 and infrequently otherwise.

We mention these methods in passing; we will assume for the remainder of this section that

the function and statistic is just given to us.

D:\582745739.doc

5/7/2017

4

PROPERTIES OF ESTIMATORS

We started this section with the goal of obtaining “good” guesses or estimates of population

parameters. There are two main criteria that determine “good” estimators and estimates

and we illustrate these criteria in the following pictures.

In the following “target” picture, think of the bulls-eye as being the “true” or actual

population parameter value and the “hits” on the target as being observations from the

distribution of a statistic used to estimate the parameter. And in the second picture, an

approximation to the distribution of various statistics all estimators of a parameter is given.

In both pictures, the idea of Unbiased and Best Estimators is illustrated and we give the

following definitions:

1. Unbiased Estimators are estimators whose expected value equals the parameter of

interest, so that E [ Estimator ] = parameter.

2. Best Estimators are those that have the smallest variance among a set of

estimators.

Summary of Estimators

1. Since E [ X ] = popln, X is an unbiased estimator of the popln mean, popln.

2. Since E[ p̂ ] = p, p̂ is an unbiased estimator of the popln proportion, p.

D:\582745739.doc

5/7/2017

5

In general, if we let ̂ be a sample statistic, we will show that:

1. ̂ is a Random Variable and hence

2. ̂ has a distribution consisting of i) Center = Mean of the RV = ̂ = E[ ̂ ],

ii) Spread = Variance of RV = 2̂ = E[ ( ̂ - ̂ )2 ], and

iii) Shape = Family or Kind of RV .

Recall that Families or Kinds of RV will fall into

one of the distributions that we have

encountered already, namely:

All Random Variables

Discrete

Binomial

Continuous

Normal

Finally recall some properties of functions of

random variables, namely, sums of linear

combinations of random variables.

If X and Y are two INDEPENDENT random

variables with means and variances, X and Y

and 2X and 2Y , respectively, and let a, b, and

c be constants, then we know that:

Chi-Square

T

F

1. The Mean of W (= aX + bY + c) = W = E [ W ] = aX + bY + c ALWAYS!!!

2. The Variance W = V(aX + bY + c) = 2W = a22X + b22Y ONLY IF X AND Y INDEP!!!

3. The Mean of W (= X ± Y) = W = E [ W ] = E [ X ± Y ] = X ± Y ALWAYS!!!

4. The Variance W (= X ± Y) = 2W = V[ W ] = V[ X ± Y ] = 2X + 2Y ONLY IF X & Y INDEP!

D:\582745739.doc

5/7/2017

6

Now consider the following experiment. Take a random sample of size n from a population of

measurements.

Population

Population of

measurements

Distn of the popln is:

popln

2popln

Shape

Sample

Random

Sample

X1 = first sample value

X2 = first sample value

.

.

.

Xn = first sample value

Some facts!

1. Every sample statistic is a function of X1, X2, …, Xn. Later we’ll briefly discuss this.

2. The values X1, X2, …, Xn are random variables. Why?

3. The random variables X1, X2, …, Xn are independent random variables. Why?

4. Since the X1, X2, …, Xn are random variables, they have a distribution (Center, Spread,

and Shape). It’s easy to “see” that the distribution of X1 is identical to the population

distribution and hence: Center of X1: popln

Spread of X1: 2popln

Shape of X1: Popln Shape

5. Less easy to see, but still true is that each of the X’s has the same distribution as the

population. This results in the following crucial theorem.

Thm: If X1, X2, …, Xn are a random sample from a population with mean = popln,

variance = 2popln, and some shape, then the Xi’s are said to independent and identically

distributed. That is, the Xi’s are i.i.d. (popln, 2popln) with same shape as population.

D:\582745739.doc

5/7/2017

7

NOTATION AND TERMINOLOGY

We will distinguish between ESTIMATORS and ESTIMATES. Recall that the X’s, the

random sample, are random variables. They are random quantities! The values of these X’s,

x’s, are the values that the random variables take on and are constants.

Whereas the X’s are random variables and hence have distributions, the x’s are constants

and DO NOT HAVE A DISTRIBUTION!

Hence we have the following definitions:

Defn:

An ESTIMATOR is a function of the random sample values, X’s, and is a random

variable with a distribution.

Defn:

An ESTIMATE is a value that the estimator can take on and hence is a single

number.

COMMON ESTIMATORS (aka SAMPLE STATISTICS)

Below are the most commonly used ESTIMATORS and ESTIMATES of population

parameters of most interest.

Population

Parameter

μ

σ2

σ

p

Estimator

(aka Sample Statistic)

1

1

X = (X1 + X2 +...+ Xn )= Xi

n

n

1

S2 =

(Xi -X)2

n-1

Estimate

1

1

x = (x1 + x2 +...+ xn )= xi

n

n

1

s2 =

(xi -x)2

n-1

S=

S2

s = s2

# of obs with characteristic 1

# of obs with characteristic 1

ˆ

ˆ

p=

= Xi p=

= xi

total

n

total

n

Median

X = middle X

x = middle x

Range

R = Max X – Min X

r = Max x – Min x

D:\582745739.doc

5/7/2017

8

Sampling Distributions of Sample Statistics

Population

Parameter of

Interest

popln

Medianpopln

2popln

popln

p

Sample

Random

Sample

Method

Corresponding

Statistic

X

M

S2

S

p̂

Statistical Inference

What does the sample statistic (eg X ) tells us

about the population parameter (eg )?

Foundation (Theory behind) Statistical Inference

Probability, Random Variables, Families of RV’s, and

SAMPLING DISTRIBUTIONS

D:\582745739.doc

5/7/2017

9

Sampling Distributions of Sample Statistics

Defn: The Sampling Distribution of a Statistic is the distribution of statistic (the random

variable) if every possible random sample of the same size were obtained from the

population.

Recall that Distributions have CENTERS, SPREADS, and SHAPES, hence:

SAMPLING DISTRIBUTION will have

CENTER

SPREAD

SHAPE

Typically only two things determine the distribution of a sample statistic:

1. sample size and

2. distribution of the population from which the sample came from.

The sampling distribution for any of the sample statistics that we have seen can be

determined. Thus we can find the sampling distribution of:

CENTER =

The sample average, X

SPREAD =

SHAPE =

2

The sample variance, S .

The sample standard deviation, S.

The sample proportion, p̂ .

In each of these cases, the sampling distribution of the sample statistic depends upon

1. The sample size

and

2. The distribution of the population.

In this class we will only investigate the sampling distribution of the sample average and

sample proportion in depth.

D:\582745739.doc

5/7/2017

10

NOTES AND COMMENTS ABOUT SAMPLING DISTRIBUTIONS

1. Since a Sampling Distribution is a distribution, it has a Center, Spread, and Shape.

While we had many ways of describing Center and Spread in general, we will use the

MEAN to define the Center and VARIANCE or STANDARD DEVIATION to define the

Spread for Sampling Distributions.

2. The Standard Deviation (SD for short) of the Sampling Distribution of a statistic is

also known as the Standard Error (SE for short) of the Statistic.

Interpretation: The Standard Error of a Statistic is a measure of how variable the

statistic is.

Example

Suppose a research paper reports that the sample

of 56 parents of 7th graders in a particular school

system was taken. It also reports that the

incomes of parents had a sample average income of

$45,000 with a standard deviation of $12,000. It

also reports that the Standard Error of the

Average was $1,600. Pictorially:

Here’s what this means.

1. The $12,000 standard deviation is a measure of how variable the collective 56

incomes were. This is a pretty large spread.

2. The $1,600 standard error of the average is interpreted as if we were to take

repeated random samples of 56 parents, measure their incomes, calculate the

sample average in each case, the standard deviation for all of the average

incomes that we calculated would be approximately $1,600.

The Standard Error of the (sample) Average is also more commonly referred to as the

Standard Error of the Mean. Again, poor choice of words, but you’ll see it routinely

used. Below is an example of Minitab descriptive statistics output that uses these

terms.

Descriptive Statistics: Score

Variable

Score

N

121

Mean

90.339

Median

92.000

TrMean

91.138

Variable

Score

Minimum

36.000

Maximum

99.000

Q1

88.000

Q3

94.000

D:\582745739.doc

StDev

6.947

SE Mean

0.632

5/7/2017

11

HOW ARE SAMPLING DISTRIBUTIONS DETERMINED?

Sampling distributions are a statement about the distribution of every possible value of a

statistic. That is, suppose we wanted to determine the sampling distribution of the sample

average. It might seem that we would have to take every sample of size n from the

population, observe, then summarize these values. That is:

RS1

S1

RS2

RS3

S2

S3

RS

S

The most surprising of all is the fact that to determine the sampling distribution of any

statistic requires ONLY ONE RANDOM SAMPLE, and contrary to first thoughts, NOT

MANY RANDOM SAMPLES!!!!

In fact, using statistical theory, we can determine what the distribution of any statistic

will be base on two things:

1. The size of the random sample only, and

2. Facts and characteristics OR assumptions about the population.

Lastly, we note that we will say or assume NOTHING ABOUT THE POPLN SIZE!!!

D:\582745739.doc

5/7/2017

12

SAMPLING DISTRIBUTION OF THE SAMPLE AVERAGE— X

Background/Assumptions—We will assume the following:

1. We have a population of measurements with a mean, popln, and standard deviation, popln.

2. We have a random sample of size n ( X1, X2, …, Xn ) from this population.

Facts—Here’s what we know:

1

1

1. Defn: The Sample Average is X = (X1 + X2 +...+ Xn )= Xi .

n

n

2. Xi = E [ Xi ] = popln

3. 2Xi = Var [ Xi ] = 2popln

4. The Xi’s are independent random variables.

Facts concerning Expectation Function.

5. E [ aX + bY + c ] = aX + bY + c

6. Variance of [ aX + bY + c ] = a22X + b22Y if X and Y are independent.

Using these facts:

Thm: If X1, X2, …, Xn are a RS from a population with mean, popln, and variance, 2popln, then

1. X = E [ X ] = popln and

2. = Var [ X ] =

2

X

Pf:

2

σpopln

n

.

1. E [ X ] =

2. Var [ X ] =

D:\582745739.doc

5/7/2017

13

Example #1

Suppose we take a random sample of 10 from a population that has a mean of 4 and a

variance of 5. We now know that:

n=

popln =

2popln =

X has a mean of 4 and a variance of

5

= ½ = 0.5.

10

Implications

1. The rather small variance of X , only 0.5, ( the standard deviation of X or standard

error of X is likewise small at 0.71) implies that the random variable X has a very

small spread and hence its values are very tightly grouped together.

The important implication is that no matter what value we obtain in our sample, it’s

not very far away from the center or mean of X or popln. As a result we could

conclude that our actual sample value of X will probably be very close to the

population mean, popln value of 4.

2. The other important point to take from the theorem is that it says nothing about

the shape of the population. The result is true FOR ANY POPULATION OF ANY

SHAPE!

3. What is the SE of X ?

Could you draw a picture of the distribution of X for this example? What’s missing?

D:\582745739.doc

5/7/2017

14

Shape

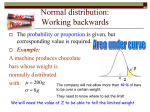

One missing detail about the distribution of X that is not addressed in the theorem is the

shape of the distribution. Recall that the shape of the distribution is equivalent to stating

the “kind” of random variable or distribution. To this end we have the following three

results that we combine into one theorem on the Shape of X .

Thm: If X1, X2, …, Xn are a RS from a population with mean = popln, and variance = 2popln, then

1. If the population is Normally distributed then X is also Normally distributed.

2. If the population is Normal and 2popln, is unknown then

X popln

S

= T(n-1) .

n

3. If n is large then X is approximately Normally distributed!

Implications

1. The first result is important since we note that if we sample from a Normal

population, then the sample average is exactly normally distributed, regardless of

the sample size.

As a follow-up, we also know that: If the population is approx Normal, then X ≈

Normal regardless of sample size.

2. In the second result we see our first encounter with another of the continuous

distributions, the T distribution.

3. The last result of the Theorem is the most important theorem in statistics and is

known as the CENTRAL LIMIT THEOREM. We restate here to emphasize it’s

importance!

CENTRAL LIMIT THEOREM: Let X be the sample average based on a large

random sample, then X ≈ Normal!

What makes this result so important is that it says nothing about the shape of the

population—NOTHING! The only requirement is that we have a “large” sample size.

But then we need to ask what’s large? It turns out that n > 30 will yield acceptable

results in most instances. However, if the population is know to be terribly skewed

(in either direction) then a larger sample size will be required; how large depends on

how skewed the population is.

D:\582745739.doc

5/7/2017

15

Example #2

Suppose X is the sample average lifetime ( in hours ) based on a random sample of n = 16

light bulbs from a population of light bulbs that is approximately Normally distributed with

a mean of 800 hours and a standard deviation of 40 hours.

What would a picture of the population of lifetimes look like?

What is the probability that a random chosen light bulb from the population lasts between

760 and 840 hours?

What is the distribution of X for this example? What does the picture look like? What

is the SE of X ?

What is the probability that our sample average is between 760 and 840 hours?

D:\582745739.doc

5/7/2017

16

Example #3

Suppose we take a random sample of 40 college students and obtain their cell phone usage

records for the last month and determine the # of minutes each student used that month.

What are the chances that our sample average is within ¼ a population standard deviation

of the population mean time spent on a cell phone by college students?

What do we know about X ?

What does it mean to say “our sample average is within ¼ a population standard deviation

of the population mean?”

What are the chances that our sample average is within ¼ a population standard deviation

of the population mean time spent on a cell phone by college students?

As a final question, if an event has an 88% chance of happening, would you guess that it

WOULD or WOULD NOT happen? Why?

Now suppose we actually take our sample of 40 college students and find that

x 749.5min s . What could you conclude about the mean time that college students spend

on their cell phones?

D:\582745739.doc

5/7/2017

17

NOTES AND COMMENTS

1. The sample size need only be greater than 30 for this result to hold. IT DOES NOT

MATTER HOW LARGE OR SMALL THE POPULATION IS.

2. For the Sample Average to be approximately Normal, we only need the sample size to

be bigger than 30. We do not need to make any assumption about the shape of the

population!

Example #4

Suppose we take a random sample of 150 students and measure the IQ of each student.

The population from which we selected the 150 students have a mean IQ of 115 and a

standard deviation of 18 or popln = 115 and popln = 18. Then, since the sample size of 150 is

> 30, we know the distribution of the average IQ in our sample is Normally Distributed

σpopln

18

18

with a mean of 115 and a standard deviation of

=

=

=1.4697.

n

150 12.1474

What does the picture of the distribution of X look like? What is the SE of X ?

If you were to chose one value, at random, from this population of X values, where would

it be likely to lie? Why?

D:\582745739.doc

5/7/2017

18

SAMPLING DISTRIBUTION OF THE SAMPLE PROPORTION— p̂

Background/Assumptions We will assume the following:

1. We have a population and in the population the proportion of items with a given

characteristic of interest is p.

2. We have a random sample of size n ( X1, X2, …, Xn ) from this population, where each of

the Xi is 1 if the ith observation has the characteristic and 0 if not.

Facts Here’s what we know:

1. Recall that the Binomial Experiment was an experiment with n trials, success or failure

at each trial, a constant probability of success at each trial, and independent outcomes

at each trial.

2. Recall that a Binomial random variable was the number of successes in a Binomial

Experiment.

3. Recall that if X was Binomial with parameter n and p, then X = np and 2X = np(1-p).

4. Note that if we let X be the sum of the Xi’s, then we have a Binomial Experiment and

we have a Binomial random variable. Hence

X = Sum of the Xi’s = Bin (n, p).

5. Finally note that since the Sample Proportion becomes

1

1

# with the characteristic

pˆ = (X1 +X2 +...+Xn )= Xi =

n

n

n

pˆ =

D:\582745739.doc

X

.

n

5/7/2017

19

Thm: If X1, X2, …, Xn are a random sample from a population with proportion p, then

The Mean of p̂ = E[ p̂ ] = p & The Variance of p̂ = Var[ p̂ ] =

Pf:

p(1-p)

n

1. The Mean of p̂ = E[ p̂ ] =

2. The Variance of p̂ = Var[ p̂ ] =

Unfortunately this theorem does not say anything about the “shape” of the distribution of p̂ .

However, the following theorem does!

Thm: If X1, X2, …, Xn are a random sample from a population with proportion p, then

p̂ ≈ Normal ( p,

if np > 5 AND n(1-p) > 5

D:\582745739.doc

p(1-p)

)

n

or # successes in sample > 5 AND # failures in sample > 5

5/7/2017

20

Implication

If the sample size is “large,” then the Sampling Distribution of the Sample Proportion

p̂ will have an approximately Normal Distribution with a mean equal to the proportion in

the population, p, and standard deviation equal to the population standard deviation divided

by the square root of the sample size, or

p(1-p)

.

n

What does the distribution of p̂ look like?

Example #1

A random sample of 1,108 adults yielded 78% that said they believe there is a heaven.

Letting pH be the proportion of adults who believe in heaven, p̂H is the 78%.

What can we say about the distribution of p̂H in this example? What do we need to check?

What is the probability that p̂H is within 3% of pH?

D:\582745739.doc

5/7/2017

21

Example #2

Consider the survey from class and whether a student drinks or not. Let pND be the

proportion of MU students who do not drink alcohol.

The results from our class of n = __ who

filled out the survey indicated that only

__ does not drink. Letting p̂ND 1 be the

sample proportion of students who do not

drink out of __, this distribution of p̂ND 1

is:

Can you draw a picture of it?

Now suppose we use all the other STA

301 students that I have survey

information on. I have information on 118

students (the six from this class are NOT

part of the 124). Of this 124, 19

indicated they do not drink. What is the

distribution of this sample proportion,

p̂ND 2 ?

Can you draw a picture of it?

How do these two distributions compare? What are the differences between them?

Why do they differ?

D:\582745739.doc

5/7/2017

22

Interesting fact about the SE of p̂

Recall that the Standard Error of a statistic is nothing more than the standard deviation

of the statistic. Also recall that the standard deviation of the sample proportion is given

by

p(1-p)

.

n

Consider the numerator of this expression and plot this function versus p.

p(1-p)

p

Hence we can conclude that the maximum that p(1-p) can be is 0.25. Hence without

knowing what the value of the population proportion actually is, we can argue that the

standard deviation of p̂ is less than or equal to

0.25

0.25 0.5

1

.

n

n

n 2 n

Hence a conservative estimate of the SD or SE of any p̂ is

1

2 n

.

So if n is large, we can conclude the p̂ ≈

D:\582745739.doc

5/7/2017

23

ASSESSING THE NORMALITY OF A POPULATION

Goal:

We consider as a quick aside of whether a population is Normally distributed or not.

The importance of this question should be obvious, since many of the techniques we

have seen are based on the premise that the population is Normal.

Normality was required in most of the inferences about a single population mean or the

difference of two means. It is also required for inferences about a population variance

and in the Regression and Analysis of Variance methods that we will see.

The basic premise of checking Normality of a population is that a random sample should

exhibit the same characteristics of the population. In particular, if the population is Normal,

then the sample should have “Normal” characteristics. In fact, the sample should have a

“bell-shape.”

Illustration: Consider the following histograms of data. The first two data sets are the soda

pop two-liter contents data and the Lithium Ion cell phone battery data. Based on the

histograms would you be willing to conclude the population is Normal or approximately Normal?

How about the last one?

Histogram of Pop Contents

Histogram of Lithium Ion Batteries:

12

2.0

10

1.5

Frequency

Frequency

8

1.0

6

4

0.5

2

0.0

1.6

1.8

2.0

Pop Contents

2.2

2.4

0

10

12

14

Lithium Ion Batteries:

16

18

Histogram of ShawnGreggResids

40

Frequency

30

20

10

0

-15

D:\582745739.doc

-10

-5

0

5

10

ShawnGreggResids

15

20

5/7/2017

24

METHODS OF ASSESSING THE NORMALITY OF A POPULATION

We will consider three methods of determining whether a population can be considered to

be Normally distributed. Two of the methods are graphical in nature and the last is a

hypothesis test.

In reality I typically use the graphical methods when the sample size is large (over 50 and

usually in the hundreds) and the testing method when the sample is smallish (less than 30).

The three methods are:

1. A histogram or stem and leaf of the data and if the population is Normal, then the

histogram should also be Normal or bell-shaped.

2. A Normal Probability Plot is a plot that will yield a straight line if the data came from

a Normal population.

3. A test in which Ho: The data came from a Normal population and HA: The data came

from a Non-Normal population.

The following examples will illustrate all three methods.

D:\582745739.doc

5/7/2017

25

EXAMPLE #1: Consider the Heart Rates (based on the 30sec measurement) from the

class survey for STA 261 sections in Winter ’98, Fall ’99, Fall ’00, & Fall ’02. Below are

the graphical descriptive statistics and the Normal Probability Plot for these data.

Descriptive Statistics

Variable: HR2

Anderson-Darling Normality Test

A-Squared:

P-Value:

30

50

70

90

110

130

150

95% Confidence Interval for Mu

1.285

0.002

Mean

StDev

Variance

Skewness

Kurtosis

N

71.8182

13.2895

176.610

1.29951

7.83947

231

Minimum

1st Quartile

Median

3rd Quartile

Maximum

30.000

64.000

72.000

80.000

160.000

95% Confidence Interval for Mu

70.095

69.8

70.8

71.8

72.8

73.8

73.541

95% Confidence Interval for Sigma

12.178

14.626

95% Confidence Interval for Median

95% Confidence Interval for Median

70.000

72.000

Normal Probability Plot for HR2

ML Estimates - 95% CI

ML Estimates

Mean 71.8182

99

StDev 13.2607

Percent

95

90

Goodness of Fit

80

70

60

50

40

30

20

AD*

1.37

10

5

1

50

100

150

Data

D:\582745739.doc

5/7/2017

26

EXAMPLE #2: This data is the parking ticket from the STA 261 Fall 2002 class.

Descriptive Statistics

Variable: PTickets

Anderson-Darling Normality Test

0

200

400

600

800

95% Confidence Interval for Mu

A-Squared:

P-Value:

13.060

0.000

Mean

StDev

Variance

Skewness

Kurtosis

N

60.517

150.128

22538.3

3.13743

10.9226

58

Minimum

1st Quartile

Median

3rd Quartile

Maximum

0.000

0.000

0.000

0.000

800.000

95% Confidence Interval for Mu

21.043

0

50

100

99.991

95% Confidence Interval for Sigma

126.919

183.803

95% Confidence Interval for Median

95% Confidence Interval for Median

0.000

0.000

Normal Probability Plot for PTickets

ML Estimates - 95% CI

99

ML Estimates

Mean 60.5172

95

StDev 148.828

90

Goodness of Fit

Percent

80

AD*

70

60

50

40

30

13.03

20

10

5

1

0

400

800

Data

D:\582745739.doc

5/7/2017

27

EXAMPLE #3: This data is the My Age from the STA 261 Fall 2002 class.

Descriptive Statistics

Variable: My Age

Anderson-Darling Normality Test

A-Squared:

P-Value:

35

39

43

47

51

55

95% Confidence Interval for Mu

0.660

0.081

Mean

StDev

Variance

Skewness

Kurtosis

N

47.0690

3.8428

14.7671

-1.7E-01

1.32698

58

Minimum

1st Quartile

Median

3rd Quartile

Maximum

35.0000

45.0000

47.5000

49.0000

57.0000

95% Confidence Interval for Mu

46.0586

46

47

48

48.0794

95% Confidence Interval for Sigma

3.2487

4.7048

95% Confidence Interval for Median

95% Confidence Interval for Median

46.0000

48.0000

Normal Probability Plot for My Age

ML Estimates - 95% CI

99

ML Estimates

Mean 47.0690

95

StDev 3.80953

90

Goodness of Fit

Percent

80

AD*

70

60

50

40

30

0.827

20

10

5

1

35

45

55

Data

D:\582745739.doc

5/7/2017

28

EXAMPLE #4: This data is pop data.

Summary for Pop Contents

A nderson-Darling N ormality Test

1.6

1.8

2.0

2.2

2.4

A -S quared

P -V alue

0.25

0.626

M ean

S tDev

V ariance

S kew ness

Kurtosis

N

2.0000

0.2828

0.0800

0.0

-1.2

7

M inimum

1st Q uartile

M edian

3rd Q uartile

M aximum

1.6000

1.8000

2.0000

2.2000

2.4000

95% C onfidence Interv al for M ean

1.7384

2.2616

95% C onfidence Interv al for M edian

1.7467

2.2533

95% C onfidence Interv al for S tDev

95% Confidence Intervals

0.1823

0.6228

Mean

Median

1.7

1.8

1.9

2.0

2.1

2.2

2.3

Probability Plot of Pop Contents

Normal - 95% CI

99

Mean

StDev

N

AD

P-Value

95

90

2

0.2828

7

0.248

0.626

Percent

80

70

60

50

40

30

20

10

5

1

D:\582745739.doc

1.0

1.5

2.0

Pop Contents

2.5

3.0

5/7/2017

29

EXAMPLE #5: This data is the Lithium Ion data.

Summary for Lithium Ion Batteries:

A nderson-Darling N ormality Test

10

12

14

16

A -S quared

P -V alue

0.35

0.463

M ean

S tDev

V ariance

S kew ness

Kurtosis

N

14.348

2.069

4.282

-0.077778

-0.658949

45

M inimum

1st Q uartile

M edian

3rd Q uartile

M aximum

18

9.750

12.705

14.200

16.155

17.900

95% C onfidence Interv al for M ean

13.727

14.970

95% C onfidence Interv al for M edian

13.630

14.939

95% C onfidence Interv al for S tDev

95% Confidence Intervals

1.713

2.614

Mean

Median

13.50

13.75

14.00

14.25

14.50

14.75

15.00

Probability Plot of Lithium Ion Batteries:

Normal - 95% CI

99

Mean

StDev

N

AD

P-Value

95

90

14.35

2.069

45

0.348

0.463

Percent

80

70

60

50

40

30

20

10

5

1

D:\582745739.doc

10.0

12.5

15.0

Lithium Ion Batteries:

17.5

20.0

5/7/2017

30

EXAMPLE #6: This data is the last data set.

Summary for ShawnGreggResids

A nderson-Darling N ormality Test

-15.0

-7.5

0.0

7.5

15.0

A -S quared

P -V alue <

1.46

0.005

M ean

S tDev

V ariance

S kew ness

Kurtosis

N

0.0000

6.0080

36.0955

0.80960

1.98405

144

M inimum

1st Q uartile

M edian

3rd Q uartile

M aximum

22.5

-15.5694

-3.4826

-0.6528

3.2708

22.6528

95% C onfidence Interv al for M ean

-0.9897

0.9897

95% C onfidence Interv al for M edian

-1.1023

0.0865

95% C onfidence Interv al for S tDev

95% Confidence Intervals

5.3850

6.7951

Mean

Median

-1.0

-0.5

0.0

0.5

1.0

Probability Plot of ShawnGreggResids

Normal - 95% CI

99.9

Mean

StDev

N

AD

P-Value

99

Percent

95

90

8.333331E-11

6.008

144

1.465

<0.005

80

70

60

50

40

30

20

10

5

1

0.1

D:\582745739.doc

-20

-10

0

10

ShawnGreggResids

20

5/7/2017

31

SUMMARY OF SAMPLING DISTRIBUTIONS AND ESTIMATION

Defn: A Sampling Distribution is the distribution of an estimator or sample statistic (ie RV),

such as X , S2, S, p̂ , M

SAMPLE AVERAGE

Defn: X is the sample average of a RS of size n from a popln with mean, popln and

variance, popln

2

Distribution of X

Mean of X = X = E[ X ] = popln

Variance of X = σ =

2

X

Shape:

2

σpopln

n

X will be exactly or approximately Normally distributed if the popln is

exactly or approximately distributed, regardless of the sample size, n

X will be approximately Normally distributed when n 30

X-μpopln

is Z if is known and popln is Normal

σpopln

n

T(n-1) if popln is Normal

X-μpopln

.

is

S

Z if n large

n

SAMPLE PROPORTION

Defn: P̂ =

X

, where X = number in random sample with characteristic = Bin ( n, p ) where

n

p is the proportion with characteristic in population.

Distribution of P̂

Mean of P̂ = μP̂ = E[ P̂ ] = p = population proportion with characteristic

p(1 - p)

n

x

n!

px (1 - p)n-x or

Shape of P̂ : Pr{ P̂ =

} = Pr{ X = x } =

n

x!(n- x)!

Variance of P̂ = σP̂ =

2

Normal if np 5 & n(1-p) 5

D:\582745739.doc

5/7/2017

32