I am assembling a - RIT Center for Imaging Science


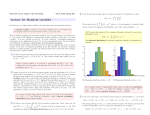

Q1…Focus: imaging systems

Question: Two variants of the HD format are 720p (1280H x 720V, progressive scan) and 1080i (1920H x 1080V, interlaced scan). An observer is seated 2 m from a 65" LCD TV (16:9 aspect ratio, screen width = 144 cm), viewing a 'slide show' of very high quality still images. Incorporating the properties of the human visual system, the viewing conditions, and the HD formats, discuss the tradeoffs between using the 720p and 1080i formats in this situation. Recommend one for this purpose.

[[Answer: A 65" 16:9 LCD TV has a width of 144 cm. At a viewing distance of 200 cm, the screen subtends a half angle of atan(72/200) ≈ 20 degrees, or a full angle of about 40 degrees. 1080i is 1920 pixels wide = 960 cycles wide, giving 960/40 = 24 cycles/degree. Compare this with 720p (1280 x 720): 640/40 = 16 cycles/degree, well within the visible range. Since the images are static (so there is no concern about temporal aliasing from the interlace), there is an advantage to 1080i. The discussion should include the advantages and disadvantages of progressive and interlaced scanning (e.g., spatial vs. temporal artifacts), the net difference in bandwidth between the two standards (1080i is slightly higher), and the fact that the sensitivity of the human visual system is limited to spatial frequencies below about 60 cpd. The answer should recognize that the issue is cycles/angle (vs. cycles/distance), so the viewing distance has to be taken into account. A thoughtful answer might also discuss the effects of aliasing on the 'very high quality' images.]]

Q2…Focus: imaging systems

Question: In a classical experiment, the line-spread function (LSF) of the human eye was measured using a double-pass optical technique. The Figure below illustrates the effective on-axis LSF of the combined cornea and eye-lens system at three pupil apertures: 1.5 mm, 2.4 mm, and 6.6 mm. The LSF is given in terms of visual angle subtended at the eye (1 arc min = 1/60th degree). a.
Explain the likely mechanisms responsible for the variation in LSF as a function of aperture. b. Sketch the spatial-frequency response of the eye at these three pupil sizes. Label and scale the axes, clearly mark your curves, and justify your response.

[[[Answers: a. We are treating the eye as we would any optical system, so its performance is limited by diffraction and aberrations. The optical performance has nothing to do with rods and cones or sampling issues: diffraction limits the response at the smallest aperture (1.5 mm), while aberrations broaden the LSF as pupil size increases. The answer should note that the optical quality improves between 1.5 and 2.4 mm diameters, then degrades for larger pupils as the aberrations overwhelm the diffraction. b. The answer should recognize that the MTF is the magnitude of the Fourier transform of the LSF. The 2.4 mm MTF curve should extend to the highest spatial frequencies (~30-60 cycles/degree), with both the larger and the smaller pupils dropping below it. All curves should be unity at low spatial frequencies (the drop in the low-frequency CSF is due to neural processing, so it does not affect the MTF).]]]

Q3…Focus: imaging systems

Question: High-definition digital television displays come in two common varieties, 1080i and 720p. A 1080i display nominally has 1920x1080 pixels in an interlaced format, while a 720p display has 1280x720 pixels in a progressive-scan format. Quantitatively describe how you would compare the expected image quality of the two displays. State all your assumptions. Draw a conclusion on which display type is better.

Answer: First, one can compute the nominal number of pixels: 2.0736 million for 1080i and 0.9216 million for 720p. It appears that 1080i has a huge advantage (2.25 X). However, the 1080i display, being interlaced, is only refreshed at half the rate of the 720p display (assuming equal frame rates).
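
The figures used in Q1 and in this pixel-count comparison can be reproduced with a few lines of Python. This is a minimal sketch, assuming a 144 cm wide screen viewed on-axis from 200 cm and two pixels per displayed cycle; the helper name `cycles_per_degree` is hypothetical and introduced only for this illustration.

```python
import math

def cycles_per_degree(h_pixels, screen_width_cm, distance_cm):
    """Highest horizontal frequency a display can show, in cycles per degree of
    visual angle, assuming two pixels per cycle and the eye on the screen axis."""
    half_angle_deg = math.degrees(math.atan((screen_width_cm / 2) / distance_cm))
    return (h_pixels / 2) / (2 * half_angle_deg)

formats = {"1080i": (1920, 1080), "720p": (1280, 720)}
for name, (h, v) in formats.items():
    print(f"{name}: {h * v / 1e6:.4f} Mpixels, "
          f"{cycles_per_degree(h, 144, 200):.1f} cycles/deg at 200 cm")

raw_ratio = (1920 * 1080) / (1280 * 720)
print(f"1080i/720p pixel ratio: {raw_ratio:.3f} raw, {raw_ratio / 2:.3f} with the interlace halving")
```

Because the exact full angle is about 39.6° rather than the rounded 40°, the sketch gives about 24.2 and 16.2 cycles/degree, both well below the roughly 60 cpd limit of the visual system, together with the 2.25 raw pixel ratio and the 1.125 ratio after the interlace halving that the answer goes on to justify.
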
Thus the pixel count of the 1080i display must be cut in half for a fair comparison (some say a reduction to 75% is more appropriate, but anything in the range of 50-75% is defensible, and 50% is the true information content). The pixel count then becomes 1.0368 million for 1080i, now a factor of 1.125 times 720p and much more comparable. This would still suggest that 1080i would have better image quality, since there are 12.5% more pixels. However, that assumes that the interlaced display doesn’t introduce objectionable artifacts. If interlace is objectionable (as it often is for static graphics or fast-moving subjects), then it is quite likely that the 720p display will be perceived to be of higher overall quality. At a large enough viewing distance, the interlace artifacts might become more difficult to perceive, but at such a distance the difference in pixel count would also be impossible to perceive. Given that the small increase in pixel count would be more than offset by the deleterious effects of interlace, it is clear to me that 720p provides higher image quality. (It is reasonable to give the opposite answer as long as the potential problems of interlace have been addressed and the conclusion is that they are not important ... personally, I think they are.)

Q4…Focus: imaging systems

Question: The recent Mars Rovers had a pair of cameras mounted 30 cm apart with 1 degree of toe-in (each pointing 1 degree inward from parallel). Each camera has a field of view of 16.8 x 16.8 degrees and a sensor with 1024x1024 pixels. One claimed use for the dual-camera configuration is to provide stereoscopic depth information. Given the above configuration, over what distances from the cameras can useful stereoscopic images be obtained? If the stereoscopic capabilities of this system are limited, what was the true value of having two cameras in such a configuration for this mission?

Answer: There are two aspects to this: overlap in the field of view (FOV) and angular differences between corresponding pixels. The FOV overlap is a matter of simple geometry; with some criterion for overlap (say 50% of the image area), one can easily compute the minimum and maximum distances given the camera FOV and geometry. It should also be stated that the cameras are assumed to be able to focus over that range. Angular differences for each pixel will also require some criterion. A reasonable value might be 5 pixels of angular difference at the furthest useful distance for stereopsis. The FOV of a single pixel can be computed, and the far limit is then the distance at which the angular separation of the two cameras, as seen from the object, is 5 times (or whatever the selected criterion is) that value. The system is surprisingly limited for stereo and could be much better with a single camera taking two views after moving the rover slightly. The real reason for the two cameras is probably to provide a backup and some additional spectral channels (although that could have been done with one camera). It also makes the rover more human-like, which is probably good for NASA PR.

Q5…Focus: imaging systems

Question: Anti-aliasing is an important process in digital cameras and computer graphics. Describe what an anti-aliasing filter in a digital camera accomplishes and how it functions. Speculate on how a similar process would be performed in computer graphics rendering of images and why it might be important.

Answer: Aliasing in image capture occurs when the sampling frequency (the inverse of the sampling interval) is too low for the range of spatial frequencies passed by the sampling function (sensor active area and optical point-spread function): content above half the sampling frequency (the Nyquist limit) reappears as a false, lower frequency. This is particularly a problem with sensors such as CCDs whose active area is smaller than the pixel spacing, and it is further exacerbated by the use of color filter arrays in single-chip cameras.
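
The role of the pixel aperture can be seen in a small 1-D numerical sketch. It assumes NumPy, and `box_sampled` and `strongest_component` are hypothetical helpers written for this illustration: a cosine at 0.8 cycles/pixel, above the Nyquist limit of 0.5 cycles/pixel, is sampled by pixels that integrate light over a box either a quarter of the pixel pitch wide or a full pitch wide.

```python
import numpy as np

def box_sampled(freq, width, n_pixels=250, n_sub=200):
    """Pixel values for cos(2*pi*freq*x), freq in cycles/pixel, when each pixel
    averages the signal uniformly over a box `width` pixels wide centred on it."""
    centres = np.arange(n_pixels)
    # evenly spaced sub-sample positions across the aperture (midpoint rule)
    offsets = ((np.arange(n_sub) + 0.5) / n_sub - 0.5) * width
    x = centres[:, None] + offsets[None, :]
    return np.cos(2 * np.pi * freq * x).mean(axis=1)

def strongest_component(signal):
    """Frequency (cycles/pixel) and amplitude of the largest non-DC Fourier component."""
    spectrum = np.fft.rfft(signal) / (len(signal) / 2)
    freqs = np.fft.rfftfreq(len(signal))
    k = np.argmax(np.abs(spectrum[1:])) + 1
    return freqs[k], np.abs(spectrum[k])

f_in = 0.8  # cycles/pixel, above Nyquist (0.5)
for width in (0.25, 1.0):  # small active area vs. 100% fill factor
    f_out, amp = strongest_component(box_sampled(f_in, width))
    print(f"aperture {width:.2f} px: appears at {f_out:.2f} cycles/pixel, amplitude {amp:.3f}")
```

In both cases a false signal appears at 0.2 cycles/pixel (1 - 0.8). The amplitude that survives is about sinc(0.8 x width), roughly 0.94 for the quarter-pitch aperture and 0.23 for the full-pitch one, which is why small active areas make aliasing worse; a deliberate blur (the anti-aliasing filter) is meant to suppress such above-Nyquist content further before sampling.
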
An anti-aliasing filter is placed in contact with, or in close proximity to, the sensor array and essentially blurs the incoming image, the objective being to make the sensor response function span an area greater than the raw sensor site on the chip (preferably overlapping adjacent pixels, to truly eliminate aliasing). This can be accomplished with simple blurring filters, or sometimes with microlens arrays that focus incoming energy from a larger area onto each sensor pixel site (also increasing the sensitivity of the package). In computer graphics, a rendered pixel area might be made up of fractional areas of several objects. The ideal pixel value would be a weighted average of the values within the pixel area (and perhaps slightly beyond). However, a rendering algorithm normally samples only a single point at the center of the pixel. Such a rendering will result in jagged edges (as sometimes observed with text on a display that is not anti-aliased). A process similar to the anti-aliasing filter in a digital camera can solve this problem. Rather than blurring the rendered image, the scene is rendered at a higher resolution (perhaps as many as 8x8 samples for each displayed pixel), and an appropriate averaging function (a sinc function, for those who are very particular) is then used to compute the final displayed image.

Q6…Focus: imaging systems

Question: Blackbody (or Planckian) radiators that emit in the visible wavelength region can have their color (spectral power distribution) described with just one number, their absolute temperature. How is this possible? Daylight follows a similar trend, in that the color of various phases of daylight can be described by one number (correlated color temperature, analogous to the color temperature of blackbodies). However, the spectral power distributions of daylight are much more complex.
Principal components analysis (PCA) of relative daylight spectra suggests that accurate reconstructions can be made with a mean vector and two additional vectors. Outline a system that would allow one to specify the correlated color temperature of daylight and then reconstruct a relative spectral power distribution. How can you go from the one-dimensional data of correlated color temperature to the two dimensions required for determining the weights of the PCA vectors?

Answer: The spectral power distribution of a blackbody radiator can be computed from its absolute temperature (kelvin) by evaluating Planck’s equation, in which temperature is the only free parameter apart from an overall intensity scale. For daylight, if the color can be described by a similar temperature value, then there must be a daylight locus of chromaticities similar to the blackbody locus: a path traced through 2-D chromaticity space, with absolute intensity as a free variable. Since two dimensions are required to reconstruct a spectral power distribution, that path must be curved in linear chromaticity space (in fact, both the Planckian and daylight loci are curves). Thus, the temperature could be used as an index into the curve, and the resulting 2-D chromaticity coordinates could then be transformed into the necessary weights on each of the PCA vectors to recreate the desired spectrum. (This is essentially how the CIE daylight illuminants are constructed from correlated color temperature.)

Q7…Focus: Basic Principles of Img Sci I

Question: Consider the use of a sharp edge imaged onto an analog detector. Derive an equation relating the edge spread function to the line spread function. Verify this equation using (a) convolution theory, (b) probability theory.

Solution: Mathematically, the line spread function in the x direction is simply the point spread function integrated with respect to y, $\ell(x) = \int_{-\infty}^{\infty} p(x, y)\,dy$. One can get the edge spread function at x by adding the line spread functions of all the lines that make up the illuminated half-plane. This is equivalent to integrating a single line spread function from $-\infty$ to $x$:

$$e(x) = \int_{-\infty}^{x} \ell(x')\,dx'$$

(a) Assuming a linear, shift-invariant system, the output is a convolution of the input knife-edge exposure, the unit step $\mathrm{step}(x)$, with the impulse response function, the line-spread function $\ell(x)$. In 1D we have

$$e(x) = \mathrm{step}(x) * \ell(x) = \int_{-\infty}^{\infty} \mathrm{step}(x')\,\ell(x - x')\,dx'.$$

Using the commutative property of convolution,

$$e(x) = \int_{-\infty}^{\infty} \ell(x')\,\mathrm{step}(x - x')\,dx' = \int_{-\infty}^{x} \ell(x')\,dx'.$$

The figure on the next page illustrates the convolution. To find the value of $e(x)$ we stop the convolution at the point $x' = x$, because $\mathrm{step}(x - x') = 0$ for $x' > x$.

(b) An alternative method of understanding Eq. (14.2) is to use probability theory. Because the area of $\ell(x)$ is normalized to unity, we can view it as a probability density function. This function is derived from the probability distribution function $F(x)$ by differentiation, $\ell(x) = dF(x)/dx$. The distribution function represents the cumulative probability as a function of $x$, with a value of zero at $x = -\infty$ and a value of one at $x = +\infty$; it is the area under the probability density function. For a given value of $x$ we can find the value of $F(x)$ as

$$F(x) = \int_{-\infty}^{x} \ell(x')\,dx'.$$

We can recognize the similarity with Eq. (14.2) in the notes and then identify $e(x)$ as a probability distribution function.

Q8…Focus: Basic Principles of Img Sci I

Question: (a) Calculate the number of effective pixels in a 35-mm frame (24x36 mm) of an ISO 50 monochrome film whose 50% MTF is at 65 cyc/mm, assuming square pixels. (b) Assuming the same pixel size, calculate the number of pixels in a 2/3" (8.8x6.6 mm) optical-format CCD. (c) Discuss the differences in pixel count in terms of system performance. (d) What size pixel would be needed in the CCD to match the pixel count of the film? (e) Comment on the system performance in this case, continuing with the assumption that both imaging systems are monochrome. Now consider a monochrome CCD array illuminated by a light source with emission at 450, 550, and 650 nm, each with an irradiance of 1 µW/cm². Each pixel is 9 µm square, and the array has a 100% fill factor. The CCD is designed to be read out 10 times/s. The collection efficiency of the CCD is 0.2 at 450 nm, 0.35 at 550 nm, and 0.3 at 650 nm.
(f) What is the minimum full-well capacity required to accurately detect the irradiance from the light source?

Solution: (a) The pixel size is calculated using Eq. (14.38), giving a pixel edge of about 9.3 µm, or about 86.5 µm² per pixel. The frame area is 24x36 mm = 864 mm² = 8.64×10⁸ µm². The number of pixels is then 8.64×10⁸/86.5 ≈ 1.0×10⁷. (b) A 2/3" format has a sensor size of 8.8x6.6 mm = 58.1 mm² = 5.81×10⁷ µm². The number of pixels is then 5.81×10⁷/86.5 ≈ 6.7×10⁵. (c) At equal print magnification the CCD will have poorer resolution, although it may be sufficient at low magnification (4x6 inch prints) to give acceptable images. (d) 10⁷ pixels are now distributed over a 58 mm² area (8.8x6.6 mm). The area per pixel is then 58 mm²/10⁷ = 5.8 µm². Assuming square pixels, this means the pixel edge is 2.4 µm. (e) The CCD resolution is now better, although the CCD image will have to be magnified about 15x more in area [(9.3)²/(2.4)²], or about 3.9x more in each linear dimension, than the film image to produce approximately the same print pixel size. This could lead to pixelation artifacts in the CCD image, depending on the final print size. However, the sensitivity is now reduced by a factor of (9.3)²/(2.4)², or about 15x. In addition, the dynamic range will be lowered because the full-well capacity is reduced by the same factor of about 15x. (f) For this problem we need the photon energies, E = hc/λ ≈ 1240/λ(nm) eV, which are 2.76, 2.25, and 1.91 eV for the 450, 550, and 650 nm wavelengths, respectively. An irradiance of 1 µW/cm² converts to 6.24×10¹² eV/(cm²·s). Since the array is read out every 0.1 s, it sees only 6.24×10¹¹ eV/cm² per read-out period. Multiplying by the pixel area (81 µm² = 8.1×10⁻⁷ cm²) gives 5.05×10⁵ eV/pixel for each wavelength. To get the number of photons we divide this energy by the photon energy:

| Wavelength (nm) | Conversion to photons | Photons/pixel |
|---|---|---|
| 450 | 5.05×10⁵ eV / 2.76 eV/photon | 1.83×10⁵ |
| 550 | 5.05×10⁵ eV / 2.25 eV/photon | 2.24×10⁵ |
| 650 | 5.05×10⁵ eV / 1.91 eV/photon | 2.65×10⁵ |

Multiplying by the collection efficiencies (0.2, 0.35, and 0.3) and summing gives about 3.7×10⁴ + 7.8×10⁴ + 8.0×10⁴ ≈ 1.9×10⁵ electrons per pixel per read-out, which sets the minimum full-well capacity.

Q9…Focus: Basic Principles of Img Sci I

Question: From the Kubelka-Munk equation for reflectance, derive a much simpler equation for a Beer-Lambert layer placed against a Lambertian reflecting surface, with the reflectance collected by a hemispherical detector.

Solution: For a Beer-Lambert system placed against a Lambertian reflecting surface, and provided that the reflectance is collected with a hemispherical detector, the scattering in the layer can be taken as zero. We cannot immediately reduce the Kubelka-Munk equation, since infinities result when the scattering coefficient is zero. However, we can recognize the limiting values that the Kubelka-Munk parameters approach as the scattering goes to zero, substitute them into the given equation, and expand the hyperbolic trig function. In this limit all of the terms are very large except for the 1 in the numerator and the 1 in the denominator, so these two terms can be dropped. Finally, we represent the hyperbolic trig functions in their exponential form.

Q10…Focus: Basic Principles of Img Sci I

Question: Derive an equation relating the granularity variance to imaging particle area and transmission density. An equation giving the transmission density is the Nutting equation,

$$D = 0.434\,\frac{N a}{A},$$

where $N$ is the number of imaging particles, each of area $a$, within a measuring aperture of area $A$.

Solution: For the granularity variance we are concerned with fluctuations in density, so we can recast the above equation into

$$\Delta D = 0.434\,\frac{a\,\Delta N}{A},$$

since the only variation from point to point as the aperture scans the image will be in the number of image grains, and hence in their image density. We square both sides of Eq. (15.1), sum over all aperture positions, and divide by the number of positions, which gives $\Delta D^2$ as the numerator in the definition of the variance; doing likewise with $\Delta N^2$, we have

$$\sigma_D^2 = \left(\frac{0.434\,a}{A}\right)^2 \sigma_N^2,$$

where the $\sigma^2$s represent the variances of the indicated quantities. If the positioning of the imaging particles is random, then the distribution of $N$ is Poisson, in which case the variance of the distribution is equal to its mean, that is, $\sigma_N^2 = \bar N$. This brings us to the following expression for the rms granularity in terms of the average number of imaging particles and their size:

$$\sigma_D = \frac{0.434\,a\,\sqrt{\bar N}}{A}.$$

The noise level will also be a function of the signal level. To see this we recast the Nutting equation so that $\bar N$ appears on the left-hand side,

$$\bar N = \frac{A\,\bar D}{0.434\,a}.$$

This expression is then substituted into Eq. (15.3), so that

$$\sigma_D^2 = \frac{0.434\,a\,\bar D}{A},$$

which can be rearranged to get

$$G \equiv \sigma_D\sqrt{A} = \sqrt{0.434\,a\,\bar D},$$

where we have introduced a new granularity coefficient $G$. Specifically, $G = \sigma_D\sqrt{A}$ is analogous to the Selwyn granularity $S = \sigma_D\sqrt{2A}$. From Eq. (15.6) we see that the granularity increases both with increasing imaging particle area and with increasing density.

Q11…Focus: Basic Principles of Img Sci I

Question: Calculate the number of photons/s incident on a 75 µm square detector at a wavelength of 4 µm from a human 1 km away. Assume the human is 180 cm tall by 40 cm wide.

Solution: This problem is solved by assuming the human is a perfect blackbody radiator at 98.6 °F, or 310.16 K. The photon energy is 1240/4000 nm = 0.31 eV, and kT for this temperature is about 0.0267 eV. Using Eq. (5.6) we obtain the spectral radiant exitance, where we have dropped the wavelength unit since we are considering monochromatic light. The irradiance at the detector is given by Eq. (5.18). We first get the radiant intensity by deriving the flux from the radiant exitance, multiplying by the area of the source (the dimensions of the human), and then dividing by the total solid angle, 4π. Then we divide by the square of the 1 km distance to get the irradiance. Note that dividing by 4π assumes an isotropic source. Now we multiply by the area of the detector and convert to photons/s. The ability to detect this signal depends upon how it compares with the background. At midday in July the background temperature will approach that of the human and detection will be difficult. At 1 am in January on a cloudless night the background will be considerably colder and the chance of detecting the human will be higher.

Q12…Focus: Basic Principles of Img Sci II

Question: Below is the histogram data for a 4-bit image of a black-and-white soccer ball on a gray background. This soccer ball is 230 mm in diameter. What is the pixel spacing? What fraction of the total image area is occupied by the soccer ball?
Propose a processing scheme that will detect the circular edge of the soccer ball. In other words, ideally the final image should be black everywhere except that the edge pixels should be white. If you plan to chain together several different processing steps, then give details for each one (i.e., if you use a convolution kernel, then write it down and justify it). Discuss possible problems and the non-ideal nature of the final image.

Pixel counts for gray levels 0 to 15 (levels 0-4 black, 5-10 gray, 11-15 white): [0, 520, 920, 490, 30, 40, 5910, 24040, 6050, 80, 20, 80, 440, 960, 420, 0]

Answer: Area of the ball = π(115 mm)² ≈ 41,526 mm² (with π ≈ 3.14). Number of pixels in the ball = 1960 (levels 0-4) + 1900 (levels 11-15) = 3860. Area of a pixel = 41,526/3860 = 10.76 mm². Pixel spacing = √10.76 = 3.28 mm. Total number of image pixels = 40,000. Fractional area = 3860/40,000 = 0.0965.

Possible processing steps: (i) Histogram transformation: use a LUT in which levels 0 to 4 go to 15, levels 5 to 10 go to 0, and levels 11 to 15 go to 15. The image should then have all the ball pixels white and the background black. (ii) Now use a Laplacian kernel, such as [0 1 0; 1 -4 1; 0 1 0], or a combination of x and y first-derivative kernels. The kernel coefficients must add up to zero, so that uniform regions give no response. Take the absolute value at each pixel location. The image should then have (almost) zero pixel values everywhere except at the edge locations. (iii) Re-map the pixel values to 0 to 15, examine the histogram, and pick a threshold to generate a binary output image.

Q13…Focus: Noise & Random Processes

Question: Show that the relationship $S_{out}(u) = G^2\,|T(u)|^2\,S_{in}(u)$ is true for a linear, shift-invariant system with a WSS input, where $S$ is the power density spectrum. Assume the mean output is known to be a constant.

Solution: Let the input be $s_{in}(x)$ and the output $s_{out}(x) = G\,[h * s_{in}](x)$, where $h$ is the (real) impulse response and $G$ a gain. Next, we write the equation for the output autocorrelation function of our linear, shift-invariant system, $R_{out}(x_1, x_2) = E[s_{out}(x_1)\,s_{out}(x_2)]$. Now express $s_{out}$ in terms of the input and the impulse response function, using the commutative property of convolution to put the impulse response first:

$$R_{out}(x_1, x_2) = G^2\,E\left[\int h(\alpha)\,s_{in}(x_1 - \alpha)\,d\alpha \int h(\beta)\,s_{in}(x_2 - \beta)\,d\beta\right].$$

Swapping the expectation and integral operators gives

$$R_{out}(x_1, x_2) = G^2 \iint h(\alpha)\,h(\beta)\,R_{in}(x_2 - x_1 + \alpha - \beta)\,d\alpha\,d\beta,$$

where we used the fact that $s_{in}$ is WSS, so its autocorrelation depends only on the difference of its arguments. The right-hand side depends only on $\tau = x_2 - x_1$, so $s_{out}$ is WSS if $s_{in}$ is WSS (its mean is the constant assumed above). Now we compute the output power spectral density. Using the EWK theorem,

$$S_{out}(u) = \int R_{out}(\tau)\,e^{-i2\pi u\tau}\,d\tau = G^2 \iiint h(\alpha)\,h(\beta)\,R_{in}(\tau + \alpha - \beta)\,e^{-i2\pi u\tau}\,d\tau\,d\alpha\,d\beta.$$

Making the change of variable $\tau' = \tau + \alpha - \beta$, we get

$$S_{out}(u) = G^2 \int h(\alpha)\,e^{i2\pi u\alpha}\,d\alpha \int h(\beta)\,e^{-i2\pi u\beta}\,d\beta \int R_{in}(\tau')\,e^{-i2\pi u\tau'}\,d\tau' = G^2\,T^*(u)\,T(u)\,S_{in}(u) = G^2\,|T(u)|^2\,S_{in}(u),$$

where $T(u)$ is the system transfer function as defined earlier, $T^*(u)$ is its complex conjugate (the first integral, because $h$ is real), and $G$ is a deterministic gain (as in film systems). If $G$ is instead a random variable, as in the photon amplifier, then this expression must be modified. Equation (9.9) says that in a WSS system the output power spectrum is just the input power spectrum times the squared magnitude of the transfer function. The same relationship is found for discrete systems. In terms of the NPS, and when the system adds noise, we have

$$\mathrm{NPS}_{out}(u) = G^2\,|T(u)|^2\,\mathrm{NPS}_{in}(u) + \mathrm{NPS}_{add}(u),$$

where $\mathrm{NPS}_{add}$ is the additive noise term.

Q14…Focus: Noise & Random Processes

Question: Given the following for a digital radiographic scintillator-detector, shown in the accompanying figure, in which the Poisson excess was assumed to be negligible, derive an expression for the DQE of a high-resolution scintillator and discuss the implications. The NPS after going through the detector is also given below.

Solution: A high-resolution scintillator implies that the system MTF in the x direction is limited by the detector-array aperture function rather than by the scintillator MTF. There is no correlated noise on the detector in this case, because all the scattered photons from a single x-ray photon in the screen are collected in the same pixel. This corresponds to system designs incorporating high-resolution scintillator materials, as well as systems designed for "direct-detection" flat-panel detectors (X-rays are converted directly into electrons in the detector).
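
The simplification for this case rests on the aliased copies of the squared aperture MTF summing to a constant. A quick numerical check is sketched below, assuming NumPy, a 1-D aperture of width a on a pitch x0 with linear fill factor a/x0 no larger than 1, and a truncated alias sum; the helper names and the 0.1 mm pitch are hypothetical choices for illustration.

```python
import numpy as np

def alias_sum(u, a, x0, n_alias=2000):
    """Sum over alias orders k of sinc^2(a (u - k/x0)), truncated at |k| <= n_alias.
    np.sinc is the normalised sinc, sin(pi x) / (pi x)."""
    k = np.arange(-n_alias, n_alias + 1)[:, None]
    return np.sum(np.sinc(a * (u - k / x0)) ** 2, axis=0)

def dqe_aperture_limited(u, a, x0, eta=1.0):
    """DQE along one axis when the pixel aperture alone sets the MTF (scintillator
    MTF ~ 1) and the input is Poisson: eta * MTF^2 / (sum of aliased MTF^2)."""
    return eta * np.sinc(a * u) ** 2 / alias_sum(u, a, x0)

x0 = 0.1                          # pixel pitch in mm (assumed)
u = np.linspace(0.0, 5.0, 6)      # 0 to Nyquist, 1 / (2 x0) = 5 cycles/mm
for fill in (1.0, 0.5, 0.25):
    a = fill * x0                 # aperture width for this fill factor
    print(f"fill {fill:.2f}: fill * alias sum = {np.round(fill * alias_sum(u, a, x0), 3)}, "
          f"DQE = {np.round(dqe_aperture_limited(u, a, x0), 3)}")
```

For every fill factor the product of fill factor and alias sum comes out at essentially 1, i.e. the alias sum equals the reciprocal of the fill factor; the DQE then starts at the fill factor at zero frequency (times eta) and follows a sinc² shape, so a smaller aperture loses low-frequency DQE even though its MTF extends further.
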
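In this case the scintillator MTF is approximately constant (unity) over the frequencies passed by the detector-aperture MTF, $T_a(u) = \mathrm{sinc}(a_x u)$, and its aliases at harmonics of the sampling frequency $1/x_0$, and the DQE simplifies to

$$\mathrm{DQE}(u) = \frac{\eta\,T_a^2(u)}{\sum_k T_a^2(u - k/x_0)},$$

where $\eta$ is the screen quantum efficiency, $a_x$ is the width of a detector element, and $x_0$ is the pixel pitch. It has been shown elsewhere (reference 2) that the sum of $T_a^2(u - k/x_0)$ over all $k$ is always equal to a constant given by $1/\alpha_x$, where $\alpha_x = a_x/x_0$ is the detector fill factor in the x direction. We then get

$$\mathrm{DQE}(u) = \eta\,\alpha_x\,\mathrm{sinc}^2(a_x u)$$

for a one-dimensional detector. For two dimensions we get

$$\mathrm{DQE}(u, v) = \eta\,\alpha_x\alpha_y\,\mathrm{sinc}^2(a_x u)\,\mathrm{sinc}^2(a_y v).$$

The one-dimensional DQE of this two-dimensional detector, evaluated along the u axis, is therefore

$$\mathrm{DQE}(u, 0) = \eta\,\alpha\,\mathrm{sinc}^2(a_x u), \qquad \alpha = \alpha_x\alpha_y.$$

For this special case of a photon-noise-limited detector with a high-resolution scintillator screen, the DQE is proportional to the screen quantum efficiency $\eta$ and the detector fill factor $\alpha$, and always has a shape given by $\mathrm{sinc}^2(a_x u)$. Fig. 10.1 illustrates the calculated DQE for a two-dimensional detector in the x-direction with a high-resolution scintillator for various fill factor values $\alpha$. As the fill factor decreases for a constant pixel pitch, higher frequencies are passed by the detector-element aperture function and noise aliasing increases. This is directly responsible for the decreasing DQE.

Q15…Focus: Noise & Random Processes

Question: A processed film has a true density $D(x, y)$ and a density $D_A(x, y)$ measured with a square aperture having area $A$. Find an expression for the scale value of the NPS and discuss its implications.

Solution: The aperture spatially integrates the true density to give the measured density,

$$D_A(x, y) = \iint a(x', y')\,D(x - x', y - y')\,dx'\,dy',$$

where $a(x, y)$ is the aperture function, normalized to unit volume (height $1/A$ over the square). The autocorrelation of $D_A$ can then be written in terms of that of $D$, and after applying the EWK theorem,

$$\mathrm{NPS}_A(u, v) = |\tilde a(u, v)|^2\,\mathrm{NPS}(u, v).$$

The conversion factor is the squared magnitude of the system transfer function $\tilde a(u, v)$; taking the squared magnitude removes the complex exponentials, leaving a real function. Via the central ordinate theorem, $\tilde a(0, 0) = 1$ because $a$ has unit volume. If the aperture dimensions are large with respect to any correlation distance in $D$, then $\mathrm{NPS}(u, v)$ will be approximately constant, equal to $\mathrm{NPS}(0, 0)$, over the frequencies where $|\tilde a|^2$ is non-negligible. The variance is then

$$\sigma_A^2 = \iint \mathrm{NPS}_A(u, v)\,du\,dv \approx \mathrm{NPS}(0, 0)\iint |\tilde a(u, v)|^2\,du\,dv = \frac{\mathrm{NPS}(0, 0)}{A},$$

where the double integral evaluates to $1/A$ because, via the central ordinate theorem, the area of $|\tilde a|^2$ in the frequency domain equals the height at the origin of the aperture autocorrelation in the spatial domain, $\iint a^2\,dx\,dy = 1/A$. Thus

$$\mathrm{NPS}(0, 0) = \sigma_A^2\,A = G,$$

which is Eq. (15.16) of BP I, with $G$ the granularity constant.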
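
The zero-frequency result can be illustrated numerically. The sketch below is a toy under stated assumptions, not a film model: it assumes NumPy, builds a hypothetical density field by blurring unit-variance white noise with a 4 x 4 box (so the correlation distance is about 4 grid cells and the zero-frequency NPS is 1 in cell-area units), and measures it through square apertures of increasing size.

```python
import numpy as np

rng = np.random.default_rng(0)
n, w = 1024, 4  # grid size and blur width in cells (assumed values)

# Hypothetical density fluctuations: unit-variance white noise blurred by a w x w box
# whose weights sum to one (circular convolution via the FFT). The correlation distance
# is about w cells and the zero-frequency NPS is 1 in grid-cell units by construction.
white = rng.normal(0.0, 1.0, size=(n, n))
kernel = np.zeros((n, n))
kernel[:w, :w] = 1.0 / w**2
field = np.real(np.fft.ifft2(np.fft.fft2(white) * np.fft.fft2(kernel)))

def sigma2_times_area(img, side):
    """Variance of the mean density through non-overlapping side x side apertures,
    multiplied by the aperture area A = side**2."""
    m = (img.shape[0] // side) * side
    blocks = img[:m, :m].reshape(m // side, side, m // side, side).mean(axis=(1, 3))
    return blocks.var() * side**2

for side in (1, 2, 4, 8, 16):
    print(f"aperture {side:2d} x {side:<2d} cells: sigma^2 * A = {sigma2_times_area(field, side):.3f}")
```

The product of measured variance and aperture area climbs toward the zero-frequency NPS value of 1 as the aperture becomes large compared with the correlation distance (its expected value is about 0.85 at 16 x 16 cells for this blur) and falls far below it for apertures comparable to or smaller than the blur, which is where the constant-product relation stops holding.
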
So, when the aperture (sampling function) is large with respect to any correlation distance, the zero-frequency value of the NPS is the measured variance scaled by the aperture area: the sampling function is such that we cannot detect the change in correlation. If the sampling aperture is made small enough to detect the change in correlation, then the measured variance scaled by the aperture area is no longer constant, and this simple relation breaks down.

Q16…Focus: Noise & Random Processes

Question: Consider the case of a negative contact printed to make a positive, as shown in the figure below. Find an expression for the DQE of the system, including spatial frequency aspects, and discuss its implications.

Solution: To include the spatial frequency aspect, a schematic of the system is drawn using symbols appropriate to the first part of the problem. The DQE for the negative stage then follows from the standard formula for film, the NPS transfer equation carries the negative's noise through the print stage, and these combine to give the system DQE. In those cases where the second term in the denominator of the system DQE is much less than 1, the overall DQE will be that of the first stage, with negligible reduction due to the second (print) stage. Now suppose that term is small at all frequencies: the print stage is then clearly negligible. But if at some frequency the second term in the denominator is comparable to or larger than 1, it will not be negligible, and the system DQE will fall below that of the negative. In other words, we can no longer neglect the positive stage, because its MTF may be such that this stage does impact the system DQE.

Q17…Focus: Noise & Random Processes

Question: A single-stage photon amplifier is used to enhance the low-light sensitivity of a CCD array, as shown in the figure below. Find an expression for the DQE at the output of this amplifier, that is, before the photons enter the fiber optic, and discuss its implications. Spatial frequency aspects can be ignored. Recall that $\sigma_{out}^2 = \bar g^2\sigma_{in}^2 + \sigma_g^2\,\bar N_{in}$ for a photon amplifier, where $\bar g$ and $\sigma_g^2$ are the mean and variance of the gain and $\bar N_{in}$ is the mean number of input quanta.

Solution: The degree of photon amplification is limited by the sensitivity of the input stage to arriving quanta (the fraction of light absorbed and the efficiency of its conversion to secondary emission) and by noise that is introduced in each stage of amplification.
The noise that is added in the first element of each stage is customarily called background noise and will be symbolized by $B$. Let the input be Poisson with mean $\bar N$ quanta, let the first element select each quantum with probability $\eta$, and let the second element multiply each selected quantum by a random gain $g$ with mean $\bar g$. The output of the first element of the stage is $N_1 = N_s + B_1$, where $N_s$ is the number of selected input quanta. These emissions from the first element are then multiplied in the second element, whose output is $N_2 = \sum_{i=1}^{N_1} g_i + B_2$. To calculate the DQE of the image intensifier we need to know the variances for each element of each stage. Throughout the analysis we assume that the signal-propagated events and background events are statistically independent. Then the variance can be formed simply as the sum of the individual variances (recall Peebles Example 5.1-3: the variance of a sum is the sum of the variances when the random variables are uncorrelated, and statistically independent random variables are certainly uncorrelated) to get, for the first element of the stage,

$$\sigma_1^2 = \sigma_s^2 + \sigma_{B_1}^2.$$

To go further we need to know the statistics of both these contributions. For simplicity we assume that the background events are Poisson distributed. Then $\sigma_{B_1}^2 = \bar B_1$. The signal undergoes a binomial selection process (i.e., a Bernoulli trial) in the first element of each stage, and then undergoes a Poisson amplification process. To calculate the variances we can use the amplifier variance relation given in the problem statement, $\sigma_{out}^2 = \bar g^2\sigma_{in}^2 + \sigma_g^2\,\bar N_{in}$.

First element. Assuming no correlation between the input and the selection process, no background noise, and knowing that the input is Poisson, we can write the variance of the output of this element as

$$\sigma_s^2 = \eta^2\bar N + \eta(1 - \eta)\bar N = \eta\bar N,$$

where we used the fact that the variance of a binomial process is its mean times one minus the selection probability. The last equation in Eqs. (8.10) represents a preserved Poisson process with a new mean, $\eta\bar N$. Adding the background noise, we finally have

$$\sigma_1^2 = \eta\bar N + \bar B_1 = \bar N_1.$$

Note that the variance equals the mean for this first element, as expected, since both the signal and noise processes are Poisson and the "gain" is binomial.

Second element. With the output $N_2$ defined above, we get

$$\sigma_2^2 = \bar g^2\sigma_1^2 + \bar g\,\bar N_1 + \bar B_2 = \bar g(\bar g + 1)\,\bar N_1 + \bar B_2,$$

where we have used the fact that both $g$ and $B_2$ are Poisson ($\sigma_g^2 = \bar g$, $\sigma_{B_2}^2 = \bar B_2$) and used Eq. (8.7). If the background noise for this stage were zero, the variance would be $\sigma_2^2 = \bar g(\bar g + 1)\,\bar N_1$, which is analogous to Eq. (8.52) except that the mean input is now $\bar N_1$ instead of $\bar N$. Note that this variance is greater than that of a Poisson process with the same mean, $\bar g\bar N_1$. This increased noise variance was seen in Eq. (8.52) and is due to the randomness of the amplification process.

With this information we are now ready to calculate the DQE, $\mathrm{DQE} = \mathrm{SNR}_{out}^2/\mathrm{SNR}_{in}^2$, with $\mathrm{SNR}_{in}^2 = \bar N$ for the Poisson input. For the first element the DQE is given by

$$\mathrm{DQE}_{1} = \frac{(\eta\bar N)^2}{\bar N\,\sigma_1^2} = \frac{\eta}{1 + \bar B_1/(\eta\bar N)},$$

where the subscript 1 indicates the first element of the first stage. The DQE of the second element is given by

$$\mathrm{DQE}_{2} = \frac{(\bar g\eta\bar N)^2}{\bar N\,\sigma_2^2} = \frac{\eta}{(1 + 1/\bar g)\left(1 + \bar B_1/(\eta\bar N)\right) + \bar B_2/(\bar g^2\eta\bar N)}.$$

Figure 8.16 shows that the DQE decreases for low exposures (small $\bar N$), showing the effect of background noise on the DQE. This effect diminishes, and eventually vanishes, as the exposure is increased. The background terms depend on the ratio of background events to signal quanta, which is just (background noise)/(photon noise) in variance terms, since we are assuming Poisson statistics for both noise sources. As shown in Fig. 8.17, when $\bar B \gg \eta\bar N$ the system is background-noise limited, and when $\bar B \ll \eta\bar N$ the system is photon-noise limited. Referring back to Eq. (8.15), if we instead assume no background noise, then we have

$$\mathrm{DQE}_{2} = \frac{\eta}{1 + 1/\bar g},$$

which has the same form as Eq. (8.56).

Q18…Focus: Noise & Random Processes

Question: Consider an ideal array of photon detectors. Each element of the array acts as an individual photon detector, and the imaging system has the property that each photon detected can be read out individually, making it an ideal photon detector. Assume the array consists of individual photon detectors, each having identical properties. Each detector is assumed to record incident photons in a manner independent of its neighbors and has a unique and distinguishable image state dependent only on the number of incident photons on that detector. Also, assume that the exposure over the array is uniform and that the number of photons incident on a detector obeys Poisson statistics.
If the mean number of photons per detector is $\bar q$, then the actual number of photons $q$ incident on a detector will have probability

$$P(q) = \frac{\bar q^{\,q}\,e^{-\bar q}}{q!}. \tag{1}$$

Calculate the variance of the noise for this idealized detector array.

Solution: Given the Poisson probability formula in Eq. (1), it is easy to calculate the fraction of detectors that have reached the saturation level $N$. This is given by

$$P_{sat} = \sum_{q=N}^{\infty} P(q) = 1 - \sum_{q=0}^{N-1} P(q).$$

This summation will provide an overall measure of the efficiency of the imaging process. First, we consider the relationship between the mean count level, $\bar c$, of the array and the mean exposure, $\bar q$. Using the definition of the mean we have

$$\bar c = \sum_{q=0}^{N-1} q\,P(q) + N\sum_{q=N}^{\infty} P(q), \tag{3}$$

where the first term takes into account those detectors that have not reached saturation and the second term takes into account those that have. Figure 3 shows a plot of the mean count level relative to the saturation level, as a function of the mean exposure level. As might be expected, there is a linear relationship between input quanta and number of image counts when $\bar q \ll N$. This linearity breaks down as $\bar q \to N$. At exposures high enough for all receptors to have received at least $N$ photons, each detector records exactly $N$ counts. Equation (3) may be rewritten as

$$\bar c = \sum_{k=1}^{N}\,\sum_{q=k}^{\infty} P(q). \tag{4}$$

There are $N$ summations on the right-hand side of this equation and, since these are all summed to $\infty$, each term will be equal to unity less the missing initial terms. Hence, we can rewrite Eq. (4) as $N$ minus an expression for the missing terms. Then we get

$$\bar c = N - F_N(\bar q), \tag{5}$$

where

$$F_N(\bar q) = \sum_{k=1}^{N}\,\sum_{q=0}^{k-1} P(q) = \sum_{q=0}^{N-1} (N - q)\,P(q).$$

There will be spatial fluctuations in the image which arise, in the present ideal case, from a statistical distribution of the incident photons, that is, photon noise. We can calculate the variance of this noise if we have the first and second moments of the random variable $c$. We already have the first moment in Eq. (5). The second moment of the distribution about the origin, $\overline{c^2}$, is written in a form analogous to the first moment (Eq. (3)):

$$\overline{c^2} = \sum_{q=0}^{N-1} q^2\,P(q) + N^2\sum_{q=N}^{\infty} P(q). \tag{7}$$

This expression can be rearranged to

$$\overline{c^2} = N^2 - \sum_{q=0}^{N-1} (N^2 - q^2)\,P(q).$$

Now the variance of $c$ can be written as $\sigma_c^2 = \overline{c^2} - \bar c^{\,2}$. When $\bar q \ll N$, the saturation terms vanish, $\bar c = \bar q$, and $\sigma_c^2 = \bar q$: the output noise merely mirrors the input noise, as we would expect for an ideal detector.
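
The mean-count and second-moment expressions (Eqs. (3) and (7)) are easy to evaluate and to check by simulation. The sketch below assumes NumPy, a hypothetical saturation level of 20 photons, and hypothetical function names; it computes the mean and variance of the recorded count and compares them with a Monte Carlo run of clipped Poisson counts.

```python
import math
import numpy as np

def poisson_pmf(q, mean):
    """P(q) = mean**q exp(-mean) / q!, evaluated in log space for stability (mean > 0)."""
    return math.exp(q * math.log(mean) - mean - math.lgamma(q + 1))

def count_moments(mean, n_sat):
    """Mean and variance of the recorded count when each detector records every
    photon up to the saturation level n_sat and records n_sat beyond it."""
    p = [poisson_pmf(q, mean) for q in range(n_sat)]
    p_sat = 1.0 - sum(p)                      # fraction of saturated detectors
    m1 = sum(q * pq for q, pq in enumerate(p)) + n_sat * p_sat
    m2 = sum(q * q * pq for q, pq in enumerate(p)) + n_sat**2 * p_sat
    return m1, m2 - m1**2

rng = np.random.default_rng(1)
n_sat = 20                                    # hypothetical saturation level
for mean in (2.0, 20.0, 60.0):
    m1, var = count_moments(mean, n_sat)
    sim = np.minimum(rng.poisson(mean, size=200_000), n_sat)   # clipped Poisson counts
    print(f"mean exposure {mean:5.1f}: count mean {m1:6.3f} (sim {sim.mean():6.3f}), "
          f"variance {var:6.3f} (sim {sim.var():6.3f})")
```

At low exposure the count mean and variance both equal the exposure, so the output noise mirrors the input noise; near and beyond the saturation level the mean levels off at the saturation value and the variance collapses toward zero, because almost every detector records exactly that many counts.
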