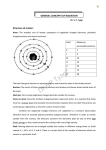

What is Remote Sensing?

Defining Remote Sensing
- Remote sensing = collection of data about features or phenomena of the earth surface (and near surface) without being in direct contact
- Lack of contact with features or phenomena
- Sensors utilize electromagnetic radiation (EMR)
- Collection of data
- Analysis of data collected

Sensing
- Data are collected by sensor
  - Passive – collection of reflected or emitted electromagnetic radiation
  - Active – generates signal and collects backscatter from interaction with terrain
- Imaging & Non-Imaging
- Photographic vs. Non-Photographic

Distance – How remote is remote?
- Platforms for sensors operate at multiple levels: cranes, balloons, aircraft, satellite
- Permit near-surface to global scale data collection

Remote sensing: the collection of information about an object without being in direct physical contact with the object.

Remote Sensing vs. Aerial Photography
- Remote sensing is performed using a variety of sensors and platforms that may operate in multiple parts of the EMR spectrum
- Aerial photography is performed using film-based cameras that sense only in the UV, visible, and NIR spectrum and are operated on aircraft
- Aerial photography is a subset of remote sensing

Image vs. Air Photo Interpretation
- Images can be produced by most remote sensing systems
- Air photos are produced by aerial photographic camera systems
- Air photo interpretation is a subset of image interpretation

Science vs. Art
- Science
  - RS is a tool for scientific analyses
  - Draws on multiple scientific disciplines
  - Probabilistic
- Art
  - Interpretation combines scientific knowledge with experience and knowledge of the world
  - Interpretation skill is primarily learned through practice

Simplified Information Flow
- Passive systems – detect naturally upwelling radiation
- Flow: Source -> Surface -> Sensor
- Source, the sun, illuminates surface
- Surface reflects/emits radiation
- Sensor detects reflected radiation within its field of view (FOV).
- Photographic systems – detected radiation exposes film
- Non-photographic – detected radiation generates electrical signal
- Interpretation – manual or machine

Complexities in Information Flow
- Variation in illumination
  - Sun angle
  - Clouds
  - Aerosol concentrations, other scattering
- Variation in surface properties/coverage
  - Soil moisture, vegetation growth/conditions, surface roughness affect reflectance properties
  - Reflectance properties are dependent on solar angles, ratio of diffuse to direct illumination, viewing angle

Complexities of Information Flow (cont.)
- Sensor/platform variation
  - Attitude
  - Altitude
  - Orbit
  - Film/wavelength sensitivities
  - Calibration or optics
- Processing/interpretation variation
  - Film or digital processing
  - Repeatability of interpretation results

In situ vs. Remote Sensing
- Both attempt to observe/measure phenomena
- In situ
  - Physical contact
  - Instruments for direct measure
  - May be source of error: interaction with phenomena, sampling method
- Ground reference vs. “ground truth”

In situ or remote sensing?

Ground Measurement In Support of Remote Sensing Measurement
- Ground spectroradiometer measurement of soybeans
- Ground ceptometer leaf-area-index (LAI) measurement

Advantages of Remote Sensing
- Different perspective
- Obtain data for large areas
  - In single acquisition – efficient
  - Synoptic
  - Systematic
- Obtain data for inaccessible areas
- No effect/interaction with phenomena of interest

Disadvantages of Remote Sensing
- Accuracy and consistency
- Artifacts
- Noise
- Generalization
- Processing
- Scale-related effects
- High initial outlays for equipment and training

Data Collection - Sensors
- Cameras (film based): metric, strip, panoramic, multi-spectral
- Video systems: video cameras, Return Beam Vidicon
- Imaging radiometers: digital frame, scanners, pushbroom, hyperspectral
- Passive microwave
- Radar
- Operational vs. state-of-the-art

Data Collection - Imagery
- Panchromatic (monochrome or B&W) – sensitive across broad visible wavelengths
- Color – may provide added discrimination
  - Color film
  - Color composites
- Thermal – in region 3 microns to 1 mm, sensitive to temperature
- Microwave – all weather capability

Three-way Interaction Model Between the Mapping Sciences as Used in the Physical, Biological, and Social Sciences (Jensen, 2000)

Art vs. Science
- Image interpretation is not an exact science
- Interpretations tend to be probabilistic, not exact
- Successful interpretation depends on
  - Training and experience
  - Systematic and disciplined approach using knowledge of remote sensing, application area and location
  - Inherent talents

Image Interpretation - Defined
- Act of examining images for the purpose of identifying and measuring objects and phenomena, and judging their significance

Image Interpretation (II) Tasks
- In order of increasing sophistication: detection, identification, measurement, problem-solving
- Not necessarily performed sequentially or in all cases

II Tasks - Detection
- Lowest order
- Presence/absence of object or phenomena
- Examples: buildings, water, roads and vegetation

II Tasks - Identification
- More advanced than detection
- Labeling or typing of the object/phenomena
- Tends to occur simultaneously with detection
- Examples: houses, pond, highway, grass/trees

II Tasks - Measurement
- Quantification of objects/phenomena
- Direct physical measurement from the imagery
- Examples: inventories (count); length, area and height of objects

II Tasks – Problem Solving
- Most complex task
- Uses information acquired in first three tasks to put objects in assemblages or associations needed for higher-level identification
- With experience, recognition becomes more automatic and tasks become less distinct
- Example: residential housing density

Interpreter Requirements - Cognition
- Concerned with how interpreter derives information from the image data
- Varies from individual to individual
- Reasons for differences/inconsistencies among interpreters
- Cognitive processes are concerned with perceptual evaluation of elements of interpretation and how they are used in interpretation process

Resolution & Discrimination Germane to Task
- Resolution: the ability of a remote sensing system to distinguish between signals that are radiometrically/spectrally/spatially near or similar
- Discrimination: the ability to distinguish an object from its background
  - Function of spatial, spectral, and radiometric resolution
- Germane to task: what is required for the particular assessment/task

Resolution
- Four components of resolution: spatial, spectral, radiometric, temporal

Spatial Resolution
- Indication of how well a sensor records spatial detail
- Refers to the size of the smallest possible feature that can be detected as distinct from its surroundings
- Aerial camera: function of platform altitude and film and optical characteristics
- Non-film sensor: function of platform altitude and instantaneous field of view (IFOV)
- Figure labels: lower (coarser) spatial resolution, higher (finer) spatial resolution

Spatial Resolution (figure; Jensen, 2000)

Spectral Resolution
- The width of the specific EMR wavelength band(s) to which sensor is sensitive
- Broadband: few, relatively broad bands
- Hyper-spectral: many, relatively narrow bands

Spectral Resolution (figure; Jensen, 2000)

Airborne Visible Infrared Imaging Spectrometer (AVIRIS) Datacube of Sullivan’s Island Obtained on October 26, 1998
Color-infrared color composite on top of the datacube was created using three of the 224 bands at 10 nm nominal bandwidth.
Jensen, 2000

Radiometric Resolution
- Ability of a sensor to distinguish between objects of similar reflectance
- Measured in terms of the number of energy levels discriminated: $2^n$, where n = number of ‘bits’ (precision level)
- Example: 8-bit data = $2^8$ = 256 levels of grey
  - 256 levels = 0-255 range
  - 0 = black, 255 = white
- Affects ability to measure surface properties
- Figure panels: 1-bit, 2-bit, 8-bit

Temporal Resolution
- The ability to obtain repeat coverage for an area
- Timing is critical for some applications
  - Crop cycles (planting, maximum greenness, harvest)
  - Catastrophic events
- Aircraft
  - Potentially high
  - Actually (in practice) lower than satellites
- Satellite
  - Fixed orbit
  - Systematic collection
  - Pointable sensors

Temporal Resolution
- Landsat Data Acquisition: June 1, 2001; June 17, 2002; July 3, 2003; 16 days (Jensen, 2000)

Discrimination
- Ability to distinguish object from its background; involves basic tasks of image interpretation: detection, identification, measurement, and analysis
- As complexity of task increases, so do resolution requirements
- Key: target to background contrast
- Function of all 4 resolution elements
- Example: vegetation type, soil/rock type, building type

Electromagnetic Radiation (EMR): Properties, Sources and Atmospheric Interactions

EMR as Information Link
- Link between surface and sensor
- Figure labels: Sun, Sensor, Surface

Energy Transfer (cont)
- Radiation
  - Energy transferred between objects in the form of electromagnetic waves/particles (light)
  - Can occur in a vacuum (w/o a medium)

EMR Properties
- Wave Theory
  - EMR => continuous wave
  - Energy transfer through media (vacuum, air, water, etc.)
  - Properties
- Quantum (Particle) Theory
  - EMR => packets of energy
  - Photons or quanta
  - Interaction of energy with matter

Wave Theory
- Explains energy transfer as a wave
- Energy radiates in accordance with basic wave theory
- Travels through space at the speed of light, $3 \times 10^8\ \mathrm{m\,s^{-1}}$ (meters per second)

Electromagnetic Wave
- Two components or fields
  - E = electrical wave
  - M = magnetic wave
- http://www.colorado.edu/physics/2000/waves_particles/

Quantum Theory
- Wave theory doesn’t account for all properties of EMR
- Interaction of EMR with matter (atoms)
  - Absorption
  - Emission
- EMR is transferred in discrete packets (particles)
  - Photons (quanta)

Wavelength & Frequency
- Figure labels: Near Infrared, Green, Microwave

Wavelength and Frequency
- Relationship is inverse
- High frequency associated with short wavelengths and high energy
- Low frequency associated with long wavelengths and low energy
- $c = \lambda\nu$, where c = speed of light ($3 \times 10^8$ m/s), $\lambda$ = wavelength, $\nu$ = frequency
- Therefore $\nu = c/\lambda$ and $\lambda = c/\nu$

EMR Spectrum
- Short $\lambda$, high $\nu$, high Q
- Long $\lambda$, low $\nu$, low Q

Wavelength and Frequency Example
- Energy of green light
  - $\lambda = 0.5\ \mu\mathrm{m} = 0.5 \times 10^{-6}\ \mathrm{m} = 5 \times 10^{-7}\ \mathrm{m}$
  - $\nu = c/\lambda = (3 \times 10^8\ \mathrm{m/s}) / (5 \times 10^{-7}\ \mathrm{m}) = 0.6 \times 10^{15}\ \mathrm{Hz} = 6 \times 10^{14}\ \mathrm{Hz}$
  - $Q = h\nu = 6.626 \times 10^{-34}\ \mathrm{J\,s} \times 6 \times 10^{14}\ \mathrm{cycles/s} = 39.756 \times 10^{-20}\ \mathrm{J}$
- Energy of microwave
  - $\lambda = 3000\ \mu\mathrm{m} = 3 \times 10^{-3}\ \mathrm{m}$
  - $\nu = c/\lambda = (3 \times 10^8\ \mathrm{m/s}) / (3 \times 10^{-3}\ \mathrm{m}) = 1 \times 10^{11}\ \mathrm{Hz}$
  - $Q = h\nu = 6.626 \times 10^{-34}\ \mathrm{J\,s} \times 1 \times 10^{11}\ \mathrm{cycles/s} = 6.626 \times 10^{-23}\ \mathrm{J}$

Partitioning of Energy at Surface
- Radiant flux at the surface is partitioned among: absorption, transmission, reflection

Radiation Budget Equation
- Radiant flux ($\Phi$) incident at a surface = 1 + 2 + 3
  1) Amount of energy absorbed by the surface
  2) Amount of energy reflected from the surface
  3) Amount of energy transmitted through the surface

Radiation Budget Equation (cont.)
- Dimensionless ratios:
  - Spectral absorptance: $\alpha_\lambda = \Phi_{\mathrm{absorbed}} / \Phi_{i\lambda}$
  - Spectral transmittance: $\tau_\lambda = \Phi_{\mathrm{transmitted}} / \Phi_{i\lambda}$
  - Spectral reflectance: $\rho_\lambda = \Phi_{\mathrm{reflected}} / \Phi_{i\lambda}$
- $\alpha_\lambda + \tau_\lambda + \rho_\lambda = 1$ (the three fractions together account for all of the incident flux $\Phi_{i\lambda}$)

Radiation Budget Equation (cont.)
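As a hedged sketch, the worked wavelength, frequency and energy examples and the dimensionless budget ratios from the preceding slides can be reproduced in a few lines of Python. The function names are illustrative and not part of the original slides. The constants are rounded as on the slides, so the results match the slide arithmetic rather than high-precision physical values.

```python
# Constants rounded as on the slides (an assumption made so results match them).
C_M_PER_S = 3.0e8        # speed of light, m/s
PLANCK_J_S = 6.626e-34   # Planck constant, J s


def frequency_hz(wavelength_m):
    """Frequency from wavelength: nu = c / lambda."""
    if wavelength_m <= 0:
        raise ValueError("wavelength must be positive")
    return C_M_PER_S / wavelength_m


def photon_energy_j(wavelength_m):
    """Energy of one quantum: Q = h * nu."""
    return PLANCK_J_S * frequency_hz(wavelength_m)


def spectral_reflectance(absorptance, transmittance):
    """Reflectance implied by alpha + tau + rho = 1."""
    if absorptance < 0 or transmittance < 0:
        raise ValueError("ratios must be non-negative")
    if absorptance + transmittance > 1.0 + 1e-12:
        raise ValueError("absorptance + transmittance cannot exceed 1")
    # Clamp tiny negative values caused by floating-point rounding.
    return max(0.0, 1.0 - absorptance - transmittance)


if __name__ == "__main__":
    # Wavelengths in micrometres, as in the slide examples.
    for name, wavelength_um in (("green light", 0.5), ("microwave", 3000.0)):
        wavelength_m = wavelength_um * 1e-6
        print(name, frequency_hz(wavelength_m), "Hz",
              photon_energy_j(wavelength_m), "J")
    # Hypothetical target absorbing 30% and transmitting 10% of incident flux.
    print("reflectance:", spectral_reflectance(0.3, 0.1))
```

The green-light case gives roughly 6 x 10^14 Hz and 3.98 x 10^-19 J, which is the slide's 39.756 x 10^-20 J written in normalized form.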
- Proportion of energy absorbed/transmitted/reflected will vary from target to target
  - Material type
  - Material condition
- For a given target, proportion of absorbed, transmitted, and reflected energy will vary with wavelength
- Ability to distinguish between targets

Atmospheric Interactions
- Energy detected by sensor is a function of
  - Atmospheric influences
  - Surface properties
- Atmosphere will affect EMR in three ways
  - Absorption
  - Transmission
  - Scattering

Constituents in the Atmosphere
- Responsible for absorption and scattering
- Water droplets/ice crystals: clouds
- Gas molecules: CO2, water vapor, ozone
- Aerosols: particles suspended in the atmosphere (smoke, dust, sea salt, chemical pollutants)

Atmospheric Windows
- Portions of the spectrum that transmit radiant energy effectively

| Wavelength Window | Radiation Type |
|---|---|
| *0.3 – 1.1 μm | UV, visible, reflected IR (near) |
| 1.5 – 1.8 μm | Reflected IR (shortwave) |
| 2.0 – 2.4 μm | Reflected IR (shortwave) |
| 3.0 – 5.0 μm | Thermal IR |
| 8.0 – 14.0 μm | Thermal IR |
| 10.5 – 12.5 μm | Thermal IR |
| > 0.6 cm | Microwave |

*scattering may limit transmission for UV and shorter visible wavelengths

Scattering Effects
- Scatter can occur anywhere in information flow: Sun -> Surface -> Sensor
- Reduces direct illumination from sun and creates diffuse illumination
- Creates noise and reduces contrast in image
- May add to or reduce signal received by sensor
- Filters may be used to reduce effects of haze and scatter

Types of Reflection (cont.)
- Uniform reflection
- Smooth surface
- Rough surface

Spectral Signature Concept
- Describes spectral reflectance of a target at different wavelengths of EMR
- Spectral reflectance curve: graphs reflectance response as a function of wavelength
- Key to separating and identifying objects
- Selection of optimum wavelength bands

More Spectral Reflectance Curves

Imaging Spectrometer Data of Healthy Green Vegetation in the San Luis Valley of Colorado Obtained on September 3, 1993 Using AVIRIS
224 channels each 10 nm wide with 20 x 20 m pixels (Jensen, 2000)

Air Photo Geometry and Stereo Viewing

Photographic Elements
- Fiducials
  - Minimum of four markers on photo
  - A) Placed on center of each side of photo, AND/OR
  - B) Placed in photo corners
  - Intersection of lines drawn between opposite fiducials marks the image principal point

Photographic Elements
- Principal Point (PP)
  - Center point of the image
  - Used for finding center of photograph and aligning imagery for stereo viewing

Photographic Elements
- Nadir
  - The point directly below the aircraft
  - If the image is truly vertical, then the principal point is the image of the nadir point

Basic Photo Geometry
- Height (H)
  - Altitude of the platform (and camera system) above the terrain
  - AGL = Above ground level
  - ASL = Above sea level

Basic Photo Geometry (cont)
- Focal Length (f)
  - Distance from focal point (lens) to film plane at back of camera

Target-to-Film Energy Path
- Radiation reflected up from the surface and atmosphere
- Rays converge at the focal point (lens)
- Film is exposed at the back of the camera
- Optical axis
  - Line from the focal point to the center of scene
- For a perfectly vertical photo, the optical axis, principal point, and nadir point will line up

Air Photo Geometry – Negative Reversal
- Reversal: geometric, tonal
- Contact positive print

Scale – Vertical Air Photo, Flat Terrain
- Similar to map scale
- Length of feature on image : Length of feature on ground
- Expressed as a dimensionless representative fraction (RF)
  - RF = 1:10,000 OR 1 / 10,000
- Can be determined
  - Knowing actual length of feature visible in image
  - Knowing the height of the camera (H) above ground level (hAGL) and the focal length (f) of the camera & using the concept of ‘similar triangles’

Calculate Scale

Scale – Note About ‘Height’
- Height of camera/platform
  - Should be stated as height above the terrain, e.g., 200 meters above ground level (AGL)
  - If height is stated in terms of height above mean sea level (H’), then you must know height of terrain (h) and adjust denominator of representative fraction accordingly
  - Height above terrain: H = H’ - h

Scale – Flat vs. Variable Terrain
- If terrain is flat, then scale can be determined for the entire image
- If height of terrain varies, then scale will also vary across the image
- Depending on the amount of variation, an average terrain value may be used

Computing Flat Terrain Horizontal Distance (HD)
- Photo-coordinates for each location: $(x_1, y_1)$ and $(x_2, y_2)$
- Apply the Pythagorean Theorem, with legs $(x_2 - x_1)$ and $(y_2 - y_1)$ and hypotenuse $c$
  - $a^2 + b^2 = c^2$
  - $(x_2 - x_1)^2 + (y_2 - y_1)^2 = c^2$
  - $c = \sqrt{(x_2 - x_1)^2 + (y_2 - y_1)^2}$
- Multiply $c$ by scale factor (SF) to find distance
  - If RF = 1:10,000, then SF = 10,000

Relief Displacement - Definition

Stereoscopic Viewing
- Provides 3rd dimension to air photo interpretation
- Stereopairs
  - Identify 3-D form of an object (volcano, building, etc.)
  - Overlapping vertical photographs
- Stereoscopes
  - Used to create synthetic visual response by forcing each eye to look at different views of same terrain
  - Gives perception of depth (3-D)

Stereo Viewing

Parallax

Parallax – Air Photo
- Apparent change in relative positions of stationary objects
  - Caused by change in viewing position
  - Example: looking out car window (side)
- Caused by taking photographs of the same object from different positions --> relative displacement
- Relative displacement
  - Forms the basis for 3-D viewing of successive overlapping photos

Stereoscopic Parallax

Types of Stereoscopes
- Lens
  - Pair of magnifying lenses that keep eyes working separately
  - Used with pre-aligned stereopairs called stereograms
  - Unable to view entire photo at one time
- Mirror/Reflecting
  - Separates lines of sight using mirrors or prisms
  - Can also magnify
  - Less portable

Oblique Photographs - Geometry

Oblique Photos
- High oblique
- Low oblique

StereoPlotters
- Various types
- Three main components
  1. Projection system that creates the terrain model
  2. Viewing system so operator can see model stereoscopically
  3. Measuring and tracing system to record elevation and trace features onto a map sheet

StereoPlotters
- Reverse process of projecting rays from terrain thru camera lens to film plane to create a terrain model
- Orientation and position of the aerial camera is recreated by adjusting projectors
  - Adjust for side-to-side movement (roll)
  - Adjust for up-down movement (pitch)
  - Adjust for orientation (yaw)
- Traced using floating mark
  - Planimetrically by raising/lowering mark to maintain contact with terrain
  - Contours by setting elevation and moving mark along terrain so that contact is maintained

Stereo Model

StereoPlotter

Orthophotography
- Images corrected for tilt and relief displacement
- Base of features will be shown in their true planimetric position
- Feature distortion is not eliminated, e.g., tall buildings will still appear to “lean”
- Perspective of the image is changed from point to parallel rays orthogonal to the surface
- Useful as base map

Digital Elevation Models
- Regular array of terrain elevations
- Normally stored as a grid or hexagonal pattern
- Created using
  - Ground survey data
  - Cartographic digitization of contour data
  - Photogrammetric measurements
  - Other remote sensing approaches
    - Interferometric synthetic aperture radar (InSAR)
    - Scanning LIDAR

Photo Mosaics
- Stitching together series of aerial photographs to cover large areal extents
- Uncontrolled
  - Photos are matched visually without ground control
  - Generally limited to center of images
  - Scale may not be constant
  - Unequal brightness between photos may make interpretation difficult

Photo Mosaics
- Controlled (relative to uncontrolled)
  - More rigorous
  - Photos have been rectified and precisely matched using ground control
  - Greater accuracy, but greater cost
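
Returning to the scale and flat-terrain horizontal distance slides in the air photo geometry section, the arithmetic can be sketched in Python as follows. This is a minimal illustration, not the slides' own material: it assumes the standard similar-triangles relation RF = f / H for a vertical photo over flat terrain, and the function names, focal length, heights and photo coordinates are all hypothetical.

```python
import math


def height_above_terrain_m(height_asl_m, terrain_elevation_m):
    """H = H' - h: convert height above sea level to height above terrain."""
    return height_asl_m - terrain_elevation_m


def scale_factor(focal_length_m, height_agl_m):
    """Denominator of the representative fraction, SF = H / f (RF = f / H).

    Assumes a vertical photo over flat terrain; both inputs in the same units.
    """
    if focal_length_m <= 0 or height_agl_m <= 0:
        raise ValueError("focal length and height must be positive")
    return height_agl_m / focal_length_m


def ground_distance_m(point_1, point_2, sf):
    """Photo distance c from the Pythagorean theorem, multiplied by SF.

    Points are (x, y) photo coordinates in meters.
    """
    c = math.hypot(point_2[0] - point_1[0], point_2[1] - point_1[1])
    return c * sf


if __name__ == "__main__":
    # Hypothetical flight: 152 mm lens, 1720 m above sea level, terrain at 200 m.
    h_agl = height_above_terrain_m(1720.0, 200.0)
    sf = scale_factor(0.152, h_agl)
    print(f"RF is about 1:{sf:,.0f}")
    # Two hypothetical points measured on the photo, in meters.
    d = ground_distance_m((0.010, 0.020), (0.040, 0.060), sf)
    print(f"ground distance is about {d:.1f} m")
```

With these made-up inputs the scale comes out near 1:10,000 and the 5 cm photo distance corresponds to about 500 m on the ground. Over variable terrain the same calculation would only hold approximately, using an average terrain height.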