
M2 - Mathématiques Appliquées à l’Économie et à la Finance
University Paris 1
Specialty: Modélisation et Méthodes Mathématiques en Économie et Finance
Erasmus Mundus

Stochastic Calculus 2

Annie Millet


Contents

1. Finite dimensional Itô processes
1.1. Reminders
1.2. Quadratic variation - Bracket of a local martingale
1.3. Real Itô processes
1.4. $\mathbb{R}^d$-valued Itô processes - General Itô's formula
1.4.1. d-dimensional Itô processes
1.4.2. The general Itô formula
1.5. Properties of Brownian motion
1.5.1. The Lévy characterizations
1.5.2. The Markov property
1.6. Exercises
2. Stochastic differential equations
2.1. Strong solution - Diffusion
2.2. Weak solution
2.3. Some properties of diffusions
2.3.1. Stochastic flows and the Markov property
2.3.2. Infinitesimal generator
2.3.3. Comparison Theorem
2.4. Bessel Processes
2.5. Diffusions and PDEs
2.5.1. Parabolic problem
2.5.2. The Feynman-Kac formula
2.6. Examples in finance
2.6.1. The Sturm-Liouville - Occupation time
2.6.2. Introduction to the Black & Scholes formula
2.7. Exercises
3. The Girsanov Theorem
3.1. Changing probability
3.2. The Cameron-Martin formula
3.3. The Girsanov Theorem
3.4. The Novikov condition and some generalizations
3.5. Existence of weak solutions
3.6. Examples of applications to computations of expectation
3.7. The predictable representation theorem
3.8. Exercises
4. Applications to finance
4.1. Continuous financial market
4.1.1. Financial market with d risky assets and k factors
4.1.2. Description of the strategies
4.1.3. Arbitrage-free condition
4.1.4. Risk-neutral probability
4.2. Extended Black & Scholes model
4.2.1. Arbitrage-free and change of probability - Risk Premium
4.2.2. Complete market
4.2.3. Computing the hedging portfolio in the Black & Scholes model
4.2.4. Volatility
4.3. The Cox-Ingersoll-Ross model
4.3.1. General Bessel processes
4.3.2. The Cox-Ingersoll-Ross model
4.3.3. Price of a zero-coupon
4.4. Exercises

1 Finite dimensional Itô processes

The aim of this chapter is to extend the notions of Brownian motion, Itô process and

the Itô formula from dimension 1 to any finite dimension d.

1.1 Reminders

In order to make these notes as self-contained as possible, let us start by recalling some

notions already introduced in the notes of Stochastic Calculus 1. The filtration provides the

information available at any time t.

Definition 1.1 Let $(\Omega, \mathcal{F}, P)$ be a probability space.

(i) A filtration is an increasing family $(\mathcal{F}_t, t \geq 0)$ of sub-σ-algebras of $\mathcal{F}$, i.e., $\mathcal{F}_s \subset \mathcal{F}_t \subset \mathcal{F}$ for $s \leq t$.

(ii) We say that the filtration $(\mathcal{F}_t)$ satisfies the usual conditions if it is:

• right-continuous, i.e., $\mathcal{F}_t = \mathcal{F}_{t+} := \bigcap_{s > t} \mathcal{F}_s$;

• complete, i.e., all σ-algebras $\mathcal{F}_t$ contain the $P$-null sets, which means that $P(A) = 0$ implies that $A \in \mathcal{F}_0$.

Convention. In all these notes, we are given a filtered probability space $(\Omega, \mathcal{F}, (\mathcal{F}_t, t \geq 0), P)$ and we assume that the filtration $(\mathcal{F}_t)$ satisfies the usual conditions. Furthermore, we suppose that $\mathcal{F}_0$ is the completion of the trivial σ-field $\{\emptyset, \Omega\}$, which implies that $\mathcal{F}_0$-measurable random variables are almost surely constant. This will not be recalled in the statements.

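Definition 1.2 An ($\mathbb{R}^d$-valued) stochastic process is a family $(X_t, t \geq 0)$ of random variables $X_t : (\Omega, \mathcal{F}) \to (\mathbb{R}^d, \mathcal{R}_d)$, where $\mathcal{R}_d$ denotes the Borel σ-algebra of $\mathbb{R}^d$.

(i) The stochastic process $(X_t)$ is $(\mathcal{F}_t)$-adapted if at each time $t \geq 0$, $X_t$ is measurable from $(\Omega, \mathcal{F}_t)$ to $(\mathbb{R}^d, \mathcal{R}_d)$.

(ii) The stochastic process $(X_t)$ is progressively measurable if at each time $t \geq 0$, the map $(s, \omega) \mapsto X_s(\omega)$ is measurable from $([0, t] \times \Omega, \mathcal{B}([0, t]) \otimes \mathcal{F}_t)$ to $(\mathbb{R}^d, \mathcal{R}_d)$.

(iii) Let $(X_t)$ be a stochastic process. Its natural filtration is $(\mathcal{F}_t^X, t \geq 0)$, where $\mathcal{F}_t^X = \sigma(\sigma(X_s, s \in [0, t]), \mathcal{N})$ and $\mathcal{N}$ denotes the $P$-null sets. This filtration is complete, but the right-continuity of $(X_t)$ alone does not make it right-continuous; it does satisfy the usual conditions when, for instance, $X$ is a Brownian motion.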

Theorem 1.3 Let (Xt ) be an Rd -valued, adapted and right-continuous stochastic process.

Then (Xt ) is progressively measurable.

The following notion of stopping time plays a crucial role in the theory.

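Definition 1.4 A random variable $\tau : \Omega \to [0, +\infty]$ is a stopping time (with respect to the filtration $(\mathcal{F}_t)$), also called an $(\mathcal{F}_t)$-stopping time, if $\{\tau \leq t\} \in \mathcal{F}_t$ for every $t \geq 0$. If $\tau$ is a stopping time with respect to $(\mathcal{F}_t)$, we set
$$\mathcal{F}_\tau = \{A \in \mathcal{F} : A \cap \{\tau \leq t\} \in \mathcal{F}_t, \ \forall t \in [0, +\infty[\}.$$
Finally, if $(X_t)$ is an $(\mathcal{F}_t)$-adapted stochastic process, we set $X_\tau(\omega) = X_{\tau(\omega)}(\omega)$; if $(X_t)$ is right-continuous and adapted, $X_\tau 1_{\{\tau < +\infty\}}$ is $\mathcal{F}_\tau$-measurable.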

The following examples of stopping times are important.

2009-2010

Stochastic Calculus 2 - Annie Millet


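Proposition 1.5 Let $(X_t, t \geq 0)$ be an $\mathbb{R}^d$-valued, $(\mathcal{F}_t)$-adapted process and $A \subset \mathbb{R}^d$. Let us recall that by convention, $\inf \emptyset = +\infty$.

(i) If $A$ is a closed set and $(X_t)$ is continuous, $D_A = \inf\{t \geq 0 : X_t \in A\}$ is an $(\mathcal{F}_t)$-stopping time.

(ii) If $A$ is an open set and $(X_t)$ is right-continuous, then the hitting time of $A$, denoted by $T_A = \inf\{t > 0 : X_t \in A\}$, is an $(\mathcal{F}_{t+})$-stopping time.

Proof. (i) Since $X_\cdot$ is continuous and $A$ is closed, we have
$$\{D_A \leq t\} = \Big\{\omega : \inf_{s \in (\mathbb{Q} \cap [0,t]) \cup \{t\}} d(X_s(\omega), A) = 0\Big\} \in \mathcal{F}_t, \quad \text{with } d(x, A) = \inf_{y \in A} d(x, y).$$

(ii) Since $\{T_A \leq t\} = \bigcap_{n \geq 1} \{T_A < t + 1/n\}$, to check that $\{T_A \leq t\} \in \mathcal{F}_{t+}$ it is enough to prove that $\{T_A < t\} \in \mathcal{F}_t$ for every $t$. If $0 < s < t$ and $X_s(\omega) \in A$, the right-continuity of $X_\cdot(\omega)$ and the fact that $A$ is open imply that there exists $\varepsilon \in ]0, t - s[$ such that $X_r(\omega) \in A$ for all $r \in [s, s + \varepsilon[$, an interval which contains rational numbers. Hence
$$\{T_A < t\} = \bigcup_{s \in \mathbb{Q} \cap ]0, t[} \{X_s \in A\} \in \mathcal{F}_t.$$
Let us recall that $T_A$ need not be an $(\mathcal{F}_t)$-stopping time when the filtration is not right-continuous. □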

Martingales are a central notion in the theory; they enjoy a fundamental property of constant use in finance: a martingale $(X_t, t \in [0, T])$ is completely determined by its value $X_T$ at the terminal time $T$, since $X_t = E[X_T \mid \mathcal{F}_t]$ for every $t \in [0, T]$.

Definition 1.6 A real-valued $(\mathcal{F}_t)$-adapted process $X = (X_t, t \geq 0)$ is an $(\mathcal{F}_t)$-martingale if

• $E[|X_t|] < +\infty$ (that is, $X_t \in L^1(\Omega)$) for every $t \geq 0$;

• $E[X_t \mid \mathcal{F}_s] = X_s$ for all $s \leq t$.

If $X$ is an $(\mathcal{F}_t)$-martingale such that $E(X_t^2) < +\infty$ for all $t \geq 0$, $X$ is called a square-integrable martingale.

One may define martingales without referring to a filtration $(\mathcal{F}_t)$: one then requires that $X$ is an $(\mathcal{F}_t^X)$-martingale, where $(\mathcal{F}_t^X)$ is the natural filtration of $X$. Clearly, any $(\mathcal{F}_t)$-martingale $X$ is also an $(\mathcal{F}_t^X)$-martingale.

Finally, an Rd -valued process X = (Xti , i = 1, · · · , d, t ≥ 0) is a (Ft )-martingale if each

component (Xti , t ≥ 0), i = 1, · · · , d is a (Ft )-martingale.

Recall that a martingale (Xt ) with respect to a filtration (Ft ) which satisfies the usual

conditions has a right-continuous left-limited modification (cad-lag). Therefore, all the martingales we will consider will be right-continuous with left limits. The optional sampling

theorem extends to right-continuous martingales.

Theorem 1.7 (Optional Sampling Theorem) Let $M$ be a (right-continuous) $(\mathcal{F}_t)$-martingale.

(i) Let $S, T$ be $(\mathcal{F}_t)$-stopping times bounded by a constant $K$, i.e., such that $S \leq T \leq K$. Then $M_T$ is integrable and
$$E(M_T \mid \mathcal{F}_S) = M_S \quad \text{a.s.}$$

(ii) Let $T$ be a stopping time. The stopped process $(M_t^T, t \geq 0)$ defined by
$$M_t^T = M_{T \wedge t} \tag{1.1}$$
is also an $(\mathcal{F}_t)$-martingale.


Proof. (i) For each $n \geq 1$, set $S_n(\omega) = k 2^{-n}$ on $\{S \in [(k-1)2^{-n}, k 2^{-n}[\}$, $k \leq K 2^n + 1$, and let $T_n$ be defined in a similar way. Then $S_n$ and $T_n$ are $(\mathcal{F}_t)$-stopping times taking finitely many values in $[0, K+1]$ and such that $S \leq S_n \leq T_n$, $\lim_n S_n = S$ and $\lim_n T_n = T$. Let $A \in \mathcal{F}_{S_n}$. Decomposing the set $A$ according to the values of $S_n$ and using the martingale property, we deduce
$$\int_A M_{S_n} \, dP = \sum_k \int_{A \cap \{S_n = k 2^{-n}\}} M_{K+1} \, dP.$$
Hence $M_{S_n} = E(M_{K+1} \mid \mathcal{F}_{S_n})$, and similarly $M_{T_n} = E(M_{K+1} \mid \mathcal{F}_{T_n})$. Since $\mathcal{F}_{S_n} \subset \mathcal{F}_{T_n}$, this yields
$$E(M_{T_n} \mid \mathcal{F}_{S_n}) = E\big(E(M_{K+1} \mid \mathcal{F}_{T_n}) \mid \mathcal{F}_{S_n}\big) = M_{S_n}.$$
Therefore, for any set $A \in \mathcal{F}_S \subset \mathcal{F}_{S_n}$, one has $\int_A M_{S_n} \, dP = \int_A M_{T_n} \, dP$. Furthermore, the sequences of stopping times $(S_n, n \geq 1)$ and $(T_n, n \geq 1)$ are decreasing, and the above computation shows that $(M_{S_n}, \mathcal{F}_{S_n})$ and $(M_{T_n}, \mathcal{F}_{T_n})$ are backward martingales, and hence are uniformly integrable. Since the martingale $M$ is right-continuous,
$$M_S = \lim_n M_{S_n} \quad \text{and} \quad M_T = \lim_n M_{T_n} \quad \text{a.s. and in } L^1.$$
We deduce that for any $A \in \mathcal{F}_S$,
$$\int_A M_S \, dP = \lim_n \int_A M_{S_n} \, dP = \lim_n \int_A M_{T_n} \, dP = \int_A M_T \, dP,$$
which concludes the proof. Notice that this proof easily extends to unbounded stopping times $S \leq T$ when the martingale $M$ is uniformly integrable.

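(ii) Let $s \leq t$, and apply part (i) to the stopping times $s \wedge T \leq t \wedge T \leq t$. We deduce that $(M_{t \wedge T})$ is an $(\mathcal{F}_{t \wedge T})$-martingale; in particular, $M_{t \wedge T}$ is integrable and $\mathcal{F}_{t \wedge T} \subset \mathcal{F}_t$-measurable, so that the process is $(\mathcal{F}_t)$-adapted. Let us check that it is also an $(\mathcal{F}_t)$-martingale.

Let $A \in \mathcal{F}_s$. Obviously, $A \cap \{T > s\} \in \mathcal{F}_{s \wedge T}$, and since $E(M_{t \wedge T} \mid \mathcal{F}_{s \wedge T}) = M_{s \wedge T}$, we have
$$\int_{A \cap \{T > s\}} M_{t \wedge T} \, dP = \int_{A \cap \{T > s\}} M_{s \wedge T} \, dP.$$
Furthermore, on the set $\{T \leq s\}$ it holds $M_{t \wedge T} = M_T = M_{s \wedge T}$; we deduce $\int_A M_{t \wedge T} \, dP = \int_A M_{s \wedge T} \, dP$, which concludes the proof. □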
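The Optional Sampling Theorem can be illustrated numerically. The following Python sketch is only an illustration under stated assumptions: the Brownian motion is replaced by a Gaussian random walk on a uniform grid, and the stopping time is the first grid time at which the walk reaches the closed set $[1, +\infty[$ (a discretized version of $D_A$ in Proposition 1.5). Since $\tau \wedge t$ is bounded, part (ii) predicts $E(B_{\tau \wedge t}) = E(B_0) = 0$. The function name `stopped_walk_mean` is a hypothetical helper, not part of the notes.

```python
import math
import random


def stopped_walk_mean(level=1.0, horizon=1.0, n_steps=200, n_paths=4000, seed=1):
    """Monte Carlo estimate of E(B_{tau ^ horizon}) for a Gaussian random walk.

    tau is the first grid time at which the walk is >= level (hitting time of
    the closed set [level, +inf)); the walk is frozen from tau on.
    Returns (estimated mean of the stopped value, fraction of stopped paths).
    """
    rng = random.Random(seed)
    sd = math.sqrt(horizon / n_steps)  # standard deviation of one increment
    total, stopped = 0.0, 0
    for _ in range(n_paths):
        b = 0.0
        for _ in range(n_steps):
            b += rng.gauss(0.0, sd)
            if b >= level:  # tau has occurred: freeze the path
                stopped += 1
                break
        total += b
    return total / n_paths, stopped / n_paths


mean, frac = stopped_walk_mean()
print(f"E(B_(tau ^ 1)) ~ {mean:.3f}, P(tau <= 1) ~ {frac:.3f}")
```

The estimated mean should be close to 0, and the fraction of stopped paths close to (slightly below, because of the time discretization) the reflection-principle value $P(\sup_{s \leq 1} B_s \geq 1) = 2P(B_1 \geq 1) \approx 0.317$.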

This theorem justifies the next definition, which localizes the notion of martingale by means of an increasing sequence of stopping times.

Definition 1.8 An $(\mathcal{F}_t)$-adapted right-continuous process $M$ is an $(\mathcal{F}_t)$-local martingale if there exists an increasing sequence $(\tau_n)$ of $(\mathcal{F}_t)$-stopping times such that $\tau_n \to +\infty$ a.s. and, for every $n$, the process $M^{\tau_n} := (M_{t \wedge \tau_n}, t \geq 0)$ is an $(\mathcal{F}_t)$-martingale.

Remark 1.9 (1) Let $M$ be a local martingale. If one replaces the sequence of stopping times $(\tau_n)$ by $(\tau_n \wedge n)$, we see that we may require that each of the martingales $M^{\tau_n}$ is uniformly integrable. For each $n \geq 1$, let $S_n = \inf\{t \geq 0 : |M_t| \geq n\}$. Then $S_n$ is a stopping time and, if the local martingale $M$ is continuous, replacing $\tau_n$ by $\tau_n \wedge n \wedge S_n$, we may impose that the martingale $M^{\tau_n}$ remains bounded.

(2) An integrable local martingale $M$ need not be a martingale. Such a counterexample can be found in [11], page 182.

Definition 1.10 The process $(B_t, t \geq 0)$ is a (standard) real (or 1-dimensional) Brownian motion if the following properties a)-c) are satisfied:

a) $P(B_0 = 0) = 1$ (the Brownian motion starts from 0).

b) For times $0 \leq s \leq t$, the random variable $B_t - B_s$ is centered Gaussian with variance $t - s$, denoted by $N(0, t - s)$.

c) For every integer $n$ and $0 \leq t_0 \leq t_1 \leq \cdots \leq t_n$, the random variables $B_{t_0}, B_{t_1} - B_{t_0}, \ldots, B_{t_n} - B_{t_{n-1}}$ are independent.


Recall that the trajectories of the Brownian motion $B$ are a.s. continuous, and even locally α-Hölder continuous for every $\alpha < \frac{1}{2}$, but that a.s. they are nowhere differentiable (and do not even belong to the space $C^{1/2}$).

We will often suppose that the filtration $(\mathcal{F}_t)$ is the natural Brownian filtration $(\mathcal{F}_t^B)$ associated with the Brownian motion $B$, which satisfies the usual conditions, so that the Optional Sampling Theorem 1.7 applies. Property c) shows that for $0 \leq s < t$, the increment $B_t - B_s$ is independent of the σ-algebra $\mathcal{F}_s^B = \sigma(\sigma(B_u, 0 \leq u \leq s), \mathcal{N})$. Therefore $E(B_t - B_s \mid \mathcal{F}_s^B) = E(B_t - B_s) = 0$, and the Brownian motion $B$ is an $(\mathcal{F}_t^B)$-martingale.

Let us recall some properties of the Brownian motion which will be used constantly. Their proof is left as an exercise.

Proposition 1.11 Let $(B_t)$ be a Brownian motion. Then:

(i) (Scaling) For any constant $c > 0$, the process $(c B_{t/c^2}, t \geq 0)$ is a Brownian motion, and $(-B_t, t \geq 0)$ is a Brownian motion.

(ii) $(B_t^2 - t, t \geq 0)$ is an $(\mathcal{F}_t^B)$-martingale.

(iii) For any $\theta \in \mathbb{R}$, the process $\big(\exp(\theta B_t - \frac{\theta^2}{2} t), t \geq 0\big)$ is an $(\mathcal{F}_t^B)$-martingale.

The next graphic shows three Brownian trajectories obtained by simulation.

[Figure: three simulated Brownian trajectories, time axis from 0 to 5.]
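Trajectories such as those in the figure can be generated by summing independent $N(0, \Delta t)$ increments, which follows from properties a)-c) of Definition 1.10. The Python sketch below (the helper `brownian_path`, the seed and the grid sizes are illustrative choices, not taken from the notes) also checks by Monte Carlo the identities $E(B_t^2 - t) = 0$ and $E(\exp(\theta B_t - \theta^2 t/2)) = 1$, which are consequences of Proposition 1.11 (ii)-(iii); such a check only tests these expectations, not the full martingale property.

```python
import math
import random


def brownian_path(horizon, n_steps, rng):
    """Sample (B_{t_0}, ..., B_{t_n}) on the uniform grid t_k = k * horizon / n_steps."""
    sd = math.sqrt(horizon / n_steps)
    path = [0.0]  # B_0 = 0
    for _ in range(n_steps):
        path.append(path[-1] + rng.gauss(0.0, sd))  # independent N(0, dt) increments
    return path


rng = random.Random(2009)
# Three trajectories on [0, 5], as in the figure.
paths = [brownian_path(5.0, 500, rng) for _ in range(3)]

# Monte Carlo check of E(B_t^2 - t) = 0 and E(exp(theta B_t - theta^2 t / 2)) = 1 at t = 1.
t, theta, n_paths = 1.0, 1.0, 20000
ends = [brownian_path(t, 20, rng)[-1] for _ in range(n_paths)]
mean_sq = sum(b * b - t for b in ends) / n_paths
mean_exp = sum(math.exp(theta * b - theta ** 2 * t / 2) for b in ends) / n_paths
print(f"E(B_1^2 - 1) ~ {mean_sq:.3f}, E(exp(B_1 - 1/2)) ~ {mean_exp:.3f}")
```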

1.2 Quadratic variation - Bracket of a local martingale

Let us recall the following notions.

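Definition 1.12 Let $(\Omega, \mathcal{F}, (\mathcal{F}_t, t \geq 0), P)$ be a filtered probability space. For $a = 1, 2$ and $T \in ]0, +\infty]$ set:
$$H_a(\mathcal{F}_t) = \Big\{h \text{ progressively measurable such that for all } t \geq 0, \ E \int_0^t |h_s|^a \, ds < \infty\Big\},$$
$$H_a^{loc}(\mathcal{F}_t) = \Big\{h \text{ progressively measurable such that for all } t \geq 0, \ \int_0^t |h_s|^a \, ds < \infty \text{ a.s.}\Big\},$$
$$H_a^T(\mathcal{F}_t) = \Big\{h \text{ progressively measurable such that } E \int_0^T |h_s|^a \, ds < +\infty\Big\}.$$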


In particular, let $h$ be a cad-lag $(\mathcal{F}_t)$-adapted process. If for every $t > 0$ one has $\int_0^t h_s^2 \, ds < +\infty$ a.s., then $h \in H_2^{loc}(\mathcal{F}_t)$; if $E \int_0^t h_s^2 \, ds < \infty$ for every $t$, then $h \in H_2(\mathcal{F}_t)$, ... When the filtration $(\mathcal{F}_t)$ is the natural Brownian filtration $(\mathcal{F}_t^B)$ of a Brownian motion $B$, we simply set $H_1^{loc} := H_1^{loc}(\mathcal{F}_t^B)$, $H_2^{loc} := H_2^{loc}(\mathcal{F}_t^B)$, ...

The following property of stochastic integrals with respect to the Brownian motion is

fundamental.

Theorem 1.13 Let $B$ be a Brownian motion and let $(\mathcal{F}_t^B)$ denote its natural filtration. Let $h \in H_2$; then the process defined by $t \mapsto I_t = \int_0^t h_s \, dB_s$ is a square integrable $(\mathcal{F}_t^B)$-martingale with a.s. continuous trajectories.

Let $h \in H_2^{loc}$ (for example a cad-lag, $(\mathcal{F}_t^B)$-adapted process such that $\int_0^t h_s^2 \, ds < +\infty$ a.s.). Then the stochastic integral $I_t = \int_0^t h_s \, dB_s$ can be constructed and the process $(I_t)$ is a continuous local martingale.
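For $h = B$, Theorem 1.13 can be illustrated by approximating $I_T = \int_0^T B_s \, dB_s$ with left-point Riemann sums, the integrand being evaluated at the left end of each interval as in the construction of the Itô integral. A minimal Python sketch, assuming a uniform grid and Gaussian increments: it checks $E(I_T) = 0$ and the Itô isometry $E(I_T^2) = E\int_0^T B_s^2 \, ds = T^2/2$ (recalled from Stochastic Calculus 1, not stated above). The helper name `ito_integral_b_db` is hypothetical. The discrete sum satisfies the exact algebraic identity $\sum_i B_{t_i}(B_{t_{i+1}} - B_{t_i}) = \frac12\big(B_T^2 - \sum_i (B_{t_{i+1}} - B_{t_i})^2\big)$, which the test also checks.

```python
import math
import random


def ito_integral_b_db(horizon, n_steps, rng):
    """Left-point (Ito) sum  sum_i B_{t_i} (B_{t_{i+1}} - B_{t_i})  on a uniform grid.

    Returns (Ito sum, B_T, sum of squared increments).
    """
    sd = math.sqrt(horizon / n_steps)
    b, ito_sum, sq_sum = 0.0, 0.0, 0.0
    for _ in range(n_steps):
        db = rng.gauss(0.0, sd)
        ito_sum += b * db  # integrand evaluated at the LEFT end point
        sq_sum += db * db
        b += db
    return ito_sum, b, sq_sum


rng = random.Random(7)
T, n_steps, n_paths = 1.0, 100, 5000
samples = [ito_integral_b_db(T, n_steps, rng) for _ in range(n_paths)]
mean_I = sum(s[0] for s in samples) / n_paths
mean_I2 = sum(s[0] ** 2 for s in samples) / n_paths
print(f"E(I_T) ~ {mean_I:.3f}, E(I_T^2) ~ {mean_I2:.3f} (isometry: T^2/2 = {T * T / 2})")
```

On a grid with $n$ intervals the discrete second moment is exactly $T^2 (n-1)/(2n)$, which tends to $T^2/2$ as the mesh goes to 0.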

Let us recall the notion of bounded variation, which is satisfied by indefinite deterministic integrals.

Definition 1.14 (i) Let $s < t$ and $f : [s, t] \to \mathbb{R}$. The function $f$ has bounded variation on the interval $[s, t]$ if $V_{[s,t]}(f) < +\infty$, where
$$V_{[s,t]}(f) := \sup\Big\{\sum_i |f(t_{i+1}) - f(t_i)| : \{s = t_0 < t_1 < \cdots < t_n = t\} \text{ subdivision of } [s, t]\Big\}.$$
The function $f : [0, +\infty[ \to \mathbb{R}$ is of bounded variation on $[0, +\infty[$ if it has bounded variation on any interval $[0, T]$.

(ii) The process $(X_t)$ is of bounded variation on $[s, t]$ (resp. of bounded variation) if a.s. its trajectories have bounded variation on $[s, t]$ (resp. have bounded variation).

Obviously, if $b \in H_1^{loc}(\mathcal{F}_t)$, then the process $t \mapsto I_t = \int_0^t b_s \, ds$ is a.s. of bounded variation. Indeed, for any $T \geq 0$, $V_{[0,T]}(I) \leq \int_0^T |b_t| \, dt$. Stochastic integrals behave completely differently.

Proposition 1.15 Let (Mt ) be an a.s. continuous (Ft ) local martingale with bounded variation. Then for any t the random variable Mt is a.s. constant (and equal to M0 ).

Proof. Since M0 is a.s. constant, replacing Mt by Mt − M0 , we may and do assume that

M0 = 0.

(i) Fix $T$ and suppose that $(M_t)$ is a continuous martingale with variation $V_{[0,T]}(M)$ (a.s.) bounded by $C$. Let $\Delta = \{t_0 = 0 < t_1 < \cdots < t_n = T\}$ be a subdivision of $[0, T]$, let $|\Delta| = \sup_{0 \leq i \leq n-1} |t_{i+1} - t_i|$ denote its mesh and, for $0 \leq k \leq n-1$, let $X_k = M_{t_{k+1}} - M_{t_k}$. Then
$$E(|M_T|^2) = E(|M_T - M_0|^2) = \sum_{0 \leq k < n} E(X_k^2) + 2 \sum_{0 \leq i < j \leq n-1} E(X_i X_j).$$
For $i < j$, $X_i$ is $\mathcal{F}_{t_j}$-measurable (since $t_{i+1} \leq t_j$) and the martingale property implies $E(X_j \mid \mathcal{F}_{t_j}) = 0$; hence $E(X_i X_j) = E(X_i E(X_j \mid \mathcal{F}_{t_j})) = 0$. Therefore, since $\sum_k X_k^2 \leq \sup_k |X_k| \sum_k |X_k| \leq C \sup_k |X_k|$,
$$E(|M_T|^2) = \sum_{k=0}^{n-1} E(X_k^2) \leq C \, E\Big(\sup_k |M_{t_{k+1}} - M_{t_k}|\Big).$$
Since $M$ has continuous trajectories, a.s. the function $t \mapsto M_t(\omega)$ is uniformly continuous on $[0, T]$. When $|\Delta| \to 0$, we deduce that $\sup_k |M_{t_{k+1}} - M_{t_k}| \to 0$ a.s., while $\sup_k |M_{t_{k+1}} - M_{t_k}| \leq C$. The Dominated Convergence Theorem yields $E(\sup_k |M_{t_{k+1}} - M_{t_k}|) \to 0$ as $|\Delta| \to 0$. We deduce that $E(|M_T|^2) = 0$, which implies that $M_T = 0$ a.s.

(ii) Let $(M_t)$ be an a.s. continuous martingale with bounded variation on $[0, T]$. For every $n$, let
$$\tau_n = \inf\{t \in [0, T] : V_{[0,t]}(M) \geq n\} \wedge T$$
(using the convention $\inf \emptyset = +\infty$). The definition of $V_{[0,t]}$ shows that $(V_{[0,t]}(M), t \in [0, T])$ is an $(\mathcal{F}_t)$-adapted, continuous process. Proposition 1.5 (i) shows that $(\tau_n)$ is a sequence of $(\mathcal{F}_t)$-stopping times. Obviously, $(\tau_n)$ is increasing and converges to $T$. The Optional Sampling Theorem 1.7 implies that the process $(M_t^{\tau_n}) = (M_{t \wedge \tau_n}, t \geq 0)$ is an $(\mathcal{F}_t)$-martingale. Furthermore, by construction, $V_{[0,T]}(M^{\tau_n}) \leq n$ a.s. Let $t \in [0, T]$; part (i) shows that $M_{t \wedge \tau_n} = 0$ a.s. Since the trajectories of $M$ are a.s. continuous, letting $n$ go to infinity, we deduce that $M_t = 0$ a.s.

(iii) Let finally $M$ be a continuous local martingale with bounded variation and let $(\tau_n)$ be a sequence of stopping times increasing to $T$ such that the process $(M_{t \wedge \tau_n}, t \geq 0)$ is a continuous martingale for every $n$. Then for every $n$, this martingale is of bounded variation on $[0, T]$, and hence (ii) shows that it is a.s. equal to 0. Using again the a.s. continuity of $M$ and letting $n \to +\infty$ concludes the proof. □

This proposition implies that a.s. the Brownian motion $B$ does not have bounded variation on $[0, T]$. The stochastic integral $t \mapsto \int_0^t \sigma_s \, dB_s$ cannot be defined ω by ω, and it is not of bounded variation unless it is identically equal to 0.
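The contrast between variation and quadratic variation can be seen on a single simulated path. The Python sketch below (an illustration with an arbitrary seed and arbitrary grid sizes) samples one path on a fine dyadic grid of $[0, 1]$ and computes, on coarser nested dyadic grids, the sums $\sum |\Delta B|$ and $\sum (\Delta B)^2$. The first is non-decreasing under refinement (triangle inequality) and grows roughly like $\sqrt{2n/\pi}$ for $n$ intervals, while the second stays close to $t = 1$, anticipating Theorem 1.18.

```python
import math
import random

rng = random.Random(11)
T, levels = 1.0, 16
n_fine = 2 ** levels
sd = math.sqrt(T / n_fine)
path = [0.0]
for _ in range(n_fine):
    path.append(path[-1] + rng.gauss(0.0, sd))  # one Brownian path on the finest grid

variations, quad_variations = [], []
for k in range(4, levels + 1):  # dyadic grids with 2^k intervals, all nested
    step = n_fine // 2 ** k
    pts = path[::step]  # B sampled at j * T / 2^k, j = 0, ..., 2^k
    incs = [b - a for a, b in zip(pts, pts[1:])]
    variations.append(sum(abs(x) for x in incs))
    quad_variations.append(sum(x * x for x in incs))

for k, v, q in zip(range(4, levels + 1), variations, quad_variations):
    print(f"2^{k:2d} intervals: sum |dB| = {v:8.2f}, sum dB^2 = {q:.4f}")
```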

The « right notion » for stochastic integrals, as well as for the Brownian motion, is that of quadratic variation. For any process $X$ defined on $[0, T]$, $0 \leq t \leq T$ and any subdivision $\Delta = \{t_0 = 0 < t_1 < \cdots < t_k = T\}$, let
$$T_t^\Delta(X) = \sum_{i=0}^{k-1} |X_{t_{i+1} \wedge t} - X_{t_i \wedge t}|^2.$$

Definition 1.16 Let $X : [0, T] \times \Omega \to \mathbb{R}$ be a stochastic process defined on $[0, T]$. We say that $X$ has finite quadratic variation if for every $t \in [0, T]$,
$$\langle X, X \rangle_t = \lim_{|\Delta| \to 0} T_t^\Delta(X) \quad \text{exists in probability},$$
in other words, if for any sequence $(\Delta_n)$ of subdivisions of $[0, T]$ whose mesh converges to 0, the sequence $(T_t^{\Delta_n}(X), n \geq 1)$ converges in probability to a limit denoted by $\langle X, X \rangle_t$.

The next two results relate processes with bounded variation and with finite quadratic

variation.

Proposition 1.17 Let X be a continuous process with bounded variation on [0, T ]. Then

the quadratic variation of X on [0, T ] is equal to 0.

Proof. Let $\Delta = \{0 = t_0 < t_1 < \cdots < t_k = T\}$ be a subdivision of $[0, T]$. Then
$$\sum_{i=0}^{k-1} |X_{t_{i+1}} - X_{t_i}|^2 \leq \sum_{i=0}^{k-1} |X_{t_{i+1}} - X_{t_i}| \, \sup_{0 \leq i < k} |X_{t_{i+1}} - X_{t_i}|.$$
Since the trajectories of $X$ are a.s. continuous on the interval $[0, T]$, we have $\sup_{0 \leq i \leq k-1} |X_{t_{i+1}} - X_{t_i}| \to 0$ a.s. when $|\Delta| \to 0$. Furthermore, a.s. $\sum_{i=0}^{k-1} |X_{t_{i+1}}(\omega) - X_{t_i}(\omega)| \leq V_{[0,T]}(X_\cdot(\omega)) < +\infty$, which concludes the proof.

Notice that the previous argument and the Dominated Convergence Theorem imply that if $V_{[0,t]}(X) \leq C$ a.s. for some constant $C$, then $T_t^\Delta(X)$ converges to 0 in $L^1$. □
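Proposition 1.17 can be checked on a deterministic example. For $X_t = \sin t$ on $[0, 2\pi]$, which has total variation $V_{[0,2\pi]}(X) = 4$, the Python sketch below evaluates $T_t^\Delta(X)$ exactly as in the definition (with $t_i \wedge t$) and compares it with the bound $V_{[0,T]}(X) \sup_i |X_{t_{i+1}} - X_{t_i}|$ used in the proof. The function names `quadratic_variation_sum` and `max_increment` are hypothetical helpers.

```python
import math


def quadratic_variation_sum(x, points, t):
    """T_t^Delta(X) = sum_i (X(t_{i+1} ^ t) - X(t_i ^ t))^2 for the subdivision `points`."""
    return sum((x(min(b, t)) - x(min(a, t))) ** 2 for a, b in zip(points, points[1:]))


def max_increment(x, points):
    """sup_i |X(t_{i+1}) - X(t_i)| over the subdivision."""
    return max(abs(x(b) - x(a)) for a, b in zip(points, points[1:]))


T = 2 * math.pi
total_variation = 4.0  # V_[0, 2 pi](sin) = 4
for n in (10, 100, 1000, 10000):
    grid = [T * i / n for i in range(n + 1)]
    q = quadratic_variation_sum(math.sin, grid, T)
    bound = total_variation * max_increment(math.sin, grid)
    print(f"n = {n:5d}: T^Delta = {q:.2e} <= V * max|dX| = {bound:.2e}")
```

For instance, with the subdivision $\{0, 0.3, 0.6, 1\}$, $X_s = s$ and $t = 0.5$, the truncation $t_i \wedge t$ gives $0.3^2 + 0.2^2 + 0 = 0.13$.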

The next result has been proved in the lectures of Stochastic Calculus 1.

Theorem 1.18 Let $B$ be a Brownian motion. Then $(B_t)$ has finite quadratic variation on any interval $[0, T]$ and $\langle B, B \rangle_t = t$. More precisely, when $|\Delta| \to 0$, $E(|T_t^\Delta(B) - t|^2) \to 0$, which means that the convergence holds in $L^2$.

Furthermore, if $\sigma \in H_2^{loc}$, the process $(\int_0^t \sigma_s \, dB_s, t \geq 0)$ has finite quadratic variation $\int_0^t \sigma_s^2 \, ds$ on any interval $[0, t]$.
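For a uniform subdivision of $[0, t]$ with $n$ intervals, the increments are independent $N(0, t/n)$ variables, so that $E(|T_t^\Delta(B) - t|^2) = \mathrm{Var}\big(\sum_i (\Delta B_i)^2\big) = n \cdot 2(t/n)^2 = 2t^2/n$, which makes the $L^2$ convergence of Theorem 1.18 explicit. The Python sketch below checks this rate by Monte Carlo and also approximates the quadratic variation of $\int_0^t s \, dB_s$, whose value for $t = 1$ should be $\int_0^1 s^2 \, ds = 1/3$; approximating the increments of the integral by $t_i \Delta B_i$ is an assumption of this sketch, and the helper `qv_error_sq` is hypothetical.

```python
import math
import random

rng = random.Random(3)
t, n_paths = 1.0, 2000


def qv_error_sq(n):
    """Monte Carlo estimate of E|T_t^Delta(B) - t|^2 for the uniform subdivision with n steps."""
    sd = math.sqrt(t / n)
    acc = 0.0
    for _ in range(n_paths):
        q = sum(rng.gauss(0.0, sd) ** 2 for _ in range(n))
        acc += (q - t) ** 2
    return acc / n_paths


errors = {n: qv_error_sq(n) for n in (10, 100)}
for n, e in errors.items():
    print(f"n = {n:3d}: E|T - t|^2 ~ {e:.4f}  (exact 2 t^2 / n = {2 * t * t / n:.4f})")

# Quadratic variation of I_t = int_0^t s dB_s along one path, increments ~ t_i * dB_i.
n = 20000
dt = t / n
qv_integral = sum((i * dt * rng.gauss(0.0, math.sqrt(dt))) ** 2 for i in range(n))
print(f"sum (s dB_s)^2 ~ {qv_integral:.4f} (expected 1/3)")
```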

We first extend this result to continuous local martingales.

Notation Let $\Delta = \{t_0 = 0 < t_1 < t_2 < \cdots\}$ be a subdivision of $[0, +\infty[$ such that for any $t > 0$, $\Delta \cap [0, t]$ only contains finitely many points. For any $t > 0$ (adding the point $t$ to the subdivision if necessary) and for any process $X$, set
$$T_t^\Delta(X) = \sum_{i \geq 0} (X_{t_{i+1} \wedge t} - X_{t_i \wedge t})^2. \tag{1.2}$$
The process $X$ has finite quadratic variation if for any $t$ the random variables $T_t^\Delta(X)$ converge in probability to $\langle X, X \rangle_t$ when the mesh $|\Delta|$ of the subdivision on $[0, t]$ converges to 0.

The following result is fundamental. It connects the quadratic variation of a process and

a martingale associated with the square of the process.

Theorem 1.19 Let $M$ be a continuous $(\mathcal{F}_t)$-local martingale. Then $M$ has finite quadratic variation and the quadratic variation process $\langle M, M \rangle$ is the unique increasing, adapted, continuous process null at zero such that
$$(M_t^2 - \langle M, M \rangle_t, t \geq 0) \text{ is an } (\mathcal{F}_t)\text{-local martingale}.$$
Furthermore, for any $t > 0$ and any sequence $(\Delta_n)$ of subdivisions with mesh $|\Delta_n|$ converging to 0, the sequence $\sup_{s \leq t} |T_s^{\Delta_n}(M) - \langle M, M \rangle_s|$ converges to 0 in probability.

If moreover $M$ is a square integrable martingale (that is, if $E(M_t^2) < +\infty$ for every $t$), then $(M_t^2 - \langle M, M \rangle_t, t \geq 0)$ is a continuous $(\mathcal{F}_t)$-martingale such that for any pair of bounded stopping times $S \leq T \leq C$,
$$E(M_T^2 - M_S^2 \mid \mathcal{F}_S) = E(|M_T - M_S|^2 \mid \mathcal{F}_S) = E(\langle M, M \rangle_T - \langle M, M \rangle_S \mid \mathcal{F}_S).$$

Proof. Uniqueness can be deduced from Proposition 1.15. Indeed, let $M_t^2 = Y_t + A_t = Z_t + B_t$, where $A, B$ are continuous processes with bounded variation null at 0, and $Y, Z$ are local martingales, which are continuous since the processes $M^2$, $A$ and $B$ are continuous. Then the difference $Y - Z = B - A$ is a continuous local martingale with bounded variation, null at 0. Proposition 1.15 shows that it is null.

The proof of the existence will be done in several steps. To ease the notation, we will omit the filtration when dealing with stopping times, martingales, ... Replacing $(M_t)$ by $(M_t - M_0, t \geq 0)$, which is also a (local) martingale, we may and do assume that $M_0 = 0$.


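(1) Suppose that $M$ is a martingale bounded by $C$. For any subdivision $\Delta$ and $s < t$, let $i$ and $k$ denote the integers such that $t_i \leq s < t_{i+1}$ and $t_k \leq t < t_{k+1}$.

If $i = k$, the martingale property implies that
$$E\big(T_t^\Delta(M) - T_s^\Delta(M) \mid \mathcal{F}_s\big) = E\big(|M_t - M_{t_i}|^2 - |M_s - M_{t_i}|^2 \mid \mathcal{F}_s\big) = E(|M_t - M_s|^2 \mid \mathcal{F}_s) = E(M_t^2 - M_s^2 \mid \mathcal{F}_s).$$
If $i < k$, then
$$E\big(|M_{t_{i+1}} - M_{t_i}|^2 \mid \mathcal{F}_s\big) = E\big(|M_{t_{i+1}} - M_s|^2 \mid \mathcal{F}_s\big) + |M_s - M_{t_i}|^2.$$
Using the convention $\sum_{j=n_1}^{n_2} x_j = 0$ if $n_1 > n_2$, we deduce
$$E\big(T_t^\Delta(M) - T_s^\Delta(M) \mid \mathcal{F}_s\big) = E\Big(|M_{t_{i+1}} - M_s|^2 + \sum_{j=i+1}^{k-1} |M_{t_{j+1}} - M_{t_j}|^2 + |M_t - M_{t_k}|^2 \,\Big|\, \mathcal{F}_s\Big).$$
On the other hand, for $s \leq a < b \leq c < d \leq t$,
$$E[(M_b - M_a)(M_d - M_c) \mid \mathcal{F}_s] = E\big[(M_b - M_a) E[M_d - M_c \mid \mathcal{F}_c] \mid \mathcal{F}_s\big] = 0.$$
Decomposing $M_t - M_s$ as a sum of increments using the points $\{s, t_{i+1}, \ldots, t_k, t\}$, we obtain
$$E\big(|M_t - M_s|^2 \mid \mathcal{F}_s\big) = E\Big(|M_{t_{i+1}} - M_s|^2 + \sum_{j=i+1}^{k-1} |M_{t_{j+1}} - M_{t_j}|^2 + |M_t - M_{t_k}|^2 \,\Big|\, \mathcal{F}_s\Big),$$
which yields
$$E(M_t^2 - M_s^2 \mid \mathcal{F}_s) = E\big((M_t - M_s)^2 \mid \mathcal{F}_s\big) = E\big(T_t^\Delta(M) - T_s^\Delta(M) \mid \mathcal{F}_s\big). \tag{1.3}$$
Therefore, the process $(M_t^2 - T_t^\Delta(M), t \geq 0)$ is a square integrable martingale and $E(M_t^2) = E(T_t^\Delta(M))$ for any $t \geq 0$.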

(2) Let $M$ be a continuous martingale bounded by $C$. Fix $a$ and let $(\Delta_n)$ be a sequence of subdivisions of $[0, a]$ with mesh converging to 0. We prove that the sequence $(T_a^{\Delta_n}(M), n \geq 0)$ converges in $L^2$ by showing that it is a Cauchy sequence in $L^2$.

Let $\Delta$ and $\Delta'$ be subdivisions and let $\tilde\Delta$ denote the subdivision obtained using the union of the points of $\Delta$ and $\Delta'$. Set
$$X = T^\Delta(M) - T^{\Delta'}(M).$$
Since $(M_t^2 - T_t^\Delta(M), t \geq 0)$ and $(M_t^2 - T_t^{\Delta'}(M), t \geq 0)$ are martingales, $X$ is also a martingale such that $X_0 = 0$ and $(X_t, 0 \leq t \leq a)$ remains bounded. The above computation with $X$ instead of $M$ shows that
$$E(X_a^2) = E\big[|T_a^\Delta(M) - T_a^{\Delta'}(M)|^2\big] = E\big[T_a^{\tilde\Delta}(X)\big].$$
Furthermore, $T_a^{\tilde\Delta}(X) \leq 2\big[T_a^{\tilde\Delta}(T^\Delta(M)) + T_a^{\tilde\Delta}(T^{\Delta'}(M))\big]$.

To check that the sequence $T_a^{\Delta_n}(M)$ is Cauchy, it is enough to check that $E\big[T_a^{\tilde\Delta}(T^\Delta(M))\big]$ converges to 0 as $|\Delta| + |\Delta'| \to 0$.


Let $s_k \in \tilde\Delta$ and let $t_l$ denote the unique element of $\Delta$ such that $t_l \leq s_k < s_{k+1} \leq t_{l+1}$. Then
$$T_{s_{k+1}}^\Delta(M) - T_{s_k}^\Delta(M) = (M_{s_{k+1}} - M_{t_l})^2 - (M_{s_k} - M_{t_l})^2 = (M_{s_{k+1}} - M_{s_k})(M_{s_{k+1}} + M_{s_k} - 2M_{t_l}).$$
We deduce that
$$T_a^{\tilde\Delta}\big(T^\Delta(M)\big) \leq T_a^{\tilde\Delta}(M) \, \sup_k |M_{s_{k+1}} + M_{s_k} - 2M_{t_l}|^2.$$
Since $M$ is a continuous and bounded process, the Dominated Convergence Theorem implies
$$E\Big(\sup_k |M_{s_{k+1}} + M_{s_k} - 2M_{t_l}|^4\Big) \to 0 \quad \text{when } |\Delta| + |\Delta'| \to 0.$$
Using Schwarz's inequality, it remains to check that the sequence $E(|T_a^{\tilde\Delta}(M)|^2)$ is bounded by some constant, which amounts to checking that
$$\sup_\Delta E(|T_a^\Delta(M)|^2) < +\infty.$$

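Let $\Delta$ be a subdivision containing the point $a = t_n$. Then
$$\begin{aligned}
|T_a^\Delta(M)|^2 &= \Big(\sum_{i=0}^{n-1} |M_{t_{i+1}} - M_{t_i}|^2\Big)^2 \\
&= \sum_{i=0}^{n-1} |M_{t_{i+1}} - M_{t_i}|^4 + 2 \sum_{i=0}^{n-2} |M_{t_{i+1}} - M_{t_i}|^2 \sum_{j=i+1}^{n-1} |M_{t_{j+1}} - M_{t_j}|^2 \\
&= \sum_{i=0}^{n-1} |M_{t_{i+1}} - M_{t_i}|^4 + 2 \sum_{i=0}^{n-2} |M_{t_{i+1}} - M_{t_i}|^2 \big(T_a^\Delta(M) - T_{t_{i+1}}^\Delta(M)\big).
\end{aligned}$$
Equation (1.3) shows that $E\big(T_a^\Delta(M) - T_{t_{i+1}}^\Delta(M) \mid \mathcal{F}_{t_{i+1}}\big) = E\big((M_a - M_{t_{i+1}})^2 \mid \mathcal{F}_{t_{i+1}}\big)$. Since $(M_{t_{i+1}} - M_{t_i})^2$ is $\mathcal{F}_{t_{i+1}}$-measurable, we deduce
$$\begin{aligned}
E(|T_a^\Delta(M)|^2) &= \sum_{i=0}^{n-1} E(|M_{t_{i+1}} - M_{t_i}|^4) + 2 \sum_{i=0}^{n-2} E\Big(|M_{t_{i+1}} - M_{t_i}|^2 \, E\big(T_a^\Delta(M) - T_{t_{i+1}}^\Delta(M) \mid \mathcal{F}_{t_{i+1}}\big)\Big) \\
&= \sum_{i=0}^{n-1} E(|M_{t_{i+1}} - M_{t_i}|^4) + 2 \sum_{i=0}^{n-2} E\big(|M_{t_{i+1}} - M_{t_i}|^2 (M_a - M_{t_{i+1}})^2\big) \\
&\leq E\Big[\Big(\sup_k |M_{t_{k+1}} - M_{t_k}|^2 + 2 \sup_k |M_a - M_{t_k}|^2\Big) T_a^\Delta(M)\Big].
\end{aligned}$$
Since $\sup_t |M_t| \leq C$ and $M_0 = 0$, equation (1.3) used with $0$ and $a$ implies $E(T_a^\Delta(M)) = E(M_a^2) \leq C^2$, and hence
$$E(|T_a^\Delta(M)|^2) \leq 12 C^2 E(T_a^\Delta(M)) \leq 12 C^4. \tag{1.4}$$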


Therefore, the sequence $(T_a^{\Delta_n}(M), n \geq 1)$ is Cauchy in $L^2$; it converges in $L^2$ (and hence in probability) to a limit which is denoted by $\langle M, M \rangle_a$.

(3) Let $M$ be a continuous martingale bounded by $C$. We check that the process $\langle M, M \rangle$ satisfies the properties claimed in the statement of the theorem. Let $(\Delta_n)$ be a sequence of subdivisions with mesh $|\Delta_n|$ converging to 0. For $m < n$, the process $T^{\Delta_n}(M) - T^{\Delta_m}(M)$ is a martingale and Doob's inequality implies
$$E\Big[\sup_{0 \leq s \leq a} |T_s^{\Delta_n}(M) - T_s^{\Delta_m}(M)|^2\Big] \leq 4 E\big[|T_a^{\Delta_n}(M) - T_a^{\Delta_m}(M)|^2\big].$$
Let $m(k)$ be an integer such that $E(|T_a^{\Delta_m}(M) - T_a^{\Delta_{m(k)}}(M)|^2) \leq 2^{-k}$ for $m \geq m(k)$. We may and do suppose that the sequence $m(k)$ is strictly increasing. The Borel-Cantelli lemma implies that the subsequence $(T_\cdot^{\Delta_{m(k)}}(M), k \geq 1)$, extracted from the sequence $(T_\cdot^{\Delta_n}(M), n \geq 1)$, converges a.s. uniformly on the interval $[0, a]$.

Using a diagonal procedure, we may extract a further subsequence which a.s. converges uniformly on the interval $[0, N]$ for any integer $N$. Since each process $T_\cdot^{\Delta}(M)$ is continuous, the limiting process $\langle M, M \rangle$ is a.s. continuous. Furthermore, since the limit does not depend on the sequence of subdivisions, we may assume that this sequence is such that $\Delta_n \subset \Delta_{n+1}$ and that $\bigcup_n \Delta_n$ is dense in $[0, +\infty[$.

Let $s < t$ be elements of $\bigcup_n \Delta_n$; there exists $n_0$ such that $s, t \in \Delta_n$ for any $n \geq n_0$. Then obviously $T_s^{\Delta_n} \leq T_t^{\Delta_n}$ for $n \geq n_0$, so that $\langle M, M \rangle_s \leq \langle M, M \rangle_t$. By continuity, we deduce that the process $\langle M, M \rangle$ is increasing. Finally, letting $n$ go to infinity in (1.3) written with the subdivision $\Delta_n$ and using the uniform integrability of the sequence $(T_t^{\Delta_n}(M), n \geq 1)$ (which is bounded in $L^2$ by (1.4)), we deduce that $M^2 - \langle M, M \rangle$ is a martingale.

(4) Let $M$ be a continuous local martingale and $(T_n)$ a sequence of stopping times a.s. increasing to $+\infty$ and such that for any $n$ the process $X(n) = M^{T_n}$ defined by (1.1) is a bounded continuous martingale. The preceding steps show that there exists an increasing process $A(n)$ null at 0 such that for every $n$, the process $(X(n)_t^2 - A(n)_t, t \geq 0)$ is a martingale. Furthermore, since $X(n+1)^{T_n} = X(n)$, the stopped process
$$\big(X(n+1)^2 - A(n+1)\big)^{T_n} = X(n)^2 - A(n+1)^{T_n}$$
is a martingale and $A(n+1)^{T_n}$ is an increasing process null at 0. The uniqueness proved at the beginning of the proof shows that $A(n+1)^{T_n} = A(n)^{T_n}$ a.s. This defines without ambiguity an increasing process $\langle M, M \rangle_t = A(n)_t$ for every $t \leq T_n$. Uniqueness is deduced from that on any interval $[0, T_n]$.

Fix $t > 0$, $\varepsilon > 0$ and $\delta > 0$. The sequence of stopping times $(T_n)$ goes to $+\infty$, so that for large enough $n$, $S = T_n$ is such that $P(S \leq t) < \delta$ and the martingale $M^S$ remains bounded. Parts (2) and (3) show that as the mesh $|\Delta|$ of the subdivision goes to 0, $\sup_{s \leq t} |T_s^\Delta(M^S) - \langle M^S, M^S \rangle_s|$ converges to 0 in probability. Since $T_s^\Delta(M^S) = T_s^\Delta(M)$ and $\langle M^S, M^S \rangle_s = \langle M, M \rangle_s$ for $s \in [0, S]$, we deduce that for small $|\Delta|$ one has
$$P\Big(\sup_{s \leq t} |T_s^\Delta(M) - \langle M, M \rangle_s| \geq \varepsilon\Big) \leq \delta + P\Big(\sup_{s \leq t} |T_s^\Delta(M^S) - \langle M^S, M^S \rangle_s| \geq \varepsilon\Big) \leq 2\delta.$$

(5) Let finally $M$ be a square integrable martingale. Then Doob's inequality implies
$$E\Big(\sup_{0 \leq s \leq t} |M_s|^2\Big) \leq 4 E(|M_t|^2).$$


On the other hand, let $(T_n)$ be an increasing sequence of stopping times a.s. converging to $+\infty$ and such that the process $X_n = M^{T_n}$ is a continuous bounded martingale for any $n$. For every $t$, part (3) yields $E(\langle M, M \rangle_{t \wedge T_n}) = E(|M_{t \wedge T_n}|^2)$. The discrete martingale $(M_{t \wedge T_n}, n \geq 1)$ with respect to $(\mathcal{F}_{t \wedge T_n}, n \geq 1)$ is closed by $M_t \in L^2$, and since $\bigvee_n \mathcal{F}_{t \wedge T_n} = \mathcal{F}_t$, it converges in $L^2$ to $M_t$. Furthermore, the monotone convergence theorem shows that
$$E(\langle M, M \rangle_t) = \lim_n E(\langle M, M \rangle_{t \wedge T_n}) = \lim_n E(|M_{t \wedge T_n}|^2).$$
Therefore, $E(\langle M, M \rangle_t) = E(M_t^2) < +\infty$. Doob's inequality shows that for $s \leq t$,
$$|M_s^2 - \langle M, M \rangle_s| \leq \sup_{r \leq t} M_r^2 + \langle M, M \rangle_t \in L^1.$$
Exercise 1.4 (ii) shows that this continuous local martingale, which is dominated on $[0, t]$ by an integrable random variable and hence uniformly integrable, is a martingale. The Optional Sampling Theorem 1.7 concludes the proof. □
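Identity (1.3), and its consequence $E(M_t^2) = E(T_t^\Delta(M))$ for every subdivision $\Delta$, can be tested on the square integrable martingale $M_t = B_t^2 - t$ of Proposition 1.11 (ii), for which $E(M_1^2) = E(B_1^4) - 2E(B_1^2) + 1 = 3 - 2 + 1 = 2$. This martingale is not bounded, but the computation leading to (1.3) only uses the martingale property and square integrability. The Python sketch below uses an arbitrary non-uniform subdivision and seed, both illustrative choices.

```python
import math
import random

rng = random.Random(5)
t, n_paths = 1.0, 40000
grid = [0.0, 0.1, 0.35, 0.5, 0.8, 1.0]  # an arbitrary (non-uniform) subdivision of [0, t]

sum_T, sum_M2 = 0.0, 0.0
for _ in range(n_paths):
    b, m_prev, t_delta = 0.0, 0.0, 0.0  # M_0 = B_0^2 - 0 = 0
    for a, c in zip(grid, grid[1:]):
        b += rng.gauss(0.0, math.sqrt(c - a))  # B_c given B_a
        m = b * b - c  # M_c = B_c^2 - c
        t_delta += (m - m_prev) ** 2  # accumulate T_t^Delta(M)
        m_prev = m
    sum_T += t_delta
    sum_M2 += m_prev ** 2  # m_prev = M_t at the end of the grid

print(f"E(T_t^Delta(M)) ~ {sum_T / n_paths:.3f}, E(M_t^2) ~ {sum_M2 / n_paths:.3f}, exact 2")
```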

The bracket of two continuous local martingales $M$ and $N$ is defined by polarization.

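Theorem 1.20 Let $M$ and $N$ be continuous $(\mathcal{F}_t)$-local martingales. There exists a unique adapted, continuous process with bounded variation and null at 0, denoted by $\langle M, N \rangle$, such that $(M_t N_t - \langle M, N \rangle_t, t \geq 0)$ is a continuous local martingale. Furthermore, for any sequence $(\Delta_n)$ of subdivisions of $[0, t]$ with mesh converging to 0, the sequence
$$\sup_{s \leq t} \Big|\sum_{t_i \in \Delta_n} (M_{t_{i+1} \wedge s} - M_{t_i \wedge s})(N_{t_{i+1} \wedge s} - N_{t_i \wedge s}) - \langle M, N \rangle_s\Big|$$
converges to 0 in probability.

Proof. Uniqueness is a straightforward consequence of Proposition 1.15. To prove the existence of this process, it suffices to check that
$$\langle M, N \rangle = \frac{1}{4}\big[\langle M + N, M + N \rangle - \langle M - N, M - N \rangle\big]$$
has the required properties. Since $MN = \frac{1}{4}[(M + N)^2 - (M - N)^2]$ and each product of increments of $M$ and $N$ is $\frac{1}{4}$ of the difference of the squared increments of $M + N$ and $M - N$, Theorem 1.19 applied to $M + N$ and $M - N$ shows that $MN - \langle M, N \rangle$ is a continuous local martingale and yields the convergence of the sums of products. Moreover, $\langle M, N \rangle$ is the difference of two increasing processes, hence it has bounded variation. □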
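The polarization formula of Theorem 1.20 is also an exact identity at the level of the discrete sums: for any subdivision, $\sum_i \Delta M_i \Delta N_i = \frac14\big[\sum_i (\Delta M_i + \Delta N_i)^2 - \sum_i (\Delta M_i - \Delta N_i)^2\big]$. The Python sketch below checks this on $M = B$ and $N = \rho B + \sqrt{1-\rho^2}\, W$, where $W$ is a second Brownian motion independent of $B$ (an example chosen for illustration, with $\rho = 0.6$); in that case the sums should approach $\langle M, N \rangle_t = \rho t$. The helper `sum_sq` is hypothetical.

```python
import math
import random

rng = random.Random(13)
t, n, rho = 1.0, 20000, 0.6
sd = math.sqrt(t / n)

d_m, d_n = [], []
for _ in range(n):
    db, dw = rng.gauss(0.0, sd), rng.gauss(0.0, sd)  # independent increments of B and W
    d_m.append(db)  # M = B
    d_n.append(rho * db + math.sqrt(1 - rho ** 2) * dw)  # N = rho B + sqrt(1 - rho^2) W


def sum_sq(incs):
    """Quadratic variation sum for a list of increments."""
    return sum(x * x for x in incs)


cross = sum(x * y for x, y in zip(d_m, d_n))
polar = (sum_sq([x + y for x, y in zip(d_m, d_n)]) - sum_sq([x - y for x, y in zip(d_m, d_n)])) / 4
print(f"sum dM dN = {cross:.4f}, polarization = {polar:.4f}, rho * t = {rho * t}")
```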

Definition 1.21 The above process $\langle M, N \rangle$ is called the bracket of $M$ and $N$, and the process $\langle M, M \rangle$, also denoted by $\langle M \rangle$, is the increasing process associated with $M$.

Definition 1.22 A process $X$ is a continuous semi-martingale if for all $t$ it can be written as $X_t = X_0 + M_t + A_t$, where $(M_t)$ is a continuous $(\mathcal{F}_t)$-local martingale, $(A_t)$ is a continuous process with bounded variation, and $M_0 = A_0 = 0$.

The following result is easily deduced from the above statements.

Proposition 1.23 The quadratic variation of a continuous semi-martingale $X = X_0 + M + A$ is finite and equal to $\langle M, M\rangle$. The decomposition of $X$ is unique (up to identification with an indistinguishable process). One denotes $\langle X, X\rangle = \langle M, M\rangle$, and this increasing process is called the bracket of $X$. Similarly, if $X = X_0 + M + A$ and $Y = Y_0 + N + B$ are continuous semi-martingales (with continuous local martingales $M$, $N$ and processes $A$, $B$ with bounded variation), the bracket of $X$ and $Y$ is defined as

$$\langle X, Y\rangle = \langle M, N\rangle = \frac14\Big[\langle X+Y, X+Y\rangle - \langle X-Y, X-Y\rangle\Big].$$


Proof. Let $X = X_0 + M + A$ be a continuous semi-martingale and let $X = \bar X_0 + \bar M + \bar A$ be another decomposition of $X$, where $\bar A$ is a process with bounded variation, $\bar M$ is a continuous local martingale and $\bar M_0 = \bar A_0 = 0$. Then $\bar X_0 = X_0$, and the process $M - \bar M = \bar A - A$ is a continuous local martingale with bounded variation. Proposition 1.15 shows that it is a.s. equal to 0.

Let $\Delta$ be a subdivision of $[0,t]$. Proposition 1.17 shows that $A$ has a null quadratic variation. Since

$$\sum_i (X_{t_{i+1}} - X_{t_i})^2 = \sum_i (M_{t_{i+1}} - M_{t_i})^2 + 2\sum_i (M_{t_{i+1}} - M_{t_i})(A_{t_{i+1}} - A_{t_i}) + \sum_i (A_{t_{i+1}} - A_{t_i})^2,$$

in order to prove that the quadratic variations of $X$ and $M$ are equal, it is enough to check that the cross term converges to 0. This follows from the inequality

$$\Big|\sum_i (M_{t_{i+1}} - M_{t_i})(A_{t_{i+1}} - A_{t_i})\Big| \le \sup_i |M_{t_{i+1}} - M_{t_i}|\ \mathrm{Var}_{[0,t]}(A).$$

The trajectories of $M$ are a.s. continuous (and hence uniformly continuous on $[0,t]$) and $\mathrm{Var}_{[0,t]}(A) < +\infty$; this yields that a.s. the right-hand side of the previous inequality converges to 0 as $|\Delta|\to 0$.

□
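Proposition 1.23 says that a continuous part with bounded variation does not change the quadratic variation. A possible numerical illustration, assuming $M = B$ is a Brownian motion and making the hypothetical choice $A_t = \sin t$ (continuous, with bounded variation, null at 0), compares the discrete quadratic variations of $X = M + A$ and of $M$, and checks the bound on the cross term used in the proof.

```python
import math
import random


def realized_qv(path):
    """Discrete quadratic variation: sum of squared increments along the grid."""
    return sum((path[i + 1] - path[i]) ** 2 for i in range(len(path) - 1))


rng = random.Random(1)
t, n = 2.0, 100_000
dt = t / n
m = [0.0]                                      # M = B, a Brownian motion
for _ in range(n):
    m.append(m[-1] + rng.gauss(0.0, math.sqrt(dt)))
a = [math.sin(i * dt) for i in range(n + 1)]   # hypothetical A_t = sin t (bounded variation)
x = [mi + ai for mi, ai in zip(m, a)]          # X = M + A, with X_0 = 0

cross = sum((m[i + 1] - m[i]) * (a[i + 1] - a[i]) for i in range(n))
sup_increment = max(abs(m[i + 1] - m[i]) for i in range(n))
variation_a = sum(abs(a[i + 1] - a[i]) for i in range(n))  # variation of A along the grid
```

The cross term is controlled by `sup_increment * variation_a`, which tends to 0 with the mesh, so `realized_qv(x)` and `realized_qv(m)` both approach $t$.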

1.3 Real Itô processes.

Definition 1.24 Let $(B_t)$ be a Brownian motion, $(\mathcal{F}^B_t)$ its natural filtration, $x\in\mathbb{R}$, $b\in H^1_{loc}(\mathcal{F}^B_t)$ and $\sigma\in H^2_{loc}(\mathcal{F}^B_t)$. The process $X$ defined by

$$X_t = x + \int_0^t\sigma_s\,dB_s + \int_0^t b_s\,ds \qquad (1.5)$$

is an Itô process. It has continuous trajectories. The process $b$ is its drift coefficient, the process $\sigma$ its diffusion coefficient and $x$ is the initial condition. One often writes (1.5) as

$$dX_t = b_t\,dt + \sigma_t\,dB_t,\quad X_0 = x. \qquad (1.6)$$

Theorem 1.13 shows that $M_t = \int_0^t\sigma_s\,dB_s$ is a continuous $(\mathcal{F}^B_t)$-local martingale. Therefore, an Itô process $X_t = x + \int_0^t\sigma_s\,dB_s + \int_0^t b_s\,ds$ is a continuous semi-martingale; $t\mapsto\int_0^t\sigma_s\,dB_s$ is called its « martingale part » (even if it is only a local martingale) and $t\mapsto x + \int_0^t b_s\,ds$ is its « bounded variation part ». The martingale part of $X$ is a « true » $(\mathcal{F}^B_t)$-martingale if the diffusion coefficient $\sigma$ is càdlàg and such that $E\int_0^t\sigma_s^2\,ds < +\infty$ for every $t > 0$, or more generally if $\sigma\in H^2(\mathcal{F}^B_t)$. It is an $L^2$-bounded martingale if $\sigma\in H^2_\infty(\mathcal{F}^B_t)$.

The results of the previous section immediately yield important properties of Itô processes.

Corollary 1.25 (i) The bracket of an Itô process $X_t = x + \int_0^t\sigma_s\,dB_s + \int_0^t b_s\,ds$ is given by $\langle X, X\rangle_t = \int_0^t\sigma_s^2\,ds$ for all $t\ge 0$.

(ii) More generally, the bracket of the Itô processes $X_t = x + \int_0^t\sigma_s\,dB_s + \int_0^t b_s\,ds$ and $Y_t = y + \int_0^t\bar\sigma_s\,dB_s + \int_0^t\bar b_s\,ds$ is that of their martingale parts, that is $\langle X, Y\rangle_t = \int_0^t\sigma_s\bar\sigma_s\,ds$.

(iii) Let $(X_t = x + \int_0^t\sigma_s\,dB_s + \int_0^t b_s\,ds = \tilde x + \int_0^t\tilde\sigma_s\,dB_s + \int_0^t\tilde b_s\,ds,\ t\ge 0)$ be an Itô process, where $b,\tilde b\in H^1_{loc}(\mathcal{F}^B_t)$, $\sigma,\tilde\sigma\in H^2_{loc}(\mathcal{F}^B_t)$ and $x,\tilde x\in\mathbb{R}$. Then $x = \tilde x$, $b = \tilde b$ $ds\otimes dP$-a.e. and $\sigma = \tilde\sigma$ $ds\otimes dP$-a.e., which means that the decomposition of $X$ is unique.

(iv) Let $(X_t)$ be an Itô process which is an $(\mathcal{F}^B_t)$-local martingale. Then its drift coefficient $b$ is $ds\otimes dP$-a.e. equal to 0.


Proof. (i) and (ii) are immediate consequences of Proposition 1.17, Theorem 1.18 and of the polarization identity.

(iii) Since $X_0 = x = \tilde x$, the difference $D_t = \int_0^t(\tilde b_s - b_s)\,ds = \int_0^t(\sigma_s - \tilde\sigma_s)\,dB_s$ is both a process with bounded variation on $[0,T]$ (because of the deterministic integral) and a continuous local martingale (because of the stochastic integral and of Theorem 1.13). Thus Proposition 1.17 shows that the quadratic variation of the stochastic integral of $\sigma - \tilde\sigma$ is a.s. null on any interval $[0,t]$, that is $\int_0^t|\sigma_s - \tilde\sigma_s|^2\,ds = 0$. This implies that $\sigma = \tilde\sigma$ $ds\otimes dP$-a.e. on $[0,t]\times\Omega$. Therefore, $\int_0^t(b_s - \tilde b_s)\,ds = 0$ for all $t$, which implies that $b = \tilde b$ $ds\otimes dP$-a.e. and concludes the proof.

(iv) The Itô process $X_t = x + \int_0^t\sigma_s\,dB_s + \int_0^t b_s\,ds$ is continuous. Since $X$ and $t\mapsto x + \int_0^t\sigma_s\,dB_s$ are continuous $(\mathcal{F}^B_t)$-local martingales, their difference $t\mapsto\int_0^t b_s\,ds$ is a continuous local martingale which has bounded variation on any interval $[0,t]$. Proposition 1.15 implies that it is a.s. constant, and hence equal to 0; thus $b = 0$ $ds\otimes dP$-a.e.

□
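Corollary 1.25 (i) can be illustrated along a single simulated path. In the sketch below, the coefficients are hypothetical adapted choices, $\sigma_s = 1 + \frac12\sin(B_s)$ and $b_s = -B_s$, frozen at the left end of each grid interval; the resulting $X$ is then the Itô process with these step coefficients, and its discrete quadratic variation is compared with $\int_0^t\sigma_s^2\,ds$. This is an illustration, not part of the proof.

```python
import math
import random

rng = random.Random(2)
t, n = 1.0, 100_000
dt = t / n
b_val = 0.0               # current value of B_s
x_path = [0.0]            # X_0 = x = 0
bracket_formula = 0.0     # left Riemann sum of int_0^t sigma_s^2 ds
for _ in range(n):
    sigma = 1.0 + 0.5 * math.sin(b_val)   # hypothetical adapted diffusion coefficient
    drift = -b_val                        # hypothetical adapted drift coefficient
    db = rng.gauss(0.0, math.sqrt(dt))
    x_path.append(x_path[-1] + drift * dt + sigma * db)
    bracket_formula += sigma ** 2 * dt
    b_val += db

realized = sum((x_path[i + 1] - x_path[i]) ** 2 for i in range(n))
```

The drift enters `realized` only through terms of order `dt`, which is the numerical counterpart of the fact that the bracket of $X$ is that of its martingale part.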

1.4 $\mathbb{R}^d$-valued Itô processes - General Itô’s formula

1.4.1 d-dimensional Itô processes

We first extend the Brownian motion from $\mathbb{R}$ to $\mathbb{R}^r$.

Definition 1.26 Let $B_t = (B^1_t, B^2_t, \ldots, B^r_t)$, $t\ge 0$, be an $r$-dimensional process and $(\mathcal{F}_t)$ a filtration. The process $B$ is an $r$-dimensional $(\mathcal{F}_t)$ standard Brownian motion if the one-dimensional processes $(B^i)$, $1\le i\le r$, are independent real-valued $(\mathcal{F}_t)$-Brownian motions. In other words, $B_0 = 0$ and for $0\le s\le t$:

(i) $B_t - B_s$ is a Gaussian vector $\mathcal{N}(0,(t-s)\mathrm{Id}_r)$ with mean zero and covariance matrix $(t-s)\mathrm{Id}_r$;

(ii) the increment $B_t - B_s$ is independent of $\mathcal{F}_s$.

When the filtration is not given explicitly, $B$ is a standard $r$-dimensional Brownian motion if it is a Brownian motion for its natural filtration $(\mathcal{F}^B_t)$. If $B$ is a standard Brownian motion for the filtration $(\mathcal{F}_t)$, it is also a standard Brownian motion for its natural filtration $(\mathcal{F}^B_t)$. A Brownian motion is a process with independent increments.

We will identify a vector $(x^1,\ldots,x^r)\in\mathbb{R}^r$ with the column matrix of its components in the canonical basis. Thus, we set

$$B_t = \begin{pmatrix} B^1_t\\ \vdots\\ B^r_t\end{pmatrix}.$$

We generalize Itô processes in a similar way. Let $\mathcal{M}(d,r)$ denote the set of $d\times r$ matrices, with $d$ rows and $r$ columns. The process $X = (X^i_j(t) : 1\le i\le d, 1\le j\le r, t\ge 0)$ taking values in $\mathcal{M}(d,r)$ belongs to $H^1_{loc}(\mathcal{F}_t)$ (resp. $H^2_{loc}(\mathcal{F}_t)$, $H^2_2(\mathcal{F}_t)$, $H^2_\infty(\mathcal{F}_t)$) if each component $X^i_j$ is a real-valued process which belongs to $H^1_{loc}(\mathcal{F}_t)$ (resp. $H^2_{loc}(\mathcal{F}_t)$, $H^2_2(\mathcal{F}_t)$, $H^2_\infty(\mathcal{F}_t)$).

Definition 1.27 Let $B$ be an $r$-dimensional $(\mathcal{F}_t)$ standard Brownian motion, $\sigma = (\sigma^i_k : 1\le i\le d, 1\le k\le r) : \Omega\times[0,+\infty[\to\mathcal{M}(d,r)$ in $H^2_{loc}$, $b = (b^1,\ldots,b^d) : \Omega\times[0,+\infty[\to\mathbb{R}^d$ in $H^1_{loc}$, and $x = (x^1,\ldots,x^d)\in\mathbb{R}^d$. The $\mathbb{R}^d$-valued process $(X_t)$ is an Itô process with initial condition $x$, diffusion coefficient $\sigma$ and drift coefficient $b$ if for every $i = 1,\ldots,d$,

$$X^i_t = x^i + \sum_{k=1}^r\int_0^t\sigma^i_k(s)\,dB^k_s + \int_0^t b^i(s)\,ds. \qquad (1.7)$$

Using matrix notation, and identifying a vector $x = (x^1,\ldots,x^d)$ in $\mathbb{R}^d$ with the column matrix of its components in the canonical basis, one can rewrite equation (1.7) as follows:

$$X_t = x + \int_0^t\sigma(s)\,dB_s + \int_0^t b(s)\,ds,$$

where

$$x = \begin{pmatrix} x^1\\ \vdots\\ x^d\end{pmatrix},\quad X_t = \begin{pmatrix} X^1_t\\ \vdots\\ X^d_t\end{pmatrix},\quad \sigma(s) = \begin{pmatrix}\sigma^1_1(s) & \cdots & \sigma^1_r(s)\\ \vdots & & \vdots\\ \sigma^d_1(s) & \cdots & \sigma^d_r(s)\end{pmatrix},\quad b(s) = \begin{pmatrix} b^1(s)\\ \vdots\\ b^d(s)\end{pmatrix}.$$

Let us consider the one-dimensional Itô processes

$$\xi_t = x + \sum_{k=1}^r\int_0^t\sigma_k(s)\,dB^k_s + \int_0^t b(s)\,ds\quad\text{and}\quad \bar\xi_t = \bar x + \sum_{k=1}^r\int_0^t\bar\sigma_k(s)\,dB^k_s + \int_0^t\bar b(s)\,ds,$$

for $b,\bar b\in H^1_{loc}$ and $\sigma_k,\bar\sigma_k\in H^2_{loc}$. Then the process $\int_0^t b(s)\,ds$ has continuous trajectories and bounded variation, while the process $\sum_{k=1}^r\int_0^t\sigma_k(s)\,dB^k_s$ is a continuous local martingale as a sum of continuous local martingales. The processes $\xi$ and $\bar\xi$ are semi-martingales. In order to compute their brackets, note that for every index $k = 1,\ldots,r$, the process

$$\Big(\Big(\int_0^t\sigma_k(s)\,dB^k_s\Big)^2 - \int_0^t|\sigma_k(s)|^2\,ds,\ t\ge 0\Big)$$

is an $(\mathcal{F}_t)$-local martingale.

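Let $k\ne l$; suppose first that the processes $\sigma_k$ and $\sigma_l$ are step processes, that is

$$\sigma_k = \sum_{i=0}^{n-1}\xi^i_k\,1_{]t_i,t_{i+1}]}\quad\text{and}\quad\sigma_l = \sum_{i=0}^{n-1}\xi^i_l\,1_{]t_i,t_{i+1}]},$$

with $t_0 = 0 < t_1 < \cdots$ and $\xi^i_k$, $\xi^i_l$ $\mathcal{F}_{t_i}$-measurable.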

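Let $s < t$; without loss of generality, we may and do assume that the instants $s$ and $t$ belong to the list of the $t_i$, with $s = t_I$ and $t = t_n$. Then,

$$\begin{aligned} E\Big(\int_0^t\sigma_k(u)\,dB^k_u\int_0^t\sigma_l(u)\,dB^l_u\,\Big|\,\mathcal{F}_s\Big) &= \sum_{i=0}^{n-1}\sum_{j=0}^{n-1}E\Big(\xi^i_k\xi^j_l\,[B^k_{t_{i+1}} - B^k_{t_i}][B^l_{t_{j+1}} - B^l_{t_j}]\,\Big|\,\mathcal{F}_s\Big)\\ &= \int_0^s\sigma_k(u)\,dB^k_u\int_0^s\sigma_l(u)\,dB^l_u.\end{aligned}$$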

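Indeed, the terms with $i, j < I$ are $\mathcal{F}_s$-measurable and their sum is the right-hand side, while the conditional expectations of all the other terms vanish. For example, the independence of $\mathcal{F}_{t_i}$, $B^k_{t_{i+1}} - B^k_{t_i}$ and $B^l_{t_{i+1}} - B^l_{t_i}$ implies: if $I\le i = j$, since $E[(B^k_{t_{i+1}} - B^k_{t_i})(B^l_{t_{i+1}} - B^l_{t_i})\,|\,\mathcal{F}_{t_i}] = E[(B^k_{t_{i+1}} - B^k_{t_i})(B^l_{t_{i+1}} - B^l_{t_i})] = 0$, one deduces

$$E\Big(\xi^i_k\xi^i_l\,E[(B^k_{t_{i+1}} - B^k_{t_i})(B^l_{t_{i+1}} - B^l_{t_i})\,|\,\mathcal{F}_{t_i}]\,\Big|\,\mathcal{F}_{t_I}\Big) = 0.$$

The independence of $B^l_{t_{j+1}} - B^l_{t_j}$ and $\mathcal{F}_{t_j}$ yields:

if $i < I\le j$, then $\xi^i_k(B^k_{t_{i+1}} - B^k_{t_i})\,E\big(\xi^j_l\,E(B^l_{t_{j+1}} - B^l_{t_j}\,|\,\mathcal{F}_{t_j})\,\big|\,\mathcal{F}_{t_I}\big) = 0$;

if $I\le i < j$, then $E\big(\xi^i_k(B^k_{t_{i+1}} - B^k_{t_i})\,\xi^j_l\,E(B^l_{t_{j+1}} - B^l_{t_j}\,|\,\mathcal{F}_{t_j})\,\big|\,\mathcal{F}_{t_I}\big) = 0$.

The cases $j < i$ are treated symmetrically.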

This property can be extended to processes $\sigma_k$ and $\sigma_l$ of $H^2_2(\mathcal{F}_t)$, from which one deduces that

$$\Big(\Big(\sum_{k=1}^r\int_0^t\sigma_k(s)\,dB^k_s\Big)^2 - \sum_{k=1}^r\int_0^t|\sigma_k(s)|^2\,ds,\ t\ge 0\Big)$$

is an $(\mathcal{F}_t)$-martingale. A localization argument shows that this process is an $(\mathcal{F}_t)$-local martingale when one only knows that $\sigma_k$, $1\le k\le r$, belong to $H^2_{loc}(\mathcal{F}_t)$.

The bracket of the processes $\xi$ and $\bar\xi$ is that of their martingale parts $m_t = \sum_{k=1}^r\int_0^t\sigma_k(s)\,dB^k_s$ and $\bar m_t = \sum_{k=1}^r\int_0^t\bar\sigma_k(s)\,dB^k_s$, that is

$$\langle\xi,\bar\xi\rangle_t = \langle m,\bar m\rangle_t = \int_0^t\sum_{k=1}^r\sigma_k(s)\bar\sigma_k(s)\,ds.$$

The bracket of the local martingale $m$ is equal to that of $\xi$, that is

$$\langle\xi,\xi\rangle_t = \langle m, m\rangle_t = \int_0^t\sum_{k=1}^r|\sigma_k(s)|^2\,ds = \int_0^t\|\sigma(s)\|^2\,ds,$$

where $\|\sigma(s)\|$ denotes the Euclidean norm in $\mathbb{R}^r$ of the vector $(\sigma_1(s),\ldots,\sigma_r(s))$.
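The formula $\langle\xi,\bar\xi\rangle_t = \int_0^t\sum_k\sigma_k(s)\bar\sigma_k(s)\,ds$ can be checked by simulation in the simplest case of constant coefficients. The Python sketch below assumes $r = 3$, hypothetical constant vectors $\sigma$, $\bar\sigma$ and hypothetical constant drifts; it compares the discrete cross-variation of $\xi$ and $\bar\xi$ with $t\sum_k\sigma_k\bar\sigma_k$, and the discrete quadratic variation of $\xi$ with $t\|\sigma\|^2$.

```python
import math
import random

sigma = [1.0, 0.5, -0.3]      # hypothetical constant diffusion coefficients of xi
sigma_bar = [0.2, 1.0, 0.4]   # hypothetical constant diffusion coefficients of xi_bar
drift, drift_bar = 0.3, -1.0  # hypothetical constant drifts (they do not affect the brackets)
r, t, n = 3, 1.0, 50_000
dt = t / n
rng = random.Random(3)

cross, quad = 0.0, 0.0
for _ in range(n):
    db = [rng.gauss(0.0, math.sqrt(dt)) for _ in range(r)]  # increments of B^1, ..., B^r
    d_xi = drift * dt + sum(s * d for s, d in zip(sigma, db))
    d_xi_bar = drift_bar * dt + sum(s * d for s, d in zip(sigma_bar, db))
    cross += d_xi * d_xi_bar
    quad += d_xi ** 2

expected_cross = t * sum(s * sb for s, sb in zip(sigma, sigma_bar))  # 0.2 + 0.5 - 0.12
expected_quad = t * sum(s * s for s in sigma)                         # ||sigma||^2 t
```

Only the products of the same Brownian components contribute in the limit, since the cross-brackets $\langle B^k, B^l\rangle$, $k\ne l$, vanish.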

1.4.2 The general Itô formula

The results of the previous section are gathered in the following proposition.

Proposition 1.28 Let $(B_t)$ be an $r$-dimensional standard $(\mathcal{F}_t)$-Brownian motion and $(X_t)$ a $d$-dimensional Itô process of the form (1.7). Then $X$ has continuous trajectories. For any $i = 1,\ldots,d$, the process $(\int_0^t b^i(s)\,ds, t\ge 0)$ is continuous, with bounded variation and null at time 0. The process $\int_0^t\sigma(s)\,dB_s$ is a continuous local martingale (which means that each of its components is a continuous local martingale) null at time 0. The decomposition is unique. The bracket of the components $X^i$ and $X^j$, $1\le i,j\le d$, is

$$\langle X^i, X^j\rangle_t = \int_0^t\sum_{k=1}^r\sigma^i_k(s)\sigma^j_k(s)\,ds.$$

The Itô formula, proved in the lectures of Stochastic Calculus 1, can be generalized to multidimensional Itô processes. Let us first recall its simplest version in dimension one.

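Theorem 1.29 Let $X_t = x + \int_0^t\sigma(s)\,dB_s + \int_0^t b(s)\,ds$, where $x\in\mathbb{R}$, $(B_t)$ is an $(\mathcal{F}_t)$-Brownian motion, $b\in H^1_{loc}$ and $\sigma\in H^2_{loc}$. Let $f:\mathbb{R}\to\mathbb{R}$ be a function of class $C^2$. Then for any $t\ge 0$,

$$\begin{aligned} f(X_t) &= f(x) + \int_0^t f'(X_s)\,dX_s + \int_0^t\frac12 f''(X_s)\,d\langle X, X\rangle_s \qquad (1.8)\\ &= f(x) + \int_0^t f'(X_s)\sigma(s)\,dB_s + \int_0^t\Big[f'(X_s)b(s) + \frac12 f''(X_s)\sigma^2(s)\Big]ds.\end{aligned}$$

Formally, one can write

$$df(X_t) = f'(X_t)\,dX_t + \frac12 f''(X_t)\,d\langle X, X\rangle_t,\quad\text{where } \langle X, X\rangle_t = \int_0^t\sigma^2(s)\,ds.$$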
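As a sanity check of (1.8), take $X = B$ and $f(x) = x^2$: the formula reads $B_t^2 = 2\int_0^t B_s\,dB_s + t$, the extra term $t$ coming from $\frac12\int_0^t f''(B_s)\,d\langle B, B\rangle_s$. The sketch below approximates the stochastic integral by left-point Riemann sums on a uniform grid (a discretization choice consistent with the Itô integral) and checks this identity on one simulated path.

```python
import math
import random

rng = random.Random(4)
t, n = 1.0, 100_000
dt = t / n
b = 0.0
ito_sum = 0.0                  # left-point sum for int_0^t f'(B_s) dB_s = int_0^t 2 B_s dB_s
for _ in range(n):
    db = rng.gauss(0.0, math.sqrt(dt))
    ito_sum += 2.0 * b * db    # integrand evaluated at the left end point
    b += db

lhs = b ** 2                   # f(B_t) with f(x) = x^2
rhs = ito_sum + t              # f(0) + int f'(B) dB + (1/2) int f''(B) d<B, B>
```

Dropping the second-order term (the classical chain rule) would leave a discrepancy `lhs - ito_sum` close to $t$, not to 0.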

The Itô formula has the following formulation in arbitrary dimension. Its proof, which is similar to that in dimension one, is omitted. Denote by $A^*$ the transpose of the matrix $A$.


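Theorem 1.30 Let $B$ be a standard $r$-dimensional $(\mathcal{F}_t)$-Brownian motion, $\sigma$ a process taking values in $\mathcal{M}(d,r)$ which belongs to $H^2_{loc}(\mathcal{F}_t)$, $b$ an $\mathbb{R}^d$-valued process which belongs to $H^1_{loc}(\mathcal{F}_t)$, and $x\in\mathbb{R}^d$. Let $X_t = x + \int_0^t\sigma(s)\,dB_s + \int_0^t b(s)\,ds$ denote the Itô process defined by equations (1.7) for $i = 1,\ldots,d$, and let $f:\mathbb{R}^d\to\mathbb{R}$ be of class $C^2$. Let $a(s) = \sigma(s)\sigma(s)^*$ denote the $d\times d$ matrix defined by $a_{i,j}(s) = \sum_{k=1}^r\sigma^i_k(s)\sigma^j_k(s)$ for $i,j\in\{1,\ldots,d\}$. Then one has:

$$\begin{aligned} f(X_t) &= f(x) + \int_0^t\sum_{i=1}^d\frac{\partial f}{\partial x_i}(X_s)\,dX^i_s + \frac12\int_0^t\sum_{i,j=1}^d\frac{\partial^2 f}{\partial x_i\partial x_j}(X_s)\,d\langle X^i, X^j\rangle_s \qquad (1.9)\\ &= f(x) + \int_0^t\sum_{k=1}^r\sum_{i=1}^d\frac{\partial f}{\partial x_i}(X_s)\sigma^i_k(s)\,dB^k_s + \int_0^t\sum_{i=1}^d\frac{\partial f}{\partial x_i}(X_s)b^i(s)\,ds\\ &\quad + \frac12\int_0^t\sum_{i,j=1}^d\frac{\partial^2 f}{\partial x_i\partial x_j}(X_s)a_{i,j}(s)\,ds. \qquad (1.10)\end{aligned}$$

Let $\frac{\partial^2 f}{\partial x^2}$ denote the symmetric matrix $\big(\frac{\partial^2 f}{\partial x_i\partial x_j}, 1\le i,j\le d\big)$, $(\cdot,\cdot)$ the scalar product in $\mathbb{R}^d$, and $\frac{\partial f}{\partial x}$ the column matrix whose components are the partial derivatives $\frac{\partial f}{\partial x_i}$. Then the Itô formula has the formal expression

$$df(X_t) = \Big(\frac{\partial f}{\partial x}(X_t), dX_t\Big) + \frac12\,\mathrm{Trace}\Big(\sigma(t)\sigma^*(t)\frac{\partial^2 f}{\partial x^2}(X_t)\Big)dt.$$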
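The $ds$ term of (1.10), $\sum_i\frac{\partial f}{\partial x_i}b^i + \frac12\mathrm{Trace}\big(\sigma\sigma^*\frac{\partial^2 f}{\partial x^2}\big)$, can be evaluated numerically at a point. The following Python sketch is an illustration only: it assumes constant $b$ and $\sigma$, builds $a = \sigma\sigma^*$, and approximates the derivatives of $f$ by central finite differences. The function names `matrix_a` and `ito_dt_coefficient` are hypothetical. For $f(x) = \|x\|^2$ the exact value is $2(x,b) + \mathrm{Trace}(a)$.

```python
def matrix_a(sigma):
    """Illustrative helper: a = sigma sigma^*, with sigma given as d rows of length r."""
    d = len(sigma)
    return [[sum(si * sj for si, sj in zip(sigma[i], sigma[j])) for j in range(d)]
            for i in range(d)]


def ito_dt_coefficient(f, x, b, sigma, h=1e-3):
    """Illustrative helper: finite-difference value of sum_i f_i b^i + (1/2) sum_{i,j} a_ij f_ij at x."""
    d = len(x)
    a = matrix_a(sigma)

    def shifted(i, hi, j, hj):
        # The point x + hi * e_i + hj * e_j (i may equal j).
        y = list(x)
        y[i] += hi
        y[j] += hj
        return y

    total = 0.0
    for i in range(d):
        grad_i = (f(shifted(i, h, i, 0.0)) - f(shifted(i, -h, i, 0.0))) / (2 * h)
        total += grad_i * b[i]
        for j in range(d):
            hess_ij = (f(shifted(i, h, j, h)) - f(shifted(i, h, j, -h))
                       - f(shifted(i, -h, j, h)) + f(shifted(i, -h, j, -h))) / (4 * h * h)
            total += 0.5 * a[i][j] * hess_ij
    return total


def squared_norm(y):
    return sum(v * v for v in y)


x = [1.0, -2.0]
b = [0.5, 0.25]
sigma = [[1.0, 0.0, 2.0],      # d = 2 rows, r = 3 columns
         [0.5, -1.0, 0.0]]
value = ito_dt_coefficient(squared_norm, x, b, sigma)
exact = 2 * sum(xi * bi for xi, bi in zip(x, b)) + sum(matrix_a(sigma)[i][i] for i in range(2))
```

Here $(x, b) = 0$ and $\mathrm{Trace}(a) = 5 + 1.25$, so `exact` equals $6.25$; the finite-difference value agrees up to rounding because $f$ is quadratic.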

If the function f also depends on time, we have the second formulation of the Itô formula.

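Theorem 1.31 Let $B$ be a standard $r$-dimensional $(\mathcal{F}_t)$-Brownian motion, $\sigma$ a process taking values in $\mathcal{M}(d,r)$ which belongs to $H^2_{loc}(\mathcal{F}_t)$, $b$ an $\mathbb{R}^d$-valued process which belongs to $H^1_{loc}(\mathcal{F}_t)$, and $x\in\mathbb{R}^d$. Let $X_t = x + \int_0^t\sigma(s)\,dB_s + \int_0^t b(s)\,ds$ denote the Itô process defined by equations (1.7) for $i = 1,\ldots,d$, and let $f:[0,+\infty[\times\mathbb{R}^d\to\mathbb{R}$ be of class $C^{1,2}$, which means of class $C^1$ with respect to the time variable $t$ and of class $C^2$ with respect to the space variable $x$. Then, if one sets $a(s) = \sigma(s)\sigma(s)^*$, one has:

$$\begin{aligned} f(t,X_t) &= f(0,x) + \int_0^t\frac{\partial f}{\partial t}(s,X_s)\,ds + \int_0^t\sum_{i=1}^d\frac{\partial f}{\partial x_i}(s,X_s)\,dX^i_s + \frac12\int_0^t\sum_{i,j=1}^d\frac{\partial^2 f}{\partial x_i\partial x_j}(s,X_s)\,d\langle X^i, X^j\rangle_s \qquad (1.11)\\ &= f(0,x) + \int_0^t\sum_{k=1}^r\sum_{i=1}^d\frac{\partial f}{\partial x_i}(s,X_s)\sigma^i_k(s)\,dB^k_s\\ &\quad + \int_0^t\Big[\frac{\partial f}{\partial t}(s,X_s) + \sum_{i=1}^d\frac{\partial f}{\partial x_i}(s,X_s)b^i(s) + \frac12\sum_{i,j=1}^d\frac{\partial^2 f}{\partial x_i\partial x_j}(s,X_s)a_{i,j}(s)\Big]ds.\end{aligned}$$

Again, equation (1.11) can be written in a formal and compact way as follows:

$$df(t,X_t) = \frac{\partial f}{\partial t}(t,X_t)\,dt + \Big(\frac{\partial f}{\partial x}(t,X_t), dX_t\Big) + \frac12\,\mathrm{Trace}\Big(\sigma(t)\sigma^*(t)\frac{\partial^2 f}{\partial x^2}(t,X_t)\Big)dt.$$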
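A classical instance of Theorem 1.31 with $d = r = 1$ and $X = B$ is $f(t,x) = \exp(x - t/2)$: since $\frac{\partial f}{\partial t} + \frac12\frac{\partial^2 f}{\partial x^2} = 0$ and $\frac{\partial f}{\partial x} = f$, formula (1.11) reduces to $f(t,B_t) = 1 + \int_0^t f(s,B_s)\,dB_s$, with no $ds$ term. The sketch below checks this identity on a simulated path, approximating the stochastic integral by left-point sums (a discretization choice, not part of the theorem).

```python
import math
import random


def f(t, x):
    """f(t, x) = exp(x - t/2): df/dt + (1/2) d2f/dx2 = 0 and df/dx = f."""
    return math.exp(x - t / 2)


rng = random.Random(5)
t_end, n = 1.0, 100_000
dt = t_end / n
b = 0.0
stochastic = 0.0   # left-point sum for int_0^t df/dx(s, B_s) dB_s
for k in range(n):
    db = rng.gauss(0.0, math.sqrt(dt))
    stochastic += f(k * dt, b) * db
    b += db

lhs = f(t_end, b)
rhs = f(0.0, 0.0) + stochastic   # (1.11) with no ds term for this f
```

The same computation with a function for which the $ds$ bracket in (1.11) does not vanish would leave a drift term that the stochastic sum alone cannot reproduce.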


A useful particular case of the previous formula is an « integration by parts formula ». We leave the proof as an exercise. Let $f:[0,+\infty[\to\mathbb{R}$ be of class $C^1$ and $(B_t)$ be a real-valued standard Brownian motion; then

$$\int_0^t f(s)\,dB_s = f(t)B_t - \int_0^t B_s f'(s)\,ds.$$
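The integration by parts formula can be checked on a simulated path. The sketch below takes the hypothetical choice $f(s) = s^2$, so that $\int_0^t s^2\,dB_s = t^2B_t - \int_0^t 2sB_s\,ds$, and approximates both integrals by left-point sums on a uniform grid.

```python
import math
import random

rng = random.Random(8)
t, n = 1.0, 10_000
dt = t / n
b = 0.0
stochastic = 0.0   # left-point sum for int_0^t f(s) dB_s with f(s) = s^2
lebesgue = 0.0     # left-point sum for int_0^t B_s f'(s) ds with f'(s) = 2s
for i in range(n):
    s = i * dt
    db = rng.gauss(0.0, math.sqrt(dt))
    stochastic += s ** 2 * db
    lebesgue += b * 2 * s * dt
    b += db

rhs = t ** 2 * b - lebesgue
```

On a fixed grid the two sides differ only by terms of order `dt` (a discrete summation by parts), since $f$ is deterministic and no second-order Itô term appears.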

The Itô formula is a very useful tool. One of its consequences is the following inequality, which gives upper estimates of the moments of stochastic integrals in terms of moments of the integrand. Let $B$ be a real-valued standard Brownian motion, $X$ a continuous $(\mathcal{F}^B_t)$-adapted process, $p\in[1,+\infty[$ and $T > 0$ such that $E\int_0^T|X_s|^{2p}\,ds < +\infty$. Then

$$E\Big(\Big|\int_0^T X_s\,dB_s\Big|^{2p}\Big)\le[p(2p-1)]^p\,T^{p-1}\,E\int_0^T|X_s|^{2p}\,ds. \qquad (1.12)$$
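For $p = 2$ and $X = B$, both sides of (1.12) can be computed exactly, which gives an idea of how conservative the constant is. By Itô’s formula (Theorem 1.29), $\int_0^T B_s\,dB_s = (B_T^2 - T)/2$, and $B_T = \sqrt T Z$ with $Z\sim\mathcal{N}(0,1)$, so the left-hand side is $\frac{T^4}{16}E[(Z^2-1)^4] = \frac{60}{16}T^4$, while the right-hand side is $36\,T\int_0^T 3s^2\,ds = 36\,T^4$. The short Python computation below reproduces this arithmetic from the Gaussian moments $E[Z^{2m}] = (2m-1)!!$.

```python
from math import comb


def gaussian_moment(k):
    """E[Z^k] for Z ~ N(0, 1): (k - 1)!! if k is even, 0 if k is odd."""
    if k % 2 == 1:
        return 0
    result = 1
    for j in range(k - 1, 0, -2):
        result *= j
    return result


def lhs_moment(T):
    """E[(int_0^T B dB)^4] = T^4 / 16 * E[(Z^2 - 1)^4], expanded with the binomial formula."""
    e = sum(comb(4, m) * (-1) ** (4 - m) * gaussian_moment(2 * m) for m in range(5))
    return T ** 4 * e / 16


def rhs_bound(T):
    """[p(2p-1)]^p T^(p-1) E int_0^T |B_s|^4 ds for p = 2, using E|B_s|^4 = 3 s^2."""
    p = 2
    return (p * (2 * p - 1)) ** p * T ** (p - 1) * T ** 3


print(lhs_moment(1.0), rhs_bound(1.0))  # 3.75 36.0
```

The ratio $3.75/36$ does not depend on $T$, as expected from the scaling of Brownian motion.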

This very powerful inequality is proved in Exercise 1.8. The following result strengthens the conclusion; a weaker form of its right inequality, for stochastic integrals with respect to $B$, is also proved in Exercise 1.8.

Theorem 1.32 (The Burkholder-Davis-Gundy theorem) For any $p\in[1,+\infty[$ there exist universal constants $k_p > 0$ and $K_p > 0$ (which only depend on $p$) such that for any continuous square-integrable $(\mathcal{F}_t)$-martingale $M$ with $M_0 = 0$ and any $T > 0$,

$$k_p\,E\big(\langle M\rangle_T^p\big)\le E\Big(\sup_{0\le s\le T}|M_s|^{2p}\Big)\le K_p\,E\big(\langle M\rangle_T^p\big). \qquad (1.13)$$

1.5 Properties of Brownian motion.

1.5.1 The Lévy characterizations

Note that, given a $d$-dimensional standard Brownian motion $B$, for every $i,j = 1,\ldots,d$ the processes $(B^i_t, t\ge 0)$ and $(B^i_tB^j_t - \delta_{i,j}t, t\ge 0)$ are $(\mathcal{F}^B_t)$-martingales (with $\delta_{i,j} = 0$ for $i\ne j$ and $\delta_{i,i} = 1$). This is proved in Exercise 1.7. We next prove that these martingale properties characterize the Brownian motion; this will play a crucial role in the study of changes of probability. Let $(u,v)$ denote the scalar product of the vectors $u,v\in\mathbb{R}^d$ and $\|u\|$ the Euclidean norm of $u$.

Theorem 1.33 (Paul Lévy’s characterization) Let $X = (X_t = (X^1_t,\ldots,X^d_t), t\ge 0)$ be a continuous $(\mathcal{F}_t)$-adapted process taking values in $\mathbb{R}^d$.

(i) Suppose that for any $u\in\mathbb{R}^d$ and $0\le s\le t$,

$$E\big(e^{i(u,X_t - X_s)}\,\big|\,\mathcal{F}_s\big) = e^{-\frac12\|u\|^2(t-s)}. \qquad (1.14)$$

Then $(X_t)$ is a standard $(\mathcal{F}_t)$-Brownian motion taking values in $\mathbb{R}^d$.

(ii) Suppose that for every $u\in\mathbb{R}^d$, the processes $X$ and $t\mapsto\exp[(u,X_t) - \|u\|^2t/2]$ are $(\mathcal{F}_t)$-martingales. Then $X$ is a standard $(\mathcal{F}_t)$-Brownian motion taking values in $\mathbb{R}^d$.


(iii) Suppose that the process defined by $M^j_t = X^j_t - X^j_0$ for $j = 1,\ldots,d$ is an $(\mathcal{F}_t)$-local martingale null at 0 (i.e., $M^j_0 = 0$ for every $j$) and that the brackets of $M^i$ and $M^j$ are

$$\langle M^i, M^j\rangle_t = \delta_{i,j}\,t. \qquad (1.15)$$

Then $M$ is a standard $(\mathcal{F}_t)$-Brownian motion taking values in $\mathbb{R}^d$.

Proof. (i) One has to prove that for $0\le s < t$ the random vector $X_t - X_s$ is Gaussian $\mathcal{N}(0,(t-s)\mathrm{Id})$ and independent of $\mathcal{F}_s$.

Let $Z$ be a Gaussian vector $\mathcal{N}(0,(t-s)\mathrm{Id})$. Condition (1.14) means that

$$E\big(e^{i(u,X_t - X_s)}\,\big|\,\mathcal{F}_s\big) = E\big(e^{i(u,Z)}\big) = e^{-\frac{\|u\|^2(t-s)}{2}}. \qquad (1.16)$$

Fix $u\in\mathbb{R}^d$ and set $\Phi(x) = e^{i(u,x)}$; then (1.16) yields $E[1_A\Phi(X_t - X_s)] = P(A)E[\Phi(Z)]$ for any set $A\in\mathcal{F}_s$. Since the characteristic function characterizes the distribution, we deduce that for any bounded Borel function $f:\mathbb{R}^d\to\mathbb{R}$ and any set $A\in\mathcal{F}_s$, we have $E[1_A f(X_t - X_s)] = P(A)E[f(Z)]$. This proves that $X_t - X_s$ is independent of $\mathcal{F}_s$ and also that the distribution of $X_t - X_s$ is equal to that of $Z$.

(ii) Using part (i), it is enough to show that (1.16) holds. Let first $v\in\mathbb{R}^d$; by assumption, for $0\le s < t$,

$$E\big(\exp((X_t - X_s, v))\,\big|\,\mathcal{F}_s\big) = \exp\Big(-(X_s,v) + \frac{\|v\|^2t}{2}\Big)E\Big(\exp\Big((X_t,v) - \frac{\|v\|^2t}{2}\Big)\,\Big|\,\mathcal{F}_s\Big) = \exp\Big(\frac{\|v\|^2(t-s)}{2}\Big).$$

An induction argument (based on the fact that both sides of the previous identity, where $\|v\|^2 = \sum_k v_k^2$, are analytic functions of one of the variables $v_k\in\mathbb{C}$, the other variables being fixed either in $\mathbb{R}$ or in $\mathbb{C}$) proves that this identity can be extended from $v\in\mathbb{R}^d$ to $v\in\mathbb{C}^d$. Using this equality with the vector $v$ with components $v_k = iu_k$ shows that (1.16) holds.

(iii) We only prove this characterization in the particular case of an Itô process $(X_t)$, that is when $X_t = X_0 + \int_0^t H(s)\,dB_s$, where $B$ is an $r$-dimensional standard $(\mathcal{F}_t)$-Brownian motion and $H\in H^2_{loc}$ takes values in $\mathcal{M}(d,r)$. The general case requires a notion of stochastic integral more general than the integral with respect to the Brownian motion defined in the lectures of Stochastic Calculus 1. For $j = 1,\ldots,d$ and $t > 0$, the bracket of $X^j$ at any time $t$ is $\langle X^j, X^j\rangle_t = t = \int_0^t\sum_{k=1}^r|H^j_k(s)|^2\,ds$, which implies that $\sum_{k=1}^r|H^j_k(s)|^2 = 1$ a.s. for almost every $s$. Furthermore, if $j\ne l$, then a.s. for any $t > 0$, the bracket $\langle X^j, X^l\rangle_t = \int_0^t\sum_{k=1}^r H^j_k(s)H^l_k(s)\,ds = 0$, which implies that $\sum_{k=1}^r H^j_k(s)H^l_k(s) = 0$ a.s. for almost every $s$. We deduce that $H\in H^2_2$ and that $(X_t, 0\le t\le T)$ is a martingale. The above equalities prove that the vectors $(H^j(s), 1\le j\le d)$ form an orthonormal family (and hence a linearly independent family) of $\mathbb{R}^r$, which yields $d\le r$. For any set $A\in\mathcal{F}_s$ and any vector $\lambda\in\mathbb{R}^d$, let $f(t) = E\big(1_A\exp[i(\lambda, M_t - M_s)]\big)$. Using part (i), it is enough to prove that $f(t) = P(A)\exp\big(-\frac{\|\lambda\|^2}{2}(t-s)\big)$. The Itô formula, used separately for the real and the imaginary parts of the function $x = (x^1,\ldots,x^d)\mapsto\exp\big(i\sum_{j=1}^d\lambda_jx^j\big)$, implies for $s < t$,

$$e^{i(\lambda,M_t)} = e^{i(\lambda,M_s)} + i\sum_{j=1}^d\sum_{k=1}^r\lambda_j\int_s^t e^{i(\lambda,M_u)}H^j_k(u)\,dB^k_u - \frac12\sum_{j,l=1}^d\sum_{k=1}^r\lambda_j\lambda_l\int_s^t e^{i(\lambda,M_u)}H^j_k(u)H^l_k(u)\,du,$$

which, using the relations $\sum_{k=1}^r H^j_k H^l_k = \delta_{j,l}$ and multiplying by $e^{-i(\lambda,M_s)}$, yields

$$e^{i(\lambda,M_t - M_s)} = 1 + i\sum_{j=1}^d\sum_{k=1}^r\lambda_j\int_s^t e^{i(\lambda,M_u - M_s)}H^j_k(u)\,dB^k_u - \sum_{j=1}^d\frac{|\lambda_j|^2}{2}\int_s^t e^{i(\lambda,M_u - M_s)}\,du.$$

For any $j = 1,\ldots,d$ and $k = 1,\ldots,r$, the stochastic integrals $(N^j_k(t), t\ge s)$ defined by $N^j_k(t) = \int_s^t e^{i(\lambda,M_u - M_s)}H^j_k(u)\,dB^k_u$ for $t\ge s$ are martingales such that $E(N^j_k(t)\,|\,\mathcal{F}_s) = 0$. The Fubini theorem implies that

$$f(t) = P(A) - \frac{\|\lambda\|^2}{2}E\Big(1_A\int_s^t e^{i(\lambda,M_u - M_s)}\,du\Big) = P(A) - \frac{\|\lambda\|^2}{2}\int_s^t f(u)\,du.$$

Since $f'(t) = -\frac{\|\lambda\|^2}{2}f(t)$ for $t\ge s$ and since $f(s) = P(A)$, we have proved that for any $t\ge s$, $f(t) = P(A)\exp\big(-\frac{\|\lambda\|^2}{2}(t-s)\big)$.

□
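Part (iii) can be illustrated with the classical example $M_t = \int_0^t\mathrm{sgn}(B_s)\,dB_s$, where $B$ is a real Brownian motion and, as a convention assumed here, $\mathrm{sgn}(0) = 1$. Since $\mathrm{sgn}^2 = 1$, $\langle M, M\rangle_t = t$, so $M$ is a Brownian motion although it is built from $B$ in a nonlinear way. The Monte Carlo sketch below estimates the mean and second moment of $M_1$ and $E\cos(M_1)$, which should be $e^{-1/2}$, with left-point sums. For this integrand each discrete increment $\mathrm{sgn}(B_{t_i})(B_{t_{i+1}} - B_{t_i})$ is itself $\mathcal{N}(0, t_{i+1}-t_i)$ and independent of the past, so the discretization introduces no bias.

```python
import math
import random


def sgn(x):
    """Sign function with the convention sgn(0) = 1 (an assumption)."""
    return 1.0 if x >= 0 else -1.0


rng = random.Random(6)
n_paths, n_steps, t = 10_000, 100, 1.0
dt = t / n_steps
samples = []
for _ in range(n_paths):
    b, m = 0.0, 0.0
    for _ in range(n_steps):
        db = rng.gauss(0.0, math.sqrt(dt))
        m += sgn(b) * db      # left-point sum for int sgn(B_s) dB_s
        b += db
    samples.append(m)

mean = sum(samples) / n_paths
second_moment = sum(v * v for v in samples) / n_paths
char_real = sum(math.cos(v) for v in samples) / n_paths  # estimate of E cos(M_1)
```

The estimated characteristic function matches (1.14) with $s = 0$, $t = 1$ and $u = 1$, up to Monte Carlo error.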

1.5.2 The Markov property

A fundamental property of the Brownian motion is the Markov property, which says that what happens after time $t$ does not depend on the whole past, that is on the whole σ-algebra $\mathcal{F}_t$ of the « past », but only on the state $B_t$ at time $t$. We first describe how one moves from time $s$ to time $t\ge s$.

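Definition 1.34 A transition probability on $\mathbb{R}^d$ is a map $\Pi:\mathbb{R}^d\times\mathcal{R}^d\to[0,1]$, where $\mathcal{R}^d$ denotes the Borel σ-algebra of $\mathbb{R}^d$, such that

(i) for any $x\in\mathbb{R}^d$, the map $A\in\mathcal{R}^d\mapsto\Pi(x,A)$ is a probability;

(ii) for any $A\in\mathcal{R}^d$, the map $x\in\mathbb{R}^d\mapsto\Pi(x,A)$ is measurable from $(\mathbb{R}^d,\mathcal{R}^d)$ to $([0,1],\mathcal{B}([0,1]))$.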

A transition function on $\mathbb{R}^d$ is a family $(P_{s,t}, 0\le s < t)$ of transition probabilities such that for $s < t < v$ the Chapman-Kolmogorov equation is satisfied:

$$\int P_{s,t}(x,dy)P_{t,v}(y,A) = P_{s,v}(x,A),\quad\forall A\in\mathcal{R}^d,\ \forall x\in\mathbb{R}^d. \qquad (1.17)$$

For any positive (or bounded) Borel function $f:\mathbb{R}^d\to\mathbb{R}$ we set

$$P_{s,t}f(x) = \int_{\mathbb{R}^d}f(y)\,P_{s,t}(x,dy).$$

If the transition function $P_{s,t}$ only depends on the difference $t-s$, it is said to be homogeneous; then, if one sets $P_t = P_{0,t}$, equation (1.17) can be written as follows:

$$P_{s+t}(x,A) = \int P_s(x,dy)P_t(y,A).$$

For any positive (or bounded) Borel function $f:\mathbb{R}^d\to\mathbb{R}$, one has $P_{s+t}f(x) = P_tP_sf(x)$, and $(P_t)$ is called a semi-group.

Equation (1.17) is natural. Indeed, suppose that $X$ is a process and $(x,A)\in\mathbb{R}^d\times\mathcal{R}^d\mapsto P_{s,t}(x,A)$ is a transition probability such that for $A\in\mathcal{R}^d$ and $s < t$,

$$P(X_t\in A\,|\,\sigma(X_u, u\le s)) = P(X_t\in A\,|\,X_s)\ \text{a.s.}\quad\text{and}\quad P(X_t\in A\,|\,X_s = x) = P_{s,t}(x,A) = \int 1_A(y)\,P_{s,t}(x,dy).$$

We deduce that $P_{s,t}(x,dy)$ is a transition probability (since it is the conditional distribution of $X_t$ given $X_s = x$) and that $E(f(X_t)\,|\,\sigma(X_u, u\le s)) = \int_{\mathbb{R}^d}f(y)P_{s,t}(X_s,dy)$ for any positive (or bounded) Borel function $f:\mathbb{R}^d\to\mathbb{R}$. Given $s < t < v$, $A\in\mathcal{R}^d$ and $f(y) = P_{t,v}(y,A)$, we deduce that

$$\begin{aligned} P_{s,v}(X_s,A) &= P(X_v\in A\,|\,\sigma(X_u, u\le s))\\ &= E\big(P(X_v\in A\,|\,\sigma(X_u, u\le t))\,\big|\,\sigma(X_u, u\le s)\big)\\ &= E\big(f(X_t)\,|\,\sigma(X_u, u\le s)\big) = \int P_{s,t}(X_s,dy)P_{t,v}(y,A).\end{aligned}$$

These notions give a precise formulation of the « weak » Markov property.

Definition 1.35 (1) The $\mathbb{R}^d$-valued, $(\mathcal{F}_t)$-adapted process $(X_t, t\ge 0)$ is a Markov process for the filtration $(\mathcal{F}_t)$ if for any bounded Borel function $f:\mathbb{R}^d\to\mathbb{R}$,

$$E\big(f(X_t)\,|\,\mathcal{F}_s\big) = E\big(f(X_t)\,|\,X_s\big)\quad\text{for all } s\le t. \qquad (1.18)$$

(2) The $\mathbb{R}^d$-valued, $(\mathcal{F}_t)$-adapted process $(X_t, t\ge 0)$ is a Markov process for the filtration $(\mathcal{F}_t)$ with transition function $P_{s,t}$ if for any bounded Borel function $f:\mathbb{R}^d\to\mathbb{R}$,

$$E\big(f(X_t)\,|\,\mathcal{F}_s\big) = P_{s,t}f(X_s) := \int_{\mathbb{R}^d}P_{s,t}(X_s,dy)f(y). \qquad (1.19)$$

We say that the Markov process is homogeneous if its transition function is homogeneous.

We prove that the Brownian motion is a homogeneous Markov process with transition semi-group $P_h$ defined by

$$P_h(x,A) = P(B_{s+h}\in A\,|\,B_s = x) = \frac{1}{(2\pi h)^{d/2}}\int_A\exp\Big(-\frac{\|y-x\|^2}{2h}\Big)dy,$$

for $s\ge 0$, $h > 0$, $x\in\mathbb{R}^d$ and $A\in\mathcal{R}^d$, that is, $P_h(x,dy)$ is the distribution of a Gaussian vector $\mathcal{N}(x, h\,\mathrm{Id})$.
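The Chapman-Kolmogorov equation (1.17) for this semi-group can be checked numerically in dimension $d = 1$: $\int p_s(x,y)p_t(y,z)\,dy = p_{s+t}(x,z)$, where $p_h(x,\cdot)$ denotes the $\mathcal{N}(x,h)$ density. The following sketch uses the trapezoidal rule on a truncated interval; the truncation $[-20, 20]$ and the grid size are assumptions that are ample for the values used.

```python
import math


def heat_kernel(h, x, y):
    """Density at y of N(x, h), i.e. P_h(x, dy) / dy in dimension 1."""
    return math.exp(-(y - x) ** 2 / (2 * h)) / math.sqrt(2 * math.pi * h)


def chapman_kolmogorov_lhs(s, t, x, z, half_width=20.0, n=20_000):
    """Trapezoidal approximation of int p_s(x, y) p_t(y, z) dy over [-half_width, half_width]."""
    dy = 2 * half_width / n
    total = 0.0
    for k in range(n + 1):
        y = -half_width + k * dy
        weight = 0.5 if k in (0, n) else 1.0
        total += weight * heat_kernel(s, x, y) * heat_kernel(t, y, z)
    return total * dy


s, t, x, z = 0.3, 0.7, 0.5, -0.2
lhs = chapman_kolmogorov_lhs(s, t, x, z)
rhs = heat_kernel(s + t, x, z)
```

The identity reflects the fact that the sum of independent $\mathcal{N}(0,s)$ and $\mathcal{N}(0,t)$ variables is $\mathcal{N}(0,s+t)$.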

Theorem 1.36 (Weak Markov property) Let $(B_t)$ be a standard $d$-dimensional Brownian motion and $f:\mathbb{R}^d\to\mathbb{R}$ be a bounded Borel function. Then for $s < t$,

$$E[f(B_t)\,|\,\mathcal{F}^B_s] = E[f(B_t)\,|\,B_s] = \frac{1}{(2\pi(t-s))^{d/2}}\int_{\mathbb{R}^d}f(y)\exp\Big(-\frac{\|y - B_s\|^2}{2(t-s)}\Big)dy = \int f(y)P_{t-s}(B_s,dy).$$

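Proof. The proof uses the following lemma.

Lemma 1.37 Let $\mathcal{F}$ be a σ-algebra, $\mathcal{G}$ a sub-σ-algebra of $\mathcal{F}$, $X:(\Omega,\mathcal{G})\to(E_1,\mathcal{E}_1)$ a $\mathcal{G}$-measurable map and $Y:(\Omega,\mathcal{F})\to(E_2,\mathcal{E}_2)$ an $\mathcal{F}$-measurable map independent of $\mathcal{G}$. Then for any bounded measurable function $\Phi:(E_1\times E_2,\mathcal{E}_1\otimes\mathcal{E}_2)\to(\mathbb{R},\mathcal{R})$ one has $E(\Phi(X,Y)\,|\,\mathcal{G}) = \varphi(X)$, where $\varphi:(E_1,\mathcal{E}_1)\to(\mathbb{R},\mathcal{R})$ is defined by $\varphi(x) = E[\Phi(x,Y)]$.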


Proof of the Lemma. For any measurable map $V:(\Omega,\mathcal{F})\to(E,\mathcal{E})$, let $P_V$ denote the image measure of $P$ by $V$. The definition of $P_Y$ and Fubini’s theorem imply that $\varphi$ satisfies $\varphi(x) = \int_{E_2}\Phi(x,y)\,dP_Y(y)$ and is bounded and measurable from $(E_1,\mathcal{E}_1)$ to $(\mathbb{R},\mathcal{R})$. Furthermore, if $Z:(\Omega,\mathcal{G})\to(\mathbb{R},\mathcal{R})$ is bounded and $\mathcal{G}$-measurable, since the random variables $Y$ and $(X,Z)$ are independent, the Fubini theorem yields

$$\begin{aligned} E\big(\Phi(X,Y)Z\big) &= \int\!\!\int\Phi(x,y)\,z\,dP_{(X,Z)}(x,z)\,dP_Y(y)\\ &= \int\Big(\int\Phi(x,y)\,dP_Y(y)\Big)z\,dP_{(X,Z)}(x,z)\\ &= \int\varphi(x)\,z\,dP_{(X,Z)}(x,z) = E\big(\varphi(X)Z\big),\end{aligned}$$

which concludes the proof of the lemma.

□

Let $s < t$; apply the previous lemma with $E_1 = E_2 = \mathbb{R}^d$, $\mathcal{G} = \mathcal{F}_s$, $\mathcal{F} = \mathcal{F}_t$, $X = B_s$ and $Y = B_t - B_s$. We deduce that for any bounded Borel function $f:\mathbb{R}^d\to\mathbb{R}$, we have $E(f(B_t)\,|\,\mathcal{F}_s) = \varphi(B_s)$, where $\varphi(x) = E[f(x + B_t - B_s)]$. A similar computation made with $\mathcal{G} = \sigma(B_s)$ and $\mathcal{F} = \mathcal{F}_t$, and for the same random variables $X = B_s$ and $Y = B_t - B_s$, implies that $E(f(B_t)\,|\,B_s) = \varphi(B_s)$. The distribution of $B_t - B_s$ is Gaussian $\mathcal{N}(0,(t-s)\mathrm{Id})$, which concludes the proof.

□
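The right-hand side of Theorem 1.36 is $P_{t-s}f(B_s)$. For the hypothetical test function $f = \cos$ in dimension 1, the Gaussian characteristic function gives the closed form $P_h\cos(x) = E[\cos(x + \sqrt h Z)] = e^{-h/2}\cos x$; the sketch below compares it with a trapezoidal quadrature of $\int f(y)P_h(x,dy)$ (the truncation and the grid size are assumptions).

```python
import math


def transition_apply(f, h, x, half_width=12.0, n=24_000):
    """Trapezoidal approximation of P_h f(x) = int f(y) p_h(x, y) dy over [x - L, x + L]."""
    dy = 2 * half_width / n
    total = 0.0
    for k in range(n + 1):
        y = x - half_width + k * dy
        weight = 0.5 if k in (0, n) else 1.0
        density = math.exp(-(y - x) ** 2 / (2 * h)) / math.sqrt(2 * math.pi * h)
        total += weight * f(y) * density
    return total * dy


checks = []
for h, x in [(0.1, 0.3), (0.5, -1.0), (2.0, 2.5)]:
    approx = transition_apply(math.cos, h, x)
    exact = math.exp(-h / 2) * math.cos(x)   # closed form of P_h cos(x)
    checks.append((h, x, approx, exact))
```

In the notation of the proof, `transition_apply(f, t - s, x)` approximates $\varphi(x) = E[f(x + B_t - B_s)]$.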

Corollary 1.38 Let $\tau$ be an a.s. finite $(\mathcal{F}_t)$-stopping time and $B$ be an $(\mathcal{F}_t)$ standard Brownian motion of dimension $d$. Then the process $(M_t = B_{\tau+t} - B_\tau, t\ge 0)$ is an $(\mathcal{F}_{\tau+t}, t\ge 0)$ standard Brownian motion independent of $\mathcal{F}_\tau$.

Proof. Suppose first that $\tau\le C$ a.s. and set $\mathcal{G}_t = \mathcal{F}_{\tau+t}$ for all $t\ge 0$. Then $(\mathcal{G}_t)$ is a filtration, and the optional sampling theorem 1.7 shows that if $(X_t)$ is an $(\mathcal{F}_t)$-martingale and $Y_t = X_{\tau+t}$, then the random variable $Y_t$ is integrable and for $s < t$, $E(Y_t\,|\,\mathcal{G}_s) = E(X_{\tau+t}\,|\,\mathcal{F}_{\tau+s}) = X_{\tau+s} = Y_s$, that is, $(Y_t)$ is a $(\mathcal{G}_t)$-martingale.

We have to prove that for $0\le s < t$, the random vector $M_t - M_s$ is Gaussian $\mathcal{N}(0,(t-s)\mathrm{Id})$ and independent of $\mathcal{G}_s$. An argument similar to that used in the proof of the Lévy characterization of the Brownian motion shows that it suffices to check that for any vector $u\in\mathbb{R}^d$, equation (1.16) holds, with $X$ replaced by $M$ and $\mathcal{F}_s$ by $\mathcal{G}_s$.

Let $u\in\mathbb{R}^d$ and set $f(x) = e^{i(u,x)}$ for $x\in\mathbb{R}^d$. Then $|f| = 1$; decompose $f$ into its real and imaginary parts, and apply the Markov property and the definition of the Brownian motion. We obtain

$$E\big(\exp(i(u,B_t))\,|\,\mathcal{F}_s\big) = E\big(\exp(i(u,B_t))\,|\,B_s\big) = \exp(i(u,B_s))\exp\Big(-\frac{(t-s)\|u\|^2}{2}\Big).$$

This yields that the process $X_t = \exp\big(i(u,B_t) + t\|u\|^2/2\big)$ is an $(\mathcal{F}_t)$-martingale, and the above remark shows that the process $(Y_t = X_{\tau+t}, t\ge 0)$ is a $(\mathcal{G}_t)$-martingale. Since $Y_0$ is $\mathcal{G}_0$-measurable and $|Y_0| = e^{\tau\|u\|^2/2}\ge 1$, we deduce that for all $s\le t$

$$E\Big(\frac{Y_t}{Y_0}\,\Big|\,\mathcal{G}_s\Big) = \frac{E(Y_t\,|\,\mathcal{G}_s)}{Y_0} = \frac{Y_s}{Y_0},$$

which implies that $\big(\frac{Y_t}{Y_0} = \exp(i(u,M_t) + t\|u\|^2/2), t\ge 0\big)$ is a $(\mathcal{G}_t)$-martingale. Therefore, given $s\le t$,

$$E\big(e^{i(u,M_t - M_s)}\,\big|\,\mathcal{G}_s\big) = E\big(e^{i(u,M_t) + \frac{t\|u\|^2}{2}}\,\big|\,\mathcal{G}_s\big)\,e^{-i(u,M_s) - \frac{t\|u\|^2}{2}} = e^{-\frac{(t-s)\|u\|^2}{2}},$$

which concludes the proof of (1.16) when the stopping time $\tau$ is a.s. bounded.

Let $\tau$ be an a.s. finite stopping time; to conclude the proof, it suffices to write (1.16) for the sequence of bounded stopping times $\tau\wedge n$ and to let $n$ go to $+\infty$.

□

This yields a result similar to the weak Markov property stated in Theorem 1.36, replacing fixed times by stopping times.

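Theorem 1.39 (Strong Markov property) Let $B$ be an $\mathbb{R}^d$-valued standard $(\mathcal{F}_t)$-Brownian motion and $\tau$ be an a.s. finite $(\mathcal{F}^B_t)$-stopping time; then for all $t > 0$ and any bounded Borel function $f:\mathbb{R}^d\to\mathbb{R}$,

$$E[f(B_{\tau+t})\,|\,\mathcal{F}_\tau] = E[f(B_{\tau+t})\,|\,B_\tau] = \frac{1}{(2\pi t)^{d/2}}\int_{\mathbb{R}^d}f(y)\exp\Big(-\frac{\|y - B_\tau\|^2}{2t}\Big)dy. \qquad (1.20)$$

Proof. Apply Lemma 1.37 with $X = B_\tau$, $Y = B_{\tau+t} - B_\tau$, $\mathcal{G} = \mathcal{F}_\tau$ (or $\mathcal{G} = \sigma(B_\tau)$), $\mathcal{F} = \mathcal{F}_{t+\tau}$, and Corollary 1.38. Since $(B_{\tau+t} - B_\tau, t\ge 0)$ is a Brownian motion independent of $\mathcal{F}_\tau$, we deduce that the strong Markov property (1.20) holds true.

□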

1.6 Exercises

Exercise 1.1 Show that a stopped local martingale M is a local martingale.

Exercise 1.2 Let $(M_t)$ be an integrable, càdlàg $(\mathcal{F}_t)$-adapted process. Show that it is an $(\mathcal{F}_t)$-martingale if and only if for any bounded stopping time $T$ one has $M_T\in L^1$ and $E(M_T) = E(M_0)$.

Exercise 1.3 Let $(X_t, 0\le t\le T)$ be an $(\mathcal{F}_t)$-supermartingale, that is, for $0\le s\le t\le T$, $X_s\ge E(X_t\,|\,\mathcal{F}_s)$, and suppose that $E(X_T) = E(X_0)$. Show that $(X_t)$ is a martingale.

Exercise 1.4 Let $(M_t)$ be an $(\mathcal{F}_t)$-local martingale. Show that:

(i) if $M_t$ is non-negative, then it is a supermartingale;

(ii) if there exists $Y\in L^1$ such that for all $t$, $|M_t|\le Y$ a.s., then $(M_t)$ is a martingale.

Exercise 1.5 Let $B$ be a real Brownian motion and $(\mathcal{F}^B_t)$ denote its natural filtration. Characterize the values of $\alpha\in\mathbb{R}$ such that the process defined by $I_t = \int_0^t|B_s|^\alpha\,dB_s$ is a square-integrable $(\mathcal{F}^B_t)$-martingale.

Exercise 1.6 Let $B$ be an $(\mathcal{F}_t)$-Brownian motion, and let $X_t = x + \int_0^t\sigma_s\,dB_s + \int_0^t b_s\,ds$ and $\bar X_t = \bar x + \int_0^t\bar\sigma_s\,dB_s + \int_0^t\bar b_s\,ds$ be Itô processes. Write $X_t\bar X_t$ as a semi-martingale.

Exercise 1.7 Let $(B^1_t,\ldots,B^r_t)$ be a standard $r$-dimensional Brownian motion.

1. Let $X$ and $Y$ be integrable real-valued random variables and $\mathcal{G}$ a sub-σ-algebra of $\mathcal{F}$ such that the σ-algebras $\sigma(X)$, $\sigma(Y)$ and $\mathcal{G}$ are independent. Show that $E(XY\,|\,\mathcal{G}) = E(X)E(Y)$ a.s.

2. Prove that for $s < t$, when $i\ne j$, one has $E(B^i_tB^j_t\,|\,\mathcal{F}_s) = B^i_sB^j_s$.

3. Prove that $\langle B^i, B^j\rangle_t = \delta_{i,j}\,t$.

4. Prove that for every $u = (u_1,\ldots,u_r)\in\mathbb{R}^r$,

$$E\big(e^{(u,B_t) - \frac12\|u\|^2t}\,\big|\,\mathcal{F}_s\big) = e^{(u,B_s) - \frac12\|u\|^2s}.$$


Exercise 1.8 Let $p\in[1,+\infty[$, let $(X_t)$ be an $(\mathcal{F}_t)$-adapted process and $T > 0$ be such that $E\int_0^T|X_s|^{2p}\,ds < +\infty$.

1. Apply Itô’s formula to the process $M_t = \int_0^t X_s\,dB_s$ to deduce that there exists an increasing sequence $(T_n)$ of stopping times which converges to $+\infty$ and such that

$$E\big(|M_{T\wedge T_n}|^{2p}\big) = p(2p-1)\,E\int_0^{T\wedge T_n}|M_t|^{2(p-1)}X_t^2\,dt.$$

2. Is the function $t\mapsto E(|M_{t\wedge T_n}|^{2p})$ monotone? Deduce that

$$E\big(|M_{T\wedge T_n}|^{2p}\big)\le[p(2p-1)]^p\,T^{p-1}\,E\int_0^{T\wedge T_n}|X_s|^{2p}\,ds,$$

and then that

$$E\big(|M_T|^{2p}\big)\le[p(2p-1)]^p\,T^{p-1}\,E\int_0^T|X_s|^{2p}\,ds.$$

3. Apply Doob’s inequality and show that there exists a constant $K_p$, which will be written explicitly, such that

$$E\Big(\sup_{0\le t\le T}|M_t|^{2p}\Big)\le K_p\,E\int_0^T|X_s|^{2p}\,ds.$$

Exercise 1.9 Let $(X_t)$ be a continuous, nonnegative $(\mathcal{F}_t)$-adapted process such that $X_0 = 0$, and let $(A_t)$ be an increasing, continuous $(\mathcal{F}_t)$-adapted process such that for any bounded stopping time $T$, $E(X_T)\le E(A_T)$. Given $t\ge 0$, set $V_t = \sup_{0\le s\le t}X_s$.

1. Show that for any stopping time $T$ and any $\epsilon > 0$, $P(V_T\ge\epsilon)\le\frac1\epsilon E(A_T)$. (One can use $\tau_\epsilon = \inf\{t\ge 0 : X_t\ge\epsilon\}$ and $T_n = T\wedge n\wedge\tau_\epsilon$.)

2. Show that for any stopping time $T$ and any $\delta > 0$, $\epsilon > 0$, if $S = \inf\{t\ge 0 : A_t\ge\delta\}$, then

$$P(V_T\ge\epsilon, A_T\le\delta)\le P(V_{T\wedge S}\ge\epsilon)\le\frac1\epsilon E(\delta\wedge A_T).$$

3. Let $F:[0,+\infty[\to[0,+\infty[$ be a function differentiable on $]0,+\infty[$, strictly increasing, such that $F(0) = 0$ and for any $x > 0$, $u\mapsto\frac{F'(u)}{u}1_{[x,+\infty[}(u)\in L^1(\lambda)$, where $\lambda$ denotes the Lebesgue measure. Let $G:]0,+\infty[\to]0,+\infty[$ denote the function defined by

$$G(x) = 2F(x) + x\int_x^{+\infty}\frac{F'(u)}{u}\,du.$$

(a) Prove that $G'(x) = F'(x) + \int_x^{+\infty}\frac{F'(u)}{u}\,du$.

(b) Prove that

$$\begin{aligned} E(F(V_T)) &= \int_0^{+\infty}P(V_T\ge u)F'(u)\,du\\ &\le\int_0^{+\infty}2P(A_T\ge u)F'(u)\,du + \int_0^{+\infty}\frac1u E\big(A_T1_{\{A_T\le u\}}\big)F'(u)\,du\\ &\le E(G(A_T)).\end{aligned}$$

Deduce that for every $p\in]0,1[$ and every stopping time $T$, $E(V_T^p)\le\frac{2-p}{1-p}E(A_T^p)$.


2 Stochastic differential equations

Stochastic differential equations, which generalize ordinary differential equations, are fundamental in finance: their solutions are used to model the prices of financial assets.

Let us recall that an ordinary differential equation (ODE) on $[0,+\infty[\times\mathbb{R}$ is written as follows:

$$y'_t = f(t,y_t)\quad\text{and}\quad y_0 = y, \qquad (2.1)$$

where $y:[0,+\infty[\to\mathbb{R}$ is the unknown function and $f:[0,+\infty[\times\mathbb{R}\to\mathbb{R}$ is a given function.

The study of ODEs is extremely important for applications of mathematics, for example in physics. In general, it is impossible to give an explicit analytic expression of the solution to an ODE. However, one wants to know whether such an equation has a unique solution (and how to approximate it numerically). Uniqueness is important to prove that the approximation scheme converges to the (unique) solution. A basic existence and uniqueness criterion is the following.

Theorem 2.1 (The Cauchy-Lipschitz theorem) Let $K > 0$ be a constant such that for all $t\in[0,+\infty[$ and $x,y\in\mathbb{R}$:

$$|f(t,x) - f(t,y)|\le K|x-y|\quad\text{(global Lipschitz condition)},$$

$$|f(t,x)|\le K(1 + |x|)\quad\text{(linear growth condition)}.$$

Then the ODE (2.1) has a unique solution defined on $[0,+\infty[$.

The global Lipschitz property is natural if the solution is required to be defined on the entire half-line $[0,+\infty[$. Indeed, let $y_0 = 1$ and $f(t,y) = y^2$, which is Lipschitz on bounded sets but not globally Lipschitz; it is easy to see that the unique solution to the corresponding ODE (2.1) is the function defined by $y_t = 1/(1-t)$ for $0 \le t < 1$, and that this solution "explodes" at $t = 1$.
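For completeness, here is the computation behind this example (separation of variables, valid as long as $y_t > 0$), together with the reason why $f(t,y) = y^2$ admits no global Lipschitz constant: the factor $|x + y|$ below is unbounded on $\mathbb{R}^2$.

```latex
\begin{aligned}
&\frac{y'_t}{y_t^2} = 1
  \;\Longrightarrow\; \frac{1}{y_0} - \frac{1}{y_t} = t
  \;\Longrightarrow\; y_t = \frac{1}{1-t}, \quad 0 \le t < 1,
  \qquad \lim_{t \uparrow 1} y_t = +\infty, \\
&|f(t,x) - f(t,y)| = |x^2 - y^2| = |x + y|\,|x - y| .
\end{aligned}
```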

A stochastic differential equation (SDE) is a perturbation of (2.1) by a stochastic term which models a "noise" around the deterministic phenomenon described by (2.1). The simplest perturbation consists of adding a Brownian motion. This models the fact that the perturbations on disjoint time intervals are independent, and that on each interval the perturbation is the sum of a very large number of "small" shocks, that is, of independent random variables with the same distribution. By the central limit theorem, when properly renormalized, the distribution of this sum is close to that of a Gaussian random variable. The number of small shocks is supposed to be proportional to the length of the interval, which means that the variance is a multiple of the length of this time interval. Thus, we consider the SDE $dY_t = f(t, Y_t)\,dt + \sigma\,dB_t$, $Y_0 = y$. This formulation is only formal (Brownian motion is a.s. nowhere differentiable); the only rigorous formulation is the integrated form
$$Y_t = y + \int_0^t f(s, Y_s)\, ds + \sigma B_t$$
for all $t \ge 0$. It is usual to denote solutions of SDEs by capital letters and to keep lower-case letters for solutions of ODEs.
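The heuristic above (many small i.i.d. shocks, central limit theorem, variance proportional to time) can be illustrated numerically. In this Python sketch the shocks are assumed, hypothetically, to be equal to $\pm 1/\sqrt{m}$ with probability $1/2$, with $m$ shocks per unit of time; the sum over $[0,t]$ then has mean $0$ and variance $\lfloor mt \rfloor / m$, which equals $t$ when $mt$ is an integer, and its distribution is close to $N(0,t)$.

```python
import random
import statistics


def shock_sum(t: float, m: int, rng: random.Random) -> float:
    """Sum of floor(m * t) i.i.d. shocks equal to +1/sqrt(m) or -1/sqrt(m) with probability 1/2."""
    scale = m ** -0.5
    return sum(rng.choice((-scale, scale)) for _ in range(int(m * t)))


rng = random.Random(0)
for t in (0.5, 1.0, 2.0):
    samples = [shock_sum(t, 100, rng) for _ in range(3000)]
    # The empirical variance should be close to t, up to Monte Carlo error.
    print(f"t={t}: mean={statistics.fmean(samples):+.3f}, "
          f"variance={statistics.pvariance(samples):.3f}")
```

The choice $m = 100$ and the number of samples are arbitrary; increasing them brings the empirical variance closer to $t$.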

The trajectory of the solution $y$ to the ODE (2.1) is differentiable and deterministic. With the added Brownian term, for small $\sigma$ the trajectory of the solution to the above SDE, which is a.s. non-differentiable, stays close to that of (2.1) and oscillates around it. However, for large $\sigma$ the trajectories of the SDE no longer resemble that of (2.1).


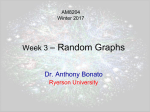

The next figure shows the trajectories on [0, 2] of the solution of the ODE

yt′ = −yt , et y0 = 5

(that is yt = 5e−t ) and of the SDEs

Yt = 5 −

Z

t

Ys ds + σBt

0

with σ = 0.4 and σ = 2 obtained by simulation.

6

5

4

3

2

1

0

−1

−2

0.0

0.2

0.4

0.6

0.8

1.0

1.2

1.4

1.6

1.8

2.0
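The notes do not say how these trajectories were simulated. A common choice, assumed here, is the Euler-Maruyama scheme, which replaces $dt$ by a step $h$ and $dB_t$ by an independent $N(0,h)$ increment. The Python sketch below (function name, seed and number of steps are hypothetical) computes one path on $[0,2]$ for each of $\sigma = 0$, $0.4$ and $2$; setting $\sigma = 0$ recovers the explicit Euler scheme for the ODE $y'_t = -y_t$.

```python
import math
import random
from typing import Callable, List


def euler_maruyama_1d(y0: float, drift: Callable[[float, float], float], sigma: float,
                      t_end: float, n: int, rng: random.Random) -> List[float]:
    """Euler-Maruyama scheme for Y_t = y0 + int_0^t drift(s, Y_s) ds + sigma * B_t.

    Y_{k+1} = Y_k + drift(t_k, Y_k) * h + sigma * (B_{t_{k+1}} - B_{t_k}),
    where the Brownian increments are independent N(0, h) variables.
    """
    h = t_end / n
    y = y0
    path = [y]
    for k in range(n):
        dB = rng.gauss(0.0, math.sqrt(h))  # Brownian increment over [t_k, t_{k+1}]
        y = y + drift(k * h, y) * h + sigma * dB
        path.append(y)
    return path


def drift(t: float, y: float) -> float:
    """Drift of the example: f(t, y) = -y."""
    return -y


rng = random.Random(2009)
for sigma in (0.0, 0.4, 2.0):
    path = euler_maruyama_1d(5.0, drift, sigma, 2.0, 1000, rng)
    print(f"sigma={sigma}: Y_2 = {path[-1]:.3f} "
          f"(ODE value 5e^-2 = {5.0 * math.exp(-2.0):.3f})")
```

Plotting the three lists of values against the grid $t_k = 2k/1000$ gives pictures of the same kind as the figure.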

2.1 Strong solution - Diffusion.

In what follows, we study stochastic differential equations in the following precise sense.

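Fix a right-continuous filtration $(\mathcal{F}_t)$ of complete σ-algebras (where $\mathcal{F}_0$ need not be reduced to the σ-algebra of null sets), an $(\mathcal{F}_t)$-Brownian motion $B$ taking values in $\mathbb{R}^r$, an $\mathbb{R}^d$-valued random variable $\xi$ independent of $\mathcal{F}^B = \sigma(B_s,\ s \ge 0)$, and Borel functions
$$\sigma : [0,+\infty[ \times \mathbb{R}^d \to M(d,r) \simeq \mathbb{R}^{dr} \quad \text{and} \quad b : [0,+\infty[ \times \mathbb{R}^d \to \mathbb{R}^d.$$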

A solution of the stochastic differential equation (SDE) with initial condition $\xi$, diffusion coefficient $\sigma$ and drift coefficient $b$ is a process $X$ such that for any $t \ge 0$,
$$X_t = \xi + \int_0^t \sigma(s, X_s)\, dB_s + \int_0^t b(s, X_s)\, ds. \tag{2.2}$$

Equation (2.2) will also be written as follows:
$$dX_t = \sigma(t, X_t)\, dB_t + b(t, X_t)\, dt, \qquad X_0 = \xi.$$
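The integrated form (2.2) suggests the simplest time discretization. The Python sketch below is a dependency-free illustration, assuming the Euler-Maruyama scheme (the notes do not prescribe a scheme here), for a $d$-dimensional state driven by an $r$-dimensional Brownian motion. Matrices are plain nested lists, with `s[i][k]` standing for the $(i,k)$ entry of $\sigma$; the function names and the coefficients in the example are hypothetical.

```python
import math
import random
from typing import Callable, List

Vector = List[float]
Matrix = List[List[float]]  # d rows and r columns: entry [i][k] is the (i, k) entry of sigma


def euler_maruyama(xi: Vector,
                   sigma: Callable[[float, Vector], Matrix],
                   b: Callable[[float, Vector], Vector],
                   r: int, t_end: float, n: int,
                   rng: random.Random) -> List[Vector]:
    """Euler-Maruyama sketch for dX_t = sigma(t, X_t) dB_t + b(t, X_t) dt, X_0 = xi.

    Component-wise, with step h = t_end / n and t_j = j * h:
        X^i_{j+1} = X^i_j + sum_k sigma_{ik}(t_j, X_j) * dB^k_j + b^i(t_j, X_j) * h,
    where dB^1_j, ..., dB^r_j are independent N(0, h) Brownian increments.
    """
    d = len(xi)
    h = t_end / n
    x = list(xi)
    path = [list(x)]
    for j in range(n):
        t = j * h
        dB = [rng.gauss(0.0, math.sqrt(h)) for _ in range(r)]
        s, drift = sigma(t, x), b(t, x)
        x = [x[i] + sum(s[i][k] * dB[k] for k in range(r)) + drift[i] * h
             for i in range(d)]
        path.append(list(x))
    return path


# Hypothetical example with d = r = 2: constant diffusion matrix and drift b(t, x) = -x.
def sigma_example(t: float, x: Vector) -> Matrix:
    return [[0.5, 0.0], [0.2, 0.3]]


def b_example(t: float, x: Vector) -> Vector:
    return [-x[0], -x[1]]


path = euler_maruyama([1.0, -1.0], sigma_example, b_example, r=2, t_end=1.0, n=500,
                      rng=random.Random(0))
print(path[-1])
```

Each new state uses only the previous state and the Brownian increments up to the current time, which mirrors the adaptedness requirement on solutions.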

We first prove an existence and uniqueness result in the strong sense.


Definition 2.2 Let $B$ be a given Brownian motion, $\xi$ a random variable independent of the σ-algebra $\sigma(B_s,\ s \ge 0)$, and let $(\mathcal{F}_t)$ be the filtration defined as follows: for any $t \ge 0$, $\mathcal{F}_t$ is the completion of the σ-algebra $\sigma\big(\sigma(B_s,\ 0 \le s \le t),\ \sigma(\xi)\big)$. A strong solution of (2.2) is a process $(X_t)$ with a.s. continuous trajectories such that

(i) $(X_t)$ is adapted to the filtration $(\mathcal{F}_t)$,

(ii) $X_0 = \xi$ a.s.,

(iii) for every $i = 1, \dots, d$ and $t \ge 0$, $\displaystyle\int_0^t \Big( |b^i(s, X_s)| + \sum_{k=1}^r |\sigma^i_k(s, X_s)|^2 \Big)\, ds < +\infty$ a.s.,

(iv) for every $t \ge 0$ and every $i = 1, \dots, d$,
$$X^i_t = X^i_0 + \sum_{k=1}^r \int_0^t \sigma^i_k(s, X_s)\, dB^k_s + \int_0^t b^i(s, X_s)\, ds.$$
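As an illustration (an example chosen here, not taken from the notes at this point), take $d = r = 1$, $\sigma(t,x) = s\,x$ and $b(t,x) = \mu\,x$ with constants $s, \mu \in \mathbb{R}$. Itô's formula applied to $\exp\big(sB_t + (\mu - s^2/2)t\big)$ shows that the process below satisfies (iv); it is continuous and, for each $t$, a measurable function of $\xi$ and $B_t$, so it also satisfies (i) to (iii) and is a strong solution.

```latex
X_t = \xi \exp\!\Big( s B_t + \big(\mu - \tfrac{s^2}{2}\big)\, t \Big)
\quad\text{satisfies}\quad
X_t = \xi + \int_0^t s\, X_u\, dB_u + \int_0^t \mu\, X_u\, du, \qquad t \ge 0 .
```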

The following theorem is fundamental; it gives sufficient conditions on the coefficients (similar to those for ODEs) which ensure existence and uniqueness of a strong solution to (2.2). Let $|\cdot|$ denote the Euclidean norm on $\mathbb{R}^d$ or on $\mathbb{R}^{d \times r}$. The following conditions extend those used for ODEs.

Definition 2.3 Fix $T > 0$. A map $\varphi : [0,T] \times \mathbb{R}^d \to \mathbb{R}^N$ satisfies

(i) the global Lipschitz condition on $[0,T]$ if there exists a constant $C > 0$ such that
$$|\varphi(t,x) - \varphi(t,y)| \le C|x - y|, \quad \forall t \in [0,T],\ \forall x, y \in \mathbb{R}^d; \tag{2.3}$$

(ii) the global growth condition on $[0,T]$ if there exists a constant $C > 0$ such that
$$|\varphi(t,x)| \le C(1 + |x|), \quad \forall t \in [0,T],\ \forall x \in \mathbb{R}^d. \tag{2.4}$$

When $\varphi$ is defined on $[0,+\infty[ \times \mathbb{R}^d$ and conditions (2.3) and (2.4) are satisfied on any