Vector Spaces in Physics

8/6/2015

Chapter 3. Linear Equations and Matrices

A wide variety of physical problems involve solving systems of simultaneous linear

equations. These systems of linear equations can be economically described and

efficiently solved through the use of matrices.

A. Linear independence of vectors.

Let us consider the three vectors E1 , E2 and E3 given below.

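$$
\vec{E}_1 = \begin{pmatrix} 2 \\ 1 \\ 1 \end{pmatrix} = E_{i1}, \qquad
\vec{E}_2 = \begin{pmatrix} 4 \\ 3 \\ 3 \end{pmatrix} = E_{i2}, \qquad
\vec{E}_3 = \begin{pmatrix} 3 \\ 2 \\ 1 \end{pmatrix} = E_{i3}, \qquad i = 1,3
\tag{3-1}
$$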

These three vectors are said to be linearly dependent if there exist constants c1, c2, and c3,

not all zero, such that

$$
c_1 \vec{E}_1 + c_2 \vec{E}_2 + c_3 \vec{E}_3 = E_{ij} c_j = 0
\tag{3-2}
$$

The second form in the equation above gives the i-th component of the vector sum as an

implied sum over j, invoking the Einstein summation convention. Substituting in the

values for the components Eij from equation (3-1), this vector equation is equivalent to

the three linear equations:

$$
\begin{aligned}
2c_1 + 4c_2 + 3c_3 &= 0 \\
c_1 + 3c_2 + 2c_3 &= 0 \\
c_1 + 3c_2 + c_3 &= 0
\end{aligned}
\tag{3-3}
$$

These relations are a special case of a more general mathematical expression,

$$
A \vec{c} = \vec{d}
\tag{3-4}
$$

where c and d are vectors represented as column matrices, and A is a sort of operator

which we will represent by a square matrix.

The goal of the next three chapters is to solve equation (3-4) by the audacious leap of faith,

$$
\vec{c} = A^{-1} \vec{d}
\tag{3-5}
$$

What is $A^{-1}$? Dividing by a matrix!?! We will come to this later.

B. Definition of a matrix.

A matrix is a rectangular array of numbers. Below is an example of a matrix of

dimension 3x4 (3 rows and 4 columns).

$$
A = \begin{pmatrix} 2 & 1 & -1 & 1 \\ -1 & -2 & 2 & 1 \\ 1 & 2 & 1 & -1 \end{pmatrix} = A_{ij}, \qquad i = 1,3, \; j = 1,4
\tag{3-6}
$$

We will follow the "RC convention" for numbering elements of a matrix, where Aij is the

element of matrix A in its i-th row

and j-th column. As an example, in

the matrix above, the elements which

are equal to -1 are A13, A21, and A34.

C. The transpose of a matrix.

The transpose A T of a matrix A is

obtained by drawing a line down the

diagonal of the matrix and moving

each component of the matrix across

the diagonal to the position where its

image would be if there were a mirror

along the diagonal of the matrix:

This corresponds to the interchange

of the indices on all the components

of A :

mirror line

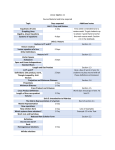

Figure 3-1. The transpose operation moves

elements of a matrix from one side of the

diagonal to the other.

Aij A ji

T

Transpose (3-7)

Example: Calculate the transpose of the square matrix given below:

$$
A = \begin{pmatrix} 2 & 4 & 3 \\ 1 & 3 & 2 \\ 1 & 3 & 1 \end{pmatrix}
\tag{3-8}
$$

Solution:

$$
\begin{pmatrix} 2 & 4 & 3 \\ 1 & 3 & 2 \\ 1 & 3 & 1 \end{pmatrix}
\xrightarrow{\text{transpose}}
\begin{pmatrix} 2 & 1 & 1 \\ 4 & 3 & 3 \\ 3 & 2 & 1 \end{pmatrix}
\tag{3-9}
$$

D. The trace of a matrix.

A simple property of a square matrix is its trace, defined as follows:

$$
\mathrm{Tr}(A) = A_{ii} \qquad \text{Trace}
\tag{3-10}
$$

This is just the sum of the diagonal components of the matrix.

Example: Find the trace of the square matrix given in (3-8).

Solution:

$$
\mathrm{Tr} \begin{pmatrix} 2 & 4 & 3 \\ 1 & 3 & 2 \\ 1 & 3 & 1 \end{pmatrix} = A_{11} + A_{22} + A_{33} = 2 + 3 + 1 = 6
\tag{3-11}
$$

E. Addition of Matrices and Multiplication of a Matrix by a Scalar.

These two operations are simple and obvious – you add corresponding elements, or

multiply each element by the scalar. To add two matrices, they must have the same

dimensions.

$$
(A + B)_{ij} = A_{ij} + B_{ij} \qquad \text{Addition}
\tag{3-12}
$$

$$
(cA)_{ij} = c A_{ij} \qquad \text{Multiplication by a Scalar}
\tag{3-13}
$$
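The element-wise definitions of the transpose, trace, sum, and scalar multiple translate almost directly into code. The following is a minimal sketch, assuming matrices are stored as Python lists of rows; the function names are illustrative choices, not notation from the text, and indices start at 0 rather than 1.

```python
# Matrices are lists of rows, so A[i][j] is row i, column j (0-based).

def transpose(A):
    """Return the matrix whose (j, i) element is A[i][j]."""
    return [[A[i][j] for i in range(len(A))] for j in range(len(A[0]))]

def trace(A):
    """Sum of the diagonal elements of a square matrix."""
    return sum(A[i][i] for i in range(len(A)))

def add(A, B):
    """Element-wise sum; both matrices must have the same dimensions."""
    if len(A) != len(B) or len(A[0]) != len(B[0]):
        raise ValueError("matrices must have the same dimensions")
    return [[a + b for a, b in zip(row_a, row_b)] for row_a, row_b in zip(A, B)]

def scale(c, A):
    """Multiply every element of A by the scalar c."""
    return [[c * a for a in row] for row in A]

A = [[2, 4, 3], [1, 3, 2], [1, 3, 1]]   # the square matrix used in the examples above
A_T = transpose(A)
tr_A = trace(A)
```

With this matrix, `A_T` reproduces the transposed array of the worked example and `tr_A` reproduces its trace.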

F. Matrix multiplication.

One of the important operations carried out with matrices is multiplication of one

matrix by another. For any two given matrices A and B the product matrix C = A B

can be defined, provided that the number of columns of the first matrix equals the number

of rows of the second matrix. Suppose that this is true, so that A is of dimension p x n,

and B is of dimension n x q. The product matrix is then of dimension p x q.

The general rule for obtaining the elements of the product matrix C is as follows:

$$
C = A B, \qquad C_{ij} = \sum_{k=1}^{n} A_{ik} B_{kj}, \qquad i = 1,p, \; j = 1,q
$$

$$
C_{ij} = A_{ik} B_{kj} \quad \text{(Einstein's version)} \qquad \text{Matrix Multiplication}
\tag{3-14}
$$

This is illustrated below, for A a 3 x 4 matrix and B a 4 x 2 matrix.

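$$
A B = C: \qquad
\begin{pmatrix} 2 & 1 & -1 & 1 \\ -1 & -2 & 2 & 1 \\ 1 & 2 & 1 & -1 \end{pmatrix}
\begin{pmatrix} 1 & 3 \\ -2 & 2 \\ 2 & -1 \\ -1 & 1 \end{pmatrix}
=
\begin{pmatrix} -3 & x \\ y & -8 \\ 0 & z \end{pmatrix}
\tag{3-15}
$$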

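Example: Calculate the three missing elements x, y, and z in the result matrix above.

Solution:

$$
\begin{aligned}
x = C_{12} &= \sum_{k=1}^{4} A_{1k} B_{k2} = 2 \cdot 3 + 1 \cdot 2 + (-1) \cdot (-1) + 1 \cdot 1 = 10; \\
y = C_{21} &= (-1) \cdot 1 + (-2) \cdot (-2) + 2 \cdot 2 + 1 \cdot (-1) = 6; \\
z = C_{32} &= 1 \cdot 3 + 2 \cdot 2 + 1 \cdot (-1) + (-1) \cdot 1 = 5
\end{aligned}
$$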

There is a tactile way of remembering how to do this multiplication, provided that the

two matrices to be multiplied are written down next to each other as in equation (3-15).

Place a finger of your left hand on Ai1, and a finger of your right hand on B1j. Multiply

together the two values under your two fingers. Then step across the matrix A from left

to right with the finger of your left hand, simultaneously stepping down the matrix B

with the finger of your right hand. As you move to each new pair of numbers, multiply

them and add to the previous sum. When you finish, you have the value of Cij. For

instance, calculating C21 in the example of equation (3-15) this way gives -1 + 4 + 4 - 1 =

6.
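The finger-stepping procedure is exactly a triple loop: for each row i of A and column j of B, step k across the row and down the column, accumulating products. Here is a minimal sketch of that loop. The function name `matmul` is an illustrative choice, and the matrix entries are those of equation (3-15) with the signs implied by the worked solution, which is an assumption of this reconstruction.

```python
def matmul(A, B):
    """Product C = A B for matrices stored as lists of rows (0-based indices)."""
    p, n, q = len(A), len(B), len(B[0])
    if any(len(row) != n for row in A):
        raise ValueError("columns of A must equal rows of B")
    C = [[0] * q for _ in range(p)]
    for i in range(p):              # row of A (left finger starts at A[i][0])
        for j in range(q):          # column of B (right finger starts at B[0][j])
            for k in range(n):      # step across A and down B together
                C[i][j] += A[i][k] * B[k][j]
    return C

A = [[2, 1, -1, 1],
     [-1, -2, 2, 1],
     [1, 2, 1, -1]]                 # 3 x 4
B = [[1, 3],
     [-2, 2],
     [2, -1],
     [-1, 1]]                       # 4 x 2
C = matmul(A, B)                    # 3 x 2
x, y, z = C[0][1], C[1][0], C[2][1]
```

The values of `x`, `y`, and `z` can be compared against the hand calculation in the example.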

Einstein Summation Convention. For the case of 3x3 matrices operating on 3-component column vectors, we can use the Einstein summation convention to write matrix operations:

matrix multiplying vector:

$$
\vec{y} = A \vec{x}, \qquad y_i = A_{ij} x_j
\tag{3-16}
$$

matrix multiplying matrix:

$$
C = A B, \qquad C_{ij} = A_{ik} B_{kj}
\tag{3-17}
$$

The rules for matrix multiplication may seem complicated and arbitrary. You might

ask, "Where did that come from?" Here is part of the answer. Look at the three

simultaneous linear equations given in (3-3) above. They are precisely given by

multiplying a matrix A of numerical coefficients into a column vector c of variables, to

wit:

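$$
A \vec{c} = 0; \qquad
\begin{pmatrix} 2 & 4 & 3 \\ 1 & 3 & 2 \\ 1 & 3 & 1 \end{pmatrix}
\begin{pmatrix} c_1 \\ c_2 \\ c_3 \end{pmatrix}
=
\begin{pmatrix} 0 \\ 0 \\ 0 \end{pmatrix}
\tag{3-18}
$$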

The square matrix above is formed of the components of the three vectors $\vec{E}_1$, $\vec{E}_2$ and $\vec{E}_3$, placed as its columns:

$$
A = \begin{pmatrix} \vec{E}_1 & \vec{E}_2 & \vec{E}_3 \end{pmatrix}
\tag{3-18a}
$$

This matrix representation of a system of linear equations is very useful.

Exercise: Use the rules of matrix multiplication above to verify that (3-18) is

equivalent to (3-3).

G. Properties of matrix multiplication.

Matrix multiplication is not commutative. Unlike multiplication of scalars, matrix

multiplication depends on the order of the matrices:

$$
A B \neq B A
\tag{3-19}
$$

Matrix multiplication is thus said to be non-commutative.

Example: To investigate the non-commutativity of matrix multiplication, consider

the two 2x2 matrices A and B :

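$$
A = \begin{pmatrix} 1 & 2 \\ 4 & 3 \end{pmatrix}, \qquad
B = \begin{pmatrix} 2 & -1 \\ 1 & -2 \end{pmatrix}
\tag{3-20}
$$

Calculate the two products A B and B A and compare.

Solution:

$$
A B = \begin{pmatrix} 1 & 2 \\ 4 & 3 \end{pmatrix} \begin{pmatrix} 2 & -1 \\ 1 & -2 \end{pmatrix} = \begin{pmatrix} 4 & -5 \\ 11 & -10 \end{pmatrix}
\tag{3-21}
$$

but

$$
B A = \begin{pmatrix} 2 & -1 \\ 1 & -2 \end{pmatrix} \begin{pmatrix} 1 & 2 \\ 4 & 3 \end{pmatrix} = \begin{pmatrix} -2 & 1 \\ -7 & -4 \end{pmatrix}
\tag{3-22}
$$

The results are completely different.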

Other properties. The following properties of matrix multiplication are easy to verify.

$$
A (B C) = (A B) C \qquad \text{Associative Property}
\tag{3-23}
$$

$$
A (B + C) = A B + A C \qquad \text{Distributive Property}
\tag{3-24}
$$

H. The unit matrix.

A useful matrix is the unit matrix, or the identity matrix,

$$
I = \begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{pmatrix} = \delta_{ij}
\tag{3-25}
$$

This matrix has the property that, for any square matrix A ,

$$
I A = A I = A
\tag{3-26}
$$

I has the same property for matrices that the number 1 has for scalar multiplication.

This is why it is called the unit matrix.

I. Square matrices as members of a group.

The rules for matrix multiplication given

above apply to matrices of arbitrary dimension. However, square matrices (number of

rows equals the number of columns) and vectors (matrices consisting of one column)

have a special interest in physics, and we will emphasize this special case from now on.

The reason is as follows: When a square matrix multiplies a column matrix, the result is

another column matrix. We think of this as the matrix "operating" on a vector to produce

another vector. Sets of operators like this, which transform one vector in a space into

another, can form groups. (See the discussion of groups in Chapter 2.) The key

characteristic of a group is that multiplication of one member by another must be defined,

in such a way that the group is closed under multiplication; this is the case for square

matrices. (An additional requirement is the existence of an inverse for each member of

the group; we will discuss inverses soon.)

Notice that rotations of the coordinate system form a group of operations: a rotation of

a vector produces another vector, and we will see that rotations of 3-component vectors

can be represented by 3x3 square matrices. This is a very important group in physics.

J. The determinant of a square matrix.

For square matrices a useful scalar quantity called the determinant can be calculated.

The definition of the determinant is rather messy. For a 2 x 2 matrix, it can be defined as

follows:

$$
\det \begin{pmatrix} a & b \\ c & d \end{pmatrix} = \begin{vmatrix} a & b \\ c & d \end{vmatrix} = ad - bc
\tag{3-27}
$$

That is, the determinant of a 2 x 2 matrix is the product of the two diagonal elements

minus the product of the other two. This can be extended to a 3x3 matrix as follows:

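$$
\begin{vmatrix} a & b & c \\ d & e & f \\ g & h & i \end{vmatrix}
= a \begin{vmatrix} e & f \\ h & i \end{vmatrix}
- b \begin{vmatrix} d & f \\ g & i \end{vmatrix}
+ c \begin{vmatrix} d & e \\ g & h \end{vmatrix}
= a(ei - hf) - b(di - gf) + c(dh - ge)
\tag{3-28}
$$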

Example: Calculate the determinant of the square matrix A of eq. (3-18) above.

Result:

$$
\begin{vmatrix} 2 & 4 & 3 \\ 1 & 3 & 2 \\ 1 & 3 & 1 \end{vmatrix} = 2(3 - 6) - 4(1 - 2) + 3(3 - 3) = -2
\tag{3-29}
$$

| Mathematica command | Operation |
|---|---|
| `m={{2,4,3},{1,3,2},{1,3,1}}` | defining a matrix |
| `MatrixForm[m]` | display as a rectangular array |
| `c m` | multiply by a scalar |
| `a.b` | matrix product |
| `Inverse[m]` | matrix inverse |
| `MatrixPower[m,n]` | nth power of a matrix |
| `Det[m]` | determinant |
| `Tr[m]` | trace |
| `Transpose[m]` | transpose |
| `Eigenvalues[m]` | eigenvalues |
| `Eigenvectors[m]` | eigenvectors |
| `Eigenvalues[N[m]],Eigenvectors[N[m]]` | numerical eigenvalues and eigenvectors |
| `m=Table[Random[],{3},{3}]` | 3x3 matrix of random numbers |

Table 3-1. Some mathematical operations on matrices which Mathematica can carry out.

The form (3-28) for the determinant can be put in a more general form if we make a few

definitions. If A is a square nxn matrix, its (i,j)-th minor Mij is defined as the

determinant of the (n-1)x(n-1) matrix formed by removing the i-th row and j-th column of

the original matrix. In (3-28) we see that a is multiplied by its minor, -b is multiplied by

the minor of b, and c is multiplied by its minor. We can then write, for a 3 x 3 matrix A ,

$$
|A| = \sum_{j=1}^{3} A_{1j} \, (-1)^{1+j} \, M_{1j}
\tag{3-30}
$$

[Notice that j occurs three times in this expression, and we have been obliged to back

away from the Einstein summation convention and write out the sum explicitly.] This

expression could be generalized in the obvious way to a matrix of an arbitrary number of

dimensions n, merely by summing from j = 1 to n .
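The expansion along the first row lends itself to a short recursive routine. The sketch below is one possible implementation, assuming matrices are stored as lists of rows. The helper name `submatrix` is an illustrative choice: it returns the smaller matrix whose determinant is the minor, and because Python counts from 0 the sign factor appears as `(-1) ** j`.

```python
def submatrix(A, i, j):
    """Matrix A with row i and column j removed (0-based indices)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(A) if r != i]

def det(A):
    """Determinant by cofactor expansion along the first row."""
    n = len(A)
    if n == 1:
        return A[0][0]
    total = 0
    for j in range(n):
        minor = det(submatrix(A, 0, j))        # the minor M_{1j} of the text
        total += (-1) ** j * A[0][j] * minor   # same sign as (-1)^(1+j) with 1-based j
    return total

A = [[2, 4, 3], [1, 3, 2], [1, 3, 1]]
det_A = det(A)
```

This recursion is convenient for small matrices but its cost grows like n!, so it is a teaching device rather than a practical method for large n.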

The 3 x 3 determinant expressed with the Levi-Civita tensor.

Loving the Einstein summation convention as we do, we are piqued by having to give it

up in the preceding definition of the determinant. For 3x3 matrices we can offer the

following more elegant definition of the determinant. If we write out the determinant of a

3 x 3 matrix in terms of its components, we get

$$
|A| = A_{11} A_{22} A_{33} - A_{11} A_{23} A_{32} - A_{12} A_{21} A_{33} + A_{12} A_{23} A_{31} + A_{13} A_{21} A_{32} - A_{13} A_{22} A_{31}
\tag{3-31}
$$

Each term is of the form A1iA2jA3k, and it is not too hard to see that the terms where (ijk)

are an even permutation of (123) have a positive sign, and the odd permutations have a

negative sign. That is,

$$
|A| = A_{1i} A_{2j} A_{3k} \, \varepsilon_{ijk}
\tag{3-32}
$$

(Yes, with the Einstein summation convention in force.)
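The implied triple sum can be spelled out explicitly as a check. In this sketch the function names are illustrative, indices run over 0, 1, 2 instead of 1, 2, 3, and the closed-form expression used for the Levi-Civita symbol is a convenience of the example rather than anything taken from the text.

```python
def levi_civita(i, j, k):
    """+1 for even permutations of (0, 1, 2), -1 for odd ones, 0 if any index repeats."""
    return (i - j) * (j - k) * (k - i) // 2

def det3_levi_civita(A):
    """Determinant of a 3x3 matrix as the sum A[0][i] * A[1][j] * A[2][k] * eps(i, j, k)."""
    total = 0
    for i in range(3):
        for j in range(3):
            for k in range(3):
                total += A[0][i] * A[1][j] * A[2][k] * levi_civita(i, j, k)
    return total

A = [[2, 4, 3], [1, 3, 2], [1, 3, 1]]
det_A = det3_levi_civita(A)
```

Of the 27 terms in the loop only 6 are nonzero, matching the six products in the written-out determinant.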

The Meaning of the determinant. The determinant of a matrix is at first sight a rather

bizarre combination of the elements of the matrix. It may help to know (more about this

later) that the determinant, written as an absolute value, $|A|$, is in fact a little like the

"size" of the matrix. We will see that if the determinant of a matrix is zero, its operation

destroys some vectors - multiplying them by the matrix gives zero. This is not a good

property for a matrix, sort of like a character fault, and it can be identified by calculating

its determinant.

K. The 3 x 3 determinant expressed as a triple scalar product.

You might have noted that (3-31) looks a whole lot like a scalar triple product of three

vectors. In fact, if we define three vectors as follows:

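$$
\vec{A}_1 = \begin{pmatrix} A_{11} & A_{12} & A_{13} \end{pmatrix}, \qquad
\vec{A}_2 = \begin{pmatrix} A_{21} & A_{22} & A_{23} \end{pmatrix}, \qquad
\vec{A}_3 = \begin{pmatrix} A_{31} & A_{32} & A_{33} \end{pmatrix},
\tag{3-33}
$$

then we can write

$$
|A| = A_{1i} A_{2j} A_{3k} \, \varepsilon_{ijk} = \vec{A}_1 \cdot \left( \vec{A}_2 \times \vec{A}_3 \right)
\tag{3-34}
$$

and the matrix A can be thought of as being composed of three row vectors:

$$
A = \begin{pmatrix} A_{11} & A_{12} & A_{13} \\ A_{21} & A_{22} & A_{23} \\ A_{31} & A_{32} & A_{33} \end{pmatrix}
= \begin{pmatrix} \vec{A}_1 \\ \vec{A}_2 \\ \vec{A}_3 \end{pmatrix}
\tag{3-35}
$$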

Thus taking the determinant is always equivalent to forming the triple product of the

three vectors composing the rows (or the columns) of the matrix.
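As a quick numerical illustration, the triple product can be evaluated for both the rows and the columns of the 3x3 matrix used throughout this chapter. This is a sketch with illustrative helper names (`dot`, `cross`, `triple_product`); it is not a routine from the text.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def cross(u, v):
    return [u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0]]

def triple_product(a, b, c):
    """Scalar triple product a . (b x c)."""
    return dot(a, cross(b, c))

A = [[2, 4, 3], [1, 3, 2], [1, 3, 1]]
rows = A
cols = [list(col) for col in zip(*A)]   # columns of A, i.e. rows of the transpose

from_rows = triple_product(*rows)
from_cols = triple_product(*cols)
```

Both values agree with the determinant found earlier by the expansion in minors.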

L. Other properties of determinants.

Here are some properties of determinants, without proof.

Product law. The determinant of the product of two square matrices is the

product of their determinants:

$$
\det(A B) = \det(A) \, \det(B) \qquad \text{(product law)}
\tag{3-36}
$$

Transpose Law. Taking the transpose does not change the determinant of a

matrix:

$$
\det(A^T) = \det(A) \qquad \text{(transpose law)}
\tag{3-37}
$$

Interchanging columns or rows. Interchanging any two columns or rows of a

matrix changes the sign of the determinant.

Equal columns or rows. If any two rows of a matrix are the same, or if any two

columns are the same, the determinant of the matrix is equal to zero.
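These properties are stated without proof, but they are easy to spot-check numerically. The sketch below tests each one on a single pair of 3x3 integer matrices; the matrix `B` is an arbitrary choice made for this illustration and does not come from the text, and passing such a check is of course not a proof.

```python
def det3(M):
    """Determinant of a 3x3 matrix by expansion along the first row."""
    (a, b, c), (d, e, f), (g, h, i) = M
    return a * (e * i - h * f) - b * (d * i - g * f) + c * (d * h - g * e)

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def transpose(M):
    return [list(col) for col in zip(*M)]

A = [[2, 4, 3], [1, 3, 2], [1, 3, 1]]   # matrix used in the chapter's examples
B = [[1, 0, 2], [0, 1, 1], [3, 1, 0]]   # arbitrary illustrative matrix (assumption)

product_law = det3(matmul(A, B)) == det3(A) * det3(B)
transpose_law = det3(transpose(A)) == det3(A)
swap_law = det3([A[1], A[0], A[2]]) == -det3(A)      # rows 1 and 2 interchanged
equal_rows_law = det3([A[0], A[0], A[2]]) == 0       # two identical rows
```

Integer entries are used so that the comparisons are exact; with floating-point entries a tolerance would be needed.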

M. Cramer's rule for simultaneous linear equations.

Consider two simultaneous linear equations for unknowns x1 and x2:

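$$
A \vec{x} = \vec{C}
\tag{3-38}
$$

or

$$
\begin{pmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{pmatrix}
\begin{pmatrix} x_1 \\ x_2 \end{pmatrix}
=
\begin{pmatrix} C_1 \\ C_2 \end{pmatrix}
\tag{3-39}
$$

or

$$
\begin{aligned}
A_{11} x_1 + A_{12} x_2 &= C_1 \\
A_{21} x_1 + A_{22} x_2 &= C_2
\end{aligned}
\tag{3-40}
$$

The last two equations can be readily solved algebraically for x1 and x2, giving

$$
x_1 = \frac{C_1 A_{22} - C_2 A_{12}}{A_{11} A_{22} - A_{21} A_{12}}, \qquad
x_2 = \frac{A_{11} C_2 - A_{21} C_1}{A_{11} A_{22} - A_{21} A_{12}}
\tag{3-41}
$$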

By inspection, the last two equations are ratios of determinants:

$$
x_1 = \frac{\begin{vmatrix} C_1 & A_{12} \\ C_2 & A_{22} \end{vmatrix}}{|A|}, \qquad
x_2 = \frac{\begin{vmatrix} A_{11} & C_1 \\ A_{21} & C_2 \end{vmatrix}}{|A|}
\tag{3-42}
$$

This pattern can be generalized, and is known as Cramer's rule:

$$
x_i = \frac{1}{|A|}
\begin{vmatrix}
A_{11} & A_{12} & \cdots & A_{1,i-1} & C_1 & A_{1,i+1} & \cdots & A_{1n} \\
A_{21} & A_{22} & \cdots & A_{2,i-1} & C_2 & A_{2,i+1} & \cdots & A_{2n} \\
\vdots & \vdots & & \vdots & \vdots & \vdots & & \vdots \\
A_{n1} & A_{n2} & \cdots & A_{n,i-1} & C_n & A_{n,i+1} & \cdots & A_{nn}
\end{vmatrix}
\tag{3-43}
$$

As an example of using Cramer's rule, let us consider the three simultaneous linear

equations for x1, x2, and x3 which can be written

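$$
A \vec{x} = \vec{C}; \qquad
\begin{pmatrix} 2 & 4 & 3 \\ 1 & 3 & 2 \\ 1 & 3 & 1 \end{pmatrix}
\begin{pmatrix} x_1 \\ x_2 \\ x_3 \end{pmatrix}
=
\begin{pmatrix} 1 \\ 1 \\ 4 \end{pmatrix}
\tag{3-44}
$$

First we calculate the determinant $|A|$: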

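$$
|A| = \begin{vmatrix} 2 & 4 & 3 \\ 1 & 3 & 2 \\ 1 & 3 & 1 \end{vmatrix}
= 2 \begin{vmatrix} 3 & 2 \\ 3 & 1 \end{vmatrix}
- 4 \begin{vmatrix} 1 & 2 \\ 1 & 1 \end{vmatrix}
+ 3 \begin{vmatrix} 1 & 3 \\ 1 & 3 \end{vmatrix}
= 2(-3) - 4(-1) + 3(0) = -2
\tag{3-45}
$$

The values for the $x_i$ are then given by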

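$$
\begin{aligned}
x_1 &= \frac{1}{|A|} \begin{vmatrix} 1 & 4 & 3 \\ 1 & 3 & 2 \\ 4 & 3 & 1 \end{vmatrix} = \frac{1(-3) - 4(-7) + 3(-9)}{-2} = 1, \\
x_2 &= \frac{1}{|A|} \begin{vmatrix} 2 & 1 & 3 \\ 1 & 1 & 2 \\ 1 & 4 & 1 \end{vmatrix} = \frac{2(-7) - 1(-1) + 3(3)}{-2} = 2, \\
x_3 &= \frac{1}{|A|} \begin{vmatrix} 2 & 4 & 1 \\ 1 & 3 & 1 \\ 1 & 3 & 4 \end{vmatrix} = \frac{2(9) - 4(3) + 1(0)}{-2} = -3
\end{aligned}
\tag{3-46}
$$

So, the solution to the three simultaneous linear equations is

$$
\vec{x} = \begin{pmatrix} 1 \\ 2 \\ -3 \end{pmatrix}
\tag{3-47}
$$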

Is this correct? The check is to substitute this vector x into equation (3-44), carry out the

multiplication of x by A , and see if you get back C .
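Both the Cramer's-rule calculation and the suggested check can be automated. The following is a minimal sketch for the 3x3 case, with illustrative function names; it uses `fractions.Fraction` from the Python standard library so that the ratios of determinants stay exact.

```python
from fractions import Fraction

def det3(M):
    """Determinant of a 3x3 matrix by expansion along the first row."""
    (a, b, c), (d, e, f), (g, h, i) = M
    return a * (e * i - h * f) - b * (d * i - g * f) + c * (d * h - g * e)

def cramer3(A, C):
    """Solve A x = C for a 3x3 matrix A by Cramer's rule."""
    d = det3(A)
    if d == 0:
        raise ValueError("determinant is zero; Cramer's rule does not apply")
    x = []
    for i in range(3):
        # Replace column i of A by the column vector C.
        A_i = [row[:i] + [C[r]] + row[i + 1:] for r, row in enumerate(A)]
        x.append(Fraction(det3(A_i), d))
    return x

A = [[2, 4, 3], [1, 3, 2], [1, 3, 1]]
C = [1, 1, 4]
x = cramer3(A, C)

# The check: multiply x by A and compare with C.
A_times_x = [sum(A[i][j] * x[j] for j in range(3)) for i in range(3)]
```

Here `A_times_x` should reproduce `C` if the solution is correct.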

N. Condition for linear dependence.

Finally we return to the question of whether or not the three vectors E1 , E2 and E3

given in section A above are linearly independent. The linear dependence relation (3-2)

can be re-written as

$$
x_1 \vec{E}_1 + x_2 \vec{E}_2 + x_3 \vec{E}_3 = 0,
\tag{3-2a}
$$

a special case of equation (3-38) above,

$$
A \vec{x} = \vec{C}
\tag{3-38}
$$

where the constants C are all zero. From Cramer's rule, equation (3-43), we can calculate the $x_i$, and they will all be zero! This seems to say that the three vectors are linearly independent no matter what. But the exception is when $|A| = 0$; in that case, Cramer's rule gives zero over zero, and is indeterminate. This is the situation (this is not quite a proof!) where the $x_i$ in (3-2a) above need not all be zero, and the vectors are linearly dependent. We can summarize this condition as follows:

$$
\begin{vmatrix} \vec{E}_1 & \vec{E}_2 & \vec{E}_3 \end{vmatrix} = 0
\iff
\vec{E}_1, \vec{E}_2, \vec{E}_3 \text{ are linearly dependent}
\tag{3-48}
$$

Example: As an illustration, let us take the vectors E1 and E 3 from eq. (3-1), and

construct a new vector E4 by taking their sum:

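$$
\vec{E}_4 = \vec{E}_1 + \vec{E}_3 = \begin{pmatrix} 2 \\ 1 \\ 1 \end{pmatrix} + \begin{pmatrix} 3 \\ 2 \\ 1 \end{pmatrix} = \begin{pmatrix} 5 \\ 3 \\ 2 \end{pmatrix}
\tag{3-49}
$$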

E1 , E 3 and E4 are clearly linearly dependent, since E1 + E 3 - E4 = 0 . We form the

matrix A by using E1 , E 3 and E4 for its columns:

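$$
A = \begin{pmatrix} \vec{E}_1 & \vec{E}_3 & \vec{E}_4 \end{pmatrix} = \begin{pmatrix} 2 & 3 & 5 \\ 1 & 2 & 3 \\ 1 & 1 & 2 \end{pmatrix}
\tag{3-50}
$$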

Now the acid test for linear dependence: calculate the determinant of A :

$$
|A| = \begin{vmatrix} 2 & 3 & 5 \\ 1 & 2 & 3 \\ 1 & 1 & 2 \end{vmatrix} = 2(1) - 3(-1) + 5(-1) = 0
\tag{3-51}
$$

This confirms that $\vec{E}_1$, $\vec{E}_3$ and $\vec{E}_4$ are linearly dependent.

There is a final observation to be made about the condition (3-48) for linear dependence.

The determinant of a 3x3 matrix can be interpreted as the triple scalar product of the vectors forming its rows or columns. So, if the determinant is zero, it means that $\vec{E}_1 \cdot (\vec{E}_2 \times \vec{E}_3) = 0$, and this has the following geometrical interpretation: If $\vec{E}_1$ is perpendicular to $\vec{E}_2 \times \vec{E}_3$, it must lie in the plane formed by $\vec{E}_2$ and $\vec{E}_3$; if this is so, it is not independent of $\vec{E}_2$ and $\vec{E}_3$.
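The determinant test for linear dependence is simple to apply in code. The sketch below is an illustration with hypothetical function names. It places the three vectors in the rows of the matrix rather than the columns, which by the transpose law gives the same determinant.

```python
def det3(M):
    """Determinant of a 3x3 matrix by expansion along the first row."""
    (a, b, c), (d, e, f), (g, h, i) = M
    return a * (e * i - h * f) - b * (d * i - g * f) + c * (d * h - g * e)

def linearly_dependent(e1, e2, e3):
    """True if three 3-component vectors with integer entries are linearly dependent."""
    return det3([e1, e2, e3]) == 0

E1 = [2, 1, 1]
E2 = [4, 3, 3]
E3 = [3, 2, 1]
E4 = [a + b for a, b in zip(E1, E3)]          # E4 = E1 + E3

dependent_134 = linearly_dependent(E1, E3, E4)
dependent_123 = linearly_dependent(E1, E2, E3)
```

The exact comparison with zero is safe here because the components are integers; for floating-point components one would compare the determinant against a small tolerance instead.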

O. Eigenvectors and eigenvalues.

For most matrices A there exist special vectors V which are not changed in direction

under multiplication by A :

$$
A \vec{V} = \lambda \vec{V}
\tag{3-52}
$$

In this case $\vec{V}$ is said to be an eigenvector of A and $\lambda$ is the corresponding eigenvalue.

In many physical situations there are special, interesting states of a system which are

invariant under the action of some operator (that is, invariant aside from being multiplied

by a constant, the eigenvalue). Some very important operators represent the time

evolution of a system. For instance, in quantum mechanics, the Hamiltonian operator

moves a state forward in time, and its eigenstates represent "stationary states," or states of

definite energy. We will soon see examples in mechanics of coupled masses where the

eigenstates describe the normal modes of motion of the system. Eigenvectors are in

general charismatic and useful.

So, what are the eigenstates and eigenvalues of a square matrix? The eigenvalue

equation, (3-52) above, can be written

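$$
(A - \lambda I) \vec{V} = 0
\tag{3-53}
$$

or, for the case of a 3x3 matrix,

$$
\begin{pmatrix} A_{11} - \lambda & A_{12} & A_{13} \\ A_{21} & A_{22} - \lambda & A_{23} \\ A_{31} & A_{32} & A_{33} - \lambda \end{pmatrix}
\begin{pmatrix} V_1 \\ V_2 \\ V_3 \end{pmatrix}
=
\begin{pmatrix} 0 \\ 0 \\ 0 \end{pmatrix}
\tag{3-54}
$$

As discussed in the last section, the condition for the existence of nonzero solutions for the variables $V_1, V_2, V_3$ is that the determinant

$$
\begin{vmatrix} A_{11} - \lambda & A_{12} & A_{13} \\ A_{21} & A_{22} - \lambda & A_{23} \\ A_{31} & A_{32} & A_{33} - \lambda \end{vmatrix}
\tag{3-55}
$$

vanish. This determinant will give a cubic polynomial in the variable $\lambda$, with in general three solutions, $\lambda^{(i)} = \{ \lambda^{(1)}, \lambda^{(2)}, \lambda^{(3)} \}$. For each value, the equation

$$
\left( A - \lambda^{(i)} I \right) \vec{V}^{(i)} = 0
\tag{3-56}
$$

can be solved for the components of the i-th eigenvector $\vec{V}^{(i)}$.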

Example: Rotation about the z axis.

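In Chapter 1 we found the components of a vector rotated by an angle $\theta$ about the z axis. Including the fact that the z component does not change, this rotation can be represented as a matrix operation,

$$
\vec{x}\,' = R_z(\theta) \, \vec{x}
\tag{3-57}
$$

where

$$
R_z(\theta) = \begin{pmatrix} \cos\theta & \sin\theta & 0 \\ -\sin\theta & \cos\theta & 0 \\ 0 & 0 & 1 \end{pmatrix}
\tag{3-58}
$$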

Now, based on the geometrical properties of rotating about the z axis, what vector

will not be changed? A vector in the z direction! So, an eigenvector of Rz is

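$$
\vec{V}_1 = \begin{pmatrix} 0 \\ 0 \\ 1 \end{pmatrix}
\tag{3-59}
$$

Try it out:

$$
R_z \vec{V}_1 = \begin{pmatrix} \cos\theta & \sin\theta & 0 \\ -\sin\theta & \cos\theta & 0 \\ 0 & 0 & 1 \end{pmatrix} \begin{pmatrix} 0 \\ 0 \\ 1 \end{pmatrix} = \begin{pmatrix} 0 \\ 0 \\ 1 \end{pmatrix} = \vec{V}_1
\tag{3-60}
$$

$\vec{V}_1$ is an eigenvector of $R_z$, with eigenvalue $\lambda_1 = 1$.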
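The same check can be made numerically for any particular angle. In this sketch the function names and the angle 0.7 rad are arbitrary choices for illustration, and the placement of the minus sign in the rotation matrix is an assumed convention; the result for a vector along z does not depend on that choice.

```python
import math

def rot_z(theta):
    """Rotation matrix about the z axis (sign convention assumed for this sketch)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, s, 0.0],
            [-s, c, 0.0],
            [0.0, 0.0, 1.0]]

def apply(M, v):
    """Matrix M acting on column vector v: y_i = M_ij v_j."""
    return [sum(M[i][j] * v[j] for j in range(len(v))) for i in range(len(M))]

theta = 0.7                      # arbitrary angle in radians
V1 = [0.0, 0.0, 1.0]             # unit vector along z
rotated_V1 = apply(rot_z(theta), V1)

X = [1.0, 0.0, 0.0]              # a real vector in the x-y plane, for contrast
rotated_X = apply(rot_z(theta), X)
```

`rotated_V1` comes back equal to `V1`, while `rotated_X` points in a new direction, so the x unit vector is not an eigenvector for this angle.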

PROBLEMS

Problem 3-1. Consider the square matrix

1 1 3

B 2 3 1 .

1 2 1

(a) Calculate the transpose of B .

(b) Verify by direct calculation that $\det B = \det B^T$.

Problem 3-2. Consider the two matrices

2 4 3

A 1 3 2

1 3 1

and

1 1 3

B 2 3 1 .

1 2 1

If C is the product matrix, C = A B, verify by direct calculation that

$$
\det C = \det(A B) = \det A \, \det B .
$$

Problem 3-2a. There is a useful property of the determinant, stated in Problem 3-2 above, which is rather hard to prove algebraically:

$$
\det C = \det(A B) = \det A \, \det B ,
$$

where A , B and C are square matrices of the same dimension. Let's consider a

numerical "proof" of this theorem, using Mathematica. (See Table 3-1 for a collection of

useful Mathematica operations.)

(a) Fill two 4x4 matrices - call them A and B - with random numbers.

(b) Calculate their determinants.

(c) Calculate the product matrix C = A B, and the determinant of C.

(d) See if the theorem above is satisfied in this case.

(e) Another theorem concerning square matrices is stated in Problem 3-1 above. Test

it, for both of your random matrices.

Problem 3-3. Using tensor notation and the Einstein summation convention, prove the

following theorem about the transpose of the product of two square matrices: If C is the product matrix, C = A B, then

$$
C^T = B^T A^T .
$$

[As a starting point, under the Einstein Summation Convention, $C_{ij} = A_{il} B_{lj}$.]

Problem 3-4. Calculate det I and Tr I , where I is the nxn identity matrix.

Problem 3-5. Starting with the definition (3-30) of the determinant, but generalized to n

dimensions by carrying out the sum over j from 1 to n, use the fact that interchanging two

rows of a matrix changes the sign of its determinant to prove the following expression for

the determinant of a n x n matrix:

$$
|A| = \sum_{j=1}^{n} A_{ij} \, (-1)^{i+j} \, M_{ij} ,
$$

where i can take on any value from 1 to n. This theorem says that, while the determinant is usually calculated as a sum of terms with the first factor coming from the top row of the matrix, the first factor can in fact be taken from any chosen row of the matrix, if the correct sign is factored in.

Problem 3-6. Verify by multiplying out A x that the xi’s of equation (3-47) are a

solution of equation (3-44).

Problem 3-7. Consider the system of linear equations below for x1, x2, and x3.

x1 x 2 x3 2

x1 x 2 x3 0 .

x1 x 2 x3 6

Consider this to be a matrix equation of the form

$$
A \vec{x} = \vec{c} .
$$

First, write down the matrix A . Then, using Cramer's rule, solve for x1, x2, and x3.

Finally, as a check, multiply out A x and compare it to c .

Problem 3-8. Consider the system of linear equations below for the components (x1, x2,

x3 , x4) of a four-dimensional vector,

x1 x 2 2

x1 x 2 x3 3

x 2 x3 x 4 3

.

x3 x 4 2

(These equations can be represented as a matrix equation of the form $A \vec{x} = \vec{c}$, with A a 4x4 matrix, and x and c 4-element vectors.)

(a) Write down the matrix A and the vector c .

(b) Use Mathematica to solve the system of equations; that is, find the unknown vector

x . (Hint: first calculate the inverse of the matrix A .)

(c) Check the solution by multiplying x by A and comparing to c . (Do this check by

hand, not with Mathematica.)

Problem 3-9. (a) Show that the three vectors given in equation (3-1) of the text are

linearly independent.

(b) Make a new E 3 by changing only one of its three components, such that E1 , E2 , and

E 3 are now linearly dependent.

Problem 3-10. Consider the rotation matrix discussed in the chapter,

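$$
R_z = \begin{pmatrix} \cos\theta & \sin\theta & 0 \\ -\sin\theta & \cos\theta & 0 \\ 0 & 0 & 1 \end{pmatrix} .
$$

(a) Consider the vector

$$
\vec{V}_2 = \begin{pmatrix} 1 \\ i \\ 0 \end{pmatrix} .
$$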

Calculate Rz V2 , and thus show that V2 is an eigenvector of Rz . [Here the

second component of V2 is i, the complex number whose square is equal to -1.]

(b) What is the eigenvalue corresponding to the eigenvector V2 of part (a)?

Problem 3-11. In this chapter we defined addition of matrices and multiplication of a

matrix by a scalar. Do square matrices form a vector space V? Refer to Table 1-1 for the

formal properties of a vector space.

(a) Demonstrate whether or not this vector space V is closed under addition and

multiplication by a scalar.

(b) Do the same for the existence of a zero and the existence of a negative.

(c) Show that property (1-36) is satisfied. [Hint: This is most economically done using

index notation.]

(d) A vector space without a metric is not very interesting. Could the matrix product as

we have defined it be used as an inner product? Discuss.
