* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download Hair Cells

Survey

Document related concepts

Transcript

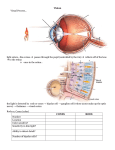

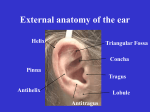

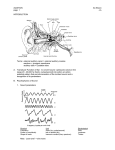

George Berkeley (1710), “A Treatise Concerning the Principles of Human Knowledge”
• “If a tree falls in the woods, and there’s no one around to hear it… does it make a sound?”
• Is there “objective” reality or only “subjective” perspective?
– Objective reality defined: pressure changes in the air or other medium (water).
– Subjective reality defined: the experience of hearing (perceiving).

You hear vibrations
• An object vibrates, air molecules vibrate (sound waves), and the eardrum vibrates.

Periodicity of waves
• The simplest sound waves are pure tones: sounds that are portrayed by a single sine wave.
• Compression: density increase of air molecules.
• Rarefaction: density decrease of air molecules.
• Frequency (Hz) = number of cycles per unit of time (Hz = cycles/second).
• Amplitude (dB) = difference between atmospheric pressure and the maximum pressure of a sound wave.
• Pure tones can vary in frequency, measured in Hertz (Hz).
• Pure tones can vary in amplitude, measured in decibels (dB) of sound pressure level (SPL).
• The softest sounds we hear are about 1/10,000,000th the amplitude of the loudest sounds.

Sound and approximate SPL (dB)
• Barely audible sound (whisper): about 0
• Leaves rustling: 20
• Quiet residential community: 40
• Average speaking voice: 60
• Loud music from radio/heavy traffic: 80
• Express subway train: 100
• Propeller plane at takeoff: 120
• Jet engine at takeoff (pain threshold): 140
• Spacecraft launch at close range: 160

Elements of Sound
• Magnitude of displacement (intensity, perceived as loudness): amount of sound energy falling on a unit area (cm²).
• Because air pressure changes are periodic (i.e., they repeat a regular pattern), one can do a Fourier analysis.
• Fourier analysis reduces a sound wave to its fundamental frequency and harmonic frequencies.
• The “fundamental frequency” is the lowest frequency of a vibrating object.
• The auditory system does break down tones into simpler components (Ohm's acoustic law).
• Additive synthesis and Fourier analysis: fundamental frequency (“1st harmonic”), 2nd harmonic, 3rd harmonic.

[Figure: Fourier frequency spectrum; relative power versus frequency (Hz), with components at 440, 880, 1320, and 1760 Hz]

Perception of sound
• Pitch: subjective quality of “high” or “low” tone (tone height).
– Constructed from the fundamental and harmonics.
• Octave: successive doublings of frequency (200 Hz, (x2) 400 Hz, (x4) 800 Hz, (x8) 1600 Hz, etc.).
• Timbre: subjective quality of sounding different at the same pitch and loudness (flute v. bassoon).
– Constructed from differential energies (loudness) at different fundamental and harmonic frequencies, and from differences in the number of harmonics.

[Figure: timbre as differences in the number and relative strength of harmonics; spectra from 400 to 2400 Hz for a guitar (many harmonics) and a flute (few harmonics, only one actually)]

Ear: overall view
Outer Ear
• pinna(e)
• auditory canal: amplifies sounds in the 2-5 kHz range
• tympanic membrane (eardrum)
Middle Ear
• Ossicles: malleus (hammer); incus (anvil); stapes (stirrup)
• Oval window
• Middle ear muscles: at high intensities they dampen the vibration of the ossicles
Inner Ear
• Cochlea

Redrawn by permission from Human Information Processing, by P. H. Lindsay and D. A. Norman, 2nd ed. 1977, pg 229. Copyright ©1977 by Academic Press Inc.
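The additive synthesis and Fourier analysis slides can be illustrated with a minimal sketch. It builds a complex tone from the 440, 880, 1320, and 1760 Hz components shown in the spectrum, then recovers them with a plain discrete Fourier transform. The sample rate, signal length, and relative amplitudes are assumptions chosen for illustration (they are not given in the slides), and the function names are hypothetical.

```python
import cmath
import math

SAMPLE_RATE = 7040   # Hz; assumed, chosen so every component lands on an exact DFT bin
N_SAMPLES = 704      # 0.1 s of signal, which gives 10 Hz spacing between DFT bins

# Component frequencies come from the spectrum slide; the amplitudes are illustrative assumptions.
HARMONICS = {440: 1.0, 880: 0.5, 1320: 0.25, 1760: 0.125}


def synthesize(harmonics, sample_rate=SAMPLE_RATE, n=N_SAMPLES):
    """Additive synthesis: sum one sine wave per harmonic."""
    return [
        sum(amp * math.sin(2 * math.pi * freq * i / sample_rate)
            for freq, amp in harmonics.items())
        for i in range(n)
    ]


def amplitude_spectrum(signal, sample_rate=SAMPLE_RATE):
    """Naive DFT: map each bin frequency (Hz) below Nyquist to its sine amplitude."""
    n = len(signal)
    spectrum = {}
    for k in range(1, n // 2):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(signal))
        spectrum[k * sample_rate / n] = 2 * abs(coeff) / n
    return spectrum


def find_peaks(spectrum, floor=0.01):
    """Return the frequencies whose amplitude exceeds a small floor."""
    return sorted(freq for freq, amp in spectrum.items() if amp > floor)


wave = synthesize(HARMONICS)
spectrum = amplitude_spectrum(wave)
peaks = find_peaks(spectrum)
fundamental = min(peaks)  # the lowest component is the fundamental frequency
print(peaks, fundamental)
```

Changing the amplitudes in `HARMONICS` while keeping the same frequencies leaves the fundamental unchanged but alters the relative strength of the harmonics, which is the sense in which the slides describe timbre.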
Outer ear
• Airborne vibratory processing: helps capture, direct, and modulate sound.
• The auditory canal amplifies sounds through resonance (combining reflected sound in the canal with newly entering sound).
• The tympanic membrane vibrates.

Middle ear
• Mechanical processing: vibration from airborne processes translates into movement of bones and muscles, causing a second level of vibrations on the oval window.

Inner ear
• Liquid medium.
• Neural processing: translation of vibrations into neural signals.

Copyright © 2002 Wadsworth Group. Wadsworth is an imprint of the Wadsworth Group, a division of Thomson Learning. Figure 10.14, page 345.

Middle ear ossicles
• The ossicles concentrate the vibration of the tympanic membrane on the smaller surface area of the stapes, which increases the pressure per unit area (factor of 17).
• The ossicles act as a lever, thereby increasing the vibration by a factor of 1.3.

The inner ear is responsible for both:
• Balance (vestibular system)
• Transduction for hearing (cochlea)

The outer and middle ear are purely mechanical. Neural transduction and analysis begin at the inner ear.
• The cochlea is the site at which vibrations of the stapes and inner ear fluid are transduced to neural responses in fibers of the auditory nerve.

The cochlea is a coiled tube that resembles a snail shell
• In humans, the cochlea coils about 2.5 times.
• 2 mm diameter
• 35 mm long
• A cross-section through the coiled cochlear tube reveals that the inside is divided into three compartments.

The receptor cells are located in the Scala Media, as part of the Organ of Corti.
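The two middle-ear factors quoted above (17 from the area difference, 1.3 from the lever action) can be combined and expressed in decibels. The sketch below assumes that both factors act as pressure ratios and multiply, and it uses the standard relation dB = 20·log10(pressure ratio); the same helper also converts the 1/10,000,000 amplitude ratio between the softest and loudest sounds mentioned earlier. The function and variable names are hypothetical.

```python
import math


def ratio_to_db(pressure_ratio):
    """Convert a ratio of sound pressures (amplitudes) to decibels."""
    return 20 * math.log10(pressure_ratio)


AREA_GAIN = 17     # tympanic membrane vs. stapes area, from the slide
LEVER_GAIN = 1.3   # lever action of the ossicles, from the slide

# Assumption: the two gains are independent pressure ratios, so they multiply.
middle_ear_gain = AREA_GAIN * LEVER_GAIN
middle_ear_gain_db = ratio_to_db(middle_ear_gain)

# Loudest sounds are about 10,000,000 times the amplitude of the softest.
dynamic_range_db = ratio_to_db(10_000_000)

print(f"middle ear gain: x{middle_ear_gain:.1f} = {middle_ear_gain_db:.1f} dB")
print(f"audible amplitude range: {dynamic_range_db:.0f} dB")
```

Under these assumptions the middle ear contributes a gain of roughly 22 times, a little under 27 dB, and the amplitude range of hearing spans 140 dB, which matches the pain threshold entry in the SPL list.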
Organ of Corti
The Organ of Corti is located on the basilar membrane in the Scala Media.
• Three rows of outer hair cells
• One row of inner hair cells: the main receptor cells involved in hearing
• The tectorial membrane covers the tops of the hair cells.
• The basilar membrane sits underneath the Organ of Corti (underneath the hair cells).

Hair cells
• 3,500 inner hair cells
• 12,000 outer hair cells
• Hair cells are connected to fibres which leave the cochlea as the auditory nerve.
• Inner hair cells diverge: each connects to 8-30 ganglion cells (auditory nerve fibres).
• Outer hair cells converge: each auditory nerve fibre is connected to many outer hair cells.
• Hair cells have cilia; when they bend, the transduction process starts.

Innervation of hair cells
• Each inner hair cell is contacted by many spiral ganglion cells.
• A single spiral ganglion cell contacts many outer hair cells.

Transduction in auditory receptor cells
• The tops of the cilia extend up to the tectorial membrane.
• The base of each hair cell is contacted by one or more spiral ganglion cells.
• The stapes vibrates, so the oval window vibrates.
• This causes pressure changes in the fluid.
• The basilar membrane vibrates at the same frequency as the stapes.
• The cilia of the hair cells are embedded in the tectorial membrane, so when the basilar membrane vibrates, the cilia bend.
• Depolarization: release of neurotransmitter. Hyperpolarization: termination of neurotransmitter release.

The traveling wave of sound transduction across the basilar membrane
Movement of the cilia results in transduction
• When pressure at the oval window increases, the basilar membrane moves downward. When pressure decreases, it moves upward. These movements drag the stereocilia in opposite directions.

The auditory nerve and central auditory system
• The auditory nerve projects in an organized way so that the map of frequency is maintained at every level of the central auditory system.
Tonotopic processing
• The location of the peak of the traveling-wave envelope is frequency dependent.
• High frequencies peak close to the cochlear base.
• Low frequencies peak close to the cochlear apex.

1. Place code (von Bekesy)
• The basilar membrane is narrower at the base than at the apex.
• It is stiffer at the base.
• When a vibration is presented to the basilar membrane, a traveling wave results.

[Figure: traveling-wave envelope along the basilar membrane, with its peak between base and apex]

Tonotopic map of the cochlea
[Figure: map from base to apex; numbers indicate fundamental frequency (and pitch)]

Hair cells along the basilar membrane are sharply tuned to a best or characteristic frequency.
[Figure: frequency tuning curve from a single-unit recording; threshold (dB SPL) versus frequency (kHz)]
• Specific hair cell locations respond to specific frequencies across the basilar membrane.

How do we code for frequency (pitch)?
1. Place coding: neurons at different places along the basilar membrane code different frequencies.
[Figure: oval window and basilar membrane, with places labelled 2,000 Hz, 1,000 Hz, and 500 Hz]
2. Rate coding: the frequency (and amplitude) of the sound is reflected in the firing rates of neuron(s).

Sound complexity is reflected in (i) the number of peaks in the traveling wave, and (ii) how much “side to side” activation occurs.

Basilar membrane’s response to complex tones
• The auditory system does break down complex tones into simpler components (Ohm's acoustic law).

The Central Auditory System
Where do we go after the cochlea?
[Figure: central auditory pathways; labels: “What?” information, Nuclei of Lateral Lemniscus, “Where?” information]

The cochlear nucleus is the first stage in the central auditory pathway
• Each auditory nerve fiber diverges to three divisions of the cochlear nucleus. This means that there are three tonotopic maps in the cochlear nucleus. Each map provides a separate set of pathways and neural code patterns.
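The tonotopic place code described in this section (high frequencies near the base, low frequencies near the apex) can be sketched numerically. The slides give no formula, so the example below borrows Greenwood's frequency-position function for the human cochlea as an outside assumption, with commonly quoted constants (165.4, 2.1, 0.88); only the 35 mm length comes from the slides. The function names are hypothetical, and the output is an approximation, not a measurement.

```python
import math

COCHLEA_LENGTH_MM = 35.0  # cochlear length quoted in the slides

# Assumed constants of Greenwood's function for the human cochlea (not from the slides).
A_HZ, SLOPE, K = 165.4, 2.1, 0.88


def characteristic_frequency(distance_from_apex_mm):
    """Approximate characteristic frequency (Hz) at a place on the basilar membrane."""
    x = distance_from_apex_mm / COCHLEA_LENGTH_MM  # 0 at the apex, 1 at the base
    return A_HZ * (10 ** (SLOPE * x) - K)


def place_for_frequency(freq_hz):
    """Inverse mapping: distance from the apex (mm) where freq_hz peaks."""
    x = math.log10(freq_hz / A_HZ + K) / SLOPE
    return x * COCHLEA_LENGTH_MM


for freq in (500, 1000, 2000):
    print(f"{freq} Hz peaks about {place_for_frequency(freq):.1f} mm from the apex")
```

In this approximation, each doubling of frequency above roughly 1 kHz moves the peak a nearly constant distance toward the base, which is consistent with the ordering of the 500, 1,000, and 2,000 Hz places in the place-coding figure.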
Each division of the cochlear nucleus contains specialized cell types
• Different cell types receive different types of synaptic endings, have different intrinsic properties, and respond differently to the same input.

Changes in response pattern
• The stereotyped auditory nerve discharge is converted to many different temporal response patterns.
• Each pattern emphasizes different information.

Neurons in the cochlear nucleus (and at higher levels) transform the incoming signal in many different ways.
• The auditory nerve input is strictly excitatory (glutamate). Some neurons in the cochlear nucleus are inhibitory (GABA or glycine).
• Auditory nerve discharge patterns are converted to many other types of temporal pattern.
• There is a progressive increase in the range of response latencies.

Increase in range of latencies
• Each synapse adds approximately 1 ms latency.
• Any integrative process that requires time adds latency.

The Central Auditory System
• There are many parallel pathways in the auditory brainstem.
• The binaural system receives input from both ears.
• The monaural system receives input from one ear only.
• The first place that binaural information can merge is the Superior Olivary Nuclei.

Monaural pathways (“What?”)
• Information from each ear is distributed to parallel pathways in the cochlear nucleus and, from there, to the contralateral lateral lemniscus.

Binaural pathways (“Where?”)
• The superior olivary complex receives input from both cochlear nuclei and compares the input to the right and left ears.
Auditory information from receptors to Auditory Association Areas
• Each set of auditory pathways has a specialized function: more analysis and transduction.
• Route of auditory impulses from the receptors in the ear to the auditory cortex: “SONIC MG”, then “A1”
– SON (Superior Olivary Nucleus)
– IC (Inferior Colliculus)
– MG (Medial Geniculate Nucleus)

Auditory areas in monkey cortex
• Located in the lobe between (and under) the Lateral Sulcus (LS) and the Superior Temporal Sulcus (STS).
• Information flow within the auditory cortex: received in the core area (A1), then the secondary area, then the association area.
• Outside the primary auditory area are other specialized areas for speech, language, and probably other purposes.
• Unlike vision, there is much less overlap of the left and right hemispheres: this is called “hemispheric lateralization”.

Cortical representation of frequency: tonotopic representation
• A1 has a columnar organization: neurons in the same column prefer the same characteristic frequency.

Combination and integration of sound happens “above” the cochlea (at the level of the cortex?): “periodicity pitch”
• Pitch perception is significantly influenced by the fundamental frequency.
• Pitch can be processed at the level of the cochlea (by analyzing different “peak waves” on the basilar membrane).
• However, what happens if you:
– take out the fundamental frequency (no 400 Hz)
– only play some harmonics in one ear (800, 1600)
– and other harmonics in the other ear (1200, 2000)
• Effect of the “missing fundamental”: we hear the pitch (400 Hz) from only hearing the harmonics.
• This is called “periodicity pitch”.
• It can’t be explained by pitch processing at the cochlea (neither cochlea has all the harmonic information).
• It could be happening at the level of the Superior Olivary Nucleus or the Nuclei of the Lateral Lemniscus (both have the first combination of information from both ears).
• However, patients with damage to a specific area of the auditory cortex of the right hemisphere can no longer process periodicity pitch.
– Hence, the “merged” information may be processed at the level of the cortex.
– “Hemispheric lateralization”: differences in what the left and right hemispheres process (i.e., left: language; right: non-language sound).

Cortical response to sound: Rhesus monkey calls
• Cells in the non-associative area (i.e., beyond A1 in the right temporal lobe) of the monkey cortex respond poorly to pure tones, and respond best to “noisy” sounds (lots of fundamental and harmonic frequencies combined).
• Some cells respond “best” to monkey calls (the same left temporal lobe area that is responsive to language in humans).
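The missing-fundamental example above (800 and 1600 Hz in one ear, 1200 and 2000 Hz in the other, no 400 Hz) can be checked with a small sketch. It treats the perceived pitch as the greatest common divisor of the combined harmonics, which is a deliberate simplification of periodicity pitch rather than a model of the auditory system, and it verifies that the summed waveform repeats every 1/400 s. The sample rate and function names are assumptions made for illustration.

```python
import math
from functools import reduce

LEFT_EAR_HZ = [800, 1600]    # harmonics played to one ear (from the slide)
RIGHT_EAR_HZ = [1200, 2000]  # harmonics played to the other ear (from the slide)
ALL_HARMONICS_HZ = LEFT_EAR_HZ + RIGHT_EAR_HZ


def implied_fundamental(freqs_hz):
    """Simplified periodicity pitch: greatest common divisor of the harmonics."""
    return reduce(math.gcd, freqs_hz)


def repeats_with_period(freqs_hz, period_s, sample_rate=48000, n=480, tol=1e-9):
    """Check whether the sum of equal-amplitude sines repeats after period_s seconds."""
    shift = round(period_s * sample_rate)  # period expressed in whole samples

    def sample(i):
        return sum(math.sin(2 * math.pi * f * i / sample_rate) for f in freqs_hz)

    return all(abs(sample(i) - sample(i + shift)) < tol for i in range(n))


pitch_hz = implied_fundamental(ALL_HARMONICS_HZ)
print(pitch_hz, pitch_hz in ALL_HARMONICS_HZ)
print(repeats_with_period(ALL_HARMONICS_HZ, 1 / pitch_hz))
```

The combined signal contains no energy at 400 Hz, yet its waveform repeats 400 times per second; a shorter candidate period such as 1/800 s fails the same check because the 1200 and 2000 Hz components do not complete a whole number of cycles in that time.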