Chapter 2

Defn 1. (p. 65) Let V and W be vector spaces (over F). We call a function T : V → W a linear transformation from V to W if, for all x, y ∈ V and c ∈ F, we have

(a) T (x + y) = T (x) + T (y) and

(b) T (cx) = cT (x).
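As a quick illustration of Defn 1 (added here, not part of the original notes), the following sketch checks both conditions for one map. The map T : R² → R² given by T(a1, a2) = (2a1 + a2, a1) is an assumption chosen only for the example; write x = (x1, x2) and y = (y1, y2).

```latex
\begin{align*}
T(x+y) &= T(x_1+y_1,\; x_2+y_2) = \bigl(2(x_1+y_1)+(x_2+y_2),\; x_1+y_1\bigr) \\
       &= (2x_1+x_2,\; x_1) + (2y_1+y_2,\; y_1) = T(x)+T(y), \\
T(cx)  &= (2cx_1+cx_2,\; cx_1) = c\,(2x_1+x_2,\; x_1) = cT(x).
\end{align*}
```

By contrast, the map S(a1, a2) = (a1 + 1, a2) is not linear: S(2 · (0, 0)) = (1, 0), while 2 · S(0, 0) = (2, 0), so condition (b) fails.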

Fact 1. (p. 65)

1. If T is linear, then T(0) = 0. Note that the first 0 is in V and the second one is in W.

2. T is linear if and only if T(cx + y) = cT(x) + T(y) for all x, y ∈ V and c ∈ F.

3. If T is linear, then T(x − y) = T(x) − T(y) for all x, y ∈ V.

4. T is linear if and only if, for x1, x2, ..., xn ∈ V and a1, a2, ..., an ∈ F, we have

\[ T\left(\sum_{i=1}^{n} a_i x_i\right) = \sum_{i=1}^{n} a_i T(x_i). \]

Proof.

1. We know 0 · x = 0 for all vectors x. Hence, for any x ∈ V, 0 = 0 · T(x) = T(0 · x) = T(0).

2. Assume T is linear, c ∈ F, and x, y ∈ V. Then T(cx) = cT(x) and T(cx + y) = T(cx) + T(y) = cT(x) + T(y).

Conversely, assume T(cx + y) = cT(x) + T(y) for all x, y ∈ V and c ∈ F. Taking c = 1 and x = y = 0 gives T(0) = T(0) + T(0), so T(0) = 0. Let a ∈ F and x, y ∈ V. Then T(ax) = T(ax + 0) = aT(x) + T(0) = aT(x) and T(x + y) = T(1 · x + y) = 1 · T(x) + T(y) = T(x) + T(y).

3. To start with, the definition of subtraction is x − y = x + (−y). We first show that (−1) · x = −x for all x ∈ V. Since 0 · x = 0, we have

(1 + (−1))x = 0

1 · x + (−1) · x = 0

x + (−1) · x = 0.

Since the additive inverse is unique, we conclude that (−1) · x = −x.

Assume T is linear. Then T(x − y) = T(x + (−1) · y) = T(x) + (−1)T(y) = T(x) + (−T(y)) = T(x) − T(y) for all x, y ∈ V.

4. (⇐) Let n = 2, a1 = c, and a2 = 1. We have that T(cx1 + x2) = cT(x1) + T(x2) for all x1, x2 ∈ V and c ∈ F, so T is linear by part 2.

(⇒) This is done by induction. Using the definition of linear transformation, we

get the base case (n = 2). That is,

T (a1 x1 + a2 x2 ) = T (a1 x1 ) + T (a2 x2 ) = a1 T (x1 ) + a2 T (x2 ).

Now assume that n ≥ 2 and that, for all x1, x2, ..., xn ∈ V and a1, a2, ..., an ∈ F, we have

\[ T\left(\sum_{i=1}^{n} a_i x_i\right) = \sum_{i=1}^{n} a_i T(x_i). \]

Then for all x1, x2, ..., xn, xn+1 ∈ V and a1, a2, ..., an, an+1 ∈ F, we have

\begin{align*}
T\left(\sum_{i=1}^{n+1} a_i x_i\right) &= T\left(\sum_{i=1}^{n} a_i x_i + a_{n+1} x_{n+1}\right) \\
&= T\left(\sum_{i=1}^{n} a_i x_i\right) + T(a_{n+1} x_{n+1}) \\
&= \sum_{i=1}^{n} a_i T(x_i) + a_{n+1} T(x_{n+1}) \\
&= \sum_{i=1}^{n+1} a_i T(x_i).
\end{align*}

Defn 2. (p. 67) Let V and W be vector spaces, and let T : V → W be linear. We define the

null space (or kernel) N (T ) of T to be the set of all vectors x in V such that T (x) = 0;

N (T ) = {x ∈ V : T (x) = 0}.

We define the range (or image) R(T) of T to be the subset of W consisting of all images

(under T ) of vectors in V ; that is, R(T ) = {T (x) : x ∈ V }.

Theorem 2.1. Let V and W be vector spaces and T : V → W be linear. Then N (T ) and

R(T ) are subspaces of V and W , respectively.

Proof. To prove that N (T ) is a subspace of V , we let x, y ∈ N (T ) and c ∈ F . We will show

that 0 ∈ N (T ) and cx + y ∈ N (T ). We know that T (0) = 0. So, 0 ∈ N (T ). We have that

T(x) = 0 and T(y) = 0, so T(cx + y) = c · T(x) + T(y) = c · 0 + 0 = c · 0 = 0, and hence cx + y ∈ N(T). (The last equality is a fact that can be proved from the axioms of a vector space.)

To prove that R(T ) is a subspace of W , assume T (x), T (y) ∈ R(T ), for some x, y ∈ V and

c ∈ F . Then, cT (x)+T (y) = T (cx+y), where cx+y ∈ V , since T is a linear transformation

and so, cT (x) + T (y) ∈ R(T ). Also, since 0 ∈ V and 0 = T (0), we know that 0 ∈ R(T ).

Let A be a set of vectors in V . Then T (A) = {T (x) : x ∈ A}.

Theorem 2.2. Let V and W be vector spaces, and let T : V → W be linear. If β =

{v1 , v2 , . . . , vn } is a basis for V , then R(T ) = span(T (β)).

Proof. T (β) = {T (v1 ), T (v2 ), . . . , T (vn )}. We will show R(T ) = span(T (β)). We showed

that R(T ) is a subspace of W . We know that T (β) ⊆ R(T ). So, by Theorem 1.5,

span(T (β)) ⊆ R(T ).

Let x ∈ R(T). Then there is some x0 ∈ V such that T(x0) = x. Since β is a basis of V, there exist scalars a1, a2, ..., an such that x0 = a1 v1 + a2 v2 + · · · + an vn. Then

x = T(x0)

= T(a1 v1 + a2 v2 + · · · + an vn)

= a1 T(v1) + a2 T(v2) + · · · + an T(vn),

which is in span(T(β)).

Thus R(T) ⊆ span(T(β)).

Defn 3. (p. 69) Let V and W be vector spaces, and let T : V → W be linear. If N (T ) and

R(T ) are finite-dimensional, then we define the nullity of T , denoted nullity(T ), and the

rank of T , denoted rank(T ), to be the dimensions of N (T ) and R(T ), respectively.

Theorem 2.3. (Dimension Theorem). Let V and W be vector spaces, and let T : V → W

be linear. If V is finite-dimensional, then nullity(T )+ rank(T ) = dim(V ).

Proof. Suppose nullity(T) = k and β = {n1, ..., nk} is a basis of N(T). Since β is linearly independent in V, β extends to a basis of V,

β′ = {n1, ..., nk, xk+1, ..., xn},

where, by Corollary 2c to Theorem 1.10, xi ∉ N(T) for all i. Notice that possibly β = ∅.

It suffices to show that

β″ = {T(xk+1), ..., T(xn)}

is a basis for R(T), since then rank(T) = n − k and nullity(T) + rank(T) = k + (n − k) = n = dim(V).

By Theorem 2.2, T(β′) spans R(T). But T(β′) = {0} ∪ β″ when k ≥ 1 (and T(β′) = β″ when k = 0), and adjoining 0 does not change a span. So β″ spans R(T). Suppose β″ is not linearly independent. Then there exist ck+1, ck+2, ..., cn ∈ F, not all 0, such that

0 = ck+1 T(xk+1) + · · · + cn T(xn).

Then

0 = T(ck+1 xk+1 + · · · + cn xn)

and

ck+1 xk+1 + · · · + cn xn ∈ N(T).

But then

ck+1 xk+1 + · · · + cn xn = a1 n1 + a2 n2 + · · · + ak nk

for some scalars a1, a2, ..., ak, since β = {n1, ..., nk} is a basis of N(T). Then

0 = a1 n1 + a2 n2 + · · · + ak nk − ck+1 xk+1 − · · · − cn xn.

The ci's are not all zero, so this contradicts that β′ is a linearly independent set.
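The construction in this proof can be traced on a small case. The following is a sketch added for illustration; the map T : R³ → R² with T(a1, a2, a3) = (a1 − a2, 2a3) is an assumption chosen for the example, not taken from the notes.

```latex
\begin{align*}
N(T) &= \{(a_1,a_2,a_3) : a_1 - a_2 = 0,\ 2a_3 = 0\} = \operatorname{span}\{(1,1,0)\},
  & \operatorname{nullity}(T) &= 1, \\
\beta' &= \{(1,1,0),\ (1,0,0),\ (0,0,1)\} \quad \text{(a basis of } \mathbb{R}^3 \text{ extending the basis of } N(T)\text{)}, \\
\beta'' &= \{T(1,0,0),\ T(0,0,1)\} = \{(1,0),\ (0,2)\},
  & \operatorname{rank}(T) &= 2, \\
\operatorname{nullity}(T) + \operatorname{rank}(T) &= 1 + 2 = 3 = \dim(\mathbb{R}^3).
\end{align*}
```

Here β″ is linearly independent and spans R², so R(T) = R², in agreement with the theorem.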

Theorem 2.4. Let V and W be vector spaces, and let T : V → W be linear. Then T is

one-to-one if and only if N (T ) = {0}.

Proof. Suppose T is one-to-one. Let x ∈ N(T). Then T(x) = 0, and also T(0) = 0. T being one-to-one implies that x = 0. Therefore, N(T) ⊆ {0}. It is clear that {0} ⊆ N(T). Therefore, N(T) = {0}.

Suppose N(T) = {0}. Suppose that T(x) = T(y) for some x, y ∈ V. We have that T(x) − T(y) = 0, so T(x − y) = 0 by Fact 1. Since N(T) = {0}, it must be that x − y = 0. This implies that −y is the additive inverse of x and x is the additive inverse of −y. But also y is the additive inverse of −y. By uniqueness of additive inverses we have that x = y. Thus, T is one-to-one.

Theorem 2.5. Let V and W be vector spaces of equal (finite) dimension, and let T : V → W

be linear. Then the following are equivalent.

(a) T is one-to-one.

(b) T is onto.

(c) rank(T ) = dim(V ).

Proof. Assume dim(V ) = dim(W ) = n.

(a) ⇒ (b) : To prove T is onto, we show that R(T ) = W . Since T is one-to-one, we know

N(T ) = {0} and so nullity(T ) = 0. By Theorem 2.3, nullity(T ) + rank(T ) = dim(V ). So we

have dim(W ) = n = rank(T ). By Theorem 1.11, R(T ) = W .

(b) ⇒ (c) : T is onto implies that R(T ) = W . So rank(T ) = dim(W ) = dim(V ).

(c) ⇒ (a) : If we assume rank(T) = dim(V), then by Theorem 2.3 again we have nullity(T) = 0. But then N(T) = {0}, and by Theorem 2.4, T is one-to-one.

Theorem 2.6. Let V and W be vector spaces over F , and suppose that β = {v1 , v2 , . . . , vn }

is a basis for V . For w1 , w2 , . . . , wn in W , there exists exactly one linear transformation

T : V → W such that T (vi ) = wi for i = 1, 2, . . . , n.

Proof. Since β is a basis, every vector x ∈ V can be expressed uniquely as a linear combination x = a1 v1 + a2 v2 + · · · + an vn of vectors in β. We define T : V → W by T(x) = a1 w1 + a2 w2 + · · · + an wn. This is well defined because the scalars ai are unique, and in particular T(vi) = wi for i = 1, 2, ..., n.

We will show that T is linear. Let c ∈ F and x, y ∈ V . Assume x = a1 v1 +a2 v2 +· · ·+an vn

and y = b1 v1 + b2 v2 + · · · + bn vn . Then

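\begin{align*}
cx + y &= \sum_{i=1}^{n} (c a_i + b_i) v_i, \\
T(cx + y) &= T\left(\sum_{i=1}^{n} (c a_i + b_i) v_i\right) \tag{1} \\
&= \sum_{i=1}^{n} (c a_i + b_i) w_i \\
&= c \sum_{i=1}^{n} a_i w_i + \sum_{i=1}^{n} b_i w_i \\
&= cT(x) + T(y).
\end{align*}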

Thus, T is linear.

To show it is unique, we need to show that if U : V → W is a linear transformation such that U(vi) = wi for all i ∈ [n] = {1, 2, ..., n}, then U(x) = T(x) for all x ∈ V.

Assume x = a1 v1 + a2 v2 + · · · + an vn. Then, since U is linear,

T(x) = a1 w1 + a2 w2 + · · · + an wn

= a1 U(v1) + a2 U(v2) + · · · + an U(vn)

= U(x).

Cor 1. Let V and W be vector spaces, and suppose that V has a finite basis {v1 , v2 , . . . , vn }.

If U, T : V → W are linear and U (vi ) = T (vi ) for i = 1, 2, . . . , n, then U = T .
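To see how Cor 1 is used, here is a small worked check (an illustration added here; the specific maps are assumptions chosen for the example). Let T : R² → R² be T(a1, a2) = (2a2 − a1, 3a1), and suppose U : R² → R² is linear with U(1, 2) = (3, 3) and U(1, 1) = (1, 3). Since {(1, 2), (1, 1)} is a basis for R², it is enough to compare U and T on these two vectors.

```latex
\begin{align*}
T(1,2) &= (2\cdot 2 - 1,\; 3\cdot 1) = (3,3) = U(1,2), \\
T(1,1) &= (2\cdot 1 - 1,\; 3\cdot 1) = (1,3) = U(1,1).
\end{align*}
```

Both maps are linear and agree on a basis, so U = T by Cor 1.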

Defn 4. (p. 80) Given a finite dimensional vector space V , an ordered basis is a basis

where the vectors are listed in a particular order, indicated by subscripts. For F n , we have

{e1 , e2 , . . . , en } where ei has a 1 in position i and zeros elsewhere. This is known as the

standard ordered basis and ei is the ith characteristic vector. Let β = {u1 , u2 , . . . , un } be an

ordered basis for a finite-dimensional vector space V. For x ∈ V, let a1, a2, ..., an be the unique scalars such that

\[ x = \sum_{i=1}^{n} a_i u_i. \]

We define the coordinate vector of x relative to β, denoted [x]β, by

\[ [x]_\beta = \begin{pmatrix} a_1 \\ a_2 \\ \vdots \\ a_n \end{pmatrix}. \]

Defn 5. (p. 80) Let V and W be finite-dimensional vector spaces with ordered bases β = {v1, v2, ..., vn} and γ = {w1, w2, ..., wm}, respectively. Let T : V → W be linear. Then for each j, 1 ≤ j ≤ n, there exist unique scalars ti,j ∈ F, 1 ≤ i ≤ m, such that

\[ T(v_j) = \sum_{i=1}^{m} t_{i,j} w_i, \qquad 1 \le j \le n, \]

so that

\[ [T(v_j)]_\gamma = \begin{pmatrix} t_{1,j} \\ t_{2,j} \\ \vdots \\ t_{m,j} \end{pmatrix}. \]

We call the m × n matrix A defined by Ai,j = ti,j the matrix representation of T in the ordered bases β and γ and write A = [T]_β^γ (subscript β, superscript γ). If V = W and β = γ, then we write A = [T]β.
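A short worked example may help fix the convention that the jth column of the matrix is [T(vj)]γ. This is a sketch under assumed data: the map T : R² → R³ with T(a1, a2) = (a1 + 3a2, 0, 2a1 − 4a2) and the standard ordered bases β = {e1, e2} of R² and γ = {e1, e2, e3} of R³ are chosen here for illustration.

```latex
\begin{align*}
T(1,0) &= (1,0,2) = 1e_1 + 0e_2 + 2e_3, \\
T(0,1) &= (3,0,-4) = 3e_1 + 0e_2 - 4e_3, \\
[T]_\beta^\gamma &= \begin{pmatrix} 1 & 3 \\ 0 & 0 \\ 2 & -4 \end{pmatrix}.
\end{align*}
```

The order of the basis matters: with γ′ = {e3, e2, e1} in place of γ, the rows are permuted and the matrix becomes the one with rows (2, −4), (0, 0), (1, 3).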

Fact 2. Let β be an ordered basis of V. For x1, x2, ..., xn ∈ V and a1, a2, ..., an ∈ F,

[a1 x1 + a2 x2 + · · · + an xn]β = a1 [x1]β + a2 [x2]β + · · · + an [xn]β.

Proof. Let β = {v1, v2, ..., vn}. Say xj = bj,1 v1 + bj,2 v2 + · · · + bj,n vn.

The LHS:

\begin{align*}
a_1 x_1 + a_2 x_2 + \cdots + a_n x_n
&= a_1 (b_{1,1} v_1 + b_{1,2} v_2 + \cdots + b_{1,n} v_n) \\
&\quad + a_2 (b_{2,1} v_1 + b_{2,2} v_2 + \cdots + b_{2,n} v_n) \\
&\quad + \cdots \\
&\quad + a_n (b_{n,1} v_1 + b_{n,2} v_2 + \cdots + b_{n,n} v_n) \\
&= (a_1 b_{1,1} + a_2 b_{2,1} + \cdots + a_n b_{n,1}) v_1 \\
&\quad + (a_1 b_{1,2} + a_2 b_{2,2} + \cdots + a_n b_{n,2}) v_2 \\
&\quad + \cdots \\
&\quad + (a_1 b_{1,n} + a_2 b_{2,n} + \cdots + a_n b_{n,n}) v_n.
\end{align*}

The ith position of the LHS is the coefficient ci of vi in

a1 x1 + a2 x2 + · · · + an xn = c1 v1 + c2 v2 + · · · + cn vn,

and so ci = a1 b1,i + a2 b2,i + · · · + an bn,i.

The RHS: The ith position of the RHS is the sum of the ith positions of the vectors aj [xj]β. The ith position of aj [xj]β is the coefficient of vi in aj xj = aj (bj,1 v1 + bj,2 v2 + · · · + bj,n vn), which is aj bj,i. So the ith position of the RHS is a1 b1,i + a2 b2,i + · · · + an bn,i, which agrees with the LHS.

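Fact 2. (a) Let V and W be finite-dimensional vector spaces with ordered bases β and γ, respectively, and let T : V → W be linear. Then for all x ∈ V,

[T]_β^γ [x]β = [T(x)]γ.

Proof. Let β = {v1, v2, ..., vn} and let m = dim(W). Let x = a1 v1 + a2 v2 + · · · + an vn. Then

\begin{align*}
[T(x)]_\gamma &= [T(a_1 v_1 + a_2 v_2 + \cdots + a_n v_n)]_\gamma \\
&= [a_1 T(v_1) + a_2 T(v_2) + \cdots + a_n T(v_n)]_\gamma \\
&= a_1 [T(v_1)]_\gamma + a_2 [T(v_2)]_\gamma + \cdots + a_n [T(v_n)]_\gamma && \text{by Fact 2} \\
&= \begin{pmatrix} t_{1,1} & \cdots & t_{1,n} \\ \vdots & & \vdots \\ t_{m,1} & \cdots & t_{m,n} \end{pmatrix}
   \begin{pmatrix} a_1 \\ \vdots \\ a_n \end{pmatrix} \\
&= [T]_\beta^\gamma [x]_\beta.
\end{align*}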

Fact 3. Let V be a vector space of dimension n with basis β. {x1 , x2 , . . . , xk } is linearly

independent in V if and only if {[x1 ]β , [x2 ]β , . . . , [xk ]β } is linearly independent in F n .

Proof. Each statement below is equivalent to the next:

{x1, x2, ..., xk} is linearly dependent

⇐⇒ ∃ a1, a2, ..., ak, not all zero, such that a1 x1 + a2 x2 + · · · + ak xk = 0

⇐⇒ ∃ a1, a2, ..., ak, not all zero, such that [a1 x1 + a2 x2 + · · · + ak xk]β = [0]β = 0, since a vector of V is 0 (∈ V) exactly when its coordinate vector relative to β is 0 (∈ Fⁿ)

⇐⇒ ∃ a1, a2, ..., ak, not all zero, such that a1 [x1]β + a2 [x2]β + · · · + ak [xk]β = 0, by Fact 2

⇐⇒ {[x1]β, [x2]β, ..., [xk]β} is a linearly dependent set.
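As an illustration of Fact 3 (an added sketch; the space and vectors are assumptions chosen for the example), take V to be the real polynomials of degree at most 2 with ordered basis β = {1, x, x²}, and test whether {1 + x, x + x², 1 + x²} is linearly independent by passing to coordinate vectors in R³.

```latex
\begin{align*}
[1+x]_\beta = \begin{pmatrix} 1 \\ 1 \\ 0 \end{pmatrix}, \quad
[x+x^2]_\beta &= \begin{pmatrix} 0 \\ 1 \\ 1 \end{pmatrix}, \quad
[1+x^2]_\beta = \begin{pmatrix} 1 \\ 0 \\ 1 \end{pmatrix}, \\
a\begin{pmatrix} 1 \\ 1 \\ 0 \end{pmatrix} + b\begin{pmatrix} 0 \\ 1 \\ 1 \end{pmatrix} + c\begin{pmatrix} 1 \\ 0 \\ 1 \end{pmatrix} = 0
&\iff a + c = 0,\quad a + b = 0,\quad b + c = 0.
\end{align*}
```

The first two equations give b = c, and then the third gives 2b = 0, so a = b = c = 0 over R. The coordinate vectors are linearly independent in R³, hence so are the three polynomials in V.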

Defn 6. (p. 99) Let V and W be vector spaces, and let T : V → W be linear. A function

U : W → V is said to be an inverse of T if T U = IW and U T = IV . If T has an inverse,

then T is said to be invertible.

Fact 4. Let V and W be vector spaces and let T : V → W. If T is invertible, then the inverse of T is unique.

Proof. Suppose U : W → V is such that T U = IW and U T = IV and X : W → V is such

that T X = IW and XT = IV .

To show U = X, we must show that ∀w ∈ W , U (w) = X(w). We know IW (w) = w and

IV (X(w)) = X(w).

U (w) = U (IW (w)) = U T X(w) = IV X(w) = X(w).

Defn 7. We denote the inverse of T by T −1 .

Theorem 2.17. Let V and W be vector spaces, and let T : V → W be linear and invertible.

Then T −1 : W → V is linear.

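Proof. By the definition of invertible, we have T(T⁻¹(w)) = w for all w ∈ W. Let x, y ∈ W and c ∈ F. Then

\begin{align}
T^{-1}(cx + y) &= T^{-1}\bigl(cT(T^{-1}(x)) + T(T^{-1}(y))\bigr) \tag{2} \\
&= T^{-1}\bigl(T(cT^{-1}(x) + T^{-1}(y))\bigr) \tag{3} \\
&= T^{-1}T\bigl(cT^{-1}(x) + T^{-1}(y)\bigr) \tag{4} \\
&= cT^{-1}(x) + T^{-1}(y). \tag{5}
\end{align}

Line (3) uses the linearity of T, and line (5) uses T⁻¹T = IV.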

Fact 5. If T : V → W is a linear transformation between vector spaces of equal (finite) dimension, then the conditions of being a.) invertible, b.) one-to-one, and c.) onto are all equivalent.

Proof. We start with a) ⇒ c). We have T T⁻¹ = IW. To show onto, let w ∈ W. Then for x = T⁻¹(w), we have T(x) = T(T⁻¹(w)) = IW(w) = w. Therefore, T is onto.

By Theorem 2.5, we have b) ⇐⇒ c).

We will show b) ⇒ a).

Let {v1, v2, ..., vn} be a basis of V. Then {T(v1), T(v2), ..., T(vn)} spans R(T) by Theorem 2.2, and R(T) = W because T is onto (by b) ⇐⇒ c)). Since dim(W) = n, one of the corollaries to the Replacement Theorem implies that {x1 = T(v1), x2 = T(v2), ..., xn = T(vn)} is a basis for W.

We define U : W → V by U(xi) = vi for all i. By Theorem 2.6, this is a well-defined linear transformation.

Let x ∈ V, x = a1 v1 + a2 v2 + · · · + an vn for some scalars a1, a2, ..., an. Then

\begin{align*}
UT(x) &= UT(a_1 v_1 + a_2 v_2 + \cdots + a_n v_n) \\
&= U(a_1 T(v_1) + a_2 T(v_2) + \cdots + a_n T(v_n)) \\
&= U(a_1 x_1 + a_2 x_2 + \cdots + a_n x_n) \\
&= a_1 U(x_1) + a_2 U(x_2) + \cdots + a_n U(x_n) \\
&= a_1 v_1 + a_2 v_2 + \cdots + a_n v_n \\
&= x.
\end{align*}

Let x ∈ W, x = b1 x1 + b2 x2 + · · · + bn xn for some scalars b1, b2, ..., bn. Then

\begin{align*}
TU(x) &= TU(b_1 x_1 + b_2 x_2 + \cdots + b_n x_n) \\
&= T(b_1 U(x_1) + b_2 U(x_2) + \cdots + b_n U(x_n)) \\
&= T(b_1 v_1 + b_2 v_2 + \cdots + b_n v_n) \\
&= b_1 T(v_1) + b_2 T(v_2) + \cdots + b_n T(v_n) \\
&= b_1 x_1 + b_2 x_2 + \cdots + b_n x_n \\
&= x.
\end{align*}

Thus UT = IV and TU = IW, so T is invertible.

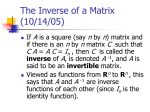

Defn 8. (p. 100) Let A be an n × n matrix. Then A is invertible if there exists an n × n matrix B such that AB = BA = I.

Fact 6. If A is invertible, then the inverse of A is unique.

Proof. Let A ∈ Mn(F) and suppose AB = BA = In. Suppose also that AC = CA = In. Then we have B = B In = B(AC) = (BA)C = In C = C.

Defn 9. We denote the inverse of A by A−1 .

Lemma 1. (p. 101) Let T be an invertible linear transformation from V to W . Then V is

finite-dimensional if and only if W is finite-dimensional. In this case, dim(V ) = dim(W ).

Proof. (⇒) T : V → W is invertible. Then T T −1 = IW and T −1 T = IV . Assume dim V = n

and {v1 , v2 , . . . , vn } is a basis of V .

We know T is onto since, if y ∈ W then for x = T −1 (y), we have T (x) = y.

We know R(T ) = W since T is onto and {T (v1 ), T (v2 ), . . . , T (vn )} spans W , by Theorem

2.2.

So W is finite dimensional and dim W ≤ n = dim V .

(⇐) Assume dim W = m. T −1 : W → V is invertible. Applying the same argument as in

the last case, we obtain that V is finite dimensional and dim V ≤ dim W .

We see that when either of V or W is finite dimensional then so is the other and so

dim W ≤ dim V and dim V ≤ dim W imply that dim V = dim W .

Theorem 2.18. Let V and W be finite-dimensional vector spaces with ordered bases β and γ, respectively. Let T : V → W be linear. Then T is invertible if and only if [T]_β^γ is invertible. Furthermore, [T⁻¹]_γ^β = ([T]_β^γ)⁻¹.

Proof. Assume T is invertible. Let dim V = n and dim W = m. By the lemma, n = m. Let β = {v1, v2, ..., vn} and γ = {w1, w2, ..., wn}.

We have T⁻¹ such that T T⁻¹ = IW and T⁻¹T = IV.

We will show that [T⁻¹]_γ^β is the inverse of [T]_β^γ by establishing the following two matrix equations:

\begin{align}
[T]_\beta^\gamma [T^{-1}]_\gamma^\beta &= I_n \tag{6} \\
[T^{-1}]_\gamma^\beta [T]_\beta^\gamma &= I_n \tag{7}
\end{align}

To prove (6), we show that the ith column of [T]_β^γ [T⁻¹]_γ^β is ei, the ith characteristic vector.

Notice that for any matrix A, Aei is the ith column of A.

Consider [T]_β^γ [T⁻¹]_γ^β ei. Since [wi]γ = ei, repeated use of Fact 2a gives:

\begin{align*}
[T]_\beta^\gamma [T^{-1}]_\gamma^\beta e_i &= [T]_\beta^\gamma [T^{-1}]_\gamma^\beta [w_i]_\gamma \\
&= [T]_\beta^\gamma [T^{-1}(w_i)]_\beta \\
&= [T T^{-1}(w_i)]_\gamma \\
&= [w_i]_\gamma \\
&= e_i.
\end{align*}

The proof of (7) is very similar. We show that the ith column of [T⁻¹]_γ^β [T]_β^γ is ei, the ith characteristic vector. Since [vi]β = ei, repeated use of Fact 2a gives:

\begin{align*}
[T^{-1}]_\gamma^\beta [T]_\beta^\gamma e_i &= [T^{-1}]_\gamma^\beta [T]_\beta^\gamma [v_i]_\beta \\
&= [T^{-1}]_\gamma^\beta [T(v_i)]_\gamma \\
&= [T^{-1}(T(v_i))]_\beta \\
&= [v_i]_\beta \\
&= e_i.
\end{align*}

Thus we have (⇒) and the Furthermore part of the statement.

Now we assume A = [T]_β^γ is invertible. Call its inverse A⁻¹. We know that A is square, thus n = m. Let β = {v1, v2, ..., vn} and γ = {w1, w2, ..., wn}. Notice that the ith column of A is Ai = (t1,i, t2,i, ..., tn,i), where T(vi) = t1,i w1 + t2,i w2 + · · · + tn,i wn.

To show T is invertible, we will define a function U and prove that it is the inverse of T. Let the ith column of A⁻¹ be Ci = (c1,i, c2,i, ..., cn,i). We define U : W → V by setting, for all i ∈ [n],

U(wi) = c1,i v1 + c2,i v2 + · · · + cn,i vn.

Since we have defined U on the basis vectors of γ, we know from Theorem 2.6 that U extends to a unique linear transformation.

We wish to show that TU is the identity transformation on W and UT is the identity transformation on V. So, we show

(1) TU(x) = x, ∀x ∈ W and

(2) UT(x) = x, ∀x ∈ V.

Starting with (1), first let's see why T(U(wi)) = wi for all i ∈ [n].

\begin{align*}
T(U(w_i)) &= T(c_{1,i} v_1 + c_{2,i} v_2 + \cdots + c_{n,i} v_n) \\
&= c_{1,i} (t_{1,1} w_1 + t_{2,1} w_2 + \cdots + t_{n,1} w_n) \\
&\quad + c_{2,i} (t_{1,2} w_1 + t_{2,2} w_2 + \cdots + t_{n,2} w_n) \\
&\quad + \cdots + c_{n,i} (t_{1,n} w_1 + t_{2,n} w_2 + \cdots + t_{n,n} w_n).
\end{align*}

Gathering coefficients of w1, w2, ..., wn, we have:

\begin{align*}
T(U(w_i)) &= (c_{1,i} t_{1,1} + c_{2,i} t_{1,2} + \cdots + c_{n,i} t_{1,n}) w_1 \\
&\quad + (c_{1,i} t_{2,1} + c_{2,i} t_{2,2} + \cdots + c_{n,i} t_{2,n}) w_2 \\
&\quad + \cdots + (c_{1,i} t_{n,1} + c_{2,i} t_{n,2} + \cdots + c_{n,i} t_{n,n}) w_n.
\end{align*}

We see that the coefficient of wj in the above expression is Row j of A dot Column i of A⁻¹, that is, the (j, i) entry of AA⁻¹ = In, which equals 1 if i = j and 0 otherwise.

Thus we have that T(U(wi)) = wi.

Now for x ∈ W we have x = b1 w1 + b2 w2 + · · · + bn wn for some scalars b1, b2, ..., bn, and

\begin{align*}
TU(x) &= TU(b_1 w_1 + b_2 w_2 + \cdots + b_n w_n) \\
&= T(b_1 U(w_1) + b_2 U(w_2) + \cdots + b_n U(w_n)) \\
&= b_1 T(U(w_1)) + b_2 T(U(w_2)) + \cdots + b_n T(U(w_n)) \\
&= b_1 w_1 + b_2 w_2 + \cdots + b_n w_n \\
&= x.
\end{align*}

Similarly, U T (x) = x for all x in V .

Cor 1. Let V be a finite-dimensional vector space with an ordered basis β, and let T : V → V be linear. Then T is invertible if and only if [T]β is invertible. Furthermore, [T⁻¹]β = ([T]β)⁻¹.

Proof. Clear.
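A small worked case shows how Cor 1 lets one compute T⁻¹ from a matrix inverse. This is an added sketch; the operator T(a, b) = (a + 2b, b) on R² and the standard ordered basis β = {e1, e2} are assumptions chosen for the example.

```latex
\begin{align*}
[T]_\beta &= \begin{pmatrix} 1 & 2 \\ 0 & 1 \end{pmatrix}, \qquad
([T]_\beta)^{-1} = \begin{pmatrix} 1 & -2 \\ 0 & 1 \end{pmatrix} = [T^{-1}]_\beta, \\
T^{-1}(c,d) &= (c - 2d,\; d), \\
T(T^{-1}(c,d)) &= \bigl((c - 2d) + 2d,\; d\bigr) = (c,d).
\end{align*}
```

The last line confirms directly that the map read off from the inverse matrix undoes T.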

Defn 10. Let A ∈ Mm×n(F). The function L_A : Fⁿ → Fᵐ defined by L_A(x) = Ax for x ∈ Fⁿ is called left-multiplication by A.

Fact 6. (a) LA is a linear transformation and for β = {e1 , e2 , . . . , en }, [LA ]β = A.

Proof. We showed in class.

Cor 2. Let A be an n × n matrix. Then A is invertible if and only if L_A is invertible. Furthermore, (L_A)⁻¹ = L_{A⁻¹}.

Proof. Let V = Fⁿ. Then L_A : V → V. Applying Corollary 1 with β = {e1, e2, ..., en} and [L_A]β = A, we have:

L_A is invertible if and only if A is invertible.

The furthermore part of this corollary says that (L_A)⁻¹ is left-multiplication by A⁻¹. We will show L_A L_{A⁻¹} = L_{A⁻¹} L_A = IV. So we must show L_A L_{A⁻¹}(x) = x and L_{A⁻¹} L_A(x) = x for all x ∈ V.

We have L_A L_{A⁻¹}(x) = AA⁻¹x = x and L_{A⁻¹} L_A(x) = A⁻¹Ax = x.

Defn 11. Let V and W be vector spaces. We say that V is isomorphic to W if there exists

a linear transformation T : V → W that is invertible. Such a linear transformation is called

an isomorphism from V onto W .

Theorem 2.19. Let V and W be finite-dimensional vector spaces (over the same field).

Then V is isomorphic to W if and only if dim(V ) = dim(W ).

Proof. (⇒) Let T : V → W be an isomorphism. Then T is invertible and dim V = dim W

by the Lemma.

(⇐) Assume dim V = dim W = k. Let β = {v1, ..., vk} and γ = {w1, w2, ..., wk} be bases for V and W, respectively. By Theorem 2.6, there is a linear transformation T : V → W with vi ↦ wi for all i.

Then [T]_β^γ = I, which is invertible, and Theorem 2.18 implies that T is invertible and thus an isomorphism.

Fact 7. Let P ∈ Mn(F) be invertible. If W is a subspace of Fⁿ, then L_P(W) is a subspace of Fⁿ and dim(L_P(W)) = dim(W).

Proof. Let x, y ∈ L_P(W) and a ∈ F. Then there exist x0, y0 ∈ W such that P x0 = x and P y0 = y. Since W is a subspace, ax0 + y0 ∈ W, and P(ax0 + y0) = aP x0 + P y0 = ax + y. So ax + y ∈ L_P(W). Also, we know 0 ∈ W and L_P(0) = 0 since L_P is linear, so we have that 0 ∈ L_P(W). Therefore, L_P(W) is a subspace.

Let {x1, x2, ..., xk} be a basis of W. Suppose

a1 P(x1) + a2 P(x2) + · · · + ak P(xk) = 0

⇒ P(a1 x1 + a2 x2 + · · · + ak xk) = 0

⇒ a1 x1 + a2 x2 + · · · + ak xk = 0 (since P is invertible)

⇒ a1 = a2 = · · · = ak = 0 (since {x1, x2, ..., xk} is linearly independent).

Hence {P(x1), P(x2), ..., P(xk)} is linearly independent.

To show that {P(x1), P(x2), ..., P(xk)} spans L_P(W), we let z ∈ L_P(W). Then there is some w ∈ W such that L_P(w) = z. If w = a1 x1 + a2 x2 + · · · + ak xk, we have

z = P w = P(a1 x1 + a2 x2 + · · · + ak xk) = a1 P(x1) + a2 P(x2) + · · · + ak P(xk).

So {P(x1), P(x2), ..., P(xk)} is a basis of L_P(W) and dim(W) = dim(L_P(W)).

So far, we did not define the rank of a matrix. We have:

Defn 12. Let A ∈ Mm×n (F ). Then, Rank(A)= Rank(LA ). And the range of a matrix, R(A)

is the same as R(LA ).

Fact 8. Let S ∈ Mn (F ). If S is invertible, then R(S) = F n .

Proof. We have L_S : Fⁿ → Fⁿ. By Cor 2, S invertible implies that L_S is invertible. An invertible map is onto, so R(L_S) = Fⁿ. By the definition of R(S), we have R(S) = Fⁿ.

Fact 9. S, T ∈ Mn (F ), S invertible and T invertible imply ST is invertible and its inverse

is T −1 S −1 .

Proof. This is the oldest proof in the book: (ST)(T⁻¹S⁻¹) = S(TT⁻¹)S⁻¹ = SS⁻¹ = In, and similarly (T⁻¹S⁻¹)(ST) = T⁻¹(S⁻¹S)T = T⁻¹T = In.

Theorem 2.20. Let V and W be finite-dimensional vector spaces over F of dimensions n

and m, respectively, and let β and γ be ordered bases for V and W , respectively. Then the

function

Φ : L(V, W) → Mm×n(F)

defined by Φ(T) = [T]_β^γ for T ∈ L(V, W), is an isomorphism.

Proof. To show Φ is an isomorphism, we must show that it is linear and invertible. Let β = {v1, v2, ..., vn} and γ = {w1, w2, ..., wm}. Φ is linear since, by Fact 2, the jth column of [cT + S]_β^γ is [cT(vj) + S(vj)]γ = c[T(vj)]γ + [S(vj)]γ for all T, S ∈ L(V, W) and c ∈ F.

We define U : Mm×n(F) → L(V, W) as follows.

Let A ∈ Mm×n(F) have ith column

\[ \begin{pmatrix} a_{1,i} \\ a_{2,i} \\ \vdots \\ a_{m,i} \end{pmatrix}. \]

So U maps matrices to transformations: U(A) is a transformation in L(V, W). We describe the action of U(A) on each vi; by Theorem 2.6, this uniquely defines a linear transformation in L(V, W):

∀i ∈ [n], U(A)(vi) = a1,i w1 + a2,i w2 + · · · + am,i wm.

Then A = [U(A)]_β^γ. Thus, ΦU(A) = A.

To verify that U(Φ(T)) = T, we see what the action is on vi:

U(Φ(T))(vi) = U([T]_β^γ)(vi) = t1,i w1 + t2,i w2 + · · · + tm,i wm = T(vi).

Since U(Φ(T)) and T are linear and agree on the basis β, they are equal by Cor 1 to Theorem 2.6.

Cor 1. Let V and W be finite-dimensional vector spaces over F of dimensions n and m,

respectively. Then L(V, W ) is finite-dimensional of dimension mn.

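Proof. By Theorem 2.20, L(V, W) is isomorphic to Mm×n(F), which has dimension mn. By Lemma 1 (see also Theorem 2.19), L(V, W) is finite-dimensional of the same dimension.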

Theorem 2.22. Let β and γ be two ordered bases for a finite-dimensional vector space V, and let Q = [I_V]_β^γ. Then

(a) Q is invertible.

(b) For any v ∈ V, [v]γ = Q[v]β.

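Proof. Let β = {v1, ..., vn} and γ = {w1, ..., wn}. We claim the inverse of Q is [I_V]_γ^β. The ith column of [I_V]_β^γ [I_V]_γ^β is [I_V]_β^γ [I_V]_γ^β ei. By Fact 2a, we have

\begin{align*}
[I_V]_\beta^\gamma [I_V]_\gamma^\beta e_i &= [I_V]_\beta^\gamma [I_V]_\gamma^\beta [w_i]_\gamma \\
&= [I_V]_\beta^\gamma [I_V(w_i)]_\beta \\
&= [I_V(I_V(w_i))]_\gamma \\
&= [w_i]_\gamma \\
&= e_i
\end{align*}

and

\begin{align*}
[I_V]_\gamma^\beta [I_V]_\beta^\gamma e_i &= [I_V]_\gamma^\beta [I_V]_\beta^\gamma [v_i]_\beta \\
&= [I_V]_\gamma^\beta [I_V(v_i)]_\gamma \\
&= [I_V(I_V(v_i))]_\beta \\
&= [v_i]_\beta \\
&= e_i.
\end{align*}

Hence both products equal In, which proves (a). For (b), Fact 2a gives [v]γ = [I_V(v)]γ = [I_V]_β^γ [v]β = Q[v]β.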

Defn 13. (p. 112) The matrix Q = [I_V]_β^γ defined in Theorem 2.22 is called a change of coordinate matrix. Because of part (b) of the theorem, we say that Q changes β-coordinates into γ-coordinates. Notice that Q⁻¹ = [I_V]_γ^β.

Defn 14. (p. 112) A linear transformation T : V → V is called a linear operator.

Theorem 2.23. Let T be a linear operator on a finite-dimensional vector space V , and let

β and γ be ordered bases for V . Suppose that Q is the change of coordinate matrix that

changes β-coordinates into γ-coordinates. Then

[T]β = Q⁻¹ [T]γ Q.

Proof. The statement is short for:

[T]_β^β = Q⁻¹ [T]_γ^γ Q.

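Let β = {v1, v2, ..., vn}. As usual, we look at the ith column of each side. Recall that Q = [I_V]_β^γ and Q⁻¹ = [I_V]_γ^β. By Fact 2a, we have

\begin{align*}
Q^{-1} [T]_\gamma^\gamma Q e_i &= [I_V]_\gamma^\beta [T]_\gamma^\gamma [I_V]_\beta^\gamma [v_i]_\beta \\
&= [I_V]_\gamma^\beta [T]_\gamma^\gamma [I_V(v_i)]_\gamma \\
&= [I_V]_\gamma^\beta [T]_\gamma^\gamma [v_i]_\gamma \\
&= [I_V]_\gamma^\beta [T(v_i)]_\gamma \\
&= [I_V(T(v_i))]_\beta \\
&= [T(v_i)]_\beta \\
&= [T]_\beta^\beta [v_i]_\beta \\
&= [T]_\beta^\beta e_i,
\end{align*}

which is the ith column of [T]_β^β. Since the two matrices agree column by column, they are equal.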

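Cor 1. Let A ∈ Mn×n(F), and let γ be an ordered basis for Fⁿ. Then [L_A]γ = Q⁻¹AQ, where Q is the n × n matrix whose jth column is the jth vector of γ.

Proof. Let β be the standard ordered basis for Fⁿ. Then A = [L_A]β. The jth column of Q is the jth vector of γ, which is its own coordinate vector relative to β, so Q = [I]_γ^β is the change of coordinate matrix that changes γ-coordinates into β-coordinates. So by the theorem (with the roles of β and γ interchanged),

[L_A]γ = Q⁻¹ [L_A]β Q = Q⁻¹AQ.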
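The corollary can be checked by hand on a 2 × 2 case. The following is an added sketch; the matrix A and the ordered basis γ = {(1, 1), (1, −1)} of R² are assumptions chosen for the example.

```latex
\begin{align*}
A &= \begin{pmatrix} 2 & 1 \\ 1 & 2 \end{pmatrix}, \qquad
Q = \begin{pmatrix} 1 & 1 \\ 1 & -1 \end{pmatrix}, \qquad
Q^{-1} = \frac{1}{2}\begin{pmatrix} 1 & 1 \\ 1 & -1 \end{pmatrix}, \\
AQ &= \begin{pmatrix} 3 & 1 \\ 3 & -1 \end{pmatrix}, \qquad
[L_A]_\gamma = Q^{-1}AQ = \frac{1}{2}\begin{pmatrix} 6 & 0 \\ 0 & 2 \end{pmatrix}
= \begin{pmatrix} 3 & 0 \\ 0 & 1 \end{pmatrix}.
\end{align*}
```

This agrees with a direct computation of the columns: L_A(1, 1) = (3, 3) = 3 · (1, 1) + 0 · (1, −1) and L_A(1, −1) = (1, −1) = 0 · (1, 1) + 1 · (1, −1).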

Defn 15. (p. 115) Let A and B be in Mn(F). We say that B is similar to A if there exists an invertible matrix Q such that B = Q⁻¹AQ.

Defn 16. (p. 119) For a vector space V over F , we define the dual space of V to be the

vector space L(V, F ), denoted by V ∗ . Let β = {x1 , x2 , . . . , xn } be an ordered basis for V .

For each i ∈ [n], we define fi(x) = ai, where ai is the ith coordinate of [x]β. Then fi is an element of V∗, called the ith coordinate function with respect to the basis β.

Theorem 2.24. Suppose that V is a finite-dimensional vector space with the ordered basis β = {x1, x2, ..., xn}. Let fi (1 ≤ i ≤ n) be the ith coordinate function with respect to β and let β∗ = {f1, f2, ..., fn}. Then β∗ is an ordered basis for V∗, and, for any f ∈ V∗, we have

\[ f = \sum_{i=1}^{n} f(x_i) f_i. \]

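Proof. Let f ∈ V∗. Since dim V∗ = n, we need only show that

\[ f = \sum_{i=1}^{n} f(x_i) f_i, \]

from which it follows that β∗ generates V∗, and hence is a basis by a Corollary to the Replacement Theorem.

Let

\[ g = \sum_{i=1}^{n} f(x_i) f_i. \]

For 1 ≤ j ≤ n, we have

\[ g(x_j) = \left(\sum_{i=1}^{n} f(x_i) f_i\right)(x_j) = \sum_{i=1}^{n} f(x_i) f_i(x_j) = \sum_{i=1}^{n} f(x_i) \delta_{i,j} = f(x_j), \]

where δi,j = 1 if i = j and δi,j = 0 otherwise.

Therefore, f = g by Cor 1 to Theorem 2.6, since f and g are linear and agree on the basis β.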
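To make the dual basis concrete, here is a small worked case (an added sketch; the basis β = {(2, 1), (3, 1)} of R² and the functional f(x, y) = x + y are assumptions chosen for the example). The coordinate functions f1, f2 are determined by the conditions fi(xj) = δi,j.

```latex
\begin{align*}
f_1(x,y) &= -x + 3y: & f_1(2,1) &= 1, & f_1(3,1) &= 0, \\
f_2(x,y) &= x - 2y:  & f_2(2,1) &= 0, & f_2(3,1) &= 1, \\
f(x,y) &= x + y:     & f(2,1) &= 3,   & f(3,1) &= 4, \\
f(2,1)\,f_1 + f(3,1)\,f_2 &= 3(-x+3y) + 4(x-2y) = x + y = f(x,y).
\end{align*}
```

The last line is the expansion f = f(x1) f1 + f(x2) f2 from Theorem 2.24.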