Math 850 Algebra - San Francisco State University

Cartesian tensor wikipedia , lookup

System of polynomial equations wikipedia , lookup

Birkhoff's representation theorem wikipedia , lookup

Bra–ket notation wikipedia , lookup

Field (mathematics) wikipedia , lookup

Covering space wikipedia , lookup

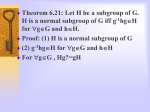

Group (mathematics) wikipedia , lookup

Algebraic K-theory wikipedia , lookup

Gröbner basis wikipedia , lookup

Dedekind domain wikipedia , lookup

Deligne–Lusztig theory wikipedia , lookup

Polynomial greatest common divisor wikipedia , lookup

Linear algebra wikipedia , lookup

Factorization wikipedia , lookup

Complexification (Lie group) wikipedia , lookup

Tensor product of modules wikipedia , lookup

Homological algebra wikipedia , lookup

Cayley–Hamilton theorem wikipedia , lookup

Modular representation theory wikipedia , lookup

Basis (linear algebra) wikipedia , lookup

Eisenstein's criterion wikipedia , lookup

Fundamental theorem of algebra wikipedia , lookup

Factorization of polynomials over finite fields wikipedia , lookup

Polynomial ring wikipedia , lookup